The Race Toward FTQC: Ocelot, Majorana, Willow, Heron, Zuchongzhi

Introduction

Quantum computing is entering a new phase marked by five major announcements from five quantum powerhouses—Zuchongzhi, Amazon Web Services (AWS), Microsoft, Google, and IBM—all in the last 4 months. Are these just hype-fueled announcements, or do they mark real progress toward useful, large-scale, fault-tolerant quantum computing—and perhaps signal an accelerated timeline for “Q-Day”? Personally, I’m bullish about these announcements. Each of these reveals a different and interesting strategy for tackling the field’s biggest challenge: quantum error correction. The combined innovation pushes the field forward in a big way. But let’s dig into some details:

- Yesterday, China’s quantum computing powerhouse, the Zuchongzhi research team, unveiled Zuchongzhi 3.0, a new superconducting quantum processor with 105 qubits.

- A few days ago, AWS unveiled “Ocelot,” a prototype quantum chip built around bosonic cat qubits with error correction in its very design.

- Only a few days earlier, Microsoft introduced “Majorana 1” (to massive media hype, I must say), claiming the first quantum processor using topological qubits that promise inherent stability against noise.

- Last December, Google announced their “Willow” chip which pushes superconducting transmon qubits to unprecedented fidelity, demonstrating for the first time that adding qubits can reduce error rates exponentially (a key threshold for fault tolerance).

- And in November last year, IBM announced its upgraded “Heron R2” quantum chip, which similarly advances superconducting qubit architecture with more qubits, tunable couplers, and mitigation of noise sources, enabling circuits with thousands of operations to run reliably.

These developments are significant because they directly address the roadblock of quantum errors that has limited the progress of quantum computers. And they do it in different ways. By improving qubit stability and error correction, they are collectively pushing the industry closer to practical, large-scale, fault-tolerant quantum computing. In the race toward a useful quantum computer, progress is measured not just in qubit count, but in overcoming noise and scaling issues – and that is exactly what these announcements target.

Equally important, these breakthroughs illustrate diverse and complementary approaches. AWS’s bosonic qubits aim to reduce the overhead of error correction by encoding information in oscillator states. Microsoft’s topological qubits seek to harness exotic states of matter to intrinsically protect quantum information at the hardware level. Google and IBM, while both sticking with superconducting circuits, are dramatically improving coherence and using clever engineering (surface codes in Google’s case, and tunable couplers and software optimizations in IBM’s) to inch toward fault-tolerant operation. Zuchongzhi, meanwhile, demonstrates the improvements that can be achieved through better noise reduction in circuit design and better qubit packaging.

Each development is critical in its own way. Together, they represent a concerted push toward the long-sought goal of a practical quantum computer – one that can maintain quantum coherence long enough, and at a large enough scale, to solve real problems beyond the reach of classical machines.

Breakdown of Each Announcement

AWS Ocelot: Bosonic Cat Qubits and Built-In Error Correction

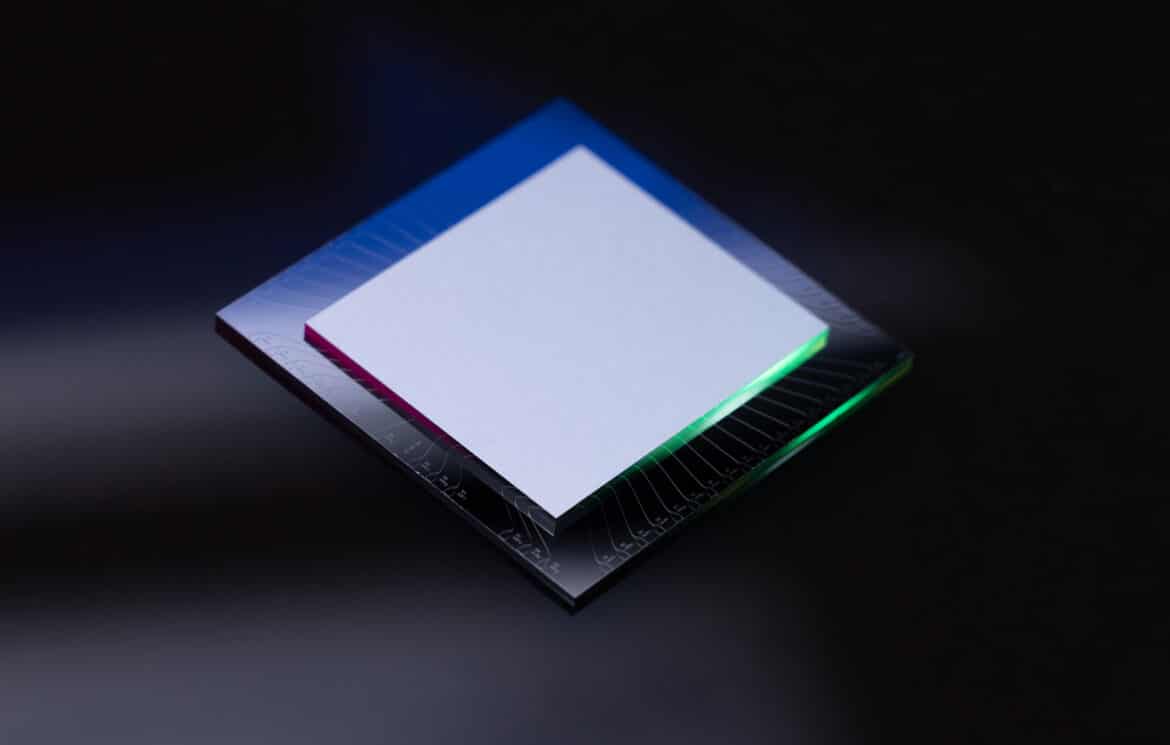

AWS’s announcement of the Ocelot quantum processor marks a paradigm shift in hardware design by making quantum error correction a primary feature of the chip, rather than an afterthought. Ocelot is a prototype consisting of two stacked silicon dies (each ~1 cm²) with superconducting circuits fabricated on their surfaces. It integrates 14 core components: five bosonic “cat” data qubits, five nonlinear buffer circuits to stabilize those cat states, and four ancillary qubits to detect errors. The term cat qubit refers to a qubit encoded in a quantum superposition of two coherent states of a harmonic oscillator (analogous to Schrödinger’s famous alive/dead cat thought experiment). Each cat qubit is realized in a high-quality microwave resonator (oscillator) made from superconducting tantalum, engineered to have extremely long-lived states. The key advantage is that these qubits exhibit a strong noise bias: bit-flip errors (i.e. flips between the two oscillator coherent states) are exponentially suppressed by increasing the photon number in the oscillator. In fact, AWS reports bit-flip error times approaching one second – over 1,000× longer than a normal superconducting qubit’s lifetime. This leaves the primary remaining error mode as phase-flips (relative phase errors between the cat basis states), which occur on the order of tens of microseconds. By dramatically reducing one type of error at the physical level, Ocelot can focus resources on correcting the other.
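To make the trade-off above concrete, here is a toy model in Python. It assumes the textbook scaling for a cat qubit stabilized by two-photon dissipation: the bit-flip time grows roughly as exp(2·n̄) with the mean photon number n̄, while single-photon loss makes the phase-flip rate grow roughly linearly with n̄. The constants `T0_SECONDS` and `KAPPA_1_PER_S` are hypothetical values picked only so the output lands in the same ballpark as the figures quoted here; they are not AWS’s device parameters.

```python
import math

# Toy cat-qubit noise model (textbook scaling; constants are HYPOTHETICAL, not AWS data).
T0_SECONDS = 1e-6       # hypothetical prefactor of the bit-flip time
KAPPA_1_PER_S = 1e4     # hypothetical single-photon loss rate, in 1/s


def bit_flip_time(nbar: float) -> float:
    """Bit-flip time grows exponentially with mean photon number: T0 * exp(2 * nbar)."""
    return T0_SECONDS * math.exp(2 * nbar)


def phase_flip_time(nbar: float) -> float:
    """Phase-flip rate grows linearly with nbar (kappa_1 * nbar), so the time shrinks as 1/nbar."""
    return 1.0 / (KAPPA_1_PER_S * nbar)


def noise_bias(nbar: float) -> float:
    """Ratio of bit-flip time to phase-flip time: how strongly errors lean toward phase flips."""
    return bit_flip_time(nbar) / phase_flip_time(nbar)


if __name__ == "__main__":
    for nbar in (2, 4, 6, 7):
        print(
            f"nbar={nbar}: T_bit-flip={bit_flip_time(nbar):.3g} s, "
            f"T_phase-flip={phase_flip_time(nbar) * 1e6:.1f} us, "
            f"bias={noise_bias(nbar):.3g}"
        )
```

In this model each extra photon multiplies the bit-flip time by e² ≈ 7.4 while slightly shortening the phase-flip time, which is why the remaining phase flips are left to the repetition code.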

To catch and correct phase-flip errors, Ocelot uses a simple repetition code across the five cat qubits. The cat qubits are arranged in a linear array and entangled via specially tuned CNOT gates with the four transmon ancilla qubits, which act as syndrome detectors for phase errors. In essence, a phase-flip on any one cat qubit is detected through parity-check measurements (enabled by those ancillas), and the information is redundantly encoded so that a single phase error can be identified and corrected (much like a classical repetition code would correct a bit flip). Meanwhile, each cat qubit’s attached buffer circuit and the noise-biased design of the CNOT gates ensure that the process of error detection doesn’t introduce too many bit-flip errors in return. This concatenation of a bosonic code (for suppressing bit-flips) with a simple classical code (for correcting phase-flips) is what AWS calls a hardware-efficient error correction architecture. Notably, the entire logical qubit (distance-5 repetition code) in Ocelot uses only 5 data qubits + 4 ancillas = 9 physical qubits in total, compared to 49 physical qubits that a standard distance-5 surface code would require. AWS’s Nature paper reports that moving from a shorter code (distance 3) to the full distance-5 code significantly lowered the phase-flip contribution to the logical error rate without being undermined by additional bit-flip errors: the total logical error per cycle was roughly 1.65% for the distance-5 code, slightly below the ~1.72% for the distance-3 code. This demonstrates that Ocelot preserved its strong noise bias as the code grew: the added redundancy suppressed phase flips faster than the extra components introduced new bit-flip opportunities. In practical terms, Ocelot achieved an error-corrected logical memory spread across five cat data qubits (plus four ancillas), with a net error rate far lower than any individual qubit.
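The majority-vote logic and the qubit counting above can be sketched in a few lines. This is a simplified model, assuming independent phase flips with probability `p` per data qubit per cycle and perfect parity measurements (no ancilla or measurement errors, no bit flips), so it does not reproduce AWS’s measured 1.65% and 1.72% figures. It only shows why a longer repetition code suppresses the error it targets, and where the 9-versus-49 qubit comparison comes from.

```python
from math import comb


def repetition_logical_error(d: int, p: float) -> float:
    """Probability that majority vote fails for an odd distance-d repetition code,
    assuming independent flips with probability p and perfect syndrome measurements."""
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range((d + 1) // 2, d + 1))


def repetition_qubits(d: int) -> int:
    """d data qubits plus d - 1 parity-check ancillas."""
    return d + (d - 1)


def rotated_surface_code_qubits(d: int) -> int:
    """d^2 data qubits plus d^2 - 1 measurement qubits."""
    return d * d + (d * d - 1)


p = 0.01  # hypothetical per-cycle phase-flip probability
for d in (3, 5):
    print(
        f"d={d}: logical error {repetition_logical_error(d, p):.2e}, "
        f"repetition qubits {repetition_qubits(d)}, "
        f"surface-code qubits {rotated_surface_code_qubits(d)}"
    )
```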

While Ocelot is only a single-logical-qubit prototype, its specifications are impressive. The cat qubits’ bit-flip time (T_bit-flip) is ~1 s and their phase-flip time (T_phase-flip) is ~20 µs. By comparison, a typical transmon qubit might have T1 and T2 in the 0.02–0.1 ms (20–100 µs) range. Thus, Ocelot’s qubits are orders of magnitude more robust against bit-flips. The trade-off is that phase errors remain frequent, but those are exactly what the repetition code handles.

One potential scaling challenge for this approach will be implementing logical gates between multiple cat-qubit logical units – so far Ocelot demonstrates a memory qubit (it stores a quantum state with improved fidelity), but not a logic gate between two logical qubits. Extending the scheme to a fully programmable computer will require linking many such encoded qubits and orchestrating complex syndrome measurements, all while preserving the delicate noise bias. This will demand further integration (more resonators, couplers, and readout circuitry) and likely new techniques to manage higher photon-number states across many modes. Additionally, while repetition codes are simple, more powerful error-correcting codes (with higher distance) might be needed for logic operations, which could increase overhead. AWS, however, is optimistic – they note that if this bosonic approach scales, a full fault-tolerant quantum computer might need only one-tenth the number of physical qubits that conventional architectures would require. Ocelot’s success is a proof-of-concept that bosonic qubits can be integrated into a chip and outperform equivalent transmon-based logical qubits, potentially accelerating the timeline to a useful quantum computer by several years.

For more information see: AWS Pounces on Quantum Stage with Ocelot Chip for Ultra-Reliable Qubits.

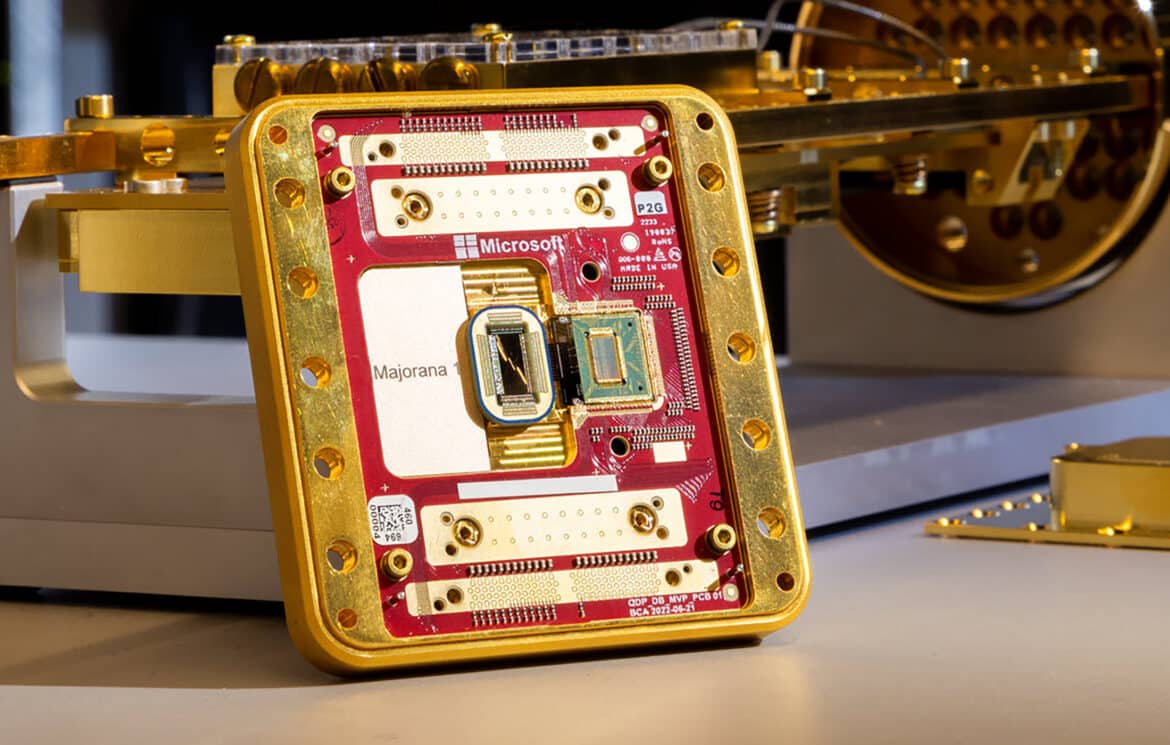

Microsoft’s Majorana 1: Topological Qubits and the Quest for Stable Qubits

Microsoft’s Majorana 1 chip represents a long-awaited breakthrough in topological quantum computing. It is the first prototype quantum processor based on Majorana zero modes (MZMs): exotic quasiparticles that emerge at the ends of specially engineered nanowires and behave as their own antiparticles. In theory, pairs of these MZMs can encode quantum information in a non-local way that is inherently protected from many forms of noise. The Majorana 1 chip is a palm-sized device housing eight topological qubits, fabricated with a new materials stack of indium arsenide (InAs) semiconductor and aluminum superconductor. These materials form what Microsoft calls “topoconductors,” creating a topological superconducting state when cooled and placed in a magnetic field. In this state, each tiny nanowire (on the order of 100 µm in length) can host a pair of Majorana zero modes at its ends. Four MZMs (for example, the ends of two nanowires) together encode one qubit’s state in a distributed manner, essentially storing quantum information in the parity of electrons shared across the wire ends rather than at any single location. This topological encoding is expected to be highly resistant to local disturbances: an error would require a global change that alters the topology (e.g. breaking the pair or moving a Majorana from one end to the other), which is energetically or statistically very unlikely. As a result, a qubit encoded in MZMs should remain coherent far longer than a conventional qubit, even without active error correction, at least for certain types of errors (notably, bit-flips in the topologically protected basis).
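The idea of storing a qubit in the joint parity of four MZMs can be checked with a small linear-algebra sketch. It uses the standard Jordan-Wigner representation of four Majorana operators on two fermionic modes (a textbook construction, not Microsoft’s device model): each operator squares to the identity, distinct operators anticommute, the parity of a pair is P_ij = -iγ_iγ_j, and the logical Z and X are taken to be the parities of MZM pairs (1,2) and (2,3). Both commute with the total fermion parity, so the qubit can be manipulated inside a fixed-parity sector.

```python
# Pure-Python complex matrices (lists of lists); no external dependencies.
I2 = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]
Z = [[1, 0], [0, -1]]


def kron(a, b):
    """Kronecker (tensor) product of two square matrices."""
    m = len(b)
    size = len(a) * m
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(size)] for i in range(size)]


def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def scale(c, a):
    return [[c * x for x in row] for row in a]


def add(a, b):
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def close(a, b, tol=1e-12):
    return all(abs(x - y) < tol for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def anticommutator(a, b):
    return add(matmul(a, b), matmul(b, a))


def pair_parity(a, b):
    """Parity of a pair of Majorana modes: P = -i * gamma_a * gamma_b (eigenvalues +1/-1)."""
    return scale(-1j, matmul(a, b))


IDENTITY = kron(I2, I2)
ZERO = scale(0, IDENTITY)

# Jordan-Wigner: four Majorana operators gamma_1..gamma_4 on two fermionic modes.
GAMMAS = [kron(X, I2), kron(Y, I2), kron(Z, X), kron(Z, Y)]
g1, g2, g3, g4 = GAMMAS

Z_L = pair_parity(g1, g2)                                   # logical Z: parity of MZMs 1 and 2
X_L = pair_parity(g2, g3)                                   # logical X: parity of MZMs 2 and 3
P_TOTAL = matmul(pair_parity(g1, g2), pair_parity(g3, g4))  # total fermion parity
```

With this convention, `close(Z_L, kron(Z, I2))` and `close(P_TOTAL, kron(Z, Z))` both hold: the logical Z is the occupation parity of the first fermionic mode, and the information never sits on any single MZM.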

In announcing Majorana 1, Microsoft revealed that after nearly two decades of research (!), they had finally achieved the creation and detection of Majorana zero modes in a device that allows qubit operations. The chip’s eight qubits are arranged in a layout designed to scale to millions of qubits on one chip. Each qubit is extremely small (about 1/100th of a millimeter, or ~10 µm, in size) and fast to manipulate via electronic controls. One of the headline achievements in the accompanying Nature publication was a demonstration of a single-shot interferometric parity measurement of the Majorana modes. In simpler terms, they can read out the joint state of a pair of MZMs (which reveals the qubit’s value) in one go, without needing to average over many trials. This is crucial for using these as qubits. The peer-reviewed Nature paper confirms that Microsoft can perform these fast, single-shot parity measurements in its devices. However, there is an important caveat: while Microsoft has announced the creation of a topological qubit, a note accompanying the Nature paper states that the results “do not represent evidence for the presence of Majorana zero modes” themselves; rather, they demonstrate a device architecture that could host them. In other words, the scientific community is cautious and wants more definitive proof that the observed behavior is truly due to MZMs. For more on this controversy and other past controversies involving Microsoft’s Majorana team, see my article: Microsoft’s Majorana-Based Quantum Chip – Beyond the Hype.

From a theoretical perspective, a stable topological qubit is something like the holy grail of quantum hardware, and its implications are profound. Above all, stability by design could drastically reduce the overhead needed for error correction: you might not need to constantly perform syndrome measurements or have dozens of physical qubits guarding one logical bit if the physical qubit is already extremely immune to noise. Microsoft envisions scaling Majorana qubits such that a single chip can host on the order of 1,000,000 qubits. They argue that only with such massive scaling (enabled by the small size and digital controllability of topological qubits) will quantum computers reach the complexity needed for transformative applications. A million topological qubits, if each is much more reliable than today’s qubits, could theoretically perform the trillions of operations needed for useful applications such as modeling how to break down complex molecules or factoring large numbers.

It’s sobering, however, that currently Majorana 1 has just eight qubits, and even those have not yet been shown performing arbitrary quantum logic gates – the announcement focused on initialization and measurement (parity control) of the qubits. The next steps will likely involve demonstrating qubit operations like braiding (exchanging Majoranas to perform logic gates) and two-qubit interactions, and showing that these operations obey the expected topological properties (e.g. certain gates being inherently fault-tolerant). If any of these pieces falter – for instance, if environmental factors like quasiparticle poisoning disturb the MZMs too often – additional error correction would still be needed on top of the topological protection. Microsoft did acknowledge that not all gates are topologically protected; for example, the so-called T-gate (a non-Clifford operation) would still be “noisy” and require supplemental techniques. In summary, Majorana 1 is a daring bet on a fundamentally different approach to quantum computing. After years of setbacks and skepticism, Microsoft’s latest results have started to convince the community that topological qubits might finally be real. If the claim stands, it’s a watershed moment: a new state of matter (topological superconductor) harnessed to create qubits that are naturally resilient. That could eventually translate to quantum processors with vastly higher effective performance, as error correction overhead is slashed. In the near term, Majorana 1 will be used internally for further research – it’s not yet solving any useful problems – but it lays a theoretical foundation that could leapfrog other technologies if it scales as hoped.
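Why the T gate is the odd one out follows from the defining property of Clifford gates, the only kind of gate that braiding Majoranas can produce: a Clifford gate maps every Pauli operator to another Pauli operator (up to a sign) under conjugation. The sketch below checks that property for H, S, and T with plain 2×2 matrices; it illustrates the general group-theoretic point, not anything specific to Majorana 1.

```python
import cmath
import math

ID = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]
Z = [[1, 0], [0, -1]]
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)], [1 / math.sqrt(2), -1 / math.sqrt(2)]]
S = [[1, 0], [0, 1j]]
T = [[1, 0], [0, cmath.exp(1j * math.pi / 4)]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]


def conjugate_by(u, p):
    """Return U P U^dagger."""
    return matmul(matmul(u, p), dagger(u))


def close(a, b, tol=1e-12):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))


def is_signed_pauli(m):
    """True if m equals +/-I, +/-X, +/-Y or +/-Z (Hermitian Paulis only need real signs)."""
    return any(close(m, [[s * x for x in row] for row in p]) for p in (ID, X, Y, Z) for s in (1, -1))


def is_clifford(u):
    """A single-qubit gate is Clifford iff it maps X and Z to signed Paulis under conjugation."""
    return all(is_signed_pauli(conjugate_by(u, p)) for p in (X, Z))


print(is_clifford(H), is_clifford(S), is_clifford(T))
```

Here `conjugate_by(T, X)` comes out as (X + Y)/√2, a superposition of Paulis rather than a single one, which is why a T gate needs supplemental techniques (such as magic-state preparation) on top of braiding.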

For more information see: Microsoft’s Majorana-Based Quantum Chip – Beyond the Hype.

Google Willow: A 105-Qubit Transmon Processor Achieving Error-Correction Thresholds

Google’s Willow quantum chip is the latest in the line of superconducting processors from the Google Quantum AI team, and it comes with two major achievements:

- it significantly boosts coherence and fidelity such that adding more qubits actually decreases the overall error rate (crossing the coveted error-correction threshold), and

- it demonstrated an ultra-high-complexity computation in minutes that would take a classical supercomputer an astronomically long time.

Willow contains 105 superconducting qubits of the transmon variety, arranged in a 2D lattice suitable for the surface code error-correcting scheme. The qubits are connected by couplers in a layout similar to Google’s previous 54-qubit Sycamore processor, but with notable architectural improvements. One key upgrade is that Willow retains the frequency tunability of qubits and couplers (as Sycamore had) for flexible interactions, while dramatically improving coherence times: the average qubit energy-relaxation time T1 on Willow is about 68 µs, compared to ~20 µs on Sycamore. This ~3× improvement in coherence is partly due to better materials and fabrication (Google cites a new qubit design and mitigation of noise sources) and partly due to improved calibration techniques (leveraging machine learning and more efficient control electronics). In tandem, two-qubit gate error rates were roughly halved compared to the Sycamore generation: if Sycamore’s CZ gates had error rates on the order of 0.6%, Willow’s are around 0.3% or better (single-qubit gates have even higher fidelity). These numbers put Willow in the regime of the best superconducting qubits reported in any lab to date.

Crucially, this hardware boost allowed Google to demonstrate scalable quantum error correction for the first time. Using the surface code (a topological quantum error-correcting code on a 2D grid of qubits), the team encoded a logical qubit into increasingly large codes: a 3×3 patch of data qubits (distance-3 code), a 5×5 patch (distance 5), and a 7×7 patch (distance 7). With each increase in code distance, they observed an exponential suppression of error rates: each step up reduced the logical error rate by about a factor of 2 (a 7×7 code’s logical error was ~4× lower than that of a 3×3 code). At the largest size (49 data qubits, plus 48 measurement qubits, encoding 1 logical qubit), the logical qubit’s lifetime exceeded that of the best individual physical qubit on the chip. This means the logical qubit is actually higher quality than any bare physical qubit, a landmark known as “beyond break-even” quantum error correction. In the language of error correction, Google had crossed the fault-tolerance threshold: their operations are in a regime “below threshold” where adding more qubits to the code yields net fewer errors. This is the first time a superconducting quantum system has definitively shown such behavior in real time (previous attempts either saw no improvement with code size or only marginal improvement). For more in-depth information about Google’s error correction achievement, see the Nature paper accompanying the announcement: Quantum error correction below the surface code threshold. Achieving this required not only excellent qubits, but also real-time decoding and feedback. Google implemented fast error syndrome extraction cycles and decoding algorithms (with help from classical compute and custom ML algorithms) that can identify and correct errors on the fly, faster than they accumulate. In the Nature article published alongside Willow, they report that with the 7×7 code, the logical error probability per cycle was cut in half compared to the 5×5 code, firmly establishing that they are operating in the scalable regime. In summary, Google Willow is the first platform where quantum error correction “works” in practice, reaching the point where bigger truly means better in terms of qubit arrays.
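The scaling law described above can be written as ε_d = ε_3 / Λ^((d−3)/2), where Λ is the error-suppression factor gained each time the code distance grows by 2. A minimal sketch, assuming Λ ≈ 2 as reported here and normalizing the distance-3 error to 1, reproduces the ~4× improvement from distance 3 to distance 7 and counts the qubits in a rotated surface-code patch (d² data qubits plus d² − 1 measurement qubits). It ignores any extra qubits a real device may dedicate to tasks such as leakage removal.

```python
def logical_error_per_cycle(d: int, eps_ref: float, d_ref: int, lam: float) -> float:
    """Exponential suppression model: every +2 in code distance divides the error by lam."""
    return eps_ref / lam ** ((d - d_ref) / 2)


def surface_code_qubits(d: int) -> tuple[int, int]:
    """(data, measure) qubit counts for a distance-d rotated surface code patch."""
    return d * d, d * d - 1


LAM = 2.0    # suppression factor per distance step (approximate value quoted in the text)
EPS_3 = 1.0  # distance-3 logical error, normalized to 1

for d in (3, 5, 7):
    data, measure = surface_code_qubits(d)
    rel = logical_error_per_cycle(d, EPS_3, 3, LAM)
    print(f"d={d}: {data} data + {measure} measure qubits, relative logical error {rel:.2f}")
```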

Another headline from Google’s announcement was a demonstration of raw computational power. Willow executed a random circuit sampling benchmark of unprecedented size, completing it in about 4.5 minutes. Google claims that the Frontier supercomputer (at ~1.35 exaflops, one of the world’s fastest) would take on the order of 10^25 years to perform the equivalent task. This massive separation between quantum and classical far exceeds the 2019 “quantum supremacy” demonstration, where the task was estimated to take 10,000 years on an IBM supercomputer. In fact, after optimizations, that 2019 task was brought down to a matter of days on classical machines, but Google notes that for this new experiment, even accounting for future classical improvements, the quantum speedup is growing “at a double exponential rate” as circuit size increases. The benchmark involved entangling all 105 qubits in a complex pattern and performing many layers of random two-qubit gates, a test that is both computationally hard for classical simulation and pushes the quantum chip to its limits. The ability to run such a large circuit (5,000+ two-qubit gate operations in total) was enabled by Willow’s lower physical error rates; the benchmark itself runs directly on the physical qubits, without error correction. By comparison, IBM had run a similarly large circuit (2,880 two-qubit gates) in 2023 on its 127-qubit Eagle, but required heavy error mitigation to get a valid result. Google’s milestone indicates that quantum supremacy has been re-affirmed on a larger scale, and now with a machine that is closer to being error-corrected. It is a proof that increased qubit count plus error reduction can yield computational results vastly beyond classical reach, reinforcing confidence that scaling up will unlock useful quantum advantage.
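One rough way to see why such circuits are hard to simulate classically is the memory a brute-force simulator would need to hold the full quantum state: 2^n complex amplitudes for n qubits. The sketch below assumes 16 bytes per amplitude (double-precision complex). Real classical estimates rely on cleverer methods such as tensor-network contraction, so this illustrates the exponential growth only; it does not reproduce the 10^25-year figure.

```python
BYTES_PER_AMPLITUDE = 16  # complex128: two 8-byte floats (assumption: double precision)


def statevector_bytes(n_qubits: int) -> int:
    """Memory needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


# 53: qubits used in Sycamore's 2019 experiment; 83: Zuchongzhi 3.0's sampling task; 105: all of Willow.
for n in (53, 83, 105):
    print(f"{n} qubits: {statevector_bytes(n):.2e} bytes")
```

Even the 53-qubit case already needs about 2^57 bytes (roughly 144 petabytes); every additional qubit doubles it.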

From an architectural standpoint, Willow doesn’t introduce radical new qubit types (it’s still a transmon chip), but it showcases incremental advances coalescing into a big leap. The coherence improvements (68 µs T1, ~50–70 µs T2) came from material upgrades like better substrate and surface treatment, and possibly from using indium bump-bonds (though unconfirmed) to reduce loss. The tunable couplers and qubits allow flexibility in isolating qubits when idle and reducing crosstalk (a technique IBM also employs in its chips), which contributes to lower error rates. Additionally, Google employed advanced control software: automated calibrations, machine learning for fine-tuning pulses, and a reinforcement learning agent to optimize error correction performance. The integration of all these pieces is what allowed Willow to hit the below-threshold regime. One challenge ahead for Google is how to continue scaling qubit count while keeping errors down. Their roadmap, like others, will require modularity (possibly linking multiple 100+ qubit chips or developing larger wafers), since simply doubling qubits on one die could re-introduce noise and fabrication difficulties. But the surface-code approach has now been validated: if each module can have, say, 1,000 qubits with error rates of a few tenths of a percent, one can start assembling logical qubits of very high quality by using enough physical qubits. Google’s achievement with Willow gives a clear quantitative target: a logical error of ~10^-3 (0.1%) per error-correction cycle was achieved with a distance-7 code (49 data qubits). Pushing that error down further to, say, 10^-6 will require several hundred to over a thousand physical qubits per logical qubit, depending on how much the error-suppression factor per distance step improves beyond Willow’s ~2. Willow is the stepping stone demonstrating that the scaling curve holds as expected.
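How many physical qubits a target logical error rate needs depends strongly on the suppression factor Λ gained per distance step. The sketch below assumes a starting point of ~10^-3 per cycle at distance 7 (as quoted above), a rotated surface code with 2d² − 1 qubits, and a few hypothetical values of Λ. It is a back-of-the-envelope projection, not Google’s roadmap.

```python
def required_distance(target: float, eps_ref: float = 1e-3, d_ref: int = 7, lam: float = 2.0) -> int:
    """Smallest odd distance d >= d_ref with eps_ref / lam**((d - d_ref) / 2) <= target."""
    if lam <= 1.0:
        raise ValueError("lam must exceed 1 (below-threshold operation)")
    d = d_ref
    while eps_ref / lam ** ((d - d_ref) / 2) > target:
        d += 2
    return d


def physical_qubits(d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


for lam in (2.0, 3.0, 4.0):  # hypothetical suppression factors
    d = required_distance(1e-6, lam=lam)
    print(f"lambda={lam}: distance {d}, {physical_qubits(d)} physical qubits per logical qubit")
```

Under these assumptions, Λ ≈ 2 needs distance 27 (1,457 qubits), while Λ ≈ 4 reaches 10^-6 at distance 17 (577 qubits), so improving physical error rates pays off directly in overhead.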

In short, Google’s Willow announcement is a strong validation of the transmon/surface-code path to fault tolerance: it showed that with improved hardware and clever coding, one can now suppress errors exponentially with system size. This moves the field closer to practical quantum error correction, and along with it, closer to running useful algorithms reliably on a quantum machine.

For more information see my summary of the announcement: Google Announces Willow Quantum Chip.

IBM Heron R2: Tunable-Coupler Architecture and Enhanced Quantum Volume

IBM’s announcement of the Heron R2 processor is an evolution of their superconducting quantum hardware focused on scaling up qubit count while maintaining high performance. Heron R2 contains 156 qubits arranged in IBM’s signature heavy-hexagonal lattice topology: a hexagonal lattice with an extra qubit placed on each edge, so that each qubit connects to at most 3 neighbors, which IBM uses to reduce crosstalk and enable efficient error-correcting codes. The Heron family is notable for introducing tunable couplers between every pair of connected qubits, a feature first seen in Heron R1 (a 133-qubit chip debuted in late 2023). In R2, IBM increased the qubit count to 156 by extending the lattice and incorporating lessons from their 433-qubit Osprey system’s signal delivery improvements. The tunable coupler design allows two qubits’ interaction to be turned on or off (and adjusted in strength) dynamically, which greatly suppresses unwanted coupling and frequency collisions when multiple operations are happening in parallel. This effectively eliminates a lot of “cross-talk” errors that plague fixed-coupling architectures. According to IBM, Heron demonstrated a 3–5× improvement in device performance metrics compared to their previous generation (the 127-qubit Eagle) while “virtually eliminating” cross-talk. Specific numbers from Heron R1 (133 qubits) showed a quantum volume (QV) of 512 (a measure combining number of qubits and gate fidelity), which was a new high for IBM at that time. Heron R2 likely pushes that even further.
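Quantum volume, as IBM defines it, is 2^n for the largest n at which n-qubit, depth-n random “model circuits” produce heavy outputs (bitstrings whose ideal probability is above the median) more than two-thirds of the time; a QV of 512 therefore corresponds to n = 9. The sketch below shows only the core heavy-output check on a hypothetical toy distribution. The full protocol also averages over many random circuits and requires a statistical confidence margin, which is omitted here.

```python
import statistics


def heavy_outputs(ideal_probs: list[float]) -> set[int]:
    """Indices of bitstrings whose ideal probability is strictly above the median."""
    median = statistics.median(ideal_probs)
    return {i for i, p in enumerate(ideal_probs) if p > median}


def heavy_output_fraction(samples: list[int], ideal_probs: list[float]) -> float:
    """Fraction of measured samples that land in the heavy set."""
    heavy = heavy_outputs(ideal_probs)
    return sum(1 for s in samples if s in heavy) / len(samples)


def passes(fraction: float, threshold: float = 2 / 3) -> bool:
    """Simplified pass criterion (the real protocol also needs a confidence interval)."""
    return fraction > threshold


def quantum_volume(n: int) -> int:
    return 2 ** n


# Toy 2-qubit example: ideal probabilities for bitstrings 00, 01, 10, 11 (hypothetical).
probs = [0.1, 0.4, 0.2, 0.3]
shots = [1, 3, 3, 0, 1, 2, 3, 1]  # hypothetical measured bitstring indices
frac = heavy_output_fraction(shots, probs)
print(heavy_outputs(probs), frac, passes(frac))
```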

The Heron R2 chip also introduced a new “two-level system” (TLS) mitigation technique in hardware. TLS defects in the materials (like microscopic two-level fluctuators at surfaces or interfaces) are a known cause of qubit decoherence and sporadic errors. IBM built circuitry or calibration procedures into Heron R2 to detect and mitigate the impact of TLS noise on the qubits. The result is improved stability of qubit frequencies and, by extension, better gate fidelity and coherence times. While IBM hasn’t publicly quoted average T1/T2 for Heron R2, their emphasis on TLS mitigation suggests each qubit’s coherence is more consistently near the upper limits (potentially several hundred microseconds). They also improved the readout and reset processes (IBM has been developing fast, high-fidelity qubit readout and qubit reuse via reset to speed up circuits). In terms of integration with software, Heron R2 is delivered via IBM’s Quantum Cloud and is fully compatible with Qiskit runtime improvements. In fact, IBM highlighted that by combining the Heron R2 hardware with software advances like dynamic circuits and parametric compilation, they achieved a sustained performance of 150,000 circuit layer operations per second (CLOPS) on this system. This is a dramatic increase in circuit execution speed – by comparison, in 2022 their systems ran ~1k CLOPS, and by early 2024 around 37k CLOPS. Faster CLOPS means researchers can execute deeper and more complex algorithms within the qubits’ coherence time or gather more statistics in less wall-clock time.
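CLOPS, as IBM proposed the benchmark, counts how many circuit layers a system executes per second when running M parameterized circuit templates, each updated K times and sampled S times, with D = log2(QV) layers per circuit. The sketch below assumes the commonly cited settings M = 100, K = 10, S = 100 and a hypothetical D = 9. It only shows how the CLOPS figures quoted above translate into wall-clock time for one fixed workload.

```python
def clops(templates: int, updates: int, shots: int, layers: int, seconds: float) -> float:
    """Circuit layer operations per second: total layers executed / elapsed wall-clock time."""
    return templates * updates * shots * layers / seconds


def workload_seconds(clops_rate: float, templates: int = 100, updates: int = 10,
                     shots: int = 100, layers: int = 9) -> float:
    """Time to finish the fixed benchmark workload at a given CLOPS rate."""
    return templates * updates * shots * layers / clops_rate


for label, rate in (("2022", 1_000), ("early 2024", 37_000), ("Heron R2", 150_000)):
    print(f"{label}: {workload_seconds(rate):.1f} s")
```

The same workload that would take 15 minutes at ~1k CLOPS finishes in about 6 seconds at 150k CLOPS, which is what makes large parameter sweeps and statistics-hungry mitigation practical.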

The most concrete evidence of Heron R2’s advancement was IBM’s announcement that it can reliably run quantum circuits with up to 5,000 two-qubit gates. This is nearly double the 2,880 two-qubit gates used in IBM’s 2023 “quantum utility” experiment on the Eagle chip. In that experiment (published in Nature), IBM showed that a complex many-qubit circuit could be executed with enough fidelity – using error mitigation – to get a meaningful result beyond the reach of brute-force classical simulation. Now, with Heron R2, circuits almost twice as long can be run accurately without custom hardware tweaks, using the standard Qiskit toolchain. In other words, Heron R2 pushed IBM’s quantum processors firmly into the “utility scale” regime, where they can explore algorithms that are not toy models. Importantly, this 5,000-gate capability was achieved by both hardware improvements (lower error rates per gate) and software error mitigation. IBM mentions a “tensor error mitigation (TEM) algorithm” in Qiskit that was applied. TEM is a method to reduce errors in circuit outputs via classical post-processing and knowledge of the noise, which IBM integrated into its runtime. So Heron R2, paired with such techniques, can execute long circuits 50× faster than was possible a year before, and with enough accuracy that the outputs are trustworthy.

In terms of raw metrics: IBM’s median two-qubit gate error on Heron R2 is not explicitly stated in the announcement, but given the performance, it is likely on the order of ~0.5% or better, with some qubits achieving 99.5–99.7% fidelity. Single-qubit gates are usually above 99.9%. The heavy-hex topology offers slightly less connectivity than a full square grid; its degree-3 layout helps suppress frequency collisions and crosstalk, and it suits error-correcting codes designed for that connectivity, although the standard surface code (whose checks each involve four data qubits) needs extra overhead to run on it. IBM has been testing small-distance surface codes on previous chips and will presumably do so on Heron as well. However, IBM’s near-term strategy has also emphasized error mitigation and “quantum utility” over full error correction, meaning they try to find ways to get useful results from the hardware at hand by combining it with classical processing. Heron R2 is a continuation of that philosophy: improve the hardware just enough to push the envelope of what can be done in the NISQ era, while laying groundwork for truly fault-tolerant hardware in the future. The Heron architecture (with tunable couplers) is in fact the template for IBM’s upcoming larger and modular systems. IBM plans to connect multiple Heron chips via flexible interconnects and a special coupler (codenamed Flamingo) to scale to larger effective processors. They already demonstrated a prototype of this modular approach, showing that two Heron chips could be linked with an entangling gate across a ~meter distance with only minor loss. So Heron R2 is not just a stand-alone 156-qubit device, but also a module in IBM’s System Two quantum computer architecture, which envisions combining modules to reach thousands of qubits.

In summary, IBM’s Heron R2 announcement is about refinement and integration: more qubits, better noise control (tunable couplers + TLS mitigation), and faster software all coming together. The result is a quantum processor that significantly extends IBM’s ability to run complex algorithms (approaching the 100×100 qubit-depth challenge they posed). While it may not boast a fundamentally new qubit type or a dramatic physics breakthrough, it is a critical incremental step. It shows that IBM can scale up without sacrificing performance, which is essential on the march toward a fault-tolerant machine.

Zuchongzhi 3.0: China’s Breakthrough in Superconducting Quantum Hardware

Chinese researchers have officially unveiled Zuchongzhi 3.0, a 105-qubit superconducting quantum processor that sets a new benchmark in computational speed and scale. In its debut demonstration, the chip executed an 83-qubit random circuit sampling task (32 layers deep) in only a few minutes, producing results that would take a state-of-the-art classical supercomputer on the order of 6.4 billion years to simulate. This represents an estimated 10^15-fold speedup over classical computation and roughly a one-million-fold (6 orders of magnitude) improvement over Google’s previous Sycamore experiment. The feat, published as a cover article in Physical Review Letters, reinforces China’s advancement in the race for quantum computational advantage, marking the strongest quantum advantage achieved to date on a superconducting platform.
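The quoted ~10^15 speedup follows from a simple unit conversion. The sketch assumes a Julian year of 365.25 days and treats “a few minutes” as a hypothetical range of 1 to 10 minutes, since the exact runtime isn’t given here.

```python
import math

MINUTES_PER_YEAR = 365.25 * 24 * 60   # Julian year, an assumption for the conversion
CLASSICAL_YEARS = 6.4e9               # classical estimate quoted in the text


def speedup(quantum_minutes: float) -> float:
    """Ratio of the classical runtime estimate to the quantum runtime."""
    return CLASSICAL_YEARS * MINUTES_PER_YEAR / quantum_minutes


for minutes in (1, 3, 10):  # hypothetical stand-ins for "a few minutes"
    s = speedup(minutes)
    print(f"{minutes} min: speedup {s:.2e} (about 10^{math.log10(s):.1f})")
```

Across that whole range the ratio stays between roughly 10^14.5 and 10^15.5, consistent with the “10^15-fold” figure.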

Zuchongzhi 3.0 features significant upgrades from its 66-qubit predecessor. The new processor integrates 105 transmon qubits in a two-dimensional grid with 182 couplers (interconnections) to enable more complex entanglement patterns. It boasts a longer average coherence time of about 72 µs and high-fidelity operations (approximately 99.9% for single-qubit gates and 99.6% for two-qubit gates), achievements made possible by engineering improvements such as noise reduction in the circuit design and better qubit packaging. These technical innovations allow Zuchongzhi 3.0 to run deeper quantum circuits than earlier chips and to support initial quantum error-correction experiments: the team is working on surface-code memory at code distance 7 and aims to push to larger code distances, highlighting the new capabilities enabled by the processor’s improved stability and scale.

Expanded Technical Comparison

Each of these five quantum computing approaches brings something unique to the table. In this section, I’ll try to compare their technical metrics and how they contribute to the overarching goal of fault-tolerant quantum computation.

Qubit Type and Architecture

The five quantum processors employ three distinct qubit technologies.

AWS Ocelot

AWS Ocelot uses superconducting cat qubits, where each qubit is encoded in two coherent states of a microwave resonator. This design intrinsically suppresses certain errors by biasing them (bit-flip errors are strongly suppressed). Ocelot’s chip integrates 14 core components: 5 cat-qubit resonators serving as data qubits, 5 buffer circuits to stabilize these oscillator qubits, and 4 superconducting ancilla qubits to detect errors on the data qubits. Notably, it’s a hardware-efficient logical qubit architecture: only 9 physical qubits yield one protected logical qubit thanks to the cat qubit’s built-in error bias. The chip is manufactured with standard microelectronics processes (using tantalum on silicon resonators) for scalability. In essence, AWS has taken a transmon-based circuit and augmented it with bosonic oscillator qubits to realize a bias-preserving quantum memory.

Microsoft Majorana-1

In contrast, Microsoft Majorana-1 uses an entirely different approach: topological qubits based on Majorana zero modes (MZMs). These qubits are realized in a special “topological superconductor” formed in indium arsenide/aluminum nanowires at cryogenic temperatures. Each qubit (a so-called tetron) consists of a pair of nanowires hosting four MZMs in total (two MZMs per wire, at the ends). Quantum information is stored non-locally in the parity of electron occupation across two MZMs, which makes it inherently protected from local noise. The Majorana qubits are manipulated through braiding operations or equivalent measurement-based schemes, rather than the gate pulses used for transmons. Majorana-1 is an 8-qubit prototype (meaning it can host 8 topological qubits) implemented as a 2D array of these nanowire-based devices. It’s the first processor to demonstrate this Topological Core architecture, which Microsoft claims can be scaled to millions of qubits on a chip if the approach proves out. The challenge of reading out a topological qubit’s state (since the information is “hidden” in a parity) is solved by a novel measurement mechanism: coupling the ends of the nanowire to a small quantum dot and probing with microwaves. The reflection of the microwave signal changes depending on whether the qubit’s parity is even or odd, allowing single-shot readout of the Majorana qubit’s state.

The remaining three chips – Google Willow, IBM Heron R2, and Zuchongzhi 3.0 – all use superconducting transmon qubits, but with different circuit architectures.

Google Willow

Google’s Willow is a 105-qubit superconducting processor that builds on Google’s prior Sycamore design. The qubits are laid out in a 2D planar grid with tunable couplers between neighboring qubits, forming a nearest-neighbor topology. Willow’s lattice is effectively a dense rectangular grid (15×7 array) with an average connectivity of ~3.5 couplings per qubit. (Each qubit interacts with 3 or 4 neighbors, facilitating two-qubit gates in parallel.) Google optimized Willow’s design for both high connectivity and low cross-talk, for example by using an iSWAP-like two-qubit gate that can be applied on many pairs simultaneously without excessive interference. The Willow chip is fabricated in a multi-layer process with superconducting aluminum circuits on silicon, and includes integrated microwave resonators for readout of each qubit.

IBM Heron R2

IBM Heron R2 is also a superconducting transmon processor, but IBM employs its signature heavy-hexagon lattice architecture. Heron R2 contains 156 transmon qubits arranged such that each qubit has at most 3 neighbors (a heavy-hex lattice). This geometry deliberately “prunes” the connectivity relative to a square grid in order to reduce cross-talk and correlated errors. Crucially, Heron uses tunable coupler elements between qubits. These couplers (based on additional Josephson junction circuits) can be activated to mediate two-qubit interactions or deactivated to effectively isolate qubits when no gate is intended. This tunable coupling architecture dramatically suppresses unwanted interactions and cross-talk during idle periods. The first revision Heron R1 had 133 qubits; the revised Heron R2 expanded to 156 qubits in the same architecture. Each qubit is coupled to a dedicated resonator for state readout, and the chip features advanced signal delivery (high-density flex wiring and packaging) to control so many qubits in parallel. IBM’s design emphasizes modularity: 156-qubit Heron chips are the building blocks for larger systems, and multiple Heron chips can be connected via microwave links in IBM’s Quantum System Two for scaling beyond a single die.

USTC Zuchongzhi 3.0

Zuchongzhi 3.0, developed by USTC in China, likewise consists of superconducting transmon qubits in a planar array. It has 105 qubits laid out in a 2D rectangular lattice (15 rows × 7 columns). Unlike IBM’s heavy-hex, Zuchongzhi uses a relatively high connectivity: each qubit is coupled to up to 4 nearest neighbors (except on edges), similar to Google’s approach. In fact, the device is noted to be quite similar to Google’s Willow in terms of qubit count and connectivity. One distinctive aspect of Zuchongzhi 3.0’s architecture is its flip-chip integration: it is built from two bonded sapphire chips. One chip contains the 105 transmon qubits and 182 coupling circuits (the in-plane couplers between qubits), and a second chip mounts on top containing all the control wiring and readout resonators. This 3D integration separates the dense control interconnects from the qubit plane, reducing interference and allowing a more compact qubit layout. The transmons are implemented using superconducting tantalum/aluminum fabrication (USTC introduced tantalum material to improve quality factors). The use of flip-chip and novel materials in Zuchongzhi 3.0 shows a strong engineering focus on scaling up superconducting qubit count without sacrificing coherence.
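A quick sanity check on the connectivity figures: since every coupler joins two qubits, the average number of neighbors is 2E/N. The sketch below compares the reported 182 couplers with an idealized, fully populated 15×7 rectangular grid; the real coupler map may differ from that idealization, and the function names are my own.

```python
def grid_couplers(rows: int, cols: int) -> int:
    """Nearest-neighbor couplers in a fully populated rows x cols grid."""
    horizontal = rows * (cols - 1)   # couplers within each row
    vertical = cols * (rows - 1)     # couplers within each column
    return horizontal + vertical

def average_degree(num_qubits: int, num_couplers: int) -> float:
    """Each coupler contributes one neighbor to each of its two qubits."""
    return 2 * num_couplers / num_qubits

full_grid = grid_couplers(15, 7)        # idealized 15 x 7 grid
zcz3_degree = average_degree(105, 182)  # 182 couplers reported for Zuchongzhi 3.0
print(f"full 15x7 grid: {full_grid} couplers, {average_degree(105, full_grid):.2f} avg neighbors")
print(f"Zuchongzhi 3.0: 182 couplers, {zcz3_degree:.2f} avg neighbors")
```

The reported 182 couplers work out to about 3.47 neighbors per qubit, a little below the 188 couplers a complete 15×7 grid would have and close to the ~3.5 quoted for Willow. A heavy-hex lattice, by construction, caps every qubit at 3.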

Summary of Qubit Types and Architectures

Ocelot and Majorana-1 pursue radical qubit designs (bosonic and topological, respectively) to embed error resilience at the hardware level, whereas Willow, Heron R2, and Zuchongzhi 3.0 refine the well-established transmon approach with clever layout and coupling innovations. The transmon-based chips pack the largest qubit counts (105–156 qubits) and have demonstrated complex circuit benchmarks, while the cat-qubit and Majorana devices, though smaller in qubit number, represent proof-of-concept leaps toward fault-tolerant architectures built on novel physics.

Coherence Times (T1 and T2)

Coherence time is a critical metric for qubit performance, as it determines how long a qubit can retain quantum information. There are two relevant timescales: T1, the energy relaxation time (how long the qubit stays in an excited state before decaying to ground), and T2, the dephasing time (how long superposition phase coherence is maintained). In an ideal two-level qubit, the excited state population decays as $$e^{-t/T_1}$$, and off-diagonal elements of the qubit’s density matrix decay as $$e^{-t/T_2}$$. Longer T1 and T2 are better, allowing more operations to be performed before errors occur. The five chips show significant differences in coherence, largely stemming from their differing qubit implementations:

AWS Ocelot

The cat qubit architecture achieves an extreme asymmetry in coherence times. By encoding the qubit in a pair of oscillator states, Ocelot’s qubits exhibit a bit-flip time (playing the role of T1) exceeding 10 seconds in experimental demonstrations, several orders of magnitude longer than ordinary transmons. In other words, spontaneous transitions between the logical |0⟩ and |1⟩ states (which correspond to two distinct coherent states of the resonator) are extraordinarily rare. This 10+ s bit-flip time is 4–5 orders of magnitude larger than previous cat qubits and vastly larger than any transmon T1. However, this comes at a cost: the phase-flip coherence (T2) of the cat qubit is much shorter, because the environment can more easily cause dephasing between the two cat basis states. The reported phase-flip time is on the order of $$\sim 5\times10^{-7}$$ s (sub-microsecond) in current cat qubit experiments. In essence, Ocelot’s qubits have highly biased noise: bit-flip processes are suppressed by a factor of $$\sim 10^{7}$$ relative to phase flips. The buffer circuits in Ocelot are designed to prolong the phase coherence somewhat, but T2 is still much shorter than T1. This is acceptable since the system will use active error correction to handle phase errors. The key point is that Ocelot’s qubits rarely suffer bit-flips (bit-flip time ~10 s), but they lose phase coherence relatively quickly, meaning their superpositions need periodic stabilization.
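The size of that bias follows directly from the two timescales: error rates scale as 1/T, so the ratio of phase-flip rate to bit-flip rate is T_bit / T_phase. A minimal sketch using the figures quoted above (~10 s and ~0.5 µs), under the simplifying assumption that both behave as plain exponential lifetimes:

```python
import math

def flip_probability(window_s: float, lifetime_s: float) -> float:
    """P(at least one flip) in a window, for an exponential lifetime.
    -expm1(-x) equals 1 - exp(-x) but stays accurate when x is tiny."""
    return -math.expm1(-window_s / lifetime_s)

BIT_FLIP_TIME_S = 10.0     # bit-flip time quoted in the text
PHASE_FLIP_TIME_S = 5e-7   # phase-flip time quoted in the text

bias = BIT_FLIP_TIME_S / PHASE_FLIP_TIME_S   # phase-flip rate / bit-flip rate
window = 1e-6                                # a 1 microsecond window, chosen for illustration
p_bit = flip_probability(window, BIT_FLIP_TIME_S)
p_phase = flip_probability(window, PHASE_FLIP_TIME_S)
print(f"noise bias ~ {bias:.1e}")
print(f"in 1 us: P(bit flip) ~ {p_bit:.1e}, P(phase flip) ~ {p_phase:.2f}")
```

In a 1 µs window a bit flip is roughly a one-in-ten-million event while a phase flip is more likely than not, which is why the outer code only has to deal with Z errors.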

Microsoft Majorana-1

Majorana qubits are expected to be intrinsically long-lived because the qubit states are stored non-locally. In the initial Majorana-1 device, the team reported that external disturbances (e.g. quasiparticle poisoning events that flip the parity) are rare: roughly one parity flip per millisecond on average. We can treat this as an effective T1 on the order of 1 ms for the topological qubit, meaning the probability of a qubit spontaneously changing state is about $$10^{-3}$$ per microsecond. This is already an order of magnitude longer lifetime than typical superconducting qubits. It implies, for example, that during a 1 µs operation, the chance of an environment-induced parity flip is on the order of $$10^{-3}$$, which is still below the ~1% error threshold commonly cited for the surface code. As for T2, a topologically encoded qubit should be largely immune to many dephasing mechanisms since local phase perturbations do not change the global parity state. The practical T2 might be limited by residual coupling between the Majorana modes or fluctuations in the device tuning (e.g. magnetic field noise), but quantitative values have not been fully disclosed. If superpositions survive over timescales approaching the 1 ms parity lifetime, T2 would be on the order of hundreds of microseconds, but that remains to be confirmed with published coherence data. In essence, Majorana-1 shows millisecond-scale parity stability, a significant leap made possible by topological protection. (The first measurements had ~1% readout error, which is more a readout infidelity than a coherence limit, and the team sees paths to reduce that error further.)

Google Willow

Willow represents a big improvement in coherence over Google’s earlier Sycamore chip. Google reports mean coherence times of T1 ≈ 98 µs and T2 (CPMG) ≈ 89 µs for the qubits on Willow. This is about a 5× increase over Sycamore’s ~20 µs coherence. Such improvement was achieved by materials and design changes (for example, using improved fabrication to reduce two-level-system defects and better shielding to reduce noise). A T1 of 98 µs means an excited qubit loses energy with a time constant of nearly 0.1 ms, and T2 of 89 µs indicates phase coherence is maintained nearly as long. These figures are among the highest reported for large-scale superconducting chips. Crucially, Willow’s coherence is uniform across its 105 qubits – the averages imply most qubits are in that ballpark, which is important for multi-qubit operations. With ~100 µs coherence and gate times on the order of tens of nanoseconds, Willow’s qubits can undergo on the order of $$10^3$$ operations before decohering (in the absence of error correction). This long coherence was key to enabling Willow to run relatively deep circuits and even error-correction experiments successfully. It’s worth noting that Google employed dynamical decoupling and CPMG sequences (hence quoting T2,CPMG) to extend effective T2 to ~89 µs. The true T2 (Ramsey dephasing without echoes) might be lower, but through echo techniques they mitigate inhomogeneous dephasing.

IBM Heron R2

IBM’s Heron family also achieved substantial coherence times, though IBM often emphasizes other metrics (like gate fidelity) over raw T1/T2 in public disclosures. The heavy-hex design and introduction of a TLS mitigation layer in R2 specifically targeted improving coherence across the whole 156-qubit chip. By reducing two-level-system defects and material losses, IBM likely has many qubits with T1 on the order of 100 µs or more. In earlier IBM devices (e.g. 27-qubit Falcon chips), T1 ~ 50–100 µs and T2 ~ 50 µs were typical, and Heron R2, being a new revision, likely pushed T1 further. Indeed, one source notes Heron’s two-qubit gate fidelity improvements came partly from better coherence and stability across the chip due to TLS environment control. Without official IBM numbers here, we extrapolate: Heron R2’s coherence should be comparable to Willow’s. IBM’s focus on uniformity means few outlier qubits with very low T1, since they design for a stable floor of performance. IBM has reported individual qubits with T1 > 300 µs in the past, but for Heron’s large array a safer estimate is an average of roughly T1 ~100 µs and T2 ~100 µs, i.e. on the order of $$10^{2}$$ µs. This is supported by IBM’s introduction of new filtering and isolation techniques that “improve coherence and stability across the whole chip.” In summary, Heron R2’s transmons have high but perhaps slightly lower coherence than Willow’s best (IBM prioritizes reducing noise and cross-talk in other ways as well). The heavy-hex layout itself helps coherence by minimizing frequency crowding and interference. Thus, IBM’s coherence times are in the same ballpark as Google’s, on the order of $$10^{-4}$$ s, which leaves room for hundreds to a thousand gate operations per qubit within the coherence window.

USTC Zuchongzhi 3.0

The USTC team made coherence enhancement a major goal in Zuchongzhi 3.0, and they achieved a marked improvement over their prior 66-qubit chip. They report an average T1 ≈ 72 µs and T2 (CPMG) ≈ 58 µs across the 105 qubits. These values, while slightly below Google Willow’s, are still very high for a large device (Zuchongzhi 2.0 had significantly lower coherence, though exact figures are not given here). The team credits several engineering strategies for this improvement: adjusting qubit capacitor geometries to reduce surface dielectric loss, improving cryogenic attenuation to cut environmental noise (boosting T2), and using tantalum/aluminum fabrication to get better material quality. They also implemented an indium bump bonding process (flip-chip) which reduced interface contaminants and improved T1 by mitigating the Purcell effect and other loss channels. The results reflect a careful balancing act: despite adding more qubits and couplers, they still increased average coherence (T1 ~72 µs) relative to the previous generation. However, as the published comparison shows, Zuchongzhi 3.0’s T1/T2, while excellent, are a bit lower than Willow’s (98/89 µs), likely due to the slightly denser integration or materials differences. Still, with ~60–70 µs coherence, Zuchongzhi’s qubits can handle many operations within their coherence window. The team found that this coherence boost directly translated to lower gate errors (single-qubit and two-qubit error rates dropped accordingly).

Summary of Coherence Times

Majorana-1 and Ocelot offer novel forms of extended coherence: Majorana qubits with ~1 ms parity stability and cat qubits with an astounding ~10 s bit-flip time (but short T2). The transmon-based chips (Willow, Heron R2, Zuchongzhi 3.0) all achieve T1 and T2 on the order of $$10^{-5}$$ to $$10^{-4}$$ seconds (tens of microseconds to nearly 0.1 ms), which is state-of-the-art for superconducting qubits at their scale. These coherence times are long enough that, if combined with quantum error correction, qubit errors can be significantly suppressed. From a mathematical perspective, if a gate operation takes $$t_g$$ and a qubit has dephasing time $$T_2$$, the decoherence error per gate is roughly $$1 - \exp(-t_g/T_2) \approx t_g/T_2$$ for $$t_g \ll T_2$$. For example, Willow’s two-qubit gate time of ~42 ns on a qubit with $$T_2 \approx 89\,\mu\text{s}$$ yields an intrinsic dephasing error of ~$$42\,\text{ns}/89\,\mu\text{s} \approx 5\times10^{-4}$$, consistent with its measured two-qubit error on the order of $$10^{-3}$$ (since control errors and T1 contribute additional small error). Each platform has pushed coherence to a regime sufficient for complex multi-qubit experiments: the superconducting platforms do so with improved materials and design, while the cat and topological platforms do so via fundamentally different qubit encodings that eliminate or postpone certain decay channels.
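The per-gate estimate above is easy to reproduce. This sketch compares the exact expression with its small-$$t_g$$ approximation for the Willow numbers quoted in the text (42 ns gate, 89 µs T2) and counts how many such gates fit in one T2 window; it models dephasing only and ignores T1 and control errors.

```python
import math

def dephasing_error_per_gate(t_gate_s: float, t2_s: float) -> float:
    """Exact 1 - exp(-t_g / T2); expm1 keeps precision when t_g << T2."""
    return -math.expm1(-t_gate_s / t2_s)

T_GATE_S = 42e-9   # Willow two-qubit gate time quoted in the text
T2_S = 89e-6       # Willow mean T2 (CPMG) quoted in the text

exact = dephasing_error_per_gate(T_GATE_S, T2_S)
approx = T_GATE_S / T2_S            # linear approximation t_g / T2
gates_per_t2 = T2_S / T_GATE_S      # gates that fit in one T2 window
print(f"exact {exact:.2e}, approx {approx:.2e}, ~{gates_per_t2:.0f} gates per T2")
```

Both forms give about $$4.7\times10^{-4}$$, and roughly two thousand gates fit in one T2, in line with the ~$$10^3$$ operations per coherence window mentioned for Willow.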

Error Rates and Error Correction Techniques

Because quantum computations are so sensitive to errors, all these platforms employ strategies to minimize and correct errors at either the physical or logical level. We distinguish physical error rates (errors per gate or per time on individual qubits) from logical error rates (errors in encoded qubits that use many physical qubits with an error-correcting code). A key concept is the fault-tolerance threshold: if physical error rates can be pushed below some threshold (around 1% for many codes like the surface code), then increasing the code size will exponentially suppress the logical error rate. Each of the five chips approaches this challenge differently.

AWS Ocelot

Ocelot attacks the error problem by biasing the qubit noise so that one type of error is extremely rare. In Ocelot’s cat qubits, bit-flip errors (X errors) are essentially eliminated at the hardware level (bit-flip time ~10 s as noted). This means the physical error rate for bit-flips is very low: with a ~10 s bit-flip time, the probability of a bit-flip during a 1 µs operation is only about $$10^{-7}$$. The dominant remaining errors are phase flips (Z errors), which occur with much higher probability (phase coherence time ~0.5 µs). However, phase errors can be detected and corrected by a simpler code since bit-flips don’t occur to compound the problem. Ocelot essentially builds quantum error correction into the qubit architecture from the start. The 5 cat qubits plus 4 ancillas on the chip implement a small quantum error-correcting code that continuously stabilizes the logical qubit. Although AWS hasn’t publicly detailed the exact code, it is likely a repetition code or parity-check code across the cat qubits that detects phase flips. The result is a hardware-efficient logical qubit: AWS achieved one logical qubit from only 9 physical qubits, versus the thousands of physical qubits that a surface code would require to get similar logical stability.
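The text only says the outer code is likely a repetition code, so treat this as an assumption: if the 5 cat qubits form a phase-flip repetition code with 4 ancillas measuring neighboring parities, the qubit count and majority-vote logical error look like the sketch below. It assumes independent phase flips with probability p per data qubit and perfect syndrome extraction, which real hardware does not provide; p = 1% is an illustrative value, not an Ocelot measurement.

```python
from math import comb

def repetition_qubit_count(n_data: int) -> int:
    """n data qubits plus n - 1 ancillas, one per neighboring pair."""
    return 2 * n_data - 1

def repetition_logical_error(p: float, n_data: int) -> float:
    """Majority voting fails when more than half of the data qubits are flipped."""
    return sum(
        comb(n_data, k) * p**k * (1 - p) ** (n_data - k)
        for k in range(n_data // 2 + 1, n_data + 1)
    )

p = 0.01  # assumed per-qubit phase-flip probability per round
for n in (1, 3, 5):
    print(f"n={n}: {repetition_qubit_count(n)} qubits, "
          f"logical error {repetition_logical_error(p, n):.2e}")
```

Five data qubits and four ancillas give the 9 physical qubits cited for Ocelot, and each step up in code size suppresses the logical phase-flip rate by roughly another factor of p (up to combinatorial prefactors), provided bit flips stay negligible.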

In percentage terms, AWS claims Ocelot’s approach reduces the qubit overhead for error correction by up to 90%. This is a tremendous resource saving. The trade-off is that Ocelot’s current logical qubit is just a single qubit – error correction is used to keep that qubit stable, but the chip doesn’t perform multi-qubit logic yet. Still, demonstrating a logical qubit with 9 physical qubits is an error-correction breakthrough. The logical error rate achieved hasn’t been explicitly stated, but presumably the logical qubit has a dramatically longer lifetime than any single transmon. AWS also implemented the first noise-bias-preserving logic gates on the cat qubits. These are gates designed not to mix X and Z errors (so that a phase error in a cat qubit doesn’t accidentally cause a bit-flip). By “tuning out” certain error channels in the gate operations, they keep the error bias intact even during computations.

In summary, Ocelot’s strategy is to prevent most errors and then correct the rest. By combining the cat qubit (which inherently suppresses bit-flips) with a small outer code for phase errors, Ocelot’s logical qubit can operate in a regime where logical error per circuit is extremely low, potentially low enough for practical algorithms with far fewer qubits than other approaches would need.

Microsoft Majorana-1

Microsoft’s approach is to make qubits that are almost error-free at the physical level by using topological protection. In theory, a topological qubit in a perfect Majorana device would have zero bit-flip or phase-flip errors (aside from very infrequent non-local errors). In practice, Majorana-1 has shown that many of the usual error mechanisms are absent; for example, the qubit is unaffected by local noise that would disturb a conventional qubit. The only operation that is not topologically protected is the so-called T-gate (a $$\pi/4$$ phase gate), which requires introducing a non-topological resource (magic state injection). This means that while Clifford gates can be done essentially without error by braiding or measurement sequences, the T-gate will have some error that must be corrected via higher-level encoding. The Majorana qubit thus pushes most error correction overhead to a very high level (only needed for handling those T-gates). The measured performance so far: initial readout of the qubit had an error of ~1% per measurement, which can be improved by refining the quantum dot sensor, and parity flips occur roughly once per millisecond, as mentioned. If we interpret that in an error-per-operation sense, consider a Majorana qubit undergoing a sequence of operations each ~1 µs long: in 1 µs, the chance of a spontaneous error is ~$$10^{-3}$$ (since 1 µs is 1/1000 of the 1 ms effective T1). That is a low physical error probability, roughly an order of magnitude below the typical threshold (which is around $$10^{-2}$$).

So Majorana qubits operate firmly in the below-threshold regime at the single-qubit level. Microsoft’s plan is to leverage this by building logical qubits that require far fewer physical qubits. In the most optimistic projection, a million physical Majorana qubits could yield on the order of a million logical qubits, essentially one physical qubit per logical qubit, because each is already (almost) a perfect qubit. In practice, some small overhead will be needed for the T-gates: likely they will implement a lightweight error correction or distillation just for those operations. But the overhead is constant, not huge, because all other gates are protected. This is a qualitatively different scenario from, say, superconducting approaches where every operation on every qubit must be error-corrected by redundancy. The phrase used is that Majorana qubits could be “almost error-free” in operation. The Nature paper by Microsoft reported the single-shot parity readout that underpins these topological qubits (a big step), and the follow-up roadmap (on arXiv) lays out how to go from the current 8-qubit device to a scalable fault-tolerant machine.

In summary, Microsoft’s error correction philosophy is to build qubits that need far less correction. By achieving physical error rates below threshold (parity flips of roughly $$10^{-3}$$ per microsecond-scale operation), they sidestep the need for large quantum codes for most operations. They will still need to correct the rare errors (e.g. using repetition codes to catch any parity flips that do occur, and using magic state factories for T-gates), but the resource overhead is vastly smaller. This is why they claim their chip could solve industrial-scale problems “in years, not decades”: if the physics holds, they won’t have to wait for a million physical qubits just to get 100 logical qubits; they can use those physical qubits directly as useful qubits.
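The per-operation arithmetic for Majorana-1 can be checked with a simple Poisson model. This sketch assumes parity flips arrive independently at ~1 per millisecond and that each operation takes ~1 µs, the figures used in the text; the 1 µs duration is an illustrative assumption, not a measured Majorana-1 gate time.

```python
import math

def poisson_error_probability(op_time_s: float, event_rate_per_s: float) -> float:
    """Probability of at least one random error event during an operation."""
    return -math.expm1(-event_rate_per_s * op_time_s)

PARITY_FLIP_RATE = 1e3   # assumed: ~1 parity flip per millisecond
OP_TIME_S = 1e-6         # assumed: ~1 microsecond per operation
THRESHOLD = 1e-2         # ~1% threshold used throughout this article

p_err = poisson_error_probability(OP_TIME_S, PARITY_FLIP_RATE)
print(f"error per operation ~ {p_err:.1e}, below threshold: {p_err < THRESHOLD}")
print(f"margin below threshold: {THRESHOLD / p_err:.0f}x")
```

The result is about $$10^{-3}$$ per operation, roughly ten times below a 1% threshold.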

Google Willow

Google’s approach stays within the conventional transmon qubit paradigm but pushes the physical error rates low enough to meet the threshold and then uses standard quantum error correction (QEC) codes. On Willow, the physical gate error rates are impressively low: single-qubit error ≈0.035% and two-qubit error ≈0.14% on average. These error rates (roughly $$3.5\times10^{-4}$$ and $$1.4\times10^{-3}$$ per gate respectively) are below or on par with the surface code threshold (~1% for a standard surface code, potentially a few tenths of a percent for more realistic noise models). This means Willow’s qubits are good enough that adding redundancy will reduce errors, not amplify them. Google explicitly demonstrated this: they implemented quantum error correction on Willow and on a smaller 72-qubit device, achieving landmark results. In a recent experiment, they realized a distance-5 surface code on the 72-qubit device and a distance-7 surface code on the 105-qubit Willow. The logical error rate of the distance-7 code was significantly lower than that of the distance-5, confirming the threshold condition has been met. In fact, Google reported that their distance-5 logical qubit reached “break-even” (the logical qubit’s error rate was about equal to the best physical qubit’s error rate), and the distance-7 logical qubit lasted twice as long as the best physical qubit on the chip. This is a historic milestone: it’s the first time a logical qubit outperformed the physical components in a solid-state device. Mathematically, in the surface code the logical error $$p_{\text{logical}}$$ should scale approximately as $$p_{\text{logical}} \approx A \left(\frac{p_{\text{phys}}}{p_{\text{thresh}}}\right)^{(d+1)/2}$$ for large code distance $$d$$. Google observed exactly this exponential suppression: as they went from distance 3 to 5 to 7, the logical error dropped in line with an error-per-gate of ~0.1% being below threshold.
They also ran repetition codes up to distance 29 (using many qubits for a simple linear code) and saw logical error decreasing until very rare correlated error bursts (like cosmic ray hits) set an eventual floor at about one error per hour for the largest code. Those correlated events (which cause simultaneous errors in many qubits) are non-Markovian and weren’t corrected by the code; Google mitigated some by improving chip fabrication (gap engineering to reduce radiation-induced error spikes by 10,000× was mentioned in a commentary). For common error sources, though, Willow’s QEC worked as expected.
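To see what the scaling formula implies, here is a minimal sketch that evaluates it for a few code distances. The prefactor A = 0.1 and both physical error rates are illustrative assumptions, not Google’s fitted values; the point is the qualitative behavior on either side of threshold.

```python
def surface_code_logical_error(p_phys: float, p_thresh: float, d: int,
                               prefactor: float = 0.1) -> float:
    """Heuristic p_L ~ A * (p_phys / p_thresh) ** ((d + 1) / 2) for odd distance d."""
    return prefactor * (p_phys / p_thresh) ** ((d + 1) / 2)

P_THRESH = 1e-2                # ~1% threshold
DISTANCES = (3, 5, 7)
for p_phys in (1e-3, 1.5e-2):  # one value below threshold, one above (both assumed)
    row = [surface_code_logical_error(p_phys, P_THRESH, d) for d in DISTANCES]
    print(f"p_phys = {p_phys:.1e}: "
          + ", ".join(f"d={d}: {pl:.1e}" for d, pl in zip(DISTANCES, row)))
```

Below threshold, each increase of d by 2 multiplies the logical error by $$p_{\text{phys}}/p_{\text{thresh}}$$ (0.1 here); above threshold the same step makes things worse, which is why crossing threshold is the milestone. The heuristic is only meaningful while the result stays well below 1.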

The upshot is that Google has demonstrated fault-tolerant operation in a prototype form: they can store quantum information longer in a logical qubit than any single physical qubit can hold it. The logical error per cycle in their distance-7 code was about 0.143% ($$1.43\times10^{-3}$$), and the logical qubit’s lifetime exceeded that of the best physical qubit on the chip by a factor of about 2.4. As they improve physical qubit fidelity further and scale to larger codes (distance 11, etc.), the logical error should shrink exponentially. Google uses the surface code (a 2D topological code) for these experiments, which requires a 2D array of qubits with nearest-neighbor gates, exactly what Willow provides. They also built a custom high-speed decoder to keep up with the ~MHz-scale cycle time of the code. In summary, Willow’s error correction technique is the traditional one: encode logical qubits in many physical qubits (the distance-7 surface code used 49 data qubits plus 48 measure qubits, 97 in total) and perform repeated syndrome measurements to correct errors in real time.
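The qubit budget for a rotated surface code is simple to tabulate: d·d data qubits plus d·d − 1 measure qubits. The sketch below assumes that standard rotated layout; it counts qubits only and says nothing about wiring or readout resources.

```python
def rotated_surface_code_qubits(d: int) -> dict:
    """Qubit counts for a distance-d rotated surface code (d odd)."""
    data = d * d            # one data qubit per lattice site
    measure = d * d - 1     # X- and Z-type stabilizer ancillas
    return {"data": data, "measure": measure, "total": data + measure}

for d in (3, 5, 7):
    counts = rotated_surface_code_qubits(d)
    print(f"d={d}: {counts['data']} data + {counts['measure']} measure = {counts['total']} qubits")
```

Distance 5 needs 49 qubits, which fits on the 72-qubit device, and distance 7 needs 97, which fits on the 105-qubit Willow.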

What’s important is Willow crossed the threshold: physical two-qubit error 0.14% < 1% means logical errors can be suppressed exponentially. So unlike previous devices that were “too noisy to correct,” Willow’s qubits are good enough to benefit from QEC. This opens the path to scaling – though a lot more qubits will be needed to do something like run a fault-tolerant algorithm, Google has proven the concept on actual hardware.

IBM Heron R2

IBM has also been steadily reducing physical error rates, though at the time of Heron R2’s debut, IBM had not publicly shown a logical qubit beating physical qubits. IBM’s two-qubit gate error on Heron is around 0.3% (99.7% fidelity) on average, and single-qubit errors are on the order of 0.03% (99.97% fidelity). These are comparable to Google’s numbers, albeit slightly higher two-qubit error. This places IBM right around the threshold regime as well. IBM’s strategy for error correction revolves around the surface code in the future, but in the near term they have focused on error mitigation and “quantum utility” using uncorrected qubits. Because Heron R2 can execute circuits with thousands of two-qubit gates reliably (see Benchmarking section), IBM can attempt algorithms with shallow depths or use techniques like zero-noise extrapolation to mitigate errors rather than fully correcting them. That said, IBM’s roadmap explicitly includes scaling up to error-corrected quantum computing. They have been developing the software and classical infrastructure for QEC (e.g., fast decoders, as well as investigating hexagonal lattice variants of the surface code that map well to heavy-hex qubit layout). The heavy-hex lattice is compatible with a rotated surface code, albeit with some boundary adjustments due to degree-3 connectivity. IBM has argued that heavy-hex actually reduces the overhead for the surface code by cutting the number of connections that need to be managed, thereby potentially improving threshold behavior by reducing correlated errors.
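Zero-noise extrapolation can be sketched in a few lines: run the same circuit at deliberately amplified noise levels (for example by gate folding), then extrapolate the measured expectation values back to zero noise. This is a generic Richardson-style extrapolation, not IBM’s production implementation; the exponential noise model and the scale factors are assumptions chosen for illustration.

```python
import math

def richardson_extrapolate(scales, values):
    """Evaluate the Lagrange polynomial through (scale, value) points at scale = 0."""
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= s_j / (s_j - s_i)   # Lagrange basis polynomial L_i evaluated at 0
        estimate += weight * v_i
    return estimate

IDEAL = 1.0                     # assumed noiseless expectation value
scales = [1.0, 2.0, 3.0]        # noise amplification factors (1 = native noise)
measured = [IDEAL * math.exp(-0.1 * s) for s in scales]   # toy noise model
mitigated = richardson_extrapolate(scales, measured)
print(f"raw {measured[0]:.4f} -> mitigated {mitigated:.4f} (ideal {IDEAL})")
```

In this toy model the raw value of about 0.905 is pulled back to about 0.999. Real data also carries shot noise, which the extrapolation weights amplify, one reason mitigation does not scale the way error correction does.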

The tunable couplers in Heron help suppress cross-talk errors, which means errors are more local and stochastic – an assumption underlying most QEC codes. For example, when qubits are idle, the couplers are off, greatly reducing unintended two-qubit error (which can otherwise create correlated errors that are harder for QEC to handle).

Additionally, IBM introduced “two-level system (TLS) mitigation” in R2, which addresses a specific noise source (spurious defects interacting with qubits). By stabilizing the TLS environment, they reduce fluctuations that could cause multiple qubits to err at once.

These advances are crucial to approach the fault-tolerance threshold with a large chip. While IBM hasn’t announced a qubit-level logical encoding demo like Google did, they have done smaller QEC experiments in the past (e.g., on 5-qubit devices they showed repetition code and small Bacon-Shor code error detection). We can expect IBM to attempt a logical qubit on a bigger system soon. In IBM’s vision, error correction will be integrated with a modular architecture; they talk about concatenated codes or connecting patches of surface code across multiple chips in the future. IBM also often cites the figure that about 1,000 physical qubits per logical qubit might be needed for real applications with surface codes. Heron R2’s two-qubit error rate (~0.3%) is below the ~1% threshold but still higher than the ~0.1% level at which surface-code overheads become more manageable, so IBM is likely aiming to get errors down to ~0.1% or less in next generations (with improved materials, as indicated by their ongoing research). In the meantime, IBM leans on error mitigation: for instance, they use readout error mitigation and probabilistic error cancellation in software to improve effective circuit fidelity without full QEC. This allowed them to successfully execute circuits with 5,000 two-qubit gates and still get meaningful results. Those results are not fully error-corrected, but error-mitigated. The difference is that mitigation doesn’t give exponential suppression of error with size, but it can extend useful computation to circuits that are hard to simulate classically, or improve accuracy for specific tasks. So one could say IBM is straddling the line: pushing physical qubits as far as possible and using any available error reduction technique, until their hardware is just good enough to justify the jump into full QEC. Given Heron R2’s fidelities, IBM is very close to that line.
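Readout error mitigation, the simplest of the techniques listed above, amounts to inverting a calibrated assignment matrix. A single-qubit sketch, assuming the two misassignment probabilities have already been measured in calibration (the values below are made up for illustration, and the function name is hypothetical):

```python
def mitigate_readout(measured_p0: float, e0: float, e1: float) -> tuple:
    """Invert the single-qubit assignment matrix.

    e0 = P(read 1 | prepared 0), e1 = P(read 0 | prepared 1).
    The matrix [[1 - e0, e1], [e0, 1 - e1]] maps true to measured probabilities.
    """
    measured_p1 = 1.0 - measured_p0
    det = 1.0 - e0 - e1                      # determinant of the assignment matrix
    p0 = ((1.0 - e1) * measured_p0 - e1 * measured_p1) / det
    p0 = min(1.0, max(0.0, p0))              # clip: finite sampling can push estimates outside [0, 1]
    return p0, 1.0 - p0

E0, E1 = 0.02, 0.05                          # assumed calibration values
true_p0 = 0.7
measured_p0 = (1 - E0) * true_p0 + E1 * (1 - true_p0)   # what the noisy readout would report
print(mitigate_readout(measured_p0, E0, E1))
```

Unlike error correction, this only undoes measurement errors in post-processing; it does nothing for errors that occur during the gates themselves.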

In short, IBM’s error rate per gate is approaching the threshold, and their focus is on systematic error reduction (cross-talk, leakage, correlated events) to meet all the criteria for fault-tolerance. As soon as they cross that threshold decisively, they will employ the standard QEC codes (likely a surface code on heavy-hex) to yield logical qubits. Their designs and roadmap have considered this, ensuring the architecture can support fast syndrome measurement cycles and scale logically. IBM also often mentions fault-tolerant quantum operations by 2026 as a goal, implying that Heron’s successors on IBM’s roadmap will incorporate QEC.

USTC Zuchongzhi 3.0

The USTC team has not reported building a logical qubit yet; instead, they focused on demonstrating quantum computational advantage (a task that is extremely hard for classical computers). Nevertheless, the error characteristics of Zuchongzhi 3.0 are in the same league as Google/IBM, which means it is in principle capable of running QEC codes. The average two-qubit gate error on Zuchongzhi 3.0 is ~0.38% (slightly higher than IBM’s 0.3% or Google’s 0.14%), and single-qubit error ~0.10%. These are below the ~1% threshold. By improving those fidelities a bit further (which could come from the continued materials optimization they described), they could attempt surface code experiments too. In their arXiv paper, the USTC researchers note that increasing qubit coherence and reducing gate errors directly “pushes the limits of current quantum hardware capabilities” and lays the groundwork for exploring error correction in larger circuits. Notably, even without full error correction, they applied a light form of error mitigation during their random circuit sampling: they reset qubits frequently (via measure-and-reinitialize) to avoid error accumulation, and they performed statistical verification of outputs to ensure errors were not dominating the results.

For error correction proper, USTC might leverage codes similar to Google’s (since its lattice is also compatible with the surface code). The broader USTC group also has quantum error correction expertise on the photonic side (Pan’s group has demonstrated bosonic codes with photons). It wouldn’t be surprising if USTC’s next milestone were an error-corrected logical qubit too.

One interesting note: Zuchongzhi 3.0’s flip-chip architecture could be beneficial for QEC, because the second chip can incorporate circuitry for the fast feedforward or crosstalk isolation needed in error correction cycles. Also, their use of active qubit reset (via gates) between rounds is essentially part of an error correction cycle (resetting ancilla qubits).
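Because ancilla reset is central to every syndrome-extraction round, a small classical toy model may help: a distance-3 bit-flip repetition code with perfect gates and measurements, where each round optionally resets the ancillas, XORs in the parity of neighbouring data bits, and records the syndrome. The error schedule and function names are hypothetical illustrations, not USTC's actual procedure.

```python
from typing import Dict, List, Tuple


def repetition_code_memory(
    distance: int,
    rounds: int,
    flips: Dict[int, List[int]],
    reset_ancillas: bool = True,
) -> Tuple[int, List[List[int]]]:
    """Toy classical model of a bit-flip repetition-code memory.

    `distance` data bits start in logical 0, and `distance - 1` ancillas each
    check the parity of two neighbouring data bits. `flips` is a hypothetical
    error schedule mapping a round index to the data bits flipped in that round.
    Gates and measurements are assumed perfect.
    """
    data = [0] * distance
    ancillas = [0] * (distance - 1)
    history: List[List[int]] = []
    for r in range(rounds):
        for q in flips.get(r, []):
            data[q] ^= 1  # inject a bit-flip error on data bit q
        if reset_ancillas:
            ancillas = [0] * (distance - 1)  # reset: every ancilla back to 0
        for j in range(distance - 1):
            # Two CNOTs (data j and data j+1 onto ancilla j) XOR their parity in.
            ancillas[j] ^= data[j] ^ data[j + 1]
        history.append(list(ancillas))  # measured syndrome for this round
    logical = int(sum(data) > distance // 2)  # majority vote on final data readout
    return logical, history


def detection_events(history: List[List[int]]) -> List[List[int]]:
    """Flag syndrome bits that changed since the previous round (round 0 vs all zeros)."""
    previous = [0] * len(history[0])
    events = []
    for syndrome in history:
        events.append([a ^ b for a, b in zip(syndrome, previous)])
        previous = syndrome
    return events


# One flip on the middle data bit in round 0, three rounds, distance 3.
for reset in (True, False):
    logical, history = repetition_code_memory(3, 3, {0: [1]}, reset_ancillas=reset)
    print(f"reset={reset}: logical={logical}, syndromes={history}")
```

With reset, the syndrome `[1, 1]` repeats every round and `detection_events` flags only round 0, pointing at the middle bit. Without reset, the raw readout alternates between `[1, 1]` and `[0, 0]` because each ancilla still carries its previous value, so the decoder would have to undo that accumulation before interpreting the syndromes.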

In summary, while Zuchongzhi 3.0 hasn’t demonstrated a logical qubit, its physical error rates (~0.1–0.4%) are already below the ~1% error correction threshold, though with limited margin. The team’s priority so far was achieving a quantum advantage experiment, but the same hardware improvements (longer T1 and T2, better gates) translate directly into enabling error-corrected computations in the near future. Given how similar Willow and Zuchongzhi are, we can expect that if Google can do distance-7 QEC, USTC’s device could replicate a similar feat with some refinement. The researchers explicitly state that their advances in coherence and fidelity “open avenues for investigating how increases in qubit count and circuit complexity can enhance the efficiency in solving real-world problems,” which hints at error correction as one such avenue for real-world algorithms.

Summary of Error Rates and Error Correction Techniques

Two complementary paradigms have emerged among these five: (1) Intrinsic error reduction: AWS and Microsoft drastically reduce physical error rates through qubit design (cat qubits, topological qubits), aiming to minimize the burden on error correction codes. (2) Active error correction: Google, IBM, and USTC improve their superconducting qubits into the threshold regime and then apply quantum error correcting codes (like the surface code) to suppress errors further. Both approaches are racing toward the same goal of fault-tolerant quantum computation. The simple threshold condition $$p_{\text{phys}} < p_{\text{thresh}}$$ underpins both: AWS and Microsoft aim to satisfy it by making $$p_{\text{phys}}$$ extremely small for certain error types, and Google, IBM, and USTC satisfy it by engineering $$p_{\text{phys}}$$ below the known $$p_{\text{thresh}} \sim 1\%$$ for surface codes. Ultimately, all five efforts aim to realize logical qubits with error rates low enough for deep, reliable quantum circuits. Already, we see Ocelot achieving a working logical qubit with an 85% resource reduction, and Willow demonstrating a logical memory that outlives its best physical qubit. These are strong validations that error correction, whether hardware- or software-intensive, is on the verge of making quantum computing scalable.
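As a worked illustration of why the threshold inequality matters, a commonly used idealized heuristic (assumed here, not taken from any of the five papers) relates the logical error per round $$\varepsilon_d$$ of a distance-$$d$$ surface code to the physical error rate, so that each step $$d \to d+2$$ divides the logical error by a suppression factor $$\Lambda$$:

$$\varepsilon_d \approx A\left(\frac{p_{\text{phys}}}{p_{\text{thresh}}}\right)^{(d+1)/2}, \qquad \Lambda = \frac{\varepsilon_d}{\varepsilon_{d+2}} \approx \frac{p_{\text{thresh}}}{p_{\text{phys}}}$$

Plugging in the two-qubit error rates quoted for the three superconducting chips, with $$p_{\text{thresh}} \approx 1\%$$:

$$\Lambda_{\text{Willow}} \approx \frac{1\%}{0.14\%} \approx 7.1, \qquad \Lambda_{\text{Heron R2}} \approx \frac{1\%}{0.3\%} \approx 3.3, \qquad \Lambda_{\text{Zuchongzhi 3.0}} \approx \frac{1\%}{0.38\%} \approx 2.6$$

Any $$\Lambda > 1$$ means a larger code helps; with $$p_{\text{phys}} > p_{\text{thresh}}$$ the same formula gives $$\Lambda < 1$$, and adding qubits makes things worse. These values are idealized upper bounds: suppression factors measured on real hardware come out lower, because two-qubit gate error is only one contributor alongside readout, leakage, idling, and correlated noise.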

Benchmarking and Performance Metrics

To compare quantum processors, a variety of benchmarks and metrics are used, from device-independent metrics like Quantum Volume to task-specific benchmarks like random circuit sampling. We will consider a few key performance measures: quantum volume (QV), Circuit Layer Operations Per Second (CLOPS), algorithmic benchmarking (quantum advantage experiments), and other published performance numbers like fidelity scaling in large circuits.

Quantum Volume

QV is a holistic metric introduced by IBM that accounts for the number of qubits, gate fidelity, and connectivity by finding the largest random circuit of equal width and depth that the computer can implement with a heavy-output probability above 2/3. A higher QV (reported as $$2^d$$, where the integer $$d$$ is that width and depth) means the machine can handle larger entangled circuits reliably.
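The pass/fail test at the core of QV can be sketched in a few lines. The "heavy" outputs are the bitstrings whose ideal probability exceeds the median of the ideal distribution, and a circuit passes if the measured samples land in that set more than 2/3 of the time. This toy version uses a hypothetical 2-qubit distribution and hypothetical samples; the full protocol averages over many random model circuits and requires the result to clear 2/3 with statistical confidence, which is omitted here.

```python
from statistics import median
from typing import Dict, Iterable, Set


def heavy_outputs(ideal_probs: Dict[str, float]) -> Set[str]:
    """Bitstrings whose ideal probability is above the median of the ideal distribution.

    `ideal_probs` must list every bitstring of the model circuit, including zeros.
    """
    m = median(ideal_probs.values())
    return {x for x, p in ideal_probs.items() if p > m}


def heavy_output_probability(ideal_probs: Dict[str, float], samples: Iterable[str]) -> float:
    """Fraction of measured samples that land in the heavy set."""
    heavy = heavy_outputs(ideal_probs)
    samples = list(samples)
    return sum(1 for s in samples if s in heavy) / len(samples)


def qv_width(quantum_volume: int) -> int:
    """Width (= depth) d of the square circuit implied by QV = 2**d."""
    if quantum_volume < 1 or quantum_volume & (quantum_volume - 1):
        raise ValueError("quantum volume must be a power of two")
    return quantum_volume.bit_length() - 1


# Toy 2-qubit example: hypothetical ideal distribution and hypothetical samples.
ideal = {"00": 0.4, "01": 0.3, "10": 0.2, "11": 0.1}
hop = heavy_output_probability(ideal, ["00", "01", "00", "11"])
print(f"heavy-output probability = {hop:.2f}, passes 2/3 test: {hop > 2 / 3}")
print(f"QV 512 -> width and depth {qv_width(512)}")
```

The last line reproduces the arithmetic used for Heron R2: QV 512 corresponds to square circuits of width and depth 9.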

IBM Heron R2

Among our five chips, IBM Heron R2 currently has the highest reported QV. IBM announced that Heron achieved Quantum Volume = 512 (since a heavy-hex 127-qubit Eagle had reached QV 128 earlier, Heron’s better fidelity pushed it to 512). QV 512 corresponds to successfully running a random 9-qubit circuit of depth 9 (since $$2^9=512$$). This indicates Heron can entangle at least 9 qubits deeply with good fidelity.

Google Willow and USTC Zuchongzhi 3.0

Neither Google nor USTC have formally reported QV for Willow or Zuchongzhi, but given their specs, one can infer. Willow with 105 qubits and 99.86% two-qubit fidelity could likely achieve a similar or higher QV (perhaps 512 or 1024) if measured, because it has even more qubits to trade for circuit depth. Google typically focuses on specific milestones rather than QV, but the capabilities demonstrated (like a full 105-qubit random circuit at depth > 30 in the advantage experiment) far exceed what QV 512 implies. In fact, QV becomes less informative at the cutting edge where targeted benchmarks are more illustrative (for instance, Google might say their quantum volume is effectively unbounded for random sampling tasks given they beat classical by a huge margin).

AWS Ocelot

AWS Ocelot’s QV is not directly applicable, since it currently realizes one logical qubit – QV is defined for circuits, so a single logical qubit can’t generate an entangled multi-qubit circuit. The concept of QV will apply to Ocelot only when it’s scaled to multiple logical qubits that can run a non-trivial circuit. However, one could consider the logical qubit’s lifetime or error rate as analogous performance measures (discussed above).

Microsoft Majorana-1

Microsoft Majorana-1’s QV is also not yet meaningful: with 8 physical qubits that are in early experimental stages, they haven’t run random circuits. The purpose of Majorana-1 isn’t to maximize QV at this stage but to validate the new qubit type. So in terms of QV, IBM leads with 512, and Google and USTC likely have comparable or higher effective circuit capabilities, though they don’t frame them in QV terms. It’s worth noting that another company, Quantinuum, has reported much higher QVs (e.g., 2,097,152, or $$2^{21}$$), but that is for trapped-ion systems with lower gate speed; our focus is on these five chips. Among these five, Heron R2’s QV 512 is a concrete benchmark of its balanced qubit count and fidelity.

CLOPS (Circuit Layer Operations Per Second)

This IBM-defined metric measures how many layers of a parameterized circuit the system can execute per second (including compilation and feedback overheads). It gauges the throughput or speed of executing quantum circuits.

IBM Heron R2

IBM Heron R2 (on IBM Quantum System Two) has achieved a CLOPS of 150,000+ layers per second. This is an impressive number, about 50× higher than what IBM had a couple of years prior. It reflects improvements in both the physical chip (fast gates, parallel operation) and the software stack (qubit reuse, better scheduling, and a new runtime). Roughly speaking, 150k CLOPS means the full stack can push about 150,000 layers of a parameterized benchmark circuit through the hardware every second, with compilation, data transfer, and control latency all counted. IBM achieved this by reducing idle times between operations and by introducing parametric compilation (so repeated circuit executions don’t need full recompilation).
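To make the metric concrete, here is a sketch of IBM's original CLOPS formula, $$\text{CLOPS} = M \times K \times S \times D / t$$, where $$M$$ circuit templates each receive $$K$$ parameter updates, every circuit is run for $$S$$ shots, $$D = \log_2(\text{QV})$$ is the number of layers, and $$t$$ is total wall-clock time. The default sizes below ($$M = 100$$, $$K = 10$$, $$S = 100$$, $$D = 9$$ for QV 512) are assumptions for illustration; the figure quoted for Heron R2 may have been measured with a revised variant of the benchmark, so this is not a reconstruction of that measurement.

```python
def clops(num_templates: int, num_updates: int, shots: int, layers: int, elapsed_s: float) -> float:
    """Original CLOPS formula: M * K * S * D divided by wall-clock seconds."""
    return num_templates * num_updates * shots * layers / elapsed_s


def implied_runtime_s(clops_value: float, num_templates: int = 100, num_updates: int = 10,
                      shots: int = 100, layers: int = 9) -> float:
    """Wall-clock seconds the whole benchmark would take at a given CLOPS value."""
    return num_templates * num_updates * shots * layers / clops_value


# Assumed benchmark sizes; D = 9 layers corresponds to QV 512.
runtime = implied_runtime_s(150_000)
print(f"{runtime:.1f} s for the whole benchmark at 150k CLOPS")
print(f"check: {clops(100, 10, 100, 9, runtime):,.0f} CLOPS")
```

Under these assumptions the 900,000 layer executions of the benchmark would take about 6 seconds end to end, including every recompilation and data transfer.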

Google Willow

None of the other vendors have officially published a CLOPS for their devices, but qualitatively: Google’s processor has very fast gates (see Gate Speed section), so physically it could have high CLOPS, but Google’s software stack is not as openly benchmarked as IBM’s. IBM has put emphasis on making the entire system fast and user-friendly (since it’s accessible via cloud to users) – hence metrics like CLOPS. Google’s team, primarily focused on internal experiments, might not optimize for running as many circuits per second for external users. If we consider raw capability, Google’s 25 ns single-qubit and 42 ns two-qubit gates are faster than IBM’s ~35 ns and ~200 ns gates, so physically Google could do more layers per second on hardware. However, CLOPS includes compiler and control electronics latency. IBM’s achievement of 150k CLOPS was due to highly optimized classical control and streaming of circuits (they mention new runtime and data movement optimizations). It’s likely that Google’s system (as used in their lab) is not optimized for high-throughput in the same way; it is optimized for specific large experiments.
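The gate-speed argument can be made concrete with a crude ceiling. Suppose, hypothetically, that one "layer" is a single-qubit gate followed by a two-qubit gate applied in parallel across the chip, with no readout, reset, compilation, or data movement at all. The gate times quoted above then cap the layer rate at $$1/(t_{1q} + t_{2q})$$.

```python
def gate_limited_layer_rate(t_1q_ns: float, t_2q_ns: float) -> float:
    """Upper bound on layers per second when a layer is one 1q gate then one 2q gate.

    Hypothetical simplification: ignores readout, reset, compilation, and
    classical data movement, which dominate real throughput.
    """
    return 1e9 / (t_1q_ns + t_2q_ns)


google = gate_limited_layer_rate(25, 42)   # Willow gate times from the text
ibm = gate_limited_layer_rate(35, 200)     # Heron gate times from the text
for name, rate in (("Google Willow", google), ("IBM Heron R2", ibm)):
    print(f"{name}: ~{rate:,.0f} layers/s gate-limited ceiling")
print("Measured Heron R2 throughput: ~150,000 CLOPS")
```

Both ceilings are in the millions of layers per second, far above 150k, which supports the point that measured throughput is set mainly by classical control and software overhead rather than by gate duration.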

USTC Zuchongzhi 3.0

Meanwhile, USTC’s Zuchongzhi 3.0, being a research prototype, has no published CLOPS figure. The team took “a few hundred seconds” to collect 1 million samples of an 83-qubit random circuit, which works out to roughly $$10^3$$–$$10^4$$ circuit executions (shots) per second. That figure is not directly comparable to IBM’s 150k CLOPS, which counts circuit layers and also charges for recompiling and reloading parameterized circuits. Again, the system wasn’t optimized for user throughput; it was optimized to push qubit count and fidelity.

AWS Ocelot and Microsoft Majorana-1

AWS Ocelot and Microsoft Majorana-1 are also not at the stage of needing CLOPS benchmarking; Ocelot runs one logical qubit (so CLOPS is moot), and Majorana-1’s focus is on qubit stability, not running many circuits. In summary, IBM leads in quantum computing throughput with 150k CLOPS on Heron R2, a metric that underscores the integration of a fast chip with an efficient software stack. This means IBM’s system can execute, say, variational algorithm circuits extremely quickly, which is useful for research and commercial cloud offerings. Google and USTC haven’t emphasized this metric, but their chips excel in other benchmarking areas, as discussed next.

Quantum Advantage / Computational Task Benchmarks

Perhaps the most dramatic benchmark is solving a problem believed to be intractable for classical supercomputers – often termed “quantum supremacy” or quantum advantage demonstration. Both Google and USTC have excelled here with their latest chips:

Google Willow

Willow performed a random circuit sampling (RCS) task with 105 qubits and around 24 cycles (layers) of random two-qubit gates, generating a huge number of bitstring samples. They reported that Willow completed in about 5 minutes a computation that would take Frontier (the world’s fastest supercomputer) an estimated $$10^{25}$$ years to simulate. That is 10 septillion years, hundreds of trillions of times the roughly 14-billion-year age of the universe. In practical terms, this firmly establishes a quantum computational advantage: no existing classical method can replicate that specific random circuit sampling experiment. This experiment is essentially an extension of Google’s 2019 supremacy test (which was 53 qubits, 20 cycles). With Willow’s higher fidelity, they could go to 105 qubits and deeper circuits while still getting statistically meaningful results (verified by cross-entropy benchmarking). The results are staggering: they pushed the boundary of quantum sampling by six orders of magnitude in classical difficulty beyond the previous state-of-the-art (USTC’s earlier 56-qubit, 20-cycle experiment). This is not an “application” in the useful sense, but it is a crucial benchmark of raw computational power. It shows Willow can entangle over 100 qubits and perform >1,000 two-qubit gates in a complex circuit with enough fidelity that the output has structure that can be measured. The fidelity of the whole circuit was low but detectable (a tiny fraction of a percent, which is expected at that scale), yet it beat brute-force simulation by a huge margin.
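Cross-entropy benchmarking is how a "tiny but detectable" fidelity gets measured. The linear XEB estimator is $$F_{\text{XEB}} = 2^n \langle P(x_i) \rangle - 1$$, where $$P(x_i)$$ is the ideal, classically computed probability of each sampled bitstring $$x_i$$. Samples that favour the circuit's likely outputs push $$F$$ above zero, while uniformly random noise gives $$F \approx 0$$. This toy sketch uses a hypothetical 2-qubit distribution; at full scale, computing $$P(x_i)$$ is itself the classically hard part, so it only shows the estimator, not Google's verification pipeline.

```python
from typing import Dict, Iterable


def linear_xeb_fidelity(ideal_probs: Dict[str, float], samples: Iterable[str], num_qubits: int) -> float:
    """Linear cross-entropy benchmarking estimate: 2**n * mean(P_ideal(x_i)) - 1."""
    samples = list(samples)
    mean_p = sum(ideal_probs.get(s, 0.0) for s in samples) / len(samples)
    return (2 ** num_qubits) * mean_p - 1.0


# Hypothetical 2-qubit ideal distribution (not a real random circuit).
ideal = {"00": 0.5, "01": 0.25, "10": 0.125, "11": 0.125}
print(linear_xeb_fidelity(ideal, ["00", "00", "01", "11"], 2))  # 0.375, biased toward likely outputs
print(linear_xeb_fidelity(ideal, ["00", "01", "10", "11"], 2))  # 0.0, uniform like pure noise
```

In a real RCS experiment the same average is taken over millions of samples, which is what lets a fidelity of a fraction of a percent stand out statistically from zero.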

USTC Zuchongzhi 3.0

Zuchongzhi 3.0 likewise demonstrated quantum advantage. They ran an RCS experiment with circuits of 83 qubits for 32 cycles, collecting one million samples in just a few minutes. They estimate the Frontier supercomputer would take $$6.4 \times 10^{9}$$ years (6.4 billion years) to do the same. While 6.4 billion years is less mind-boggling than Google’s $$10^{25}$$, it still qualifies as a quantum advantage demonstration by a huge margin. In fact, USTC’s earlier chip (Zuchongzhi 2.1) had already claimed an advantage (with 66 qubits, 20 cycles). Zuchongzhi 3.0’s advantage is more robust due to higher fidelity: by increasing circuit depth to 32 and qubit count to 83, they made the classical task exponentially harder. The quantum experiment is roughly on par in scale with Google’s 2019 experiment, and about 2–3 orders of magnitude beyond what classical algorithms had caught up to after 2019. It’s noteworthy that Willow’s experiment went even further: 105 qubits, similar cycles, thus pushing beyond Zuchongzhi 3.0 by a big factor (hence Google’s $$10^{25}$$ vs USTC’s $$10^{9}$$-year claim). The Quantum Computing Report compared Willow and Zuchongzhi 3.0 and found Willow had a slight edge in key qubit quality metrics, which allowed a larger, harder RCS experiment. Indeed, as listed earlier, Willow’s average two-qubit fidelity (99.86%) is higher than Zuchongzhi’s (99.62%), giving Willow an edge in executing deeper circuits with sufficient fidelity. Both chips outpaced IBM’s publicly reported experiments in this domain: IBM has not attempted an RCS at that scale publicly (IBM did a 127-qubit, depth-12 “utility” circuit which was classically simulable with effort, and IBM argues for focusing on useful tasks rather than contrived supremacy tasks).
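One way to see why each added qubit makes the classical task harder is the memory a naive state-vector simulator would need: $$2^n$$ complex amplitudes at 16 bytes each (complex128). This is only a crude illustration of the exponential wall; the published $$6.4 \times 10^{9}$$-year and $$10^{25}$$-year estimates presumably rely on far cleverer tensor-network methods that avoid storing the full state, so this is not how those figures were derived.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector of complex128 amplitudes (16 bytes each)."""
    return (2 ** num_qubits) * bytes_per_amplitude


PETABYTE = 10 ** 15
for n, label in ((53, "Google 2019"), (83, "Zuchongzhi 3.0 RCS"), (105, "Willow")):
    print(f"{n} qubits ({label}): {statevector_bytes(n) / PETABYTE:.3g} PB")
```

Already at 53 qubits the naive approach needs about 144 PB; at 83 qubits it needs over $$10^{11}$$ PB, far beyond the petabyte-scale memory of any supercomputer.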

IBM Heron R2