Quantum Errors and Quantum Error Correction (QEC) Methods

Introduction

Quantum computers process information using qubits that can exist in superposition states, unlike classical bits which are strictly 0 or 1. This enhanced power comes at the cost of quantum errors, which differ fundamentally from classical bit-flip errors. Qubits are highly susceptible to disturbances from their environment (decoherence) and imperfect operations, causing random changes in their state. Not only can a qubit’s value flip (0↦1 or 1↦0), but its phase can also flip (altering the relative sign of superposed states) without changing the bit value. Because an unknown qubit state cannot be copied (the no-cloning theorem) and measuring it directly disturbs it, these errors cannot be caught by simple inspection or backup copies; left uncorrected, they accumulate quickly and corrupt computational results.

Quantum error correction (QEC) is therefore critical for enabling large-scale or fault-tolerant quantum computing. Fault tolerance means a quantum computer can continue to operate correctly even when individual operations or qubits fail. Unlike classical error correction – which can simply duplicate bits and use majority vote – quantum error correction must handle qubit errors indirectly (via entanglement and syndrome measurements) to avoid collapsing the quantum information. The development of QEC codes in the mid-1990s proved that robust quantum computation is possible in principle, so long as the physical error rates are below a certain threshold. Below this error-rate “threshold,” encoding qubits in larger codes yields exponentially suppressed logical error rates, enabling in theory arbitrarily long quantum computations. Achieving and operating below these error thresholds is one of the grand challenges on the road to practical quantum computers.

Types of Quantum Errors

Quantum errors can be classified by how they disturb the qubit’s state. On the Bloch sphere representation of a qubit, different error types correspond to rotations about different axes (the Pauli $$X$$, $$Y$$, $$Z$$ axes) or contractions of the sphere. The major error types include:

Bit-flip errors (X errors): These are analogous to classical bit flips. A bit-flip error flips $$|0\rangle$$ to $$|1\rangle$$ or vice versa, corresponding to the Pauli $$X$$ operator $$X = \begin{pmatrix}0 & 1\\ 1 & 0\end{pmatrix}$$. Physically, a bit-flip might occur if a qubit gains or loses energy (e.g. a quantum of energy causing a $$|0\rangle\to|1\rangle$$ excitation). On the Bloch sphere, this is a 180° rotation about the X-axis, swapping the “north” and “south” poles. Mathematically, an $$X$$ error on a qubit in state $$\rho$$ transforms it as $$X \rho X$$ (flipping the basis states). Bit-flips are one of the two basic types of quantum errors that must be corrected.

Phase-flip errors (Z errors): These errors change the relative phase of the $$|0\rangle$$ and $$|1\rangle$$ components of a qubit without swapping them. A phase flip corresponds to the Pauli $$Z$$ operator $$Z = \begin{pmatrix}1 & 0\\ 0 & -1\end{pmatrix}$$, which leaves $$|0\rangle$$ unchanged but multiplies $$|1\rangle$$ by -1. On the Bloch sphere, this is a 180° rotation about the Z-axis (through the poles), flipping the “phase” of a superposition state. For example, it transforms $$(|0\rangle + |1\rangle)/\sqrt{2}$$ to $$(|0\rangle - |1\rangle)/\sqrt{2}$$. Phase-flip errors have no classical analog (a classical bit has no phase), yet they are just as prevalent as bit-flips in quantum systems. Together, arbitrary qubit errors can be viewed as combinations of an $$X$$ (bit) flip and a $$Z$$ (phase) flip (or the combined $$Y$$ flip, which is an $$X$$ and $$Z$$ applied together).

Depolarization errors: Also known as random Pauli errors, depolarizing errors occur when a qubit’s state randomly hops to one of the other states or gets fully randomized. The depolarizing channel is a common noise model where with some probability $$p$$ the qubit undergoes a completely random Pauli $$X$$, $$Y$$, or $$Z$$ error, and with probability $$(1-p)$$ it remains correct. This has the effect of “shrinking” the Bloch sphere towards the center (the maximally mixed state). For a single-qubit depolarizing channel one can write $$ \mathcal{E}_p(\rho) = (1-p)\rho + \frac{p}{3}(X\rho X + Y\rho Y + Z\rho Z)$$. If $$p=\frac{3}{4}$$, the qubit is completely mixed (any input state is replaced by the maximally mixed state). Depolarizing noise is a symmetric model capturing the effect of many decoherence processes, and while real devices may not have perfectly symmetric noise, this model is widely used in theory and QEC simulations.

Amplitude damping and leakage errors: These errors are caused by energy loss or leakage to outside of the computational basis. Amplitude damping refers to processes like spontaneous emission: a qubit in the excited state $$|1\rangle$$ decays to $$|0\rangle$$ (ground state) by emitting a photon. This is an irreversible process that tends to “damp” the amplitude of $$|1\rangle$$. In density matrix form, amplitude damping with probability $$p$$ can be represented by Kraus operators $$A_0 = \begin{pmatrix}1 & 0\\ 0 & \sqrt{1-p}\end{pmatrix}$$ and $$A_1 = \begin{pmatrix}0 & \sqrt{p}\\ 0 & 0\end{pmatrix}$$, which indeed send $$|1\rangle$$ to $$|0\rangle$$ with probability $$p$$. On the Bloch sphere, amplitude damping pushes all states towards $$|0\rangle$$ (the “north pole” in the usual convention), shrinking and shifting the Bloch vector towards the ground state. Leakage errors are similar in that the qubit leaves the computational $$\{|0\rangle,|1\rangle\}$$ subspace entirely – for example, a superconducting qubit might leak into a higher energy level $$|2\rangle$$. Leakage is particularly troublesome because standard QEC codes assume qubits stay in the two-level system; a leaked qubit can propagate errors to others (e.g. a leaked state can cause a two-qubit gate to malfunction and corrupt the neighboring qubit). Special handling or ancillary protocols are often needed to detect or remove leakage, converting it into a standard Pauli error that codes can correct.
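The depolarizing and amplitude-damping channels described above can be checked numerically. The following is a minimal sketch in plain Python; the helper names (`apply_channel`, `depolarizing_kraus`, `amplitude_damping_kraus`) are illustrative and not taken from any library, and the depolarizing Kraus operators $$\sqrt{1-p}\,I$$ and $$\sqrt{p/3}\,P$$ are simply one standard way of writing the formula above as a sum of Kraus terms.

```python
import math

X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]
Z = [[1, 0], [0, -1]]
I2 = [[1, 0], [0, 1]]

def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]

def scale(c, m):
    return [[c * v for v in row] for row in m]

def apply_channel(kraus_ops, rho):
    """Return sum_k K rho K^dagger for a list of Kraus operators."""
    out = [[0j, 0j], [0j, 0j]]
    for k in kraus_ops:
        term = matmul(matmul(k, rho), dagger(k))
        for i in range(2):
            for j in range(2):
                out[i][j] += term[i][j]
    return out

def depolarizing_kraus(p):
    """Kraus form of (1-p) rho + (p/3)(X rho X + Y rho Y + Z rho Z)."""
    return [scale(math.sqrt(1 - p), I2)] + [scale(math.sqrt(p / 3), P) for P in (X, Y, Z)]

def amplitude_damping_kraus(p):
    """The operators A_0 and A_1 from the text."""
    return [[[1, 0], [0, math.sqrt(1 - p)]], [[0, math.sqrt(p)], [0, 0]]]

plus_state = [[0.5, 0.5], [0.5, 0.5]]   # density matrix of (|0> + |1>)/sqrt(2)
excited = [[0, 0], [0, 1]]              # density matrix of |1>

fully_mixed = apply_channel(depolarizing_kraus(0.75), plus_state)
decayed = apply_channel(amplitude_damping_kraus(0.3), excited)
```

With $$p = 3/4$$ the depolarizing channel maps the superposition state to the maximally mixed state $$I/2$$, and amplitude damping with $$p = 0.3$$ moves 30% of the population from $$|1\rangle$$ to $$|0\rangle$$.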

The Pauli-type errors above can be visualized as rotations of the Bloch sphere, while damping-type errors shrink or shift it. In general, an arbitrary error on a single qubit can be expressed as a combination of the basis errors ($$X$$, $$Y$$, $$Z$$), since they (along with the identity $$I$$) form a basis for 2×2 matrices. This fact underlies many QEC codes: if a code can correct these Pauli errors on any qubit, it can correct any error on that qubit. Real noise processes (like decoherence) often cause continuous, analog errors (e.g. small rotations), but a remarkable insight is that syndrome measurement projects such an error onto a discrete set of outcomes: either no error or a definite Pauli error, which the code then corrects. This error discretization is why QEC is possible at all – and it justifies focusing on bit-flips, phase-flips, and related discrete errors when designing quantum codes.
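As a worked illustration of this discretization (a standard textbook argument, sketched here for a small over-rotation about the X-axis by an assumed angle $$\theta$$), the continuous error splits into a “no error” branch and an “$$X$$ error” branch:

$$R_x(\theta)\,|\psi\rangle = e^{-i\theta X/2}\,|\psi\rangle = \cos(\theta/2)\,|\psi\rangle - i\sin(\theta/2)\,X|\psi\rangle$$

If $$|\psi\rangle$$ is an encoded state and the over-rotation hits one of its physical qubits, measuring the syndrome of a code that detects $$X$$ errors returns “no error” with probability $$\cos^2(\theta/2)$$, leaving $$|\psi\rangle$$ intact, and “$$X$$ error” with probability $$\sin^2(\theta/2) \approx \theta^2/4$$, leaving exactly $$X|\psi\rangle$$, which a single corrective $$X$$ restores. The analog angle $$\theta$$ only sets the probabilities; the error that has to be corrected is always discrete.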

Comparison with Classical Error Correction

Classical computers have long employed error-correction techniques such as parity bits, Hamming codes, and Reed–Solomon codes to detect and correct errors in data. These methods rely on copying or encoding classical bits into redundant patterns so that if some bits flip, the original information can be recovered by majority vote or by decoding the error syndrome. For example, a simple repetition code might encode a bit as 111 for logical “1”. If one of those bits flips to 0, the majority (two out of three) are still 1s, indicating the logical value was likely “1”. More sophisticated classical codes like Hamming codes add parity check bits that can pinpoint the location of a single-bit error in a block of data, allowing correction of that bit. These techniques are extremely useful and are ubiquitous in digital communications, memory, and storage systems.

However, classical error correction cannot be applied directly to quantum information for several fundamental reasons.

First, we cannot naively copy an unknown qubit state because of the no-cloning theorem, which forbids making identical copies of an arbitrary quantum state. A classical repetition code would require cloning the qubit, which is impossible. Instead, QEC schemes must encode a logical qubit into an entangled state of multiple physical qubits, so that the information is spread non-locally across qubits. Peter Shor’s breakthrough in 1995 was exactly to show how to do this: by entangling 9 qubits, one logical qubit’s state can be protected against any single-qubit error without ever making a direct copy of the state.

Second, measuring a qubit to check for errors will collapse its state, potentially destroying the superposition we are trying to preserve. In classical error correction one can directly measure bits (e.g. check parity) to diagnose errors. In quantum error correction, the trick is to perform syndrome measurements that reveal information about the error without revealing the qubit’s state. This is done by coupling the data qubits to ancilla (helper) qubits and measuring the ancillas. The measurement outcomes (the error syndrome) tell us which error (bit-flip, phase-flip, etc.) occurred and on which qubit, but crucially do not tell us the logical state of the qubit. For example, a code might have an ancilla that flips if an odd number of the data qubits it checks are $$|1\rangle$$ (revealing a parity error). By designing the syndrome extraction carefully, the quantum information remains encoded and unmeasured whether or not an error is detected.

Finally, quantum errors are more varied than classical bit flips. In classical circuits, essentially the only error is a bit flip (or losing a bit). In quantum circuits, as discussed, we must contend with phase flips and combinations of flips as well. A classical code like a Hamming code is designed to correct bit flips; it does nothing for phase errors. In fact, a straightforward three-qubit repetition code can correct an $$X$$ error on a qubit but fails to detect a $$Z$$ error on that qubit. Quantum codes must therefore be more complex, often embedding a pair of classical codes (one to catch $$X$$ errors and one for $$Z$$ errors) or using other constructions to handle both types simultaneously. Despite these challenges, the theory of QEC has shown that as long as physical error rates are below a certain threshold (typically between roughly $$10^{-4}$$ and $$10^{-2}$$, depending on the code and noise model), one can in principle reach arbitrarily low logical error rates by using sufficiently large codes and fault-tolerant protocols. In summary, classical error correction gave invaluable inspiration (the idea of redundancy and syndrome decoding), but quantum error correction required new ideas – entanglement, syndromes that avoid state measurement, and clever code constructions – to overcome the constraints of quantum mechanics.
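The behavior of the three-qubit repetition code can be made concrete with a small state-vector sketch in plain Python. The function names are illustrative, qubit 0 is taken to be the least significant bit of the basis-state index, and the two parity checks $$Z_0Z_1$$ and $$Z_1Z_2$$ are evaluated directly from the amplitudes rather than through explicit ancilla qubits, which is a simplifying assumption.

```python
def encode(a, b):
    """Encode a|0> + b|1> as a|000> + b|111> (an entangled state, not three copies)."""
    state = [0.0] * 8
    state[0b000] = a
    state[0b111] = b
    return state

def apply_x(state, q):
    """Bit-flip on qubit q: swap amplitudes of basis states that differ in bit q."""
    return [state[i ^ (1 << q)] for i in range(8)]

def apply_z(state, q):
    """Phase-flip on qubit q: negate amplitudes of basis states where bit q is 1."""
    return [-amp if (i >> q) & 1 else amp for i, amp in enumerate(state)]

def parity_check(state, q1, q2):
    """Outcome of measuring Z_q1 Z_q2: 0 if the two qubits agree, 1 if they differ."""
    expectation = sum(abs(amp) ** 2 * (-1) ** (((i >> q1) ^ (i >> q2)) & 1)
                      for i, amp in enumerate(state))
    return 0 if expectation > 0 else 1

def syndrome(state):
    return (parity_check(state, 0, 1), parity_check(state, 1, 2))

logical = encode(0.6, 0.8)
x_syndromes = [syndrome(apply_x(logical, q)) for q in range(3)]
z_syndrome = syndrome(apply_z(logical, 0))
```

Each single bit-flip produces a distinct non-zero syndrome, so it can be located and undone without learning the amplitudes 0.6 and 0.8. A phase-flip returns the trivial syndrome (0, 0) even though it has turned $$0.6|000\rangle + 0.8|111\rangle$$ into $$0.6|000\rangle - 0.8|111\rangle$$, which is exactly the blind spot described above.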

Categories of Quantum Error Correction Approaches

Quantum error correction techniques can be broadly divided into a few categories: quantum error-correcting codes that actively correct errors by encoding qubits into entangled states, bosonic codes that leverage harmonic oscillator modes for hardware-efficient encoding, and error mitigation techniques that don’t fully correct errors but reduce their impact (especially useful for near-term devices). We outline each category below.

Quantum Error Correcting Codes (QECCs)

These are the standard QEC codes that encode logical qubits into multiple physical qubits and use measurements to detect and correct errors. Many can be understood in the framework of stabilizer codes, where a set of multi-qubit stabilizer operators (tensor products of Pauli $$X, Y, Z$$) are defined such that the code space is the joint +1 eigenspace of all stabilizers. Error syndromes are obtained by measuring these stabilizers. Important examples include:

Shor code (9-qubit code): The first quantum error-correcting code, discovered by Peter Shor, encodes 1 logical qubit in 9 physical qubits. It works by concatenating a 3-qubit repetition code for phase-flips with a 3-qubit repetition code for bit-flips. In essence, it uses 9 qubits arranged as three groups of three: first it triples the qubit in the Hadamard-rotated ($$|\pm\rangle$$) basis to protect against phase flips, then it triples each of those three qubits in the computational basis to protect against bit flips via majority-style parity checks. The Shor code can correct any single-qubit error (be it $$X$$, $$Z$$, or $$Y$$) on any one of the 9 qubits. It was a proof-of-concept that quantum redundancy and syndrome measurement can safeguard quantum information. While robust, the 9-qubit Shor code is quite resource-intensive (high overhead) and has given way to more efficient codes in practice.

Steane code (7-qubit CSS code): The Steane code is a 7-qubit code invented by Andrew Steane in 1996 that belongs to the class of CSS (Calderbank-Shor-Steane) codes. It is built from two classical codes: the $$[7,4,3]$$ Hamming code for bit-flips and its dual for phase-flips. The code encodes 1 logical qubit into 7 physical qubits and can correct any single-qubit error (distance $$d=3$$). In stabilizer form, it has 6 stabilizer generators (3 $$X$$-type and 3 $$Z$$-type checks). The Steane code was the first example of a quantum Hamming code. It is more efficient than Shor’s (using 7 qubits instead of 9 for one logical qubit) and is a precursor to many modern codes. Being a CSS code, it separately treats $$X$$ and $$Z$$ errors, which simplifies implementation. The Steane code has been demonstrated in small-scale experiments and is an important benchmark code in QEC research.
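The CSS structure of the Steane code can be illustrated with the parity-check matrix of the classical $$[7,4,3]$$ Hamming code. The sketch below assumes one common ordering of the columns (column $$k$$ is the binary representation of $$k$$, for $$k = 1,\dots,7$$); the function names are illustrative. The same three rows define the supports of the three $$X$$-type and the three $$Z$$-type stabilizers.

```python
# Parity-check matrix of the [7,4,3] Hamming code; column k (1-based) is k in binary.
H = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]

def overlaps_mod2(rows):
    """Pairwise row overlaps mod 2; all zero means X- and Z-type checks commute."""
    return [[sum(a * b for a, b in zip(r1, r2)) % 2 for r2 in rows] for r1 in rows]

def syndrome(error_bits):
    """Syndrome of a 7-bit error pattern (1 marks an affected qubit)."""
    return tuple(sum(h * e for h, e in zip(row, error_bits)) % 2 for row in H)

def locate(s):
    """Index (0-based) of the single faulty qubit, or -1 for the trivial syndrome."""
    return s[0] + 2 * s[1] + 4 * s[2] - 1

def single_error(position):
    return [1 if i == position else 0 for i in range(7)]

located = [locate(syndrome(single_error(k))) for k in range(7)]
```

An $$X$$-type and a $$Z$$-type stabilizer commute exactly when their supports overlap on an even number of qubits, which is what the all-zero overlap table confirms. The Z-type checks then locate a single $$X$$ error, and the X-type checks locate a single $$Z$$ error, using the same decoding table.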

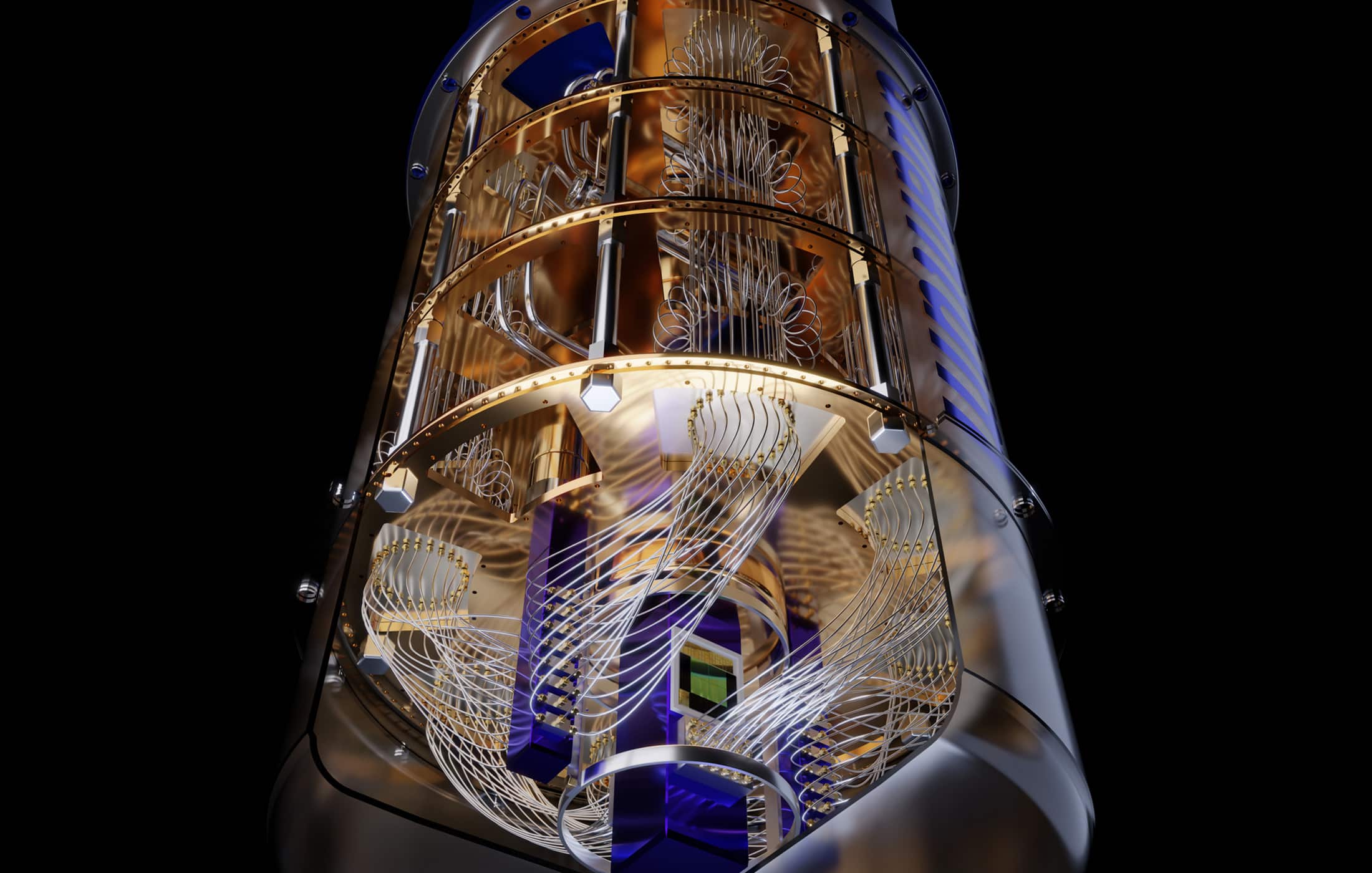

Surface code (topological code): The surface code is a leading QEC code in today’s quantum computing implementations due to its high error threshold (around 1% in ideal conditions) and geometric locality. It was introduced by Alexei Kitaev (as the “toric code”) and refined for planar architecture (surface code) in the late 1990s. Qubits in a surface code are arranged on a 2D grid (usually each qubit is on an edge of the grid), and stabilizers involve either four-qubit $$X$$ operators (“plaquette” stabilizers on a square of four qubits) or four-qubit $$Z$$ operators (“star” stabilizers around a vertex). The code is called “topological” because the logical qubits are encoded in non-local, topologically protected degrees of freedom (often corresponding to chains of errors that span from one boundary of the lattice to the other). The simplest surface code patch encodes one logical qubit into a lattice of roughly $$d^2$$ physical data qubits (plus a comparable number of ancillas for measurement), where $$d$$ is the code distance. Larger $$d$$ means more qubits and more error correction overhead, but a higher ability to correct errors (it can correct up to $$\lfloor(d-1)/2\rfloor$$ errors). The surface code’s big advantages are that each stabilizer involves only local qubit interactions (ideal for 2D chips) and that it tolerates relatively high error rates of about 1%. Several current devices have physical error rates at or below this threshold, meaning that increasing the code size is expected to suppress logical errors. Tech companies like Google, IBM, and others have all targeted the surface code or variants of it for their roadmap to scalable quantum computers (we discuss implementations later). The main downside of the surface code is the overhead – it may require hundreds or thousands of physical qubits per logical qubit to reach very low error rates, since $$d$$ must be large. Nonetheless, its experimental feasibility and high threshold make it a centerpiece of quantum error correction efforts.
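To get a feel for the overhead, the following rough estimator may help. It rests on two assumptions that are not taken from the text above: the compact “rotated” layout with $$d^2$$ data qubits and $$d^2-1$$ measurement qubits, and the commonly quoted rule of thumb $$p_L \approx A\,(p/p_{th})^{(d+1)/2}$$ with illustrative values $$A = 0.1$$ and $$p_{th} = 10^{-2}$$. Real devices and decoders will deviate from this heuristic, so the numbers are order-of-magnitude guides only.

```python
def rotated_patch_qubits(d):
    """Physical qubits in a rotated surface-code patch: d^2 data + (d^2 - 1) ancillas."""
    return d * d + (d * d - 1)

def correctable_errors(d):
    """Number of arbitrary errors a distance-d code can correct."""
    return (d - 1) // 2

def heuristic_logical_error(p, d, p_th=1e-2, prefactor=0.1):
    """Rule-of-thumb logical error rate per round (assumed model, not exact)."""
    return prefactor * (p / p_th) ** ((d + 1) // 2)

def smallest_distance(p, target, p_th=1e-2, prefactor=0.1):
    """Smallest odd distance whose heuristic logical error rate is <= target."""
    if not 0 < p < p_th:
        raise ValueError("the heuristic only improves with d when 0 < p < p_th")
    d = 3
    while heuristic_logical_error(p, d, p_th, prefactor) > target:
        d += 2
    return d

d_needed = smallest_distance(p=2e-3, target=1e-9)
qubits_needed = rotated_patch_qubits(d_needed)
```

Under these assumptions, a physical error rate of $$2\times10^{-3}$$ and a target of $$10^{-9}$$ call for distance 23, or about a thousand physical qubits for a single logical qubit, and the requirement grows quickly as the physical error rate approaches the threshold.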

Stabilizer codes and others: The above codes (Shor, Steane, surface) are all stabilizer codes, meaning they can be described by a set of commuting Pauli stabilizers. The stabilizer formalism, introduced by Gottesman in 1997–98, greatly simplified the theory of QEC by unifying it with binary linear codes (via Pauli matrices mapping to bits). Many other codes exist: for example, Bacon-Shor codes (a variant of Shor’s code with fewer stabilizers), color codes (another topological code in 2D/3D with multi-qubit stabilizers), and LDPC codes (recent quantum low-density parity-check codes that aim to reduce overhead). The stabilizer framework also connects to classical codes over GF(4) (the field of four elements), providing a rich source of code constructions. In practice, when designing a QEC system, one considers factors like the code’s distance (error-correcting capability), the number of physical qubits per logical qubit, and the connectivity requirements for implementing stabilizer measurements. A code like surface code has high threshold but requires a 2D grid; a code like a concatenated Steane code can work with all-to-all connections but may have a lower threshold. The choice of code is often tailored to the hardware and error rates available.

Bosonic Codes (for bosonic qubits)

Bosonic codes utilize quantum harmonic oscillators (modes of light or motion) to encode qubits in high-dimensional Hilbert spaces (instead of discrete two-level systems). Examples include modes of a superconducting resonator or an optical cavity. The idea is to leverage the infinite-dimensional space of an oscillator to redundantly encode a qubit in such a way that certain errors (like photon loss) can be detected and corrected with minimal overhead in additional physical qubits. Bosonic codes often result in biases in error rates – for instance, a properly engineered bosonic qubit might naturally suffer far fewer bit-flip errors than phase errors, allowing simplified error correction on the dominant error channel. Key bosonic code families are:

Cat codes: A cat code encodes a qubit into two (or more) superpositions of coherent states of an oscillator, reminiscent of Schrödinger’s cat being in a superposition of “alive” and “dead”. For example, one could encode $$|\bar{0}\rangle = |\alpha\rangle + |-\alpha\rangle$$ and $$|\bar{1}\rangle = |\alpha\rangle - |-\alpha\rangle$$ (properly normalized), where $$|\alpha\rangle$$ is a coherent state of a bosonic mode (a displaced vacuum) and $$|-\alpha\rangle$$ is the same state with its phase flipped. These cat qubit states have even or odd photon number parity, respectively. A single-photon loss (the dominant error in a superconducting resonator) changes the photon number by one, which flips the parity from even to odd or vice versa – effectively transforming an encoded basis state into the other basis state. In this parity basis, a single photon loss therefore acts as a logical bit-flip ($$X$$ error), while the complementary error, which swaps $$|\alpha\rangle$$ and $$|-\alpha\rangle$$, can be made exponentially rare in the cat size $$|\alpha|^2$$. Many treatments instead take $$|\alpha\rangle$$ and $$|-\alpha\rangle$$ themselves as the computational states; under that labeling photon loss is a phase-flip and the exponentially suppressed error is the bit-flip. Using this second convention, cat qubits exhibit a bias: bit-flip errors become much less frequent than phase-flip errors due to the hardware architecture. One can then apply simpler quantum error correction (like a repetition code) to correct the remaining dominant phase errors. Cat codes have been experimentally realized in circuit QED systems, demonstrating the extension of qubit coherence by encoding into cat states. They are attractive because they convert a physical error (photon loss) into a detectable syndrome (parity change) and because they require fewer physical qubits (the oscillator itself is one mode serving as the qubit, with maybe one ancilla for syndrome measurement).
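The parity argument can be verified directly in a truncated Fock basis. The sketch below is plain Python with illustrative function names; the amplitude $$\alpha = 2$$ (taken real) and the cutoff of 40 photon-number states are arbitrary choices made for the example.

```python
import math

def coherent(alpha, nmax):
    """Fock-basis amplitudes of the coherent state |alpha> (alpha real), truncated at nmax."""
    return [math.exp(-alpha ** 2 / 2) * alpha ** n / math.sqrt(math.factorial(n))
            for n in range(nmax)]

def normalize(v):
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]

def cat(alpha, sign, nmax=40):
    """Normalized |alpha> + sign * |-alpha>: even cat for sign=+1, odd cat for sign=-1."""
    plus, minus = coherent(alpha, nmax), coherent(-alpha, nmax)
    return normalize([p + sign * m for p, m in zip(plus, minus)])

def lose_photon(v):
    """Apply the annihilation operator a|n> = sqrt(n)|n-1> and renormalize."""
    return normalize([math.sqrt(n) * v[n] for n in range(1, len(v))] + [0.0])

def parity(v):
    """Expectation value of the photon-number parity operator (-1)^n."""
    return sum((-1) ** n * c * c for n, c in enumerate(v))

even_cat = cat(2.0, +1)
odd_cat = cat(2.0, -1)
after_loss = lose_photon(even_cat)
overlap = sum(a * b for a, b in zip(after_loss, odd_cat))
```

The even cat has parity +1, and after one photon loss the state has parity -1 and coincides with the odd cat up to truncation error, so a parity measurement flags the loss event without distinguishing which superposition of the two cats was stored.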

Gottesman-Kitaev-Preskill (GKP) codes: The GKP code, proposed in 2001 by Gottesman, Kitaev, and Preskill, encodes a qubit into a continuous variable (oscillator) by using a grid of periodic wavefunctions in phase space. In an ideal GKP code, the logical $$|0\rangle$$ and $$|1\rangle$$ are represented by comb-like wavefunctions that are infinite superpositions of position eigenstates spaced by some period (or similarly in momentum). Intuitively, these are like two interleaved lattices of points in the oscillator’s phase space. Small shifts in position or momentum (which correspond to small analog errors) will move the state off the grid points, and a syndrome measurement (effectively measuring the stabilizers which are large displacements) will detect those shifts modulo the grid spacing, allowing one to correct them back to the nearest lattice point. In this way, the GKP code converts small continuous errors into discrete syndrome shifts. A big advantage is that the GKP code can, in principle, correct small arbitrary displacement errors (in both quadratures) up to some maximum shift. This directly targets errors like photon loss, jitter, or noise in an oscillator. GKP codewords are quite exotic (they require highly squeezed states or approximate finite-energy versions of them), which made them experimentally challenging for a long time. But recent progress in superconducting circuits and ion traps has seen partial demonstrations of GKP encodings, and the GKP code is now considered a frontrunner for bosonic QEC. One exciting aspect is that GKP codes, when concatenated with an outer qubit code, might significantly reduce the overhead required for fault tolerance by correcting most analog errors at the bosonic level.
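The “correct back to the nearest lattice point” step can be sketched for a single quadrature. The example assumes the common square-lattice convention in which the logical $$|0\rangle$$ and $$|1\rangle$$ combs are offset by $$\sqrt{\pi}$$, so a displacement by an odd multiple of $$\sqrt{\pi}$$ is a logical flip; the function name and the idealized, noise-free syndrome measurement are illustrative assumptions.

```python
import math

SQRT_PI = math.sqrt(math.pi)

def gkp_correct(shift):
    """Idealized GKP correction of a position shift in one quadrature.

    The syndrome only reveals the shift modulo sqrt(pi). The decoder undoes
    that residual, so the net displacement after correction is the nearest
    multiple of sqrt(pi). An odd multiple is a logical error.
    """
    nearest = round(shift / SQRT_PI)
    measured_residual = shift - nearest * SQRT_PI
    logical_error = (nearest % 2 == 1)
    return measured_residual, logical_error

small = gkp_correct(0.3)   # below sqrt(pi)/2, about 0.886: corrected
large = gkp_correct(1.0)   # above sqrt(pi)/2: decoder rounds the wrong way
```

Shifts smaller than $$\sqrt{\pi}/2$$ in magnitude are removed exactly, while larger ones are rounded to the wrong lattice point and become a logical error, which is the “maximum shift” referred to above.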

Binomial codes: Binomial codes are a family of bosonic codes introduced in 2016 that encode a qubit into specific superpositions of Fock states (number states) of an oscillator. They are termed “binomial” because the coefficients of the superposition are chosen from binomial distributions. For instance, one simple binomial code (the (N=2, S=2) code) uses the Fock states $$|0\rangle$$, $$|2\rangle$$, $$|4\rangle$$ to encode logical states as $$|\bar{0}\rangle=(|0\rangle+|4\rangle)/\sqrt{2}$$ and $$|\bar{1}\rangle=|2\rangle$$. This particular code can detect one photon loss or gain error: losing a photon from $$|4\rangle$$ or $$|2\rangle$$ moves to a state outside the code space (e.g. $$|3\rangle$$ or $$|1\rangle$$), which can be detected by a parity-type measurement. In general, binomial codes are constructed to cancel out errors up to a certain order of photon loss, gain, or dephasing by appropriate choice of codewords. They approximately protect against continuous dynamics like damping. The big appeal of binomial codes is that they can be implemented with finite-energy states that are relatively easier to produce (compared to ideal GKP states or large cat states) and they can be tailored to the dominant error channel of the oscillator (for example, a code that protects against up to two photon losses). Binomial codes have been experimentally tested in superconducting cavity setups, showing the extension of qubit lifetime by actively correcting detected photon jumps. They represent a hardware-efficient approach, since one oscillator mode encodes the qubit and only a few ancillary qubits and operations are needed for error syndrome measurement.
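For the codewords quoted above, the conditions that make a single photon loss correctable can be checked in a few lines. This is a sketch in plain Python with states stored as `{photon_number: amplitude}` dictionaries; the helper names are illustrative.

```python
import math

ZERO_L = {0: 1 / math.sqrt(2), 4: 1 / math.sqrt(2)}   # (|0> + |4>)/sqrt(2)
ONE_L = {2: 1.0}                                       # |2>

def lose_photon(state):
    """Apply the annihilation operator a|n> = sqrt(n)|n-1> (result left unnormalized)."""
    return {n - 1: math.sqrt(n) * amp for n, amp in state.items() if n > 0}

def inner(a, b):
    """Inner product of two real-amplitude states."""
    return sum(amp * b.get(n, 0.0) for n, amp in a.items())

def mean_photons(state):
    return sum(n * amp ** 2 for n, amp in state.items())

zero_after = lose_photon(ZERO_L)   # proportional to |3>
one_after = lose_photon(ONE_L)     # proportional to |1>
```

Both codewords contain two photons on average, so a loss event is equally likely for either and reveals nothing about the stored state. After the loss the two states have odd parity, remain orthogonal to each other, and have equal norm, so a parity measurement detects the jump and the superposition survives undistorted.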

Bosonic codes effectively leverage physical oscillator systems to absorb certain errors at the hardware level, thereby reducing the load on higher-level digital QEC. Often they result in an error bias (e.g. heavily suppressed $$X$$ errors relative to $$Z$$ errors). This bias can be exploited by simpler classical codes (like a repetition code on the bosonic qubits) to correct the remaining errors. A current theme in quantum hardware research is to develop bias-preserving logical qubits – if one can make one type of error extremely rare, the QEC overhead to handle the other type becomes much smaller. Bosonic encodings like cat and GKP are promising routes to achieve that.

Error Mitigation Techniques (for near-term devices)

Error mitigation refers to methods that reduce the effect of errors on computational results without encoding in large QEC codes. These methods are especially useful for today’s “NISQ” (Noisy Intermediate-Scale Quantum) devices, which have too few qubits to implement full QEC codes but still could benefit from some error reduction. Error mitigation does not prevent or correct errors in the same sense as QEC, but it tries to extrapolate or cancel out the error effects in software. A few common techniques are:

Zero-noise extrapolation (ZNE): This technique involves purposely varying the noise in the quantum computation and using the results to extrapolate to the zero-noise limit. In practice, one can “amplify” the noise in a circuit (for example, by stretching gate durations or repeating gates so that errors accumulate more) to obtain measurements at higher error rates, and then perform a fit/extrapolation (linear, polynomial, or exponential) of the observable’s value back to what it should be at zero error. For instance, one might run the same quantum circuit with normal noise, 2× noise, and 3× noise, and get outcome expectations $$E(1x), E(2x), E(3x)$$, then fit a curve and extrapolate to 0x noise to estimate the error-free result. Qiskit and other frameworks implement noise amplification by techniques like gate folding – e.g., replacing a gate $$U$$ with $$U U^\dagger U$$ (which is ideally equivalent to $$U$$ alone but in reality adds noise) to scale noise up. ZNE has been shown to improve the accuracy of computations like variational algorithms, though it is not bias-free (extrapolation assumes a certain noise model). It requires multiple runs of the circuit and classical post-processing but no extra qubits.
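The extrapolation step itself is ordinary curve fitting. A minimal sketch in plain Python, assuming polynomial (Richardson) extrapolation through all measured points; the function name and the synthetic expectation values are illustrative and do not come from any device or library:

```python
def richardson_extrapolate(scale_factors, expectation_values):
    """Evaluate at zero noise the polynomial passing through all measured points.

    Uses the Lagrange interpolation formula evaluated at scale factor 0.
    """
    estimate = 0.0
    for i, (xi, yi) in enumerate(zip(scale_factors, expectation_values)):
        weight = 1.0
        for j, xj in enumerate(scale_factors):
            if j != i:
                weight *= (0.0 - xj) / (xi - xj)
        estimate += weight * yi
    return estimate

# Synthetic data following E(s) = 1 - 0.2*s + 0.01*s**2, so the ideal value is 1.
scales = [1.0, 2.0, 3.0]
measured = [0.81, 0.64, 0.49]
zero_noise_estimate = richardson_extrapolate(scales, measured)
```

For the scale factors 1, 2, 3 the estimate reduces to $$3E(1x) - 3E(2x) + E(3x)$$. The large coefficients show why ZNE amplifies statistical noise: shot-noise fluctuations in each measured value are multiplied by these weights, so more samples are needed than for the unmitigated circuit.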

Probabilistic error cancellation (PEC): This is a more advanced technique where one characterizes the noise on the quantum gates, and then adds a probabilistic mixture of inverse error operations to cancel it out on average. Essentially, if you know the error process $$\mathcal{E}$$ affecting your gates, you can write its inverse $$\mathcal{E}^{-1}$$, and hence the ideal gate, as a quasiprobability mixture of operations the noisy hardware can actually perform, applied with certain weights (some weights might be negative, hence “quasi”). By randomly sampling these operations according to the magnitudes of the quasiprobabilities and keeping track of their signs, one can unbias the expectation value of an observable, getting the correct result in expectation. The drawback is a sampling overhead: the variance of the results increases with the “negativity” of the quasiprobabilities, meaning you have to sample many more circuit runs to get a high-confidence estimate. PEC yields an unbiased estimator of the error-free outcome, unlike ZNE, but if the noise is large the sampling cost becomes impractical. PEC has been combined with noise characterization techniques and is available in libraries like Mitiq.
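A toy instance makes the quasiprobability idea concrete. The sketch below assumes the simplest possible noise model, a bit-flip channel $$\mathcal{E}(\rho) = (1-p)\rho + pX\rho X$$ with a perfectly known $$p < 1/2$$, tracks only the expectation value $$\langle Z\rangle$$, treats the inserted $$X$$ as ideal, and computes the average over the sampled branches analytically instead of by Monte Carlo. All function names are illustrative.

```python
def bit_flip_inverse_weights(p):
    """Quasiprobabilities (a, b) such that E^{-1}(rho) = a*rho + b*X rho X."""
    if not 0 <= p < 0.5:
        raise ValueError("bit-flip probability must satisfy 0 <= p < 0.5")
    return (1 - p) / (1 - 2 * p), -p / (1 - 2 * p)

def noisy_z_expectation(z_ideal, p):
    """A bit-flip channel shrinks <Z> by the factor (1 - 2p)."""
    return (1 - 2 * p) * z_ideal

def pec_estimate(z_ideal, p):
    """Average of the PEC estimator over its two branches.

    Branch 1 inserts nothing; branch 2 inserts an X gate, which maps <Z> to -<Z>.
    Each branch is weighted by its (possibly negative) quasiprobability.
    """
    a, b = bit_flip_inverse_weights(p)
    return a * noisy_z_expectation(z_ideal, p) + b * noisy_z_expectation(-z_ideal, p)

def sampling_overhead(p):
    """Factor by which the number of shots grows: (|a| + |b|)^2."""
    a, b = bit_flip_inverse_weights(p)
    return (abs(a) + abs(b)) ** 2

raw = noisy_z_expectation(1.0, 0.1)
mitigated = pec_estimate(1.0, 0.1)
overhead = sampling_overhead(0.1)
```

For $$p = 0.1$$ the unmitigated value is 0.8 and the PEC average recovers the ideal value 1, at the cost of roughly 1.56 times as many shots for this single noisy location. The overhead multiplies across every noisy gate in a circuit, which is why the cost becomes impractical for strong noise or deep circuits.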

Dynamical decoupling: While not a computational post-processing method like the above two, dynamical decoupling (DD) is a pulse control technique used on quantum hardware to suppress certain errors. It involves inserting sequences of pulses on idle qubits to average out low-frequency noise and undesired couplings. This is essentially a quantum analog of spin-echo techniques from NMR. For example, if a qubit is idle and prone to dephasing, applying an $$X$$ pulse halfway through the idle period can refocus static noise. More advanced DD sequences (XY4, KDD, etc.) apply multiple pulses. DD can mitigate coherent errors that accumulate while qubits sit idle. It doesn’t correct arbitrary discrete errors but can prolong coherence times. Many near-term experiments use DD to maximize qubit fidelity during algorithm execution. It is a form of error suppression at the physical level and can be combined with other mitigation methods.
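The refocusing effect of a single echo pulse can be sketched with a simple phase-accumulation model. The example assumes purely static, shot-to-shot random detuning drawn from a Gaussian distribution, and ideal instantaneous pulses that reverse the sign of subsequent phase accumulation; the function names and parameter values are illustrative.

```python
import math
import random

def accumulated_phase(detuning, total_time, pulse_times):
    """Net dephasing angle when each pi pulse flips the sign of later accumulation."""
    phase, sign, last = 0.0, 1.0, 0.0
    for t in sorted(pulse_times):
        phase += sign * detuning * (t - last)
        sign = -sign
        last = t
    phase += sign * detuning * (total_time - last)
    return phase

def coherence(detunings, total_time, pulse_times):
    """Ensemble-averaged coherence <cos(phase)>; 1 means no dephasing."""
    return sum(math.cos(accumulated_phase(d, total_time, pulse_times))
               for d in detunings) / len(detunings)

rng = random.Random(0)                       # fixed seed for reproducibility
detunings = [rng.gauss(0.0, 1.0) for _ in range(2000)]

free_decay = coherence(detunings, total_time=2.0, pulse_times=[])
with_echo = coherence(detunings, total_time=2.0, pulse_times=[1.0])
```

Without pulses the ensemble coherence drops far below 1, whereas a single pulse at the midpoint cancels the static phase for every sample. Noise that fluctuates during the idle period is only partly cancelled, which is the motivation for multi-pulse sequences.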

Measurement-based error suppression: These methods involve using additional measurements to detect and reduce errors. One simple example is readout error mitigation – measuring a qubit can itself be error-prone (the classical outcome can be flipped). By calibrating the readout error rates and possibly repeating measurements, one can correct the bias in measurement results. Another example is postselection on symmetry checks: in certain quantum algorithms, the ideal state should satisfy a known constraint (like conservation of particle number or a parity). By measuring a related operator during the computation, one can detect if an error has likely occurred (the system fell outside the allowed subspace) and discard those runs. This doesn’t fix the error, but it filters out some error instances. For instance, in a variational quantum eigensolver for a molecule, one might enforce that the measured total spin or electron number is consistent; any deviation indicates an error and that run is excluded from averaging. More generally, quantum subspace expansion techniques use additional measurements of projectors onto error spaces to correct the final result via linear combination (a kind of recovery of likely error states in the output). All these approaches use measurements (during or after the circuit) to gain partial information about errors and then either correct the results or discard faulty runs. They require additional circuit runs and are not scalable in the long run (since discarding runs is inefficient when error rates are high), but they can noticeably improve the fidelity of near-term experiment results.
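Readout error mitigation for a single qubit amounts to inverting a 2×2 calibration (confusion) matrix. A minimal sketch in plain Python, assuming the two misassignment probabilities have been calibrated beforehand; the function name and the numbers are illustrative:

```python
def mitigate_readout(observed, e0, e1):
    """Undo single-qubit readout errors on a measured distribution (p0, p1).

    e0 = P(read 1 | prepared 0), e1 = P(read 0 | prepared 1).
    The observed distribution is M @ true with M = [[1-e0, e1], [e0, 1-e1]];
    this function applies the inverse of M.
    """
    det = (1 - e0) * (1 - e1) - e0 * e1
    if abs(det) < 1e-12:
        raise ValueError("calibration matrix is singular")
    p0_obs, p1_obs = observed
    p0 = ((1 - e1) * p0_obs - e1 * p1_obs) / det
    p1 = (-e0 * p0_obs + (1 - e0) * p1_obs) / det
    return p0, p1

# A true distribution (0.7, 0.3) read out with e0 = 0.02 and e1 = 0.05
# would be observed, on average, as (0.701, 0.299).
corrected = mitigate_readout((0.701, 0.299), e0=0.02, e1=0.05)
```

With finite statistics the inverted distribution can contain small negative entries, so practical implementations usually clip or fit to the nearest valid probability distribution. For $$n$$ qubits the full calibration matrix has $$2^n \times 2^n$$ entries, which is one reason this approach does not scale without further assumptions.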

In summary, error mitigation trades increased sampling and calibration for improved accuracy without using the full overhead of QEC codes. These techniques will not by themselves allow quantum computers to scale indefinitely (for that, true error correction is needed), but in the near term they are vital for squeezing out as much performance as possible from noisy devices.

Comparison of Quantum Error Correction Methods

There is a spectrum of QEC methods, each with its own trade-offs in overhead, fault-tolerance, and practicality. Traditional quantum error-correcting codes like surface or Steane codes require a significant number of physical qubits per logical qubit, but they promise exponentially suppressed error rates when scaled. For instance, the surface code might need on the order of ~100 physical qubits to achieve a logical error rate of say $$10^{-3}$$, and many thousands for error rates ~$$10^{-9}$$ suitable for long algorithms, given current physical error rates. Concatenated codes (like a Steane code concatenated with itself multiple times) can also reach very low error rates, but again at the cost of recursively multiplying qubit overhead and gate counts.

Different codes target different regimes: small block codes (5-qubit, 7-qubit codes) can correct one error with modest overhead but have low threshold (they only work if physical error rates are extremely low, often below $$10^{-4}$$). Topological codes like the surface code have high threshold (~1%), meaning they can start improving error rates even when roughly 1 in 100 physical operations fails, which is why they are favored for first implementations. However, surface codes have a large constant overhead: you might need dozens of physical qubits just to encode one logical qubit of distance 3 or 5.

Bosonic vs qubit codes: Bosonic codes attempt to get more mileage out of each physical quantum system by using its many levels. In terms of overhead, a bosonic code uses zero extra qubits (the encoding lives in one device, like a cavity mode), but it often requires one or two ancilla qubits to perform syndrome measurement on that mode. Bosonic codes also typically need higher circuit depth for error syndrome extraction (e.g., multiple gates between a cavity and ancillas). They can dramatically suppress one type of error (e.g. the cat code biasing against bit-flips), which is advantageous if that error is dominant. However, if a bosonic mode suffers unanticipated errors or if the error rates are not as biased as expected, the benefits may diminish.

Error mitigation vs error correction: Error mitigation techniques have the advantage of low overhead – one uses the same quantum circuit but with clever post-processing or tweaks. They are invaluable in the near term but do not scale to arbitrary circuit lengths. They also often require detailed characterization of noise or repeated experiments, which can be time-consuming. In contrast, quantum error-correcting codes once activated in a fault-tolerant quantum computer will actively correct errors on the fly and, beyond a certain point, allow the quantum algorithm to run as long as needed (as long as the error rate is below threshold). The trade-off is that QEC codes need many more qubits and gates to implement.

Regardless of code, implementing QEC in hardware introduces new error sources and complexity. Syndrome measurements require ancilla qubits and extra gates, which themselves can fail. A fault-tolerant design must ensure that a fault during syndrome extraction doesn’t propagate and cause more harm (this leads to complex protocols like syndrome extraction with Shor or Steane ancilla states, or using flag qubits to catch ancilla faults). Issues like crosstalk (qubits unintentionally interacting) and state leakage (qubits leaving the computational subspace) can violate the assumptions of the code and require additional handling. There’s also a significant overhead in classical processing: decoding the syndrome (especially for the surface code) is a non-trivial graph matching problem that must be solved in real time, faster than syndrome data accumulates. Fortunately, efficient decoders exist and have been demonstrated for moderate code sizes.
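To illustrate what "decoding the syndrome" means, here is a minimal sketch for the much simpler repetition code rather than the surface code. It is a classical simulation of bit-flip errors only: in a real device the parities would come from ancilla measurements, never from reading the data qubits directly. For this one-dimensional code, only two error patterns are consistent with any given syndrome, so picking the lighter one is exact minimum-weight decoding. Surface-code decoders solve the two-dimensional analogue with graph matching. All function names are hypothetical.

```python
from typing import List


def syndrome(bits: List[int]) -> List[int]:
    """Parity of each neighbouring pair; a 1 marks a disagreement."""
    return [bits[i] ^ bits[i + 1] for i in range(len(bits) - 1)]


def decode(synd: List[int]) -> List[int]:
    """Return the lowest-weight bit-flip pattern consistent with the syndrome."""
    # Assume the first qubit is error-free and propagate parities along the chain.
    candidate = [0]
    for s in synd:
        candidate.append(candidate[-1] ^ s)
    # The only other consistent pattern is the bitwise complement.
    complement = [b ^ 1 for b in candidate]
    return candidate if sum(candidate) <= sum(complement) else complement


def correct(bits: List[int]) -> List[int]:
    """Apply the decoded correction to a (classically simulated) noisy codeword."""
    flips = decode(syndrome(bits))
    return [b ^ f for b, f in zip(bits, flips)]


if __name__ == "__main__":
    print(correct([0, 0, 1, 0, 0]))  # one flip on a distance-5 code: recovered
    print(correct([1, 1, 1, 0, 0]))  # three flips from all-zeros: decoded wrongly
```

The second call shows the limit of the code: once more than half the bits have flipped, the minimum-weight guess is wrong and a logical error results.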

Another concern is fabrication yield and control electronics for large qubit arrays – if one qubit in a surface code patch is dead or faulty, it could compromise the code. Some proposals consider using redundancy or alternative codes that can handle a few bad qubits. Moreover, the resource cost in terms of additional gates for QEC can slow down the effective computational speed (each logical gate might need many physical gate operations). Balancing the error suppression versus overhead is an ongoing engineering optimization.

Future Prospects and Challenges

Achieving full fault-tolerant quantum computing will require surmounting several remaining challenges: improving physical qubit quality, engineering larger systems with thousands or millions of qubits, and perhaps developing new codes or architectures that reduce the overhead. From a theoretical perspective, the threshold theorem assures us that if we can get physical error rates below the threshold (and maintain certain independence assumptions), we can build quantum computers of arbitrary size.

In practice, thresholds for common codes like surface codes are around $$10^{-2}$$ per gate; many state-of-the-art qubits today have error rates in the $$10^{-3}$$ to $$10^{-4}$$ range for single-qubit gates and a bit higher for two-qubit gates, so current hardware is in the vicinity of the threshold and, in the best cases, below it.

In the next decade, we can expect:

Better QEC codes and decoders: Research is ongoing into codes with higher rate (more logical qubits per physical qubit) and efficient decoding algorithms. For example, quantum LDPC codes promise constant overhead per logical qubit (instead of overhead that grows with the square of the code distance, as in the surface code). As quantum devices grow, these codes might become testable. Machine learning and AI are also being used to optimize error decoders and even error suppression sequences.

Integration of hardware and QEC: We will likely see specific hardware improvements that make QEC easier. This could mean microwave packaging that supports connectivity among thousands of qubits, cryogenic classical processors to handle syndrome processing on the fly, and better isolation to reduce correlated errors (which QEC assumes are rare). Companies are investing in custom control chips and modular architectures (for instance, dividing qubits into error-corrected nodes connected by photonic links).

Fault-tolerant logical operations: Thus far, most experiments focus on storing a logical qubit. But a quantum computer needs to compute on logical qubits. Certain gates, like the CNOT, are relatively straightforward in surface codes (through lattice surgery or braiding), but others like T-gates require state injection and magic state distillation, which are very resource-intensive. A big challenge will be demonstrating a universal set of logical gates with acceptable overhead.

Combining error mitigation and correction: Even when true QEC is running, synergistic use of error mitigation can help, for example by improving the estimates of error syndromes or discarding the rare uncorrectable events. We might see hybrid techniques ensuring that the effective error on logical qubits is pushed down even further, potentially enabling demonstrations of benchmarks like Quantum Volume tests or small algorithms running for many more steps than previously possible.

If these challenges are met, the payoff is enormous. Reliable error-corrected qubits would enable running deep quantum algorithms that are beyond the reach of classical simulation. For instance, quantum chemists anticipate solving complex molecular structures and reaction dynamics that classical computers cannot handle, once hundreds of logical qubits are available. Cryptographers brace for demonstrations of Shor’s algorithm factoring large numbers – which might require thousands of logical qubits and billions of operations, only feasible with full error correction. Other potential applications include optimization problems, finite field arithmetic for cryptography, and simulation of high-energy physics, all of which need long circuit depths.

To put it in perspective, today’s noisy devices can manage circuits of depth only in the tens or so before noise dominates. A fully error-corrected quantum computer could run circuits of depth in the millions or more, unlocking algorithms like Grover’s search or many iterations of quantum phase estimation. However, to get there we likely need on the order of $$10^6$$ to $$10^7$$ physical qubits if using surface-code-like overheads, a number far beyond the few hundred qubits available now. This will require radical improvements in fabrication (for solid-state qubits) or trapping technology (for ions/atoms), as well as cryogenic and room-temperature infrastructure.
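The $$10^6$$ to $$10^7$$ figure can be checked with a back-of-envelope estimate. The sketch below assumes a rotated surface code with $$2d^2 - 1$$ physical qubits per logical qubit and the heuristic $$p_L \approx A\,(p/p_{th})^{(d+1)/2}$$. The values `A = 0.1` and `p_th = 1e-2`, and the example workload (1,000 logical qubits, physical error rate $$2 \times 10^{-3}$$, logical error target $$10^{-12}$$), are illustrative assumptions. Routing space and magic-state factories are ignored, so real requirements would be higher.

```python
def min_distance(p: float, target: float,
                 p_th: float = 1e-2, prefactor: float = 0.1) -> int:
    """Smallest odd distance whose heuristic logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below the threshold")
    d = 3
    while prefactor * (p / p_th) ** ((d + 1) // 2) > target:
        d += 2  # surface-code distances are conventionally odd
    return d


def total_physical_qubits(n_logical: int, distance: int) -> int:
    """Data plus ancilla qubits for n_logical rotated surface-code patches."""
    return n_logical * (2 * distance * distance - 1)


if __name__ == "__main__":
    d = min_distance(p=2e-3, target=1e-12)
    print(d, total_physical_qubits(1000, d))  # prints: 31 1921000
```

Under these assumptions, a thousand logical qubits already require close to two million physical qubits, consistent with the range quoted above.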

In conclusion, quantum error correction has transitioned from a theoretical idea to experimental reality over the past 20+ years. The next milestones will be achieving logical qubits that outperform the best physical qubits by orders of magnitude, and then using them as building blocks for logical quantum processors. Every aspect – from materials science for qubits to algorithms for decoding – will play a role in this effort. The ultimate vision is a scalable quantum computer that, through error correction, can run complex algorithms reliably for days or weeks if needed, something impossible on today’s noisy devices.