Adiabatic Quantum (AQC) and Cyber (2024 Update)

Table of Contents

Introduction

Quantum computing promises to solve certain classes of problems that are intractable for classical computers by exploiting quantum-mechanical phenomena like superposition, entanglement, and tunneling. There are multiple models of quantum computation, with the gate-based (circuit) model being the most widely known. Gate-based quantum computers apply sequences of quantum logic gates to qubits (quantum bits) analogous to how classical computers use Boolean gates on bits. This “universal” gate model can perform any computation a classical computer can (and more efficiently for specific problems like factoring via Shor’s algorithm), which is why companies like IBM and Google focus on it.

However, gate-based quantum computing is not the only approach. Adiabatic Quantum Computing (AQC) is an alternative paradigm that uses an analog process based on the quantum adiabatic theorem. Instead of discrete gate operations, AQC involves slowly evolving a quantum system’s Hamiltonian such that it remains in its lowest-energy (ground) state, effectively “computing” the solution as the system’s final state. AQC and its practical subset known as quantum annealing are particularly geared toward solving optimization problems by finding minima of cost functions.

AQC is important for several reasons. First, it provides a fundamentally different route to quantum computation that may be easier to implement for certain technologies. Quantum annealing devices (like those from D-Wave Systems) already boast thousands of qubits dedicated to AQC, far surpassing the qubit counts of current gate-model machines – albeit with significant differences in capability. Second, AQC is theoretically powerful: it has been proven that under ideal conditions, the adiabatic model is polynomially equivalent to the gate model of quantum computing (meaning any problem solvable by one can be solved by the other with at most polynomial overhead). This implies that a universal AQC can run any quantum algorithm, including those known to threaten classical cryptography. Third, because AQC naturally tackles optimization, it aligns well with many real-world problems (logistics, machine learning, etc.) and could potentially revolutionize how we approach complex security tasks like threat detection or network optimization.

From a cybersecurity perspective, the rise of quantum computing – whether gate-based or adiabatic – is a double-edged sword. On one hand, quantum computers pose a serious threat to classical cryptography. Most of today’s encryption, digital signatures, and key exchange protocols rely on mathematical problems (factoring, discrete logarithms, etc.) that are infeasible for classical computers but could be solved efficiently by sufficiently large quantum computers. For example, Shor’s algorithm on a universal quantum computer can factor large integers and compute discrete logarithms in polynomial time, breaking RSA and ECC. Grover’s algorithm can speed up brute-force searches, undermining symmetric ciphers and hash functions (though only quadratically, meaning doubling key lengths restores security). Adiabatic quantum computers, being computationally equivalent in power, in principle share these abilities. Any quantum computing breakthrough threatens current cryptographic systems, and experts project that a cryptoanalytically relevant quantum computer (CRQC) could emerge within the next couple of decades. This looming “Q-Day” (the day when quantum attacks break our crypto) has spurred initiatives like NIST’s Post-Quantum Cryptography program to standardize quantum-resistant algorithms.

On the other hand, quantum computing can also enhance cybersecurity. Quantum techniques enable new cryptographic primitives such as quantum key distribution (QKD), which offers information-theoretic secure communication by physics (not math assumptions). Quantum algorithms might help improve security analytics – for example, by solving complex optimization problems in network hardening or by speeding up pattern-matching in large data for threat detection. Adiabatic quantum optimization, in particular, is well-suited to tasks that can be formulated as minimizing a cost function, which describes many problems in cybersecurity (e.g. finding minimal sets of malware signatures, optimal intrusion response strategies, etc.). Researchers are already exploring the integration of quantum annealers with artificial intelligence for real-time threat detection.

In-Depth Explanation of Adiabatic Quantum Computing (AQC)

Adiabatic Quantum Computing is built on a key principle from quantum physics: the quantum adiabatic theorem. In essence, the adiabatic theorem says that if a quantum system is initialized in the ground state (lowest energy state) of some Hamiltonian (which is essentially an “energy landscape” describing the system), and if the Hamiltonian is changed slowly enough, the system will remain in its ground state throughout the evolution. By the end of the process, if the Hamiltonian has been transformed into a new final Hamiltonian, the system will end up in the ground state of that final Hamiltonian (provided a few conditions like no level crossings or sufficiently large energy gaps). This is the foundation of AQC: encoding a computational problem into a Hamiltonian whose ground state represents the solution, then using slow (adiabatic) evolution to guide the quantum system to find that ground-state solution.

Let’s break down step-by-step how AQC solves optimization problems using this principle:

- Problem Mapping to Hamiltonian: First, we take an optimization problem and express it as finding the minimum value (optimal solution) of some cost function $$C(z)$$. In AQC, such a cost function is translated into a quantum Hamiltonian $$H_P$$ (the “problem Hamiltonian”) whose ground state corresponds to the optimal solution. For example, if the problem is a combinatorial optimization like 3-SAT or the Traveling Salesman, one can construct $$H_P$$ so that each possible configuration of variables corresponds to a quantum state, and the energy of that state in $$H_P$$ equals the cost (e.g., number of unsatisfied clauses, or the path length). The ground state (minimum energy) of $$H_P$$ thus encodes the best solution (satisfying assignment with zero clauses unsatisfied, or shortest route, etc.). Constructing such a Hamiltonian often involves using qubits to represent binary variables and coupling them so that undesirable configurations have higher energy.

- Choose an Initial Hamiltonian: Next, we need a starting point that we know how to prepare. We choose an initial Hamiltonian $$H_I$$ whose ground state is easy to prepare. Usually, $$H_I$$ is something simple like a magnetic field that forces all qubits to align in a known state. A common choice is $$H_I = -\sum_{i} \Delta \sigma_i^x$$, essentially a transverse field that makes the ground state an equal superposition of all possible basis states (all qubits in a balanced state of 0 and 1). The ground state of $$H_I$$ can be prepared by initializing all qubits to $$|0…0\rangle$$ and applying a Hadamard (in gate terms) or just letting the transverse field bias them into an equal superposition.

- Adiabatic Evolution (Quantum Annealing Process): Now the system is initialized in the known ground state of $$H_I$$. We then slowly interpolate the Hamiltonian from $$H_I$$ to the problem Hamiltonian $$H_P$$. Formally, we define a time-dependent Hamiltonian: $$H(t)=(1−s(t)) HI + s(t) HP,H(t) = (1-s(t))\, H_I \;+\; s(t)\, H_P,H(t)=(1−s(t))HI+s(t)HP$$, where $$s(t)$$ goes from 0 to 1 over the run time $$T$$. At $$t=0$$, $$s(0)=0$$ so $$H(0)=H_I$$; at the end $$t=T$$, $$s(T)=1$$ so $$H(T)=H_P$$. The interpolation schedule $$s(t)$$ must be slow enough to satisfy the adiabatic condition. “Slow enough” means the system’s evolution rate is small compared to the square of the minimum energy gap between the ground state and first excited state encountered during the process as explained in Wikipedia. If this condition holds, the adiabatic theorem guarantees the system will remain in the instantaneous ground state of $$H(t)$$ throughout. Intuitively, the system’s state “tracks” the shifting energy landscape, continuously adjusting to stay in the lowest-energy configuration available. Importantly, if the schedule is too fast (non-adiabatic), the system can get excited to a higher energy state (i.e., jump out of the ground state), yielding an incorrect result. The critical bottleneck is often the smallest energy gap $$g_{\min}$$ that occurs during the interpolation; near that point the evolution must be extremely slow to avoid transition (the required runtime $$T$$ generally scales inversely with some power of $$g_{\min}$$, often $$T \propto 1/g_{\min}^2$$ for a linear schedule).

- Ending in the Solution State: At the end of the evolution ($$t=T$$), the Hamiltonian is just $$H_P$$. By the adiabatic theorem, assuming everything went perfectly, the system is now in the ground state of $$H_P$$. This quantum state encodes the solution to our original problem. We then measure the qubits in the computational basis to read out the solution bits. Because the state was the ground state corresponding to the optimal solution, the measurement yields the optimal solution with high probability. (If the ground state is degenerate or the process had some probability of ending in an excited state, one might repeat the annealing run a few times and take the lowest-energy result found.)

To summarize this procedure in simple terms: “Initialize the qubits in an easy state, slowly morph the Hamiltonian so it represents your problem, and the qubits will settle into the answer”. The above steps 1–4 outline exactly how an adiabatic computer operates. It is essentially performing a physical energy minimization process, akin to gently guiding a ball to roll into the deepest valley of a landscape without getting stuck in smaller ditches along the way.

AQC intrinsically solves optimization problems by this method of finding ground states. Many NP-hard problems (like 3-SAT, MAX-CUT, graph coloring, etc.) can be formulated as finding minima of some cost function and thus, in principle, attacked by AQC Indeed, the original 2001 paper by Farhi et al., which introduced the Quantum Adiabatic Algorithm, applied it to random instances of an NP-Complete problem (exact cover / 3-SAT). Since then, researchers have tried AQC on various combinatorial optimizations and shown it can find solutions for small instances. However, whether it offers a significant speedup over classical algorithms for large-scale NP-hard problems remains an open question – often hinging on how the minimum spectral gap scales with problem size and whether clever strategies can avoid exponentially small gaps.

It’s important to clarify the differences between “universal” AQC and quantum annealing, as these terms are sometimes used interchangeably but actually denote different scopes:

- Universal AQC refers to an adiabatic quantum computer that is fully programmable to perform any arbitrary computation (within quantum complexity class BQP). This would require the ability to realize a wide variety of Hamiltonians and adiabatic paths, sufficient to encode any quantum algorithm. In theory, as mentioned, AQC is as powerful as the gate model. But achieving universality imposes stringent requirements on the types of Hamiltonians the hardware can implement and the level of control over the interpolation. For AQC to be universal, one must be able to implement a Hamiltonian that effectively represents a quantum circuit of arbitrary complexity, as explained in a report from Sandia National Laboratories. This often means the ability to have multi-qubit interactions or at least to embed such interactions via gadget constructions, and possibly the ability to have “non-stoquastic” terms (Hamiltonians that include sign-changing quantum terms that are thought to be necessary for universal quantum computing). Universal AQC is largely a theoretical construct today – no current hardware can realize all the Hamiltonians needed for universal quantum computation, though proposals exist, such as, for example, designs using superconducting qubits or Rydberg atoms with programmable interactions.

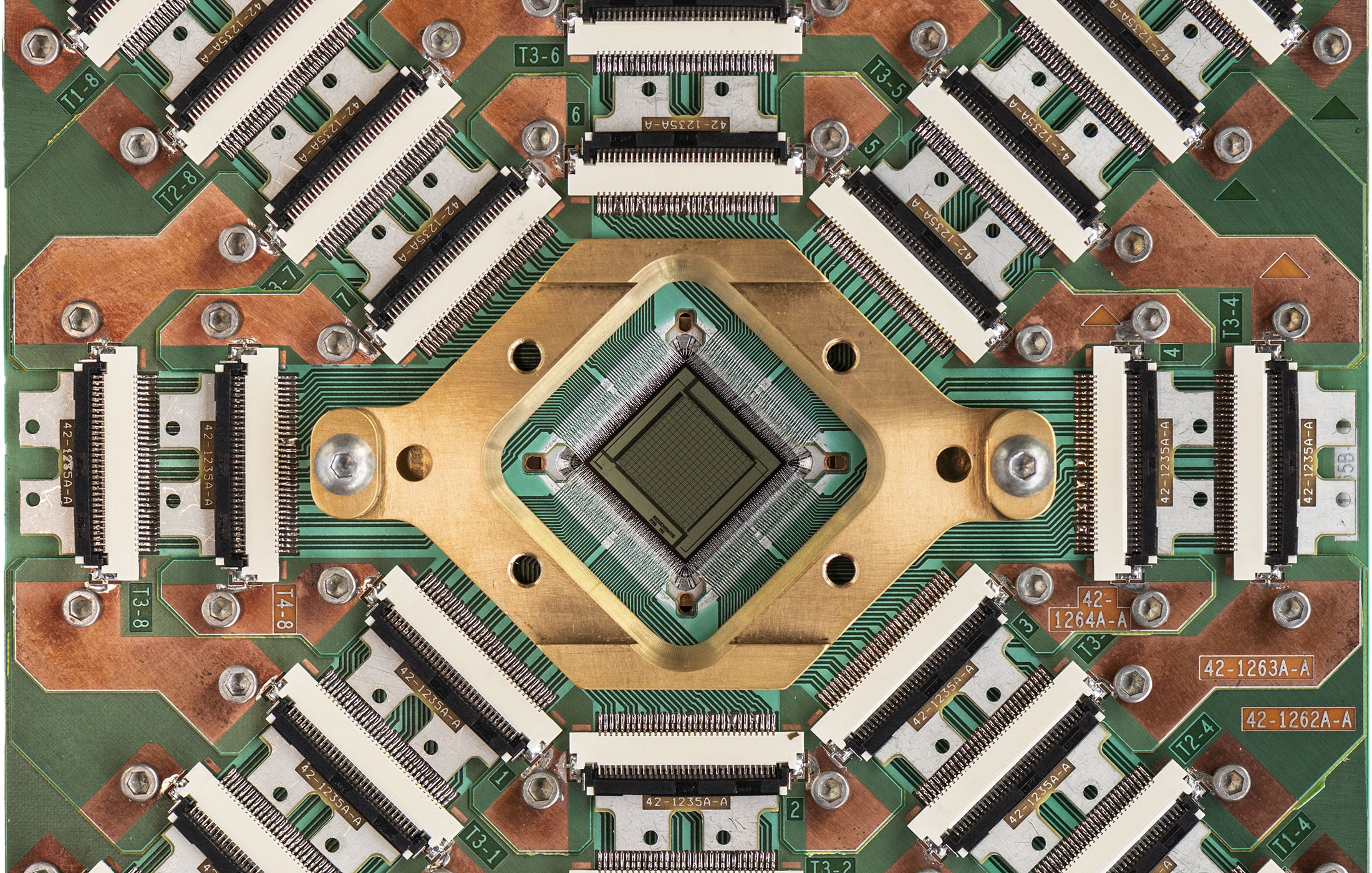

- Quantum Annealing (QA) usually refers to a practical, non-universal implementation of AQC focused specifically on optimization problems (often classical optimization problems). Quantum annealers implement a limited type of Hamiltonian, typically the Ising spin model or equivalently a Quadratic Unconstrained Binary Optimization (QUBO) form. For example, D-Wave’s annealers realize a Hamiltonian of the form: $$HP=∑ihiσiz+∑i<jJij σizσjz,H_P = \sum_{i} h_i \sigma_i^z + \sum_{i<j} J_{ij}\, \sigma_i^z \sigma_j^z,HP=∑ihiσiz+∑i<jJijσizσjz$$, with $$\sigma^z$$ being Pauli Z operators on qubit $$i$$, and programmable parameters $$h_i$$ (local fields) and $$J_{ij}$$ (couplings) between qubits. This corresponds to an Ising energy function on binary variables $$s_i \in {\pm1}$$: $$E(s) = \sum_i h_i s_i + \sum_{i<j}J_{ij} s_i s_j$$. Many optimization problems can be embedded into this form (by translating binary variables and constraints into an Ising model). However, this form is not fully general for quantum computation – it’s essentially an optimization problem Hamiltonian with only $$\sigma^z$$ (diagonal) interactions. The initial Hamiltonian on D-Wave is typically something like $$H_I = -\sum_i \sigma_i^x$$ (a transverse field on each qubit) to provide quantum fluctuation. So D-Wave performs an anneal from $$-\sum \sigma^x$$ to the Ising Hamiltonian $$H_P$$.

The crucial difference is that quantum annealing devices are not universal quantum computers; they cannot implement arbitrary quantum algorithms outside the framework of finding the ground state of an Ising-model Hamiltonian. For instance, you couldn’t directly run Shor’s algorithm or Grover’s algorithm on a standard quantum annealer, because those algorithms aren’t naturally phrased as optimization ground-state problems of the Ising type (at least not without enormous overhead). In contrast, a universal AQC, if one could build it, could simulate a circuit for Shor or Grover by constructing an appropriate Hamiltonian with a spectrum that drives the computation – this is theoretically possible because any quantum circuit can be encoded into a Hamiltonian whose ground state corresponds to the circuit’s output, using techniques from Feynman’s Hamiltonian computation and Kitaev’s circuit-to-Hamiltonian construction.

In summary, quantum annealing is a specialized subset or variant of AQC that relaxes the adiabatic requirement somewhat in practice and focuses on solving particular optimization formulations. Annealing devices may run relatively faster (not always fully adiabatic), and often operate at finite temperature, so the process is partly quantum, partly thermal annealing. They provide a kind of heuristic quantum optimization rather than exact, guaranteed adiabatic evolution. Universal AQC demands a slower, carefully controlled evolution with a much richer Hamiltonian design to cover all computations.

To give notable implementations: The prime example of AQC in action is D-Wave’s quantum annealers. D-Wave Systems (a Canadian company) pioneered building superconducting flux qubit processors that perform quantum annealing. Over successive generations, D-Wave’s machines grew from 128 qubits (D-Wave One in 2011) to 512, 1024, and the D-Wave 2X (around 1000 qubits), then 2000Q (≈2048 qubits), and most recently the D-Wave Advantage system with over 5000 qubits (5640 qubits) in its newest model. These qubit counts far exceed those of any gate-model quantum computer as of 2025. However, the qubits are connected in a particular sparse topology (initially a Chimera graph, now a Pegasus graph for the Advantage system), meaning not every qubit can directly interact with every other. That necessitates embedding larger problems onto the hardware graph with some overhead (using more physical qubits to represent one logical variable if needed). D-Wave’s devices operate at extremely low temperatures (~15 millikelvin) in a dilution refrigerator to minimize thermal noise, since thermal excitations could kick the system out of the ground state during the anneal. The programming of the device involves setting the coefficients $${h_i, J_{ij}}$$ for the problem and then invoking the annealing process which might take on the order of microseconds to milliseconds.

It’s important to note that D-Wave’s annealers are not “error-corrected” quantum computers. They are analog devices subject to noise and imperfections. While gate-based quantum computers usually strive for error correction (encoding one logical qubit into many physical qubits to actively correct errors), annealers currently rely on energy gap protection and the hope that annealing is robust enough to yield correct ground states with some probability. Researchers have developed mitigation techniques specific to QA, such as Quantum Annealing Correction (QAC) where groups of physical qubits are combined into a “logical qubit” via penalty terms and majority vote to reduce errors. Experiments with D-Wave showed that using such error suppression strategies (e.g., chaining 8 physical qubits together with strong ferromagnetic coupling to act as one, so they vote on the correct state) can significantly boost success probabilities. Still, a full error-corrected AQC would require far more qubits and engineering to implement robust logical qubits, which is an active research area.

Another notable implementation effort is by researchers aiming to build analog Hamiltonian simulators that could be turned to AQC purposes – for example, using cold atoms in optical lattices or trapped ions with continuously varying interactions. While these are often used for quantum simulation of physics models, in principle they could solve optimization problems by engineering the right Hamiltonian. To date, no one has announced a universal AQC machine or an annealer fundamentally different from D-Wave’s in scale, but academic prototypes (with smaller qubit counts) have demonstrated AQC principles using various technologies (superconducting circuits, nuclear spins, etc.). For instance, a 2019 experiment by Google demonstrated a simplified annealing process on their superconducting qubits to solve a small optimization problem, and researchers at NASA/USC have tested AQC algorithms on the D-Wave machines they house.

In theoretical models, proposals exist for universal AQC using superconducting qubits with more complex coupling circuits or non-stoquastic drivers (non-stoquastic meaning the off-diagonal elements of the Hamiltonian can have both signs, avoiding the so-called sign problem that limits classical simulation). One paper by Biamonte and Love (2007) outlined what Hamiltonian terms would be needed for universality and concluded that having the ability to program 2-qubit $$X!X$$ and $$X!Z$$ interactions in addition to the Ising $$Z!Z$$ would suffice for universal AQC. As of now, all implemented AQC/QA devices (like D-Wave) use “stoquastic” Hamiltonians (essentially no sign problem), which some theorists suspect may be efficiently simulatable by classical algorithms in many cases, thus limiting quantum advantage. The introduction of non-stoquastic terms is an area of research to potentially give QA a boost beyond classical simulation.

Comparison with Gate-Based and Universal Quantum Computing

Having outlined AQC, let’s compare it to the more standard gate-based quantum computing (GBQC) model and discuss the relative strengths and weaknesses.

Fundamental Differences in Approach

Gate-based quantum computing is a digital approach – it uses sequences of discrete operations (quantum gates like NOT, CNOT, Hadamard, etc.) on qubits. It is analogous to a classical computer running a program, but with quantum bits and reversible unitary gates. AQC, by contrast, is an analog approach – the “program” is encoded in continuous parameters of a Hamiltonian, and the computation is carried out by the natural time evolution of the quantum system. One way to think of it: in gate-based QC, we explicitly orchestrate how the state changes step by step; in AQC, we set up an energy landscape and rely on the physics of slow evolution to do the work of finding the low-energy state.

Because of this, AQC and gate-based QC have different resource requirements and error modes. Gate-based machines require precise pulsing of control signals for each gate and typically need error correction to achieve long computations, since each gate introduces some error and qubits decohere over time. AQC machines require maintaining the Hamiltonian control parameters very accurately over a relatively long time and need to preserve coherence at least until near the end of the anneal (some argue that even if the system thermalizes, as long as it stays near the ground state distribution, it might still find the solution). AQC is often considered somewhat more robust to certain types of noise – for example, if the evolution is slow, minor random perturbations might not violently kick the system out of the ground state, whereas in a gate model a single error in a critical gate can spoil the algorithm. However, AQC is not immune to noise: decoherence and diabatic transitions (going too fast) both reduce the success probability.

Universality

The gate model is universal by design – any unitary operation can be approximated with a sequence of logic gates (in fact, the Solovay-Kitaev theorem guarantees we can approximate with polynomial overhead using a discrete gate set). AQC can also be universal in principle, but only if one has the ability to implement complex Hamiltonians. In practice, current AQC devices are not universal, whereas even a small gate-based computer (if fully error-corrected) is universal. That said, a landmark theoretical result by Aharonov et al. (2005) proved that any gate-based quantum computation can be efficiently translated into an adiabatic process. In other words, AQC is computationally equivalent to the standard circuit model. The translation essentially encodes the quantum circuit’s operations into a Hamiltonian whose ground state encodes the history of the computation. The catch is that this Hamiltonian can be complex (usually 3-local or 4-local interactions, which then might be reduced to 2-local with gadgets at the cost of more qubits). So from a complexity theory standpoint, AQC and gate model both solve problems in BQP (bounded-error quantum poly-time). However, without error correction, real-world gate QCs and AQC may differ in what they can practically solve: e.g., a near-term gate computer might implement Shor’s algorithm up to a certain size before errors dominate, whereas a near-term annealer might tackle an optimization of a certain scale before noise and finite temperature cause too many mistakes.

Advantages of AQC

- Specialized performance on optimization: For problems that naturally map to the Ising or QUBO format, a quantum annealer can leverage quantum tunneling to escape local minima and potentially find better solutions than classical heuristics. There have been instances where D-Wave’s annealer reached high-quality solutions faster than some classical algorithms for specific problems like certain scheduling or molecular folding tasks, especially when tuned well. Quantum tunneling (qubits flipping via superposition) can sometimes hop through energy barriers that would trap a purely thermal annealing process.

- Large qubit count (scaling hardware): AQC devices currently can have thousands of qubits because their qubits are relatively simple (fixed analog circuits rather than requiring individually addressable logic gates for each pair). This means, for certain applications, one can embed larger problem instances on current annealers than one could on current small gate-model QCs. For example, D-Wave’s 5000+ qubit system can (with minor embedding overhead) handle Ising problems with thousands of variables, whereas a gate quantum computer with even 50 high-quality qubits is still state-of-the-art in 2025 for general algorithms.

- Potential robustness: As mentioned, AQC might gracefully degrade – if the evolution is not perfectly adiabatic or noise-free, the system might still end up near the ground state. It’s an analog optimization process, so one could run multiple times and take the lowest energy result obtained. In contrast, a gate algorithm might outright fail (give a wrong answer far from correct) if a few gate errors occur, unless error correction is in place. AQC’s analog nature is sometimes likened to an “optimization analog computer” – even if imperfect, it might still give a pretty good answer. This is attractive in domains like machine learning or heuristics where an approximate solution is acceptable.

- No need for complex gate scheduling: Programming an annealer can be simpler in concept – you set coefficients for your cost function – vs. writing a full quantum circuit. This high-level approach is more akin to formulating an optimization problem than worrying about gate sequences. For certain users (like operations research specialists), formulating QUBOs is more familiar than quantum circuit design.

Disadvantages of AQC

- Lack of error correction: Currently, AQC lacks the sophisticated error correction frameworks being developed for gate QCs. While error correction is theoretically possible for AQC (through energy penalties and encoding as discussed with QAC), implementing it requires many extra physical qubits per logical qubit. Without error correction, scaling AQC to very long adiabatic runs or very large problems faces the same decoherence obstacle: the system can only stay quantum-coherent for so long, and noise can cause excitations. Gate-based approaches at least have a roadmap to error-corrected, fault-tolerant computation (though it is enormously challenging, involving thousands of physical qubits for one logical qubit). Annealers currently largely operate in the “mostly coherence for part of the anneal, then maybe some thermal relaxation” regime, which may not extend to solving really hard instances that require long runtimes.

- Limited problem types (in current form): As noted, quantum annealers solve basically “find ground state of Ising model” problems. This is a useful but narrow class. Many quantum algorithms that give exponential speedups (factoring, Grover’s search, quantum simulation of arbitrary quantum systems, etc.) do not fit this mold straightforwardly. While one can sometimes reduce a problem to an optimization (e.g., factoring can be turned into an optimization problem as we’ll discuss in the cryptography section), it’s not always efficient to do so. Gate QCs, by contrast, can directly implement those algorithms. For example, Shor’s algorithm for integer factorization requires a circuit with modular exponentiation and Quantum Fourier Transform – something an annealer cannot natively do. AQC could factor via different means (possibly via optimization formulations or a different algorithm encoded in Hamiltonians, but that often blows up the resource requirements).

- Analog control challenges: AQC demands extremely fine control over analog parameters. The anneal schedule must be carefully calibrated; the analog values of thousands of couplings must be set with precision. Manufacturing imperfections can lead to biases or noise in those values (D-Wave, for instance, has to calibrate each qubit’s bias and coupling strengths, and still users often see minor errors requiring tweaking). Gate-based machines, although also analog in physical realization, deal with errors at the gate level and increasingly use calibration and error mitigation per gate. The analog nature of annealers means that each machine might have its own quirks (qubit 137 might systematically have more noise, etc.). This can make it harder to guarantee performance or to port a “program” from one machine to another without re-tuning.

- No guaranteed speedup for all cases: One of the big questions is whether AQC/QA offers a quantum speedup (i.e., asymptotic performance better than the best classical algorithms) for any problem of practical interest. Thus far, evidence of a clear quantum speedup with annealers is mixed. Some studies have shown that D-Wave machines do not outperform optimized classical algorithms like simulated annealing or exact solvers on various benchmark problems, except potentially in carefully contrived instances. Others have demonstrated modest speedups for certain crafted problems or in certain scaling regimes. The adiabatic algorithm could, in worst-case scenarios, take exponential time (e.g., if an exponentially small gap occurs). The same is true of classical algorithms on NP-hard problems, of course. But gate-model quantum algorithms (like Grover’s providing a quadratic speedup for unstructured search) have proven guarantees in complexity. AQC’s performance is harder to characterize and often problem-dependent. It may shine as a heuristic even if it doesn’t always give a clean complexity-theoretic advantage.

How AQC aligns or diverges from universal quantum computation

In principle, as stated, AQC can do anything a universal gate quantum computer can do. There’s even a technique to convert an adiabatic algorithm back into a circuit algorithm – basically simulate the adiabatic evolution with small time-step trotterization or other methods. So algorithmically, they have the same power. In practice, today’s AQC systems diverge from the path of universal quantum computation: they are more like special-purpose accelerators for optimization. Meanwhile, gate-based machines (though small) are being used to demonstrate universal algorithms (like small versions of Shor’s algorithm, variational quantum eigensolvers for chemistry, etc.). An interesting middle-ground is emerging in the form of hybrid algorithms like the Quantum Approximate Optimization Algorithm (QAOA), which is a gate-based algorithm inspired by adiabatic evolution. QAOA essentially performs a series of alternating operations akin to evolving with $$H_I$$ and $$H_P$$ for short bursts (rather than continuously) and can be seen as a discretized version of AQC that runs on gate hardware. This shows cross-pollination of ideas: QAOA is universal (for large depth it approximates AQC and in theory can achieve the same results), but at low depth it’s a heuristic for combinatorial optimization amenable to NISQ (noisy intermediate-scale quantum) gate devices.

In summary, AQC vs Gate QC trade off generality for focus: AQC (especially annealing implementations) focus on one type of problem really well – it’s like having a quantum optimiser – whereas gate QCs are the general computing machines that can eventually do everything including optimization (though perhaps with less efficiency on some specific tasks). In the long run, if error-corrected universal quantum computers become available, they could simulate an adiabatic algorithm or just run better algorithms outright. But it’s possible that specialized annealing hardware might always have an edge for certain optimization tasks due to having many qubits and analog efficiency, much like analog special-purpose devices can sometimes beat general digital computers for specific tasks.

Quantum Annealing vs. Universal Adiabatic Quantum Computing

The distinction between quantum annealing (QA) as practiced today and a hypothetical fully universal AQC is worth exploring in a bit more detail, as it has practical implications.

Quantum Annealing (QA)

In QA, the goal is specifically to solve optimization problems by exploiting quantum effects. A typical QA algorithm (like what D-Wave machines use) starts at $$H_I = -\sum \sigma^x$$ (all qubits in superposition) and slowly turns on problem Hamiltonian $$H_P$$ that represents, say, an Ising model or a QUBO. Ideally, if run slowly, this would be an adiabatic algorithm reaching the optimum. In practice, QA often runs at the edge of adiabaticity or even non-adiabatically – sometimes deliberately so to shorten run times, relying on the fact that even if it’s not perfectly adiabatic, the system might still land in a good low-energy state with some probability. Also, QA is usually an open system: the qubits are at low temperature but not absolute zero, so thermal excitations and relaxations occur during the anneal. This can help as well as hurt – in some cases a bit of thermalization can help the system hop out of excited states (quantum annealing in presence of a heat bath can be viewed as a form of quantum-assisted simulated annealing).

One can think of QA as a metaheuristic that uses quantum fluctuations instead of thermal fluctuations to search for the global minimum of a cost function. QA doesn’t strictly require the process to fulfill the adiabatic theorem conditions; it may finish in a state that’s not the exact ground state but hopefully near it. To improve the chances, one can repeat the anneal many times and take the best result. QA is essentially what current hardware does: run many relatively fast anneals (microseconds each) and statistically find a good solution.

Universal AQC

This implies adhering to the adiabatic theorem in a more rigorous way for an arbitrary programmable Hamiltonian path. A universal AQC could solve not just classical optimization problems, but also perform arbitrary quantum algorithms by adiabatically evolving through Hamiltonians that entangle qubits in more complex ways (including off-diagonal terms in the computational basis that encode algorithmic transitions). Universal AQC would need to handle Hamiltonians beyond the Ising form – for example, terms that act like $$\sigma^x_i \sigma^x_j$$ or multi-qubit interactions or non-stoquastic drivers that cannot be gauged away. It would also need a high degree of control precision since implementing an arbitrary unitary evolution via an adiabatic path might involve fine-tuning.

Key Differences

- Hamiltonian Complexity: QA typically deals with a fixed form (like Ising with transverse field). Universal AQC would allow a variety of terms and could effectively emulate a sequence of quantum gates by the right Hamiltonian. For instance, to be universal with 2-local Hamiltonians, one might require both $$\sigma^z \sigma^z$$ interactions (for cost encoding) and $$\sigma^x \sigma^x$$ or $$\sigma^x \sigma^y$$ interactions (which together can generate non-stoquastic mixing needed for universal computation). D-Wave’s machines currently cannot directly do that; their mixing term is a transverse field (summing single-qubit $$\sigma^x$$), which keeps the Hamiltonian stoquastic. Research suggests adding certain catalyst Hamiltonians or non-stoquastic terms could enhance the power of QA, possibly allowing it to bypass some limitations, but those are not yet implemented in hardware.

- Quantum Annealing’s practical heuristic vs AQC’s theoretically guaranteed approach: If one runs QA very slowly, it approximates AQC. But often to solve problems faster than classical, one might try to shorten anneal times – then QA becomes a heuristic without guarantee of success. Universal AQC as an algorithmic paradigm typically assumes you can make the runtime long enough to succeed with high probability (which might be very long if the minimum gap is tiny). In other words, QA prioritizes practical solution-finding, even if not always staying adiabatic, whereas universal AQC prioritizes maintaining quantum adiabatic conditions at the cost of potentially long runtimes.

- Applications and Limitations: QA has seen a range of practical applications: optimization in vehicle routing, scheduling, portfolio optimization, machine learning (training Boltzmann machines, feature selection), protein folding, and more. For example, VW and D-Wave collaborated on a traffic flow optimization demo for taxis in Beijing, formulating it as a shortest path problem for many vehicles – a kind of problem that maps to a QUBO and was attempted on a D-Wave annealer. Another example is using QA for training certain types of neural network models by finding low-energy states corresponding to optimal parameters. While these are promising, QA’s limitations include relatively shallow circuits equivalent – one cannot do deep algorithmic primitives like arithmetic or Fourier transforms easily. Additionally, mapping a problem to Ising form can introduce overhead: sometimes you need many auxiliary qubits to represent constraints as penalties, etc., which can eat up the thousands of qubits quickly. QA also struggles with problems that have very rugged energy landscapes with many near-degenerate minima and tiny gaps, as the system can get stuck or require impractically slow annealing. One known challenge is the problem of “freeze-out”: as the anneal nears the end, if the temperature is not zero, the system might freeze into some excited state once the gap is smaller than thermal energy or when dynamics slow down near a second-order phase transition.

Quantum Annealing in practice vs theory

In theory, if QA is run perfectly adiabatically and closed-system, it is AQC. In practice, quantum annealers deviate from the ideal AQC in several ways: finite temperature, noise, and sometimes deliberate use of shorter times. This has led some researchers to develop enhanced strategies: for instance, adjusting the anneal schedule (pause mid-way when the gap is smallest to give the system more time in the critical region), or reverse annealing (where you start from a classical candidate solution, add quantum fluctuations and then re-anneal to hopefully improve that solution), or quantum partial annealing where you stop partway and do classical post-processing. These hybrid approaches aim to get practical mileage out of QA despite not being fully adiabatic.

Can quantum annealing be made universal? There are theoretical proposals to achieve universality by extending QA. One approach is to implement a sequence of annealing steps piecewise, effectively doing a trotterized adiabatic evolution (this becomes like a gate sequence). Another is to incorporate additional Hamiltonian terms as mentioned. A research paper by Hen and Spedalieri (2015) discussed that one could use perturbative gadgets to create effective 3-local interactions and thus approximate any Hamiltonian needed for universal AQC on an Ising machine, but this requires many extra qubits and strong coupling ratios. Moreover, universality might require the ability to prepare more complex initial states than a simple transverse field ground state.

In summary, quantum annealing is a presently realized subset of AQC focused on optimization, while fully universal AQC remains a theoretical goal. QA’s strength is that it exists now and can tackle certain problems, but its weakness is that it’s limited in scope and performance guarantees. Universal AQC would be as powerful as any quantum computer but building one means adding features to QA that are not yet present (and solving the same scalability challenges the circuit model faces, like error correction).

From an end-user perspective (say a cybersecurity professional considering QA vs universal QC), the difference is: QA can already be used for specific optimization tasks in security (like scheduling scans, optimizing network design, clustering anomalies) by formulating them into QUBO form, whereas a universal QC (gate model or universal AQC) that could run, e.g., Shor’s algorithm to break RSA, is still in the future.

Cybersecurity Impact of Adiabatic Quantum Computing

Quantum computing’s biggest impact on cybersecurity is often discussed in terms of cryptography – the fear that a quantum computer could break current encryption and digital signature schemes. Adiabatic quantum computers must be analyzed in this context: could an AQC or quantum annealer be leveraged to compromise our cryptosystems? Additionally, we should consider the flip side: ways AQC might enhance cybersecurity defenses. In this section, we address both angles:

How AQC Could Break Cryptography

Breaking Public-Key Encryption (RSA, ECC)

The poster-child quantum algorithm for breaking cryptography is Shor’s algorithm, which runs on a gate-based quantum computer and factors integers (breaking RSA) or computes discrete logs (breaking Diffie-Hellman and ECC) exponentially faster than any known classical method. AQC, being equivalent in power, could in principle run Shor’s algorithm if it were realized as a universal quantum computer. However, executing Shor’s algorithm in an adiabatic fashion would require a non-trivial construction of a Hamiltonian whose ground state encodes the prime factors of a given number or the discrete log. One theoretical approach could be to encode the periodicity finding (at the heart of Shor’s) into a ground-state problem, but that is essentially as hard as building the circuit.

Instead of running Shor’s algorithm, researchers have explored whether quantum annealing can solve factoring directly by optimization. The idea is to formulate integer factorization as an optimization problem: find non-trivial factors $$p, q$$ such that $$N = p \times q$$. This can be written as a system of equations or a cost function that is zero when factors are correct. For example, one can set up a polynomial whose roots relate to the factors or use binary multiplier logic encoded as clauses. In 2002, a Microsoft Research paper titled “Factoring as Optimization” proposed a mapping of factoring into a degree-4 polynomial whose global minimum encodes the factors. The approach essentially translates factoring into finding a ground state of a constructed Hamiltonian.

Subsequent research indeed attempted integer factorization on quantum annealers. One of the earliest experiments was factoring 21 (=3*7) using a D-Wave 2 machine by formulating it as an optimization problem on 8 qubits (this is trivial classically, of course, just a proof of concept). More impressively, in 2019 a group of Chinese scientists factored the integer 1,005,973 (a 20-bit number) using a quantum annealer approach with only 89 qubits. They effectively mapped the factoring problem into an optimization (a set of binary quadratic equations solved by QA). For comparison, performing Shor’s algorithm on a gate quantum computer for a 20-bit number would require many more qubits (thousands of noisy qubits if error-corrected). This result suggested that for small integers, a tailored QA approach can be more qubit-efficient. Following that line, in 2021-2022 researchers factored slightly larger numbers (e.g. 11-bit, 15-bit) and improved the encoding methods. Just recently, a paper reported factoring a 22-bit integer using D-Wave’s quantum annealer, which shows incremental progress.

However, it is crucial to put these in perspective: 22-bit or even 20-bit numbers are astronomically far from 2048-bit RSA keys. The difficulty of factoring grows super-polynomially (roughly exponential in the bit-length for best classical algorithms, sub-exponential for number field sieve). The fact that an annealer factored a 20-bit number with 89 qubits does not mean an annealer with 2048/20 * 89 ≈ 9100 qubits could factor a 2048-bit number – the relationship is not linear. As numbers grow larger, the required qubit count and the complexity of the Hamiltonian blow up. The Chinese study showed that their method could factor a 20-bit number; they did not demonstrate scaling to even 30 or 40 bits. In 2023, claims by some researchers that they had used a form of quantum optimization to factor a 48-bit number turned out to be controversial and largely discredited as not truly scalable.

So, can AQC or QA break RSA? At present, no. The largest factorizations by QA are trivial by classical standards (classical computers have factored numbers with hundreds of digits (several thousand bits) using number field sieve). That said, the research is valuable because it explores alternatives to Shor’s algorithm. If one day QA could factor large numbers faster than classical algorithms, that would be a novel quantum speedup separate from Shor’s approach. The 2019 result led the authors to speculate that quantum annealers might pose a more immediate threat to RSA than gate-based QCs in the near term, because gate-based QCs need full error correction for Shor which is a far-off goal, whereas annealers have many qubits now. D-Wave’s latest machine has 5640 qubits, theoretically enough to map a much larger factoring problem if the encoding is extremely efficient.

However, skeptics point out that just having more qubits doesn’t guarantee success in factoring; the annealing algorithm might hit other roadblocks. For instance, the energy landscape for factoring large $$N$$ might have exponentially many local minima, or the required precision in coupler strengths might exceed what hardware can do, or the minimum gap might become exponentially small, meaning you’d have to anneal longer than the age of the universe to stay adiabatic.

One interesting possibility is if someone finds an alternative quantum algorithm (not exactly Shor’s) that is adiabatic in nature for factoring. There was an attempt using the “counterdiabatic” or “quantum variational annealing” approach to emulate adiabatic evolution faster (a paper titled “Not-so-adiabatic quantum computation for the shortest vector problem“ looked at lattice problems, for example). So far, no fundamentally more efficient method than Shor’s algorithm has appeared for factoring or discrete log.

Discrete Log (ECC and Diffie-Hellman)

This is in the same category – Shor’s algorithm also breaks these. Adiabatic methods could in principle solve these via optimization (e.g., solving discrete log as a satisfy problem), but again, not at a scale beyond classical yet. If RSA falls, ECC falls as well by similar reasoning.

Symmetric Cryptography and Hashes

Symmetric ciphers (AES, 3DES, etc.) and cryptographic hash functions aren’t broken by quantum algorithms in the same devastating way; the best known quantum attack is Grover’s algorithm which gives a quadratic speedup for brute force search (key search or preimage search). Grover’s algorithm is a circuit-based algorithm that sequentially amplifies the amplitude of a correct answer. Could a quantum annealer run Grover’s algorithm? Possibly not directly – Grover’s relies on an oracle (a function evaluation) and careful phase inversion, which QA cannot do natively. However, one could encode the search for a preimage as an optimization problem: the cost function is 0 if a hash’s input matches a target output, else something higher. But that cost landscape is very flat (all wrong inputs look the same except the correct one has cost 0). An unstructured search is actually the worst-case scenario for annealing, because the energy landscape has a single needle in a haystack – there’s no guiding slope, it’s essentially spin glass with a huge degeneracy of equal excited states. QA would basically be guessing randomly in that scenario (unless augmented by some heuristic). Thus, Grover’s algorithm remains superior on a gate QC for brute force searching. In any case, Grover’s quadratic speedup is not considered a dire threat: it can be countered by doubling symmetric key lengths (e.g., AES-256 would require ~$$2^{128}$$ operations under Grover, which is still infeasible, and SHA-256’s preimage resistance similarly would drop to 128-bit security which is acceptable). So AQC doesn’t particularly change the story for symmetric crypto; it’s the same as for gate QCs – just use longer keys, and current QA offers no special trick to crack, say, AES apart from naive (and ineffective) energy landscape search for the key.

Lattice-Based and Post-Quantum Cryptography

What about the new family of cryptosystems designed to resist quantum attacks, like lattice-based encryption (NTRU, Kyber), code-based (Classic McEliece), multivariate, etc.? These were chosen because even a gate-model quantum computer running known algorithms doesn’t solve these problems faster than classical (no known equivalent of Shor for lattices, for example). Could an adiabatic algorithm solve lattice problems (like finding short vectors in a lattice or decoding random linear codes) faster? Researchers have investigated this. One study, “Finding dense sublattices as low energy states of a Hamiltonian,” explored embedding the Shortest Vector Problem (SVP) into an Ising Hamiltonian. The results thus far indicate that straightforward adiabatic algorithms don’t easily solve lattice problems either. In fact, solving something like SVP via QA would require huge numbers of qubits and again likely faces tiny gap issues; one paper gave numerical evidence that even going beyond the strictly adiabatic regime doesn’t give exponential speedups for SVP. Therefore, the consensus is that post-quantum schemes (lattice, etc.) appear to be safe against known quantum annealing approaches as well. Until a radically new quantum algorithm is found, AQC doesn’t magically break those either.

Hash-based cryptography and others

These rely on security of hash functions (which quantum only quadratically impacts) or structured problems like Supersingular Isogeny Diffie-Hellman (SIDH) – which was broken classically anyway. Adiabatic methods don’t seem to target these differently.

Summary

In summary, AQC’s threat to cryptography currently centers on the same targets as gate QCs (RSA, ECC) but via different means. There is active research on using QA to perform these attacks with fewer qubits or sooner than a gate QC could. I would, however, caution not to ignore AQC – as I noted in my previous article on this topic, we must consider the “rapid development of AQC hardware and algorithms that could challenge RSA-2048 in a manner suitable for AQC”. The idea of a surprise factorization method using an annealer can’t be entirely ruled out. Indeed, a future large D-Wave (with say 5000+ qubits) combined with clever optimization algorithms might factor certain sizes before a universal QC is online. Quantum annealing may ultimately offer a faster path to breaking RSA keys compared to classical, and therefore deserves attention, but it is not clear whether a large enough quantum annealer will be available before a large enough gate-based quantum computer capable of running Shor.

Given the uncertainty, the prudent approach in cybersecurity is to prepare for any type of quantum attack – whether from gate or annealing. That means transitioning to quantum-resistant cryptography in the coming years. The good news is that the post-quantum cryptography (PQC) algorithms being standardized (like lattice-based Kyber for encryption, Dilithium for signatures, etc.) are believed to resist all known quantum attacks, not just Shor’s. AQC doesn’t provide an obvious backdoor to them either. For example, finding the secret key in lattice-based encryption reduces to solving a closest vector problem in a high-dimensional lattice, which so far seems hard for QA as well as classical.

Other cryptographic impacts

It’s worth noting that quantum annealing can also potentially assist in some cryptanalysis that’s formulated as optimization. There are studies on using QA for attacking cryptographic primitives by turning the problem into SAT or XORSAT instances and then into Ising form. For instance, a QA was used to try and find collisions in hash functions or recover keys by solving equation systems, but generally, classical SAT solvers often still outperform the QA on such tasks, except maybe on certain crafted hard instances. Grover’s algorithm (which requires a gate model) remains the best general quantum attack on symmetric crypto.

Bottom line for cryptography

Adiabatic quantum computers, in theory, can do what gate-based ones can (factor, discrete log, Grover search). In practice, current AQC/QA devices pose no immediate threat to standard cryptography beyond what a classical computer can do. However, the field is evolving. With each new generation of annealer and clever algorithmic mapping, the risk increases that smaller key sizes could be tackled. While RSA-2048 is out of reach for the foreseeable future, one should already avoid deprecated sizes (RSA-1024, 160-bit ECC) not just because of classical progress but also as a hedge against nearer-term quantum advances. The safe course is to migrate to quantum-resistant cryptography (PQC) well before any large quantum computer (adiabatic or otherwise) is built that could break current crypto. NSA and NIST have been advising this course of action. Governments are actively tracking quantum threat timelines to estimate when data might be at risk. I personally believe that within 7 to 10 years there is a significant chance a quantum computer could break RSA-2048. Interestingly, if one model (annealing vs gate) were to reach that point first, it’s still game-over for vulnerable crypto – the encrypted data doesn’t care which model cracked it.

How AQC Could Enhance Cybersecurity

Beyond threatening cryptography, quantum computing can also be a tool for defenders. Adiabatic quantum computing offers a novel way to tackle certain hard computational problems in cybersecurity:

Quantum-Enhanced Security Protocols

One example here is the design of cryptographic protocols that rely on solving hard problems quickly. Consider secure network design – configuring a network’s defenses (firewalls, routing rules, etc.) optimally is a complex optimization problem. It might be formulated as “minimize risk exposure subject to cost constraints,” which could translate into a combinatorial optimization (perhaps a variant of the NP-hard set cover or cut problems). AQC/QA can potentially help compute near-optimal solutions faster than classical solvers, leading to stronger security postures.

Another area is password hashing or key stretching: one could design schemes that intentionally involve solving a moderately hard optimization problem (sort of a proof-of-work or proof-of-resources) as part of the protocol, knowing that legitimate parties have access to a quantum annealer to solve it quickly while an attacker might not. This is speculative, but it suggests that if quantum annealing becomes accessible, protocols could integrate quantum steps for advantage.

Optimization for Secure Network Design

Designing a secure network – e.g., deciding where to place intrusion detection sensors, how to segment networks, how to allocate limited patching resources – often boils down to complex optimization under constraints. Researchers have begun framing some of these as QUBO problems. For instance, one could encode the selection of a subset of defense measures that maximize a security utility function into an Ising model. AQC can then try to find the best combination.

A tangible example is optimizing the placement of decoys or honeypots in a network to maximize the chance of catching an attacker: this can be a combinatorial optimization where each potential honeypot placement is a variable, with constraints on budget and coverage. A quantum annealer might explore this large search space more effectively than classical greed heuristics.

Quantum-Assisted Threat Detection

In cyber threat detection (like intrusion detection systems or SIEM event correlation), one faces large data sets and the need to find subtle patterns (anomalies that indicate a breach). Some aspects of this can be cast as optimization or machine learning problems. There is pioneering research on using quantum annealing for machine learning in cybersecurity. For instance, training a classifier to distinguish malicious activity from benign might involve optimizing a complex objective – like minimizing classification error with regularization. Quantum annealers have been used to train models like Support Vector Machines (SVMs) by solving the quadratic optimization at their core, or to do feature selection by picking an optimal subset of features that maximize detection accuracy. A 2024 study integrated quantum annealing with AI for threat detection: they used a D-Wave annealer to optimize a cost function representing threat likelihood as part of an AI pipeline. The annealer would find the minimum of a function that flags a combination of events as an attack, which was then fed into classical machine learning for final classification. This hybrid quantum-classical approach improved detection accuracy and speed in their experiments.

Another use-case is clustering or community detection on graphs (network traffic can be represented as graphs, where clustering might reveal an infected subnet). Quantum annealing algorithms for graph clustering or partitioning can help identify anomalous groupings in network logs. D-Wave, for example, offers a QUBO formulation for clustering problems. If an organization had a quantum annealer, it could feed its massive log data transformed into a QUBO that, say, partitions events into normal vs abnormal clusters.

Cryptanalysis for Good

Just as adversaries could use AQC to attack cryptography, cryptographers could use AQC to analyze the strength of systems. For example, QA could be a new tool to try to find vulnerabilities in algorithms – by formulating the search for a cryptographic weakness as an optimization. There’s a concept of using QA to solve the “satisfiability” representation of cipher cracking problems which can help test ciphers. This is more relevant to cryptographers ensuring their designs have no shortcuts.

Quantum Key Distribution (QKD) and Quantum-safe comms

While QKD is not done via AQC (it uses photon-based quantum mechanics), the advent of quantum tech in general means defenders can start employing QKD for extremely high-security links (already being done in some government and bank networks). This ensures that even if AQC or any quantum computing breaks algorithms, the keys exchanged via QKD remain secure (because QKD security is based on physics, not math complexity). QKD and AQC are separate quantum subfields, but a security-conscious organization might invest in both: QKD for safe key exchange and AQC for solving internal optimization for security.

Post-Quantum Cryptography Transition Support

AQC could also assist in the transition process to PQC. How? Possibly by optimizing compatibility or configurations. For instance, choosing which subset of devices to upgrade first to maximize security might be formulated as a knapsack or scheduling problem – QA could optimize a migration plan under constraints – like limited resources to update systems, needing to maintain interoperability, etc.

Summary

Overall, quantum annealing can enhance cybersecurity primarily by solving certain hard problems faster or better than classical computers, thereby enabling stronger security measures. However, a current caveat is that quantum annealers themselves are still relatively scarce and not broadly available to defenders (though cloud access to D-Wave is available). Additionally, one must formulate the security problem appropriately to benefit from QA, which requires expertise in both cybersecurity and quantum programming.

We should also consider if AQC introduces any new types of defensive cryptographic protocols. One theoretical idea: quantum public-key encryption. There are proposals for encryption schemes that involve quantum states (for example, leveraging the difficulty of sampling or of finding ground states as the security assumption). Adiabatic quantum solvers might help implement or even define such schemes. For instance, a form of cryptography could involve a public key that is a description of a Hamiltonian and a private key that is the solution (ground state). Without a quantum computer, solving that might be infeasible, but with an annealer, the legitimate party can decrypt. These are very early-stage ideas and not near deployment, but they illustrate the mindset of using quantum computing (including AQC) to create cryptographic schemes, not only break them.

In conclusion, while the offensive impact of AQC on today’s cryptography is a concern, the defensive opportunities are also compelling. Cybersecurity professionals should keep an eye on quantum optimization solutions in areas like network hardening, incident response planning, and machine learning for threat detection. If a quantum solution can shave off significant time or find a more optimal configuration than classical means, it could translate to a real security advantage (for example, catching an attack that might have been missed, or mitigating a threat faster than an attacker can exploit it). As one research article demonstrated, solving certain cybersecurity problems with QA is not science fiction – a quantum annealer was successfully used to optimize aspects of a cyber threat detection system, improving accuracy and response time.

Current Developments in Adiabatic Quantum Computing

Academic Research on Universal AQC

In academia, AQC has been a vibrant research topic since its inception in 2001. Researchers like Edward Farhi (MIT, now Google), Daniel Lidar (USC), and others have led efforts to understand the capabilities and limits of AQC. One major theoretical effort was proving universality, as discussed. Building on that, there have been many papers on how to implement specific algorithms in the adiabatic paradigm. For example, adiabatic versions of Grover’s algorithm have been formulated (which basically amount to an evolving Hamiltonian whose ground state amplifies the marked item). Another line of work is designing adiabatic algorithms for NP-hard problems that might outperform classical heuristics. Scientists have looked at random satisfiability, maximum cut, graph coloring, etc. For small cases, quantum speedups have been hard to definitively show because classical heuristics are also quite good; nevertheless, these studies are crucial to map out where AQC could win.

A key academic question: What is the complexity of AQC for hard problems? Are there instances where AQC exponentially outperforms classical simulated annealing or diffusion Monte Carlo? There have been carefully crafted problem instances (like a problem with a tall, thin energy barrier where quantum tunneling helps) that show a separation: quantum annealing could tunnel through in polynomial time whereas a classical algorithm would take exponential time to climb over the barrier. One such example is the “Grover problem” (unstructured search formulated as an energy landscape) where AQC can achieve the $$\sqrt{N}$$ speedup akin to Grover by essentially having a small gap of order $$1/\sqrt{N}$$ and running in that time – though in other setups the gap might be much smaller. Generally, proving a clear advantage is tricky and an ongoing research battle.

Quantum Speedup Controversy

There’s a famous debate around whether D-Wave’s devices have shown a quantum speedup. Around 2014, a study by researchers at ETH Zurich and USC compared D-Wave 2X to classical solvers on random spin-glass instances and found no speedup. Later, D-Wave and others found instances where quantum did better than some classical solvers, but then improved classical algorithms caught up. This back-and-forth actually advanced classical optimization algorithms too. As of late 2020s, there have been specific cases (like an excited spin dynamics simulation problem) where D-Wave’s quantum processing did outperform a classical algorithm by a noticeable factor, suggesting at least a limited quantum advantage. Academic research continues to search for the “killer application” of annealers that shows indisputable speedup.

Hardware Research – Toward Universal AQC

On the hardware side, beyond D-Wave, other efforts are exploring AQC implementations:

- Researchers in Japan (like at Tokyo Institute of Technology) have worked on quantum annealing with different types of qubits or even quantum annealing using quantum dots.

- A notable project is at NASA Ames and USRA (University Space Research Association) in partnership with Texas A&M, trying to design new annealer architectures, possibly with non-stoquastic Hamiltonians.

- Companies like IBM and Google have largely focused on gate-model, but Google’s quantum AI team did publish on quantum annealing as well, especially in the context of quantum simulation.

- There have been proposals for adiabatic quantum transistors or adiabatic chips that could be scaled modularly – this is still conceptual.

Government and defense research agencies are certainly interested. IARPA (Intelligence Advanced Research Projects Activity) ran the QEO (Quantum Enhanced Optimization) program which funded multiple research groups to explore more coherent quantum annealers and better algorithms for optimization. The goal was to design an annealer that could achieve a $$10,000\times$$ speedup on certain hard optimization problems. Several universities and labs (MIT Lincoln Lab, USC, Sandia, etc.) participated. They investigated things like: using more coherent qubits (with longer coherence times so that quantum effects remain longer), implementing non-stoquastic drivers (like adding $$\sigma^x \sigma^x$$ interactions or 4-body terms), and even leveraging environment engineering (like coupling qubits to a specific environment that helps get to ground state faster). Results from QEO have been partially published: for instance, a team at Los Alamos and University of Southern California showed how adding a so-called “diagonal catalyst” term to the Hamiltonian can sometimes widen the minimum gap and speed up annealing. Another outcome was improved understanding of the noise: one group made a more coherent small 8-qubit annealer that could be studied in detail, showing how coherence vs thermal transitions contribute.

Commercial and Industry Progress

The only commercial seller of quantum annealers is D-Wave Systems. They have continuously improved their machines: the latest D-Wave Advantage2 aims for 7000+ qubits and improved connectivity (the degree of each qubit is higher, meaning less embedding overhead). D-Wave has also introduced features like reverse annealing (start from a classical state and anneal backwards a bit then forwards, to do local search) and pause-and-quench schedules (where you pause mid-anneal to let system equilibrate then quench to a solution). They also launched a hybrid solver service, which automatically decomposes large problems and uses quantum annealing as a subroutine.

In 2022, D-Wave made a surprise announcement that they are also working on a gate-model (universal) superconducting quantum computer. This doesn’t directly relate to AQC, except that D-Wave’s long expertise in superconducting qubits and control might help them eventually build a universal AQC or gate system with error correction. So the landscape now has D-Wave straddling both annealing and gate approaches.

Other companies have taken interest in quantum optimization but via different means:

- Fujitsu developed a “Digital Annealer,” which is not a quantum computer at all but a CMOS chip that mimics the form of quantum annealing (it solves QUBOs using parallelism, essentially a specialized classical solver).

- Similarly, NEC and other Japanese firms explored “quantum-inspired” annealing algorithms on classical hardware for things like the travelling salesman problem. This somewhat competes with quantum annealing by providing some benefits of the same formulation (and in absence of large quantum speedups, these classical analogs often perform quite well).

Government use and interest

The Canadian government and aerospace companies (Lockheed Martin, for example) have been involved with D-Wave from early on. Lockheed Martin bought one of the first D-Wave 512-qubit machines for research into verifying mission-critical software (casting it as a satisfiability problem) – a cybersecurity adjacent domain (formal verification of code).

The US Los Alamos National Lab also bought a D-Wave system to study optimization problems relevant to stockpile stewardship and cybersecurity. The NSA and other agencies are of course deeply interested in any quantum developments that could impact cryptography; while most public emphasis has been on gate-based threats, they are certainly watching AQC too.

Universal AQC in Academia

One notable academic achievement was building small adiabatic quantum processors in the lab to test specific ideas. For instance, in 2016, a team at USC built a 3-qubit superconducting circuit that was programmable and showed evidence of tunneling assisting computation on a toy problem. Other experiments have used NMR (nuclear magnetic resonance) to implement adiabatic algorithms on a handful of spins to solve small instances of NP-hard problems.

Software and Algorithms

On the software side, companies like 1QB Information (1QBit) and D-Wave’s own software team developed tools to help map real-world problems to QUBO/Ising form, which is crucial for adoption. Techniques like minor embedding (mapping a fully connected logical problem to the sparse hardware graph) have improved, with more automated algorithms to do that efficiently and even use fewer physical qubits than naive methods. New algorithmic tricks like Quantum Monte Carlo simulations and better classical optimizers are often used in tandem (there’s something called a “technique freeze-out” where one might do a partial anneal, then classical local search, then anneal again).

Quantum Annealing and AI/ML

A crossover development is the use of quantum annealing in machine learning (not just for cybersecurity, but broadly). There’s academic work on Quantum Boltzmann Machines and quantum-assisted neural network training. Some of these techniques are being trialed in industry for things like logistics optimization (which is security-relevant in supply chain security or military logistics).

In the context of cybersecurity, government initiatives such as those by NIST and ENISA (EU’s security agency) often mention quantum computing as a threat but also as an innovation. For instance, NIST in its reports acknowledges quantum computing could revolutionize certain computations that might help in secure information processing.

Standardization and Benchmarks

The IEEE and national labs have begun developing benchmarks to fairly evaluate annealers vs classical solvers on combinatorial optimization problems. This is important to know when a quantum advantage is truly achieved. There are annual competitions (like the Quantum Annealing Workshop) where new results are shared. The community is cautious due to earlier hype, but optimistic that specialized improvements (like non-stoquastic chips or error mitigation) could produce a clear win in coming years.

Feasibility and Timeline for Cryptographic Relevance

A critical question for security professionals is: When (if ever) will adiabatic quantum computers become a practical threat to encryption? We often hear estimates for gate-based universal quantum computers (like “by 2030s or 2040s, we might break RSA-2048”). AQC needs separate consideration.

Current State

As of 2025, no existing AQC or QA machine can break any widely used cryptographic algorithm. Factoring 2048-bit numbers, breaking 256-bit ECC, or even attacking 128-bit symmetric keys are far beyond their capability. Even the most advanced annealer, D-Wave Advantage with 5000+ qubits, has not demonstrated factoring beyond 3×5-digit (22-bit) numbers. The gaps to target are enormous.

Comparative Development Speed

Gate-based quantum computers (like those from IBM, Google, IonQ) are steadily increasing qubit counts and fidelity, but they still need breakthroughs in error correction for crypto-breaking scale. Some experts think we need on the order of a million physical qubits with error correction to factor RSA-2048 in reasonable time, which might be 20+ years away (if progress continues). Adiabatic quantum computing has an advantage of already high qubit counts, but they are analog, noisy qubits. D-Wave has increased qubit counts almost an order of magnitude every few years (128 -> 512 -> 2000 -> 5000…). If this trend continues, we might see >10,000 qubit annealers by the late 2020s. However, simply having more qubits doesn’t directly translate to breaking larger keys, because the problem mapping can be the bottleneck.

Barriers to AQC’s cryptographic application

- Scalability of Connectivity: For factoring via QA, each logical bit of the number may need to connect to many others in a multiplier circuit. D-Wave’s sparse connectivity means you need to chain qubits together, costing more physical qubits. The 2019 factoring of 20-bit used 89 physical qubits for 20 logical bits – about 4.45 qubits per bit. If that ratio held, factoring 2048 bits naïvely would need ~9100 qubits. That’s within the range of next-gen annealers possibly, but this assumes you can even embed the necessary interactions and that the machine can handle the required precision.

- Coherence and Noise: Annealers operate somewhat close to the quantum-classical boundary. The larger the system, the more points for noise to couple in. Maintaining quantum coherence across thousands of qubits even for the duration of an anneal (which might be say 100 microseconds) is challenging. D-Wave’s qubits have coherence times on the order of few microseconds, but quantum annealing can still work if decoherence is mainly in certain bases and if the system remains in a quasi-static distribution. However, to truly leverage quantum effects at scale (like entanglement spanning the whole register to tunnel through global barriers), coherence needs to persist. Improving coherence in annealers might involve better isolation, materials improvements, or lower temperatures – all active engineering pursuits.

- Precision and Control: Cryptographic problems might require extremely fine energy resolution. For example, in factoring, the difference between a correct factorization and an almost-correct one might be 1 bit off, which should reflect in the energy difference. If the Hamiltonian energy gap for correct vs next-best solution is on the order of $$10^{-6}$$ relative to overall energy, the device must distinguish that. Noise in controls (flux noise in superconducting circuits) could wash out such small gaps, making it hard to tell solutions apart or requiring slower anneals to resolve them. Current annealers have analog control errors of maybe 5% of coefficient magnitudes – not an issue for many optimization tasks where large gaps exist, but potentially an issue for something like precise cryptographic calculations.

- Need for Error Correction: To factor a 2048-bit number, you might need a sequence of operations or iterations that is longer than one anneal run. You might consider iterative approaches (like use QA to get half the bits, then refine). But as the problem size grows, at some point error correction becomes necessary to scale further – this is analogous to gate QCs. Error correction for AQC could be done via repetition codes or more advanced schemes, but at significant qubit overhead. An error-corrected annealer with thousands of logical qubits would need maybe tens of thousands of physical qubits at least, plus longer run times for correction cycles. This is in nascent stages of research; D-Wave has published some experiments using small repetition codes.

- Algorithmic breakthroughs: One wild card in timeline is if someone invents a new algorithm tailor-made for QA that is much more efficient for factoring or breaking something than known methods. If such an algorithm exists (none known yet), it could drastically shorten the timeline. Barring that, QA will rely on improvements in hardware and incremental algorithmic tricks.

Timeline Estimates

According to surveys like the Global Risk Institute’s Quantum Threat Timeline report, experts have a wide range of opinions. Some pessimistic (or perhaps realistic) views say it’s “highly unexpected” to have a quantum computer breaking RSA-2048 before 2030. Many cluster around the 2035-2040 timeframe as a 50% likelihood for some quantum breakthrough in cryptanalysis. While I personally predict 2031-2032 for the arrival of cryptanalytically relevant quantum computers. AQC specifically isn’t always distinguished in these surveys, but a few experts note that specialized machines could appear sooner but might not scale easily to full cryptographic break.

Let’s consider two scenarios:

- Gate-model leads: If error-corrected gate QCs are achieved by early-to-mid-2030s with sufficient scale, they will run Shor’s algorithm and break RSA/ECC directly. In this scenario, AQC might never need to catch up; the cryptographic risk is realized by gate QCs first. AQC might then be used for other things but not needed for factoring since a better method exists.

- AQC leapfrogs interim: If progress in gate QCs stalls (say qubit count saturates at 1000 without error correction for a while) and meanwhile annealers scale to 50k or 100k qubits with some error suppression, it’s conceivable that someone could use QA to factor medium-sized keys (like 512-bit RSA or 1024-bit RSA). Breaking RSA-1024 (which is already not considered safe by NIST but still used in legacy systems) might be within reach with, say, an error-corrected annealer of 50k physical qubits if algorithms improve. If that happened by late 2020s, it would be a huge wake-up call, though RSA-1024 is largely phased out. For RSA-2048, one might speculate maybe by 2040 an annealer with millions of qubits (or modules of 100k qubits each) could attempt it, but that’s a lot of speculation.

In terms of “cryptographically relevant”, what matters is the point at which a quantum computer (of any model) can break something like RSA-2048 within, say, a day. Experts consider that point likely around when the machine can perform ~$$2^{30}$$ to $$2^{40}$$ quantum operations effectively, which in Shor’s algorithm terms is maybe a few thousand logical qubits running for a few billion gate operations – hence the million physical qubit estimates with error correction. For AQC, “operations” aren’t gates but rather time steps or anneal runs. If an annealer can solve a problem of size N in one hour that a classical would take 10,000 years, that’s effectively breaking it. Achieving that requires the combination of enough qubits, minimal decoherence, and algorithmic smartness to avoid exponential slowdown.