Why I Chose Photonic Quantum Computing

My Journey into Photonic Qubits

The race to build a quantum computer is heating up. Different “modalities” or architectures are vying for attention – from superconducting circuits in ultra-cold fridges to trapped ions suspended in vacuum traps. As the founder of a budding quantum startup, I decided to bet on photonic qubits – quantum bits encoded in particles of light. Why go with photons?

Advantages of the Photonic Modality

Photonic quantum computing has some distinct appeals that set it apart from matter-based qubits like superconductors or ions:

- No Extreme Cryogenics: Photonic qubits can operate at or near room temperature, unlike superconducting qubits, which need bulky dilution refrigerators, or trapped ions, which need ultra-high-vacuum chambers and laser cooling. (The photons themselves don’t need any cooling at all – only the sensitive detectors might need modest cooling to a few kelvin, not the millikelvin temperatures that superconducting hardware demands.) For a scrappy startup, not having to build a $$^3$$He/$$^4$$He cryostat is a huge practical advantage.

- Resistance to Decoherence: Because photons barely interact with their environment, they are largely immune to many common noise sources. A single photon can zip through optical fiber or even open air for long distances without losing its quantum state. In other words, photonic qubits have inherently long coherence lengths/times – a big plus when trying to maintain delicate quantum information.

- Built for Networking: The fact that photons travel at the speed of light and already live in fiber-optic cables makes them natural candidates for communication. It’s easy to envision connecting photonic quantum chips with existing telecom fiber – enabling distributed quantum computing or secure quantum networks with minimal signal conversion. This “quantum internet” potential is very appealing; photonic qubits could send entanglement across a campus or across the world using the same fibers that carry our data today.

- High Speed and Low Power: Optical systems operate at incredibly high frequencies, so in principle photonic quantum gates can be fast – we’re talking picosecond-scale operations with ultrafast lasers and switches. Moreover, since there’s no need to keep qubits chilled, photonic processors could run with far less power and complexity (no massive cryogenic cooling infrastructure). The prospect of energy-efficient, high-speed quantum computing at room temperature was a strong motivator for choosing this modality.

- Scalability via Chip Integration: Researchers have started integrating optical circuits on silicon chips to manipulate photons – essentially creating “quantum photonic chips.” For example, in 2009 a team at the University of Bristol demonstrated a small photonic chip that could factor the number 15, using waveguides fabricated with standard semiconductor processes. This hinted that we might leverage the existing silicon photonics industry to scale up quantum devices. As a startup, the idea of mass-manufacturing optical quantum circuits (instead of hand-assembling lab optics) was very attractive for long-term scalability.

In short, photons offered a path to quantum computers that don’t look like giant lab freezers. They promised stability (little decoherence), easy networking, and compatibility with today’s telecom and semiconductor tech. Those were compelling advantages for my team when we set out to build a photonic quantum prototype.

Challenges and Drawbacks of Photonic Quantum Computing

Of course, no quantum technology is perfect – and photonics comes with its own set of headaches that were well understood even in 2014. Some of the key challenges we grappled with include:

- Two-Qubit Gates Are Hard: The very thing that makes photons stable (their lack of interactions) is a double-edged sword. Photons don’t naturally interact with each other, which means implementing entangling two-qubit logic gates is tricky. Unlike ions that can be entangled via their mutual Coulomb forces, or superconducting circuits that couple via electrical currents, two photons won’t influence one another unless you coax them indirectly. In practice, photonic gate schemes often rely on interference and measurement tricks (like the KLM protocol) that succeed only probabilistically. A basic two-photon entangling operation might have, say, a 50% success chance – and if it fails, the photons are typically absorbed or lost. This probabilistic gating means you need lots of extra photons and complex optical circuitry to attempt operations over and over until they succeed. Achieving high-fidelity, deterministic two-qubit gates in a pure photonic system remained an unsolved challenge.

- Scaling Requires Massive Overhead: Because of those probabilistic gates and losses, scaling up a photonic quantum computer demands an overhead of components and photons that can be daunting. You might need to generate many redundant photons and use multiplexing networks to route them, just to effectively realize one logical qubit or gate operation. Every beam splitter, phase shifter, and detector also introduces some loss. By the time you try to string dozens of operations together, you’ve lost many of your photons – a serious error source that hits photonic qubits especially hard. Error correction is theoretically possible for photonic qubits, but the codes must address photon loss (erasure errors), which adds even more redundancy. All of this was (and still is) a huge engineering challenge: it’s like needing millions of parts to get a handful of very reliable logical qubits. Photonic quantum computing is still mostly at the few-qubit proof-of-concept stage, precisely because of these scaling difficulties.

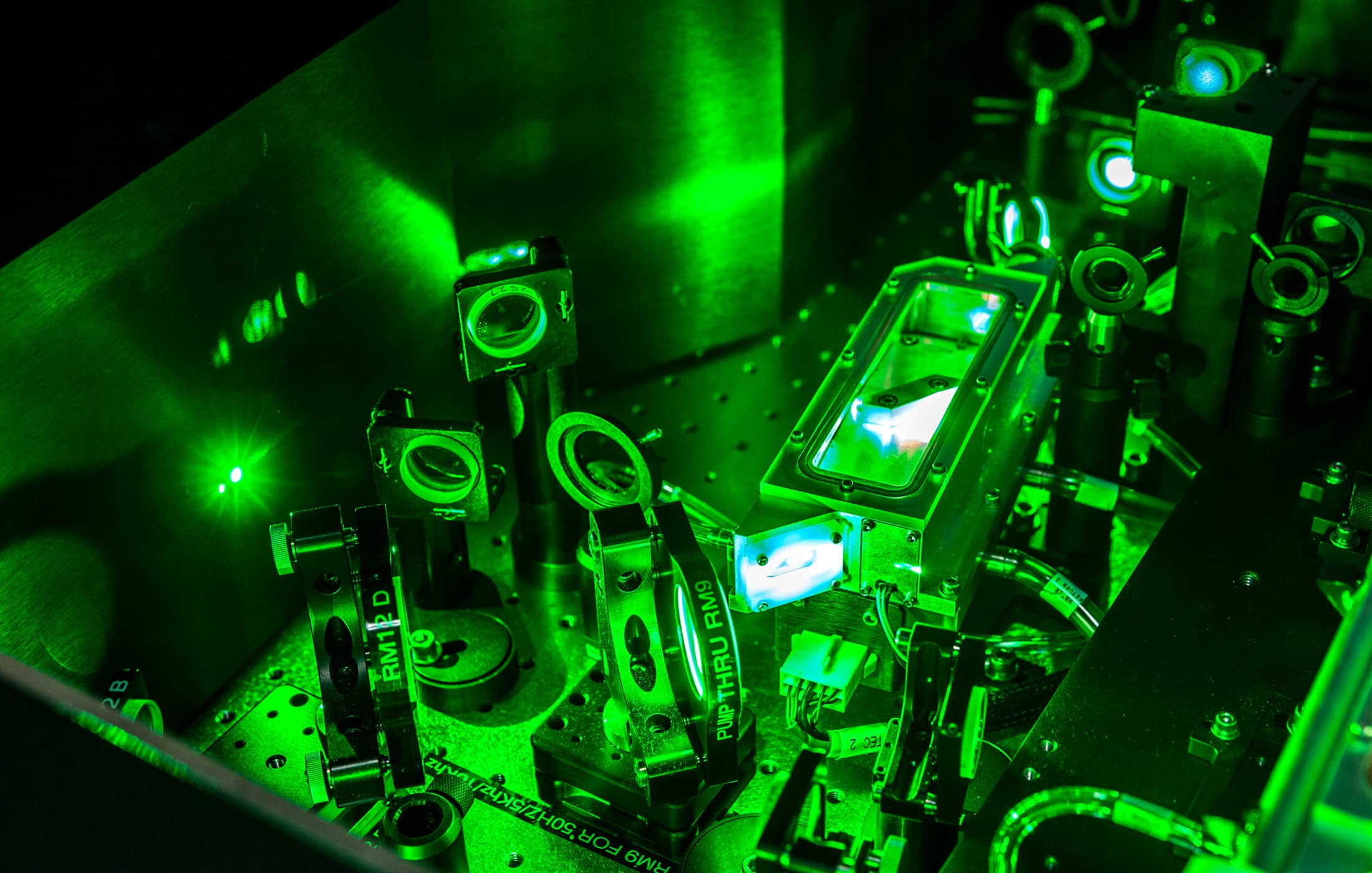

- Precision Optical Control: Working with photons means dealing with precision optics. You need stable lasers, aligned interferometers, and carefully timed pulses. Small errors in phase or alignment can scramble your qubits. In the lab, our early photonic experiments often involved tables full of mirrors and beam splitters, painstakingly aligned with micrometer precision. This setup is inherently less convenient than having qubits on a chip controlled by voltages. (Integrated photonic chips aim to solve this, but those are still rudimentary.) As one quip goes, “sending a single photon exactly where you want it to go isn’t easy” – the equipment can be bulky and finicky. For a startup, managing a large free-space optical setup is a non-trivial drawback, forcing us to invest in vibration isolation and top-notch photonics expertise.

- Source and Detector Limitations: Another practical challenge is getting reliable single-photon sources and detectors. Ideally, you want identical photons on demand (for interference to work perfectly). In reality, producing single photons with the right quality (indistinguishable, no extra stray photons) is difficult; often we use faint laser pulses or parametric down-conversion sources that are probabilistic. Sources are improving but still far from ideal – which bottlenecks any photonic quantum processor. A colleague jokingly said our motto became “pray for photons” when running experiments. On the detection side, we needed ultra-sensitive single-photon detectors (like SPADs or SNSPDs). While these can have high efficiency, they sometimes require cryogenic cooling and can be expensive. The bottom line: the supporting cast of photonic hardware (sources, detectors, switches) added complexity and cost. Even experts noted that having truly on-demand single photons was still “difficult to achieve” at that time, which limited our ability to just “add more qubits” easily.
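
To put rough numbers on the overhead described in the gate and scaling points above, here is a minimal Python sketch. It is a back-of-the-envelope model, not a description of our hardware: the 50% gate success figure is the illustrative one quoted above, the 0.1 dB per-component loss is an assumed value, and the function names are made up for this example. It also models the naive case where every gate in a chain must succeed in the same shot, which is exactly the situation that heralding and multiplexing are meant to avoid.

```python
def chain_success_probability(p_gate: float, n_gates: int) -> float:
    """Probability that n independent probabilistic gates all succeed in one shot."""
    return p_gate ** n_gates


def expected_attempts(p_success: float) -> float:
    """Mean number of attempts until the first success (geometric distribution)."""
    return 1.0 / p_success


def transmission(loss_db_per_component: float, n_components: int) -> float:
    """Fraction of photons that survive n components, each with the given loss in dB."""
    total_loss_db = loss_db_per_component * n_components
    return 10 ** (-total_loss_db / 10)


P_GATE = 0.5        # illustrative success chance per entangling gate
LOSS_DB = 0.1       # assumed loss per optical component, in dB

for n in (1, 5, 10, 20):
    p_all = chain_success_probability(P_GATE, n)
    print(f"{n:2d} gates: p(all succeed) = {p_all:.2e}, "
          f"about {expected_attempts(p_all):,.0f} attempts on average")

for n in (10, 30, 100):
    print(f"{n:3d} components: {transmission(LOSS_DB, n):.1%} of photons survive")
```

Both effects compound multiplicatively, which is why even small per-component numbers become painful once dozens of operations are chained together.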

Despite these challenges, my team remains optimistic. We know we are attempting one of the hardest routes in quantum computing, but the potential payoff – a scalable, room-temperature quantum computer leveraging existing photonic technologies – keeps us motivated. The disadvantages are essentially engineering problems that we believe can be solved with time, ingenuity, and incremental improvements.

Wearing My CISO Hat – The Security Motive

Beyond the technical pros and cons, there was another personal reason I chose to sit in the front row of quantum computing development: security. As a CISO by trade, I’m charged with protecting information and managing cyber risks. And if there’s one future risk that looms large, it is the threat of quantum computers to cryptography.

Ever since Peter Shor discovered his quantum factoring algorithm in 1994, it’s been clear that a sufficiently powerful quantum computer could break the encryption that underpins most of our digital security. RSA, Diffie-Hellman, elliptic-curve crypto – all would be vulnerable if a quantum computer can run Shor’s algorithm on large numbers. In plain terms, quantum computing promises to “render ineffective” many encryption tools widely used today for banking, e-commerce, emails, and more. That’s a big deal for anyone in the security field. We call it the “Y2Q” problem – the (future) day when quantum cracks our codes – and it could expose sensitive data unless we’ve migrated to quantum-resistant algorithms in time.
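
For readers who want to see why factoring falls to a quantum computer, the sketch below shows the classical scaffolding around Shor’s algorithm: factoring N reduces to finding the order r of a random base a modulo N. Here the order is found by brute force, which is the one step a quantum computer would replace with quantum period finding. The function names are illustrative, and N = 15 is used only because it is small enough to check by hand.

```python
from math import gcd


def multiplicative_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the expensive step that Shor's algorithm performs
    efficiently on a quantum computer; everything else is classical.
    """
    if gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r


def factor_via_order(n: int, a: int):
    """Try to split n using the order of a modulo n.

    Returns a pair of factors, or None if this choice of a is unlucky
    and another base should be tried.
    """
    g = gcd(a, n)
    if g != 1:
        return (g, n // g)      # a already shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None             # odd order: no square root to exploit
    y = pow(a, r // 2, n)       # y*y % n == 1 by construction
    if y == n - 1:
        return None             # trivial square root of 1
    p = gcd(y - 1, n)
    return (p, n // p)


print(factor_via_order(15, 7))   # order of 7 mod 15 is 4, giving (3, 5)
print(factor_via_order(15, 14))  # unlucky base, returns None
```

The brute-force loop takes time exponential in the bit length of N, which is why RSA-sized moduli are safe from this classical version and only at risk once the order-finding step runs on large, error-corrected quantum hardware.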

By getting involved in quantum computing early, I hope to understand the real threat up close. It’s one thing to read theoretical papers about factoring RSA; it’s another to be in the lab, seeing how quickly (or slowly) quantum hardware is actually progressing. This hands-on vantage point helps me gauge how far away the danger truly is. Are we 5 years or 20 years from quantum decryption of secrets? Which schemes seem most promising, and what obstacles might delay an adversary’s capabilities? Being part of a quantum startup gives me insider intuition on these questions, informing my risk assessments as a CISO. Essentially, I am hedging against the quantum threat by becoming part of the solution – or at least staying one step ahead of the “bad guys” who might eventually exploit quantum computing.

Moreover, photonic quantum tech has direct security uses too. It’s the backbone of quantum communication protocols like Quantum Key Distribution (QKD), which can enable provably secure communication links. Our work on photonic qubits dovetailed with developments in quantum cryptography – another area I paid keen attention to. So from both the offensive (threat) and defensive (solution) angles, photonics felt like the right place to be for a security-conscious innovator.
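
As a concrete illustration of QKD, here is a toy Python sketch of the basis-sifting step in BB84, the best-known QKD protocol. It is heavily idealized: there is no photon loss, no noise, and no eavesdropper, and the "photons" are just classical records of a bit and a basis. The function name and the "+"/"x" basis labels are choices made for this example.

```python
import random


def bb84_sift(n_photons: int, seed: int = 0):
    """Simulate idealized BB84 and return (alice_key, bob_key) after sifting."""
    rng = random.Random(seed)

    # Alice picks a random bit and a random basis for each photon.
    alice_bits = [rng.randint(0, 1) for _ in range(n_photons)]
    alice_bases = [rng.choice("+x") for _ in range(n_photons)]

    # Bob measures each photon in a randomly chosen basis.
    bob_bases = [rng.choice("+x") for _ in range(n_photons)]

    # Matching basis: Bob reads Alice's bit. Mismatched basis: his
    # outcome is a coin flip, modeled here as a fresh random bit.
    bob_bits = [
        bit if a_basis == b_basis else rng.randint(0, 1)
        for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases)
    ]

    # Sifting: they publicly compare bases (not bits) and keep matches.
    keep = [i for i in range(n_photons) if alice_bases[i] == bob_bases[i]]
    alice_key = [alice_bits[i] for i in keep]
    bob_key = [bob_bits[i] for i in keep]
    return alice_key, bob_key


alice_key, bob_key = bb84_sift(1000)
print(f"kept {len(alice_key)} of 1000 bits; keys match: {alice_key == bob_key}")
```

On average about half the photons survive sifting. In a real link, Alice and Bob would also sacrifice part of the sifted key to estimate the error rate, since an eavesdropper measuring in the wrong basis introduces detectable errors.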

Conclusion

Choosing photonic quantum computing for my startup is equal parts ambition and pragmatism. The ambition is that photons, with their long coherence and networking talent, could unlock scalable quantum computers without the cryogenic baggage of other approaches. The pragmatism is that by immersing myself in this cutting-edge field, I can better prepare for the quantum revolution that will shake up cybersecurity. Photonic qubits offer a unique blend of promise: room-temperature quantum logic, compatibility with fiber optics, and ultra-fast operation – coupled with very real hurdles in gating and scaling that we are eager to tackle.
