Quantum Computing Hardware Update

Introduction

I’ve been following quantum computing hardware developments throughout the 2000s with equal parts excitement and realism. As a cybersecurity researcher, I’m bullish on the long-term promise – one day these machines could break our cryptography – but I also know we’re not there yet. As 2006 comes to a close, it feels like a good time to reflect on how far quantum hardware has come. Across superconducting circuits, trapped ions, photonic systems, and even nuclear magnetic resonance (NMR) and other exotic modalities, researchers have made significant strides. We’ve progressed from single-qubit experiments to multi-qubit prototypes that can entangle particles and perform basic quantum operations. At the same time, technical limitations such as decoherence, scaling, error rates, and control precision remain daunting. In this post, I’ll tour the state of quantum computing hardware in 2006, balancing my long-term optimism with a clear-eyed view of current capabilities.

Superconducting Qubits

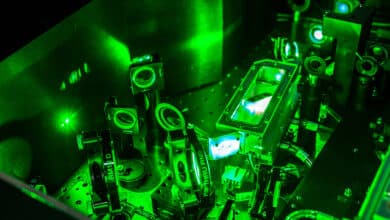

Superconducting quantum chip (D-Wave prototype): Superconducting qubits are implemented using tiny Josephson junction circuits on chips cooled to millikelvin temperatures. These devices look like conventional microchips, but operate at ultra-cold temperatures to exhibit quantum behavior. The field really kicked off in 1999, when Nakamura and Tsai demonstrated that a superconducting circuit could behave as a coherent two-level quantum system – essentially the first solid-state qubit. Since then, multiple types of superconducting qubits have been explored: charge qubits (Cooper-pair boxes), flux qubits (superconducting loops with two persistent current states), and phase qubits (Josephson junctions biased in the superconducting state). Early on, coherence times were very short (only nanoseconds to microseconds), but steady engineering improvements in circuit design and materials have extended coherence and reduced noise. By the mid-2000s, groups at institutions like NIST (John Martinis’s team), Yale (Robert Schoelkopf and Michel Devoret), NEC in Japan, and others had managed to perform single-qubit manipulations (Rabi oscillations, etc.) reliably on these circuits. For example, the “quantronium” design in 2002 introduced echo techniques to prolong coherence, and coupling qubits via microwave resonators (circuit QED) was pioneered around 2004-2005, greatly improving control.

The big milestone in 2006 was the first entanglement between two superconducting qubits. This year, John Martinis’s team at UC Santa Barbara (previously at NIST) successfully created a Bell state between two coupled Josephson phase qubits and verified it via full quantum state tomography. The entangled two-qubit state had a fidelity of about 87%, which was a record for solid-state qubits. Achieving two-qubit gates in a superconducting system is a huge step – it means we can now perform basic quantum logic (like controlled-NOT) on a chip, something that was only a theory a few years prior. Major players in this area include university labs and industrial research groups: for instance, Martinis’s group at UCSB focusing on phase qubits, Yale focusing on charge qubits coupled to microwave cavities (circuit QED), Delft University and Stony Brook on flux qubits, and NEC continuing their work on charge qubits. Even companies are dipping in – Canadian startup D-Wave Systems has been pursuing an approach using superconducting flux qubits for adiabatic quantum computing. They’ve built a multi-qubit “quantum annealer” chip (16 qubits so far) and have announced plans to publicly demonstrate it.
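To make the 87% figure a bit more concrete, here is a minimal sketch of how a Bell-state fidelity is computed from a density matrix. The white-noise (Werner state) model and the function names are my own assumptions for illustration; the actual experiment reconstructed its density matrix from tomography and its noise was certainly not pure white noise.

```python
import numpy as np

# Ideal Bell state |Phi+> = (|00> + |11>) / sqrt(2)
phi_plus = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)
rho_ideal = np.outer(phi_plus, phi_plus.conj())

def werner_state(p):
    """Assumed noise model: ideal Bell state (weight p) mixed with white noise."""
    return p * rho_ideal + (1 - p) * np.eye(4) / 4

def bell_fidelity(rho):
    """Fidelity <Phi+| rho |Phi+> of a two-qubit density matrix."""
    return float(np.real(phi_plus.conj() @ rho @ phi_plus))

# For this model F = p + (1 - p) / 4, so F = 0.87 needs this mixing weight:
p_for_87 = (0.87 - 0.25) / 0.75
fidelity_87 = bell_fidelity(werner_state(p_for_87))

print(f"mixing weight p = {p_for_87:.3f}, fidelity = {fidelity_87:.2f}")
```

Under this toy model a completely mixed state already scores 0.25, which is why fidelities have to be read against that floor rather than against zero.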

Despite the progress, I remain realistic about near-term superconducting capabilities. We have, at most, a handful of qubits on a chip that we can entangle or run simple algorithms with. Two-qubit entanglement with ~87% fidelity, while impressive, is still far from the >99% fidelities needed for robust computing. Decoherence is the constant enemy: these qubits lose their quantum state in microseconds due to interactions with their environment. Keeping them cold (≈20 mK in dilution fridges) and isolating them from stray noise is mandatory, and even then we battle issues like unwanted crosstalk and parameter drift in the circuits. Scaling up is non-trivial; adding more qubits means complex wiring and calibration to control many microwave lines, and variability in fabrication can make each qubit slightly different. There’s also a significant overhead in error correction needed – and with current gate error rates, a large error-corrected superconducting processor is still a distant dream.

In short, superconducting qubits have shown they work as qubits and can even entangle, but achieving dozens or hundreds of them working in concert will require serious engineering breakthroughs (better materials, 3D integration, tunable coupling, etc.). I’m bullish because these are essentially electrical circuits – a technology we know how to scale – but I’m also conscious that quantum coherence doesn’t scale as easily as transistor density did.

Trapped Ions

In trapped-ion quantum computers, the “bits” are individual atoms suspended in electromagnetic traps, manipulated by laser beams. Trapped ions have been a fantastic testbed for quantum computing because ions are identical, pristine qubits with very long coherence times. Throughout the early 2000s, ion traps have arguably led the field in achieving high-fidelity operations. David Wineland’s group at NIST Boulder and Rainer Blatt’s group at University of Innsbruck have been at the forefront. By 2003, they had realized fundamental quantum logic gates with ions – for example, Blatt’s team demonstrated the first Cirac-Zoller two-qubit CNOT gate in 2003, and even implemented a simple quantum algorithm (the Deutsch-Jozsa algorithm) on a two-ion system. In 2004, the Innsbruck group also achieved deterministic quantum teleportation of an ion’s quantum state to another ion – a mind-blowing validation of quantum information protocols in a computing context.

The entangling capability of trapped ions now far outpaces other platforms. Just last year (Dec 2005), researchers in Innsbruck reported entangled states involving up to 8 ions in a W-state, with full tomographic verification of the entanglement. In a complementary feat, the NIST team in the U.S. has created GHZ states with up to 6 ions in a trap. To put that in perspective, that’s 6 or 8 qubits all entangled in a controlled way – the largest entangled registers of any quantum computer so far. Trapped-ion systems also excel in accuracy: two-ion gate fidelities on the order of 95-99% have been achieved in experiments (for example, using Mølmer-Sørensen laser gates). In fact, ions hold many of the records for quantum logic fidelity to date. We also saw a major step toward quantum error correction with ions: the NIST group demonstrated all the steps of a quantum error-correcting code on three beryllium ion qubits. They used one ion as the data qubit and two as “helper” ancillas, and showed they could detect and correct a bit-flip error in the quantum state automatically. This was the first full error correction cycle in any system, and it’s encouraging that it worked on ions (which many of us consider a leading candidate for a full-scale quantum computer).
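For readers who haven’t seen it, the logic of a three-qubit bit-flip code can be sketched in a few lines. This is the textbook version simulated on a classical state vector, not the NIST pulse sequence, and all function names are hypothetical. In a real device the two parities are extracted by measuring ancillas; here I simply read them off the simulated amplitudes, which reveals nothing about the encoded amplitudes either.

```python
import numpy as np

def encode(alpha, beta):
    """Encode alpha|0> + beta|1> as alpha|000> + beta|111> (8 amplitudes)."""
    state = np.zeros(8, dtype=complex)
    state[0b000] = alpha
    state[0b111] = beta
    return state

def flip(state, qubit):
    """Apply a bit flip (Pauli X) to one qubit; qubit 0 is the leftmost bit."""
    mask = 1 << (2 - qubit)
    flipped = np.zeros_like(state)
    for index, amplitude in enumerate(state):
        flipped[index ^ mask] = amplitude
    return flipped

def syndrome(state):
    """Parities (q0 xor q1, q1 xor q2), identical for every populated basis state."""
    index = int(np.flatnonzero(np.abs(state) > 1e-12)[0])
    q0, q1, q2 = (index >> 2) & 1, (index >> 1) & 1, index & 1
    return (q0 ^ q1, q1 ^ q2)

# Which qubit to flip back for each syndrome (None means no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state):
    """Undo a single bit flip based on the measured syndrome."""
    target = CORRECTION[syndrome(state)]
    return state if target is None else flip(state, target)

original = encode(0.6, 0.8)
damaged = flip(original, 1)
print(syndrome(damaged), np.allclose(correct(damaged), original))
```

The code only handles a single bit flip; two simultaneous flips are misdiagnosed, and phase errors pass through untouched.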

It’s not just NIST and Innsbruck; other groups like Chris Monroe’s team (formerly at NIST, now at the University of Michigan) and various university labs in England, Australia, and Japan are pushing ion trap technology. We’ve seen innovations in trap design – for instance, linear RF Paul traps are being miniaturized, and there are efforts to create 2D ion trap arrays for shuttling ions around. The Holy Grail for trapped ions is the “quantum CCD” architecture, where ions can be moved between trapping zones so that any pair of ions can be entangled by bringing them together. This year, researchers have developed prototype multi-zone traps (including a two-dimensional trap structure) that move us toward that scalable architecture. The Michigan group (and others) have been working on incorporating ion traps onto micro-fabricated chips, and though the full vision of hundreds of interlinked ion traps is still in progress, the path is conceptually clear.

Of course, trapped ions face their own hurdles. The experiments are delicate: you need ultra-high vacuum, stable lasers for cooling and gating, and precision timing. Operations are comparatively slow (gate times are on the order of tens of microseconds to milliseconds, versus nanoseconds for superconducting circuits), which means an ion quantum computer runs at a snail’s pace in raw operation speed. Scaling to more ions in a single trap can be problematic because a long chain of ions gets harder to control (vibrational modes become dense and complex). The 8-ion entanglement was a tour-de-force that required careful handling of collective motion modes. Beyond ~10-15 ions in one trap, most experts think we’ll need to network multiple traps. There’s active research on using photons to entangle ions in different traps (ion-photon quantum networking) – e.g. Chris Monroe’s group has shown entanglement between a single trapped ion and a single photon, a first step toward linking remote ions. So while an ion-based quantum computer might eventually be a modular device with many traps, right now we’re mostly dealing with single linear traps holding up to a few dozen ions at most. I’m encouraged by the fact that ions have achieved virtually every key milestone (high-fidelity gates, small algorithms, entanglement of many qubits, error correction) on a small scale. The challenge now is engineering – integrating these feats into one system and automating the whole apparatus. It’s a bit like going from a single transistor to a full microprocessor; we know the physics works, but we have to build the supporting technology to scale it up.

As of 2006, trapped ions remain a strong front-runner, combining excellent control with quality qubits, and I’m confident continued research will keep pushing their capabilities.

Photonic Quantum Computing

Photons – particles of light – are another platform for quantum computing hardware, offering very different strengths and weaknesses. Photonic quantum computing uses single photons as qubits, typically encoding quantum information in the polarization or path of the photon. The appeal is obvious: photons don’t interact with the environment much, so they can maintain coherence over long distances (think fiber optics) and at room temperature. You also don’t need exotic cryogenics or vacuum chambers to keep them coherent. The catch? Photons don’t like to interact with each other either, which makes building logic gates hard. There’s no natural two-photon interaction, so quantum gates require clever schemes using beam splitters, interference, and measurement-induced effects.

Back in 2001, Knill, Laflamme, and Milburn (KLM) famously proved that linear optics plus projective measurements could in principle enable universal quantum computing. This sparked the field of linear optical quantum computing – essentially using beam splitters, phase shifters, and photon detectors to create entanglement between photons. In the years since, experimentalists have taken up the challenge. By 2003 we saw the first experimental two-qubit photonic logic gates. Two independent groups – one at Johns Hopkins/APL (Pittman et al.) and one led by Jeremy O’Brien (then in Australia) – each demonstrated a probabilistic optical CNOT gate using linear optical elements and photon detectors. These experiments use the fact that if you post-select on certain detection events, the remaining photons have effectively undergone a conditional logic operation. The gates weren’t deterministic (they succeeded with a certain small probability), but it was a proof that photonic qubits can perform non-trivial logic.

Photonics has also excelled at producing entangled states of multiple qubits. The record here was set in 2004, when a team led by Jian-Wei Pan demonstrated entanglement of five photons in a GHZ state. Five qubits may sound modest, but generating and detecting five simultaneously entangled photons was a huge technical achievement – and notably, 5 is the minimum number of qubits for certain error-correction codes, so it had theoretical significance. With those 5 entangled photons, they even demonstrated a form of quantum teleportation (an “open-destination” teleportation protocol) to illustrate quantum network concepts. Earlier, three- and four-photon entanglement had been shown (Zeilinger’s group demonstrated a three-photon GHZ state in 1999, with four-photon entanglement following in 2001), so we’re seeing steady progress in scaling up photonic entanglement. There’s also work on cluster states (the backbone of one-way quantum computing) – the one-way quantum computer model proposed in 2001 uses large entangled clusters of qubits that are processed via measurements. Small cluster states of four photons have already been created and used to run simple algorithms (such as a two-qubit Grover search). It’s encouraging that academia is moving photonic experiments from pure physics (entanglement, Bell tests, etc.) towards more computational demonstrations.

That said, photonic quantum computing in 2006 is still very much in the proof-of-concept stage. The experiments are typically table-top setups with lasers, nonlinear crystals (for generating entangled photon pairs), and a maze of mirrors and beam splitters on an optical bench. Each additional photon cuts the success probability drastically – e.g. a 5-photon state might be produced only once in many thousands of trials due to the low likelihood of all required photons being detected. There’s a scalability issue: to scale probabilistic gates, you need tricks like repeat-until-success schemes or giant overheads in ancillary photons, which is daunting. Also, single-photon sources and single-photon detectors come with efficiency and noise limitations. We’ve got some big players in photonics too: University of Vienna (Zeilinger’s group) and USTC in China (Pan’s group) are entangling photons left and right (sometimes literally between continents, for quantum communication, though that’s beyond computing). University of Queensland and now University of Bristol have teams (like O’Brien’s) working on photonic logic circuits, even creating rudimentary photonic chips with waveguides. In the U.S., groups at NIST and UIUC (Paul Kwiat’s group) have done photonic quantum memory and teleportation experiments.
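The “each additional photon cuts the success probability” point is just compounding of independent probabilities. A back-of-the-envelope sketch follows; the 20% end-to-end efficiency per photon and the 1/9 success chance per post-selected gate are illustrative assumptions of mine, not measured values from any particular experiment.

```python
def coincidence_probability(n_photons, efficiency_per_photon):
    """Chance that all n photons are produced and detected, assuming independence."""
    return efficiency_per_photon ** n_photons

def chained_gate_success(n_gates, success_per_gate):
    """Chance that n post-selected gates in a row all succeed."""
    return success_per_gate ** n_gates

# Assumed 20% end-to-end efficiency per photon (hypothetical figure).
for n in range(2, 7):
    prob = coincidence_probability(n, 0.2)
    print(f"{n} photons: about 1 success in {1 / prob:,.0f} trials")

# Assumed 1/9 success probability per probabilistic gate (hypothetical figure).
for gates in (1, 2, 3):
    print(f"{gates} gates: success probability {chained_gate_success(gates, 1 / 9):.5f}")
```

With those numbers a five-photon event shows up roughly once in a few thousand trials, which is the regime the experiments above are working in.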

The advantages of photonic systems – room-temperature operation, easy distribution of qubits (think quantum networks) – make them very attractive for quantum communication and possibly distributed computing. For pure computation, however, the near-term capabilities are limited by the lack of a straightforward, deterministic two-qubit gate. There is progress on nonlinear optical materials and measurement-based protocols to mitigate this, and even some hybrid approaches (like coupling photons with matter qubits for storage). In fact, just this year (2006) we saw an interesting demonstration: the University of Copenhagen teleported quantum information between light and atoms – essentially a photon-to-atom memory transfer, combining photonic and atomic systems. This hints at a future where photons distribute quantum information and matter qubits do the processing. But as of 2006, a photonic quantum “computer” is mostly a handful of photons in an optical experiment doing one-off tricks.

I’m optimistic that photonic techniques will play a role in scaling (especially for connecting modules of other types of qubits), but I’m also realistic that pure photonic computing has a long road ahead. We need better photon sources, detectors, and perhaps a few more miraculous insights to make it fully scalable.

NMR

No retrospective of quantum hardware would be complete without mentioning NMR quantum computing, which was a dominant player in the late 1990s and early 2000s. NMR (nuclear magnetic resonance) uses molecules in solution; each molecule has several nuclear spins that serve as qubits, and collective magnetic resonance techniques manipulate these spins. In fact, the first working quantum computers in a tangible sense were NMR machines: in 1998-2000, researchers used NMR to demonstrate small algorithms on 5-qubit and 7-qubit systems. For example, a 5-qubit NMR quantum computer was built at the Technical University of Munich in 2000, and a 7-qubit system at Los Alamos shortly thereafter. The big headline was in 2001 when IBM Almaden and Stanford showed Shor’s algorithm factoring 15 using a 7-qubit NMR device (in a liquid-state ensemble of $$10^{18}$$ molecules, each molecule contributing 7 qubits). That experiment famously factored the number 15 = 3×5, which sounds laughably easy, but it was a full implementation of Shor’s quantum factoring algorithm on actual qubits – a landmark moment for the field. NMR also demonstrated other algorithms and protocols (Deutsch-Jozsa, Grover search on small databases, error correction codes) earlier this decade, keeping quantum computing in the scientific limelight.

However, NMR’s drawbacks became increasingly apparent. In 1999, Braunstein and others pointed out that the bulk NMR experiments performed up to that point contained essentially no entanglement – the states were so highly mixed that they were separable. This critique struck at the heart of whether NMR was truly a quantum computer or just a cleverly driven analog classical system. The issue is that NMR uses an ensemble of molecules at room temperature, which means the spins start mostly random (high entropy). Experimenters employ “pseudo-pure states” to pretend a tiny fraction of the ensemble is a pure state, but as you add more qubits, the signal (and effective pure fraction) decreases exponentially. By about 10-12 qubits, the signal is lost in the noise. In fact, 12 qubits is roughly the record for NMR: this year (2006) a team at the Institute for Quantum Computing (Waterloo) and MIT managed to benchmark a 12-qubit NMR quantum information processor. It’s an impressive technical achievement to control 12 nuclear spins through carefully designed pulse sequences. But it’s telling that to do so, they had to find a special molecule with 12 addressable nuclei and run on a very high-field NMR spectrometer – and even then, this is likely the swan song of liquid-state NMR QC, because going beyond 12 qubits would be extraordinarily difficult. The signal-to-noise just collapses and, as Braunstein noted, without entanglement you don’t get the exponential quantum advantage.
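The exponential signal loss is easy to see numerically. The sketch below uses the common high-temperature rule of thumb that the pseudo-pure signal scales like n·ε/2^n for n spins with thermal polarization ε; both that scaling and the value ε = 1e-5 are rough assumptions for illustration, not figures from any specific spectrometer.

```python
def pseudo_pure_signal(n_qubits, polarization=1e-5):
    """Rough relative signal of an n-spin pseudo-pure state: n * eps / 2**n."""
    return n_qubits * polarization / 2 ** n_qubits

reference = pseudo_pure_signal(2)
for n in (2, 5, 7, 12, 20):
    signal = pseudo_pure_signal(n)
    print(f"{n:2d} qubits: signal {signal:.2e} ({signal / reference:.1e} of the 2-qubit case)")
```

Going from 7 to 12 qubits costs more than an order of magnitude in signal under this estimate, and every further qubit roughly halves it again.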

The consensus is that NMR quantum computing is not the route to scalability, though it provided a great training ground for quantum control techniques. A lot of insights about pulse shaping, error mitigation, and composite rotations came from NMR work. There are still niche efforts (like solid-state NMR and transient rotating frame NMR) trying to overcome the limitations, but most researchers have shifted focus to other platforms.

I tip my hat to NMR for being the first to hit many qubit-count milestones (7 qubits in 2000, 12 qubits in 2006), and for showing us small quantum algorithms in action. Yet, the reality is that NMR machines with more qubits won’t scale in the way we need for a general quantum computer, due to the fundamental signal dilution problem. As a result, many of the pioneers in NMR (like Isaac Chuang, Neil Gershenfeld, David Cory, Raymond Laflamme) have either moved to other implementations or to quantum theory.

Other Modalities

Aside from the “big three” modalities (superconductors, ions, photons) and NMR, there are several other intriguing approaches in play:

Semiconductor Quantum Dots (Spin Qubits)

Researchers are exploring using single electron spins in quantum dot nanostructures as qubits. This was proposed by Loss and DiVincenzo in the late 90s, and by now there have been some key experiments. Just this year, a team at Delft University of Technology created a device that can manipulate the spin state (“up” or “down”) of a single electron confined in a quantum dot. This essentially demonstrates single-qubit control in a quantum-dot system.

Spin qubits in quantum dots are attractive because they leverage semiconductor fabrication (potential for scaling like chips) and can have fairly long coherence (especially in isotopically purified materials or using electron/nuclear spin echo techniques). We’re seeing the first one- and two-qubit experiments: there have been reports of two coupled quantum dot spins executing a conditional gate, and single-shot readout of a single spin was achieved around 2004-2005.

It’s still early days – controlling individual spins and coupling them (often via tunneling or exchange interactions) is quite challenging. But groups at Delft, Harvard, Stanford, and UNSW in Australia are pushing this line, and each year the coherence times inch up and gate fidelities improve. By now, coherence of microseconds to milliseconds has been shown in certain spin systems, and there’s active work on using electron spin resonance on chips. We can also include silicon donor spins (the Kane quantum computer proposal) in this category: the idea is to use phosphorus dopant atoms in silicon as qubits. In 2006 there was a notable result here: a demonstrated ability to read out the state of a single nuclear spin on a phosphorus donor in silicon, which is a step towards the Kane architecture. Maintaining quantum coherence in such solid-state spins while controlling them electrically is tough, but if it works, the approach inherits the manufacturability of the semiconductor industry.

Nitrogen-Vacancy (NV) Centers in Diamond

The NV center is a specific defect in diamond (a substitutional nitrogen next to a vacancy) that behaves like an isolated atom trapped in the solid. It has an electron spin that can be initialized and read out with light, and nearby nuclear spins (like a $$^{13}$$C or the host $$^{14}$$N) that can serve as additional qubits. NV centers have garnered attention because they have fantastic coherence properties – the electron spin can maintain coherence for milliseconds at room temperature (!), which is unheard of in other systems. In 2004-2006, researchers like Jelezko and Wrachtrup in Germany demonstrated coherent control of single NV centers and even basic quantum algorithms in that system. For instance, they realized a two-qubit conditional gate between an NV’s electron spin and a neighboring $$^{13}$$C nuclear spin, achieving about 90% fidelity. They effectively created a small two-qubit quantum register in diamond and showed quantum dynamics (like entanglement between the electron and nuclear spin). This is a big deal because it’s a solid-state system operating at room temperature. The downside is scaling: getting two NV centers to interact (for multi-qubit operations) is very challenging because you typically need them to be nanometers apart or use photonic links.

Right now, NV centers are great for quantum sensing and as single-qubit memories, but connecting many of them into a computing architecture is unresolved. Still, the progress by 2006 proves NV centers are a viable qubit, and maybe in the future they’ll be networked via photons or coupled through novel nano-structures.

Neutral Atoms in Optical Lattices/Tweezers

This approach is similar to trapped ions, but uses neutral atoms (like rubidium or cesium) trapped by laser light in arrays. Neutral atoms don’t carry charge, so trapping and addressing them needs different techniques (optical “tweezers” and lattice potentials). One advantage is that you can trap a lot of neutral atoms in a grid (hundreds easily, as in Bose-Einstein condensate optical lattices), so a high-density array of qubits is conceivable. The challenge is performing controlled interactions – usually done via transient excitation to Rydberg states or using spin-dependent collisions.

In 2006, there have been pioneering steps: for example, a University of Bonn team managed to use laser tweezers to sort and line up 7 cesium atoms in a row as a precursor to implementing quantum gates. They basically created a conveyor belt of optical traps and arranged atoms with single-site precision, which is vital if you want them to interact in a controlled way.

Other groups (like Immanuel Bloch’s team in Munich) have demonstrated coherent spin dynamics in optical lattice arrays, and even simple two-atom entanglement via controlled cold collisions in 3D lattice cells (circa 2003). There’s also work at Harvard and UW Madison on Rydberg-mediated gates between neutral atoms separated by a few micrometers.

As of the end of 2006, neutral atom quantum computing is still in early demo stages – we don’t have a full two-qubit gate between individually addressed neutral atoms published yet, but we do have entanglement of neutral atoms via controlled collisions in optical lattices, and Rydberg-blockade experiments are under way. Neutral atoms promise huge scalability (lots of qubits) if we can get the gate fidelity and crosstalk under control. It’s a bit like having a gigantic checkerboard of qubits – now we need to selectively make them “talk” to each other. I suspect in the coming years we’ll hear more from this approach.

Others

There are even more exotic proposals out there – superfluid helium qubits, optomechanical resonators, topological qubits with anyons (pursued by Microsoft’s Station Q, though no experimental anyon qubit exists yet in 2006), and adiabatic quantum annealers like those pursued by D-Wave (which I lump in with superconducting, as they use superconducting flux qubits but for optimization tasks). Each has attracted some laboratory interest or produced an early result (for instance, this year Vlatko Vedral’s group entangled photons with vibrations of a macroscopic mirror, blending light and mechanics in a quantum way).

However, most of these are a long way from forming a programmable quantum computing device. They are important to mention because quantum computing is a very diverse field – we haven’t yet converged on one standard “quantum bit” like classical computing converged on the transistor. It’s still a rich exploration of many physical phenomena.

My personal take is that some approaches will win out for specific niches: e.g. ions for highest fidelity, superconductors for speed and integration, photons for communication, etc., and eventually we might see hybrid systems combining the best of each.

Challenges: Decoherence, Scalability, and the Reality Check

In surveying these hardware efforts, it’s clear that common challenges loom for all of them. The biggest issue is decoherence. Quantum states are fragile and tend to lose coherence when interacting with their environment. No matter the platform – circulating currents in a superconductor or hyperfine states in an ion – if the qubit couples to external noise, the phase information that is key to quantum computing decays. We typically isolate qubits in extreme conditions (ultra-low temperatures, high vacuum, electromagnetic shielding) to lengthen their coherence time. We’ve made progress: e.g., trapped ions can maintain coherence for seconds (and even minutes under certain conditions) because they’re so well isolated, and in NMR the spins are bathed in carefully controlled fields to avoid random perturbations. But once we start doing operations – applying laser pulses, microwave pulses, etc. – we inevitably introduce some decoherence.
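One way to compare platforms is to ask how many gate durations fit inside one coherence time. The sketch below does that arithmetic with round numbers picked from the ranges quoted earlier in this post (microsecond coherence and nanosecond-scale gates for superconductors, second-scale coherence and tens-of-microseconds gates for ions). The specific values are my assumptions, chosen only to show the orders of magnitude.

```python
def gates_per_coherence_time(coherence_time_s, gate_time_s):
    """How many gate durations fit inside one coherence time."""
    return coherence_time_s / gate_time_s

# Illustrative values picked from the ranges quoted in this post (assumptions).
PLATFORMS = {
    "superconducting": {"coherence_s": 1e-6, "gate_s": 10e-9},
    "trapped ion": {"coherence_s": 1.0, "gate_s": 100e-6},
}

budget = {
    name: gates_per_coherence_time(p["coherence_s"], p["gate_s"])
    for name, p in PLATFORMS.items()
}

for name, gates in budget.items():
    print(f"{name}: roughly {gates:,.0f} gates per coherence time")
```

On these numbers the slow ion gates still come out ahead, because the coherence time is longer by a far larger factor than the gates are slower.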

A related issue is error rates in quantum gates. We need gate operations to be extremely precise; an error of even 1% per gate is huge because it may take hundreds or thousands of gates to do something useful. Currently, only trapped ions are flirting with sub-1% error rates. Superconducting qubits have error rates from a few percent up to more than ten percent per gate (the 87% entanglement fidelity implies ~13% error for that two-qubit operation, for instance). Photonic gates are probabilistic and succeed only a small fraction of the time (though the error per successful gate is not exactly the same concept there). NMR had low control error, but state preparation was so poor that there is effectively a large initialization error (and reading out an ensemble average isn’t a “single-shot” readout anyway).
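To see why 1% per gate is “huge”, consider the chance that a circuit runs with no gate failing at all. This is a simplified sketch that assumes independent errors and no error correction; the function name is mine.

```python
def circuit_success_probability(error_per_gate, n_gates):
    """Chance that no gate fails, assuming independent errors and no correction."""
    return (1 - error_per_gate) ** n_gates

for error in (0.13, 0.01, 0.001):
    for n_gates in (10, 100, 1000):
        prob = circuit_success_probability(error, n_gates)
        print(f"error {error:.3f}, {n_gates:4d} gates: success {prob:.2e}")
```

At 1% error a 100-gate circuit succeeds only about a third of the time, and a 1000-gate circuit essentially never does.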

Scalability is the elephant in the room. Thus far, we’ve been talking about 2 qubits here, 5 qubits there, maybe 8 or 12 in some special cases. But we know algorithms like Shor’s will need thousands of logical qubits (and each logical qubit might require hundreds of physical qubits with error correction). How do we go from 5 to 500 to 5,000 qubits? Each technology has a plan but also a pain point. For superconductors, fabricating, wiring, and controlling that many qubits with microwave lines and keeping them all coherent in one cryostat is a herculean task (though I can imagine in the long run, integrating qubits on chips like today’s VLSI circuits). For ions, transporting and networking more than ~50 ions is uncharted territory – maybe we’ll need trapping zones interconnected by photonic links, which adds complexity and lowers rates. For photons, generating and managing thousands of indistinguishable photons with low loss seems nearly impossible with current sources and detectors (though who knows, maybe one day). Essentially, the control systems might not scale linearly – we can’t just copy-paste a 5-qubit setup into a 1000-qubit setup without running into serious control overhead and noise issues. There are ideas like multiplexing control lines, or using classical electronics to handle some local operations, but those introduce their own challenges.

Another challenge is crosstalk and interference. Quantum devices are so sensitive that when you put many together, they might inadvertently interact or cause each other to decohere. This is already seen in ion traps (spectral crowding of modes) and in superconductors (cross-coupling between qubit circuits if not well isolated). Engineering the systems to have interactions when and only when we want (like turning on a gate between the right qubits at the right time) is tough. We often joke that building a large quantum computer is like trying to have a conversation in a room full of delicate wine glasses – speak too loudly and you shatter the “memory” of the system.

Despite all these challenges, my outlook remains optimistic in the long term. We’ve identified many of the error sources and are actively working to mitigate them. For instance, better materials in superconducting circuits have reduced decoherence (fewer microscopic two-level fluctuators in dielectrics), dynamical decoupling techniques in spins can refocus certain errors, and error correction itself provides a path forward if we can get the error rates low enough. In fact, the whole notion of fault tolerance tells us that if we can get error rates below a threshold (around $$10^{-3}$$ or so), we can in principle scale indefinitely with quantum error correction. We’re not there yet in 2006, but trapped ions and maybe some solid-state systems are within an order of magnitude of that target. Researchers are steadily improving the metrics year by year. Dietrich Leibfried from NIST (one of the ion trap pioneers) put it well – after demonstrating error correction on 3 ions, he said the “instrumentation and procedures for manipulating qubits need to be improved to build reliable quantum computers, but such improvements seem feasible”. I take heart in that statement: it’s not violating physics to improve coherence or reduce error rates; it’s an engineering problem, and engineers thrive on solving such problems given enough time and resources. We’ll likely need a lot of both.
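The threshold idea can be illustrated with the standard concatenated-code estimate, in which each level of encoding roughly squares the ratio of the physical error rate to the threshold. The sketch below is a toy model under assumptions of my own: a threshold of 1e-3 as quoted above, and a 7-qubit block per level (as in the Steane code), ignoring ancilla overhead.

```python
def logical_error_rate(physical_error, threshold=1e-3, levels=1):
    """Concatenated-code estimate p_L = p_th * (p / p_th) ** (2 ** levels), capped at 1."""
    estimate = threshold * (physical_error / threshold) ** (2 ** levels)
    return min(1.0, estimate)

def physical_qubits_per_logical(levels, block_size=7):
    """Data qubits per logical qubit for an assumed block size (ancillas ignored)."""
    return block_size ** levels

for physical_error in (1e-2, 1e-4):
    for levels in (1, 2, 3):
        rate = logical_error_rate(physical_error, levels=levels)
        cost = physical_qubits_per_logical(levels)
        print(f"p = {physical_error:.0e}, {levels} levels: "
              f"logical error {rate:.1e}, {cost} qubits per logical qubit")
```

Below threshold every extra level helps doubly exponentially, while above threshold adding levels only makes things worse, which is why the hardware error rate has to come down first.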

Hype vs. Reality

Before wrapping up, I’d like to reflect on the contrast we sometimes see between academic milestones and vendor marketing claims. In 2006, quantum computing is hot enough that companies and funding agencies are paying attention – which is great, but it also means hype can get ahead of reality. Case in point: D-Wave Systems, the Canadian startup I mentioned earlier. They’ve been very public about their goal to build the first commercial quantum computer. As of late 2006, D-Wave claims to have a 16-qubit superconducting “Orion” processor and has been touting a live demonstration (scheduled for Feb 2007 at the Computer History Museum) where it will solve a Sudoku puzzle and other simple problems. They even speak of scaling to 28 qubits and beyond shortly. This has generated a lot of buzz – some headlines are implying the quantum age of computing is here. As someone deeply immersed in the field, I have mixed feelings. On one hand, I’m excited to see a private company throw resources at the problem and any demonstration of a multi-qubit device is welcome news. On the other hand, I know that a 16-qubit adiabatic quantum optimizer (which is what D-Wave’s device actually is) is not the same as a 16-qubit universal gate-based quantum computer with error correction. D-Wave’s approach might solve certain optimization problems by essentially finding the ground state of a 16-spin system – interesting, but classical algorithms can likely handle that size of problem anyway. Moreover, it’s unclear how quantum the device truly is; skeptics have pointed out that demonstrating entanglement or a true quantum speedup in that kind of system is tricky. So while D-Wave’s marketing is bold, I remain cautiously observant. I won’t declare their machine a breakthrough until I see peer-reviewed data that shows it outperforming the best classical methods or at least verifiably exploiting quantum effects.

This pattern – hype versus reality – appears elsewhere too. From time to time, a press release will claim “Quantum computer built that does X!”, when in fact X is a very narrowly defined academic experiment. For instance, when Shor’s algorithm was implemented for factoring 15 on 7 qubits, some media spun it as “Quantum computer factors number, could threaten encryption.” Technically true, but the number was 15 and it took months of experimental setup – we’re not exactly cracking RSA-2048 here. As a cybersecurity expert, I often get asked, “So, are our codes broken yet by quantum computers?” and I have to laugh. No – not even remotely. The academic reality is that we can barely juggle a dozen qubits in ideal lab conditions. Every new qubit we add is a victory. We’re working toward the ability to do something classically hard, but as of 2006, no quantum computer can beat a classical computer at a useful task. In fact, I like to remind people that even a simple 8-bit classical calculator from the 1970s vastly outperforms today’s quantum devices in terms of reliable computation. That’s not a knock on quantum – it’s just a reminder of how much further we have to go.
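For a sense of scale, here is a minimal classical sketch of the number theory behind Shor's algorithm applied to 15. The quantum hardware's job is to find the period of a^x mod N; for a number this small, a simple loop finds it instantly. The function names and the choice of base a = 7 are mine, for illustration only.

```python
from math import gcd


def multiplicative_order(a, n):
    """Smallest r >= 1 with a**r % n == 1 (the 'period' Shor's algorithm finds)."""
    if gcd(a, n) != 1:
        raise ValueError("a and n must be coprime")
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r


def factors_from_order(a, n):
    """Classical post-processing: turn the period of a mod n into factors of n.

    Returns a sorted (p, q) pair, or None if this base is unlucky
    (odd period, or a**(r/2) congruent to -1 mod n).
    """
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None
    return tuple(sorted((gcd(half - 1, n), gcd(half + 1, n))))


if __name__ == "__main__":
    print(multiplicative_order(7, 15))  # 4, since 7**4 = 2401 = 160*15 + 1
    print(factors_from_order(7, 15))    # (3, 5)
```

The classical loop takes time proportional to the period, which grows exponentially with the number of digits in N. That step is the one a quantum computer would speed up, and it is why factoring 15 says very little about RSA-2048.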

Another example: NMR quantum computing was sometimes hyped in the early 2000s as well – you’d hear “10-qubit quantum computer built”, but the context (liquid NMR, no entanglement) wouldn’t make it into the headlines. The vendor/industrial perspective tends to gloss over the fine print that we in academia fuss over (like what constitutes a “qubit” or whether the system is truly quantum as opposed to analog classical). On the flip side, academics can be overly cautious in language, which might undersell progress. I try to maintain a balance in my own mind.

The good news is that despite occasional hype, the trend is positive. We are hitting the milestones that were laid out in roadmaps like the ARDA Quantum Computing Roadmap of 2002. We have gone from single-qubit demos (1990s) to two-qubit gates (early 2000s) to entanglement of more than five qubits (mid-2000s), and even simple quantum error correction (now). The next milestones on the horizon are perhaps fully controllable systems of roughly 10 to 20 qubits. I’d say we’re still in the vacuum-tube era of quantum computing, maybe akin to where classical computing was in the 1940s or early 1950s. There’s a long way to go to reach the solid-state transistor era (let alone integrated circuits). But lots of smart people and significant funding are now directed at this challenge. Governments are investing (e.g., the EU and US have quantum information initiatives, and labs like Los Alamos, NIST, Sandia, etc., are heavily involved). Big companies like IBM and HP have small teams too (IBM has been quiet since the NMR work, but rumor has it they’re looking at superconducting qubits again; Microsoft is betting on exotic topological qubits).

In summary, as 2006 ends I remain confident yet realistic. We’ve seen genuine, hard-won progress in quantum hardware: superconducting circuits entangling, ions scaling up with superb control, photons performing logic, and even completely different technologies proving their quantum chops. We’ve also seen that each platform has serious challenges to overcome – none is a silver bullet in the near term. The dream of a large-scale quantum computer is alive and well, but the timeline should be thought of in decades, not years.
