Cisco’s Full-Stack Approach and the Road to Quantum Data Centers

[Disclosure: I have no affiliation with Cisco and hold no financial interest in the company. This post reflects my personal technical curiosity and analysis based only on public sources. Not investment advice.]

Introduction

Cisco took on an ambitious full-stack strategy to make distributed quantum computing a reality sooner than many expect. Instead of waiting for a single perfect quantum processor with millions of qubits, Cisco is building the hardware, software, and architecture needed to network today’s smaller quantum machines into unified quantum data centers.

This approach mirrors how classical computing scaled – connecting many modest nodes to achieve supercomputing power – and could similarly accelerate quantum advantage.

With Cisco’s recent announcement of “Software That Networks Quantum Computers Together and Enables New Classical Applications”, I decided to dig a bit deeper into Cisco’s strategy.

Cisco’s Quantum Networking Vision: A Full-Stack Approach

Cisco is approaching quantum networking with the same systems-level philosophy that made it a leader in classical networking. Just as the internet was built by co-designing custom silicon, hardware systems, and protocols, Cisco aims to build a complete quantum networking stack from the ground up. Vijoy Pandey, Cisco’s SVP of “Outshift by Cisco”, frames it as: “Today’s quantum computers are stuck at hundreds of qubits when real-world applications need millions. We can either wait decades for perfect processors, or network existing ones together now. We believe the time is now.” [I wouldn’t quite say they are “stuck” but I understand the need for a marketing soundbite.]

This vision led Cisco to announce a suite of prototype technologies spanning software and hardware, all designed to make multiple quantum processors “work together instead of alone”.

At a high level, Cisco’s quantum networking stack has three layers:

- Devices layer: The physical quantum devices and an SDK/API to interface with them (including both actual hardware and simulated devices). This includes Cisco’s own quantum network chip for entanglement generation and any quantum processors or network components in the system.

- Control layer: The network control software – protocols, algorithms, and controllers that manage entanglement distribution and device coordination via well-defined APIs. This layer ensures the “plumbing” of the quantum network (switches, quantum channels, etc.) delivers the necessary entangled states when and where needed for applications.

- Application layer: High-level quantum networking applications for both quantum and classical use cases. This includes Cisco’s new network-aware quantum compiler for distributed quantum computing, as well as example applications like Quantum Alert and Quantum Sync (explained below).

By advancing all these in concert, Cisco hopes to create a seamless integration between quantum hardware and software, much as classical network stacks abstract away physical links into reliable services.

Notably, Cisco emphasizes a vendor-agnostic approach – their framework is meant to work with any type of quantum processor (superconducting, ion traps, photonic, etc.), not picking hardware “winners” but providing the networking fabric to interconnect them. This is a wise strategy; in classical networking Cisco’s strength was connecting diverse systems. In quantum, where different qubit technologies each have pros and cons, a unifying network could allow heterogeneous quantum processors to collaboratively tackle problems.

This full-stack vision is backed by significant investment. Cisco opened a dedicated Cisco Quantum Labs facility in Santa Monica and published a detailed architecture blueprint in an academic paper (I’ll examine this shortly). The lab’s mandate is to prototype everything from the physical layer up to applications, bridging theory and practical implementations. So let’s break down the key components Cisco is building across software, hardware, and architecture – and how they fit together into a “quantum data center” vision.

Hardware Foundations: Entanglement Chips and Silicon Spin-Photon Qubits

Any quantum network requires a foundation of specialized hardware to create and distribute entanglement. Cisco’s approach starts with a quantum network entanglement chip – a photonic integrated circuit that generates entangled photon pairs at high rate. First unveiled in 2025, this chip is a breakthrough in performance and practicality:

- Telecom-band operation: It produces entangled photons at standard 1550 nm telecom wavelengths, meaning it can leverage existing fiber optic infrastructure for long-distance quantum links.

- Room-temperature PIC: The device is a miniaturized photonic integrated circuit (PIC) that works at room temperature, avoiding the need for complex cryogenics and easing deployment in real systems.

- High brightness: The chip generates on the order of 10⁶ high-fidelity entangled pairs per second per channel, and up to 200 million pairs per second in total. This makes it one of the brightest chip-scale entanglement sources to date.

- Low power: It consumes <1 mW of power, remarkably low for the rate of entanglement produced.

- High fidelity: It can produce entangled pairs with fidelity as high as ~99% (under certain conditions), providing low-noise entangled states for quantum operations.
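The quoted figures support some quick arithmetic. The sketch below assumes (the source does not say so) that the <1 mW budget covers the whole chip running at its 200-million-pair aggregate rate, and it treats "on the order of 10⁶" as exactly 10⁶, so the results are order-of-magnitude only.

```python
# Back-of-the-envelope arithmetic on the chip figures quoted above.
# Assumption: the <1 mW budget applies to the whole chip at the
# 200-million-pair aggregate rate (not stated explicitly in the source).

PAIRS_PER_CHANNEL = 1e6   # ~10^6 pairs/s per channel (order of magnitude)
PAIRS_TOTAL = 200e6       # up to 200 million pairs/s per chip
POWER_W = 1e-3            # upper bound: < 1 mW

implied_channels = PAIRS_TOTAL / PAIRS_PER_CHANNEL
energy_per_pair_j = POWER_W / PAIRS_TOTAL

print(f"implied parallel channels: ~{implied_channels:.0f}")
print(f"energy per pair: < {energy_per_pair_j * 1e12:.1f} pJ")
```

Under those assumptions the aggregate figure implies roughly 200 parallel channels and an energy cost below about 5 pJ per pair.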

Cisco achieved this by leveraging spontaneous four-wave mixing in III-V semiconductor waveguides integrated on a silicon photonics platform.

In essence, laser pulses in the chip’s nonlinear waveguides yield pairs of photons that are entangled (e.g., in polarization or time-bin). By integrating many such sources on one chip (an array of waveguide sources), Cisco can massively multiplex the generation of entanglement. Professor Galan Moody of UCSB, Cisco’s collaborator, noted that integrated photonics allows many sources on one device, and with fiber packaging and electronics, “a single device can boost entanglement rates for many users” on the network. In other words, the entanglement chip can be scaled to serve multiple node pairs in parallel – a critical need for a quantum data center where many qubit pairs may need entanglement simultaneously.
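The pair-generation step obeys energy conservation: in four-wave mixing two pump photons are annihilated and a signal and an idler photon are created, so 1/λp1 + 1/λp2 = 1/λs + 1/λi. The sketch below computes the idler wavelength from that textbook relation; the 1550 nm pump and 1545 nm signal are assumed example values, not the chip's actual operating points.

```python
def idler_wavelength_nm(pump1_nm: float, pump2_nm: float, signal_nm: float) -> float:
    """Idler wavelength fixed by energy conservation in four-wave mixing:
    1/lp1 + 1/lp2 = 1/ls + 1/li (photon energy is proportional to 1/wavelength)."""
    inv_idler = 1.0 / pump1_nm + 1.0 / pump2_nm - 1.0 / signal_nm
    if inv_idler <= 0:
        raise ValueError("no physical idler for these wavelengths")
    return 1.0 / inv_idler

# Assumed example: degenerate pump (both pump photons at 1550 nm).
pump = 1550.0
signal = 1545.0
idler = idler_wavelength_nm(pump, pump, signal)
print(f"signal {signal} nm -> idler {idler:.2f} nm")
```

With a degenerate pump, signal and idler land symmetrically in frequency about the pump; passing two different pump wavelengths covers dual-pump, non-degenerate configurations.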

Why does this matter? High-rate entangled photon sources are essential to networking quantum processors. They are the analog of transceivers in classical networks. Cisco’s chip provides the “entangled bits” (ebits) that remote qubits will consume to perform distributed quantum operations. And by operating at telecom wavelengths and room temperature, these sources can potentially be deployed in standard data centers and network fiber, which is a huge practical advantage. Competing approaches like certain quantum dot or nonlinear crystal sources often face tradeoffs in brightness or require non-telecom wavelengths and conversion. Cisco’s choice to invest in photonic integrated circuits (PICs) for entanglement generation aligns with a strategy of scalability and interoperability – make quantum links as plug-and-play as possible with today’s telecom infrastructure.

Beyond photons, the other side of the hardware equation is quantum memory/interfaces – the qubits that will be connected by these photons. Many quantum processors (e.g. superconducting qubits) do not natively emit photons, so bridging different systems requires transducers or special interface qubits. Here Cisco is exploring multiple options. One approach highlighted in Cisco’s research is a dual-frequency entanglement source using rubidium vapor cells. This produces entangled photon pairs where one photon is near-infrared (matching common quantum memory resonances, like atomic or ion qubits) and the other is telecom. Effectively, this acts as a built-in frequency bridge: the near-IR photon can directly interact with or be stored by an atomic qubit, while its telecom partner travels through fiber. Such sources could solve a “last-mile” problem of connecting a trapped-ion or neutral-atom processor (typically operating at visible/near-IR wavelengths) with the long-distance fiber network without needing separate quantum frequency converters.

Another promising hardware platform – coming from academia but highly relevant – is the use of silicon color centers as spin-photon qubits. In September 2025, researchers at Simon Fraser University (SFU) demonstrated an electrically triggered spin-photon device built on a silicon color center known as the T-center, achieving the first-ever electrically injected single-photon source in silicon. T-centers are atomic-scale defects in silicon that act as quantum bits (with an electron spin) and emit photons at telecom wavelengths (~1326 nm). Previously these T-center qubits were controlled optically with lasers, but the new device integrates diode-like electrical control to excite the color center and emit single photons on demand. This is a significant breakthrough: it means quantum emitters can be built on silicon chips and triggered by electrical pulses, much like classical LEDs or laser diodes. The SFU team showed they could fabricate nano-photonic devices where T-centers are coupled to cavities and both optical and electrical control are combined. According to lead author Michael Dobinson, this opens the door to many applications in scalable quantum processors and networks: “the optical and electrical operation combined with the silicon platform makes this a very scalable and broadly applicable device”.

For Cisco’s vision, technologies like T-centers could be game-changers in the long run. Imagine quantum processors built on silicon that have built-in photon interfaces for networking – effectively quantum NICs (network interface cards) at the chip level. Cisco’s research already talks about developing quantum NICs that connect processors to the network and handle conversion between “computing frequencies and networking frequencies”. A silicon defect qubit emitting telecom photons is a natural embodiment of that idea (spin = processing qubit, photon = flying qubit). While T-centers are still in early research (the SFU demonstration, though promising, needs to be scaled up and integrated into actual qubit arrays), they underscore a broader point: the hardware platform for quantum networking will likely leverage photonics and semiconductor tech for scalability. Whether through solid-state defects like T-centers or other transducers, the ultimate goal is to seamlessly interface stationary qubits with photonic links.

In summary, Cisco’s hardware stack consists of entanglement sources (photon pair generators), quantum switches and optical interconnects, and network interface devices at the quantum nodes. Their entanglement PIC and experiments with dual-frequency sources address the distribution of entangled photons at scale. Emerging tech like silicon spin-photon qubits points toward future quantum nodes that could directly emit/receive photons. Both are needed: photons to carry entanglement across the data center or internet, and quantum memories or processors to use that entanglement for computing.

Software Stack: Quantum Compiler and Networked Applications

On the software side, Cisco has developed a prototype network-aware quantum compiler and two showcase applications (Quantum Alert and Quantum Sync) that run on its unified quantum networking stack. These represent the higher layers of the stack – the “brain” that decides how to use the quantum network to achieve either computational or classical outcomes.

The Network-Aware Quantum Compiler

Distributed quantum computing introduces new challenges: one must decide how to split a quantum algorithm across multiple processors and when/how to communicate (entangle) between them. Cisco’s Quantum Compiler is described as the industry’s first compiler explicitly designed for networked quantum processors. It takes a quantum circuit and partitions it among different QPUs (quantum processing units) in a data center, scheduling the necessary entanglement generation and distribution to link those parts when needed. In essence, it’s a compiler that knows about the network topology and latency, and it can insert quantum teleportation operations or other remote gate operations into the program to coordinate the distributed execution.

A key feature is support for distributed quantum error correction (QEC). This is an “industry first” claim by Cisco – existing compilers target a single device and cannot encode a quantum error-correcting code across multiple machines. Cisco’s prototype can compile an error-corrected logical qubit or circuit that spans networked QPUs. For example, if one wanted to implement a large error-correcting code that no single processor can hold, the compiler could allocate check qubits to different nodes and use entanglement to perform syndrome measurements across the network. This is highly ambitious (and we’ll return to the challenges later), but it’s a necessary step if distributed quantum computing is to eventually tackle algorithms requiring error-corrected qubits.
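To make the idea concrete, here is a toy sketch, not Cisco's compiler: a bit-flip repetition code whose data qubits are split across two QPUs. The assumptions are mine: check i compares data qubits i and i+1, its ancilla sits on the same QPU as qubit i, and every remote CNOT costs two EPR pairs, following the gate-teleportation scheme described for Cisco's scheduler. The function names are hypothetical.

```python
from typing import List, Sequence, Set

def syndrome(n_data: int, errors: Set[int]) -> List[int]:
    """Bit-flip repetition code: check i measures Z_i Z_{i+1}, so it fires
    when exactly one of data qubits i and i+1 is flipped."""
    return [int((i in errors) != (i + 1 in errors)) for i in range(n_data - 1)]

def cross_node_checks(node_of: Sequence[int]) -> List[int]:
    """Checks whose two data qubits live on different QPUs. With the ancilla
    assumed to sit next to data qubit i, each such check needs one remote
    CNOT (to data qubit i+1) per syndrome round."""
    return [i for i in range(len(node_of) - 1) if node_of[i] != node_of[i + 1]]

def epr_pairs_per_round(node_of: Sequence[int], pairs_per_remote_gate: int = 2) -> int:
    # Default of 2 is an assumption taken from the gate-teleportation description.
    return len(cross_node_checks(node_of)) * pairs_per_remote_gate

# Hypothetical layout: a 5-qubit repetition code split 3/2 across two QPUs.
layout = [0, 0, 0, 1, 1]
print(syndrome(5, {3}))             # [0, 0, 1, 1]
print(cross_node_checks(layout))    # [2]
print(epr_pairs_per_round(layout))  # 2
```

Only checks that straddle the QPU boundary consume entanglement, so a layout that minimizes boundary-crossing checks directly lowers the per-round EPR budget. A 2D surface code split across nodes would have on the order of d such checks along the cut rather than one.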

How does the compiler/orchestrator work under the hood? Cisco’s research paper outlines a two-step process: circuit partitioning followed by network scheduling. In the partitioning step, the logical qubits of a program are mapped to physical qubits on specific QPUs, aiming to minimize the number of “remote” operations (because inter-node gates will be slower and noisier than local gates). This can be framed as a graph partitioning problem: qubits that interact frequently should ideally be on the same QPU, to reduce costly between-QPU gates. The compiler uses heuristics and known algorithms to find a qubit assignment that, for instance, keeps clusters of interacting qubits together and limits the need for entanglement between clusters. It can also account for hardware constraints (like if a QPU has limited connectivity or if certain interactions are easier intra-rack vs inter-rack). One interesting aspect: in a hybrid network (e.g. with racks), the compiler can decide which QPU goes into which rack to balance the use of short-range vs long-range entanglement, formulating it as an optimization problem.
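Cisco's exact heuristics aren't spelled out here, so the following is a generic sketch of the partitioning objective: build a weighted interaction graph from the circuit's two-qubit gates, count the gates cut by a qubit-to-QPU assignment, and improve the assignment with Kernighan-Lin-style pairwise swaps (a swap keeps each QPU's qubit count fixed). The helper names are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Tuple

Gate = Tuple[int, int]  # a two-qubit gate, identified by its qubit pair

def interaction_weights(circuit: List[Gate]) -> Counter:
    """Count two-qubit gates per unordered qubit pair (graph edge weights)."""
    return Counter(tuple(sorted(g)) for g in circuit)

def remote_gate_count(weights: Counter, assign: Dict[int, int]) -> int:
    """Gates whose two qubits sit on different QPUs (the cut weight)."""
    return sum(w for (a, b), w in weights.items() if assign[a] != assign[b])

def improve_by_swaps(circuit: List[Gate], assign: Dict[int, int]) -> Dict[int, int]:
    """Greedy pairwise-swap local search; stops when no swap lowers the cut."""
    weights = interaction_weights(circuit)
    assign = dict(assign)
    best = remote_gate_count(weights, assign)
    improved = True
    while improved:
        improved = False
        for a, b in combinations(sorted(assign), 2):
            if assign[a] == assign[b]:
                continue
            assign[a], assign[b] = assign[b], assign[a]
            cost = remote_gate_count(weights, assign)
            if cost < best:
                best, improved = cost, True
            else:
                assign[a], assign[b] = assign[b], assign[a]  # undo the swap
    return assign

# Two tightly coupled pairs joined by a single gate, scrambled start.
circuit = [(0, 1)] * 3 + [(2, 3)] * 3 + [(1, 2)]
start = {0: 0, 2: 0, 1: 1, 3: 1}
final = improve_by_swaps(circuit, start)
print(final, remote_gate_count(interaction_weights(circuit), final))
```

Here the search regroups {0, 1} and {2, 3}, leaving a single remote gate. Local search like this can stall in local optima, which is why production partitioners typically use stronger multilevel or optimization-based methods.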

After partitioning, the network scheduler plans the execution of the circuit step-by-step, orchestrating when entangled pairs must be generated and which optical switch configuration is needed to connect the participating QPUs for a given operation. Cisco uses a scheme of gate teleportation for remote two-qubit gates. In gate teleportation, two entangled pairs (EPR pairs) are consumed to enact an effective gate between qubits on different nodes. The scheduler ensures that these EPR pairs are generated just-in-time for the gate – an “on-demand” entanglement approach. It sends instructions to the quantum network hardware (the switches, sources, etc.) via a classical control system to establish an entanglement link between the needed nodes, performs the remote gate (teleportation protocol), then moves on to the next set of operations. All of this happens under the coordination of a classical central controller that knows the network topology and monitors entanglement attempts. The output of the compiler/scheduler is essentially a list of machine instructions for the networked quantum data center – which qubits to entangle, which switches to route where, which measurements to perform for teleportation corrections, etc., in sequence.
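The scheduler's output can be pictured as a flat instruction stream. The sketch below is hypothetical throughout (instruction names, the qubit-to-QPU map, and the two-pairs-per-remote-gate default taken from the description above): each local gate is emitted as-is, while each remote gate is preceded by a switch configuration and just-in-time EPR generation and followed by teleportation corrections.

```python
from typing import Dict, List, Tuple

Gate = Tuple[str, int, int]  # (gate name, first qubit, second qubit)

def schedule(circuit: List[Gate], assign: Dict[int, int],
             pairs_per_remote_gate: int = 2) -> List[str]:
    """Emit a hypothetical instruction stream. Remote gates get just-in-time
    entanglement: the switch path and EPR pairs are requested immediately
    before the gate that consumes them."""
    program: List[str] = []
    for name, q1, q2 in circuit:
        n1, n2 = assign[q1], assign[q2]
        if n1 == n2:
            program.append(f"LOCAL {name} q{q1},q{q2} @QPU{n1}")
            continue
        program.append(f"SWITCH connect QPU{n1}<->QPU{n2}")
        for k in range(pairs_per_remote_gate):
            program.append(f"EPR gen QPU{n1}<->QPU{n2} #{k}")
        program.append(f"REMOTE {name} q{q1}@QPU{n1},q{q2}@QPU{n2} (teleport)")
        program.append(f"CORRECT q{q1},q{q2} (Pauli fix-ups from measurement outcomes)")
    return program

if __name__ == "__main__":
    for line in schedule([("CX", 0, 1), ("CX", 1, 2)], {0: 0, 1: 0, 2: 1}):
        print(line)
```

A real orchestrator would also parallelize independent gates, retry failed heralding attempts, and account for memory decoherence while pairs wait; none of that is modeled here.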

Cisco made the Quantum Compiler prototype available for experimentation at their 2025 Quantum Summit. For organizations planning quantum infrastructure, such a tool is useful for “right-sizing” a quantum data center – figuring out how many nodes are needed and of what type for a given algorithm. For example, a pharmaceutical algorithm for drug discovery might be far too large for any single quantum computer in the next decade, but with a compiler one could estimate how it might be distributed across, say, 4 superconducting processors and 2 photonic processors networked together. The compiler can identify bottlenecks (like entanglement rate requirements between certain nodes) and thus inform the design of the network.
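A first-pass sizing estimate of the kind described here needs only a few numbers. The sketch assumes remote gates execute one after another and that entanglement generation is the bottleneck; every input value is hypothetical (six 100-qubit QPUs loosely echo the 4 + 2 example above), and `right_size` is an illustrative name.

```python
import math

def right_size(logical_qubits: int, qubits_per_qpu: int, remote_gates: int,
               pairs_per_remote_gate: int, epr_rate_hz: float) -> dict:
    """Crude sizing: QPU count from capacity, plus the wall-clock time spent
    waiting for entanglement if remote gates run strictly in sequence."""
    qpus = math.ceil(logical_qubits / qubits_per_qpu)
    pairs_needed = remote_gates * pairs_per_remote_gate
    entanglement_time_s = pairs_needed / epr_rate_hz
    return {"qpus": qpus, "pairs_needed": pairs_needed,
            "entanglement_time_s": entanglement_time_s}

# Hypothetical: 600-qubit circuit, 100-qubit QPUs, 10,000 remote gates,
# 2 EPR pairs per remote gate, 1,000 usable (heralded) pairs/s.
print(right_size(600, 100, 10_000, 2, 1_000.0))
```

Doubling the usable EPR rate halves the entanglement wait, which is the kind of bottleneck such a tool is meant to surface.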

In summary, the network-aware compiler/orchestrator is the brains of the operation, translating high-level algorithms into actions on a distributed quantum fabric. It is a cornerstone of Cisco’s software stack and the glue between the application layer and the control/hardware layers.

Quantum Alert and Quantum Sync – Quantum Networking for Classical Applications

While the Quantum Compiler focuses on quantum algorithms, Cisco also demonstrated two applications that use the quantum network to solve classical problems in new ways – Quantum Alert and Quantum Sync. These are essentially proofs-of-concept that quantum networks can deliver immediate value for security and coordination, even before large-scale quantum computing is available.

Quantum Alert

Quantum Alert is an eavesdropper-detection system providing security guarantees rooted in physics. It uses entangled photons to detect any interception of communication. The principle is similar to quantum key distribution (QKD) in that any attempt by an eavesdropper to measure or tamper with the quantum signals will perturb them and be immediately noticeable.

In Cisco’s demo, entangled photon pairs are distributed through the network alongside normal data traffic; if a “bad actor” tries to snoop, the quantum state is disturbed and triggers an alarm. Because this leverages quantum physics (no interceptor can copy quantum states perfectly or measure without trace), it offers provable security beyond classical encryption.
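The physics behind this can be checked with a small Monte Carlo of the textbook intercept-resend attack on entangled pairs (in the style of BBM92). This is not Cisco's Quantum Alert protocol, and the alarm threshold is an arbitrary assumption. Alice and Bob each measure their half of a |Φ+⟩ pair in a random Z or X basis; with matching bases their bits always agree. An eavesdropper who measures Bob's photon in a random basis and resends it corrupts about 25% of the matched-basis results.

```python
import random

ALARM_THRESHOLD = 0.05  # hypothetical; a real link would calibrate to its noise floor

def run_link(n_pairs: int, eavesdropper: bool = False, seed: int = 0) -> float:
    """Return the error rate on matched-basis rounds for ideal |Phi+> pairs."""
    rng = random.Random(seed)
    matched = errors = 0
    for _ in range(n_pairs):
        a_basis, b_basis = rng.choice("ZX"), rng.choice("ZX")
        a_bit = rng.randint(0, 1)
        # After Alice's measurement, Bob's photon is the a_basis eigenstate a_bit.
        state_basis, state_bit = a_basis, a_bit
        if eavesdropper:
            e_basis = rng.choice("ZX")
            if e_basis != state_basis:
                # Wrong-basis measurement randomizes; Eve resends what she saw.
                state_basis, state_bit = e_basis, rng.randint(0, 1)
        b_bit = state_bit if b_basis == state_basis else rng.randint(0, 1)
        if a_basis == b_basis:
            matched += 1
            errors += a_bit != b_bit
    return errors / matched if matched else 0.0

def alarm(error_rate: float) -> bool:
    return error_rate > ALARM_THRESHOLD

clean = run_link(20_000)
tapped = run_link(20_000, eavesdropper=True)
print(f"clean: {clean:.3f} alarm={alarm(clean)}  tapped: {tapped:.3f} alarm={alarm(tapped)}")
```

On an ideal link the matched-basis error rate is zero; with the eavesdropper it sits near 0.25 and trips the alarm. Real links have a nonzero noise floor, so the threshold must sit above it.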

Cisco envisions Quantum Alert as a complementary layer to classical encryption (like post-quantum cryptography), guarding against “harvest-now, decrypt-later” attacks by immediately signaling if a line is compromised. In practice, Quantum Alert could be deployed between data centers or branches – any critical link where intrusion detection is paramount. It’s essentially a quantum-based IDS (intrusion detection system) that doesn’t rely on computational assumptions, only on the laws of quantum mechanics.

Quantum Sync

Quantum Sync is a decision synchronization application that uses entanglement to coordinate outcomes across distributed systems without exchanging messages. Cisco describes it with a fun analogy: Alice and Bob each have a box with a quantum-correlated coin. When they open their boxes, if Alice finds “Heads,” Bob is guaranteed to find “Tails,” and vice versa. In other words, their coin flips are perfectly anti-correlated even though they didn’t communicate to decide who gets heads and who gets tails.

This kind of shared randomness or correlated decision making can be useful in scenarios like high-frequency trading or distributed databases, where two parties need to make consistent split-second decisions without the luxury of network round trips. For example, two trading centers might need to decide which one executes a trade to avoid double-selling – normally they’d send messages back and forth which introduces timing delays and uncertainty. With entanglement, they could agree in advance that “whoever gets heads will execute trade X, the other will refrain,” and by measuring their entangled qubits at the agreed moment, they instantly get coordinated (yet random) outcomes. The benefit is zero-latency coordination after initial entanglement setup.
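The decision rule can be simulated classically. The sketch below reproduces the measurement statistics of an ideal, noiseless spin singlet (for in-plane measurement angles, P(outcomes differ) = cos²(Δθ/2)) and applies the pre-agreed "heads executes" rule. It uses a classical random number generator to mimic those statistics, so it is a simulation rather than a source of real entanglement, and all names are hypothetical.

```python
import math
import random

def measure_singlet(theta_a: float, theta_b: float, rng: random.Random):
    """Sample (+1/-1) outcomes for an ideal singlet measured along in-plane
    angles theta_a, theta_b: E[a*b] = -cos(dtheta), P(differ) = cos^2(dtheta/2)."""
    a = rng.choice((+1, -1))  # each site alone sees a fair coin
    p_differ = math.cos((theta_a - theta_b) / 2) ** 2
    b = -a if rng.random() < p_differ else a
    return a, b

def decide(outcome: int) -> str:
    # Pre-agreed rule: "heads" (+1) executes trade X, "tails" refrains.
    return "EXECUTE" if outcome == +1 else "REFRAIN"

rng = random.Random(42)
rounds = [measure_singlet(0.0, 0.0, rng) for _ in range(1000)]
conflicts = sum(decide(a) == decide(b) for a, b in rounds)
print("rounds where both or neither executed:", conflicts)  # 0
```

With equal measurement angles exactly one site executes in every round, while each site on its own sees a fair coin. Tilting one measurement angle away from the other introduces conflicts, so the measurement settings must be agreed in advance.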

Cisco’s Quantum Sync demo used a network simulator with real quantum protocols to show how correlated decisions could be implemented. This concept is related to quantum-enabled protocols for distributed consensus, leader election, or clock synchronization – all critical functions in distributed systems that normally require communication, which quantum correlations can reduce.

These two demos run on the same unified Cisco stack and hardware. They highlight an important point: quantum networks aren’t just for quantum computing – they can enhance classical systems too. Secure communications (via quantum alerting or QKD), ultra-precise timing (using entanglement to synchronize clocks), and distributed decision making (quantum sync) are near-term applications often cited as low-hanging fruit for quantum networking. Cisco is wise to pursue these because they provide value even if quantum computing progress is slower than hoped. It’s a way to get ROI from a quantum network now, by upgrading classical infrastructure with new capabilities.

To implement these, the heavy lifting is in the protocols and control software (the Control layer). For Quantum Alert, the system must manage entangled photon distribution and monitor correlations to detect anomalies. For Quantum Sync, it must distribute entangled pairs to the parties at the right rate and ensure measurement coordination.

Cisco’s unified software stack is built to accommodate such applications on top of the same entanglement distribution fabric. This demonstrates the flexibility of a full-stack approach: once you have the core ability to distribute entanglement on demand, you can build various services on top by adding the appropriate logic.

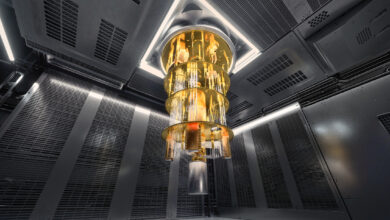

Quantum Data Center Architecture: From Clos Networks to Distributed QPU Clouds

Central to Cisco’s vision is the notion of a Quantum Data Center (QDC) – essentially a modular cluster of quantum processors connected by a photonic network, analogous to a classical data center of servers connected by switches. In the QDC, many small or medium-scale QPUs can act together as a larger quantum computer. But how should one design the network fabric of a quantum data center? Cisco’s researchers turned to classical data center networking for inspiration, exploring several topology designs: Clos (fat-tree), BCube, DCell, HyperX, etc.

In classical terms, a Clos network is a multi-layer switch topology that provides high bandwidth and full pairwise connectivity using scalable stages of switches; the fat-tree is the folded Clos variant most common in data centers. BCube and DCell are examples of server-centric networks where each server has multiple network ports and connectivity is achieved by direct links between servers and small switch units, often in a recursively scalable manner. Cisco studied both switch-centric designs (like Clos/fat-tree, HyperX) and server-centric designs (like BCube, DCell) for quantum networking.

Switch-Centric (Clos/Fat-tree)

In Cisco’s proposal, quantum processors are grouped in racks, each rack having a top-of-rack (ToR) optical switch that links the QPUs within that rack. The racks are then interconnected via higher-level optical switches in a fat-tree hierarchy. This is depicted in Cisco’s architecture as a Clos topology (Figure 3a in their paper).

Importantly, the switches in the network are not ordinary data switches – they must be quantum-capable switches equipped with hardware for entanglement generation and routing. For example, a ToR switch might have built-in Bell-state measurement (BSM) modules for entangling qubits within the rack, or entangled photon sources that feed into the rack’s quantum channels.

Cisco’s design uses two wavelength regimes: within a rack (short distances, a few meters of fiber) they operate at the qubit’s native wavelengths (often visible/near-infrared for many platforms), whereas between racks (longer distances), they operate at telecom wavelength for low loss. This means a ToR switch serves as a boundary where wavelength conversion might happen: a photon coming from a qubit (say at 800 nm) is converted to 1550 nm to travel to another rack, or alternatively, Cisco suggests using non-degenerate entanglement sources that directly produce one photon at NIR and its twin at telecom.

In other words, you could have an entangled photon pair source where one photon goes into the local rack (NIR, directly interacting with qubits) and the other photon goes out over fiber at 1550 nm. This clever approach avoids having to place quantum frequency converters at every interface (which are lossy and not yet highly efficient) – instead the entanglement source itself bridges the wavelength gap.
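The two-regime choice follows from fiber attenuation. The sketch applies η = 10^(−αL/10) with assumed, order-of-magnitude attenuation figures for silica fiber (about 3 dB/km near 800 nm versus about 0.2 dB/km at 1550 nm); it ignores conversion, coupling, and detector losses.

```python
def transmission(atten_db_per_km: float, length_m: float) -> float:
    """Fraction of photons surviving a fiber: eta = 10^(-alpha * L / 10)."""
    return 10 ** (-atten_db_per_km * (length_m / 1000.0) / 10)

# Assumed, illustrative attenuation figures for silica fiber.
NIR_DB_PER_KM = 3.0       # near 800 nm
TELECOM_DB_PER_KM = 0.2   # at 1550 nm

for length_m in (5, 500, 5_000):
    print(f"{length_m:>5} m  NIR: {transmission(NIR_DB_PER_KM, length_m):.3f}  "
          f"telecom: {transmission(TELECOM_DB_PER_KM, length_m):.3f}")
```

Over a few meters of intra-rack fiber both wavelengths are essentially lossless, but at kilometer scale most NIR photons are lost while telecom photons largely survive.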

Within a rack, Cisco leans towards using emitter-emitter or emitter-scatterer entanglement protocols (more on these protocols in the next section) because those can operate at the qubits’ native frequency and provide fast local entanglement. Between racks, they plan to use scatterer-scatterer protocols, which naturally fit with the idea of a non-degenerate photon pair source feeding two distant qubits. The rationale is to minimize overhead: intra-rack entanglement can be quick and high-fidelity (no conversion, short distance), whereas inter-rack entanglement might be slower or lower fidelity due to conversions or longer fiber. By doing as much as possible “locally” (within racks) and only resorting to the trickier inter-rack links when necessary, the network can handle moderate-sized jobs with minimal performance penalty. Essentially, it’s a hybrid strategy to optimize for the strengths of each scale.

Server-Centric (BCube/DCell)

In a server-centric quantum network, the quantum processors themselves have multiple ports and can take part in routing entanglement. Cisco illustrated this with a BCube-inspired design (Figure 3b). In a BCube, each QPU connects to a small switch (e.g., a 4-port switch), forming a basic unit (BCube0). Multiple such units are then connected via another layer of switches, and so on, in a recursively defined network. The key difference is that not every pair of QPUs has a direct optical path; entanglement between two distant QPUs may require hopping through intermediate QPUs acting as quantum repeaters. For example, to entangle QPU1 in cluster A with QPU2 in cluster B, QPU1 might first entangle with QPUX in cluster B (hop 1), then QPUX entangles with QPU2 (hop 2), and QPUX performs entanglement swapping (a Bell measurement on its qubits) to link QPU1 and QPU2 together. In this scenario, some QPUs carry the extra burden of entanglement swapping and routing – analogous to multi-hop routing in classical networks, but here the hops are quantum swaps.
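BCube's addressing makes the hop count easy to reason about: two servers hang off the same level-i switch exactly when their addresses differ only in digit i, so a shortest route fixes one differing digit per hop. The sketch below treats QPUs as BCube servers (an assumption for illustration) and estimates end-to-end fidelity by chaining Werner-state links with ideal entanglement swaps, F = F₁F₂ + (1 − F₁)(1 − F₂)/3. The 0.95 link fidelity is hypothetical.

```python
from typing import List, Tuple

Address = Tuple[int, ...]  # BCube server address: k+1 base-n digits

def bcube_path(src: Address, dst: Address) -> List[Address]:
    """Server-level route: correct one differing digit per hop, passing
    through the switch shared by addresses that differ only in that digit."""
    path, cur = [src], list(src)
    for i in range(len(src)):
        if cur[i] != dst[i]:
            cur[i] = dst[i]
            path.append(tuple(cur))
    return path

def swapped_fidelity(link_fidelities: List[float]) -> float:
    """Chain Werner-state links with ideal swaps: F = F1*F2 + (1-F1)*(1-F2)/3."""
    f = link_fidelities[0]
    for g in link_fidelities[1:]:
        f = f * g + (1 - f) * (1 - g) / 3
    return f

src, dst = (0, 0), (2, 3)  # BCube1 built from 4-port switches (n=4, k=1)
path = bcube_path(src, dst)
print("path:", path)  # [(0, 0), (2, 0), (2, 3)]
print("swapping QPUs:", path[1:-1])
print(f"end-to-end fidelity: {swapped_fidelity([0.95] * (len(path) - 1)):.4f}")
```

Each extra hop adds a swapping QPU and erodes fidelity, so heavily interacting QPUs are best placed at addresses that differ in as few digits as possible.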

Server-centric designs can be more cost-flexible (using many small switches rather than large ones) and allow incremental scaling by adding more nodes with some extra links. They also leverage the fact that quantum nodes can perform deterministic operations (like a controlled-NOT between two qubits it holds) as part of entanglement swapping, potentially with higher success than probabilistic photonic BSMs in a pure switch-centric design.

Cisco notes that in a data center setting, unlike in an open quantum internet, we have a global controller and a structured topology, which can make managing these repeater chains easier than the general case of long-distance quantum networks. For instance, because there may be many parallel paths between two QPUs in a BCube, the orchestrator could schedule simultaneous attempts on multiple paths to speed up entanglement, or choose the least busy path, etc.
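The benefit of parallel attempts is easy to quantify if paths are independent and each attempt succeeds with the same probability p: the chance that at least one of m paths heralds in a round is 1 − (1 − p)^m. The p = 0.01 below is hypothetical, and contention for shared switches is ignored.

```python
def p_any_success(p_single: float, paths: int) -> float:
    """Probability that at least one of `paths` independent attempts heralds."""
    return 1 - (1 - p_single) ** paths

def expected_rounds(p_single: float, paths: int) -> float:
    """Mean number of synchronized attempt rounds until the first success
    (geometric distribution with per-round success p_any_success)."""
    return 1 / p_any_success(p_single, paths)

for m in (1, 2, 4):
    print(f"{m} path(s): {expected_rounds(0.01, m):.1f} rounds on average")
```

For small p, m parallel paths cut the expected wait by nearly a factor of m.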

However, Cisco also acknowledges that a “full network-aware orchestrator” for a server-centric topology is quite complex and left for future work. In their current work, they focus on switch-centric (Clos) for running simulations and demonstrating the orchestrator, since managing the routing of entanglement through intermediate nodes (with all the necessary synchronization and resource allocation) adds another layer of complexity.

To compare these architectures, Cisco developed simulations to benchmark entanglement rates, latency, and fidelity for representative topologies. In the arXiv paper, they analyze metrics like average circuit execution time and network utilization on a Clos network under various job loads. They also tabulate resource counts for different topology families (e.g., how many switches, how many qubit-to-photon interfaces needed for Clos vs BCube for a given number of QPUs). The high-level finding is that one-to-one peer networks (simple point-to-point links) don’t scale well beyond a few nodes, whereas structured topologies can maintain reasonable entanglement rates as the number of QPUs grows by adding switching infrastructure. This mirrors classical results – a fully connected mesh becomes unwieldy past small N, so indirect networks are necessary. They also demonstrate that by using a modular hierarchical network, one can accommodate heterogeneous device technologies and still keep high fidelity by localizing as many operations as possible. The Clos network, for instance, allowed on-demand all-to-all connectivity via the optical switch fabric, and their scheduler could manage entanglement such that most remote gates used intra-rack links (higher fidelity), falling back to an inter-rack link only when unavoidable.
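The flavor of that resource comparison can be reproduced for the textbook versions of these topologies. The formulas below are the standard ones for a full mesh, a k-ary fat-tree, and BCube_k; mapping each host to a QPU and each switch to a quantum-capable switch is an assumption, and the numbers are not Cisco's tabulated results.

```python
def full_mesh_links(n_qpus: int) -> int:
    """Point-to-point links for all-to-all connectivity: N(N-1)/2."""
    return n_qpus * (n_qpus - 1) // 2

def fat_tree(k: int) -> dict:
    """Standard k-ary fat-tree (k even, k-port switches): k^3/4 hosts,
    k^2/2 edge + k^2/2 aggregation + k^2/4 core switches."""
    assert k % 2 == 0, "k must be even"
    return {"hosts": k**3 // 4, "switches": k * k // 2 + k * k // 2 + k * k // 4}

def bcube(n: int, k: int) -> dict:
    """BCube_k with n-port switches: n^(k+1) servers, k+1 levels of n^k
    switches, and k+1 network ports per server."""
    return {"hosts": n ** (k + 1), "switches": (k + 1) * n**k, "ports_per_host": k + 1}

print(full_mesh_links(16), fat_tree(4), bcube(4, 1))
```

At 16 QPUs a full mesh already needs 120 point-to-point links, whereas a 4-ary fat-tree reaches the same 16 hosts with 20 switches and BCube_1 with 4-port switches uses 8 switches, at the cost of two ports per QPU.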

Importantly, Cisco’s work emphasizes that these architectures aren’t mutually exclusive – a real quantum data center might employ a hybrid. For instance, clusters of QPUs connected server-centrically, with those clusters themselves interconnected by a higher-level switch-centric backbone. The best design may depend on the state of technology (e.g., if quantum switches are harder to scale than using QPUs as repeaters, or vice versa). By prototyping various approaches, Cisco is exploring the trade space of cost, complexity, and performance for quantum data center networking.

Entanglement Distribution Protocols: Emitter vs. Scatterer Tradeoffs

To get qubits entangled across a network, several distinct quantum link protocols can be used. Cisco’s work classifies three main entanglement generation schemes by how the end nodes’ “communication qubits” operate: Emitter-Emitter, Emitter-Scatterer, and Scatterer-Scatterer. Each has pros and cons in terms of success probability, fidelity, and hardware requirements. Understanding these is key to appreciating Cisco’s design choices (as we saw, they assign different protocols to intra-rack vs inter-rack links).

Emitter-Emitter protocol

Both qubits emit photons which are then interfered at a middle station (e.g. a beam splitter with a Bell-state measurement module). If the two photons arrive simultaneously and certain detector clicks occur, an entangled state between the qubits is heralded. This is a widely studied method (used in many NV-center and ion trap experiments): each quantum node must be able to produce a photon entangled with its qubit (e.g., by spontaneous emission or Raman transition), and those photons must interfere indistinguishably. The upside is it’s relatively simple conceptually and symmetric.

The downside is the success probability is typically low – fundamentally, linear optics BSM with two single photons can succeed at most 50% of the time even under ideal conditions (and that’s without considering losses). Equation (10) in Cisco’s paper gives the heralding success probability in a basic emitter-emitter setup: it’s on the order of η₁η₂/2, where η are the transmission probabilities from each emitter to the detector. Loss directly reduces success, and even a single-photon detection can sometimes be a false herald if one photon was lost, leading to a mixed (less entangled) state. They note that if a photon is lost, the single click event produces a “noisy Bell state” (partial entanglement). Time-bin or polarization encoding can eliminate some false positives and reach higher fidelity at the expense of needing two-photon coincidence detection. In any case, emitter-emitter requires precise photon arrival timing (optical path lengths equal to within a photon wavelength) and simultaneous emission from both sides.

This can be challenging as the network scales; path stabilization and synchronization of many sources becomes non-trivial. Cisco’s analysis indeed points out that multi-photon synchronization demands can be a limitation of the emitter-emitter scheme. The benefit of emitter-emitter is that it doesn’t need an extra photon source – the qubits themselves provide the photons – and it doesn’t require one node to actively receive a photon (both just send). Cisco leverages this protocol for short intra-rack links where high speed and not having extra sources is convenient, and where losses are minimal so success rates can be acceptable.
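Plugging numbers into the η₁η₂/2 scaling shows why this protocol suits short links. The sketch uses hypothetical efficiencies (10% photon collection, 90% detector efficiency, 5 m of fiber per arm at an assumed 3 dB/km for NIR light, 1 MHz attempt rate) and treats attempts as independent.

```python
def fiber_eta(length_m: float, atten_db_per_km: float) -> float:
    """Fiber transmission: 10^(-alpha * L / 10)."""
    return 10 ** (-atten_db_per_km * length_m / 1000 / 10)

def p_emitter_emitter(eta1: float, eta2: float) -> float:
    """Heralding probability ~ eta1*eta2/2: both photons must arrive, and a
    linear-optics Bell-state measurement succeeds at most half the time."""
    return eta1 * eta2 / 2

def link_rate_hz(attempt_rate_hz: float, p_success: float) -> float:
    """Mean heralded-pair rate if attempts repeat independently."""
    return attempt_rate_hz * p_success

# Hypothetical: collection 0.10, 5 m of NIR fiber per arm, detector 0.90.
eta = 0.10 * fiber_eta(5, 3.0) * 0.90
p = p_emitter_emitter(eta, eta)
print(f"per-arm eta={eta:.4f}, p={p:.2e}, rate={link_rate_hz(1e6, p):.0f} pairs/s")
```

The collection efficiency enters squared, so at intra-rack distances it dominates the rate far more than the fiber does.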

Emitter-Scatterer protocol

One qubit emits a photon, the other qubit interacts with (scatters) that photon, and a subsequent detection heralds entanglement. In this setup, one end (the emitter) must have an emissive qubit; the other end (scatterer) might be a qubit that can reflect or absorb a photon in a state-dependent way.

A simplified picture: the emitter qubit is prepared in a superposition and emits a photon entangled with its state. That photon travels to the second qubit, which might be in a superposition of “transparent” and “absorbing” states (as in a cavity QED system, or a spin that flips the photon’s phase if it is in state |1>). By the time the photon reaches a detector beyond the second qubit, it carries information that entangles the two qubits. Because the second qubit acts as a scatterer, the photon’s outcome depends on that qubit’s state, which is what entangles them. Notably, only one photon is in flight here, not two, which greatly eases synchronization. As Cisco’s paper states, “only a single photonic qubit is transmitted… fewer requirements for stabilization and synchronization… emitter-scatterer is more advantageous when multiple photonic qubits pose significant challenges.”

The success probability of this scheme scales roughly as η, the chance that the emitted photon is detected after interacting with the scatterer. This can exceed emitter-emitter’s η₁η₂/2 when a single path is much more efficient than two. One photon’s transmission η replaces the product η₁η₂, and the factor of 1/2 from the two-photon BSM drops out.
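To make the comparison concrete, the two order-of-magnitude expressions can be evaluated side by side. This sketch encodes only the scaling forms quoted above, not Cisco’s full model from the paper. The 3 dB loss is a hypothetical value. Giving the single emitter-scatterer path the same loss as one emitter-emitter arm is also an assumption. Under that assumption the ratio is simply 2/η.

```python
def db_to_eta(loss_db: float) -> float:
    """Convert a loss in dB into a transmission probability."""
    return 10 ** (-loss_db / 10)


def p_emitter_emitter(eta1: float, eta2: float) -> float:
    """~eta1*eta2/2: both photons must arrive, and the linear-optics BSM caps success at 1/2."""
    return eta1 * eta2 / 2


def p_emitter_scatterer(eta: float) -> float:
    """~eta: only the single emitted photon has to reach the detector after scattering."""
    return eta


# Hypothetical: every photon path (each emitter-emitter arm, and the single
# emitter-scatterer path) sees 3 dB of loss.
eta = db_to_eta(3.0)
ee = p_emitter_emitter(eta, eta)
es = p_emitter_scatterer(eta)
print(f"per-attempt success: emitter-emitter ~{ee:.3f}, emitter-scatterer ~{es:.3f}")
```

At 3 dB (η ≈ 0.5) the single-photon scheme comes out roughly four times more likely to herald per attempt. If the two geometries have different loss budgets, the gap changes accordingly.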

Additionally, Cisco notes that emitter-scatterer can achieve nominally unity fidelity of the heralded entangled state (aside from detector dark counts). This is because, in an ideal scenario, any detection corresponds to a successful entanglement with no false positives – the presence of the photon at the detector implies the whole protocol went as intended (if the scatterer failed to entangle, presumably the photon wouldn’t be detected with the correct signature).

Practically, emitter-scatterer might be realized in systems such as a trapped atom whose emitted photon is caught by another atom’s cavity, or a quantum dot whose photon is absorbed by another quantum dot. Cisco sees this protocol as especially useful when one side is more passive. It might be needed in some server-centric cases, or where one device cannot easily produce photons. However, emitter-scatterer is not compatible with all photonic encodings. The paper notes that it doesn’t work with simple Fock (number) basis photons, so they consider time-bin encoding for it instead.

Scatterer-Scatterer protocol

Neither qubit emits a photon; instead, separate entangled photon pairs are sent to both qubits which then scatter those photons, and a joint measurement links the two qubits. This is the most complex but also in some ways the most powerful approach.

Cisco’s design of scatterer-scatterer uses two entangled-photon sources producing two pairs of photons. Imagine source 1 creates entangled photons A (NIR) and B (telecom), and source 2 creates entangled photons C (NIR) and D (telecom). Photon A goes to qubit 1 (to interact with it), photon C goes to qubit 2, and photons B and D go to a BSM station in the middle. Everything has to succeed: both qubits scatter their respective photons (which are then detected or cause a state change), and the BSM on photons B and D succeeds. If it does, qubits 1 and 2 end up entangled. Essentially, this is entanglement swapping in which the qubits themselves take part in the swap, rather than intermediate quantum memories.
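The swap at the heart of this scheme can be checked with a few lines of state-vector arithmetic. The sketch is idealized: it assumes lossless paths and perfect sources and detectors. It models only the photonic swap and assumes the scattering step maps the A and C photons’ entanglement perfectly onto qubits 1 and 2. That mapping is a simplification, not Cisco’s model. Heralding on the Φ⁺ outcome of the Bell measurement on B and D leaves A and C in Φ⁺ with probability 1/4. A linear-optics BSM can identify two of the four Bell outcomes, which is where the 50% ceiling comes from.

```python
import math
from itertools import product

BITS = (0, 1)


def phi_plus():
    """|Phi+> = (|00> + |11>)/sqrt(2) as {(bit1, bit2): amplitude}, real amplitudes."""
    s = 1 / math.sqrt(2)
    return {(a, b): (s if a == b else 0.0) for a, b in product(BITS, repeat=2)}


def swap_on_phi_plus_outcome():
    """Ideal swap: pairs (A,B) and (C,D), Bell measurement on B and D.

    Returns (probability of the Phi+ outcome, fidelity of the A-C state with Phi+)."""
    ab, cd, bsm = phi_plus(), phi_plus(), phi_plus()
    # Joint amplitudes of |Phi+>_AB (x) |Phi+>_CD, indexed by (a, b, c, d)
    joint = {(a, b, c, d): ab[(a, b)] * cd[(c, d)] for a, b, c, d in product(BITS, repeat=4)}
    # Project B and D onto Phi+ (amplitudes are real, so no complex conjugation is needed)
    residual = {
        (a, c): sum(bsm[(b, d)] * joint[(a, b, c, d)] for b, d in product(BITS, repeat=2))
        for a, c in product(BITS, repeat=2)
    }
    prob = sum(v * v for v in residual.values())
    state_ac = {k: v / math.sqrt(prob) for k, v in residual.items()}
    target = phi_plus()
    fidelity = sum(target[k] * state_ac[k] for k in target) ** 2
    return prob, fidelity


prob, fidelity = swap_on_phi_plus_outcome()
print(f"P(BSM outcome Phi+) = {prob:.3f}, A-C fidelity with Phi+ = {fidelity:.3f}")
```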

The advantage of scatterer-scatterer is that it allows fully non-emissive qubits to be entangled. It also leverages non-degenerate entangled photon sources, so that, for example, photons A and C are at a frequency well suited to the qubits (NIR), while B and D are at telecom wavelengths for transmission. This directly addresses the integration challenge we discussed. You can entangle two remote qubits, possibly of different types, by giving each a photon that “speaks” its language while the partner photons meet in the middle.

It’s essentially the architecture Cisco described for inter-rack links: a dual-frequency entanglement source feeds the two racks (scatterer qubits), and the BSM is performed at the switch.

The downside, as one might guess, is a very low success probability if done naively. Three things have to happen at once:

- both entangled sources produce a pair at the same time;
- both qubit-photon interactions succeed;
- the BSM on the photons succeeds.

Each of these is probabilistic. SPDC sources have Poisson statistics, and a linear-optics BSM succeeds at most 50% of the time even with ideal photons. Scattering may also be probabilistic unless certain deterministic interactions are used. Cisco explicitly notes that the “stochastic nature of photon pair generation, combined with requiring three detection events, results in significantly low end-to-end generation rates”. They analyze a “brute-force” approach: you keep pumping the sources and trying, and reinitialize the qubits after any partial attempt (e.g., one photon hit a qubit but the other didn’t, so you reset and try again). Surprisingly, they find that the time to success is exponentially distributed (memoryless) with some rate λ. Repeated attempts therefore settle into a steady average rate. They argue that even without quantum memories to store intermediate results, this brute-force parallel-attempt approach can give a finite entanglement rate, because the finite photon coherence time provides a window for coincidences.
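The brute-force behaviour, and why the waiting time comes out exponential, can be reproduced with a toy Monte Carlo. The sketch assumes independent, identical attempts on a fixed clock. That turns the number of tries into a geometric random variable, the discrete counterpart of the exponential, with λ ≈ p/τ for an attempt period τ. All probabilities and the 1 MHz clock are hypothetical placeholders, not numbers from Cisco’s paper. The sketch also ignores the photon-coherence-window detail the paper relies on.

```python
import math
import random


def attempt_success_prob(p_pair: float, p_scatter: float, p_bsm: float) -> float:
    """One synchronized attempt succeeds only if all three detection events occur:
    both sources emit a pair, both qubit-photon interactions succeed, and the BSM succeeds."""
    return (p_pair ** 2) * (p_scatter ** 2) * p_bsm


def attempts_until_success(p: float, rng: random.Random) -> int:
    """Brute force with no memory: any failed or partial attempt resets the qubits."""
    k = 1
    while rng.random() >= p:
        k += 1
    return k


rng = random.Random(7)
p = attempt_success_prob(p_pair=0.2, p_scatter=0.5, p_bsm=0.5)  # hypothetical values
waits = [attempts_until_success(p, rng) for _ in range(10_000)]
mean_wait = sum(waits) / len(waits)

attempt_period_s = 1e-6   # hypothetical 1 MHz attempt clock
lam = p / attempt_period_s  # exponential rate in the continuous-time limit
tail = sum(w > 200 for w in waits) / len(waits)
print(f"p = {p:.4f}, mean attempts = {mean_wait:.0f} (1/p = {1 / p:.0f}), "
      f"rate ~ {lam:.0f} /s, P(wait > 200) = {tail:.3f} vs exp(-200p) = {math.exp(-200 * p):.3f}")
```

The empirical tail matching exp(−200p) is the memoryless signature. Past failures do not change the odds of the next attempt.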

Also, like emitter-scatterer, scatterer-scatterer has no false-positive heralds in principle – only when all three detections occur do you declare success, so the entangled state is high fidelity (again ignoring dark counts). The huge benefit of scatterer-scatterer is flexibility and integration: using nondegenerate sources means you don’t need quantum frequency converters at all, and you can entangle systems that can’t emit or where emitting would be inefficient.

Cisco is effectively betting on this for long-distance and inter-rack entanglement because it plays well with telecom fiber and heterogeneous systems. But until source brightness improves and quantum-memory-assisted protocols mature, the rates will be much lower than on short-range links.

To summarize the trade-offs:

- Emitter-Emitter: Simple concept, needs emissive qubits on both ends; challenging to synchronize many emitters; success probability capped at 50% by the BSM and further reduced by losses; but good for short links now. Requires two photons per attempt.

- Emitter-Scatterer: One emitter, one receiver; simpler synchronization (one photon); can be high fidelity; asymmetric hardware requirements (one side needs emission capability, the other needs a quantum memory that can interact with a photon); potentially faster if one side is a stationary system that can do deterministic interaction.

- Scatterer-Scatterer: No emitters needed on qubits; uses independent entangled photon sources; great for mixing different frequencies; but triple-coincidence requirement makes it slow without heavy parallelization. Requires the most photonic hardware (two sources, a BSM station) but simplifies nodes.

Cisco’s performance modeling in the paper provides concrete numbers for expected entanglement rates for each protocol given certain hardware specs (loss, detector efficiency, source brightness, etc.). For example, with 10 dB loss and sources at MHz rates, an intra-rack emitter-emitter link might achieve on the order of thousands of entangled pairs per second on average.
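A back-of-the-envelope check shows where “thousands” can come from. It assumes each of the two photons sees the full 10 dB and that emission is attempted at f_att = 1 MHz. Both are our assumptions, not the paper’s stated breakdown. With those, the η₁η₂/2 estimate gives:

$$\eta_1=\eta_2=10^{-10/10}=0.1,\qquad p\approx\frac{\eta_1\eta_2}{2}=5\times10^{-3},\qquad R\approx f_{\mathrm{att}}\,p=10^{6}\,\mathrm{s^{-1}}\times5\times10^{-3}=5\times10^{3}\ \mathrm{pairs/s}$$

Detector inefficiency and dead time would pull this figure down further.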

Inter-rack scatterer-scatterer links, however, could be orders of magnitude lower if relying on many probabilistic steps – making it the bottleneck for large distances. That is why Cisco’s architecture tries to minimize the need for those inter-rack links for each job, effectively treating inter-rack ebits as a scarcer resource to be used only when necessary.

In summary, Cisco has multiple protocols in its toolbox and can deploy each where it fits best. This multi-protocol support is built into their control layer. The Quantum Compiler/orchestrator can even factor in fidelity differences. Their rack assignment optimizer minimizes a weighted sum of inter-rack and intra-rack entanglements, weighting them by the log of infidelity (i.e., penalizing lower-fidelity inter-rack links more). This ensures the compiled circuit uses high-fidelity links as much as possible. It’s an intelligent use of the protocols to get the most out of the network.
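The optimizer’s objective can be sketched as a tiny search problem, under several assumptions:

- The job is summarized as a count of entangled pairs needed between each pair of QPUs.
- Each rack holds a fixed number of QPUs.
- Each pair is penalized by −log F for the link type it uses. This is one common way to turn fidelity into an additive cost; the paper’s exact weighting may differ.

The exhaustive search stands in for whatever solver Cisco actually uses and is exponential in the number of QPUs.

```python
import math
from itertools import product


def link_cost(fidelity: float) -> float:
    """Additive penalty per entangled pair; -log(F) grows as fidelity drops (assumed weighting)."""
    return -math.log(fidelity)


def assignment_cost(assign, demand, w_intra, w_inter):
    """demand[(i, j)] = number of entangled pairs QPUs i and j need for the job."""
    return sum(n * (w_intra if assign[i] == assign[j] else w_inter)
               for (i, j), n in demand.items())


def best_rack_assignment(n_qpus, n_racks, rack_capacity, demand, f_intra, f_inter):
    """Exhaustive search over QPU->rack maps; only viable for a handful of QPUs."""
    w_intra, w_inter = link_cost(f_intra), link_cost(f_inter)
    best, best_cost = None, math.inf
    for assign in product(range(n_racks), repeat=n_qpus):
        if any(assign.count(r) > rack_capacity for r in range(n_racks)):
            continue  # skip assignments that overfill a rack
        cost = assignment_cost(assign, demand, w_intra, w_inter)
        if cost < best_cost:
            best, best_cost = assign, cost
    return best, best_cost


# Hypothetical job: QPUs 0-1 and 2-3 interact heavily, with light cross traffic.
demand = {(0, 1): 40, (2, 3): 35, (1, 2): 5, (0, 3): 2}
assign, cost = best_rack_assignment(4, n_racks=2, rack_capacity=2, demand=demand,
                                    f_intra=0.99, f_inter=0.90)
print("QPU -> rack:", assign, "weighted cost:", round(cost, 3))
```

The heavily communicating pairs land in the same rack, so only the light cross traffic pays the inter-rack penalty.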

Strengths and Opportunities in Cisco’s Approach

Cisco’s entry into quantum networking brings several notable strengths:

Full-Stack Integration

Cisco is one of the few organizations building both quantum hardware and software under one roof for networking. They’re not just developing a quantum switch or just a compiler in isolation; they are co-designing the chip, the control protocols, and the applications together. This means the pieces are optimized for each other – e.g., the compiler knows the capabilities of Cisco’s entanglement chip and protocols, and the hardware design is informed by the needs of higher-level algorithms.

This holistic approach is likely to produce a more efficient system than a patchwork of disparate components. It’s similar to how Cisco approached classical networks (custom ASICs + IOS software + network protocols all co-developed).

Leveraging Classical Networking Expertise

Designing a quantum data center is as much a networking problem as a quantum physics problem. Cisco brings decades of experience in building reliable, high-throughput networks (switches, routers, data center fabrics). They are repurposing familiar concepts like Clos topologies, network interface cards, and layered stacks, adapting them to quantum contexts.

This is smart – rather than start from scratch, they use well-understood scalable architectures (fat-trees, etc.) as a template and add the quantum twist. That could accelerate development and avoid dead-ends. Cisco also understands operational aspects of data centers (management, monitoring), which will eventually be crucial for quantum data centers too.

Vendor-Agnostic / Interoperability

Cisco’s emphasis on being vendor-agnostic means their solutions aim to connect any type of quantum processor. In a nascent industry where it’s unclear which qubit tech will dominate, this neutrality is an advantage. They can work with superconducting qubits, trapped ions, photonic qubits – potentially even simultaneously if a use-case benefits from different strengths.

For example, one could envision a network where a superconducting QPU does fast logical operations while a stable ion trap QPU provides long-lived memory, networked together. Cisco’s approach of developing quantum NICs and using a dual-frequency entanglement source with rubidium cells suggests they are actively tackling the heterogeneity challenge.

This opens opportunities for partnerships – indeed Cisco mentions working with a startup on the rubidium technology and with UCSB on the chip. Being the “glue” between different quantum machines could position Cisco centrally in the ecosystem.

Immediate Applications (Quantum-Enhanced Classical)

By demonstrating things like Quantum Alert and Quantum Sync, Cisco shows it can deliver value before fault-tolerant quantum computing arrives. This is not only a business opportunity (selling quantum-secure networking or time-sync services to finance customers, for example) but also a testbed to mature their technology in real-world conditions. Each of those applications forces them to refine entanglement distribution, improve stability, and integrate with existing network infrastructure (since these apps work “alongside” classical systems).

It’s a savvy move to justify investment in quantum networking now, not just for the distant payoff of cloud quantum computing.

Quantum Entanglement Chip – High Performance

The specifications of Cisco’s entanglement PIC are state-of-the-art or beyond. Achieving millions of entangled pairs per second at telecom wavelengths with <1 mW power is a significant achievement. It indicates Cisco has solved some tough engineering problems (coupling light in/out efficiently, stabilizing photon pair generation, etc.).

This source could become a core piece of infrastructure for any quantum network, not just Cisco’s. If made available, others in the industry (research labs, other companies) would likely want to use such sources for their experiments, which could spur a de facto standard. Moreover, Cisco’s mention of integrating arrays of these sources and multiplexing suggests they are thinking about scaling entanglement distribution to many users simultaneously. That’s essential for a quantum internet backbone or a multi-node cluster.

Research Rigor and Transparency

Cisco backed up its announcements with an academic publication and detailed technical blog posts. This lends credibility – they’re not just marketing, they’re contributing to the scientific community’s understanding of quantum networks. The paper provides a foundation others can build on, and Cisco can attract top talent by being visible in research. Their collaborations with universities (UCSB and presumably others), along with the paper’s extensive citations of recent literature, show they’re plugged into the cutting edge.

Overall, this open approach increases confidence that Cisco’s strategy is grounded in real physics and engineering, not hype.

Outshift and Quantum Labs Team

The organizational commitment via Outshift (Cisco’s innovation arm) and the dedicated Quantum Labs means there is a team focused solely on this long-term goal. Having stable funding and a mandate to develop new tech (rather than needing immediate revenue) is a strength in an area like quantum that requires patience.

Cisco can play the long game, and the presence of experienced names (like Reza Nejabati, who has a background in optical networking research, and others on the team) is an asset.

In essence, Cisco is bringing a credible, comprehensive plan to quantum networking. They are not trying to compete in building the biggest quantum computer; instead, they position themselves as the enabler of scaling through networking – a role that plays to their core competencies. If successful, Cisco could become as indispensable in the quantum era as it was in the internet era, providing the “quantum fabric” that underpins everything from distributed quantum computing to secure communications.

Remaining Challenges and Open Questions

Despite the promising progress, significant challenges remain before Cisco’s quantum networking vision can be fully realized. It’s important to critically assess these hurdles:

Quantum Error Correction over Networks

Cisco’s compiler touts support for distributed QEC, but executing an error-correcting code across multiple QPUs is extremely daunting with current technology.

QEC requires frequent, reliable parity checks between qubits – if those qubits reside in different machines, the network must deliver entanglement faster than errors accumulate. Given that today even local (on-chip) logical qubits are just at the cusp of exceeding error thresholds, doing this over a network with additional loss and latency seems out of reach in the near term.

Cisco’s support for distributed QEC is likely in simulation for now. The challenge is that, to correct errors, entanglement fidelity needs to be very high and latency very low, else the error-correction cycle cannot keep up. This will require dramatic improvements in entanglement rates (perhaps via massive multiplexing and parallel generation as Cisco’s chip allows) and perhaps quantum repeaters with memory to buffer entangled pairs so they are ready when needed.
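A rough budget shows the size of the gap. The setup is hypothetical: a distance-d surface-code patch is split across two QPUs, so that about d stabilizer checks straddle the boundary each round. Each of those checks is assumed to consume one ebit. Rounds are microsecond-scale, as is typical of superconducting hardware. The result is compared with the “thousands of pairs per second” intra-rack figure quoted earlier. Real code layouts and remote-gate constructions will differ.

```python
def required_ebit_rate(code_distance: int, cycle_time_s: float, ebits_per_check: float = 1.0) -> float:
    """Ebits/s needed if ~code_distance stabilizer checks straddle the node boundary
    every QEC round and each consumes ebits_per_check entangled pairs (rough assumption)."""
    return code_distance * ebits_per_check / cycle_time_s


need = required_ebit_rate(code_distance=5, cycle_time_s=1e-6)  # hypothetical ~1 us QEC rounds
have = 5e3                                                      # "thousands" of pairs/s per link
print(f"needed ~{need:.1e} ebits/s, available ~{have:.1e}: short by ~{need / have:.0f}x")
```

Even a small code would need roughly three orders of magnitude more ebits per second than a “thousands per second” link provides. That is why multiplexing and buffering memories matter.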

It also raises an interoperability question: error correction protocols (like surface codes) have specific demands – how to synchronize syndrome extraction among nodes, how to route many EPR pairs reliably, etc. Cisco will need to demonstrate at least a small-scale distributed QEC (maybe two small codes on two nodes working together) to prove this capability. As of now, it remains theoretical.

Interface Compatibility and Transduction

While Cisco is platform-agnostic by design, actually connecting heterogeneous hardware is far from trivial. Superconducting qubits need microwave-to-optical transducers to interface with photonic networks. Leading approaches (electro-optic modulators, optomechanical crystals, etc.) are still inefficient and typically require cryogenic operation. Trapped-ion or Rydberg atom systems can emit photons, but often at visible/UV wavelengths that don’t travel far in fiber without conversion. Nitrogen-vacancy centers emit at 637 nm, which is lossy in fiber, though efforts exist to frequency-convert them. The T-center in silicon emits at telecom wavelengths, which is great. However, those qubits currently operate only at temperatures of a few kelvin and are not yet integrated into a full quantum processor.

Incompatibilities between qubit tech and communication channels are a major bottleneck. Cisco’s dual-frequency entanglement source (rubidium vapor cell) is one approach to bridging this, effectively acting as a photonic translator. Another approach is to use quantum transducers as add-on devices (like a quantum NIC box that sits next to a superconducting fridge and converts some of its qubits’ states to optics).

Either way, significant R&D is needed to achieve high efficiency, low noise transduction. Cisco acknowledges this by investigating multiple avenues (III-V PICs, atomic vapor, etc.), but until a few leading platforms demonstrate end-to-end connection (for example, entangling a superconducting qubit with a trapped ion via a fiber link), the heterogeneous network remains unproven.

Fidelity and Throughput Bottlenecks

The success of a quantum data center will depend on actual achieved entanglement rates and fidelities in practice. There are many potential bottlenecks: finite detector efficiency, fiber loss, coupling loss from qubits to photonic channels, decoherence of qubits while waiting for entanglement, etc. For instance, Cisco’s chip can create 200 million pairs/second, but if you need those pairs to go into memory qubits, you might lose most of them in coupling or have to throw away many due to synchronization issues.

Without quantum memory, probabilistic entanglement inherently leads to a lot of wasted attempts – one success means many failed tries that consumed time. Cisco’s simulations likely assume some optimistic hardware parameters (they cite numbers like 10 dB link loss, detector efficiencies, etc.); real-world might be worse.
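A simple loss budget shows how quickly losses eat into a source’s raw output. The sketch assumes both photons of a pair must survive and that losses on each side add in dB. The per-stage values are hypothetical placeholders, not Cisco figures: chip out-coupling, fiber and connectors, coupling into a memory qubit, and detection.

```python
def db_to_eta(loss_db: float) -> float:
    """Convert a loss in dB into a transmission probability."""
    return 10 ** (-loss_db / 10)


def usable_pair_rate(source_rate_hz: float, side_a_db, side_b_db) -> float:
    """Pairs/s with BOTH photons delivered; losses along each side add in dB."""
    return source_rate_hz * db_to_eta(sum(side_a_db)) * db_to_eta(sum(side_b_db))


# Hypothetical per-side stages in dB: chip out-coupling, fiber and connectors,
# coupling into a memory qubit, detector inefficiency.
side = [3.0, 1.0, 10.0, 1.0]  # 15 dB per side
rate = usable_pair_rate(200e6, side, side)
print(f"{rate:.2e} usable pairs/s out of 2.00e+08 generated")
```

With 15 dB per side, only about one pair in a thousand survives on both ends. The coupling stage dominates that budget.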

Additionally, entanglement fidelity decreases with loss and operation errors, and multi-hop entanglement swapping compounds the infidelity. So a key challenge is maintaining high fidelity as the network grows. Quantum error correction could in principle help (correcting lost or decohered entangled pairs), but again, that’s far off.
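One standard way to see how swapping compounds infidelity is the Werner-state model. A link of fidelity F is written as ρ = w|Φ⁺⟩⟨Φ⁺| + (1 − w)I/4 with F = (1 + 3w)/4, and an ideal swap multiplies the w parameters. The sketch assumes identical Werner links and perfect Bell measurements, so real chains would fare worse.

```python
def werner_w(fidelity: float) -> float:
    """Werner parameter w for a Werner state with fidelity F to |Phi+>, using F = (1 + 3w)/4."""
    return (4 * fidelity - 1) / 3


def chain_fidelity(link_fidelity: float, n_links: int) -> float:
    """End-to-end fidelity after perfect swaps along n identical Werner links (w multiplies)."""
    return (1 + 3 * werner_w(link_fidelity) ** n_links) / 4


for n_links in (1, 2, 4, 8):
    print(f"{n_links} link(s) at F = 0.95 -> end-to-end F = {chain_fidelity(0.95, n_links):.3f}")
```

For two links, this reproduces the familiar F₁F₂ + (1 − F₁)(1 − F₂)/3. End-to-end fidelity keeps dropping with every added hop.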

In the near term, Cisco might face a situation where adding more nodes actually drops the end-to-end entanglement quality or rate to a point where running a distributed algorithm yields no advantage. Overcoming this requires either hardware improvements (better sources, better memories, perhaps moderate-distance error suppression via encoding) or architectural tweaks (like having small clusters fully connected and doing most operations locally).

Scaling the Orchestrator and Scheduler

Orchestrating a few QPUs is one thing; orchestrating dozens or hundreds is another. The scheduling problem for distributing a large quantum circuit across many nodes is combinatorially hard (Cisco notes optimal qubit mapping is NP-hard). Their current solution uses static partitioning and heuristic scheduling. This may be fine for small examples, but as the field moves to bigger problems, more sophisticated methods (dynamic circuit re-routing, adaptive scheduling based on which entanglement succeeded, etc.) will be needed. Cisco’s paper hints at improvements like teleportation-based adaptive partitioning and better algorithms in future.
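To give a flavour of heuristic partitioning, here is a simple greedy placement. It grows clusters of interacting qubits and assigns each qubit to the QPU that already holds most of its interaction partners. Every gate that ends up split across QPUs counts as needing one ebit. This is a generic heuristic chosen for illustration, not Cisco’s algorithm. Like any greedy method, it can be far from optimal on adversarial circuits.

```python
from collections import defaultdict


def greedy_partition(gates, n_qubits, n_qpus, capacity):
    """Place logical qubits on QPUs, keeping interacting qubits together.

    gates: list of (q1, q2) two-qubit gates. Returns (placement dict, remote gate count);
    each remote gate would need one ebit from the network."""
    if n_qpus * capacity < n_qubits:
        raise ValueError("not enough total qubit capacity")
    weight = defaultdict(lambda: defaultdict(int))  # weight[u][v] = number of gates between u and v
    for a, b in gates:
        weight[a][b] += 1
        weight[b][a] += 1
    placement, load = {}, [0] * n_qpus
    unplaced = set(range(n_qubits))
    while unplaced:
        # Next qubit: strongest ties to already-placed qubits, then degree, then lowest index
        q = max(unplaced, key=lambda u: (
            sum(w for v, w in weight[u].items() if v in placement),
            sum(weight[u].values()),
            -u))
        # Target: the QPU with room that already holds most of q's partners (lowest index on ties)
        open_qpus = [p for p in range(n_qpus) if load[p] < capacity]
        target = max(open_qpus, key=lambda p: (
            sum(w for v, w in weight[q].items() if placement.get(v) == p),
            -p))
        placement[q] = target
        load[target] += 1
        unplaced.remove(q)
    remote = sum(1 for a, b in gates if placement[a] != placement[b])
    return placement, remote


# Hypothetical 6-qubit circuit: two triangles of gates joined by one bridge gate (2, 3)
gates = [(0, 1), (1, 2), (0, 2)] * 2 + [(3, 4), (4, 5), (3, 5)] * 2 + [(2, 3)]
placement, remote = greedy_partition(gates, n_qubits=6, n_qpus=2, capacity=3)
print(placement, "remote gates:", remote)
```

Here only the bridge gate crosses QPUs. Scaling this kind of search to hundreds of QPUs and adaptive schedules is where the hard part begins.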

Moreover, the orchestrator will eventually need to handle concurrent jobs in a quantum data center – scheduling entanglement for multiple user programs at once, akin to how a classical data center handles many workloads. Issues of resource allocation, fairness, and deadlock can arise. None of these are insurmountable, but they add complexity. Cisco will likely need to demonstrate that their scheduler can handle realistic scenarios, perhaps by emulating a multi-user environment or integrating with a cloud quantum service as a backend.

Quantum Repeaters and Long-Distance Networking

Inside a data center (maybe tens or hundreds of meters of fiber at most), one can get away without complex repeaters – Cisco’s design mostly assumes direct fiber or a couple of swaps within the data center. But a lot of the ultimate promise (quantum internet, connecting data centers, etc.) will require linking distant sites. That introduces the need for quantum repeaters – intermediate nodes that store and forward entanglement, performing entanglement swapping with quantum memory.

While Cisco’s focus is on the data center scale, they do mention global quantum networks as a future outcome. Developing a quantum repeater that fits into their stack will be a challenge. Many academic groups are working on repeater protocols with memories, but no clear winner technology exists yet (NV centers, rare-earth doped crystals, neutral atom arrays, etc. have been proposed).

Cisco’s work with rubidium vapor could offer a path to a rudimentary repeater, since vapor cells can serve as memories on the order of microseconds to milliseconds. But building an end-to-end quantum network that spans kilometers with the same reliability as a data center network is a big leap. Cisco will likely stay within local or metro-range networks for now, but the question of how to extend beyond that still looms.
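The case for repeaters, and the memory times they demand, follows from two fiber numbers. One is roughly 0.2 dB/km attenuation for standard single-mode fiber at telecom wavelengths. The other is the ~5 µs/km propagation delay in glass. The sketch assumes a memory at each end of a link with a heralding station in the middle. A node therefore waits for its photon to travel half the link and for the herald to come back, i.e., one full link length of propagation. It ignores detector and coupling losses.

```python
FIBER_LOSS_DB_PER_KM = 0.2   # typical for standard single-mode fiber near 1550 nm
FIBER_DELAY_US_PER_KM = 5.0  # light travels at roughly 2/3 of c in glass


def direct_transmission(length_km: float) -> float:
    """Probability that a single photon survives length_km of fiber."""
    return 10 ** (-FIBER_LOSS_DB_PER_KM * length_km / 10)


def herald_wait_us(length_km: float) -> float:
    """Minimum time a memory must hold its qubit before learning the outcome:
    photon out to the midpoint plus classical herald back = one link length."""
    return FIBER_DELAY_US_PER_KM * length_km


for length_km in (0.1, 10, 100, 500):
    print(f"{length_km:>6} km: transmission {direct_transmission(length_km):.1e}, "
          f"herald wait {herald_wait_us(length_km):.1f} us")
```

At 500 km, direct transmission is about 10⁻¹⁰, which is why long links need repeaters. Even a 10 km link asks a memory to hold its state for tens of microseconds.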

Standards and Interoperability

If Cisco is networking different vendors’ QPUs, there will need to be standards on the quantum network interface. Classical networks had to agree on things like TCP/IP, Ethernet, etc. For quantum, there are proposals (Quantum Internet Protocol stack ideas, etc.), but nothing like a fully standardized “quantum TCP.” Cisco might eventually push for certain standards (perhaps the way they propose an SDK and APIs for the device layer).

There’s also the interface between quantum and classical control – e.g., a common format for describing a distributed quantum circuit or a common entanglement service API that hardware can implement. As an industry challenge, this remains open. If each company does it differently, networking their machines will be harder. Cisco, with its influence, could help lead standardization, but it’s early.

Security and Classical Control Latency

A more practical challenge: all this entanglement generation and coordination relies on classical communication (to herald success/failure and to perform corrections like in teleportation). The speed of light and classical processing latency means that even if qubits are entangled, using that entanglement involves waiting for signals (e.g., a Bell measurement outcome needs to be sent to apply a Pauli correction for a teleported qubit). In a data center, that might be microseconds (fiber delay ~5 µs/km) plus some processing overhead – small, but when you need thousands of entangling operations, it adds up. Cisco’s orchestrator might need to pipeline operations to hide latency, which complicates things because you must manage dependent operations carefully.
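A rough latency budget makes the point. The numbers are hypothetical: L = 0.1 km of fiber, t_proc = 1 µs of classical processing per step, and N = 1000 teleportation-based operations, each waiting on the previous correction:

$$t_{\mathrm{step}}\approx L\cdot 5\,\mu\mathrm{s/km}+t_{\mathrm{proc}}=0.1\,\mathrm{km}\cdot 5\,\mu\mathrm{s/km}+1\,\mu\mathrm{s}=1.5\,\mu\mathrm{s},\qquad t_{\mathrm{total}}\approx N\,t_{\mathrm{step}}=1000\times1.5\,\mu\mathrm{s}=1.5\,\mathrm{ms}$$

Pipelining might group the operations into D layers of mutually independent steps. In that case the total drops to roughly D·t_step, which is the payoff for managing dependencies carefully.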

Additionally, because the classical network carries critical signals (and also the synchronization of operations), its reliability and security become part of the quantum network’s reliability. Ensuring the classical control is fast, redundant, and not a single point of failure is an engineering challenge. Cisco likely can handle this (being experts in classical networks), but it’s worth noting that the quantum network is really a hybrid quantum-classical system, and the classical part must be ultra-low-latency and secure.

In summary, Cisco has a lot to prove. They have shown pieces (a great source, a simulator, small-scale demos) – the next step is integration: put it together in a convincing prototype. For instance, a near-term milestone could be entangling two distant superconducting qubits via their photonic entanglement source and a pair of quantum network interface modules, and running a simple algorithm divided between the two (like a distributed Grover’s search on 2 small QPUs). Or demonstrating quantum secure communication between two Cisco routers with the Quantum Alert functioning live. Each challenge above likely corresponds to a future project: developing a quantum memory to pair with the source, improving transduction, enhancing the scheduler algorithms, etc.

Key Benchmarks and Proof Points to Watch

To assess Cisco’s progress and claims, a few key proof points and benchmarks would be very compelling in the coming 1-3 years:

Multi-QPU Distributed Computing Demo

A concrete demonstration of their network-aware compiler running a non-trivial circuit across at least 2 or 3 quantum processors networked by Cisco’s system. For example, executing a small variational algorithm where half the qubits are on one device and half on another, with entanglement swaps orchestrated by Cisco’s compiler, would show end-to-end viability. Metrics to watch: total runtime vs a simulation, fidelity of the final result vs ideal, and how well the scheduler utilized network resources. If they can show that their approach scales a problem that is too large for one machine into two or more machines effectively, that’s huge.

Distributed QEC Experiment

As a stretch goal, demonstrating even a toy version of quantum error correction across two nodes (for instance, a simple repetition code or a small [[4,1,2]] code with two physical qubits on each of two nodes) would validate the concept of networked QEC. One could imagine entangling qubits on two chips to act as a single logical qubit and showing it has improved coherence. This would directly back up the “industry-first distributed QEC compiler” claim with data.

Entanglement Rate and Fidelity Measurements

Independent or published measurements of the Cisco entanglement chip’s performance in situ. For example, reporting that they achieved X entangled pairs per second over Y km of fiber with Z% fidelity and T ms latency would be useful. Especially if they integrate it with memory: e.g., entanglement between two nodes each holding the entangled qubits in memory for some time. Achieving, say, >1000 high-fidelity EPR pairs per second over 50 km would be a strong benchmark (current long-distance entanglement rates are typically much lower in academic experiments). Even within a lab, showing an end-to-end entangled qubit pair fidelity above 0.9 after going through all the hardware (source, fiber, detectors, etc.) would instill confidence.
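For the fidelity number, one standard estimator needs only correlation measurements in three bases. The sketch assumes the target state is |Φ⁺⟩ and that outcomes are recorded as coincidence counts of equal versus opposite results. The counts are invented for illustration. This is a generic estimator, not necessarily the one Cisco would report.

```python
def correlator(n_same: int, n_diff: int) -> float:
    """Estimate <P (x) P> from coincidences: +1 for equal outcomes, -1 for opposite ones."""
    return (n_same - n_diff) / (n_same + n_diff)


def phi_plus_fidelity(xx, yy, zz) -> float:
    """Fidelity with |Phi+> = (|00> + |11>)/sqrt(2), using
    |Phi+><Phi+| = (II + XX - YY + ZZ)/4, so F = (1 + <XX> - <YY> + <ZZ>)/4.
    Each argument is an (n_same, n_diff) pair of coincidence counts in that basis."""
    return (1 + correlator(*xx) - correlator(*yy) + correlator(*zz)) / 4


# Hypothetical coincidence counts from 1000 shots per basis setting
f = phi_plus_fidelity(xx=(950, 50), yy=(40, 960), zz=(970, 30))
print(f"estimated fidelity: {f:.3f}")
```

An ideal Φ⁺ shows ⟨YY⟩ = −1, which is why the YY term enters with a minus sign.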

Quantum Secure Networking in the Wild

Deploying Quantum Alert or a QKD-like system in a real network scenario – perhaps connecting two Cisco data centers or a Cisco office to a customer site – and demonstrating continuous operation, key generation rates, or detection of simulated eavesdropping attempts. If Cisco can show its quantum security layer running alongside classical traffic with minimal issues, it could push the industry toward adoption. Benchmarks like sustained quantum bit error rate (QBER) below a threshold, or the system successfully catching inserted tampering events, would validate the practicality of Quantum Alert.
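What “catching inserted tampering” looks like can be illustrated with textbook BB84. It is used here only as a stand-in, because Quantum Alert’s actual protocol is not described in this post. A full intercept-resend attack pushes the sifted-key QBER to about 25%, well above the roughly 11% limit for secure BB84 key distillation. An ideal untapped channel shows none. The sketch ignores channel noise and loss.

```python
import random


def bb84_qber(n_photons: int, eavesdrop: bool, rng: random.Random) -> float:
    """Sifted-key error rate for ideal BB84, optionally with an intercept-resend attacker.
    A photon is modelled as (basis, bit); measuring in the wrong basis gives a random bit."""
    errors = sifted = 0
    for _ in range(n_photons):
        bit, alice_basis = rng.randint(0, 1), rng.randint(0, 1)
        basis, value = alice_basis, bit
        if eavesdrop:  # Eve measures in a random basis and resends what she saw
            eve_basis = rng.randint(0, 1)
            value = value if eve_basis == basis else rng.randint(0, 1)
            basis = eve_basis
        bob_basis = rng.randint(0, 1)
        bob_bit = value if bob_basis == basis else rng.randint(0, 1)
        if bob_basis == alice_basis:  # sifting: keep only rounds where the bases match
            sifted += 1
            errors += int(bob_bit != bit)
    return errors / sifted


rng = random.Random(42)
clean = bb84_qber(20_000, eavesdrop=False, rng=rng)
tapped = bb84_qber(20_000, eavesdrop=True, rng=rng)
print(f"QBER clean: {clean:.3f}, with intercept-resend: {tapped:.3f} (alarm above ~0.11)")
```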

Time Synchronization/Correlation Advantages

For Quantum Sync or related time sync, one could measure how much better two clocks or trading servers stay synchronized using entanglement versus classical methods. Suppose, for example, that entanglement allows coordination with effectively zero messaging. One could then quantify the reduction in latency jitter or the improvement in event ordering between sites. One benchmark could be sub-nanosecond synchronization accuracy between two locations achieved by quantum means. That would beat typical GPS-based timing, though surpassing White Rabbit, which already reaches sub-nanosecond accuracy over fiber, would require going further still.
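One generic way for time-correlated photon pairs to expose a clock offset is to histogram the differences between the two sites’ detection timestamps and locate the coincidence peak. The sketch below implements that generic technique with invented numbers: a 12.3 ns offset, 50 ps timing jitter, and 50% detection at each site. It is not a description of how Quantum Sync works. It also ignores dark counts and the fiber-delay asymmetry a real system would have to calibrate out.

```python
import bisect
import random
from collections import Counter


def estimate_offset_ns(t_a, t_b, window_ns=20.0, bin_ns=0.1):
    """Histogram t_b - t_a over all pairs within +/- window_ns and return the peak bin.
    Timestamps are in ns; t_b must be sorted."""
    hist = Counter()
    for ta in t_a:
        lo = bisect.bisect_left(t_b, ta - window_ns)
        hi = bisect.bisect_right(t_b, ta + window_ns)
        for tb in t_b[lo:hi]:
            hist[round((tb - ta) / bin_ns)] += 1
    peak_bin, _ = hist.most_common(1)[0]
    return peak_bin * bin_ns


rng = random.Random(1)
true_offset_ns = 12.3                                          # hypothetical clock offset of site B
emissions = sorted(rng.uniform(0, 1e6) for _ in range(2000))   # pair emission times over 1 ms
t_a = [t for t in emissions if rng.random() < 0.5]             # 50% detection at site A
t_b = sorted(t + true_offset_ns + rng.gauss(0, 0.05)           # 50 ps timing jitter at site B
             for t in emissions if rng.random() < 0.5)
est = estimate_offset_ns(t_a, t_b)
print(f"estimated offset: {est:.1f} ns (true {true_offset_ns} ns)")
```

True coincidences pile up in one narrow bin, while accidental matches spread thinly across the window. The histogram bin width and the detector jitter together set the achievable resolution.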

Integration of a New QPU Technology

If Cisco can show that they successfully integrated, say, an ion trap system and a superconducting system on the same network via their NICs, that’s a powerful validation of the vendor-agnostic claim. A concrete example: entangle a qubit in an ion trap with a qubit in a superconducting chip via a photonic link. This would require a transducer (perhaps the Rb vapor approach or others). Achieving that would be a scientific breakthrough on its own (hybrid entanglement between disparate qubit types), and it would prove Cisco’s framework is flexible.

Scaling Metrics in Simulation

Cisco will likely continue to publish simulation results. Keeping an eye on those, we’d want to see evidence that as they increase the number of nodes, their orchestrator and architecture can still deliver useful performance. For instance, simulating 8 or 16 networked QPUs running a distributed algorithm would be informative, showing how the time to solution scales or where the bottlenecks arise. A key proof would be a case where 2 small networked QPUs actually outperform a single hypothetical larger QPU of equivalent total qubits, due to parallelism or other advantages. That would validate the whole premise of distributed quantum computing.

In short, while Cisco has set the stage with design and prototypes, the next proof points will be integration and real-world testing. As Marin (founder of AppliedQuantum) and a keen observer of this field, I’ll be watching for those concrete demos and data. Quantum networking is moving from theory to practice, and it’s encouraging to see an industry giant like Cisco committing to technical rigor and transparency in that process.

Conclusion

Cisco’s foray into quantum networking represents a bold bet that networking is the key to scaling quantum computing. By assembling a full-stack program – custom entanglement-generating chips, quantum-aware network architectures, and sophisticated distributed computing software – Cisco is essentially trying to fast-forward the timeline for useful quantum applications, much as cluster computing accelerated classical computing. They are targeting both quantum for quantum (networking QPUs to solve bigger quantum problems) and quantum for classical (improving security and coordination in today’s systems), which is a savvy way to hedge bets and create value along the journey.

Technically, Cisco’s approach ticks many of the right boxes: use telecom photonics for distance, integrate with existing fiber, support any qubit type, and leverage known network topologies for scalability. The recent demonstration of electrically-controlled T-center qubits in silicon further hints at a future where quantum and classical tech converge in chip-scale devices, which would fit beautifully into Cisco’s plans. But formidable challenges lie ahead in turning these blueprints into a working quantum data center. It will require solving issues of loss, fidelity, timing, and error correction that are at the cutting edge of quantum research.

The next few years will be crucial. Cisco will need to transition from discrete prototypes (a chip here, a simulator there) to integrated systems that actually network quantum processors in the lab. If they can demonstrate even small instances of a quantum data center, it will be a landmark – showing the world’s first quantum computing cloud where multiple quantum chips function as one. That could validate the idea that we don’t have to wait for million-qubit processors; we can build them modularly.

From a strategic perspective, Cisco’s involvement also signals that quantum networking is coming of age. It’s not just an academic curiosity; it’s being engineered by a company famous for making networks work at global scale. This will undoubtedly spur competition and collaboration – other players will invest in similar directions, and standards will start to form. For the field of quantum computing, this networking approach might unlock new pathways: perhaps certain algorithms become viable by splitting them, or certain quantum services (like secure communications) become deployable at scale.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.