The DiVincenzo Criteria: Blueprint for Building a Quantum Computer

Introduction

Quantum computing promises to revolutionize fields from cybersecurity to drug discovery, but building a functional quantum computer is an immense technical challenge. In the late 1990s, physicist David DiVincenzo outlined a set of conditions – now known as DiVincenzo’s criteria – that any viable quantum computing architecture must satisfy.

These seven criteria serve as a checklist and roadmap for engineers and investors alike, defining what it takes to turn quantum theory into a working machine. They have become a de facto benchmark for gauging the maturity of quantum hardware approaches, helping cut through hype by focusing on fundamental capabilities. They illuminate how close (or far) today’s devices are from realizing practical, scalable quantum computing.

Origin and Historical Context of the DiVincenzo Criteria

The idea of quantum computers was first proposed in the 1980s by pioneers like Yuri Manin and Richard Feynman, who pointed out that classical computers struggle to simulate quantum systems. By the mid-1990s, early experiments (for example, with trapped ions and NMR quantum bits) were hinting at quantum logic operations – but there was no clear consensus on what a full-scale quantum computer would require.

David P. DiVincenzo, then a researcher at IBM, tackled this question head-on. In 1996 he introduced a list of key requirements for constructing a quantum computer, later formalized in a 2000 paper titled “The Physical Implementation of Quantum Computation.” These became known as DiVincenzo’s five criteria, with two additional criteria added for quantum communication tasks.

DiVincenzo’s criteria emerged as a response to both excitement and over-optimism in the nascent field. He observed many impressive laboratory demonstrations that, nonetheless, addressed only tiny pieces of the larger puzzle. As DiVincenzo later quipped, the criteria were meant to “shake up” experimenters – a reminder “not to pretend that your impressive little experiment is actually a big step towards the physical realization of a quantum computer!” In particular, he was skeptical of approaches like liquid-state NMR quantum computing, which in the late 90s achieved small quantum logic gates but only in highly mixed (not fully quantum) states. NMR could perform computation on an ensemble of molecules without ever initializing a pure quantum state, violating one of the key criteria. DiVincenzo’s published list explicitly included terms like “scalable” and “fiducial (pure) initial state” as “warning phrases… pointed at the NMR crowd.” In short, the criteria set a higher bar for what counts as a true quantum computer, emphasizing long-term scalability and quantum purity.

Over twenty years on, the DiVincenzo criteria remain remarkably relevant. They have guided the development of leading platforms – from superconducting qubit chips to trapped-ion devices – and are now routinely cited as a yardstick for progress.

Notably, by the 2010s some researchers proclaimed that “all criteria are now satisfied” in certain systems. Indeed, small prototypes today can initialize qubits, perform universal gates, maintain brief coherence, and measure outputs, seemingly ticking all the boxes. However, meeting the criteria on a small scale is necessary but not sufficient for building a large, useful quantum computer. DiVincenzo himself reflected in 2018 that the criteria are “promise criteria” – achieving them means you have all the right components, “such that they could potentially be stuck together to form a system.” The system integration and scaling up is “a monumental task” beyond the basic criteria.

In practice, we have learned that issues like control electronics, crosstalk, cryogenics, and error correction create additional hurdles once you try to network hundreds or thousands of qubits. Nonetheless, the DiVincenzo criteria remain the foundational checklist. They are the first filter by which to evaluate any proposed quantum computing architecture – a way to tell if a given approach even has the ingredients necessary for quantum advantage.

The Five Core Criteria for a Quantum Computer

DiVincenzo’s original five criteria focus on the fundamental requirements for quantum computation itself (ignoring communication for the moment). In plain language, a hardware platform must have:

- A scalable physical system with well-characterized qubits.

- The ability to initialize qubits to a simple fiducial state (e.g., all zeros).

- Long relevant decoherence times, much longer than operation (gate) times.

- A universal set of quantum gates.

- A qubit-specific measurement capability.

Each criterion addresses a crucial aspect of what makes quantum computing possible. Let’s unpack these one by one, using analogies and examples to illustrate their meaning.

1. Scalable Physical System with Well-Characterized Qubits

A qubit is the quantum analogue of a bit – it can exist in state |0〉, |1〉, or any superposition of those. DiVincenzo’s first criterion demands a physical system that can host many qubits, and that each qubit’s behavior is well understood and controllable. There are two keywords here: scalable and well-characterized.

- Scalability means we can build a system with increasing numbers of qubits without a sudden loss of performance or manageability. In classical computing, scalability was achieved by integrating more and more transistors on chips (Moore’s Law) – a feat of engineering scaling. Likewise, a quantum platform must allow adding qubits (from tens to thousands and beyond) without fundamental roadblocks. If your qubits work only in a one-of-a-kind lab setup or if adding more qubits causes exponentially more noise, that system cannot scale to the large sizes needed for useful quantum algorithms. An analogy is building a skyscraper: using bricks (qubits) that can be stacked floor after floor without the building collapsing under its own weight. A scalable quantum system needs a solid architecture that can “rise” to thousands of qubits without breaking down.

- Well-characterized qubits means we thoroughly understand the quantum states and interactions of each qubit. In practice, this implies the qubit’s energy levels and error behaviors are well known and reproducible. All qubits of the same type should behave identically (or be calibrated to effectively be identical). Think of this like standardized parts in a machine – if each qubit were a mystery box with different properties, you could not reliably design complex circuits. Engineers need a datasheet for their qubits, so to speak. For example, superconducting qubits (like transmons) are designed as artificial two-level systems with a known resonance frequency and coupling strength. Trapped-ion qubits use identical atoms (e.g. all Ytterbium ions) so that each qubit has the same internal energy states. Well-characterized also implies the qubit can be mostly isolated to just two levels (suppressing higher unwanted levels) – otherwise it isn’t a true qubit. If a qubit occasionally leaks into a third state or behaves erratically, it’s like a digital bit that sometimes becomes “2” – clearly unacceptable.

Meeting Criterion 1 is a non-negotiable foundation. Without it, any quantum computer design is a dead end. Different hardware approaches have tackled this in different ways:

Superconducting circuits

Superconducting circuits (used by IBM, Google, Rigetti, etc.) are seen as highly scalable because they leverage conventional chip fabrication to create many qubits on a chip. IBM’s latest processors integrate hundreds of transmon qubits on a single device. These qubits are relatively well-characterized: each transmon’s resonance frequency and error rates are measured and calibrated. However, maintaining uniform quality as the qubit count grows is challenging – device fabrication variations and microwave control crosstalk can make qubits less identical at scale.

Still, superconducting platforms have shown they can add qubits with design innovations (e.g. IBM’s 127-qubit Eagle chip used layered wiring to scale up without extra noise). Scalability is a strong point, though managing control complexity (wiring hundreds of qubits in a cryostat) remains a practical hurdle.

Trapped-ion systems

Trapped-ion systems (offered by IonQ, Quantinuum and others) excel in the “well-characterized” aspect. All ions of a given element are virtually identical by nature, which means each qubit has the same energy levels and error characteristics. This homogeneity is a big advantage – it’s like having perfect carbon-copy qubits. Trapped ions can also be reconfigured (shuffled in ion traps), giving flexibility in connectivity.

The scalability challenge, however, comes from controlling large numbers of ions. In a single electromagnetic trap, too many ions (roughly 50 or more) lead to densely packed vibrational modes that are hard to address separately. As one research demo noted, beyond about 100 ions in one chain, the usual gating methods “become practically unusable” because the qubits’ shared motion can no longer be cleanly controlled.

To scale up, ion-trap architects are exploring modular systems – multiple smaller traps networked together (the so-called QCCD architecture, like a “quantum conveyor belt” moving ions between trap zones). This is still an active engineering problem.

In summary, trapped ions meet the criterion on paper (they have high-quality identical qubits), but scaling to hundreds or thousands of ions will require new tricks (like modular traps or photonic interconnects) to avoid degrading performance.

Photonic qubit platforms

Photonic qubit platforms (pursued by companies like PsiQuantum and Xanadu) approach scalability from yet another angle. Photonic qubits are typically encoded in particles of light (e.g. polarization or path of a photon). Photons do not interact with each other easily, which avoids some noise issues and means you can in principle have many photonic qubits flying around.

Moreover, photonics can leverage semiconductor fabrication to build optical circuits. Photonic qubits are well-characterized in the sense that a photon’s polarization states |H〉 and |V〉 (horizontal/vertical) form a clean two-level system, and photons of the same frequency are identical.

The big question is scalability in generating and manipulating large numbers of photons. Unlike matter qubits, photons are always traveling – so keeping, say, 1000 photonic qubits “in play” simultaneously requires complex sources, beam splitters, and detectors all working in concert. PsiQuantum has argued that photonics is the only path to a million-qubit machine because you can piggyback on existing semiconductor manufacturing for scaling. Indeed, they are investing in silicon photonic chips produced in large-volume fabs to generate and route photons.

As of now, photonic quantum computing is mostly at the few-qubit demonstrator stage, but it theoretically satisfies criterion 1: photons are uniform qubits and, if technology permits, one can imagine scaling sources and detectors to very large numbers. (One encouraging note: photonics doesn’t face a physical limit like space-charge or trap stability – the limits are more about engineering complexity and loss.)

In summary, Criterion 1 (Scalability & well-characterized qubits) forces us to ask of any quantum hardware: Can we make lots of qubits, and do we understand each qubit’s behavior in detail? If not, the approach may work for a science experiment but not for a real computer. This is often where investors should apply scrutiny. For example, early NMR quantum computing experiments in the 1990s could only use a fixed small number of nuclear spins in a molecule (not scalable) and those spins weren’t isolated as true two-level qubits (violating well-characterized), so NMR is no longer seen as a viable path to scale. By contrast, today’s leading platforms (superconducting, ion, photonic, and others like silicon spin qubits or neutral atoms) all have a story for how to add more qubits and maintain consistent control. Whether those stories pan out is the central question of the quantum hardware race.

2. Ability to Initialize Qubits to a Simple Fiducial State

The second criterion demands that we can reset the quantum register to a known starting state – typically the state |00…0〉 (all qubits in “0”). Just as every classical computation starts with memory in a cleared, known state, quantum algorithms require a clean slate. If you don’t know your initial qubit state with certainty, you can’t reliably interpret the output of your computation.

In DiVincenzo’s words, the hardware must be able “to set the qubits to a defined initial state”, often called a fiducial state. Usually this means being able to cool or otherwise prepare each qubit in its ground state (|0〉 for a qubit defined as a two-level system). Why is this so important? Imagine trying to do a calculation on a computer where each memory bit might randomly start as 0 or 1 – you’d get nonsense results. The same holds in quantum: without initialization, the quantum computer is effectively trying to compute on top of leftover junk data (or thermal randomness), and any entanglement or interference will include that unknown garbage.

For non-physicists, a useful analogy is booting up a computer. When you power on a classical PC, part of the boot process is to initialize memory and registers to zero before running a program. In quantum computing, we need a “boot-up” step for qubits. Another analogy: starting a board game, you reset all pieces to their start positions. In quantum terms, each qubit should be reset to a known basis state so the “game” (algorithm) begins in a standard configuration.

Different quantum hardware platforms approach initialization in various ways:

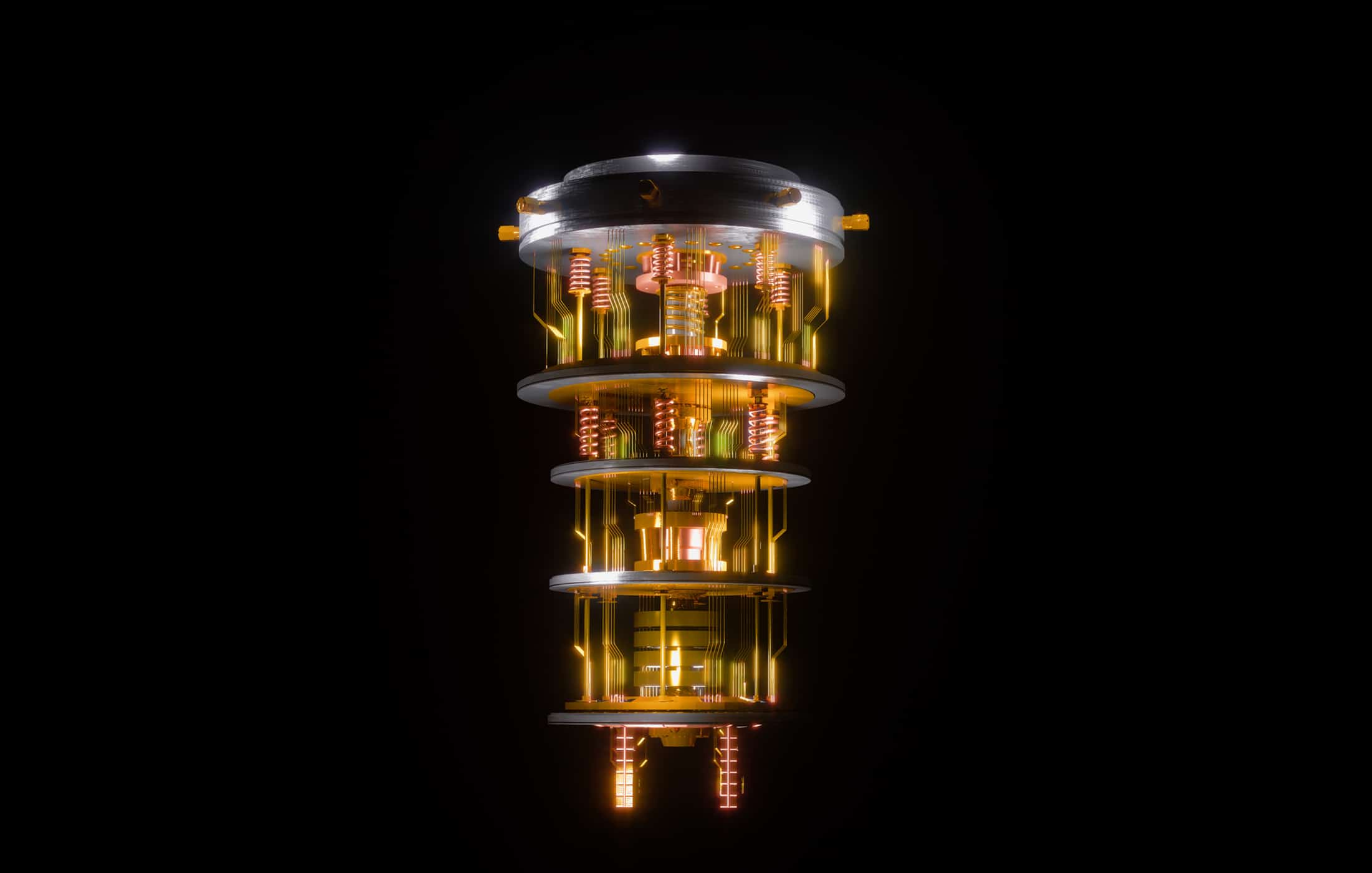

Superconducting qubits

These are often initialized by simply waiting and cooling. At the millikelvin temperatures of a dilution refrigerator, a transmon qubit will naturally relax to its ground state |0〉 with high probability. Any qubit that might be accidentally excited can be measured and reset or simply allowed to dissipate energy into the cold environment. IBM and others sometimes employ active reset schemes: measure the qubit, and if it’s in |1〉, apply a bit-flip gate to bring it to |0〉. Because T₁ relaxation times are on the order of tens of microseconds, passive thermalization is usually sufficient in superconducting systems (which effectively “freeze out” the excited state).
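The measure-and-flip reset described above can be sketched as a toy simulation. This is a minimal illustration under stated assumptions, not any vendor's reset routine: the function names and the `p_excited` and `readout_error` parameters are hypothetical, and readout misassignment is assumed to be the only imperfection (no decay during measurement, perfect bit-flip gate).

```python
import random


def active_reset(p_excited, readout_error=0.0, rng=None):
    """Simulate one measure-and-flip reset; return the final qubit state (0 or 1).

    p_excited:     assumed probability the qubit starts in |1> (hypothetical).
    readout_error: assumed probability the measurement reports the wrong bit.
    """
    if rng is None:
        rng = random.Random()
    # True state of the qubit before the reset attempt.
    state = 1 if rng.random() < p_excited else 0
    # The measurement outcome may be misreported.
    misread = rng.random() < readout_error
    outcome = state ^ 1 if misread else state
    # Conditional bit-flip: only applied when the readout says "1".
    if outcome == 1:
        state ^= 1
    return state


def residual_excitation(p_excited, readout_error, shots=100_000, seed=0):
    """Estimate the fraction of qubits left in |1> after one reset attempt."""
    rng = random.Random(seed)
    excited = sum(active_reset(p_excited, readout_error, rng) for _ in range(shots))
    return excited / shots


if __name__ == "__main__":
    print(residual_excitation(p_excited=0.1, readout_error=0.02))
```

In this idealized model the qubit ends in |1〉 only when the readout was wrong, so the residual excitation tracks the readout error rather than the initial thermal population. That is one reason readout quality matters for initialization.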

In practice, superconducting platforms satisfy criterion 2 “effortlessly” – the qubits naturally end up in |0〉 because the excited state is short-lived at cryogenic temperature. This convenient property is a byproduct of using a quantum system that’s strongly coupled to a cold bath (which, while helping initialization, is ironically the same coupling that causes decoherence – a trade-off we’ll revisit).

Trapped-ion qubits

Ions are typically initialized using laser cooling and optical pumping. Laser cooling brings the ions’ motion to the ground state, and optical pumping uses carefully tuned laser light to drive any population from the |1〉 state to |0〉. For example, with Ytterbium ions, one can apply a laser that excites the ion if and only if it’s in the “1” state, causing it to eventually decay to the “0” state (a form of optical pumping). This can prepare >99% of the ions in |0〉 reliably.

Ion qubits thus have a well-established initialization routine: a brief laser pulse sequence that leaves all qubits in |000…0〉. The criterion is comfortably met; as one might say, ions don’t mind starting from zero. The only caveat is the time overhead – optical pumping might take some microseconds per ion, which is usually fine. (Early NMR quantum computers, by contrast, could not truly initialize spins to |0…0〉; they instead created a pseudopure state, a workaround that ultimately limited scalability.)

Photonic qubits

Here, initialization is conceptually different. Since photons are typically generated on the fly, “initialization” means being able to produce a known quantum state of light on demand. If you are doing a photonic quantum computation (say via a cluster state or interferometer), you need sources that emit photons in a specific state (usually a known polarization or vacuum+single-photon state). In linear optics quantum computing, one might start with vacuum (no photon, which is a known state) or a standard single-photon in a known mode as the |1〉 and vacuum as |0〉.

This criterion translates to: can we reliably generate our photonic qubits in |0〉 states? In practice, single-photon sources are sometimes probabilistic, but there have been advances in deterministic photon emitters (like quantum dot or defect-based sources that spit out one photon when triggered).

Photonic platforms satisfy this criterion in principle – one can prepare a photon in a known polarization, for example – but efficiency is the concern. If your source only produces a photon 50% of the time, then half the time your “qubit” is missing (which is like failing initialization).
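To see why source efficiency dominates at scale, here is a small sketch of the arithmetic. It assumes each photon comes from an independent source with the same success probability; the function names are illustrative and not taken from any photonics toolkit.

```python
def all_photons_present(source_efficiency, n_photons):
    """Probability that every one of n independent sources emits its photon."""
    if not 0.0 <= source_efficiency <= 1.0:
        raise ValueError("source_efficiency must be between 0 and 1")
    return source_efficiency ** n_photons


def required_efficiency(target_probability, n_photons):
    """Per-source efficiency needed so that all n photons are present
    with at least target_probability (same independence assumption)."""
    return target_probability ** (1.0 / n_photons)


if __name__ == "__main__":
    # A 50% source is tolerable for one photon, hopeless for ten.
    for n in (1, 2, 10):
        print(n, all_photons_present(0.5, n))
    print(required_efficiency(0.9, 100))
```

Because the success probability shrinks exponentially with the number of photons, practical schemes typically rely on very high per-source efficiency or on multiplexing many heralded attempts.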

Companies like PsiQuantum are working on integrated photon sources with high reliability. We can say photonic qubits can be initialized (you just choose not to create a photon in the |1〉 rail, etc.), but doing so at scale without loss is a technical challenge. Still, this is largely an engineering issue rather than a theoretical barrier: given good hardware, photonic qubits have well-defined initial states.

In summary, Criterion 2 (Initialization) is about ensuring a low-entropy starting point. It’s not glamorous, but absolutely essential. Quantum error correction, in particular, demands a continual supply of fresh |0〉 qubits to correct errors. If initialization is slow relative to gate operations, one might even need to park qubits offline to re-initialize them while computation continues (DiVincenzo mused about a “quantum conveyor belt” for qubits in systems where reset is slow). Fortunately, most leading platforms have figured out fast ways to reset qubits. When evaluating a quantum tech, one should still check this box: for example, a novel qubit that cannot be reliably prepared in a known state would fail this criterion. So far, superconductors and ions both demonstrate >99% preparation fidelity in the lab, which is sufficient for current needs. Initialization is one area where quantum hardware has made solid progress across the board.

3. Long Decoherence Times Much Longer Than Gate Operation Times

Perhaps the most critical (and famously difficult) requirement is Criterion 3: qubits must maintain coherence long enough to perform many operations. Decoherence is the process by which a qubit loses its quantum state information to the environment – essentially, the qubit’s delicate superposition “falls apart” and becomes a classical mixture of |0〉/|1〉. The decoherence time (often denoted T₂ for phase coherence, or T₁ for energy relaxation) is the characteristic timescale over which this happens.

DiVincenzo’s criterion doesn’t demand infinite coherence, but it does demand that the coherence time be much longer than the time it takes to perform a single gate operation. In practice, “much longer” usually implies by several orders of magnitude – often quoted as at least $$10^4$$ to $$10^5$$ times longer than gate time. The intuition is straightforward: you need to be able to execute a sequence of many logic gates before the qubits forget what they’re doing. If your qubits decohere in, say, 1 microsecond, and each gate takes 0.5 microsecond, you could only do on the order of 2 gates before losing the quantum state – clearly insufficient for any complex algorithm. Longer coherence means you can string together more operations (and/or apply error correction to extend effective coherence further).
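The back-of-the-envelope ratio in this paragraph is easy to script. The helper below is a rough sketch: it simply divides coherence time by gate time, and the function name and example values are illustrative rather than measured device data.

```python
def gates_within_coherence(t_coherence_s, t_gate_s):
    """Rough count of sequential gates that fit inside one coherence time.

    Both arguments are in seconds. This ignores the fact that errors
    accumulate continuously rather than appearing all at once.
    """
    if t_coherence_s <= 0 or t_gate_s <= 0:
        raise ValueError("times must be positive")
    return t_coherence_s / t_gate_s


if __name__ == "__main__":
    # The example from the text: 1 microsecond coherence, 0.5 microsecond gate.
    print(gates_within_coherence(1e-6, 0.5e-6))
    # A hypothetical qubit with 1 ms coherence and a 100 ns gate.
    print(gates_within_coherence(1e-3, 100e-9))
```

The ratio is only a ceiling on circuit depth; usable depth is lower because every gate also adds control error.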

An analogy here is writing in disappearing ink: you want the ink to remain visible long enough to finish writing a message (multiple characters). If the ink fades (decoheres) almost immediately, you can barely write a letter. But if it lasts a while, you can write a whole essay before it vanishes – and maybe periodically retrace (error-correct) it to keep it legible indefinitely. Coherence time is our “quantum ink” longevity.

How do the major qubit technologies fare on this front?

Superconducting qubits

Superconducting qubits traditionally had short coherence times, but these have improved dramatically. Early superconducting qubits (circa 2000s) had T₂ in the nanoseconds; today’s state-of-the-art transmons boast coherence times in the tens to hundreds of microseconds. For example, a transmon might have $$T_1 \approx 100~\mu\text{s}$$ (energy relaxation) and $$T_2 \approx 20\text{-}50~\mu\text{s}$$ (phase coherence), depending on shielding and materials. Meanwhile, a single-qubit gate can be executed in ~10-20 nanoseconds, and a two-qubit gate in perhaps ~100 nanoseconds. Thus, even with, say, 20 μs of coherence and a 0.1 μs (100 ns) two-qubit gate, one can perform on the order of $$20\,\mu\text{s} / 0.1\,\mu\text{s} = 200$$ two-qubit gates in sequence before decoherence (roughly – in practice error accumulates continuously).

In many cases, superconducting qubits have a ratio on the order of $$10^3$$ to $$10^4$$ between coherence time and gate time, which does meet the criterion. However, it’s a tight race – superconducting qubits are fast (great for gates) but also leaky (prone to decoherence from electromagnetic noise).

Improving coherence is an active research area: techniques like 3D packaging, better materials (to reduce loss), and noise filtering are all employed. IBM’s and Google’s devices today have each qubit’s environment engineered to minimize loss, but still, having only microsecond-scale coherence means error correction will be needed to go much beyond a few hundred operations reliably. Achieving long coherence in superconductors has been “rather hard,” because the circuits are macroscopic and interact with many microscopic defect modes. The excited state in a transmon can decay in a few microseconds in a bad case, though the best devices last considerably longer.

The key point: superconducting platforms currently just satisfy criterion 3 in a quantitative sense, but they don’t have as generous a safety margin as ions or some other systems. This is why error rates are higher and quantum error correction is challenging for them without further improvements.

Trapped-ion qubits

Trapped-ion qubits are exemplary in terms of raw coherence. Because ion qubits are stored in internal atomic states that are well isolated from the environment, their coherence times can be extremely long – often measured in seconds or even minutes in certain configurations.

For instance, IonQ reports coherence times on the order of a few seconds for their ytterbium ion qubits. This is roughly five orders of magnitude longer than typical gate times, which for ions might be in the tens of microseconds. One reason is that ions use hyperfine states of atoms (which are like stable clock states, very immune to perturbation). In fact, an idle ion qubit is almost like an atomic clock – it can stay coherent so long that other error sources (like ambient magnetic field noise) become the limiting factor.

Thus, trapped ions easily satisfy the criterion that $$T_{\text{coherence}} \gg T_{\text{gate}}$$. This is one of their biggest strengths: an ion can undergo thousands of gate operations before decohering, allowing fairly deep circuits.

However, there’s a catch – as you add more ions in a chain, the motional modes used for multi-qubit gates can decohere faster (vibrational modes are more fragile). In very long ion chains, the effective coherence for two-qubit entangling operations might drop, as noted by research findings (e.g., motional mode decoherence increases with chain length). But with moderate-sized chains and good laser stability, ions are the gold standard for long coherence.

Photonic qubits

Photonic qubits are an interesting case. A single photon traveling through free space or a perfect fiber doesn’t decohere in the usual sense – it will maintain its quantum state (polarization, etc.) indefinitely, as long as it’s not absorbed or scattered.

In other words, photons don’t have a half-life of coherence; their primary “decoherence” mechanism is actually loss (the photon gets destroyed or leaves the system). If the photon remains in the system, it stays coherent. So, one could argue photonic qubits have effectively infinite $$T_2$$ for polarization or path degrees of freedom. This easily fulfills the spirit of Criterion 3: you can send a photon through many optical components (gates) as long as it’s not lost.

The challenge is that in real optical systems, loss is unavoidable – fibers attenuate photons over distance, optical components have finite transmission, etc. For instance, a photon sent through 10 km of fiber has a substantial chance of being absorbed along the way, which is analogous to decoherence (the information is lost with the photon). But within an optical chip or a controlled setup, photons can pass through dozens of interferometer gate operations with low loss.
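Fiber loss is usually quoted in decibels per kilometer, so the survival probability of a photon falls exponentially with distance. The sketch below assumes a default attenuation of 0.2 dB/km, a typical figure for telecom fiber near 1550 nm; other wavelengths and fiber types are lossier, and the function name is illustrative.

```python
def fiber_transmission(length_km, attenuation_db_per_km=0.2):
    """Probability that a photon survives a fiber of the given length.

    attenuation_db_per_km defaults to an assumed 0.2 dB/km (typical of
    telecom fiber near 1550 nm); pass a different value for other fibers.
    """
    if length_km < 0 or attenuation_db_per_km < 0:
        raise ValueError("length and attenuation must be non-negative")
    total_loss_db = attenuation_db_per_km * length_km
    # Convert a loss in decibels to a power (here: survival) fraction.
    return 10 ** (-total_loss_db / 10)


if __name__ == "__main__":
    for km in (1, 10, 50, 100):
        print(km, fiber_transmission(km))
```

At the assumed 0.2 dB/km, 50 km of fiber corresponds to 10 dB of loss, so only about one photon in ten arrives. On-chip components are characterized the same way, with each beam splitter or waveguide segment contributing its own small loss in dB.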

Photonic approaches like cluster-state computing also shift the burden: instead of one photon undergoing many gates, you generate entangled clusters and measure them, which resets things.

Overall, photonic qubits do satisfy the long coherence criterion in principle – their coherence is limited by optical stability and loss rather than an intrinsic short $$T_2$$. This is a big plus for quantum communication tasks especially (flying qubits can go long distances if loss is managed). However, any realistic photonic quantum computer must grapple with loss and mode-mismatch, which effectively limit how many gate operations (e.g., beam splitter interactions) a photon can undergo before being lost.

Current photonic experiments are small-scale, but if one envisions a million-qubit photonic machine, the architects (like PsiQuantum) are focusing heavily on keeping losses low and using error correction to overcome them.

In summary, Criterion 3 (Coherence vs. gate time) gets to the heart of “quantum mileage” – how far can you drive your qubits through a computation before they decohere? Trapped ions get a full tank of gas (seconds of coherence with gates taking tens of microseconds), superconductors have a smaller tank but a faster engine (microsecond coherence, nanosecond gates), and photons don’t leak gas at all but can crash if the road (fiber) ends.

Meeting this criterion is also where quantum error correction (QEC) enters: if raw coherence is not long enough, you can use redundancy and active correction to extend effective coherence. But QEC itself requires qubits that initially satisfy a minimum fidelity and coherence threshold (often cited around error rates < $$10^{-3}$$ per gate, which ties directly to having sufficient coherence per gate). Investors looking at a quantum startup should pay close attention to reported coherence times and gate speeds. For example, a superconducting qubit company might tout 100 μs $$T_1$$ and 99.9% gate fidelities – indicators that Criterion 3 is just about satisfied enough for small error-corrected logic. In contrast, if a new qubit type has, say, 1 μs coherence and 1 μs gate time, that would be a red flag (only ~1 gate before decoherence!). All serious quantum hardware efforts devote huge R&D to lengthening coherence: it directly dictates how complex a quantum circuit you can run before noise overwhelms the signal.
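The link between coherence and per-gate error can be made concrete with a crude model. The sketch below assumes a single exponential decay with time constant `t_coherence_s` and counts only the decoherence that occurs during the gate; real gate errors also include control and calibration errors. The function names are hypothetical, and the $$10^{-3}$$ default is the rough threshold figure quoted above.

```python
import math


def decoherence_error_per_gate(t_gate_s, t_coherence_s):
    """Probability of a decoherence event during one gate,
    assuming simple exponential decay (a simplification)."""
    if t_gate_s < 0 or t_coherence_s <= 0:
        raise ValueError("t_gate_s must be >= 0 and t_coherence_s > 0")
    # 1 - exp(-t/T), computed with expm1 for accuracy at small t/T.
    return -math.expm1(-t_gate_s / t_coherence_s)


def below_threshold(t_gate_s, t_coherence_s, threshold=1e-3):
    """True if the coherence-limited error alone is under the threshold."""
    return decoherence_error_per_gate(t_gate_s, t_coherence_s) < threshold


if __name__ == "__main__":
    # 100 microsecond coherence with a 100 ns gate (hypothetical device).
    print(decoherence_error_per_gate(100e-9, 100e-6))
    # The red-flag case from the text: 1 microsecond coherence, 1 microsecond gate.
    print(decoherence_error_per_gate(1e-6, 1e-6))
```

Under this model a gate must be roughly a thousand times shorter than the coherence time before decoherence alone drops below the quoted threshold, which is why coherence time and gate speed have to be read together.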

4. A Universal Set of Quantum Gates

The fourth criterion requires the system to support a universal set of quantum gates. This means we must be able to perform any arbitrary quantum algorithm by composing a finite set of available elementary operations on the qubits. In classical computing, an analogy is having a complete set of logic gates (for example, NAND gates are universal for classical logic – you can build any circuit from NANDs). For quantum, it’s known that a set of all single-qubit rotations plus at least one kind of entangling two-qubit gate (like a CNOT) is universal – in fact, many choices of two-qubit gate would do, such as controlled-Z or iSWAP, etc., combined with arbitrary single-qubit rotations. Essentially, Criterion 4 says the hardware can entangle qubits and perform arbitrary one-qubit transformations; without universality, you could only run a very limited class of algorithms.
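As a small illustration of what "single-qubit rotations plus one entangling gate" buys you, the sketch below applies a Hadamard and then a CNOT to |00〉, producing an entangled Bell state. It is a plain-Python state-vector toy, not a hardware API; the helper names and the convention that qubit 0 is the leftmost bit are choices made here.

```python
import math

S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]


def apply_single(gate, state, target, n_qubits):
    """Apply a 2x2 gate to one qubit of an n-qubit state vector.

    Qubit 0 is the leftmost (most significant) bit of the basis index.
    """
    shift = n_qubits - 1 - target
    mask = 1 << shift
    new = [0j] * len(state)
    for idx, amp in enumerate(state):
        in_bit = (idx >> shift) & 1
        for out_bit in (0, 1):
            out_idx = (idx & ~mask) | (out_bit << shift)
            new[out_idx] += gate[out_bit][in_bit] * amp
    return new


def apply_cnot(state, control, target, n_qubits):
    """Flip the target bit of every basis state whose control bit is 1."""
    c_shift = n_qubits - 1 - control
    t_mask = 1 << (n_qubits - 1 - target)
    new = [0j] * len(state)
    for idx, amp in enumerate(state):
        if (idx >> c_shift) & 1:
            new[idx ^ t_mask] += amp
        else:
            new[idx] += amp
    return new


def bell_state():
    """Prepare (|00> + |11>)/sqrt(2) from |00> with H on qubit 0, then CNOT."""
    state = [1, 0, 0, 0]  # amplitudes of |00>, |01>, |10>, |11>
    state = apply_single(HADAMARD, state, target=0, n_qubits=2)
    return apply_cnot(state, control=0, target=1, n_qubits=2)


if __name__ == "__main__":
    print(bell_state())
```

Neither gate alone can produce this state: the Hadamard only creates a superposition on one qubit, and the CNOT applied to |00〉 does nothing. It is the combination that generates entanglement.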

Another way to understand this: imagine a classical calculator that could only add but not subtract, or only perform bit shifts but not OR operations – it would be of limited use. Similarly, some quantum systems might naturally do one type of operation (say, they can naturally evolve in a certain Hamiltonian), but we need the ability to orchestrate any operation required by an algorithm, within some tolerance. In practice, universality for hardware means programmability – we can sequence different gate operations in any order to implement algorithms.

How do common platforms implement a universal gate set?

Superconducting qubits

These typically use microwave pulses for single-qubit rotations (X, Y, Z rotations on the Bloch sphere) and a tunable coupling for two-qubit entangling gates. For example, a standard gate set on transmons is {RX(θ), RZ(θ)} (arbitrary single-qubit rotations by adjusting microwave pulse amplitude and phase) plus a two-qubit CNOT or controlled-phase gate achieved by coupling two qubits via a shared resonator or a direct capacitive link.
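To make the {RX(θ), RZ(θ)} gate set concrete, the sketch below builds a Hadamard from three such rotations and checks the result up to a global phase, which has no physical effect. The matrix conventions for `rx` and `rz` are the standard textbook ones and are an assumption here; individual hardware stacks may define phases differently.

```python
import cmath
import math


def rx(theta):
    """Rotation about the X axis of the Bloch sphere."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -1j * s], [-1j * s, c]]


def rz(theta):
    """Rotation about the Z axis of the Bloch sphere."""
    return [[cmath.exp(-1j * theta / 2), 0], [0, cmath.exp(1j * theta / 2)]]


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def equal_up_to_global_phase(a, b, tol=1e-9):
    """True if a == phase * b for some complex number of unit magnitude."""
    for i in range(2):
        for j in range(2):
            if abs(b[i][j]) > tol:
                phase = a[i][j] / b[i][j]
                if abs(abs(phase) - 1) > tol:
                    return False
                return all(abs(a[r][c] - phase * b[r][c]) <= tol
                           for r in range(2) for c in range(2))
    return False


def hadamard_from_rotations():
    """RZ(pi/2) * RX(pi/2) * RZ(pi/2), which equals -i times the Hadamard."""
    quarter = math.pi / 2
    return matmul(rz(quarter), matmul(rx(quarter), rz(quarter)))


if __name__ == "__main__":
    s = 1 / math.sqrt(2)
    print(equal_up_to_global_phase(hadamard_from_rotations(), [[s, s], [s, -s]]))
```

The same pattern (a Z rotation, an X rotation, another Z rotation) reaches any single-qubit gate, which is why two rotation axes suffice.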

Superconducting chips have demonstrated all the required gates: one-qubit gates with high fidelity (>99.9% in some cases) and two-qubit gates improving into the 99% fidelity range in the best systems. Google’s Sycamore processor, for instance, uses a tunable coupler to enact an iSWAP-like entangling gate and had ~99.4% average two-qubit fidelity in the 2019 quantum supremacy experiment. IBM uses fixed-frequency qubits with a fixed coupling and drives the control qubit at the target qubit’s frequency (the cross-resonance gate, often in an echoed form) to enact a CNOT, also hitting around 99% fidelity now.

The variety of gate schemes is actually a strength of superconducting tech – researchers have explored many two-qubit gate designs (couplers, resonator-mediated, flux-tuned, etc.). Universality is well-established; the challenge is doing gates accurately at scale. But as far as Criterion 4 is concerned, superconducting platforms check this box – they can perform any quantum circuit in principle. The speed of gates is a bonus here: sub-100ns entangling operations mean you can do many of them within coherence time (subject to error rates).

Trapped-ion qubits

Ions also naturally allow universal control. Single-qubit gates on ions are done with laser pulses (e.g., a resonant laser gives a “Rabi rotation” on the two-level qubit, equivalent to an X or Y rotation by some angle). Two-qubit gates are typically done via shared motional modes: for instance, the Mølmer–Sørensen gate uses a bichromatic laser field to entangle two ions by transiently exciting their motion, effectively realizing an XX rotation between the qubits (equivalent to a controlled-NOT up to single-qubit rotations). By combining such two-qubit gates with single-qubit rotations, one can construct any quantum algorithm. Ion traps have demonstrated a broad array of gates (CNOT, Toffoli with more qubits, etc.) with high fidelity.
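The entangling action of an XX-type gate can be checked numerically. The sketch below assumes the convention XX(θ) = exp(−iθ X⊗X) = cos θ · I − i sin θ · X⊗X, under which θ = π/4 is maximally entangling; other references scale the angle differently. It models only the ideal unitary, not the laser-driven motional dynamics, and the function names are illustrative.

```python
import math


def xx_rotation(state, theta):
    """Apply exp(-i * theta * X(x)X) to a two-qubit state vector.

    state holds the amplitudes of |00>, |01>, |10>, |11>. X(x)X flips both
    bits, which maps basis index i to i XOR 3.
    """
    c, s = math.cos(theta), math.sin(theta)
    return [c * state[i] - 1j * s * state[i ^ 3] for i in range(4)]


def concurrence(state):
    """Entanglement measure for a normalized pure two-qubit state:
    0 for product states, 1 for maximally entangled states."""
    a, b, c, d = state
    return 2 * abs(a * d - b * c)


if __name__ == "__main__":
    start = [1, 0, 0, 0]  # |00>
    for theta in (0.0, math.pi / 8, math.pi / 4):
        print(theta, concurrence(xx_rotation(start, theta)))
```

Starting from |00〉, the θ = π/4 gate yields (|00〉 − i|11〉)/√2, a Bell state up to a relative phase.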

In 2016, researchers showed two-ion gate fidelity of 99.9% in a controlled-phase gate, rivaling the best superconducting gates. IonQ and others routinely achieve two-qubit gate fidelities above 97-98% on their devices, and 99.9% for single-qubit gates, which is enough for small error-correcting codes.

Universality is not a problem – indeed, one advantage of trapped ions is all-to-all connectivity (each ion can in principle interact with any other via the shared motion), which means you can perform two-qubit gates between arbitrary pairs without needing to swap qubits around. This can drastically reduce the gate depth needed for certain algorithms. The flip side is gates are slower (tens of μs) and as noted, too many ions can complicate the gating (frequency crowding of motional modes).

But conceptually, Criterion 4 is met: trapped ions give a fully programmable, universal gate set. You can prepare any entangled state among the ions by some sequence of laser pulses.

Photonic systems

Universal quantum computing with photons often relies on the KLM paradigm (Knill-Laflamme-Milburn) or more recently, measurement-based quantum computing (MBQC) using cluster states. Linear optics by itself (beam splitters and phase shifters) with adaptive measurements can implement any quantum computation, albeit probabilistically if using only linear elements. However, if you have the ability to generate massive entangled resource states (cluster states) and perform fast measurements, you can achieve universality in a one-way quantum computer architecture.

Another approach is incorporating non-linear interactions (like an optical non-linearity or using matter mediators) to get deterministic two-qubit gates between photons. As of now, photonic platforms lean on MBQC: create a large entangled cluster of photons and then perform single-photon measurements in certain bases to enact logic gates on the remaining entanglement. This is universal in theory. The criterion is satisfied in principle, but executing a full universal set is challenging physically. For example, a two-photon entangling gate can be done by interfering two photons on a beam splitter and using additional ancilla photons and detectors (this was the KLM scheme). It works with a certain probability and heralds success with a detector click. PsiQuantum’s plan is to have a million+ photonic qubits with fusion gates that build cluster states, thereby achieving a universal gate set with error correction overhead.

In simpler terms: yes, you can do a CNOT with photons (there have been small-scale demonstrations), and certainly any single-photon unitary (just a wave plate or phase shifter). The ingredients for universality are there; the issue is making them reliable and scalable. It’s analogous to having a complete toolkit but with tools that sometimes break – you have to apply error correction and repetition to effectively get deterministic gates.
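The "tools that sometimes break" picture can be quantified with a simple model of independent, heralded attempts. The sketch below assumes each attempt uses fresh photons and succeeds with a fixed probability; the value 1/16 is the figure usually quoted for the basic KLM controlled-sign gate and is used here only as an illustrative assumption. Function names are hypothetical.

```python
import math


def expected_attempts(p_success: float) -> float:
    """Mean number of independent attempts until the first heralded success."""
    if not 0 < p_success <= 1:
        raise ValueError("p_success must be in (0, 1]")
    return 1.0 / p_success


def prob_at_least_one_success(p_success: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent tries succeeds."""
    return 1.0 - (1.0 - p_success) ** attempts


def attempts_for_confidence(p_success: float, target: float) -> int:
    """Smallest number of parallel attempts giving success probability >= target."""
    if not (0 < p_success < 1 and 0 < target < 1):
        raise ValueError("p_success and target must be strictly between 0 and 1")
    return math.ceil(math.log(1 - target) / math.log(1 - p_success))


P_ASSUMED = 1 / 16  # illustrative per-attempt success probability
n_needed = attempts_for_confidence(P_ASSUMED, 0.99)
print(expected_attempts(P_ASSUMED), n_needed,
      prob_at_least_one_success(P_ASSUMED, n_needed))
```

The point of the calculation is the overhead: near-deterministic operation from low-probability heralded gates costs many parallel attempts, which is why photonic architectures lean so heavily on multiplexing and large resource states.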

Nonetheless, Criterion 4 doesn’t demand efficiency, just existence of a universal gate set. Photonic qubits pass that bar. Researchers have shown that five optical components (beam splitters and phase shifters) are enough to do any single-qubit unitary, and adding partial SWAP gates or measurement-induced gates covers two-qubit operations. A photonic chip with reconfigurable interferometers (like those used in BosonSampling experiments) is already capable of arbitrary unitary transformations on a set of modes – which equates to a multi-qubit gate set when interpreted appropriately.
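As an illustration of how beam splitters and phase shifters compose into a tunable single-qubit operation, the sketch below models a Mach-Zehnder interferometer acting on two optical modes (a dual-rail qubit). The beam splitter convention and function names are assumptions chosen for this example; it is a building block, not the specific five-component construction mentioned above.

```python
import numpy as np

# Symmetric 50:50 beam splitter acting on two modes (one common convention).
BS = np.array([[1, 1j], [1j, 1]], dtype=complex) / np.sqrt(2)


def phase_shifter(phi: float) -> np.ndarray:
    """Phase shift phi applied to mode 0 only."""
    return np.diag([np.exp(1j * phi), 1.0])


def mzi(theta: float, phi: float = 0.0) -> np.ndarray:
    """External phase phi, beam splitter, internal phase theta, beam splitter."""
    return BS @ phase_shifter(theta) @ BS @ phase_shifter(phi)


def output_probabilities(theta: float, phi: float = 0.0) -> np.ndarray:
    """Detection probabilities in modes 0 and 1 for a photon entering mode 0."""
    return np.abs(mzi(theta, phi)[:, 0]) ** 2


for theta in (0.0, np.pi / 2, np.pi):
    print(theta, np.round(output_probabilities(theta), 3))
```

Sweeping the internal phase moves the photon continuously from one output mode to the other (probabilities sin²(θ/2) and cos²(θ/2) in this convention), which is the tunable "rotation" that reconfigurable photonic chips repeat across a mesh of modes.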

In fulfilling Criterion 4 (Universal gates), the key metric to watch is gate fidelity – how error-prone is each operation? A system might be universal in theory, but if the gates are noisy, it limits practical computation. This is where we often hear about “quantum volume” or other composite benchmarks. IBM introduced Quantum Volume (QV) as a single number combining qubit count, connectivity, and fidelity of a system – essentially reflecting how large a random circuit can be successfully run. QV is reported as 2^n for the largest n-qubit, depth-n random circuit that passes, so achieving a higher QV requires both more qubits and a universal gate set with low error rates. Honeywell (now Quantinuum) set an early record with an ion trap that reached QV=64 with just 6 qubits (the maximum possible for that qubit count), thanks to very high fidelity gates. IBM later surpassed that figure by increasing both qubit count and fidelity.
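The way Quantum Volume folds width and depth into one number can be sketched as follows. The rule used here is the commonly cited definition log2(QV) = max over widths n of min(n, d(n)), where d(n) is the largest depth at which width-n random circuits still pass the benchmark. The pass table is hypothetical data invented for the example, and the function name is illustrative.

```python
def log2_quantum_volume(max_passing_depth: dict) -> int:
    """Return log2(QV) = max over widths n of min(n, d(n)).

    `max_passing_depth` maps circuit width n (number of qubits used) to the
    largest circuit depth d(n) that still passed the benchmark at that width.
    """
    return max(
        (min(width, depth) for width, depth in max_passing_depth.items()),
        default=0,
    )


# Hypothetical benchmark results: wider circuits tolerate less depth.
hypothetical_results = {2: 9, 3: 8, 4: 6, 5: 5, 6: 4, 7: 3}

log2_qv = log2_quantum_volume(hypothetical_results)
print(f"log2(QV) = {log2_qv}, QV = {2 ** log2_qv}")
```

In this made-up table the device has 7 usable qubits but only sustains square circuits up to 5 by 5, so its QV would be 32. By construction, log2(QV) can never exceed the widest circuit tested, which is why QV rewards fidelity and qubit count together.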

From an investment perspective, a platform that has demonstrated a universal gate set with some level of fidelity is at least viable; one that can’t perform a two-qubit entangling gate at all is a non-starter for general-purpose quantum computing. (Notably, D-Wave’s early quantum annealers bypassed gate logic entirely – they were not gate-model universal machines, so they didn’t meet this criterion. That’s fine for their specialized use, but it’s one reason the community distinguishes annealers from universal quantum computers.)

In short, all leading contenders do provide a universal gate set. The devil is in the details of how easy it is to calibrate and maintain those gates across many qubits. As complexity grows, issues like cross-talk (one gate inadvertently affecting neighboring qubits) arise. For example, superconducting systems experience frequency crowding and unwanted couplings when many qubits are connected. Ion traps likewise see gating slowdowns with long chains. But these are engineering problems; fundamentally, the criterion of universality has been achieved in multiple ways. A quantum computer is only as useful as the variety of algorithms it can run – so universality underpins the claim that these machines could eventually do anything a quantum computer should do, given perfect fidelity and enough resources.

5. Qubit-Specific Measurement Capability

Finally, the fifth core criterion: the ability to measure each qubit individually (qubit-specific readout) without disturbing the others. In a quantum computation, after applying all the gates, we need to extract an answer by measuring (observing) the qubits. Moreover, many algorithms and especially error correction procedures require measuring some qubits mid-computation and not collapsing the state of the rest. So this criterion insists that the hardware support targeted, single-qubit measurements at (or by) the end of the algorithm, with high fidelity and ideally in a quantum non-demolition manner (meaning measuring qubit A doesn’t randomly flip qubit B, etc.).

Imagine a memory chip where you can only read out all bits at once, or reading one bit would randomize the others – it would be nearly useless. We need the quantum equivalent of addressing a specific memory cell. This is straightforward classically because bits are well isolated; in quantum devices, measuring one qubit can be tricky because qubits might be coupled or because measurement apparatus can introduce cross-talk.

Let’s see how each platform does readout:

Superconducting qubits

The standard approach is dispersive readout using microwave resonators. Each qubit is coupled to a resonator with a unique frequency; by probing the resonator, one can infer the qubit’s state (the resonator frequency shifts slightly depending on whether the qubit is in 0 or 1). In practice, each transmon qubit on a chip has its own readout resonator, and several resonators can share a feedline and amplifier chain. This allows simultaneous, individual readout of many qubits by frequency multiplexing – sending a comb of microwave probe tones and reading the reflected signals. Superconducting qubit readout fidelity has improved with quantum-limited amplifiers (like Josephson parametric amplifiers) to roughly 95–99% for single-shot measurements, though readout sometimes still causes slight qubit disturbance.

Importantly, measuring qubit A means dumping photons into resonator A and not into others, so it doesn’t directly measure qubit B. If two qubits are strongly entangled, measuring one collapses the joint state, but that is a fundamental quantum effect, not hardware crosstalk. Good engineering ensures each qubit’s readout is as isolated as possible. IBM and Google have demonstrated simultaneous multi-qubit readouts. The fidelity is not perfect, but it is enough for small algorithms and some error correction routines. Thus, superconductors do satisfy criterion 5 – you can choose a specific qubit and measure its state at the end of a run, with the rest (ideally) remaining in whatever state they were (which might be an entangled state that collapses partially, but that’s expected).

One challenge is speed vs. fidelity: faster measurements can suffer more error. Fast readout of roughly 50–100 nanoseconds per measurement with a few percent error has been demonstrated. Groups are working to bring the error down (e.g., by driving the resonator with more photons, up to just below the point where the drive would saturate the readout or disrupt the qubit state).

Another issue: many quantum error correction schemes need quantum non-demolition (QND) readout, meaning the measurement leaves the qubit in the state it reported rather than exciting or mixing it. Superconducting readout is fairly QND (it usually leaves the qubit in the |0〉 or |1〉 state that was measured, although some spurious excitation can occur if one is not careful). Overall, this criterion is well handled in superconducting designs by giving each qubit a dedicated “sensor” resonator.

Trapped-ion qubits

Readout is typically done by laser-induced fluorescence. Each ion can be measured by shining a laser that causes, say, the |1〉 state to fluoresce (scatter photons) while the |0〉 state remains dark (this is achieved by choosing an optical transition that one state participates in and the other doesn’t). Then a photodetector or camera collects the emitted photons – bright means the qubit was |1〉, dark means |0〉. This method is inherently single-qubit-resolved if you can spatially distinguish the ions. In a linear ion trap, ions sit in a line, separated by a few micrometers. With a good imaging system, you can focus on each ion or at least tell which ion is bright or dark on a camera sensor.

Ion measurements are high fidelity (>99%) but relatively slow (one often collects photons over tens of microseconds to a few milliseconds to be sure of the state). The slowness isn’t a huge problem if measuring at the end, but it can be if intermediate measurements are needed for feedback within the algorithm’s runtime.

In simple terms, if you want to measure ion #5 out of 10, you shine the detection laser only on ion 5 (via a focused beam, or by selectively shifting that ion’s resonance). The other ions are either not resonant with that laser or are shielded, so they remain in their states. Thus, trapped ions do allow qubit-specific measurement – experimentally, it’s routine to read out the entire register at the end by imaging the chain (each ion’s state is known from whether it fluoresced or not).

The criterion demands the ability to measure one qubit without collapsing its neighbors; ions can approximate that, though in practice one ion’s fluorescence might scatter a bit of stray light onto the others’ detectors (a source of error). Researchers mitigate this by using auxiliary ions of a different species: one species serves as data qubits, another as readout ancillas that can be measured with a different-color laser that doesn’t affect the data ions. For example, one can shuttle an ion to an interaction zone, entangle it with an ancilla, measure the ancilla, and leave the others untouched, although that is an elaborate approach. As the PennyLane text notes, using two species of ions can improve measurement isolation. It complicates gates between species, but it’s a known strategy for high-fidelity readout.
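The bright/dark discrimination described above can be sketched as a photon-counting threshold test. The model below assumes Poisson-distributed counts with a mean of 20 photons for a bright ion and 0.5 for a dark one during the detection window; these means are illustrative assumptions, not values from any specific experiment, and the function names are hypothetical.

```python
import math


def poisson_cdf(k: int, mean: float) -> float:
    """P(N <= k) for N ~ Poisson(mean)."""
    if k < 0:
        return 0.0
    term = math.exp(-mean)      # P(N = 0)
    total = term
    for n in range(1, k + 1):
        term *= mean / n        # P(N = n) from P(N = n - 1)
        total += term
    return min(total, 1.0)


def readout_error(threshold: int, bright_mean: float, dark_mean: float) -> float:
    """Average error when counts > threshold are called 'bright' (|1>)."""
    dark_called_bright = 1.0 - poisson_cdf(threshold, dark_mean)
    bright_called_dark = poisson_cdf(threshold, bright_mean)
    return 0.5 * (dark_called_bright + bright_called_dark)


def best_threshold(bright_mean: float, dark_mean: float, max_counts: int = 100) -> int:
    """Threshold that minimizes the average error under this simple model."""
    return min(range(max_counts),
               key=lambda t: readout_error(t, bright_mean, dark_mean))


BRIGHT_MEAN_ASSUMED = 20.0   # illustrative mean counts for a fluorescing ion
DARK_MEAN_ASSUMED = 0.5      # illustrative background/dark counts

threshold = best_threshold(BRIGHT_MEAN_ASSUMED, DARK_MEAN_ASSUMED)
error = readout_error(threshold, BRIGHT_MEAN_ASSUMED, DARK_MEAN_ASSUMED)
print(threshold, error)
```

The model also shows the speed trade-off: a shorter detection window lowers the bright mean, the two count distributions overlap more, and the achievable error rises.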

Photonic qubits

The measurement of photonic qubits is arguably the easiest of all – you just detect the photon with a single-photon detector. In photonic quantum computing, measurement is often a built-in part of the process (as in measurement-based computation, where measuring certain photons drives the computation forward). You can choose which photon/qubit to measure at which stage; since photons don’t hang around forever, typically you measure them when you’re done using them. There’s no concept of disturbing “neighboring” photonic qubits because photons don’t sit together in a register; they either have separate detectors or are distinguishable by some property (like spatial mode or time slot). Thus, photonic platforms inherently have qubit-specific measurement – you point a detector at the mode carrying the photon you want to measure, and that’s it. Photon detectors, especially superconducting nanowire detectors, can have efficiencies > 90% and give a digital click if a photon is present (which constitutes a measurement in the {|0〉, |1〉} basis of “no photon” vs “photon”). If encoding is polarization, you might use a polarizing beam splitter to direct |H〉 to one detector and |V〉 to another, performing a basis measurement. The only complication is detectors sometimes have dark counts (false clicks) or might not distinguish number of photons (but if your qubit is defined as presence/absence of a single photon, that’s fine). Overall, photonic readout meets and exceeds this criterion – you can measure any qubit at any time by just capturing the photon. In fact, you must measure photonic qubits to do anything with them, since they don’t sit in memory. The ease of distributing and measuring photons is one reason they’re favored in quantum communication.
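For the polarization case, a minimal sketch of the measurement statistics looks like this. It applies the Born rule to a polarization qubit behind a polarizing beam splitter and scales by a detector efficiency; the 0.9 efficiency is an assumed value, dark counts are ignored, and the function name is hypothetical.

```python
import numpy as np


def pbs_click_probabilities(state, efficiency: float = 0.9):
    """Return (P(H detector clicks), P(V detector clicks), P(no click)).

    `state` holds the amplitudes (amp_H, amp_V) of a single-photon
    polarization qubit; it is normalized before use. Dark counts are ignored.
    """
    amp = np.asarray(state, dtype=complex)
    amp = amp / np.linalg.norm(amp)
    p_h, p_v = np.abs(amp) ** 2
    return float(efficiency * p_h), float(efficiency * p_v), float(1.0 - efficiency)


# Diagonal polarization: equal superposition of |H> and |V>.
p_h, p_v, p_none = pbs_click_probabilities([1, 1])
print(p_h, p_v, p_none)
```

A missing click is an erasure that the experimenter knows about, which is generally easier to handle than a silent wrong answer.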

In meeting Criterion 5 (Qubit-specific measurement), the emphasis is on independence and accuracy. Each qubit’s measurement should accurately project that qubit to |0〉 or |1〉 (with high fidelity) and not mess up the quantum state of any other qubit. For error-corrected quantum computing, measurement fidelity is critical – measuring the wrong result or disturbing other qubits can introduce errors into the error correction itself. For example, in the surface code (a popular QEC scheme), one needs to repeatedly measure syndrome qubits. If those measurements fail or inadvertently flip nearby data qubits, the error correction will not work. Thus, massive engineering effort has gone into improving readout. IBM reported achieving 1% error rates in 50 ns measurements on their superconducting qubits by the 2020s, which is quite impressive. Trapped ion companies use EMCCD cameras or multi-channel photomultiplier arrays to read out multiple ions simultaneously with >99% accuracy in a few milliseconds. The trade-off is speed: ions are slower to read, which could be a limiting factor for algorithms requiring adaptive feedback within runtime (though for final output it’s fine).

From an investor/CISO perspective, measurement capability influences how accessible and reliable the computational results are. A quantum computer isn’t useful if you can’t trust its outputs or if extracting the output destroys the computation. The good news is all main approaches have proven they can measure their qubits with high confidence. It’s often the least problematic criterion in the sense that even early experiments could often measure something (the challenging parts were keeping coherence and doing gates, not detecting states). For instance, nuclear spins in NMR were hard to initialize but easy to read as bulk signals; photons are easy to detect but hard to interact. In building a full system, one must also consider readout scaling: can you scale the readout apparatus to many qubits? Superconductors need many microwave lines or frequency-multiplexed readouts (up to 3-4 qubits per readout line currently). Ions need an optical system that can see many ions (some new ion trap designs integrate photonic waveguides to collect fluorescence from each ion more efficiently). So measurement infrastructure is a part of scalability. But conceptually, Criterion 5 is well in hand for the leading platforms.

The Two Additional Criteria for Quantum Communication

In addition to the five criteria above (which suffice for a standalone quantum computer operating in isolation), DiVincenzo added two more criteria to address quantum communication and networking. These come into play if you want to connect quantum computers together or interface a quantum computer with quantum communication channels (for tasks like quantum key distribution, quantum internet, or distributed quantum computing). The two extra criteria are:

- Ability to interconvert stationary and flying qubits.

- Ability to faithfully transmit flying qubits between specified locations.

In simple terms, these deal with sending quantum information from one place to another. A stationary qubit is one that sits in a quantum memory or processor (e.g., an ion in a trap or a superconducting qubit on a chip). A flying qubit is one that can travel – typically a photon through optical fiber or free space. Criterion 6 is about having an interface between the two: can you transfer a qubit’s state onto a photon (and vice versa)? Criterion 7 is about actually sending that flying qubit over distance without losing the quantum information (i.e., low-loss, low-decoherence transmission).

Why do these matter? For a quantum network or distributed system, you need to communicate qubits akin to how classical computers send bits over the internet. For example, quantum key distribution (QKD) protocols involve sending qubits (photons) to remote parties. Or a modular quantum computer might consist of several smaller processors connected by photonic links – requiring conversion of internal qubit states to photons that carry entanglement to another module.

Let’s break them down with examples:

6. Interconversion of Stationary and Flying Qubits

This is essentially a quantum transducer requirement. You have a qubit in your processor – say an electron spin or a superconducting circuit state – and you want to get that quantum state onto a photon that can fly away (or, conversely, take an incoming photon’s state and store it in your qubit).

Different platforms approach this differently:

Superconducting qubits

Superconducting qubits typically operate with microwave photons (with GHz frequencies) which don’t travel far (they get absorbed in most materials pretty quickly and don’t go through optical fiber). To send quantum information from a superconducting processor, one approach is to convert microwave qubit excitations to optical photons (telecom wavelength) which can travel long distances in fiber. This is an active research area: developing microwave-to-optical quantum transducers using devices like electro-optic modulators, optomechanical resonators, or rare-earth doped crystals. Some prototypes have achieved small-scale entanglement transfer from a superconducting qubit to an optical photon, but it’s still at the research stage. So currently, a superconducting quantum computer is not easily networked – it’s like a mainframe with no internet connection, so to speak. However, companies and labs are working on it, as networking will eventually be needed. One partial solution: quantum microwave links over short distances – connecting two chips in the same cryostat via superconducting coax or waveguides. But for long haul, optical is needed.

As of now, I’d say superconducting platforms do not yet fully satisfy criterion 6; they need an auxiliary system (probably an optical interface) to do so. There’s progress (e.g., demonstration of entangled microwave-optical photon conversion), but no commercial device can do it yet. This is an area investors might probe if a startup claims to have a modular superconducting system: How will you connect modules? If their answer is classical links (just send measured bits), then they lose the quantum advantage of distributed entanglement. So criterion 6 is a forward-looking must for them.

Trapped ions

Trapped ions are actually quite adept here: ions can emit photons that are entangled with their internal state. For instance, an excited ion can decay and emit a photon whose polarization is entangled with whether the ion fell to state |0〉 or |1〉. Experiments have used this to link ion traps in different locations by entangling an ion in trap A with a photon, an ion in trap B with another photon, and interfering those photons to entangle the ions (quantum teleportation or entanglement swapping protocols). This is the basis of ion-trap quantum networking: each ion can be a stationary qubit, and when needed, you excite it to generate a “flying” qubit (photon) that travels through fiber to another trap. IonQ and others have demonstrated remote entanglement of ions separated by meters of fiber, and projects exist to extend that to much longer distances using photons. So ions can interconvert – the stationary qubit (ion) couples to flying qubits (photons) via its optical transitions. It’s not 100% efficient, but it works well enough for proofs of concept.

Thus, trapped ions meet criterion 6; indeed, they are one of the leading contenders for distributed quantum computing because of this ability.
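The photon-mediated linking of two traps can be illustrated with a small state-vector calculation. The sketch assumes each ion starts in an ideal Bell state with its own photon and that the photonic measurement heralds one particular Bell state (the antisymmetric one); real experiments have loss and imperfect interference, which are ignored here. Variable names are illustrative.

```python
import numpy as np

ket0 = np.array([1, 0], dtype=complex)
ket1 = np.array([0, 1], dtype=complex)

# Ideal ion-photon pair: (|0>_ion |0>_photon + |1>_ion |1>_photon) / sqrt(2)
phi_plus = (np.kron(ket0, ket0) + np.kron(ket1, ket1)) / np.sqrt(2)

# Joint state with tensor indices (ion A, photon 1, ion B, photon 2).
joint = np.kron(phi_plus, phi_plus).reshape(2, 2, 2, 2)

# Heralded two-photon outcome: (|01> - |10>) / sqrt(2), indices (photon 1, photon 2).
psi_minus = np.array([[0, 1], [-1, 0]], dtype=complex) / np.sqrt(2)

# Project the two photons onto that outcome; what remains lives on the ions.
unnormalized = np.einsum("apbq,pq->ab", joint, psi_minus.conj())
herald_probability = float(np.sum(np.abs(unnormalized) ** 2))
ions_after = unnormalized / np.sqrt(herald_probability)

print(herald_probability)
print(np.round(ions_after, 3))
```

After the heralded outcome, the two ions, which never interacted directly, share the same antisymmetric Bell state that was detected on the photons. In this idealized model that outcome occurs a quarter of the time, so the protocol is repeated until it heralds success.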

Photonic qubits

Photonic qubits by definition are flying qubits. So if your main qubits are photons, you trivially satisfy half of this: you don’t need to convert to a flying particle because they already are one. The question is, do photonic systems need to convert flying qubits to a stationary memory at some point? For purely photonic computing, maybe not – they could process and transmit all in photons. However, for storage or buffering of quantum information (like a quantum memory), one might want to map a photonic qubit into a stationary medium (e.g., an atomic ensemble or a quantum dot) and then later retrieve it. Quantum repeaters, for instance, use stationary qubits (like atomic ensembles or NV centers) to store entanglement between photons arriving, then swap it out. But if we restrict to the context of “a quantum computer connected to a network,” a photonic quantum computer inherently can send qubits out as photons (no conversion needed). If it needed to receive, it would essentially measure the photon or feed it into its optical circuit (which is again just an optical process).

So photonic platforms kind of sidestep criterion 6 – they achieve it trivially (everything is flying), though one could argue they lack a stationary qubit at all, so if anything they’d need the opposite (storing photonic qubits in memory). For the sake of DiVincenzo’s framework, we can say photonic systems satisfy criterion 6 in the sense that they operate in the flying qubit domain inherently, and there are known techniques to temporarily convert photonic qubits into stationary form (e.g., using small loop delays or optical memories) if needed.

Other platforms: It’s worth noting that some qubit types have built-in photonic links. For example, NV centers in diamond (a solid-state qubit approach) can emit photons entangled with their electron spin states, similar to ions, and have been used to entangle remote NV centers over fiber. These satisfy criterion 6 by design (NV centers and quantum-dot spins can be seen as intermediaries that naturally talk to photons). Neutral atom arrays (Rydberg atom qubits) can also potentially emit photons or be coupled to optical cavities to facilitate photon emission/absorption. Essentially, any platform eyeing quantum networking tends to incorporate an optical interface for qubit state transfer to photons.

7. Faithful Transmission of Flying Qubits

This criterion addresses the actual channel that the flying qubits travel through. It requires that we can send flying qubits from point A to point B with high fidelity, i.e., without losing the quantum information (or at least with known, correctable loss). In practice, flying qubits are almost always photons, so this criterion boils down to: can we send photons over long distances (through fiber or free space) without absorption or decohering their state?

This is basically the domain of quantum communication research. Achieving long-distance quantum transmission has its challenges:

- Fiber optic transmission: Telecom fibers have loss of about 0.2 dB/km in the 1550 nm wavelength band, which means roughly 60% of the light is lost every 20 km (4 dB) and only about 1% survives 100 km (20 dB). So beyond, say, 100 km, almost all photons are gone (unless you have quantum repeaters, which themselves require storage and entanglement swapping). Dispersion and other effects could in theory also affect the state (though polarization or time-bin qubit states are robust over fiber with some compensation). So, without repeaters, current fiber links can carry quantum signals on the order of 50–100 km before losses make them impractical (some QKD systems use 50 km links and still get detectable signals). Progress is being made with quantum repeaters – intermediate nodes that catch and re-transmit entanglement using entanglement swapping, theoretically allowing arbitrarily long chains. But repeaters themselves are essentially small quantum computers (so we circle back to needing the other criteria at those nodes!). As of now, no large-scale quantum repeater network exists, but demonstrations of entanglement over 100 km fiber with trusted nodes have been done.

- Free-space (satellite) transmission: Photons can be sent through air or space; the main issues are scattering and beam divergence. But if you go to space (satellite links), you can transmit between satellites or from satellite to ground with less absorption (most of the path is above the atmosphere). The Chinese satellite Micius in 2017 famously demonstrated quantum entanglement distribution and QKD between distant ground stations by beaming photons from a satellite, achieving entanglement over 1200 km. This showed that faithful transmission is possible over very long distances, provided you can tolerate the losses, for example by sending many entangled pairs so that some survive. The fidelity of the surviving pairs was high; it’s just that many photons were lost. Criterion 7 is about faithfulness, which implies we care that the state doesn’t get depolarized or decohered. Loss is an issue, but in principle if you know a photon was lost, you simply have no outcome (an erasure). The worst case is partial decoherence (like an unpredictable rotation of polarization in the channel). Fibers can have polarization mode dispersion, etc., but these effects can be compensated with calibration.
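The fiber-loss numbers follow from the decibel definition, and a short calculation makes the distance scaling explicit. The sketch below uses the 0.2 dB/km figure quoted above and assumes attenuation is the only effect on the photon; the function name is illustrative.

```python
def fiber_transmission(length_km: float, loss_db_per_km: float = 0.2) -> float:
    """Fraction of photons surviving `length_km` of fiber.

    Total loss in dB is loss_db_per_km * length_km, and a loss of L dB
    corresponds to a surviving fraction of 10 ** (-L / 10).
    """
    if length_km < 0 or loss_db_per_km < 0:
        raise ValueError("length and loss must be non-negative")
    return 10 ** (-loss_db_per_km * length_km / 10)


for km in (20, 50, 100, 1200):
    print(f"{km:5d} km: surviving fraction {fiber_transmission(km):.3g}")
```

At 20 km about 40% of the photons survive, at 100 km about 1%, and a direct 1200 km fiber would mean 240 dB of loss, a surviving fraction of 10^-24. That exponential scaling is why long links need either repeaters or a satellite path where most of the route is in vacuum.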

From a platform perspective:

Superconducting qubits

If superconducting platforms solve conversion to optical photons, the same story applies: the photons can then be sent over fiber or free space. Sending microwave photons directly through air or waveguides is only good for short distances (within the same cryostat, or perhaps chip-to-chip). So for long-haul links, they must rely on optical photon transmission, which reduces to the cases above.

Trapped ions

Trapped ions often emit photons in the UV/visible spectrum, which don’t travel far in fiber (high loss). So one must convert the wavelength (e.g., use a quantum frequency conversion device to translate a 369 nm photon from Yb+ into a 1550 nm photon for fiber). This has been demonstrated with some efficiency. So ions plus such converters can do moderate distances in fiber. The faithfulness then depends on how good the conversion is and on fiber attenuation. Experiments entangling ions in separate labs via optical fiber over 10–100 m have worked; extending to many kilometers will likely require integrating with telecom fibers and repeaters. Not trivial, but no fundamental roadblock beyond building those devices.

Photonic systems

Photonic systems naturally are built to transmit photons, so they embody criterion 7, but with the caveat that optical infrastructure needs to be very low-noise. Techniques like using quantum repeaters or entanglement swapping help extend distance. In essence, criterion 7 may be satisfied in a limited sense today (e.g., up to tens of kilometers in fiber, or satellite-to-ground links) with existing tech. Achieving it at intercontinental distances will require repeater networks or quantum satellites. But for a quantum computer investor, the question is: can this approach hook up to a network when needed? Photons at telecom wavelengths are the best flying qubits we have – so any platform that can interface with telecom photons inherits the best possible channel.

It’s worth pointing out an analogy: Classical networking vs quantum networking. In classical, you amplify signals to go long distances. In quantum, you cannot clone or amplify unknown qubit states (no-cloning theorem), so you can’t just boost a photon’s signal mid-way without measurably collapsing it. That’s why quantum repeaters must use entanglement swapping and purification (which is like error correction for channels). This criterion implicitly assumes we either have low enough loss or have quantum repeaters in place to achieve “faithful” transmission at the desired distance. Right now, a quantum investor might look at companies working on quantum networking gear – for instance, startups making quantum memory devices or QKD systems. Those directly address criterion 7 by building the infrastructure.

In summary, Criteria 6 and 7 (quantum communication) extend the quantum computing paradigm into a networked context. They become very relevant when you consider scaling beyond a single machine. A large-scale fault-tolerant quantum computer might actually be a network of smaller modules entangled together – meeting these criteria would be necessary for that modular approach. Likewise, if one worries about distributed quantum threats (like an attacker storing quantum entanglement in one place and doing part of a computation in another), these criteria determine viability. Today, these two criteria are partially met by some platforms (ions, NV centers, photonics) and are an area of intense R&D for others (superconducting). For pure quantum computing needs (like breaking RSA in one machine), you don’t need quantum communication. But for quantum cryptography and scaling up via modular systems, you absolutely do.

From an investor perspective, if a startup claims to have a quantum networking solution or plans to connect processors, ask: Do they have a way to convert qubits to photons and back? What distances can their photons travel? A company focusing on quantum repeaters would be tackling criteria 6 and 7 head-on, by making devices that store and forward entanglement with minimal decoherence.

Implications for Investment Readiness and Quantum Tech Feasibility

For CISOs concerned about when quantum computers might threaten current cryptography, and for investors deciding which quantum ventures to fund, the DiVincenzo criteria are a valuable framework. They help separate mere lab demos from architectures that can eventually become full-stack, scalable quantum computers. Here’s how the criteria inform an evaluation of maturity and feasibility:

- If a technology fails any one of the first five criteria, it’s not a viable quantum computing approach – at least not without major breakthroughs. For example, if a qubit design can’t be scaled beyond a handful (Criterion 1) or can’t be initialized or measured reliably (Criteria 2 or 5), it might make for interesting physics but not a computer. Investors should be wary of proposals that hand-wave away these basics. Historically, proposals like NMR quantum computing hit a wall because they violated Criterion 2 (no pure-state initialization) and Criterion 1 (not scalable to large qubit counts). Similarly, some solid-state approaches with exotic qubits might struggle with Criterion 3 if coherence is too short. When doing due diligence on a quantum hardware startup, mapping their specs to DiVincenzo’s list is a quick litmus test:

- How many qubits can they realistically scale to, given their approach (ion traps, for instance, might say “we can do 50 easily, and then use modular tricks for more” – one can then judge that plan)?

- What’s their qubit coherence vs. gate time? (A rule of thumb: if coherence isn’t at least 100–1000 times gate time, they will need heavy error correction from the get-go, which might be impractical in near-term.)

- Do they have high-fidelity gates and readout demonstrated? A quantum computer is only as good as its worst component – a chain is as strong as its weakest link. So if their two-qubit gate fidelity is lagging far behind others, that’s a risk.

- Criteria as roadmap milestones: Investors often ask companies for their technical roadmaps. The DiVincenzo criteria can serve as milestones on those roadmaps. For instance, a superconducting qubit startup might lay out a timeline: “By year X, demonstrate >50 μs coherence (Criterion 3 progress), by year Y, achieve 99.9% two-qubit fidelity (Criterion 4), by year Z, integrate an optical interface for networking (Criteria 6/7).” If a company can articulate how they will meet each criterion as they scale (and by when), it indicates a deep understanding of the challenges. If they ignore or gloss over one of them (“oh, we’ll figure out measurement later” or “scaling isn’t an issue, we just need more money to copy-paste qubits”), that’s a red flag. It’s akin to a car maker ignoring the need for a steering system because they focus only on engine horsepower.

- Understanding trade-offs: The criteria also highlight that different quantum tech choices involve trade-offs. An investor seeing flashy headlines (e.g., “Startup X achieves 1,000 qubits” vs. “Startup Y achieves 99.99% fidelity”) needs context: these claims emphasize different criteria. A balanced quantum computer needs all criteria satisfied, so extreme strength in one area doesn’t guarantee success if another area lags. For example, D-Wave’s early annealers had thousands of qubits (scalability!) but weren’t universal gate-model quantum computers (failing Criterion 4) and had limited coherence (failing Criterion 3 for gate-model computing). Thus, they were suited to specific optimization problems but posed no general threat to encryption. By checking each criterion, one can gauge where a platform’s weaknesses lie. Superconducting systems today have scaled to hundreds of qubits (Criterion 1: check) but are approaching the limits of their dilution refrigerators and control wiring; further scaling will require innovations in multiplexing or 3D integration. Ions have superb coherence and fidelity (Criteria 3 and 4: check) but must show they can scale beyond ~100 qubits with a modular architecture (Criterion 1 pending at large scale). Photonics might in the next few years demonstrate a small error-corrected logical qubit using thousands of physical photonic qubits. If so, that would show the platform can address Criteria 1, 3, and 4 in a very different way (lots of qubits, with error correction to handle shortfalls in gate reliability).

- Timeline to impact (CISO perspective): A CISO preparing for post-quantum cryptography is essentially guessing how long until a quantum computer can factor RSA-2048 or break elliptic-curve crypto. That likely needs thousands of logical (error-corrected) qubits, which in turn means millions of physical qubits at current error rates. DiVincenzo’s criteria can help estimate this timeline: we’d need a platform that can scale qubits (Criterion 1) into the millions, sustain coherence across perhaps billions of gate operations (Criteria 3 and 4, with heavy QEC), and so on. No platform is there yet, but we see steady progress:

- Superconducting qubits: If coherence and fidelity improve incrementally, one could imagine reaching the required scale by late this decade or next for breaking certain key sizes, but it’s far from guaranteed. Many criteria must hold up when going from 100 qubits to 1,000,000 qubits (think: fridge engineering, fabrication yield, crosstalk elimination; these are extensions of Criteria 1 and 3).

- Trapped ions: They might achieve logical qubits with fewer physical qubits due to higher fidelities. Companies like Quantinuum have already demonstrated logical qubit memory with 99.4% sustaining fidelity using 11 physical ions for a Bacon-Shor code. But linking many traps via photons will be needed to reach crypto-breaking scale, meaning Criteria 6 and 7 become relevant. Will ion traps network effectively? Possibly in small numbers at first.

So a CISO might conclude: no imminent threat yet, but if the trend lines for several criteria (such as qubit count and error rates) all keep improving, the risk within 5 to 10 years becomes tangible. The criteria provide a structured way to ask hardware vendors the right questions: “What’s your two-qubit fidelity? How many qubits can you run without significant crosstalk? Can you keep qubits coherent long enough for the circuit depth that Shor’s algorithm needs for RSA-2048 (which is huge)?” The answers indicate how close we are. If a technology has no visible path to meeting one of the criteria at the required scale, that could delay or negate the threat to current cryptography.
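To see how “thousands of logical qubits” can turn into “millions of physical qubits”, here is a rough back-of-the-envelope sketch. It assumes a surface-code-style overhead, which this article does not specify: a logical error rate that scales as prefactor × (p / p_th)^((d+1)/2) for code distance d, and roughly 2d² physical qubits per logical qubit. The prefactor, threshold, error rates, and qubit counts are illustrative assumptions, not measured values, and all function names are hypothetical.

```python
def surface_code_distance(p_phys: float, target_logical_error: float,
                          p_threshold: float = 1e-2, prefactor: float = 0.03) -> int:
    """Smallest odd distance d whose heuristic logical error meets the target.

    Heuristic (assumed): p_logical ~ prefactor * (p_phys / p_threshold) ** ((d + 1) / 2)
    """
    if not 0 < p_phys < p_threshold:
        raise ValueError("physical error rate must be positive and below threshold")
    if target_logical_error <= 0:
        raise ValueError("target logical error must be positive")
    d = 3
    while prefactor * (p_phys / p_threshold) ** ((d + 1) // 2) > target_logical_error:
        d += 2  # surface-code distances are conventionally odd
    return d


def physical_qubits_per_logical(d: int) -> int:
    """Approximate data-plus-ancilla count for one distance-d patch (assumed ~2*d^2)."""
    return 2 * d * d


def estimate_physical_qubits(n_logical: int, p_phys: float,
                             target_logical_error: float) -> int:
    """Total physical qubits for n_logical logical qubits under the assumptions above."""
    d = surface_code_distance(p_phys, target_logical_error)
    return n_logical * physical_qubits_per_logical(d)


# Illustrative inputs: 4,000 logical qubits, 0.1% physical error rate,
# and a 1e-12 per-operation logical error target.
total = estimate_physical_qubits(n_logical=4000, p_phys=1e-3, target_logical_error=1e-12)
print(f"estimated physical qubits: {total:,}")
```

Under these assumed inputs the estimate lands in the low millions. Lowering the physical error rate shrinks the required distance, which is why higher-fidelity platforms might need fewer physical qubits per logical qubit.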

- Investment focus beyond qubit count: As an investor or tech leader, it’s tempting to focus on a single metric (like qubit count, much as one might focus only on clock speed in classical CPUs). The DiVincenzo criteria remind us that quantum computing is a multi-dimensional challenge. You might use a balanced scorecard approach: rate a given technology on each of the seven criteria on a scale of, say, 0 to 5. For example:

- Superconductors: Scalability=4 (they’ve shown medium scaling), Initialization=5 (easy), Decoherence=3 (okay but needs improvement), Gates=4 (universal, but errors could improve), Measurement=5 (solid), Conversion=1 (not yet), Transmission=1 (not yet).

- Ions: Scalability=2 (not scaled beyond tens yet), Initialization=5, Decoherence=5, Gates=5, Measurement=4 (high fidelity but slower), Conversion=4 (yes, via photons), Transmission=3 (photons yes, but distance limited so far).

- Photonics: Scalability=3 (chip integration potential high, but not proven at scale), Initialization=4 (sources exist but efficiency can improve), Decoherence=5 (photons don’t decohere; the issue is loss), Gates=2 (probabilistic and reliant on measurement, with many overheads), Measurement=5, Conversion=N/A (effectively 5, since photonic qubits are already flying qubits), Transmission=5 (it’s their natural strength).

Such a scorecard, though qualitative, could help identify which approach is best suited for different applications or timelines. It also suggests a diversified investment strategy: because each approach excels in some criteria and lags in others, investing across multiple quantum technologies hedges bets. For instance, a near-term application (like quantum networking for secure comms) might benefit from photonic or ion systems (strong on Criteria 6 and 7), whereas long-term computing might be contested among superconductors, spin qubits, or topological qubits (if they materialize), where Criteria 1 to 5 matter most.
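The scorecard described above is easy to put into a small data structure and query. The sketch below uses the example scores from the text; the helper names are hypothetical, the unweighted sums are a simplification chosen for this sketch, and the photonics Conversion score is entered as 5 even though the text lists it as not applicable.

```python
CRITERIA = [
    "Scalability", "Initialization", "Decoherence", "Gates",
    "Measurement", "Conversion", "Transmission",
]

# Scores taken from the example in the text (0 to 5, one per criterion, in order).
# Photonics "Conversion" is listed as N/A in the text, which treats it as a 5
# in practice; it is entered as 5 here.
SCORECARD = {
    "Superconducting": [4, 5, 3, 4, 5, 1, 1],
    "Trapped ions":    [2, 5, 5, 5, 4, 4, 3],
    "Photonics":       [3, 4, 5, 2, 5, 5, 5],
}


def subtotal(scores, first, last):
    """Sum the scores for criteria numbered first..last (1-based, inclusive)."""
    return sum(scores[first - 1:last])


def weakest(scores):
    """Return the names of the lowest-scoring criteria."""
    low = min(scores)
    return [name for name, score in zip(CRITERIA, scores) if score == low]


for platform, scores in SCORECARD.items():
    computing = subtotal(scores, 1, 5)   # Criteria 1-5: computation
    networking = subtotal(scores, 6, 7)  # Criteria 6-7: communication
    print(f"{platform}: computing={computing}/25, "
          f"networking={networking}/10, weakest={weakest(scores)}")
```

Splitting the total into a computing subtotal (Criteria 1 to 5) and a networking subtotal (Criteria 6 and 7) mirrors the distinction drawn in the text between near-term networking applications and long-term computing. A real assessment would likely weight the criteria rather than sum them equally.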

In conclusion, the DiVincenzo criteria provide a timeless checklist for the dream of a working quantum computer. They were forged in an era when it wasn’t clear any of this was achievable; now, after two decades, we’ve seen each criterion addressed in isolation by various experiments. The next grand challenge, and the focus of many startups and research programs, is to satisfy all criteria simultaneously and at scale. This means integrating solutions. For example, a large-scale superconducting system will eventually need a quantum interconnect (perhaps linking multiple chips, invoking Criteria 6 and 7), and an ion trap network will need engineering to hold many ions stable while interfacing with photonic links.

For investors, using these criteria to vet a business plan is crucial: does the company acknowledge, and have a plan for, each of these requirements? A startup might have excellent qubit coherence (Criterion 3) via some new physics, but if its scheme cannot be scaled, read out, or error-corrected easily, it may not win out. Conversely, a well-rounded approach that systematically improves on all fronts (such as IBM’s integrated approach or IonQ’s careful incremental improvements) is more likely to yield a quantum advantage and, eventually, a return on investment.

For CISOs and strategists, the criteria are a lens for watching the field’s progress. When a major announcement is made (say, a 1,000-qubit prototype), one should ask: what about fidelity and coherence? Or if someone demonstrates a high-fidelity logical qubit: how many physical qubits did that use, and is it scalable? Only when all criteria are reasonably met in a single platform will we truly be in the era of practical quantum computing. That convergence will signal that the technology is maturing from lab demos to an engineering discipline, at which point the implications (both positive, in terms of computing breakthroughs, and concerning, in terms of cryptographic vulnerabilities) will materialize rapidly.

In summary, DiVincenzo’s criteria remain the North Star for quantum computing development. They encapsulate the hard problems that every quantum hardware effort must solve.
