IBM’s Roadmap to Large-Scale Fault-Tolerant Quantum Computing (FTQC) by 2029 – News & Analysis

Introduction

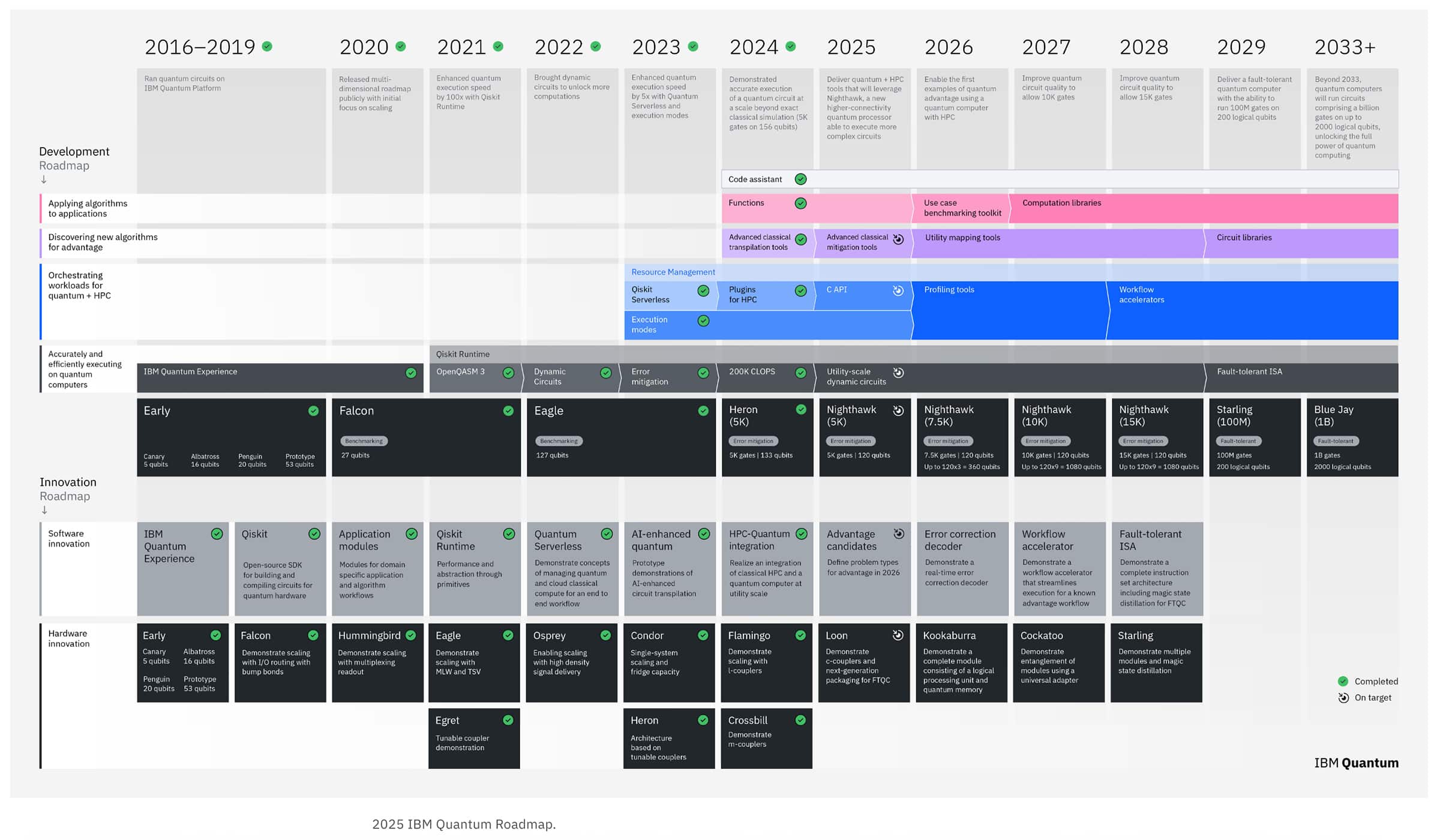

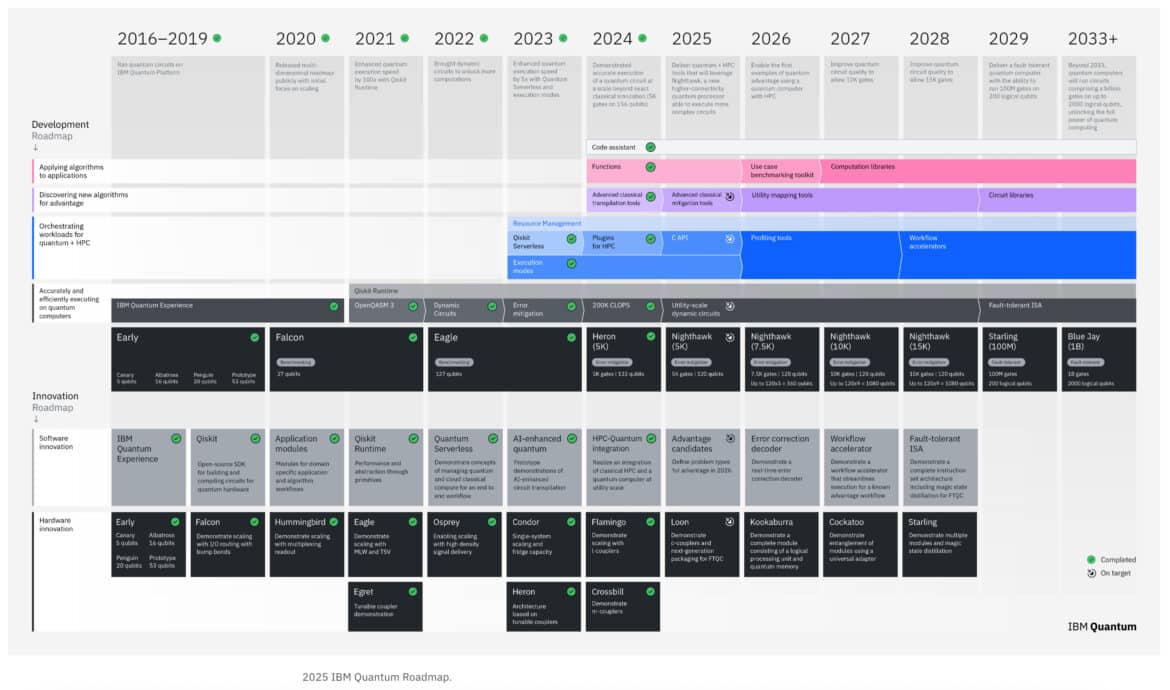

On June 10, 2025, IBM made a landmark announcement outlining a clear path to build the world’s first large-scale, fault-tolerant quantum computer by 2029. Codenamed IBM Quantum “Starling,” this planned system will leverage a new scalable architecture to achieve on the order of 200 logical (error-corrected) qubits capable of executing 100 million quantum gates in a single computation. IBM’s quantum leaders described this as “cracking the code to quantum error correction” – a breakthrough that turns the long-held dream of useful quantum computing from fragile theory into an engineering reality.

IBM used the occasion of its quantum computing roadmap update to declare that it now has “the most viable path to realize fault-tolerant quantum computing” and is confident it will deliver a useful, large-scale quantum computer by 2029. The centerpiece of this plan is IBM Quantum Starling, a new processor and system architecture that IBM says will be constructed at its Poughkeepsie, NY facility – a site steeped in IBM computing history. Starling is slated to feature about 200 logical qubits (quantum bits protected by error correction) spread across a modular multi-chip system, rather than a single huge chip. According to IBM, Starling will be capable of running quantum circuits with 100 million quantum gate operations on those logical qubits. For context, that is orders of magnitude beyond what today’s noisy intermediate-scale quantum (NISQ) processors can reliably do. IBM emphasizes that achieving this will mark the first practical, error-corrected quantum computer – a machine able to tackle real-world problems beyond the reach of classical supercomputers, thanks to its scale and reliability.

From Monolithic to Modular Quantum Chips

A core theme of IBM’s announcement is the transition from today’s “fragile, monolithic” chip designs toward modular, scalable, error-corrected systems. Up to now, IBM (and most industry players) built quantum processors on single chips with qubits laid out in a planar array (IBM’s 127-qubit Eagle and 433-qubit Osprey chips are examples). These monolithic chips are limited in size and are not error-corrected – more qubits tend to introduce more noise. IBM’s new approach with Starling is modular quantum hardware: multiple smaller chips or modules will be interconnected via quantum links, allowing qubits in different modules to interact as if on one chip. IBM previewed this modular design with its IBM Quantum System Two infrastructure and experiments like the “Flamingo” coupler that demonstrated microwave links between chips. By distributing qubits across replaceable modules joined by these quantum links, IBM can scale to much larger qubit counts than a single chip can support. Crucially, this modularity is paired with long-range entanglement – qubits on different chips can be entangled through couplers, overcoming the short-range connectivity limitations of a 2D chip lattice. IBM’s 2025 roadmap calls for a stepwise implementation of this modular architecture: IBM Quantum “Loon” (expected in 2025) will test the new inter-chip couplers and other components, followed by Kookaburra (2026) to combine error-corrected memory with logic operations, and Cockatoo (2027) to demonstrate multiple modules entangled via a “universal quantum adapter”. All of these lead up to Starling as the first full-scale fault-tolerant system in 2028–2029. In short, IBM is moving from building bigger single chips to building better systems of chips – a modular quantum compute unit that can be expanded piece by piece.

“Cracking” Quantum Error Correction with LDPC Codes

Perhaps the most significant technical breakthrough underpinning IBM’s plan is its quantum error correction (QEC) scheme. Rather than the well-known “surface codes” used by others (which arrange qubits in a 2D grid with local redundancy), IBM is betting on quantum low-density parity-check (LDPC) codes – specifically a family of codes IBM developed called “bicycle codes.” In 2024, IBM researchers published a Nature paper on a bivariate bicycle (BB) code that demonstrated a 10× reduction in qubit overhead compared to surface codes. In simple terms, QEC works by encoding one “logical” qubit of information into many physical qubits, so that if some of the physical qubits get corrupted by noise, the logical information can still be recovered. Surface codes typically need on the order of 1,000 physical qubits to encode 1 logical qubit at an error rate suitable for large algorithms. IBM’s new LDPC-based code is far more resource-efficient: one instance, the [[144,12,12]] code, encodes 12 logical qubits in 144 data qubits and uses another 144 check qubits, for 288 physical qubits in total, achieving the same error suppression as the surface code but with an order of magnitude fewer qubits. This is a game-changer for scalability – it means far fewer physical qubits are required to achieve a given computing capability. IBM’s Vice President of Quantum, Dr. Jay Gambetta, boldly stated, “We’ve cracked the code to quantum error correction”, describing the new architecture as “an order of magnitude or more more efficient” than surface-code-based approaches. By combining these LDPC codes with the modular hardware (which provides the long-range connectivity the codes require), IBM’s “bicycle architecture” can create logical qubits that are robust against errors without impractical overhead. The bottom line: IBM’s Starling will use error-corrected logical qubits from day one, not just raw physical qubits. IBM believes this development clears the last big scientific hurdle and that nothing fundamentally unknown remains – it’s now a matter of engineering scale and integrating the system.
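To make the overhead comparison concrete, here is a minimal sketch of the arithmetic behind the "order of magnitude" claim. It assumes the surface-code baseline is a rotated surface code at the same distance (d = 12), which needs d² data qubits plus d² − 1 measurement qubits per logical qubit. That baseline is an assumption chosen for illustration, not necessarily the exact comparison IBM used, and the function names are hypothetical.

```python
# Rough qubit-overhead comparison for 12 logical qubits at code distance 12.
# Assumption: the surface-code baseline is a rotated surface code patch,
# one patch per logical qubit, at the same distance as the BB code.

def bb_code_total_qubits(n_data: int) -> int:
    """Bivariate bicycle code: n data qubits plus n check qubits."""
    return 2 * n_data

def rotated_surface_code_qubits(distance: int) -> int:
    """One rotated surface code patch: d*d data + (d*d - 1) measurement qubits."""
    return 2 * distance * distance - 1

# [[n, k, d]] = [[144, 12, 12]]: 144 data qubits, 12 logical qubits, distance 12.
n, k, d = 144, 12, 12

bb_total = bb_code_total_qubits(n)                   # 288
surface_total = k * rotated_surface_code_qubits(d)   # 12 patches of 287 qubits
ratio = surface_total / bb_total

print(f"BB code:      {bb_total} physical qubits for {k} logical qubits")
print(f"Surface code: {surface_total} physical qubits for {k} logical qubits")
print(f"Overhead ratio: {ratio:.1f}x")
```

Under these assumptions the surface code needs 3,444 physical qubits against 288 for the BB code, roughly a 12× difference, which is in line with the "10×" figure quoted above.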

Key Highlights of IBM’s FTQC Announcement:

- 200 Logical Qubits by 2029: IBM’s updated roadmap targets IBM Quantum Starling by 2029, a fault-tolerant quantum computer with ~200 logical qubits (protected by quantum error correction) running on an estimated ~20,000 physical qubits. This system is expected to execute circuits with 100 million quantum gate operations on those logical qubits, a performance level unimaginable on today’s devices. IBM projects Starling to be built at its new quantum data center in Poughkeepsie, and to be “the world’s first large-scale, fault-tolerant quantum computer.”

- Modular “Bicycle” Architecture: Starling inaugurates a new modular architecture that IBM calls the bicycle architecture. Instead of one giant chip, Starling will link multiple quantum processor chips through high-fidelity couplers, effectively forming one large, distributed processor. This modular approach, combined with long-range entanglement links, meets the connectivity needs of IBM’s LDPC error correction code (which requires linking distant qubits in the code’s parity-check graph). It also means the machine can be scaled by adding more modules, akin to a multi-core supercomputer design, overcoming the size limits of a single die. IBM had already prototyped pieces of this with IBM Quantum System Two and research chips, but Starling will be the first full implementation in a replaceable module design.

- Quantum LDPC Error Correction (vs. Surface Codes): IBM is pivoting from the traditional surface code (used by competitors like Google) to quantum LDPC codes, which dramatically reduce overhead. In IBM’s 2024 experiments, a quantum LDPC code achieved the same error suppression as a surface code using 10× fewer qubits. “This architecture doesn’t require anything we don’t know how to build. It requires fewer qubits, so it’s easier to do,” explained Dr. Gambetta, underscoring the efficiency gain. Fewer qubits per logical qubit also mean fewer operations and easier error decoding – overall, a more hardware-efficient path to scale. IBM’s team has developed fast FPGA/ASIC-friendly decoders to correct errors in real-time (detailed in a second paper released on arXiv). By “cracking the code” of QEC with LDPC, IBM claims to have solved the scientific puzzle of building a large fault-tolerant quantum machine.

- Toward a “Useful” Quantum Computer: IBM’s leaders emphasized that Starling is being designed as a useful quantum computer – one that can solve practical, impactful problems, not just run abstract demos. Use cases highlighted include chemistry and materials science simulations, complex optimization problems, and solving scientific equations (like certain PDEs) that are intractable for classical HPC. IBM also implicitly acknowledges cryptanalysis as a frontier application – noting that the industry’s quest for a “cryptographically-relevant quantum computer” (one capable of breaking modern encryption) is a major driver behind these efforts. By achieving a fault-tolerant architecture, Starling would be capable of running deep algorithms like Shor’s factoring or Grover’s search that require long circuit depths. “These computers that people dreamed about – I feel very, very confident we can build them now,” said Gambetta, expressing that after years of research, the project has moved from pure science into engineering execution. IBM is already planning “Blue Jay”, a successor system by 2033 with 10× the scale (on the order of 2,000 logical qubits, or ~100,000 physical) to further expand what problems can be tackled.
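The 100-million-gate target implies a very small tolerable error rate per logical operation. The sketch below estimates that budget under a deliberately simple assumption that is not from IBM: each logical gate fails independently with the same probability p, so a circuit of N gates succeeds with probability (1 − p)^N. The function names and the 50% success target are illustrative choices.

```python
import math

GATES = 100_000_000  # Starling's stated circuit size: 100 million gates

def success_probability(error_per_gate: float, gates: int) -> float:
    """P(no logical error) for independent, identical gate errors: (1 - p)^N."""
    return math.exp(gates * math.log1p(-error_per_gate))

def max_error_per_gate(target_success: float, gates: int) -> float:
    """Largest per-gate error p that still gives (1 - p)^N >= target_success."""
    return -math.expm1(math.log(target_success) / gates)

# Error budget if we want at least a 50% chance of a clean run (assumed target).
budget = max_error_per_gate(0.5, GATES)
print(f"Max logical error per gate for 50% success: {budget:.2e}")

# Success probability at a few illustrative logical error rates.
for p in (1e-6, 1e-8, 1e-9):
    print(f"p = {p:.0e}: success probability = {success_probability(p, GATES):.4f}")
```

With these assumptions the per-gate logical error rate has to sit below roughly 7 × 10⁻⁹ for an even chance of an error-free run, which illustrates why circuits of this depth are out of reach without error correction.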

Overall, IBM’s June 2025 news marks a pivot point in quantum computing. The company has publicly committed to a deadline – a 200-logical-qubit fault-tolerant quantum computer by 2029 – and backed it up with a detailed roadmap of intermediate milestones and a stack of research results to justify their confidence. They are moving beyond incremental qubit count increases toward a full stack redesign: new codes, new chips, new interconnects, new cryogenic infrastructure, and co-designed software (IBM’s updated Qiskit Runtime and error mitigation tools were also mentioned as part of enabling this scale). This cohesive effort has led analysts to note that IBM appears to have “solved the scientific obstacles to error correction” and now holds “the only realistic path” toward building such a machine on the announced timeline. In the next section, we’ll analyze what this breakthrough means for the wider industry and, critically, for cybersecurity experts who worry about quantum threats to encryption.

Analysis: Implications for Cybersecurity and the Quantum Race

A Quantum Leap for Cryptography Timelines

IBM’s fault-tolerance roadmap should ring alarm bells (or at least wake-up calls) for cybersecurity professionals. A large-scale, error-corrected quantum computer has long been synonymous with the ability to run Shor’s algorithm and break public-key cryptography like RSA and ECC. That capability was often assumed to be “a few decades away,” but IBM’s 2025 announcement, alongside similar goals from others, suggests the early 2030s as the new horizon for a cryptography-breaking quantum machine. In fact, just weeks before IBM’s news, researchers from Google Quantum AI published findings that RSA-2048 could be cracked by a quantum computer with fewer than 1 million noisy qubits running for less than a week – potentially by 2030, given the accelerated improvements in algorithms and error correction. This is a much shorter timeline than previously thought (their estimate uses 20× fewer qubits than earlier projections), compressing the expected arrival of “Q-Day” (the day quantum computers can break current crypto) into the next 5–7 years. Likewise, Gartner analysts now predict that “quantum computing will weaken asymmetric cryptography by 2029,” and NIST has urged organizations to migrate off RSA/ECC by 2030 in preparation. In this context, IBM’s plan to have a 200-logical-qubit machine by 2029 indicates that a cryptographically relevant quantum computer (perhaps a few thousand logical qubits capable of breaking 2048-bit RSA) could indeed materialize in the early 2030s. The implication for security leaders is clear: the quantum threat to classical encryption is no longer a distant hypothetical – it has a target date and a concrete R&D roadmap behind it. Every development that brings fault-tolerant quantum computing closer is effectively pulling Q-Day nearer on the calendar.

LDPC Codes vs. Surface Codes – Why It Matters

One of the less obvious but crucial implications of IBM’s approach is the dramatic reduction in physical qubits needed per logical qubit thanks to quantum LDPC codes. This is not just a technical detail; it directly affects how soon a quantum computer can break encryption. If breaking RSA-2048 is estimated to require, say, on the order of thousands of logical qubits with a certain error rate, a surface-code-based machine might need on the order of millions of physical qubits to support that. IBM’s LDPC-based design could potentially achieve the same with only hundreds of thousands of physical qubits, because it encodes information more efficiently (10× or more). In other words, IBM’s “bicycle” QEC code can reach a given logical capacity with far fewer resources – which accelerates the timeline for reaching cryptographically relevant scale. This partially explains why IBM is aiming for useful fault tolerance by 2029 with ~20,000 physical qubits (yielding 200 logical), whereas conventional wisdom a few years ago might have assumed you’d need >1 million physical qubits to get even 100 logical qubits. IBM’s gambit on LDPC codes is essentially trading code complexity for qubit count savings. However, it’s worth noting that IBM’s approach requires more sophisticated connectivity (long-range links) and fast classical decoding – challenges they appear to be addressing with their modular hardware and custom decoders. If successful, IBM’s method could become a template for the industry: many experts have begun to recognize that surface codes, while simpler, impose a punishing overhead that may make timelines longer. By showing a working LDPC-based design (IBM already demonstrated an error-corrected “quantum memory” holding 12 logical qubits in 2024) and now planning a full logical QPU, IBM is narrowing the gap to practical quantum computing. For cybersecurity, this means the barrier to breaking encryption is lower than we thought – fewer qubits and fewer years may be needed. It’s a reminder that progress in quantum error correction can be just as impactful as progress in raw qubit count.
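The scaling argument above can be sketched with the article's own round numbers: about 1,000 physical qubits per logical qubit for surface codes, and about 100 per logical qubit for IBM's design (20,000 physical for 200 logical on Starling). The 2,000-logical-qubit machine is a hypothetical stand-in for "thousands of logical qubits", and the estimate ignores extra costs such as magic-state factories and routing, so treat it as a back-of-the-envelope comparison only.

```python
# Back-of-the-envelope physical-qubit totals for a given logical-qubit target.
# Per-logical overheads are the round figures quoted in this article.
SURFACE_PHYSICAL_PER_LOGICAL = 1_000   # "on the order of 1,000" for surface codes
LDPC_PHYSICAL_PER_LOGICAL = 100        # Starling: 20,000 physical / 200 logical

def physical_qubits(logical_qubits: int, physical_per_logical: int) -> int:
    """Total physical qubits, ignoring factories, routing, and spare qubits."""
    return logical_qubits * physical_per_logical

# 200 = Starling's target; 2,000 = hypothetical "thousands of logical qubits".
for logical in (200, 2_000):
    surface = physical_qubits(logical, SURFACE_PHYSICAL_PER_LOGICAL)
    ldpc = physical_qubits(logical, LDPC_PHYSICAL_PER_LOGICAL)
    print(f"{logical:>5} logical qubits: surface ~{surface:,} vs LDPC ~{ldpc:,} "
          f"physical ({surface // ldpc}x fewer)")
```

For the hypothetical 2,000-logical-qubit machine this gives about 2 million physical qubits with surface codes against about 200,000 with the LDPC overhead, matching the "millions versus hundreds of thousands" contrast in the text.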

IBM vs. The Competition – Who Gets to Fault Tolerance First?

IBM’s 2029 target puts it in direct competition with other quantum technology leaders, all racing toward a large-scale error-corrected machine. Here’s how IBM’s timeline stacks up against a few key players:

Google Quantum AI (Superconducting Qubits)

Google announced back in 2021 an aim to build a “useful, error-corrected quantum computer” within the decade (i.e., by 2029). Google has been pursuing the surface-code approach and has demonstrated logical qubits with small code distances. In early 2023, Google reported a milestone of reducing error rates by increasing code size (a step toward the logical “break-even” point). However, Google’s strategy likely requires a million or more physical qubits in a vast 2D array to reach cryptographically relevant scales. Google’s current hardware (Sycamore processors) is ~70–100 qubits in size, and the company has a long road to scale up. While Google’s timeline is similar to IBM’s (2029 goal), IBM’s explicit plan for 200 logical qubits with modular scaling and LDPC codes potentially gives IBM an edge in efficiency. It’s notable that Google’s own researchers have started exploring novel encoding and error-correction tricks (e.g., “layered” surface codes and magic-state factories) to cut down qubit needs. The race between IBM and Google might come down to engineering execution: IBM’s integrated approach (designing the full stack and manufacturing in-house) versus Google’s research-driven approach with academic collaborations. As of mid-2025, IBM has set a very concrete hardware roadmap, which arguably makes it a front-runner – even Google’s team will surely be studying IBM’s papers to adopt any advantages they can.

PsiQuantum (Photonic Qubits)

Startup PsiQuantum has long been extremely ambitious in its claims – it famously set out a goal to build a fault-tolerant photonic quantum computer with 1 million physical qubits by 2027, using silicon photonics and optical interconnects. Their approach is outlined in their Blueprint. While that timeline proved optimistic, PsiQuantum recently secured nearly $1 billion in funding to build a “utility-scale” photonic quantum computer in Brisbane, Australia, with a target of one million physical qubits by 2029 as well. Their approach is also modular: thousands of small photonic chips networked together with fiber optics, all operating at a relatively warm 4 K temperature (photons don’t require ultra-deep cryogenics). By 2027, PsiQuantum hopes to have a partially operational system, and by 2029, a fully error-corrected million-qubit machine that could deliver on commercial use cases. If they succeed, that could mean hundreds of logical qubits (since photonic error correction codes also have overhead, though details are sparse). It’s a very different technology stack from IBM’s (photons vs. superconducting circuits), and PsiQuantum’s progress is less public – they haven’t announced logical qubit demonstrations yet. IBM’s 200 logical qubits in 2029 might be rivaled by PsiQuantum’s claim of a full fault-tolerant system by then, but many in the industry see PsiQuantum’s timeline as aggressive. The key contrast: PsiQuantum is aiming straight for a million physical qubits (to get enough logical qubits for useful tasks) in one big leap, whereas IBM is incrementally building up to tens of thousands of physical qubits with a clear intermediate milestone at 200 logical. It remains to be seen which path will hit the target first, but if IBM meets its goals, it could very well beat PsiQuantum to delivering a working “useful” quantum computer.

Quantinuum (Trapped-Ion Qubits)

Quantinuum (the company formed by Honeywell Quantum and Cambridge Quantum’s merger) is pursuing fault tolerance using trapped-ion technology, which currently offers some of the highest-fidelity qubits albeit at slower gate speeds. In late 2024, Quantinuum unveiled an “accelerated roadmap” aiming for fully fault-tolerant, universal quantum computing by ~2030. Their plan involves scaling from the current generation H2 ion trap (which has 32–64 physical qubits) to a next-gen system called “Apollo” that will incorporate thousands of physical qubits and deliver hundreds of logical qubits by the end of the decade. Quantinuum has already demonstrated small logical qubit arrays – as of 2024 they reported 12 logical qubits on their H2 system via a partnership with Microsoft, up from 4 logical qubits earlier. Their roadmap explicitly states that reaching “hundreds of logical qubits” will unlock broad quantum advantage in areas like finance, chemistry, and biology. In comparison to IBM, Quantinuum’s timeline is roughly the same (2030 for full fault tolerance) and they are steadily improving their qubit counts. However, scaling ion traps to thousands of qubits is a different challenge (it may require modular optical connections between ion trap modules – a strategy IonQ and others also plan). Quantinuum’s CEO has claimed they have “the industry’s most credible roadmap” to fault tolerance, and indeed their achievements in logical qubit fidelity are impressive. Still, IBM’s 200 logical qubits on superconductors by 2029 would likely outscale anything Quantinuum has publicly projected prior to 2030. IBM also touts the advantage of having a full stack in-house (fabrication, cryo, control electronics), whereas Quantinuum relies on extremely specialized ion trap hardware (leveraging Honeywell’s expertise). The competition here is less direct since the technologies are different, but from a cybersecurity perspective, either platform reaching a few hundred logical qubits could threaten encryption. IBM’s aggressive scaling might make it the first to cross that threshold, although Quantinuum will not be far behind if they execute their roadmap.

(Others: It’s worth noting that many other players are in the race too – e.g., Intel is researching silicon spin qubits with a focus on manufacturability, IonQ is pursuing modular trapped-ion systems, Rigetti and Google are working on superconducting multi-chip integrations, and Microsoft is exploring topological qubits. The three above, however, are among the leaders with explicit fault-tolerance timelines.)

From Scientific Speculation to Engineering Execution

A striking aspect of IBM’s announcement (echoed by others in the field) is the shift in mindset – quantum computing is no longer viewed as a distant science project but as an engineering project with deliverables and deadlines. “The science has been de-risked… now it comes down to engineering and building it,” said IBM’s Jay Gambetta, emphasizing that the remaining challenges are about scale-up, integration, and reliability – hallmarks of engineering work. In practical terms, this means we will see fewer research papers about fundamental quantum gate physics and more about systems integration, error-suppressing software, fabrication yields, cryogenic packaging, etc. IBM’s construction of a dedicated Quantum Data Center in Poughkeepsie to house Starling and subsequent systems is a sign of this industrialization. The company is partnering with universities, national labs, and even government agencies (e.g., IBM participates in DARPA’s quantum programs) to build out the supply chain and technology stack needed. We’re essentially witnessing the birth of a new quantum computing industry that treats qubit scaling like the semiconductor industry treats transistor scaling. For cybersecurity and IT leaders, this transition from “if and when” to “who and how soon” should be a strong signal that quantum disruption is getting real. Just as the 1940s–50s saw electronic computers move from lab curiosities to operational machines (often spurred by government and military investment), the late 2020s are poised to be the era when quantum machines move from research labs into working systems that can be used to solve problems – including problems one might not want solved, like cracking cryptography.

Urgent Call to Action – Upgrade Your Cryptography Now

The developments of 2025, led by IBM’s roadmap to a fault-tolerant quantum computer, reinforce a critical message: organizations must accelerate their transition to post-quantum cryptography (PQC). Waiting until “a big quantum computer is here” is not an option – by then it will be too late. Data that is sensitive and long-lived (health records, state secrets, intellectual property, etc.) is already at risk today from the “harvest now, decrypt later” tactics of adversaries, who can intercept and save encrypted data now in hopes of decrypting it when quantum capabilities come online. The early 2030s – barely 5–7 years away – is looking increasingly plausible for when quantum attackers might have the tools to break RSA/ECC. Industry standards bodies (NIST, ETSI) have been sounding this alarm for a few years, but now the timelines are tightening. The CSO of a Fortune 500 company or a government agency should treat IBM’s announcement as the canary in the coal mine: a public demonstration that the quantum countdown is on. Forward-thinking security teams are already beginning to inventory their cryptographic assets, prioritize which systems to upgrade first, and implement PQC algorithms (like those standardized by NIST in 2022–2024) in a phased manner. Gartner recommends having replacement of vulnerable crypto in place by 2030, and full removal by 2035, to stay ahead of the threat. That means large institutions have essentially one tech refresh cycle to get PQC done. The progress IBM and others are making should dispel any remaining complacency – as one analyst put it, this is a “wake-up call for measured urgency, not panic”. The good news: just as the quantum hardware is moving from science to engineering, the countermeasures (PQC) are also moving from theory to deployment. The challenge for cybersecurity leaders is mainly one of implementation and timing – start planning, testing, and rolling out PQC solutions now so that your organization is quantum-safe by the time machines like Starling come online.
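The inventory-and-prioritize step can be sketched as a simple triage. The example below applies a rule of thumb often attributed to Michele Mosca: a system is exposed if the years its data must stay secret plus the years needed to migrate it exceed the years until a quantum attacker is plausible. Everything in it is illustrative: the asset names, the shelf-life and migration estimates, and the 6-year horizon (the midpoint of the 5–7 year range discussed above) are assumptions, not measurements.

```python
from dataclasses import dataclass

# Public-key algorithms breakable by Shor's algorithm on a large FTQC.
# Anything else (e.g. the NIST PQC standard ML-DSA) is treated as not exposed.
QUANTUM_VULNERABLE = {"RSA", "ECDSA", "ECDH", "DH", "DSA"}

@dataclass
class Asset:
    name: str                     # hypothetical system name
    algorithm: str                # public-key algorithm protecting it
    data_shelf_life_years: float  # how long the data must stay confidential
    migration_years: float        # estimated time to move it to PQC

def exposure(asset: Asset) -> float:
    """Shelf life plus migration time: the left side of Mosca's inequality."""
    return asset.data_shelf_life_years + asset.migration_years

def triage(assets: list[Asset], years_to_threat: float) -> list[Asset]:
    """Return exposed assets, most urgent (largest exposure) first."""
    exposed = [
        a for a in assets
        if a.algorithm in QUANTUM_VULNERABLE and exposure(a) > years_to_threat
    ]
    return sorted(exposed, key=exposure, reverse=True)

# Illustrative inventory; all values are made up for the example.
inventory = [
    Asset("patient-records-archive", "RSA", 25, 3),
    Asset("internal-vpn", "RSA", 1, 2),
    Asset("code-signing", "ML-DSA", 10, 0),
    Asset("partner-api-tls", "ECDH", 5, 3),
]

YEARS_TO_THREAT = 6  # assumed horizon: midpoint of the 5-7 year estimate

for asset in triage(inventory, YEARS_TO_THREAT):
    print(f"{asset.name}: exposure {exposure(asset):.0f} years "
          f"exceeds the {YEARS_TO_THREAT}-year horizon, migrate first")
```

In this made-up inventory, the long-lived archive and the partner API are flagged, while the short-lived VPN traffic and the system already on ML-DSA are not. A real inventory would also need to cover certificates, key-exchange settings, and embedded devices.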

Conclusion

IBM’s June 2025 roadmap revelation is a watershed moment in quantum computing, marking the beginning of the fault-tolerant era and putting the world on notice that scalable quantum machines are coming this decade. The takeaway is twofold. First, quantum computing is rapidly transitioning from lab research to a competitive engineering race, with IBM currently in a leading position by virtue of a clear plan for 200 logical qubits by 2029 using innovative QEC techniques. Second, this quantum race has direct consequences for cybersecurity: the risk timeline for quantum-breaking of cryptography has accelerated, and the window to prepare is closing. IBM’s claim of “cracking the code” to quantum error correction is not just a scientific achievement – it’s a starting gun. The next few years will likely see extraordinary leaps (and no doubt some setbacks) as companies strive to build these fault-tolerant quantum computers. By 2029, if IBM’s confidence is well-placed, we will see a machine that can reliably run quantum algorithms of unprecedented scale – algorithms that could revolutionize chemistry and medicine, optimize complex systems, and yes, soon after, render current encryption algorithms obsolete. Every organization reliant on cybersecurity must act with that future in mind.
