New Study Shows Post‑Quantum Cryptography (PQC) Doesn’t Have to Sacrifice Performance

20 Mar 2025 – An interesting new paper was published by Elif Dicle Demir and colleagues, “Performance Analysis and Industry Deployment of Post‑Quantum Cryptography Algorithms.”

Last year I wrote about the infrastructure challenges of “dropping in” post‑quantum cryptography (PQC) and cautioned that the road to quantum‑safe communications is not as simple as swapping out RSA or elliptic‑curve cryptography. Our existing internet protocols were designed around small keys and lightweight signatures. PQC algorithms, particularly lattice‑based schemes like Kyber and Dilithium, have larger key sizes and messages, which can stress network protocols and legacy devices. I also warned that while some lattice schemes are fast, side‑channel hardening and lack of mature libraries could increase CPU cost. Those concerns remain valid.

However, the conversation should not be dominated by pessimism. The quantum threat is coming, and we need an honest assessment of PQC performance in real workloads. Demir et al.’s paper provides exactly that. The authors benchmark the NIST‑selected schemes CRYSTALS‑Kyber (a key encapsulation mechanism) and CRYSTALS‑Dilithium (a digital signature scheme) and compare them against traditional RSA and ECDSA. They also explore real‑world deployment in telecommunications networks. The findings are encouraging: post‑quantum security does not necessarily come at the cost of performance.

Benchmarking Kyber and Dilithium

To make fair comparisons across algorithms, Demir et al. establish a controlled benchmarking environment. Each cryptographic operation – key generation, encapsulation (or signing), and decapsulation (or verification) – is executed 1,000 times on a 3.3 GHz CPU with both the reference implementation and an AVX2‑optimized version. AVX2 provides vectorized polynomial arithmetic; the authors compute the speedup factor by dividing reference execution time by AVX2 time. Classical schemes (ECDH/ECDSA/RSA) are benchmarked using OpenSSL under the same conditions. This approach avoids apples‑to‑oranges comparisons and isolates CPU execution time from network delays or I/O.
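
To make the methodology concrete, here is a minimal sketch of such a harness. It is my own illustration, not the authors' code: `reference_op` and `optimized_op` are hypothetical stand-ins for a reference and a vectorized implementation, and I am assuming the reported figure is the mean over all runs.

```python
import time
from statistics import mean


def time_operation(op, runs=1000):
    """Return the mean wall-clock time of op() in milliseconds over `runs` calls."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        op()
        samples.append((time.perf_counter() - start) * 1000.0)
    return mean(samples)


def speedup(reference_ms, optimized_ms):
    """Speedup factor: reference execution time divided by optimized time."""
    return reference_ms / optimized_ms


# Hypothetical stand-ins; a real benchmark would call keygen/encaps/decaps here.
def reference_op():
    return sum(i * i for i in range(2000))


def optimized_op():
    n = 2000
    # Closed form for the same sum of squares 0^2 + ... + (n-1)^2.
    return (n - 1) * n * (2 * n - 1) // 6


if __name__ == "__main__":
    ref_ms = time_operation(reference_op)
    opt_ms = time_operation(optimized_op)
    print(f"reference: {ref_ms:.4f} ms, optimized: {opt_ms:.4f} ms, "
          f"speedup: {speedup(ref_ms, opt_ms):.2f}x")
```

Timing only the call itself, with no network or disk access inside the loop, is what keeps the measurement focused on CPU execution time.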

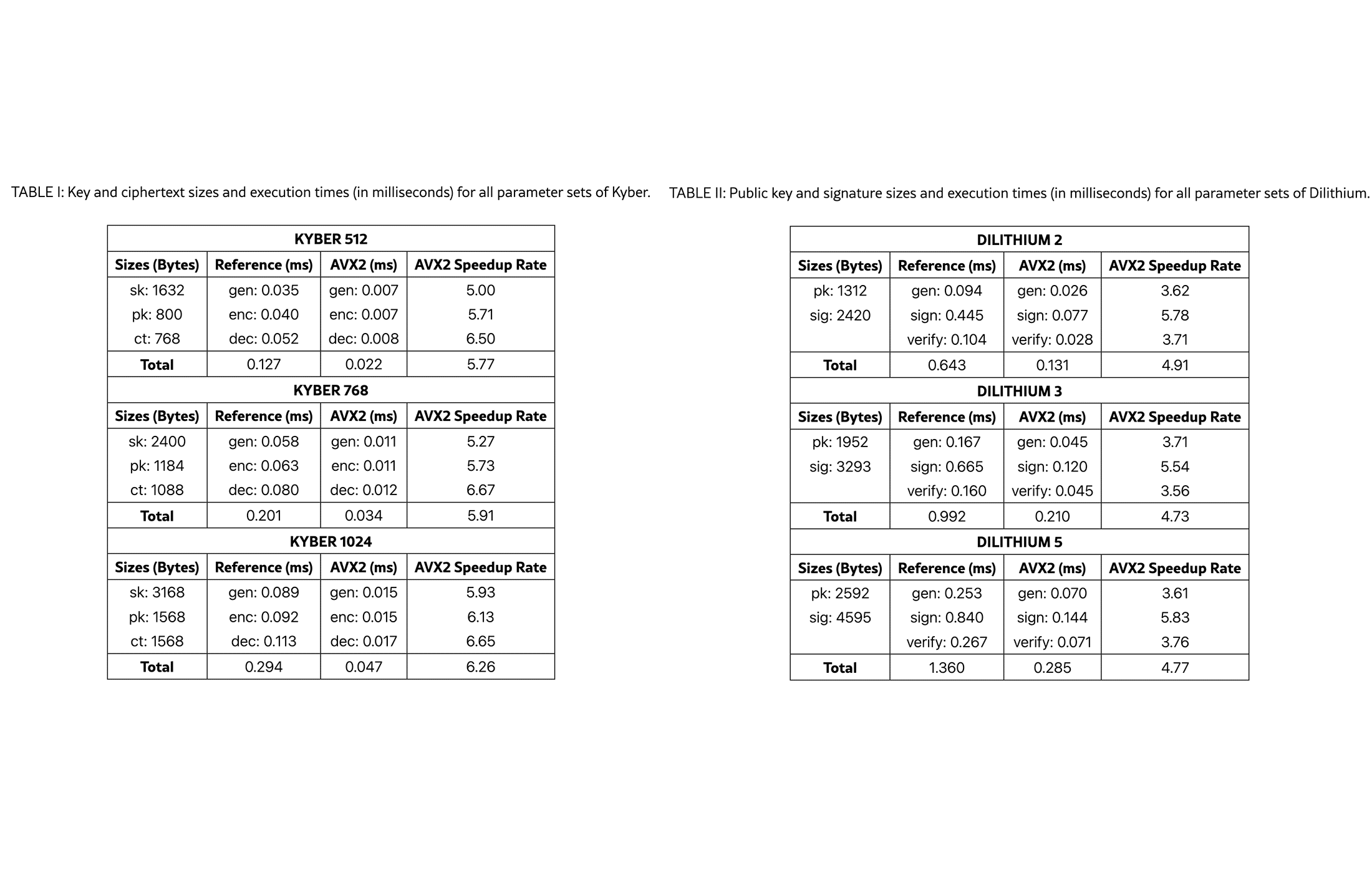

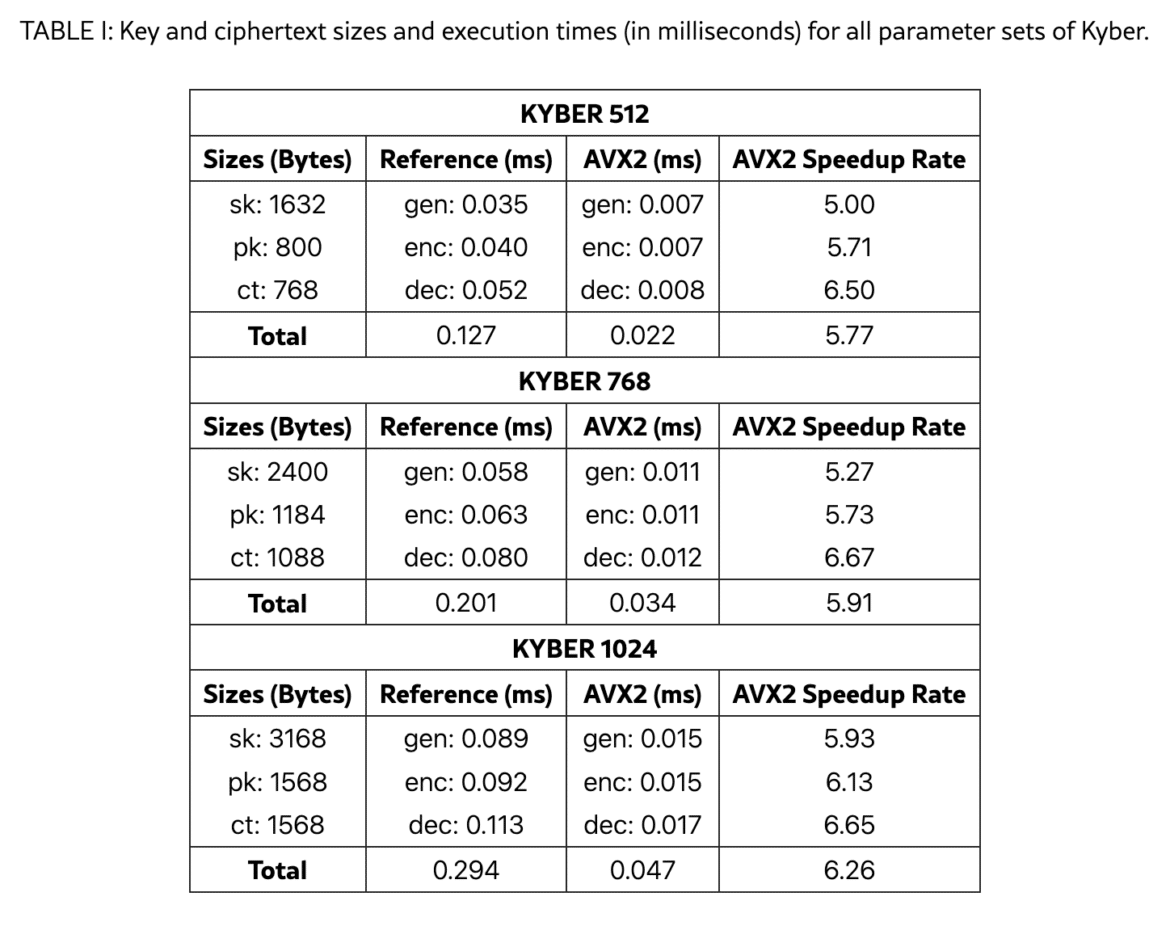

Kyber performance

Table I of the paper reports performance for Kyber at three security levels. Kyber‑512, which offers 128‑bit security, generates a key pair in 0.035 ms, encapsulates in 0.040 ms and decapsulates in 0.052 ms. With AVX2 optimizations these times drop to 0.007–0.008 ms, yielding a 5.00×–6.50× speedup. Even at Kyber‑1024 (256‑bit security), the slowest variant, total execution time is only 0.294 ms using the reference implementation and 0.047 ms with AVX2. The paper notes that decapsulation benefits most from vectorization, with speedups up to 6.65×.

These results show good scalability. As security increases from Kyber‑512 to Kyber‑1024, the total reference runtime grows by roughly 2.3× rather than quadrupling, and the AVX2 version stays just under 0.05 ms. For context, network round‑trip times are typically measured in milliseconds, so these operations add only a small fraction to a handshake’s latency.
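
The arithmetic behind these statements can be checked with a few lines of Python. This is a sketch that uses only the figures quoted above; the variable names are mine. Summing the rounded per-operation Kyber‑512 timings gives 0.127 ms, slightly below the 0.128 ms total quoted later in this article, presumably because of rounding.

```python
# Kyber-512 reference timings in ms, as quoted above from Table I.
kyber512_ref = {"keygen": 0.035, "encaps": 0.040, "decaps": 0.052}

# Kyber-1024 totals in ms, as quoted above.
kyber1024_ref_total = 0.294
kyber1024_avx2_total = 0.047

kyber512_ref_total = sum(kyber512_ref.values())
scaling = kyber1024_ref_total / kyber512_ref_total
kyber1024_speedup = kyber1024_ref_total / kyber1024_avx2_total

print(f"Kyber-512 reference total: {kyber512_ref_total:.3f} ms")      # 0.127 ms
print(f"Kyber-1024 vs Kyber-512 reference runtime: {scaling:.2f}x")   # 2.31x
print(f"Kyber-1024 overall AVX2 speedup: {kyber1024_speedup:.2f}x")   # 6.26x
```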

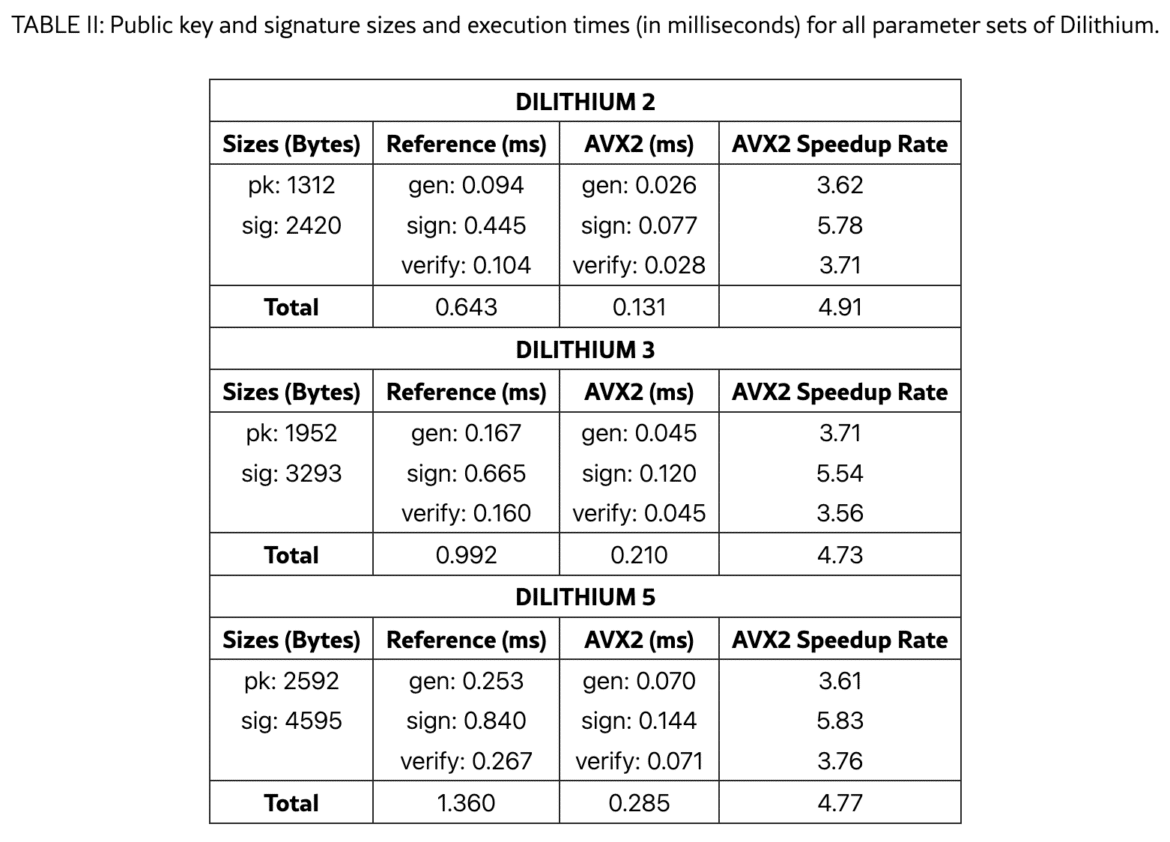

Dilithium performance

Dilithium signatures are heavier than key encapsulation because signing relies on rejection sampling and may need more than one attempt. The reference implementation of Dilithium‑2 (128‑bit security) generates keys in 0.094 ms, signs in 0.445 ms, and verifies in 0.104 ms, for a total of 0.643 ms. The AVX2 implementation reduces these to 0.026 ms, 0.077 ms, and 0.028 ms, respectively, achieving a 4.91× overall speedup. For Dilithium‑5 (256‑bit security) the total reference time is 1.360 ms and the AVX2 time is 0.285 ms. Even at this highest security level, signing operations remain under 0.15 ms with vectorization. The authors emphasize that signature generation, not verification, dominates Dilithium’s runtime, so applications requiring frequent signing (e.g., code signing) should plan accordingly.
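
A short sketch makes the breakdown explicit. It uses only the numbers quoted above (the variable names are my own) and shows how much of the runtime signing accounts for and which operation gains most from AVX2.

```python
# Dilithium-2 timings in ms, as quoted above.
dilithium2_ref = {"keygen": 0.094, "sign": 0.445, "verify": 0.104}
dilithium2_avx2 = {"keygen": 0.026, "sign": 0.077, "verify": 0.028}

ref_total = sum(dilithium2_ref.values())
avx2_total = sum(dilithium2_avx2.values())
overall_speedup = ref_total / avx2_total

# Per-operation speedup: reference time divided by AVX2 time.
per_op_speedup = {
    op: dilithium2_ref[op] / dilithium2_avx2[op] for op in dilithium2_ref
}

# Share of the reference runtime spent on signing.
sign_share = dilithium2_ref["sign"] / ref_total

# Dilithium-5 totals in ms, as quoted above.
dilithium5_speedup = 1.360 / 0.285

print(f"totals: {ref_total:.3f} ms reference, {avx2_total:.3f} ms AVX2")
print(f"overall speedup: {overall_speedup:.2f}x")              # 4.91x
for op, factor in per_op_speedup.items():
    print(f"  {op}: {factor:.2f}x")                            # sign: 5.78x
print(f"signing share of reference runtime: {sign_share:.0%}")  # 69%
print(f"Dilithium-5 overall speedup: {dilithium5_speedup:.2f}x")  # 4.77x
```

Signing takes about two thirds of the reference runtime and also benefits most from vectorization, which is why the optimized build matters most for signing-heavy workloads.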

Why AVX2 matters

A striking finding is how much the right implementation matters. Kyber sees nearly 6× speedups and Dilithium around 5× when using AVX2 instructions. This underscores the importance of using optimized libraries and, where possible, hardware acceleration. In my earlier article I noted that side‑channel countermeasures could double the CPU cost; Demir et al.’s results show that we can reclaim much of that cost through vectorization. As enterprise hardware evolves, many servers and even clients will support AVX2 or AVX‑512, making PQC implementations more efficient.

Post‑Quantum vs. Classical Cryptography

The authors directly compare Kyber and Dilithium against RSA and ECDSA at equivalent security levels. The results overturn the common assumption that PQC is necessarily slower.

- Key exchange: Kyber‑512 achieves 0.128 ms total execution time (including key generation, encapsulation, and decapsulation), whereas RSA‑2048 clocks in at 0.324 ms. Even Kyber‑1024 is three times faster than RSA‑3072, which only offers 128‑bit security. Compared to ECDH (P‑256), Kyber is slower in raw numbers (0.128 ms vs. 0.102 ms), but the gap is small and, importantly, Kyber provides quantum resistance.

- Signatures: Dilithium‑2 completes key generation, signing, and verification in 0.644 ms total, while ECDSA (P‑256) requires 0.801 ms. At higher security levels, the gap widens: Dilithium‑5 completes in 1.361 ms vs. 2.398 ms for ECDSA (P‑521). The paper notes that Dilithium’s deterministic signature eliminates nonce‑related vulnerabilities that have plagued ECDSA implementations.

These comparisons demonstrate that post‑quantum algorithms can be faster than their classical counterparts. This aligns with what I wrote previously: well‑chosen lattice schemes are quite efficient and often comparable to RSA or faster. The common fear that PQC will slow down authentication or key exchange is not borne out when using optimized implementations.
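
To put the comparison in relative terms, the sketch below turns the totals quoted in the two bullets above into ratios. The helper name and labels are mine; a ratio above 1 means the post-quantum scheme is faster, below 1 means it is slower.

```python
def times_faster(classical_ms, pqc_ms):
    """How many times faster the PQC scheme is than the classical one."""
    return classical_ms / pqc_ms


# (classical total in ms, PQC total in ms), as quoted in the bullets above.
comparisons = {
    "Kyber-512 vs RSA-2048": (0.324, 0.128),
    "Kyber-512 vs ECDH P-256": (0.102, 0.128),
    "Dilithium-2 vs ECDSA P-256": (0.801, 0.644),
    "Dilithium-5 vs ECDSA (largest curve)": (2.398, 1.361),
}

ratios = {name: times_faster(c, p) for name, (c, p) in comparisons.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
# Kyber-512 vs RSA-2048: 2.53x
# Kyber-512 vs ECDH P-256: 0.80x
# Dilithium-2 vs ECDSA P-256: 1.24x
# Dilithium-5 vs ECDSA (largest curve): 1.76x
```

The one ratio below 1 is the ECDH case: Kyber‑512 takes about 25% longer there, which is the small gap mentioned above.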

Deployment Challenges in Telecommunications

Demir et al.’s paper goes beyond benchmarking to analyze the challenges of deploying PQC in telecom networks. This section resonates strongly with my previous cautionary notes. Operators must upgrade complex infrastructures while maintaining service continuity. The paper identifies six key challenges:

- Performance and latency impacts: PQC keys and ciphertexts are larger, which can increase handshake size, strain bandwidth, and tax memory‑constrained devices. A single extra kilobyte can increase response time by 1.5 % in a TLS handshake, and radio access networks may struggle with computational overhead. I raised the same concern: a Kyber‑768 key share may not fit in one UDP packet and can fragment the ClientHello, causing some middleboxes to drop connections.

- Interoperability with legacy systems: Not all network elements will upgrade simultaneously. A PQC‑enabled node could be cut off from peers that only understand classical crypto. Hybrid modes (classical + PQC) mitigate this but require new standards and careful validation. In my article I noted similar challenges: TLS 1.3 can negotiate PQC gracefully if both sides support it, but older protocols like TLS 1.2 lack downgrade protections.

- Standardization and regulatory concerns: At the time of writing (early 2025), NIST’s first PQC standards have only recently been finalized, and the telecom industry still awaits guidelines from 3GPP, IETF, ETSI, and national regulators, creating uncertainty for large carriers.

- Cost and resource allocation: Upgrading base stations, routers, SIM cards, and customer devices is expensive. Small operators fear that only large carriers can afford early adoption. However, the paper notes that as vendors integrate PQC into their products, costs will decline.

- Security risks and transition challenges: New algorithms may harbor undiscovered vulnerabilities; side‑channel resistance is critical. I previously highlighted that implementing constant‑time operations and masking can double the computational cost. Demir et al. echo this, warning that early deployments have revealed issues such as middleboxes failing due to large key exchange messages.

- Vendor readiness and supply chain: Telecom relies on a vast ecosystem of hardware and software vendors. Many are waiting for finalized standards before integrating PQC. Operators must ensure that SIM cards, baseband chips, and TLS proxies support new cipher suites.
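
The packet-size concern in the first bullet is easy to estimate on the back of an envelope. The sketch below is a rough illustration under stated assumptions, not a protocol implementation: the public key sizes are the published Kyber parameter sizes, but the 300-byte baseline ClientHello, the 1,500-byte MTU, and the IPv4 + UDP header overhead are my own assumed values, and extension framing bytes are ignored.

```python
# Key share sizes in bytes sent by the client (public keys).
KEY_SHARE_BYTES = {
    "x25519": 32,
    "kyber512": 800,
    "kyber768": 1184,
    "kyber1024": 1568,
}

ASSUMED_BASELINE_CLIENT_HELLO = 300  # assumption: ClientHello without key shares
ASSUMED_MTU = 1500                   # assumption: typical Ethernet MTU
ASSUMED_HEADERS = 20 + 8             # assumption: IPv4 header + UDP header


def client_hello_size(groups, baseline=ASSUMED_BASELINE_CLIENT_HELLO):
    """Estimate ClientHello size for the given list of key share groups."""
    return baseline + sum(KEY_SHARE_BYTES[g] for g in groups)


def fits_in_one_datagram(size, mtu=ASSUMED_MTU, headers=ASSUMED_HEADERS):
    """True if a message of `size` bytes fits in a single unfragmented datagram."""
    return size <= mtu - headers


for groups in (["x25519"], ["x25519", "kyber512"], ["x25519", "kyber768"]):
    size = client_hello_size(groups)
    print(f"{'+'.join(groups)}: {size} bytes, "
          f"single datagram: {fits_in_one_datagram(size)}")
```

Under these assumptions, a classical ClientHello fits easily, while a hybrid X25519 + Kyber‑768 ClientHello comes to about 1,516 bytes and no longer fits in one 1,472-byte UDP payload. The exact threshold depends on the real baseline size, so measure your own handshakes rather than relying on these numbers.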

Despite these hurdles, the paper highlights successful case studies in telecom:

- SoftBank conducted a hybrid PQC trial on a 5G network using Kyber for key exchange and found minimal performance impact.

- SK Telecom tested Kyber‑protected USIM cards and observed that subscriber authentication could be secured without noticeable delay.

- North American carriers are preparing pilot deployments of PQC‑enabled authentication schemes.

These trials suggest that with proper engineering and hybrid modes, PQC can be integrated into existing telecom infrastructure. They also validate my earlier recommendation to test PQC in a lab or staging environment, measure performance under realistic loads, and look for failures or anomalies.

What the New Findings Mean for Enterprises

From my perspective, Demir et al.’s results should bolster confidence among enterprise leaders who fear that PQC is too slow or burdensome. Here are the key takeaways:

- PQC algorithms can be faster than classical crypto. The benchmarking shows that Kyber and Dilithium outperform RSA and ECDSA at equivalent security levels. Many of us assumed that “bigger keys” automatically meant slower operations, but lattice‑based schemes leverage polynomial arithmetic and number‑theoretic transforms to achieve high throughput. This does not negate my earlier warning about larger network packets or memory usage; both statements can be true. You can have fast CPU execution and bigger messages.

- Implementation matters. A naive reference implementation may be acceptable for prototypes, but production systems should use AVX2‑optimized libraries or hardware accelerators. Demir et al. show speedups of up to about 6.6×. In my article I mentioned that side‑channel countermeasures could double the cost; careful coding and vectorization can offset much of that overhead.

- Hybrid and phased deployment is the way forward. Both my previous article and the new paper emphasize starting with hybrid TLS (classical + PQC) to maintain compatibility and gradually expanding PQC usage. Operators should inventory where public‑key crypto is used, prioritize critical assets, and plan for phased rollouts. This ensures backward compatibility and allows time to tune performance.

- Costs will decline as standards mature. While the capital expense of upgrading devices is real, the paper notes that many transitions can be done via software updates rather than hardware replacements. Vendors are already integrating PQC into their products, and standardization will accelerate this trend.

- Act now—waiting is riskier. The paper warns that waiting until large‑scale quantum computers arrive is too late. Attackers are already harvesting encrypted data with the hope of decrypting it later when quantum computers mature. Conducting cryptographic inventories and migrating critical systems early reduces this “harvest now, decrypt later” risk.
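
To illustrate what "hybrid" means in practice, here is a minimal sketch of one common idea: derive the session key from both a classical and a post-quantum shared secret, so the result stays safe as long as either one holds. This is my simplified illustration, not the paper's method or the exact TLS key schedule. The two secrets are random placeholders standing in for the outputs of a real ECDH exchange and a real Kyber encapsulation, and `combine_hybrid_secrets` is a hypothetical helper name. The key derivation is HKDF with SHA-256, built from the Python standard library.

```python
import hashlib
import hmac
import os


def hkdf_sha256(ikm, salt=b"", info=b"", length=32):
    """HKDF (extract-then-expand) with SHA-256."""
    if not salt:
        salt = b"\x00" * hashlib.sha256().digest_size
    # Extract: condense the input keying material into a pseudorandom key.
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    # Expand: generate as many output blocks as needed.
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]),
                         hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_hybrid_secrets(classical_secret, pqc_secret, info=b"hybrid-demo"):
    """Derive one session key from both secrets (concatenate, then KDF).

    Assumes both secrets have fixed, known lengths so the concatenation
    is unambiguous.
    """
    return hkdf_sha256(classical_secret + pqc_secret, info=info)


if __name__ == "__main__":
    # Placeholders: a real system would obtain these from ECDH and a KEM.
    classical_secret = os.urandom(32)
    pqc_secret = os.urandom(32)
    session_key = combine_hybrid_secrets(classical_secret, pqc_secret)
    print(f"derived {len(session_key)}-byte session key: {session_key.hex()}")
```

Because both inputs feed the derivation, changing either secret changes the session key, which is the property that lets hybrid deployments keep classical assurance while adding quantum resistance.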

Conclusion

The new performance analysis of Kyber and Dilithium is a welcome addition to the PQC literature. It confirms that post‑quantum security and good performance are not mutually exclusive, especially when using optimized implementations. In fact, Kyber and Dilithium often outperform classical cryptography at comparable security levels. This challenges the narrative that PQC will drastically slow down our networks.

At the same time, the paper reinforces my earlier message: migration is not plug‑and‑play. Larger keys and messages can disrupt protocols and devices, and side‑channel security remains paramount. Enterprises must plan for interoperability, increased memory usage, and hardware support. Hybrid deployments, staged rollouts, and thorough testing are essential.

Overall, I am optimistic. The combination of strong security guarantees and respectable performance makes Kyber and Dilithium suitable for high‑throughput environments like telecom networks. Early trials by SoftBank and SK Telecom show minimal performance impact, and regulatory bodies are finalizing standards. With proper planning, enterprises can deploy PQC without crippling their infrastructure. The key is to start now—perform a cryptographic inventory, test PQC implementations, and collaborate with vendors. By 2030, we could see PQC become as ubiquitous as TLS is today. The sooner we embrace this future, the better prepared we will be for the quantum era.
