IonQ’s 99.99% Breakthrough and What It Means for Q Day

News Summary

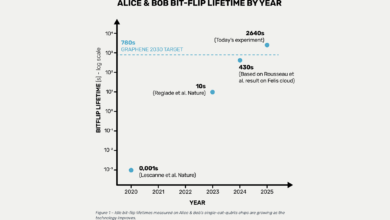

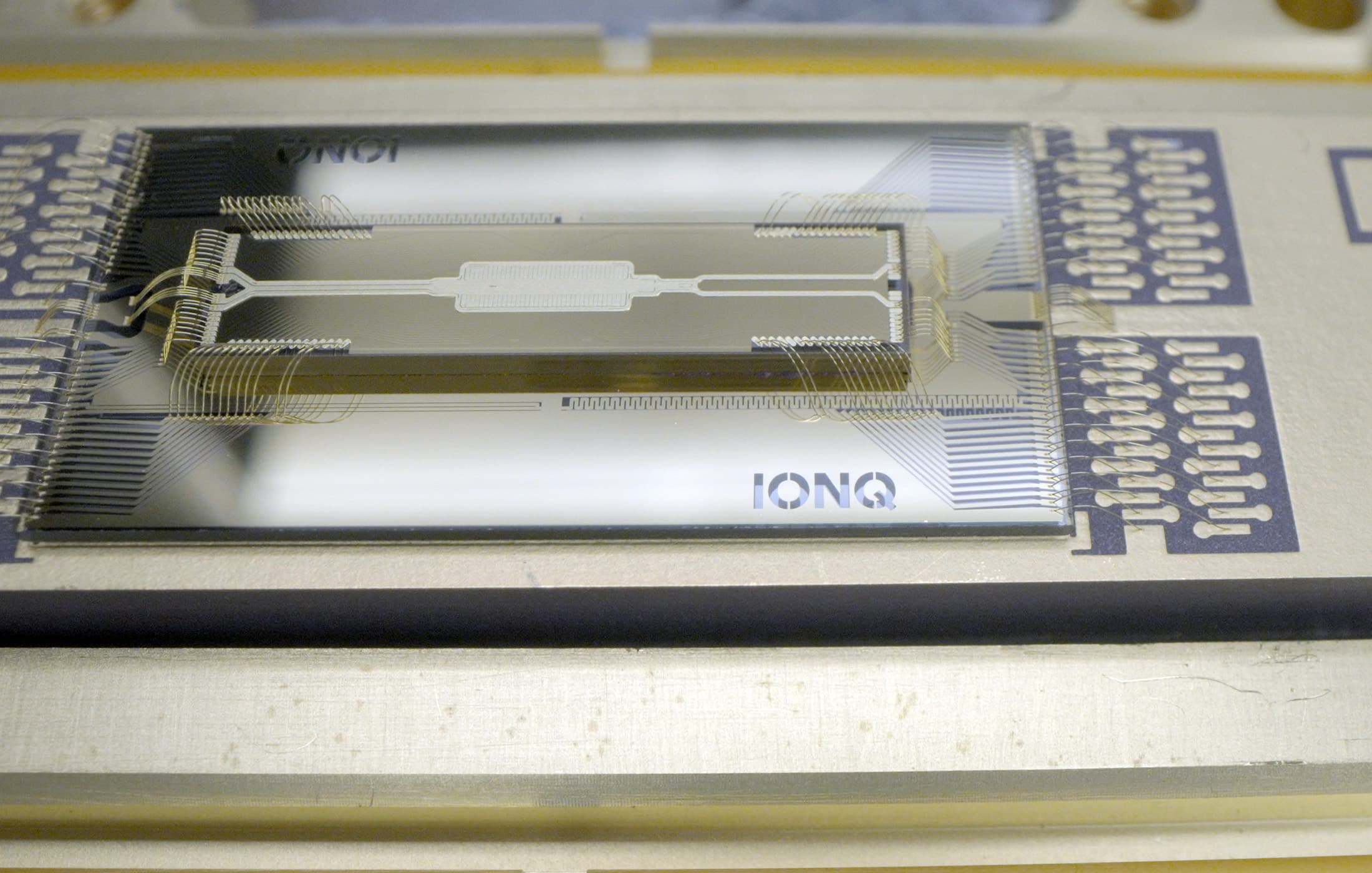

21 Oct 2025 – IonQ announced a new world record in quantum gate performance: >99.99% two‑qubit fidelity demonstrated on trapped‑ion hardware without ground‑state cooling. IonQ says the result comes from a new “smooth gate” technique developed by the Oxford Ionics team (now part of IonQ) and claims it will underpin 256‑qubit prototype systems in 2026 and a long‑term roadmap to millions of qubits by 2030. Read the official press release, the technical blog, and the arXiv preprint for the details.

What Was Achieved – In Plain English

- Record accuracy without extra cooling. IonQ’s team ran the basic two‑qubit operation that creates entanglement and got it right more than 99.99% of the time – and they did it without the slow, last‑mile “ground‑state cooling” step that trapped‑ion systems usually need. Think of it as getting top‑tier performance without warming up the engine for minutes beforehand.

- It stayed accurate even when the system was “hotter.” They deliberately heated the ions’ motion and still kept errors at or below 0.0005 per gate. (In ion‑speak: the average phonon number on the gate mode was pushed up to n̄ ≈ 9.4 and the gate still behaved.)

- A new way to run the gate made this possible. Instead of finely timing strong pushes on the ions (the usual approach), they slowly slid the gate’s frequency “off‑center” and back during the operation. This gentler, guided route avoids the kind of motion‑related errors that get worse as the ions warm up – so the system remains accurate above the Doppler cooling limit (i.e., at a normal, easier‑to‑reach “cool” temperature rather than an ultra‑cold ground state).

- Why it matters. In big, practical trapped‑ion machines, cooling and shuttling ions around often eat most of the runtime. If you can skip the slowest cooling step and still run with four‑nines accuracy, you can execute much longer programs faster and with less engineering complexity. That’s a real systems‑level win, not just a nice number in a lab demo.

- IonQ’s storyline. IonQ says this technique underpins 256‑qubit systems planned for 2026 and feeds into its electronics‑first (EQC) roadmap toward much larger machines later in the decade.

What Was Achieved – Technical Details

IonQ reports two‑qubit gate errors below 1×10⁻⁴ (i.e., fidelity above 99.99%), validated using subspace‑leakage randomized benchmarking. Crucially, the team shows 0.000084 error per gate for sequences up to 432 two‑qubit gates at temperatures above the Doppler limit, and maintains ≤5×10⁻⁴ error even when the motional mode is deliberately heated (average phonon occupation up to n̄ ≈ 9.4). That’s the centerpiece of the claim: record‑level fidelity without the expensive, slow “last‑mile” ground‑state cooling most trapped‑ion systems rely on.

Technically, this comes from a new adiabatic entangling method the authors call a “smooth gate.” Instead of ramping the gate amplitude and counting on perfect timing (the usual “catch the loop” approach), they ramp the detuning δ(t) while keeping the gate Rabi frequency Ωg essentially constant. Adiabatically steering the phase‑space trajectory suppresses residual spin‑motion entanglement – the temperature‑sensitive piece that usually forces ground‑state cooling. The paper lays out the theory (including the adiabaticity condition and filter‑function analysis) and shows the five‑step gate sequence and detuning‑ramp design used in the experiment.

On hardware, the team implemented a bichromatic Mølmer-Sørensen drive on two ⁴⁰Ca⁺ ions, with a total gate time tg ≈ 226 μs. Calibration reduces to finding a single parameter (the minimum detuning δmin ≈ -2π×21.7 kHz that yields θg = π/2) rather than the usual two‑parameter sweep. The same gate remains robust under static detuning offsets (±2 kHz), with markedly lower leakage than Walsh‑modulated diabatic gates at similar speeds.

IonQ positions this as an inflection point for scaling: prototypes in R&D today will, they say, form the basis of 256‑qubit systems shown in 2026, and the company continues to message an ambition of ~2 million qubits by 2030 – via Electronic Qubit Control (EQC) and mass‑manufacturable chips with electronic (not laser) control stacks.

My Take: How Big a Deal Is This?

(Disclaimer – Opinion & Analysis: The following section reflects my personal interpretation, analysis, and forward-looking assessment based on the published paper, IonQ’s official announcements, and broader industry context. While I treat IonQ’s public statements as credible – especially given their track record of delivering on previously communicated milestones – this is not independent verification. The views expressed here are my own and should be read as informed commentary, not as objective reporting or investment advice.)

1) Four‑nines is not just a trophy – it changes error‑correction math.

Moving from ~99.9% to ~99.99% two‑qubit gates isn’t a “10× better” narrative; it can be a thousand‑fold reduction in logical error rates once you stack many rounds of error correction.

For a more in-depth explanation of why this matters, see “Fidelity in Quantum Computing.” In short, the chance of an error-free run shrinks exponentially with circuit depth. A simple way to understand the difference is to ask: “what’s the chance a circuit runs with zero errors?” If each gate succeeds with probability p, then an N‑gate circuit succeeds with probability pᴺ (assuming independent errors). At 99% per gate (p=0.99), a 100‑gate run is clean only ~36.6% of the time (0.99¹⁰⁰≈0.366). Bump that to 99.9% and it’s ~90.5% (0.999¹⁰⁰≈0.905). Now look at IonQ’s result: at 99.99% per gate, 100‑gate circuits are clean ~99.0% of the time (0.9999¹⁰⁰≈0.990) – and even 1,000‑gate circuits still succeed ~90.5% of the time (0.9999¹⁰⁰⁰≈0.905). By contrast, 99% fidelity over 1,000 gates yields only ~0.004% error‑free runs (0.99¹⁰⁰⁰≈4.3×10⁻⁵), roughly 1 in 23,000.
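The arithmetic above is easy to reproduce. The following is a minimal sketch under the same independent-error assumption; the function name is only illustrative.

```python
def clean_run_probability(gate_fidelity: float, num_gates: int) -> float:
    """Probability that every gate in the circuit succeeds.

    Assumes independent errors, so the per-gate success
    probabilities simply multiply: p ** N.
    """
    return gate_fidelity ** num_gates


if __name__ == "__main__":
    # Same fidelities and depths as quoted in the text.
    for fidelity in (0.99, 0.999, 0.9999):
        for depth in (100, 1000):
            p_clean = clean_run_probability(fidelity, depth)
            print(f"fidelity={fidelity:.4f}  depth={depth:>5}  "
                  f"clean-run probability={p_clean:.3g}")
```

Changing the depth list to longer circuits shows how quickly even four-nines runs out of headroom without error correction.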

That’s why each extra “nine” is transformational: errors compound exponentially with depth, so four‑nines moves you from toy‑scale circuits toward thousands of gates before error correction meaningfully kicks in. (Reality check: correlated errors can change the exact numbers, but the exponential scaling, and the advantage of four‑nines, still holds.)

That’s exactly how IonQ frames it, and it’s directionally consistent with surface‑code scaling intuition (though the precise gain depends on code choice, cycle time, and correlated noise).
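To see where a "thousand-fold" figure can come from, here is a rough sketch using a common textbook rule of thumb for surface codes, in which the logical error per round scales roughly as A·(p/p_th)^((d+1)/2) for code distance d. The threshold (1%) and prefactor (0.1) below are illustrative assumptions of mine, not values from IonQ's paper or press release, and real devices with correlated noise will deviate from this.

```python
def logical_error_per_round(p_phys: float, distance: int,
                            p_threshold: float = 1e-2,
                            prefactor: float = 0.1) -> float:
    """Rule-of-thumb surface-code scaling: A * (p / p_th) ** ((d + 1) / 2).

    p_threshold and prefactor are illustrative assumptions,
    not measured or published values.
    """
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)


def improvement_factor(p_old: float, p_new: float, distance: int) -> float:
    """Factor by which logical error drops when physical error goes p_old -> p_new."""
    return (logical_error_per_round(p_old, distance)
            / logical_error_per_round(p_new, distance))


if __name__ == "__main__":
    for d in (3, 5, 7):
        factor = improvement_factor(1e-3, 1e-4, d)
        print(f"distance {d}: logical error improves by ~{factor:.0f}x")
```

Under these assumptions a 10× better physical gate gives a 100× gain at distance 3 and a 1,000× gain at distance 5, which is why the benefit grows with the amount of error correction stacked on top.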

2) Above‑Doppler operation is a systems milestone.

If you can run high‑fidelity two‑qubit gates without the slow, second‑stage cooling, you take a sledgehammer to a well‑known runtime bottleneck in QCCD trapped‑ion machines. The paper cites results showing that transport and cooling can dominate 98-99% of circuit time – so eliminating ground‑state cooling opens the door to order‑of‑magnitude time‑to‑solution improvements, even if the primitive gate itself is “only” a few hundred microseconds.
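The "order-of-magnitude" intuition can be sanity-checked with an Amdahl's-law style calculation. This is only a sketch: the 98-99% figure covers transport and cooling together, and how much of that overhead disappears without ground-state cooling is not stated here, so the reduction factor is a hypothetical input.

```python
def end_to_end_speedup(overhead_fraction: float, overhead_reduction: float) -> float:
    """Amdahl-style speedup when only the overhead share of the runtime shrinks.

    overhead_fraction: share of total runtime spent on transport + cooling (0..1).
    overhead_reduction: hypothetical factor by which that overhead shrinks (>= 1).
    """
    if not 0.0 <= overhead_fraction <= 1.0:
        raise ValueError("overhead_fraction must be between 0 and 1")
    if overhead_reduction < 1.0:
        raise ValueError("overhead_reduction must be at least 1")
    # Gate time is untouched; only the overhead part is divided down.
    remaining = (1.0 - overhead_fraction) + overhead_fraction / overhead_reduction
    return 1.0 / remaining


if __name__ == "__main__":
    for fraction in (0.98, 0.99):
        for reduction in (5, 10, 20):
            speedup = end_to_end_speedup(fraction, reduction)
            print(f"overhead={fraction:.0%}  reduced {reduction}x  "
                  f"-> end-to-end speedup ~{speedup:.1f}x")
```

The point of the exercise: when overhead dominates this heavily, the end-to-end speedup tracks the overhead reduction almost one-for-one, regardless of the gate's own duration.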

3) The technique generalizes.

Because the “smooth gate” suppresses spin‑motion entanglement by detuning ramps (not delicate amplitude choreography), calibration simplifies and robustness to motional noise improves. The filter‑function analysis and detuning‑offset experiment both back that up. That’s exactly the sort of knob you want as you go from a two‑ion demo to hundreds or thousands of ions.

4) Caveats (that don’t diminish the advance):

- Benchmark scope. The headline number comes from subspace‑focused randomized benchmarking on a two‑ion device. It’s the right tool here, but the usual scaling questions apply: cross‑talk, spectator modes, mode crowding, calibration drift across many zones, and correlated errors in large arrays.

- Clock speed. The reported entangling gate is ~226 μs – typical for ions, slower than superconducting/neutral‑atom two‑qubit gates. IonQ’s argument is that removing cooling overhead dominates the end‑to‑end speedup; I think that’s plausible in QCCD, but surface‑code cycle time still matters for fault‑tolerant workloads.

What it means for IonQ’s roadmap

Earlier this year I wrote about IonQ’s roadmap and noted the company’s unusually aggressive posture on commercially relevant systems and CRQC timelines (as early as 2028 if everything breaks right). This result fits that story: higher native fidelity trims the number of physical qubits per logical qubit and lowers the depth required to hit a target logical error rate. IonQ also reiterates 256‑qubit demonstrations in 2026 and the long‑term “millions by 2030” target on EQC chips. Whether you accept those dates or not, the technical through‑line (electronics‑first control + above‑Doppler operation) is becoming coherent.

From a systems‑engineering angle, the smooth‑gate approach pairs nicely with global‑drive architectures where you can shim individual zones with local DC electrodes to fine‑tune mode frequencies, tweak entangling angles, or even “turn off” interactions locally. That’s one way to keep control complexity from exploding at scale.

…and for Q‑Day?

Q‑Day (or Y2Q) is the moment a cryptographically relevant quantum computer (CRQC) can run Shor’s algorithm to break today’s public‑key crypto. In my own framing, it’s not just a qubit count; it’s enough logical qubits, at low enough logical error, at fast enough cycle times to complete the job within practical time limits.

Two developments are converging:

Algorithmic/resource estimates are tightening. In May 2025, Craig Gidney updated the canonical 2019 estimate (20 million qubits for 8‑hour factoring) and argued that <1 million noisy physical qubits (surface‑code assumptions: ~10⁻³ gate errors, 1 μs cycles) could factor RSA‑2048 in under a week by combining algorithmic and error‑correction tricks. That puts CRQC in the sub‑million‑qubit conversation – still enormous, but no longer sci‑fi.

Hardware fidelity is improving. IonQ’s >99.99% two‑qubit gates are 10× cleaner than the 10⁻³ error rate assumed in many resource studies. In principle, that reduces overhead per logical qubit and relaxes factory budgets – though the trade changes with code choice and noise correlations, and ions’ cycle times are typically slower than the 1 μs assumption used in many estimates. The above‑Doppler result could partially compensate by slashing idle/runtime overheads in a QCCD machine.

Bottom line: This result pulls Q‑Day a bit closer on the axis that has historically mattered most – gate quality – and it strengthens IonQ’s claim that its EQC approach can be scaled without hitting a cooling wall. But Q‑Day remains a systems integration problem: millions (or at least hundreds of thousands) of high‑fidelity qubits, sustained at practical cycle times, with mature error correction and control software. IonQ’s milestone is necessary, not sufficient. And still a very big deal.

Questions I’m asking next

CRQC pathfinding: Given sub‑million‑qubit factoring estimates, what combination of fidelity, cycle time, and magic‑state factory throughput does IonQ target for a credible CRQC – especially in an electronics‑first architecture?

Surface‑code cycle time vs. cooling elimination: With ~226 μs gates today, what is IonQ’s near‑term target for code cycle time on EQC hardware, and how much does “no ground‑state cooling” shorten real‑world runtimes when you move beyond two ions?

Scaling the smooth gate: How does the detuning‑ramp scheme behave in a crowded mode spectrum across dozens of zones? Do we still get the same leakage suppression and easy calibration, or do cross‑mode interactions force more complex schedules at scale?

Impact on Q‑Day and What to Do Next

Feeding New Parameters to the Q-Day Estimator

I believe that IonQ’s new record meaningfully shifts timelines for Q‑Day – the projected moment a cryptography-breaking quantum computer (CRQC) comes online. By achieving four‑nines fidelity without the usual slow ion cooling step, IonQ has effectively pulled forward the horizon on which large-scale, error-corrected quantum computers become viable.

In plain terms, a credible vendor just crossed a pivotal quality threshold in a way that also speeds up quantum operations – a double win that brings the threat of code-breaking quantum machines closer than previously assumed.

Testing Q‑Day with IonQ’s Advance: To gauge how this breakthrough affects Q‑Day, we can plug some updated assumptions into my simple “Q‑Day estimator.” This tool combines four factors – logical qubit count, error budget (operations depth), operation speed, and yearly improvement – to predict when a quantum computer could factor an RSA‑2048 key within a week (a proxy for breaking present-day cryptography). Here’s a realistic mid-case reflecting IonQ’s progress, and why each value makes sense:

- Logical Qubits (LQC = ~256): IonQ’s roadmap now openly targets a 256-qubit prototype in 2026, on a path to “millions of qubits by 2030” via its Electronic Qubit Control (EQC) tech. While today’s logical qubit counts are in the single digits, a few hundred high-fidelity qubits in the next few years is plausible. Using 256 as the effective logical qubit capacity captures this momentum without assuming miracles.

- Logical Operations Budget (LOB ≈ 10¹¹): Higher fidelity directly translates to deeper circuits before they fail. IonQ’s experiment showed error rates as low as ~8×10⁻⁵ per two-qubit gate (on 432-gate sequences) and stayed below 5×10⁻⁴ even with extra “heat” in the ions – meaning very long sequences can run reliably. That pushes the feasible operation count closer to the 10¹¹ range (on the order of a hundred billion gate operations) needed to factor RSA-2048 in a week. In other words, four-nines fidelity shrinks the error-correction overhead and moves the target depth from 10¹⁰ toward the 10¹¹-10¹² operations range. So let’s enter “11” (the exponent) into the tool to keep it realistic.

- Quantum Operations Throughput (QOT ≈ 10⁶ ops/sec): Eliminating the slowest cooling cycles removes a huge runtime bottleneck in trapped-ion machines. IonQ’s native two-qubit gate takes ~226 µs (a bit slower than some other platforms), but because the system no longer pauses for ground-state cooling, the overall processing speed can approach a million operations per second with enough parallelism. We keep 10⁶ ops/sec as a baseline (which corresponds to completing an RSA-2048 factoring in ~1 week in prior studies) – a fair mid-range assuming IonQ and others continue to improve net clock speed. If one were extremely IonQ-specific, a slightly lower number (e.g. 10⁵) could be used, but 10⁶ is reasonable for a cross-platform outlook.

- Annual Improvement (×2.3 per year): IonQ’s public targets imply a very steep growth curve (from 256 qubits next year to millions by 2030). That would be well over 3× progress per year in some metrics. To be a bit conservative, we assume an overall 2.3× yearly boost in composite capability (more qubits, better fidelity, faster gates each year). This still far outpaces historical “Moore’s Law” and accommodates IonQ’s claims while leaving cushion for engineering challenges, though it would fit “Neven’s Law.” Faster growth (say 2.5-3×) would pull Q‑Day even closer; slower (∼2×) would push it out a bit.

What does this mean? Plugging these inputs into the Q-Day estimator yields a composite score of about 0.026 – meaning near-term capability would be roughly 2.6% of what’s needed to break RSA-2048 in a week. At 2.3× annual growth, the score crosses the “1.0” threshold around 2030.

This aligns with other analyses pointing to the early 2030s for Q-Day if progress continues. In practical terms, IonQ’s leap doesn’t flip an immediate “break encryption” switch, but it does shorten the timeline we’re working with. To illustrate the stakes, consider two bookend scenarios using the same estimator (baseline assumptions unchanged):

- Conservative Case (slower progress): LQC 300, LOB 10¹¹, QOT 10⁵, growth 2.0×. The starting score here is lower (about 0.003), crossing 1.0 in ~8-9 years – pushing Q-Day toward 2033-2034. This assumes IonQ’s breakthrough is real but scale-up and speed lag behind plan. It’s essentially a “high friction” scenario: we get four-nines fidelity devices, but clock speeds and qubit counts improve more gradually.

- Aggressive Case (IonQ hits its goals): LQC 1000, LOB 10¹¹, QOT 10⁶, growth 2.5×. This starts around 0.1 (10% of the goal) and reaches 1.0 in just ~2.5-3 years – potentially 2027-2028. Here we assume IonQ and others sprint ahead: on the order of 1000 logical qubits and a million-op/second throughput by the late 2020s. Not coincidentally, this scenario mirrors IonQ’s own optimism (a thousand-plus logical qubits by ~2028 and significant speed-ups), and it would put a CRQC within the decade if realized.
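The crossing times quoted for the three scenarios follow from simple compound growth: if the score multiplies by g each year, it reaches 1.0 after ln(1/score) / ln(g) years. The sketch below takes the starting scores as given in the text; it does not reproduce how the estimator derives those scores from LQC, LOB, and QOT.

```python
import math

# (starting composite score, annual growth factor), as quoted in the text.
SCENARIOS = {
    "conservative": (0.003, 2.0),
    "mid-case": (0.026, 2.3),
    "aggressive": (0.1, 2.5),
}


def years_to_threshold(score_now: float, annual_growth: float,
                       threshold: float = 1.0) -> float:
    """Years until score_now * annual_growth ** t reaches the threshold."""
    if score_now <= 0:
        raise ValueError("score_now must be positive")
    if annual_growth <= 1:
        raise ValueError("annual_growth must exceed 1 for the score to grow")
    if score_now >= threshold:
        return 0.0
    return math.log(threshold / score_now) / math.log(annual_growth)


if __name__ == "__main__":
    for name, (score, growth) in SCENARIOS.items():
        years = years_to_threshold(score, growth)
        print(f"{name:>12}: score {score} at {growth}x/year -> ~{years:.1f} years")
```

This gives roughly 8.4, 4.4, and 2.5 years respectively; counted from late 2025, that matches the date ranges quoted for each scenario.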

Most likely, reality will land between these extremes, but the takeaway is clear: IonQ’s four-nines feat nudges the quantum threat timeline forward, or at least solidifies the more optimistic industry predictions. That’s enough to turn planning horizons from “next decade” to “this decade.” As such, the prudent move for security leaders is to treat this as a call to action. Below, I offer guidance on how to communicate and operationalize this development for both executive stakeholders and technical teams responsible for crypto agility.

You can play with your own assumptions and parameters here: CRQC Readiness Benchmark (Q-Day Estimator) and my methodology and assumptions are explained here: CRQC Readiness Benchmark – Benchmarking Quantum Computers on the Path to Breaking RSA-2048.

Key Signals and Tripwires – Why Act Now

To justify moving from “planning” to execution in your post-quantum cryptography (PQC) program, I teach my clients how to estimate Q-Day themselves and to always watch for “tripwires” – technical achievements that should trigger or accelerate action.

With this research, multiple technical tripwires, as I defined them, have been tripped: a key error-rate threshold has been passed, in a thermally robust way, on a platform designed to scale. These are precisely the signals risk managers have been watching for to move from preparation into action.

- Gate Fidelity Breakthrough: IonQ achieved the ≥99.99% two-qubit fidelity mark on real hardware. This has long been viewed as pivotal for error correction. Status: Met (IonQ/Oxford Ionics experiment). Implication: It’s time to escalate PQC efforts from monitoring to acting. We now have experimental confirmation that the necessary quality for large-scale quantum computing is here.

- Thermal Robustness: The fidelity held steady even without ultra-cooling – errors stayed below ~5×10⁻⁴ under significant ion heating (n̄ up to ~9) in the IonQ demo. Status: Met. Implication: Quantum operations can run at high accuracy without lengthy cool-downs, meaning future machines will execute longer calculations faster than we thought. Adjust any “time to break” estimates downward accordingly.

- Stable Gate Calibration: The new “smooth gate” method showed low sensitivity to imperfections – e.g. minimal leakage errors even when they intentionally detuned the system by ±2 kHz. Status: Demonstrated. Implication: More robust gates = more reliable scaling. It increases confidence that vendors can maintain these fidelities as systems grow, which in turn raises our confidence that large quantum computers will arrive on schedule.

- Cycle Time Watch: IonQ’s current entangling gate takes ~226 µs, but removing cooling overhead could yield an order-of-magnitude speedup in real-world circuit execution. We should watch how fast IonQ and others can make their full error-correction cycles. Implication: If we start seeing credible claims of <100 µs per cycle with these fidelities, that’s another leap toward practicality. It would be a second-order “green light” that quantum computers can not only compute accurately but also quickly.

- Scaling Roadmap Credibility: IonQ is openly messaging 256-qubit systems in 2026 and a pathway to millions of qubits by 2030. Many were skeptical of those numbers; this fidelity milestone lends them credibility. Implication: Align your cryptographic transition plans with the possibility that large-scale quantum machines could appear by the end of the decade. For instance, U.S. federal agencies are already instructed to be quantum-safe by 2030-2033 in various domains – plans which assumed quantum advances would continue. Now we have evidence that assumption is holding. Use “256 qubits by next year” as justification to start pilot deployments of PQC now rather than waiting.

CRQC checklist: map Gidney’s assumptions to IonQ’s published data

Let’s see how IonQ’s published data compares with the best-known algorithmic approach to cracking RSA-2048: Craig Gidney’s paper from May 2025.

Legend: Green = aligned or better. Amber = plausible but incomplete or unproven at system scale. Red = significant gap vs assumption.

| Gidney CRQC assumption (RSA‑2048) | Value in Gidney 2025 | IonQ 2025 status | RAG | What to watch / ask |
|---|---|---|---|---|
| Physical two‑qubit error | 1×10⁻³ (uniform) | ~8.4×10⁻⁵ at Doppler; ≤5×10⁻⁴ heated | Green | Replicate on larger devices and across zones. |
| Single‑qubit / SPAM performance | 1×10⁻³ (uniform) | Trapped‑ion state‑of‑the‑art is 99.999%‑class SPAM and extremely low 1‑qubit error in Oxford‑line work | Green | Confirm IonQ EQC stack specs and drifts at scale. |
| Surface‑code cycle time | 1 μs | Two‑qubit gate ~226 μs; above‑Doppler reduces cooling overhead but not per‑cycle timing yet | Red | Publish code‑cycle targets for EQC hardware; show end‑to‑end speedups beyond two‑ion demos. |
| Control reaction time | 10 μs | Not publicly specified for IonQ EQC | Amber | Provide closed‑loop latency figures on real systems. |
| Max concurrent logical qubits | ≈1.4k active peak (1399 in Table 5) | IonQ messaging: 256‑qubit class prototypes 2026 and scaling toward much larger systems | Amber | Show path from physical to ~1.4k logical with concrete code and overhead. |
| Logical error per round | Target 10⁻¹⁵ to keep a <week run on track | IonQ has not published per‑round logical error; four‑nines physical helps, but metrics differ | Amber | Specify per‑round or per‑op logical error at target distances; align definitions with Gidney. |
| Magic‑state factories / throughput | ~6 factories; cultivation + 8T‑to‑CCZ; week‑scale runtime | Not yet published by IonQ | Red | Publish factory designs, throughput, and schedule on EQC devices. |
| Topology / layout | 2‑D nearest‑neighbor grid | QCCD with shuttling and electronic control; different, but compatible with FTQC | Amber | Demonstrate multi‑zone, low‑cross‑talk logical ops consistent with code layout. |
| Total runtime target | < 1 week factoring under assumptions | Above‑Doppler could cut runtime by >10× in QCCD, but full system hasn’t been shown | Amber | Publish end‑to‑end code‑cycle cadence and logical throughput (rQOPS). |

Bottom line: On error rate, IonQ now exceeds what Gidney assumed. On speed and scale, key disclosures are pending. If IonQ can pair four‑nines with credible code‑cycle time and factory throughput, it closes the gap that matters most for Q‑Day timing.
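The "Red" rating on cycle time in the table above can be made concrete with a deliberately crude calculation. The sketch assumes runtime scales linearly with code-cycle time and uses the ~226 μs two-qubit gate as an optimistic stand-in for a full cycle (a real cycle needs several gates plus measurement). It ignores parallelism, different code choices, and IonQ's unpublished cycle targets, so treat it as an illustration of the gap, not a prediction.

```python
def naive_runtime_days(baseline_days: float, baseline_cycle_us: float,
                       actual_cycle_us: float) -> float:
    """Scale a runtime estimate linearly with cycle time.

    Crude assumption: every other factor (qubit count, parallelism,
    code choice, factory throughput) stays exactly the same.
    """
    if baseline_cycle_us <= 0 or actual_cycle_us <= 0:
        raise ValueError("cycle times must be positive")
    return baseline_days * (actual_cycle_us / baseline_cycle_us)


if __name__ == "__main__":
    # "Under a week" at 1 us cycles, taken here as an upper bound of 7 days.
    days = naive_runtime_days(baseline_days=7, baseline_cycle_us=1,
                              actual_cycle_us=226)
    print(f"Naively scaled runtime: {days:.0f} days (~{days / 365:.1f} years)")
```

On this naive scaling, a one-week run stretches to 1,582 days, or a bit over four years, which is why published code-cycle targets matter as much as the fidelity record.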

Board-Level Summary

Framing the Risk: For a board or C-suite audience, the message should be concise and business-relevant. For example:

“A new milestone in quantum computing was just reached: IonQ demonstrated two-qubit operations with 99.99% accuracy – crossing a long-awaited threshold for practical error-corrected quantum computers. Not only is this a record, but they achieved it in a way that speeds up quantum processing (they bypassed a usual cooling step). In plain English, this means a leading quantum vendor can now run much larger algorithms with far fewer errors. Why does it matter to us? Because it accelerates the timeline for quantum computers to potentially crack encryption. Experts have been predicting the early-2030s for that “Q-Day” moment; this hardware breakthrough reinforces those predictions – and even suggests we should be prepared a bit sooner (late 2020s). In short, a risk we thought was 8-10 years out may hit in ~5 years. We need to ensure our post-quantum cryptography transition is on track, treating this with the urgency of a near-term cybersecurity project, not a distant science experiment.”

This kind of summary puts the technical feat in context (“fewer errors – bigger machines sooner”) and links it to the organization’s risk posture and crypto migration program. The emphasis should be on urgency and validation: a quantum threat once seen as theoretical is rapidly becoming tangible, and a credible industry player just provided proof.

How To Start?

Obtain budget. Use the memo above plus some ideas from “Securing Quantum Readiness Budget Now” and “How CISOs Can Use Quantum Readiness to Secure Bigger Budgets (and Fix Today’s Problems).”

Immediate Next Steps (0-3 months)

Given this development, what should organizations do in the very near term? Here’s a checklist for the next 90 days:

- Establish or Reinforce a PQC Program: If you haven’t already, formally stand up an enterprise-wide post-quantum cryptography program with an owner, budget, and clear KPIs. Quantum risk can no longer be treated as a back-burner R&D topic. As part of this, inventory your cryptographic assets – identify everywhere you use algorithms like RSA, ECC, or DH that could be broken in the future. (In fact, U.S. federal guidance already mandates such inventories; e.g., White House memo OMB M-23-02 in 2022 required agencies to catalog all vulnerable cryptography and start funding transitions.) Knowing what you have is step one.

- Update Procurement Language: Begin requiring NIST-approved post-quantum algorithms in new contracts and tech procurements. For example, mandate that any products support the NIST PQC standards (ML-KEM, formerly Kyber, for key establishment and ML-DSA, formerly Dilithium, for digital signatures, published as FIPS 203 and FIPS 204). Where immediate replacement isn’t possible, ask vendors for roadmaps to be compliant with the NSA’s CNSA 2.0 suite (the U.S. National Security Agency’s post-quantum guidelines) by the designated timelines. In short, make future purchases prove they’re “quantum-resistant” or at least quantum-agile.

- Launch Targeted Pilot Implementations: Identify a few high-impact areas to pilot PQC solutions. Good candidates are:

  - Secure communications (TLS/VPN): Test out hybrid key exchange using a classical algorithm + a PQC algorithm (many libraries and cloud services now support this).

  - Software signing and update integrity: Start implementing dual-signing of critical software or firmware with a PQC digital signature (alongside the existing signature), so you can transition seamlessly later.

  - Public Key Infrastructure (PKI): Experiment with issuing a post-quantum certificate authority (CA) or integrating PQC into your certificate lifecycle, even if just in a test environment.

These pilots will surface practical challenges and help build organizational muscle for the broader migration. Leverage guidance from CISA and NIST (e.g., CISA’s PQC updates and sector-specific roadmaps) to choose pilot scopes.
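For teams new to the hybrid key exchange pilot mentioned above, the core idea can be sketched in a few lines: derive the session key from both the classical and the PQC shared secret, so an attacker must break both. The sketch uses only the Python standard library, implements HKDF-SHA256 (RFC 5869) by hand, and uses placeholder byte strings where a real deployment would take secrets from an ECDH exchange and an ML-KEM encapsulation. Function names and the `info` label are hypothetical; production systems should rely on their TLS or VPN library's built-in hybrid groups instead.

```python
import hashlib
import hmac


def hkdf_sha256(input_key_material: bytes, salt: bytes, info: bytes,
                length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key, then expand it."""
    if length > 255 * 32:
        raise ValueError("requested output is too long for HKDF-SHA256")
    # Extract step; an empty salt defaults to a string of zero bytes.
    prk = hmac.new(salt or b"\x00" * 32, input_key_material, hashlib.sha256).digest()
    # Expand step: chain HMAC blocks until enough output is produced.
    output, block, counter = b"", b"", 1
    while len(output) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        output += block
        counter += 1
    return output[:length]


def hybrid_session_key(classical_secret: bytes, pqc_secret: bytes) -> bytes:
    """Derive one session key from both shared secrets (concatenate, then KDF)."""
    return hkdf_sha256(classical_secret + pqc_secret, salt=b"",
                       info=b"hybrid-kex-demo")


if __name__ == "__main__":
    # Placeholder secrets; NOT the output of a real key exchange.
    classical = bytes.fromhex("11" * 32)
    post_quantum = bytes.fromhex("22" * 32)
    print(hybrid_session_key(classical, post_quantum).hex())
```

Because both secrets feed the derivation, the session key stays safe as long as either the classical or the post-quantum exchange remains unbroken, which is the property that makes hybrids attractive during the transition.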

- Engage Vendors and Partners: Communicate to your critical suppliers and technology partners that quantum readiness is now part of your security requirements. This might involve sending a questionnaire or adding a section in vendor risk assessments about their PQC transition plans. Many organizations are now asking for crypto-agility – the ability to swap out cryptographic algorithms quickly. Make sure your vendors are aware that you expect compatibility with PQC standards in the coming few years.
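As a starting point for the inventory step in the checklist above, even a simple keyword scan over configuration and source files will surface many uses of RSA, ECC, and DH. The sketch below is a hypothetical first pass: the patterns, file suffixes, and function names are my own illustrative choices, and a real inventory also needs certificate, binary, and network-traffic analysis.

```python
import re
from collections import Counter
from pathlib import Path

# Illustrative patterns only; keyword matching will miss and over-report cases.
QUANTUM_VULNERABLE_PATTERNS = {
    "RSA": re.compile(r"\bRSA\b", re.IGNORECASE),
    "ECC": re.compile(r"\b(?:ECDSA|ECDHE?|secp256r1|prime256v1)\b", re.IGNORECASE),
    "DH": re.compile(r"\b(?:DHE|Diffie-Hellman)\b", re.IGNORECASE),
}


def scan_text(text: str) -> Counter:
    """Count mentions of each quantum-vulnerable algorithm family in a string."""
    hits = Counter()
    for family, pattern in QUANTUM_VULNERABLE_PATTERNS.items():
        hits[family] += len(pattern.findall(text))
    return hits


def scan_directory(root: str, suffixes=(".conf", ".py", ".yaml", ".yml")) -> dict:
    """Scan matching files under root; return {path: Counter} for files with hits."""
    results = {}
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            hits = scan_text(path.read_text(errors="ignore"))
            if sum(hits.values()) > 0:
                results[str(path)] = hits
    return results


if __name__ == "__main__":
    sample = "ssl_ciphers ECDHE-RSA-AES128-GCM-SHA256;\nkey_type = rsa\n"
    print(dict(scan_text(sample)))
```

The output of a scan like this is best treated as a list of leads to verify, not as the inventory itself.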

Near-Term (6-12 months)

Over the next year, the focus should shift from planning to initial rollouts and building resilience into your cryptographic infrastructure:

- Automate Crypto Inventory & Monitoring: Develop a “Crypto Bill of Materials” for your applications, supported by tools that can scan code, binaries, and network traffic to identify which cryptographic algorithms are being used where. This helps track progress (e.g., the percentage of systems that have been upgraded to quantum-safe algorithms) and catches any overlooked usages of vulnerable crypto. Regularly update this inventory (U.S. agencies are required to update their crypto inventory annually through 2035; enterprises should mirror that practice).

- Data Re-encryption & Key Management: Begin re-encrypting or re-keying sensitive data that has a long shelf life. For example, backups, long-term archives, or confidential data that must remain secure for 5+ years should be re-protected with quantum-resistant encryption. Attackers might steal encrypted data now and decrypt it later when they have a quantum computer (“harvest now, decrypt later”), so prioritize any data that would still be sensitive if exposed a decade from now. This may involve deploying hybrid encryption systems (combining classical RSA/ECC with PQC algorithms during a transition period). Use available buyer’s guides (like the U.S. GSA 2025 PQC Buyer’s Guide) for reference on solutions and best practices.

- Build Crypto-Agility into Development: Ensure your CI/CD pipelines and software architectures can handle algorithm changes. This might mean abstracting cryptographic libraries, so you can swap in a new algorithm by configuration, or enabling support for larger key sizes and new certificate formats. Test rolling out a new cryptographic primitive in a controlled way (for instance, switching a dev environment’s TLS to use PQC cipher suites) – measure the impact on performance and compatibility, so you’re not caught off-guard later.

- Third-Party Assurance: Include PQC readiness in your supplier security reviews. For any critical software or service provider, get a statement of their plans for PQC support and expected timelines. If you’re a regulated industry or work with government contracts, tie this to upcoming rules – for example, if an RFP says all cryptography must meet CNSA 2.0 by 2030, you need assurances your vendors will hit that date. Begin updating contracts to stipulate that any new systems must be “Q-Day safe” or upgradable to PQC by default.
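The crypto-agility item above (swapping algorithms by configuration) usually comes down to one design choice: application code asks for "the configured algorithm" and never names a primitive directly. Here is a minimal sketch of that pattern. The registry, class name, and config key are hypothetical, and the two HMAC variants are stand-ins chosen because they ship with Python; they are not post-quantum algorithms.

```python
import hashlib
import hmac
from typing import Callable, Dict

# name -> function(key, message) -> tag.
# HMAC variants are stand-ins for illustration, NOT post-quantum signatures.
MAC_REGISTRY: Dict[str, Callable[[bytes, bytes], bytes]] = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha512": lambda key, msg: hmac.new(key, msg, hashlib.sha512).digest(),
}


class Authenticator:
    """Message authenticator whose algorithm is chosen by configuration."""

    def __init__(self, config: dict):
        name = config["algorithm"]
        if name not in MAC_REGISTRY:
            raise ValueError(f"unsupported algorithm: {name}")
        self.algorithm = name
        self._impl = MAC_REGISTRY[name]

    def tag(self, key: bytes, message: bytes) -> bytes:
        return self._impl(key, message)

    def verify(self, key: bytes, message: bytes, tag: bytes) -> bool:
        # Constant-time comparison to avoid leaking tag bytes via timing.
        return hmac.compare_digest(self.tag(key, message), tag)


if __name__ == "__main__":
    # Swapping algorithms is a one-line config change; callers are untouched.
    for config in ({"algorithm": "hmac-sha256"}, {"algorithm": "hmac-sha512"}):
        auth = Authenticator(config)
        t = auth.tag(b"demo-key", b"hello")
        print(auth.algorithm, len(t), auth.verify(b"demo-key", b"hello", t))
```

Adding a new primitive later means registering one more entry and changing configuration, which is the kind of swap the drills described in this section are meant to exercise.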

Tracking Progress and Reporting Upwards

Finally, start measuring your organization’s crypto-migration progress with metrics that executives and boards can understand. A few examples of metrics/KPIs to report include:

- Coverage: What percentage of your cryptographic assets (applications, protocols) have been identified and assessed for quantum vulnerability? Aim for 100% visibility.

- Migration Progress: How many of those have been upgraded to post-quantum solutions in pilot or production? For instance, “30% of external-facing services now use quantum-safe key exchange.” Track the trend over time.

- Vendor Compliance: What fraction of critical suppliers have committed to PQC support by a given date? (E.g., “80% of our software vendors have roadmapped CNSA 2.0 compliance by 2030.”) This holds your supply chain accountable.

- Crypto-Agility Readiness: How fast can you update cryptographic algorithms in your systems if needed? This might be measured by drills or simulations – for example, report that “an emergency TLS certificate switch took X days in testing” and work to reduce that. In an ideal state, algorithm swaps should be a matter of configuration and take only days or weeks, not months.

- Threat Timeline Watch: Keep a pulse on external developments like IonQ’s. You might set up an internal “quantum threat index” that gets updated whenever a new milestone is hit – e.g., new record fidelity, a prototype with >1000 qubits, a successful demonstration of a fully error-corrected logical qubit, etc. Each time one of these occurs, you can reassess if your crypto timeline needs to accelerate. (IonQ’s four-nines result would score high on such an index, as it directly reduces uncertainty about achieving large-scale quantum computers.)
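The first two KPIs above reduce to simple ratios over the crypto inventory. A minimal sketch, assuming a hypothetical inventory record with just two flags per asset (real inventories will carry far more detail):

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CryptoAsset:
    """One inventory entry; the fields are illustrative assumptions."""
    name: str
    assessed: bool       # identified and assessed for quantum vulnerability
    quantum_safe: bool   # already migrated to a post-quantum solution


def percent(part: int, whole: int) -> float:
    """Percentage that is safe to call with an empty denominator."""
    return 0.0 if whole == 0 else 100.0 * part / whole


def migration_kpis(assets: List[CryptoAsset]) -> Dict[str, float]:
    """Coverage over all assets; migration progress over the assessed ones."""
    total = len(assets)
    assessed = sum(a.assessed for a in assets)
    migrated = sum(a.assessed and a.quantum_safe for a in assets)
    return {
        "coverage_pct": percent(assessed, total),
        "migration_pct": percent(migrated, assessed),
    }


if __name__ == "__main__":
    inventory = [
        CryptoAsset("public website TLS", assessed=True, quantum_safe=True),
        CryptoAsset("VPN gateway", assessed=True, quantum_safe=False),
        CryptoAsset("firmware signing", assessed=True, quantum_safe=False),
        CryptoAsset("legacy batch transfer", assessed=False, quantum_safe=False),
    ]
    for kpi, value in migration_kpis(inventory).items():
        print(f"{kpi}: {value:.1f}%")
```

Reporting the two numbers separately keeps a low migration percentage from being hidden behind incomplete coverage.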

Mapping IonQ’s Milestone to Policy Deadlines

Lastly, connect this technical progress to the regulatory and government timelines that are driving the broader community. For instance, the U.S. National Security Memorandum-10 (NSM-10, 2022) and OMB M-23-02 memo kicked off a requirement for federal agencies to inventory and begin migrating systems to PQC – essentially treating 2030 as a no-later-than date for most transitions. The NSA’s CNSA 2.0 (the updated Suite of cryptographic standards for national security systems) sets specific deadlines in the 2025-2033 range for different product categories (with things like VPNs and web browsers expected to be exclusively quantum-safe by 2033).

IonQ’s achievement is a validation that those timelines were wisely chosen – and arguably, that we should not assume we have slack beyond them. If anything, this news is a nudge that staying ahead of the 2030-2033 mandate is the safer course. Companies should use it to reinforce to regulators, auditors, or internal policy teams that quantum transition projects must stay on schedule or even be pulled forward.

Meanwhile, NIST’s standards for PQC (FIPS 203 for key establishment and FIPS 204 for signatures) are already finalized – meaning we have the tools in hand to act. There is no reason to “wait for better crypto” at this point; the focus should be on implementation and iteration.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.