Harvard’s 448-Atom Quantum Computer Achieves Fault-Tolerant Milestone


November 11, 2025 – A Harvard-led team unveiled a record-breaking neutral-atom quantum processor that for the first time integrates all core elements of scalable, error-corrected quantum computation into a single system.

Quantum computers promise exponential processing power by encoding information in qubits – quantum bits – that can exist in superposition and become entangled. But qubits are notoriously fragile, easily losing their quantum state (a problem known as decoherence). Quantum error correction (QEC) is the decades-old dream solution: encoding logical qubits across many physical qubits so that errors can be detected and corrected on the fly. Until now, QEC remained largely theoretical or limited to small-scale demonstrations because implementing all the ingredients of a fully fault-tolerant architecture is hugely challenging.
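A classical repetition code decoded by majority vote makes "spreading one bit across many carriers" concrete. It is only an analogy: it models independent bit flips with probability p and nothing else. Real QEC must also handle phase errors and cannot copy quantum states. The function name `logical_error_rate` is ours.

```python
from math import comb

def logical_error_rate(p: float, d: int) -> float:
    """Chance that majority voting over d copies decodes the wrong bit.

    Each copy flips independently with probability p; decoding fails when
    more than half of the d copies (d odd) are flipped.
    """
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k)
               for k in range(d // 2 + 1, d + 1))

for p in (0.01, 0.6):
    rates = [logical_error_rate(p, d) for d in (1, 3, 5, 7)]
    print(p, [f"{r:.2e}" for r in rates])
```

For p = 0.01 the failure probability shrinks as d grows. For p = 0.6 it grows instead. This is a toy version of the threshold behavior discussed in the next paragraph; real quantum codes have far lower thresholds than this 50% toy.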

Using 448 rubidium atoms trapped in reconfigurable optical tweezers, the researchers demonstrated a universal quantum computing architecture that detects and corrects errors below the critical “fault-tolerance” threshold. In other words, enlarging the error-correcting code (spending more physical qubits per logical qubit) actually reduces the logical error rate instead of increasing it. Mikhail Lukin, the project’s senior author, said “For the first time, we combined all essential elements for a scalable, error-corrected quantum computation in an integrated architecture…. These experiments – by several measures the most advanced done on any quantum platform to date – create the scientific foundation for practical large-scale quantum computation.” This is the same team that published what I consider the most consequential neutral-atom results of 2023, and that work is worth revisiting for background. It is also the team that recently achieved continuous operation of a 3,000-qubit array. They are firmly establishing themselves as the leading group in the neutral-atom modality.

Lead author Dolev Bluvstein called the system “fault tolerant” and emphasized its conceptual scalability. The system is still far from the millions of qubits needed for a cryptographically relevant quantum computer (CRQC) capable of breaking RSA encryption (the moment known as Q-Day), but the achievement marks an essential step toward that goal. Hartmut Neven, an engineering director at Google Quantum AI (which pursues superconducting qubits), hailed the result as “a significant advance toward our shared goal of building a large-scale, useful quantum computer”. The breakthrough also highlights the rising prominence of neutral atoms among quantum hardware platforms, alongside superconducting circuits and trapped ions, in the quest for scalable quantum machines.

Inside the 448-Qubit Neutral-Atom Processor

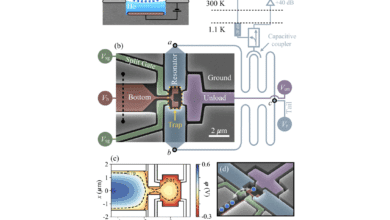

The Harvard system stores qubits in the hyperfine “clock” states of neutral rubidium-87 atoms held in optical tweezers. A spatial light modulator creates up to 448 trapping sites in a 2D grid. These can be rearranged on demand via moving optical tweezers (acousto-optic deflectors) to position atoms for desired gate interactions. This reconfigurable design allows atoms to shuttle between different zones of the processor mid-computation. The team divides the array into separate zones: one for storing idle qubits, one for performing multi-qubit entangling gates, one for reading out qubits, plus a reservoir of spare atoms.

Single-qubit operations are carried out with focused laser beams for Raman rotations on individual atoms, while two-qubit entangling gates use fast excitation of atom pairs to Rydberg states (highly excited atomic states with strong interactions). All qubits share a common global clock laser for synchronization and can receive parallel control pulses – crucially, entire blocks of atoms can be driven in unison for logical operations on encoded qubits. This parallelism means that, for example, all physical qubits within a logical code block can be entangled simultaneously during a logical gate or measured together for error syndrome extraction.
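The toy model below illustrates the zoned layout and the "one pulse, many gates" parallelism. Everything in it is hypothetical: the class name, the zone names and the `global_rydberg_pulse` helper are not the Harvard control software. It also abstracts away the physics, such as requiring paired atoms to sit within the Rydberg blockade radius.

```python
# Toy model of a zoned neutral-atom array (hypothetical names throughout).
ZONES = ("storage", "entangling", "readout", "reservoir")

class ZonedArray:
    def __init__(self, n_atoms: int):
        self.zone = {atom: "storage" for atom in range(n_atoms)}  # all start idle

    def move(self, atoms, target: str) -> None:
        """Shuttle a group of atoms into a zone, as the moving tweezers would."""
        if target not in ZONES:
            raise ValueError(f"unknown zone: {target!r}")
        for atom in atoms:
            self.zone[atom] = target

    def global_rydberg_pulse(self, pairs):
        """One shared pulse: every pair sitting together in the entangling
        zone receives a CZ gate; atoms in other zones are unaffected."""
        return [(a, b) for a, b in pairs
                if self.zone[a] == "entangling" and self.zone[b] == "entangling"]

# Transversal CZ between two 7-atom code blocks: pair atom i of block A with
# atom i of block B, bring both blocks into the entangling zone, pulse once.
array = ZonedArray(21)
block_a, block_b = list(range(7)), list(range(7, 14))
array.move(block_a + block_b, "entangling")
applied = array.global_rydberg_pulse(list(zip(block_a, block_b)) + [(14, 15)])
print(len(applied))  # 7: atoms 14 and 15 stayed in storage, so only block pairs fire
```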

A key hardware innovation is the non-destructive qubit readout via “spin-to-position conversion.” In traditional cold-atom experiments, measuring an atom’s state often causes the atom to be lost (atoms in one state are ejected or appear dark, a form of measure-by-loss). The Harvard team instead uses a state-selective optical lattice that pulls the two spin states into separate positions without kicking the atom out. Essentially, if the qubit is in state |1⟩ (“bright” state), it gets pinned by the lattice and stays put, whereas if it’s in state |0⟩ (“dark”), it is free to be moved by the tweezers. After this separation, an imaging camera can detect which position the atom ended up in – thus revealing the qubit state while keeping the atom available for reuse. This spin-to-position readout yields both the measurement outcome and an “erasure” flag for any atom loss, improving the error correction decoding (since the system knows when an atom vanished entirely). Combined with techniques to quickly re-cool and re-initialize measured atoms, the processor can recycle qubits mid-circuit instead of discarding them. Bluvstein et al. report that this upgrade boosted their experimental cycle rate by two orders of magnitude by avoiding the need to reload a new atom after each measurement.
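The readout logic can be sketched as a small classifier that turns each atom's camera image into 0, 1 or an erasure flag. The decoder can then use the erasure flags. The enum and function names are illustrative. Treating a double detection as an erasure is our assumption, not something the text specifies.

```python
from enum import Enum

class Outcome(Enum):
    ZERO = 0
    ONE = 1
    ERASURE = 2   # atom missing: its position is known, its value is not

def classify(at_home_site: bool, at_shifted_site: bool) -> Outcome:
    """Turn the two camera checks for one atom into a readout result.

    Convention follows the text: |1> is pinned by the state-selective
    lattice and stays at its home site, while |0> is moved to a shifted site.
    """
    if at_home_site and not at_shifted_site:
        return Outcome.ONE
    if at_shifted_site and not at_home_site:
        return Outcome.ZERO
    # Neither site occupied means the atom was lost. A double detection is
    # physically inconsistent; this sketch also flags it as an erasure.
    return Outcome.ERASURE

# Camera results for four atoms: (seen at home site, seen at shifted site)
shots = [(True, False), (False, True), (False, False), (True, False)]
results = [classify(*s) for s in shots]
erasures = [i for i, r in enumerate(results) if r is Outcome.ERASURE]
print([r.name for r in results], erasures)
```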

Finally, the machine’s control infrastructure – high-speed waveform generators and optical modulators – was refined to coordinate the intricate sequence of laser pulses and atom moves with sub-10 ns timing jitter. With these advances, the team could run complex quantum circuits lasting over 1 second and involving up to 27 layers of operations on the atoms. By comparison, many prior neutral-atom experiments were limited to much shallower circuits before decoherence or atom loss dominated. The extended coherence is aided by the use of magnetically insensitive clock states (with >1 s qubit coherence times) and dynamic decoupling pulses applied during idle periods.
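Dynamic decoupling works because a refocusing pulse reverses the phase a qubit picks up from a static detuning. The sketch below shows the simplest case, a single spin echo. It averages ⟨X⟩ over an ensemble of random static detunings, with and without a π pulse at the midpoint. The Gaussian spread and time units are illustrative assumptions. Real decoupling sequences use many pulses and also target slowly varying noise.

```python
import cmath
import math
import random

def free_evolution(state, delta, t):
    """Evolve (a, b) = a|0> + b|1> under a static detuning delta for time t."""
    a, b = state
    return (a * cmath.exp(-0.5j * delta * t), b * cmath.exp(0.5j * delta * t))

def pi_pulse_x(state):
    """Ideal pi pulse about X, up to a global phase: swaps |0> and |1>."""
    a, b = state
    return (b, a)

def mean_x(deltas, total_time, echo):
    """Average <X> over an ensemble of detunings, starting from |+>."""
    plus = (1 / math.sqrt(2), 1 / math.sqrt(2))
    total = 0.0
    for delta in deltas:
        if echo:   # wait T/2, flip, wait T/2: the accumulated phases cancel
            half = free_evolution(plus, delta, total_time / 2)
            s = free_evolution(pi_pulse_x(half), delta, total_time / 2)
        else:      # plain wait: the relative phase delta*T survives
            s = free_evolution(plus, delta, total_time)
        a, b = s
        total += 2 * (a.conjugate() * b).real
    return total / len(deltas)

rng = random.Random(0)
deltas = [rng.gauss(0.0, 1.0) for _ in range(2000)]   # illustrative spread
print(round(mean_x(deltas, 3.0, echo=False), 3), round(mean_x(deltas, 3.0, echo=True), 3))
```

Without the echo, the ensemble dephases and ⟨X⟩ averages toward zero. With it, every member returns to |+⟩ regardless of its detuning.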

Repeated Quantum Error Correction in a Surface Code

To test fault tolerance, the researchers implemented a small surface code – a leading QEC code where physical qubits are arranged on a grid with overlapping stabilizer measurements (checks) that detect error syndromes. In this experiment, a distance-$$d$$ surface code (with $$d=3$$ or $$5$$) encoded one logical qubit into a block of $$d^2$$ data atoms plus additional ancilla atoms for measurement. They performed up to 5 consecutive rounds of error syndrome extraction, using fresh ancilla atoms each round to measure multi-qubit parity operators (stabilizers) in both X and Z bases. Between rounds, any detected errors were decoded using a machine-learning decoder informed by atom loss data, and corrective logical operations (if needed) could be applied in post-processing.
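The sketch below builds the checks for a distance-d surface code. It assumes the common "rotated" layout, with one ancilla per stabilizer; the experiment's exact geometry and orientation may differ. It confirms two things: a distance-d code has d² data qubits and d² − 1 stabilizers, and every X check commutes with every Z check.

```python
from itertools import product

def rotated_surface_code(d: int):
    """Return (x_checks, z_checks) for a distance-d rotated surface code.

    Data qubits sit at (row, col) on a d x d grid; each check is the set of
    data qubits touching plaquette corner (i, j), 0 <= i, j <= d.
    Assumes odd d and one common orientation convention.
    """
    x_checks, z_checks = [], []
    for i, j in product(range(d + 1), repeat=2):
        qubits = frozenset((r, c) for r in (i - 1, i) for c in (j - 1, j)
                           if 0 <= r < d and 0 <= c < d)
        kind = "X" if (i + j) % 2 == 0 else "Z"   # checkerboard of check types
        if len(qubits) == 4:
            keep = True                           # bulk weight-4 check
        elif len(qubits) == 2:
            on_top_or_bottom = i in (0, d)
            # Weight-2 boundary checks: X type on top/bottom, Z type on left/right
            keep = kind == ("X" if on_top_or_bottom else "Z")
        else:
            keep = False                          # corners carry no check
        if keep:
            (x_checks if kind == "X" else z_checks).append(qubits)
    return x_checks, z_checks

def all_commute(x_checks, z_checks):
    """X and Z checks commute iff every pair overlaps on an even number of qubits."""
    return all(len(x & z) % 2 == 0 for x in x_checks for z in z_checks)

for d in (3, 5):
    xs, zs = rotated_surface_code(d)
    print(f"d={d}: {d * d} data atoms, {len(xs) + len(zs)} ancillas "
          f"({len(xs)} X, {len(zs)} Z), commuting: {all_commute(xs, zs)}")
```

Under this assumed layout, a d = 3 patch uses 9 + 8 = 17 atoms and a d = 5 patch uses 25 + 24 = 49.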

Crucially, the system showed “below-threshold” error rates, meaning error correction actually improved with larger code size. For example, using a distance-5 code instead of distance-3 more than halved the logical error rate per round (a 2.14× reduction). This indicates the physical qubit error probability $$p$$ was below the code’s threshold $$p_{\mathit{th}}$$, so more redundancy yields exponential suppression of logical errors. Specifically, Bluvstein et al. achieved an error per logical QEC round of ~0.6% for the $$d=5$$ surface code, compared to ~1.3% for $$d=3$$. They note that two features improved QEC performance by ~1.7× compared to decoding without them: incorporating atom loss detection, and using a modern decoding algorithm (a neural network trained on both simulated and experimental data). The decoder takes into account so-called “erasure” errors (flagged atom losses), which are easier to correct than unknown errors because their locations are known. It also uses “supercheck” operators (products of the stabilizers surrounding a lost atom) to recover syndrome information that the individual stabilizers, whose outcomes the loss randomizes, can no longer provide. By leveraging these, the team could effectively handle atom loss events that occurred during the QEC cycles, greatly reducing their impact on the logical error rate.
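The suppression factor implied by the quoted numbers is easy to work out. Λ is the ratio of logical error rates when the code distance grows by two. The extrapolation below assumes Λ stays constant as d grows, an idealization that real hardware need not follow.

```python
# Approximate logical error per QEC round quoted in the text
eps = {3: 0.013, 5: 0.006}

# Suppression factor Lambda: error ratio when the distance grows by 2
lam = eps[3] / eps[5]
print(f"Lambda from rounded values: {lam:.2f}")   # 2.17; the text quotes 2.14

def projected_error(d: int, d_ref: int = 5) -> float:
    """Idealized extrapolation eps_d = eps_ref / Lambda**((d - d_ref) / 2).

    Assumes Lambda stays fixed as d grows, which real hardware need not do.
    """
    return eps[d_ref] / lam ** ((d - d_ref) / 2)

for d in (7, 9, 11):
    print(d, f"{projected_error(d):.1e}")
```

The small gap between 2.17 and 2.14 comes from rounding the input error rates.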

Perhaps the most striking result was observing the “quantum Zeno effect” in action: by repeatedly measuring and correcting, coherent errors were rapidly converted into incoherent, correctable ones. The group intentionally injected a small systematic rotation error on all qubits each cycle; with no QEC, this would accumulate as a coherent logical error. But with frequent stabilizer measurements, the coherent drift is constantly interrupted (Zeno effect), breaking it into random small errors that the code can catch. Thus, after 4–5 rounds the logical qubit ended in a state closer to the ideal than it would have without correction, demonstrating genuine error suppression rather than just detection. This is a first for neutral-atom qubits – previous demonstrations in atoms were limited to one or two rounds of error correction or were not below threshold.
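A single-qubit toy model shows why frequent measurement tames coherent drift. Each cycle rotates the qubit by a small angle θ. Without measurement, the rotations add up coherently and the error probability grows roughly as n². With a Z measurement after every cycle, standing in for the stabilizer measurements, the drift is projected into independent small flip probabilities that grow only roughly as n. This is illustrative only and does not reproduce the experiment's data.

```python
import math

def coherent_error(theta: float, n: int) -> float:
    """No intermediate measurements: n rotations by theta add up to n*theta."""
    return math.sin(n * theta / 2) ** 2

def measured_error(theta: float, n: int) -> float:
    """Z measurement after every step: each step flips the recorded state with
    probability q = sin^2(theta/2); the end state is wrong after an odd
    number of flips, which happens with probability (1 - (1 - 2q)**n) / 2."""
    q = math.sin(theta / 2) ** 2
    return (1 - (1 - 2 * q) ** n) / 2

theta = 0.05   # illustrative over-rotation per cycle, in radians
for n in (1, 10, 40):
    print(n, f"{coherent_error(theta, n):.4f}", f"{measured_error(theta, n):.4f}")
```

In the code itself, the resulting discrete flips are what the decoder then corrects. This sketch stops at the projection step.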

Entangling Logical Qubits: Transversal Gates vs. Lattice Surgery

Error-corrected qubits are only useful if we can compute with them. The Harvard team showed two ways to perform a logical CNOT (entangling two logical qubits) and studied the trade-offs. The first method is a transversal gate: apply controlled-NOT in parallel between each pair of corresponding physical qubits across two code blocks. The second is lattice surgery, where two separate logical qubits are merged by measuring joint stabilizers and then split apart – effectively “stitching” two surfaces into one and then ripping them to entangle the logical states.

In their experiments, transversal CNOTs had the advantage of speed and resilience to measurement errors. Because transversal gates don’t require extra ancilla and can be done in one synchronized step, they introduced fewer opportunities for error per logical operation. Indeed, the researchers found the transversal approach tolerated ancilla readout errors much better than lattice surgery, which involves multiple measurement cycles and is more sensitive to those errors. On the other hand, lattice surgery is more resource-frugal: it doesn’t need a full extra code block for an ancilla and can be more efficient for certain algorithmic structures. The team ran sequences of up to 3 logical CNOTs in a row, interleaved with QEC cycles, and monitored how the logical error probability scaled. They observed an optimal balance when roughly 3 transversal CNOT gates were done per QEC round. Beyond that, errors from the gates accumulated, while too few gates per round spent QEC cycles inefficiently and lowered throughput. Lattice surgery, in contrast, benefited from error detection and extra QEC to mitigate its higher per-gate noise.
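The fault-tolerance of transversal gates can be checked with simple Pauli-error bookkeeping. This uses the standard CNOT propagation rules: X errors copy from control to target, and Z errors copy from target to control. Because qubit i of one block interacts only with qubit i of the other, a single physical fault never becomes two errors inside the same block. The function names are ours.

```python
def transversal_cnot_x(x_ctrl, x_tgt):
    """Propagate X-error patterns (0/1 per physical qubit) through a
    transversal CNOT: qubit i of the control block talks only to qubit i of
    the target block, so an X error on control qubit i is copied to target
    qubit i and nowhere else."""
    if len(x_ctrl) != len(x_tgt):
        raise ValueError("code blocks must have the same size")
    return list(x_ctrl), [c ^ t for c, t in zip(x_ctrl, x_tgt)]

def transversal_cnot_z(z_ctrl, z_tgt):
    """Z errors propagate the other way: from target qubit i to control qubit i."""
    if len(z_ctrl) != len(z_tgt):
        raise ValueError("code blocks must have the same size")
    return [c ^ t for c, t in zip(z_ctrl, z_tgt)], list(z_tgt)

# A single X fault on qubit 2 of a 7-qubit control block before the gate
ctrl, tgt = transversal_cnot_x([0, 0, 1, 0, 0, 0, 0], [0] * 7)
print(sum(ctrl), sum(tgt))  # 1 1: at most one error per block, still correctable at d = 3
```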

In simple terms, the transversal gates were faster and “cleaner” but less flexible (limited to certain operations), whereas lattice surgery was slower and more error-prone but can realize operations like logical merging/splitting that transversal gates cannot easily do. The Harvard results are among the first to experimentally compare these two paradigms of logical operation on the same platform. Both approaches worked, entangling logical qubits with high fidelity, but with differing sensitivity to errors. Notably, they confirmed that regular QEC cycles during computation (“stabilizer measurements during logic”) help keep entropy under control even as gates are performed. This validated the idea that one should interleave error-correction with computation continuously in a fault-tolerant machine, rather than attempting long stretches of computing without checking for errors.
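At the logical level, the usual lattice-surgery CNOT is a sequence of joint parity measurements plus Pauli corrections:

1. Prepare an ancilla patch in |+⟩.
2. Merge and split control with ancilla to measure Z_C Z_A.
3. Merge and split ancilla with target to measure X_A X_T.
4. Read out the ancilla in Z.

The statevector sketch below lets one qubit stand in for each whole code patch. That is a simplifying assumption, since in hardware each merge spans several QEC rounds. It checks that the protocol reproduces a CNOT for every combination of random measurement outcomes.

```python
import math
import random

C, A, T = 4, 2, 1   # bit masks: control, ancilla, target in a 3-qubit basis index

def apply_pauli(psi, xmask, zmask):
    """Return P|psi> for P = (X on xmask)(Z on zmask); masks must not overlap."""
    out = [0j] * len(psi)
    for b, amp in enumerate(psi):
        sign = -1 if bin(b & zmask).count("1") % 2 else 1
        out[b ^ xmask] += sign * amp
    return out

def measure(psi, xmask, zmask, rng):
    """Projectively measure a Pauli product; return (outcome bit, new state)."""
    p_psi = apply_pauli(psi, xmask, zmask)
    branches = []
    for s in (1, -1):                      # +1 eigenvalue -> bit 0, -1 -> bit 1
        proj = [(u + s * v) / 2 for u, v in zip(psi, p_psi)]
        branches.append((sum(abs(x) ** 2 for x in proj), proj))
    m = 0 if rng.random() < branches[0][0] else 1
    prob, proj = branches[m]
    return m, [x / math.sqrt(prob) for x in proj]

def lattice_surgery_cnot(ct, rng):
    """CNOT from joint parity measurements, with each qubit standing in for a
    whole code patch. ct holds 4 amplitudes indexed by 2*c + t."""
    # Attach an ancilla prepared in |+>: amplitude index is 4*c + 2*a + t
    psi = [ct[2 * c + t] / math.sqrt(2) for c in (0, 1) for a in (0, 1) for t in (0, 1)]
    m1, psi = measure(psi, 0, C | A, rng)   # merge/split control with ancilla: Z_C Z_A
    m2, psi = measure(psi, A | T, 0, rng)   # merge/split ancilla with target: X_A X_T
    m3, psi = measure(psi, 0, A, rng)       # read out the ancilla in the Z basis
    if m2:
        psi = apply_pauli(psi, 0, C)        # Pauli frame fix: Z on control
    if m1 ^ m3:
        psi = apply_pauli(psi, T, 0)        # Pauli frame fix: X on target
    return [psi[4 * c + 2 * m3 + t] for c in (0, 1) for t in (0, 1)]

def ideal_cnot(ct):
    return [ct[0], ct[1], ct[3], ct[2]]     # |1,t> -> |1,1-t>

rng = random.Random(7)
raw = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(4)]
norm = math.sqrt(sum(abs(x) ** 2 for x in raw))
state = [x / norm for x in raw]
out = lattice_surgery_cnot(state, rng)
fidelity = abs(sum(u.conjugate() * v for u, v in zip(ideal_cnot(state), out))) ** 2
print(round(fidelity, 6))  # 1.0 whatever the random outcomes were
```

Every run needs classically controlled corrections that depend on the outcomes, which is why lattice surgery is more exposed to measurement errors than a one-shot transversal gate.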

Teleportation: A Shortcut to Universal Gates

One limitation of 2D surface codes is that, by themselves, they cannot perform certain “non-Clifford” gates within the code while remaining fault-tolerant. An example is the $$T$$ gate, a $$\pi/4$$ phase rotation often called the $$\pi/8$$ gate. This is a consequence of the Eastin-Knill theorem, which prohibits any code from having a fully transversal universal gate set. To achieve universal quantum computing, one strategy is to prepare special magic states and inject them via teleportation to implement gates like $$T$$. The Harvard group implemented a form of this using a small 3D color code (specifically a 15-qubit Reed-Muller code) in the $$|T_L⟩$$ state as an ancilla. They performed a logical teleportation protocol: they entangled the ancilla with the data block via a transversal CZ and then measured the ancilla. This effectively teleported a $$T$$ gate onto the logical data qubit. It allowed them to execute arbitrary-angle rotations on the logical qubit, not just the 90° rotations of the Clifford group.
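The core idea of magic-state injection fits in a few lines of single-qubit arithmetic. The sketch uses the textbook injection circuit:

- CNOT with the magic qubit T|+⟩ as control and the data qubit as target.
- Z measurement of the data qubit.
- A Clifford fix-up (X then S) on outcome 1.

It is not the Harvard circuit, which the text describes with a transversal CZ between code blocks. It only shows that consuming one magic state applies T exactly, up to a global phase, on either measurement branch.

```python
import cmath
import math
import random

W = cmath.exp(1j * math.pi / 4)   # T = diag(1, W), the "pi/8 gate"

def inject_t(psi, rng):
    """Apply T to psi = (a, b) by consuming one magic state T|+>.

    Textbook circuit, assumed for illustration: CNOT with magic qubit M as
    control and data qubit D as target, measure D in Z, and on outcome 1
    apply X then S (both Clifford) to M. The result lives on M.
    """
    a, b = psi
    r = 1 / math.sqrt(2)
    # Amplitudes after the CNOT, keyed by (d, m) for data bit d and magic bit m
    amp = {(0, 0): a * r, (0, 1): b * W * r, (1, 0): b * r, (1, 1): a * W * r}
    p0 = abs(amp[(0, 0)]) ** 2 + abs(amp[(0, 1)]) ** 2    # always 1/2
    d = 0 if rng.random() < p0 else 1
    norm = math.sqrt(abs(amp[(d, 0)]) ** 2 + abs(amp[(d, 1)]) ** 2)
    m0, m1 = amp[(d, 0)] / norm, amp[(d, 1)] / norm
    if d == 1:
        m0, m1 = m1, m0           # X correction
        m1 = 1j * m1              # S = diag(1, i) correction
    return (m0, m1)

rng = random.Random(3)
psi = (complex(math.cos(0.4)), cmath.exp(0.9j) * math.sin(0.4))
target = (psi[0], W * psi[1])     # T applied directly
for _ in range(4):
    out = inject_t(psi, rng)
    fidelity = abs(target[0].conjugate() * out[0] + target[1].conjugate() * out[1]) ** 2
    print(round(fidelity, 9))     # 1.0 whichever branch occurred
```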

They demonstrated this by synthesizing various rotation angles and performing full tomography to verify the output state. With up to three $$T$$ gates in sequence, they could reach different points on the Bloch sphere, and the angles matched theory within error bars. Importantly, the overhead for these synthesized rotations grows only polylogarithmically in the target precision, consistent with the Solovay-Kitaev theorem. This means a modest number of magic states can produce a high-fidelity small rotation. The use of 3D codes is clever because the [[15,1,3]] Reed-Muller code does have a transversal $$T$$ gate. By teleporting from a 3D code to a 2D code (and even demonstrating code switching between them), the team showed a path to get the best of both: the practical hardware benefits of a 2D planar code and the transversal $$T$$ of a 3D code. Essentially, measurement sidesteps Eastin-Knill, which constrains only unitary transversal gates. By leveraging measurement-induced teleportation, they achieved universality using only transversal gates combined with measurement.
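Gate synthesis can be illustrated with a brute-force search. It tries every sequence of Hadamard and $$T$$ gates up to a given length and keeps the one closest to a target rotation. This is a deliberately naive stand-in: its cost grows exponentially with word length, whereas Solovay-Kitaev-style algorithms achieve the polylogarithmic scaling mentioned above. The target angle of 0.3 rad is arbitrary.

```python
import cmath
import math
from itertools import product

S2 = 1 / math.sqrt(2)
GATES = {"H": [[S2, S2], [S2, -S2]],
         "T": [[1, 0], [0, cmath.exp(1j * math.pi / 4)]]}
IDENTITY = [[1, 0], [0, 1]]

def matmul(p, q):
    return [[sum(p[i][k] * q[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def distance(u, v):
    """Global-phase-insensitive distance: 0 exactly when u = v up to a phase."""
    overlap = abs(sum(u[i][j].conjugate() * v[i][j] for i in range(2) for j in range(2))) / 2
    return math.sqrt(max(0.0, 1 - overlap))

def best_word(target, max_len):
    """Exhaustive search over all H/T words up to max_len (cost grows as 2**max_len).
    Words are read left to right in time order."""
    best_w, best_d = "", distance(IDENTITY, target)
    for n in range(1, max_len + 1):
        for word in product("HT", repeat=n):
            u = IDENTITY
            for g in word:
                u = matmul(GATES[g], u)     # later gates multiply from the left
            dist = distance(u, target)
            if dist < best_d:
                best_w, best_d = "".join(word), dist
    return best_w, best_d

theta = 0.3   # target: a small Z rotation outside the Clifford group
rz = [[cmath.exp(-0.5j * theta), 0], [0, cmath.exp(0.5j * theta)]]
for max_len in (2, 6, 10):
    word, err = best_word(rz, max_len)
    print(max_len, word, round(err, 4))
```

Longer words can only match or beat shorter ones, since every shorter word is still among the candidates.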

The authors emphasize that teleportation is a powerful tool not only for gates but also for error management. Since their logical teleportation moves the logical state onto a fresh block of qubits and leaves behind the old block, it provides a way to periodically “swap out” the qubits that have accumulated physical errors for new ones – effectively a quantum reboot mid-circuit. In their deep-circuit experiments, they used this idea repeatedly to ensure the system’s entropy remained roughly constant over time. All told, they performed “hundreds of logical teleportations” over the course of lengthy algorithmic sequences, involving dozens of logical qubits encoded in either [[7,1,3]] Steane codes or a high-rate [[16,6,4]] code, while successfully maintaining error-corrected operation.
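The [[7,1,3]] Steane code's error correction can be shown directly. Its stabilizers come from the parity-check matrix of the classical [7,4] Hamming code, so the three syndrome bits of a single X error spell out the error's position. Z errors are handled identically by the X-type checks. The sketch covers only the decoding logic, not the experiment's circuits.

```python
# Parity-check matrix of the classical [7,4] Hamming code: column j (0-based)
# is the binary representation of j + 1. The Steane [[7,1,3]] code uses these
# three rows both as X-type and as Z-type stabilizers.
HAMMING = [[(col >> bit) & 1 for col in range(1, 8)] for bit in range(3)]

def syndrome(x_error):
    """Outcomes of the three Z-type stabilizers for an X-error pattern."""
    return [sum(h * e for h, e in zip(row, x_error)) % 2 for row in HAMMING]

def correct(x_error):
    """Undo a single X error: the syndrome bits spell out its position."""
    s = syndrome(x_error)
    position = s[0] + 2 * s[1] + 4 * s[2]   # 0 means no error detected
    fixed = list(x_error)
    if position:
        fixed[position - 1] ^= 1
    return fixed

print(correct([0, 0, 0, 0, 1, 0, 0]))   # single error: fully removed
print(correct([1, 1, 0, 0, 0, 0, 0]))   # two errors: "fixed" into a weight-3 logical X
```

The second case shows why the code has distance 3. Two errors fool the decoder into completing a weight-3 Hamming codeword, which acts as a logical X, exactly the kind of failure that repeated QEC and fresh blocks aim to make rare.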

Deep Circuits and Constant Entropy Operation

With mid-circuit measurement and teleportation-based resets in hand, Bluvstein et al. pushed their processor to run some of the deepest quantum circuits ever executed on an error-corrected platform. They report running logical circuits up to 27 layers (~1.1 seconds long) that involved dynamic atom reuse and active cooling between layers. One highlight was a 150-cycle Rabi oscillation test on the same atoms with intermittent measurements and reinitialization – essentially measuring a qubit 150 times in sequence while preserving its quantum coherence. The fact that the same atoms could undergo 150 measurement/reset cycles and still exhibit clean Rabi oscillations attests to the stability of the mid-circuit reuse technique and the ability to remove entropy injected by measurements.

The concept of “constant internal entropy” means that as the computation proceeds, errors and entropy introduced are continuously stripped away so the quantum register doesn’t heat up or decohere cumulatively. The Harvard team achieved this by a combination of methods: (1) Teleporting logical qubits onto new physical qubits (from the reservoir) periodically, as described above, so old errors don’t propagate. (2) Using 1D optical molasses cooling and optical pumping in between gate layers to remove motional energy and reset any qubits that were measured. (3) A 1529 nm “shield” laser that protected idle qubits in the storage zone from stray light during readout and cooling of their neighbors. With all these, they effectively turned the usually detrimental processes (measurement, leakage, etc.) into benign or even helpful ones by making them dissipative resets of a subset of qubits. In the paper’s words, “physical errors remain on the previous block… measuring this block then enables qubit reset… ensuring that errors are not carried forward”. This is a remarkable illustration of the interplay between quantum logic and entropy removal, a theme the authors highlight as key to efficient quantum architectures.

They also experimented with a high-rate [[16,6,4]] code, which encodes 6 logical qubits in 16 physical qubits (much higher information density than the surface code). Such quantum LDPC codes are theoretically promising but typically have very complex circuits. By using their teleportation approach, the team was able to handle a [[16,6,4]] code and observe it behaving as expected under error correction, with error rates comparable to the simpler codes. This suggests their architecture can accommodate more advanced QEC codes down the line.
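The information-density comparison is simple arithmetic on the [[n, k, d]] parameters. The sketch counts data qubits only; syndrome-extraction ancillas come on top. The codes also have different distances, so this is not an apples-to-apples comparison of protection.

```python
from fractions import Fraction

# [[n, k, d]] parameters for codes mentioned in the text (surface code: d*d data qubits)
CODES = {
    "surface d=3": (9, 1, 3),
    "surface d=5": (25, 1, 5),
    "Steane [[7,1,3]]": (7, 1, 3),
    "[[16,6,4]]": (16, 6, 4),
}

def data_qubits_needed(k_target: int, n: int, k: int) -> int:
    """Data qubits to hold k_target logical qubits in separate copies of one code."""
    blocks = -(-k_target // k)   # ceiling division
    return blocks * n

for name, (n, k, d) in CODES.items():
    print(f"{name:18s} rate {str(Fraction(k, n)):>5s}  distance {d}  "
          f"data qubits for 6 logical: {data_qubits_needed(6, n, k)}")
```

Holding six logical qubits takes 16 data qubits in one [[16,6,4]] block. It takes 42 in six Steane blocks and 54 in six distance-3 surface-code patches.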

A Glimpse of the Future Quantum Computer

Harvard’s achievement underscores that neutral-atom qubits are now serious contenders in the quantum computing race. Just a year or two ago, superconducting qubit systems (like Google’s and IBM’s) were considered clearly ahead in integrating QEC. But this 448-atom platform now demonstrates many of the same essential capabilities – high qubit counts, configurable connectivity, repeated error correction, and logical operations – with some unique advantages. Neutral atoms are naturally identical and free from fabrication defects, and they can be scaled to 2D arrays of hundreds or even thousands (indeed, the same group recently ran a 3,000+ atom array continuously for hours). The ability to rearrange atoms on the fly gives flexibility in implementing different QEC codes or circuit layouts on demand. Moreover, mid-circuit measurement and reuse in an atomic system was a major open challenge – now solved here via the spin-to-position technique and 1D optical cooling.

That said, neutral atoms currently operate at a slower clock speed than some other platforms. In this experiment, each error-correction cycle (including moving atoms and performing gates and measurements) took ~4.5 milliseconds, and a logical two-qubit gate (like transversal CNOT) took on the order of hundreds of microseconds. In contrast, superconducting qubits perform gates in tens of nanoseconds and can do an error-correction cycle in a few microseconds. Photonic qubit schemes could be even faster (signals traveling at light-speed). The Harvard group acknowledges this difference but is confident that with engineering improvements – e.g. stronger Rydberg laser power, faster electronics – the neutral-atom cycle times can be cut down significantly. They outline a path to reduce gate errors by 3–5× and speed up the clock by ~10×, which would put the system comfortably below the fault-tolerance threshold with overhead to spare. If achieved, that would allow hundreds of logical operations on thousands of physical qubits with manageable error rates.
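A quick calculation shows what these cycle times mean in wall-clock terms. The 4.5 ms figure and the ~10× goal come from the text. The 2 µs superconducting value is our assumption, picked from within the "few microseconds" quoted above. The million-round workload is illustrative, not from the paper.

```python
# Seconds per QEC round; the superconducting value is an assumption.
CYCLE_TIME_S = {
    "neutral atoms, this work": 4.5e-3,
    "neutral atoms, 10x faster": 4.5e-4,
    "superconducting (assumed 2 us)": 2e-6,
}

def wall_clock_s(n_rounds: int, cycle_time_s: float) -> float:
    """Time to run n_rounds back-to-back QEC rounds (ignores all other overhead)."""
    return n_rounds * cycle_time_s

n_rounds = 1_000_000   # illustrative workload size, not taken from the paper
for name, t in CYCLE_TIME_S.items():
    print(f"{name:32s} {1 / t:>10,.0f} rounds/s   {wall_clock_s(n_rounds, t) / 3600:.3f} h")
```

At 4.5 ms per round, a million rounds take 4,500 s (1.25 h). The projected 10× speed-up brings this to about 7.5 minutes.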

Finally, this work carries profound implications for quantum industry readiness. It is a step closer to the era of fully error-corrected quantum computers that can run arbitrarily long algorithms reliably. While Q-Day is not here yet, the techniques demonstrated – particularly the way teleportation was used to continually refresh the quantum hardware – suggest a clear route to building ever-larger fault-tolerant modules. These could potentially be networked together (the team even speculates about photonic interconnects for neutral-atom modules in the future). Companies like QuEra Computing (a startup co-founded by the researchers) will likely seek to commercialize this technology. In the broader context, it shows that quantum error correction is becoming practical in hardware: we are seeing the needed ingredients being assembled and verified experimentally after years of theory. As Lukin put it, “by realizing and testing these fundamental ideas in a lab, you really start seeing light at the end of the tunnel.”

Quantum hardware is not a one-horse race, and neutral atoms have just leapfrogged into a leading position alongside superconducting and ion-trap systems. Each has its strengths (superconducting qubits still lead on speed, and trapped ions have shown excellent fidelity), but neutral atoms now boast the largest-scale error-corrected computation to date.

Quantum Upside & Quantum Risk - Handled

My company, Applied Quantum, helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.