Feynman and the Early Promise of Quantum Computing

Introduction

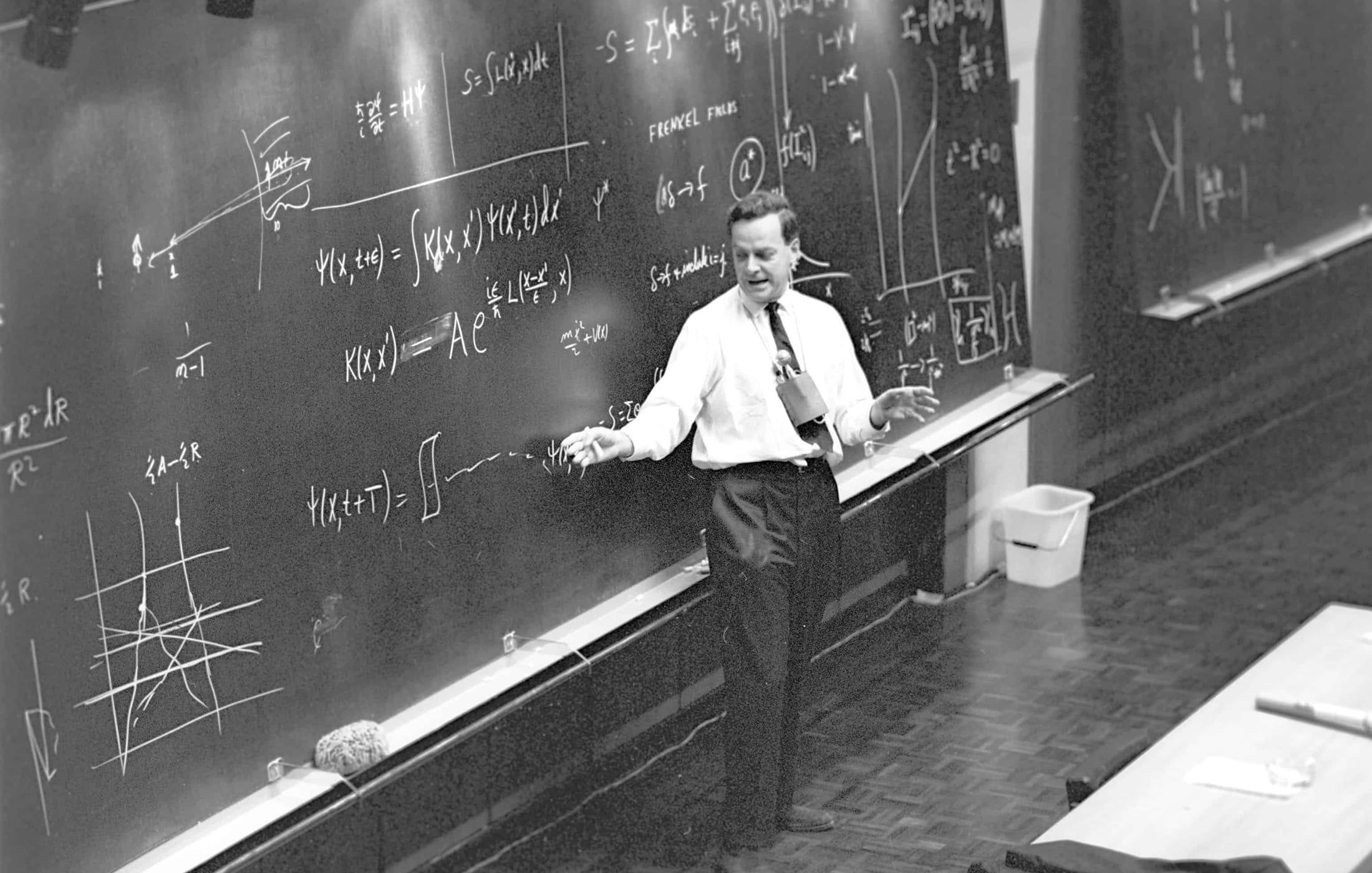

In the early 1980s, the legendary physicist Richard Feynman imagined a new kind of computer – one that operates on the weird rules of quantum mechanics rather than classical physics. Frustrated by how clumsy ordinary computers were at simulating the subatomic world, Feynman famously declared: “Nature isn’t classical, dammit, and if you want to make a simulation of nature, you’d better make it quantum mechanical.” In other words, the only way to truly capture the bizarre behavior of particles (like electrons doing dances that classical bits can’t imitate) was to build a computer that itself spoke nature’s quantum language. This bold idea, sparked in a 1981 lecture at MIT, planted the seeds for what we now call quantum computing.

Feynman’s Vision: A Computer for the Quantum World

Feynman’s insight was born from a stark realization: if you try to use a normal computer to simulate even a tiny quantum system, you quickly hit a wall. The reason is something almost magical – quantum particles can exist in many states at once, and as you add more particles, the number of possible states explodes exponentially. Describing n two-state particles takes 2^n complex numbers, so every added particle doubles the bookkeeping. A classical computer would need to grind through an astronomical number of variables to track every flicker of a molecule or atom. For example, exactly simulating a complex molecule could require more bits of memory than there are atoms on Earth. No matter how fast our classical chips became, they’d always fall hopelessly behind when faced with nature’s quantum richness.
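To get a feel for that wall, here is a rough back-of-the-envelope sketch in Python. It assumes a brute-force simulation that stores one complex amplitude per basis state, each as a 16-byte value. Both the storage format and the function name `state_vector_bytes` are illustrative assumptions, not anything Feynman specified.

```python
def state_vector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to hold every amplitude of an n-qubit state.

    Assumes one complex number per basis state, stored as two 64-bit
    floats (16 bytes). That format is an illustrative assumption.
    """
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    # An n-qubit state has 2**n basis states, hence 2**n amplitudes.
    return (2 ** num_qubits) * bytes_per_amplitude


# Each extra qubit doubles the memory a brute-force simulation needs.
for n in (10, 30, 50, 300):
    print(f"{n:>3} qubits -> {state_vector_bytes(n):.3e} bytes")
```

Under these assumptions, 30 qubits already need about 17 GB, and a few hundred qubits are beyond any conceivable classical memory.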

So Feynman asked: why not turn the problem on its head? Instead of forcing a classical machine to pretend to be quantum, build a computer that is quantum from the ground up. Such a machine would use quantum bits, or qubits, which can exist in multiple states simultaneously (thanks to a phenomenon called superposition). In theory, a handful of qubits could juggle an immense number of possibilities all at once, allowing us to simulate Mother Nature without the exponential overhead.

It was a revolutionary proposal. Feynman wasn’t alone in these musings – around the same time, physicist Paul Benioff had shown that a quantum system could be used to mimic a Turing machine (the abstract engine behind all computers). But Feynman’s rallying cry and colorful “dammit” quote gave the field a memorable spark of motivation. If nature is quantum, our computers had better be quantum too.

At first, this idea was primarily aimed at simulating physical phenomena. Feynman dreamed of calculating what atoms and particles do by using qubits to mirror nature’s own logic. The promise was profound: chemists could design new molecules by simulating chemical reactions quantum-mechanically; materials scientists could discover novel materials by crunching quantum interactions directly. It was like being handed a miniature universe-in-a-box to experiment with. These were the origins of quantum computing – as a tool for science, born from the marriage of quantum physics and computation.

From Theoretical Blueprint to First Algorithms

Throughout the 1980s, the concept of quantum computing percolated in theoretical physics and computer science circles. In 1985, Oxford researcher David Deutsch took Feynman’s vision a step further by rigorously defining what a universal quantum computer could be. He described a blueprint for a machine that could run any algorithm – just like a classical computer, but harnessing quantum tricks to potentially solve certain problems far faster. Deutsch showed how operations acting on qubits in superposition enable a kind of massive parallel calculation, which he called quantum parallelism, and in 1989 he extended the picture to networks of quantum logic gates. To prove the point, he even devised one of the first quantum algorithms, a thought experiment now known as Deutsch’s algorithm, showing that a quantum computer could outperform a classical one on a very contrived task: deciding whether a one-bit function is constant or balanced with a single evaluation, where a classical computer needs two. It was a minimal example, but it proved the principle: quantum computers weren’t just wild speculation; they could actually compute in ways classical ones couldn’t.
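To make that contrived task concrete, here is a minimal sketch that simulates the modern textbook form of Deutsch’s algorithm in plain Python. It tracks the four amplitudes of a two-qubit state by hand. The helper names (`hadamard_on`, `oracle`, `deutsch`) are illustrative assumptions, and this is a classical simulation for intuition, not how a real quantum device is programmed.

```python
import math


def hadamard_on(state, qubit):
    """Apply a Hadamard gate to one qubit of a 2-qubit state.

    The state is a list of 4 amplitudes indexed by 2*x + y,
    where x is qubit 0 and y is qubit 1.
    """
    s = 1 / math.sqrt(2)
    new = [0.0] * 4
    for idx, amp in enumerate(state):
        x, y = idx >> 1, idx & 1
        bit = x if qubit == 0 else y
        for out in (0, 1):
            # H|bit> = (|0> + (-1)**bit |1>) / sqrt(2)
            sign = -1.0 if (bit == 1 and out == 1) else 1.0
            nx, ny = (out, y) if qubit == 0 else (x, out)
            new[2 * nx + ny] += sign * s * amp
    return new


def oracle(state, f):
    """Apply U_f: |x, y> -> |x, y XOR f(x)>."""
    new = [0.0] * 4
    for idx, amp in enumerate(state):
        x, y = idx >> 1, idx & 1
        new[2 * x + (y ^ f(x))] += amp
    return new


def deutsch(f):
    """Classify f: {0,1} -> {0,1} with a single oracle application."""
    state = [0.0, 1.0, 0.0, 0.0]   # start in |0>|1>
    state = hadamard_on(state, 0)  # put both qubits in superposition
    state = hadamard_on(state, 1)
    state = oracle(state, f)       # the one and only query to f
    state = hadamard_on(state, 0)  # interfere the two branches
    prob_one = state[2] ** 2 + state[3] ** 2  # P(qubit 0 measures 1)
    return "balanced" if prob_one > 0.5 else "constant"


print(deutsch(lambda x: 0))  # a constant function
print(deutsch(lambda x: x))  # a balanced function
```

The point of the exercise is the query count: `oracle` is applied once, yet the answer depends on both f(0) and f(1).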

Deutsch’s work essentially laid the theoretical bedrock for quantum computing. It fleshed out the architecture that Feynman had envisioned, giving everyone a clearer picture of how to build and program these exotic machines. With a universal quantum machine on paper, researchers started to think beyond physics simulations. What other problems could a quantum computer tackle? It turns out, quite a few, ranging from math puzzles to database searches. But the real bombshell was yet to come, delivered by a softly spoken mathematician in the mid-1990s.

Shor’s Algorithm: From Simulating Physics to Cracking Codes

For over a decade, quantum computing remained a niche idea, intriguing to physicists but still looking for its killer app. That changed in 1994, when Peter Shor unveiled an algorithm that made the world sit up and take notice. Shor discovered that a quantum computer (if one could be built) would be insanely good at one particular task: factoring large numbers. Now, on the surface, factoring numbers doesn’t sound as glamorous as simulating the universe – but its importance in the real world is huge. The security of our online communications, banking, and even state secrets rested (and largely still rests) on schemes like RSA encryption, which in turn rely on the fact that factoring big integers is ridiculously hard for classical computers. Shor showed that a quantum computer could factor those large numbers exponentially faster than the best known classical algorithms. In plain terms, a task that might take a classical supercomputer longer than the age of the universe could potentially be done in hours on a sufficiently large, error-corrected quantum machine.

This result was seismic. Suddenly, quantum computing wasn’t just about physics or chemistry – it was about cybersecurity, espionage, and global trust. Run on a quantum computer built to scale, Shor’s algorithm could break RSA encryption, undermining the very foundations of digital security. Governments and tech companies took notice (and perhaps a few NSA analysts spat out their coffee). Shor’s algorithm transformed quantum computing from a scientific curiosity into something with profound practical stakes. It injected urgency (and funding) into the field: the race was now on not just to simulate molecules, but to outsmart every classical code-breaker on the planet.
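For readers wondering where the quantum part actually enters: Shor’s algorithm reduces factoring to finding the period (or order) of a number modulo n, and only that period-finding step runs on the quantum computer. The sketch below shows the classical wrapper, with the period found by slow brute force as a stand-in for the quantum subroutine. The function names `find_order` and `factor_from_order` are illustrative assumptions, and the code is only sensible for toy-sized numbers.

```python
import math


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step a quantum computer would accelerate; here it
    takes time exponential in the number of digits of n.
    Requires gcd(a, n) == 1, otherwise no such r exists.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(n: int, a: int):
    """Try to split n using the order of a modulo n.

    Returns a pair of nontrivial factors, or None if this choice
    of a is unlucky and another a should be tried.
    """
    g = math.gcd(a, n)
    if g != 1:
        return (g, n // g)   # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None          # odd order: no usable square root of 1
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None          # trivial square root of 1: no information
    p = math.gcd(half - 1, n)
    return (p, n // p)


print(factor_from_order(15, 7))  # 7 has order 4 modulo 15
```

With n = 15 and a = 7 the order is 4, so the code computes gcd(7**2 - 1, 15) = 3 and recovers the factors 3 and 5.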

Shor’s breakthrough also carried a more hopeful promise: quantum computers might solve problems that stumped classical machines in general, not just in physics. It hinted at a future where new algorithms could tackle optimization, search, and other computational challenges in fundamentally faster ways. And indeed, just two years later, another discovery reinforced that idea.

Grover’s Algorithm and the Broadening of Quantum Possibilities

In 1996, Lov Grover at Bell Labs came up with a different kind of quantum trick – one aimed at speeding up the mundane act of searching through unsorted data. Imagine looking for a name in a phonebook with no particular order; a classical search of N entries might on average check half of them. Grover’s quantum algorithm could do this search in roughly the square root of N steps, a quadratic speedup that, while not as dramatic as Shor’s exponential leap, was still remarkable. Grover’s algorithm showed that even for more day-to-day computational tasks (like database lookups or cracking symmetric cryptographic keys by brute force), a quantum computer offers an advantage. It was another proof that quantum machines have a few more tricks up their sleeve than classical ones, reinforcing the notion that the quantum approach could broadly reshape computing.
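The square-root behavior is easy to see in a small classical simulation. The sketch below keeps one amplitude per phonebook entry and repeats the two standard Grover steps: flip the sign of the marked entry, then reflect every amplitude about the mean. The function name `grover_search` and the choice of 1,024 entries are illustrative assumptions. Simulating this classically still costs time proportional to N; only real quantum hardware would see the speedup.

```python
import math


def grover_search(num_items: int, marked: int):
    """Simulate Grover's algorithm for a single marked item.

    Returns (most likely index, its probability, iterations used).
    """
    # Start in a uniform superposition over all items.
    amps = [1 / math.sqrt(num_items)] * num_items
    # About (pi / 4) * sqrt(N) iterations bring the marked item near probability 1.
    iterations = math.floor(math.pi / 4 * math.sqrt(num_items))
    for _ in range(iterations):
        amps[marked] = -amps[marked]         # oracle: flip the marked amplitude's sign
        mean = sum(amps) / num_items
        amps = [2 * mean - a for a in amps]  # diffusion: reflect about the mean
    best = max(range(num_items), key=lambda i: amps[i] ** 2)
    return best, amps[best] ** 2, iterations


index, probability, steps = grover_search(1024, marked=123)
print(f"found index {index} with probability {probability:.4f} after {steps} iterations")
```

For 1,024 entries the loop runs 25 times, compared with roughly 512 checks on average for a classical scan.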

By the late 1990s, thanks to Shor, Grover, and other pioneers, the initial promises of quantum computing had crystallized. First, the promise Feynman cared about: simulating the natural world with unprecedented accuracy, from chemistry to materials science. And second, the promise that grabbed headlines: solving certain abstract problems (like factoring or searching) spectacularly faster than classical computers. These twin motivations – scientific discovery and computational superiority – have driven quantum research ever since. The field was no longer just a theoretical playground; it had concrete algorithms and clear stakes.

The Long Road from Dream to Reality

Of course, having a nifty algorithm on paper is one thing; building a machine to run it is another. The early heroes of quantum computing all understood that quantum hardware would be incredibly challenging to create. Qubits are finicky beings – they’re susceptible to noise and decoherence (losing their quantum-ness at the slightest disturbance). Throughout the 1990s and 2000s, scientists labored to coax a few qubits into working together reliably. They also came up with ingenious schemes to correct errors in quantum systems (for example, Shor himself devised the first quantum error-correcting code in 1995) so that larger computations might one day be possible. These efforts were like building the first shaky flight prototypes after dreaming up the idea of an airplane.

By the mid-2010s, progress was evident: small quantum processors with a handful of qubits were built in labs; companies like IBM and Google were investing heavily, and the phrase “quantum supremacy” – the moment a quantum computer overtakes a classical one at some task – was buzzing in the tech press. The early ideas and promises that Feynman and friends conjured decades ago still guide the quest today. Each year brings us a step nearer to fully-fledged quantum machines that might simulate complex molecules for drug discovery, optimize logistical problems, or yes, crack those once-impenetrable cryptographic codes.

If you’re curious for a deeper dive into the timeline of key events (and all the colorful characters) in the history of this field, I’ve put together a separate article outlining the early history of quantum computing with more detail. It’s fascinating to see how an idea that sounded like science fiction in the 1980s has steadily moved toward reality.

Conclusion

The origins of quantum computing are a tale of bold imagination at the intersection of physics and computer science. What began as a quest to simulate the strange beauty of the quantum world has evolved into a broader dream of transforming computing itself. From Feynman’s quip about nature’s quantum character to Shor’s eureka moment that startled cryptographers, the early history of quantum computing is rich with visionary thinking.
