Entropy-Driven Photonic Quantum Computing: A Critical Look at QCI’s Approach

Quantum Computing Inc. (QCI) has introduced an unconventional paradigm in quantum computing called Entropy Quantum Computing (EQC). Unlike the standard approach of isolating qubits in pristine, ultra-cold environments, QCI’s method intentionally embraces environmental noise and loss as part of the computation. Their quantum processors, optical devices operating at room temperature, leverage photons and a bit of chaos to solve complex optimization problems.

QCI’s Noise-Driven Quantum Approach

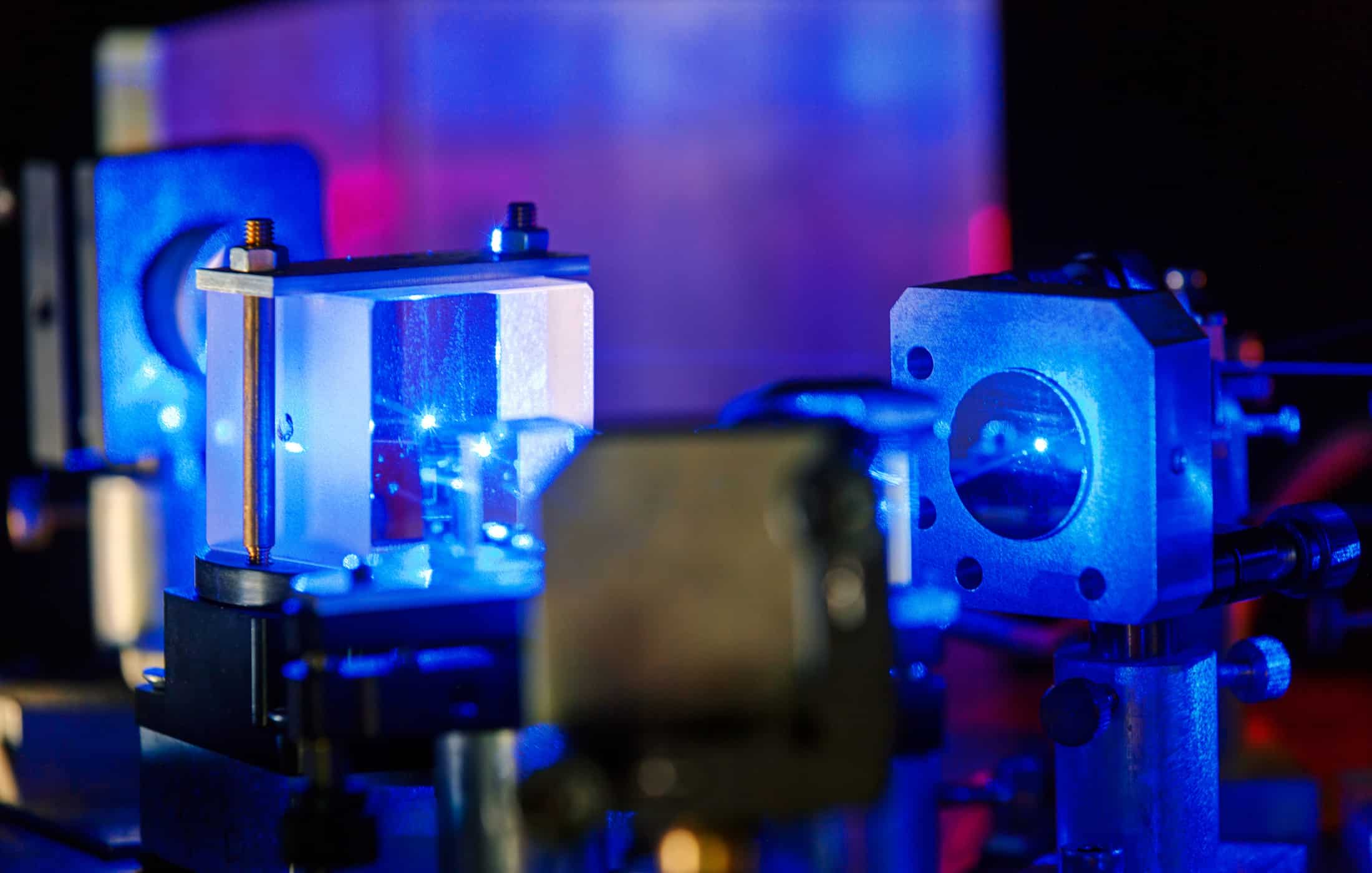

Engineers at QCI developed a novel way to encode and process information on photonic hardware, aiming for a practical, scalable, and affordable quantum computer. The result is Entropy Quantum Computing, which “flips the coin” on conventional wisdom: instead of fighting decoherence, it uses decoherence and loss as fuel for computation. In traditional quantum devices, any interaction with the environment (heat, photons, vibrations) causes loss of quantum coherence, so those systems are kept isolated in vacuum chambers and dilution refrigerators. QCI’s EQC, by contrast, works as an open quantum system that is deliberately coupled to an environment (an entropy source). By doing so, it turns noise into a tool – essentially guiding the system toward optimal solutions through carefully managed dissipation. Thanks to this design, QCI’s machines require no cryogenics or vacuum; they run at room temperature on photonic integrated circuits (e.g. chips made of thin-film lithium niobate) with low power consumption. This drastically reduces size, weight, and complexity compared to dilution-fridge quantum computers, making QCI’s devices more like regular PCIe cards than lab apparatus.

How does entropy-based quantum computing actually work? QCI outlines the hardware as an optical resonator network built from a few key components: an amplifier, a nonlinear mixer, and a tunable loss element. In simple terms, the system goes through the following steps:

- Generate many candidate states: The machine creates a large superposition of possible solutions to the problem (currently achieved using an optical cavity that can hold many photonic states).

- Inject entropy (noise): Random fluctuations (an “entropy bath”) are introduced, seeding the system with a mix of quantum states. This randomness populates a broad search space of potential solutions.

- Apply gain and loss: The optical network provides amplification to all states while also introducing loss (damping). This gain-loss balance sets up competition among the states.

- Modulate losses via the Quantum Zeno Effect: Using fast modulation (via electro-optic or all-optical control), the system selectively suppresses certain states’ amplitudes. In essence, undesired states are measured or damped frequently, which keeps them from building up (a quantum Zeno-like mechanism).

- Converge to the optimal state: Over time, only the state that best satisfies the problem (the “marked” state with lowest effective loss) can persist with enough gain. The system stabilizes when this winning state’s gain equals its loss – at that point, its oscillation dominates, representing the solution.
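
The gain-and-loss competition in the steps above can be illustrated with a deliberately simple classical toy model. This is a sketch under stated assumptions, not QCI's device model: the function name, the saturable-gain formula, and all parameter values are hypothetical, and nothing here is quantum. Each candidate state is a mode with its own loss, all modes share one gain that saturates as total intensity grows, and a tiny random kick stands in for the injected noise.

```python
import numpy as np


def simulate_mode_competition(losses, g0=2.0, dt=0.01, steps=20_000,
                              noise=1e-9, seed=0):
    """Toy classical model: modes share one saturable gain, each has its own loss.

    Hypothetical illustration only; it is not QCI's device model.
    """
    rng = np.random.default_rng(seed)
    losses = np.asarray(losses, dtype=float)
    # Start every candidate mode from the same tiny seed intensity.
    intensity = np.full(losses.shape, 1e-6)
    for _ in range(steps):
        # Shared gain drops as total intensity grows (gain saturation).
        gain = g0 / (1.0 + intensity.sum())
        # Each mode grows if the gain exceeds its loss and decays otherwise.
        intensity += dt * (gain - losses) * intensity
        # Small non-negative random kick stands in for the injected noise.
        intensity += noise * rng.random(losses.shape)
    return intensity


if __name__ == "__main__":
    # Hypothetical per-mode losses; index 2 plays the "marked" low-loss state.
    losses = [1.0, 0.8, 0.5, 0.9]
    final = simulate_mode_competition(losses)
    print("winning mode:", int(np.argmax(final)))
    print("share of total intensity:", final / final.sum())
```

In this toy, the lowest-loss mode ends up holding nearly all of the intensity, and the shared gain settles at that mode's loss, which mirrors the "gain equals loss" stopping condition described above. Whether the real hardware gains anything beyond this kind of classical mode competition is exactly the open question.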

Mathematically, the problem to be solved (for example, finding the minimum of a complex energy function) is encoded into the physical parameters of the photonic network – essentially programming a Hamiltonian into the interference and loss pattern. QCI’s current hardware, the Dirac series (Dirac-1 and Dirac-3 machines), is optimized for combinatorial optimization tasks. They advertise that Dirac-3 can represent problems with over 11,000 binary variables (qubits) or even 1,000+ discrete-value variables (qudits) in an analog fashion. These numbers refer to the effective dimensionality of the optical state space; however, it’s important to note these are not 11,000 entangled qubits in the gate-computing sense, but degrees of freedom in a photonic analog system. In essence, QCI’s device operates somewhat like a laser-based Ising machine or quantum annealer, searching for ground states (optimal solutions) in a highly parallel, analog manner.
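
To make "encoding a problem as an energy function" concrete, here is a minimal sketch using the QUBO form (quadratic unconstrained binary optimization), a common way to state problems for Ising-style optimizers. It is an illustration of the general idea, not QCI's actual programming interface: the helper names, the "pick exactly one option" example, and the penalty weight are all assumptions. The brute-force search only works for tiny instances and is there to show what "ground state" means.

```python
import itertools

import numpy as np


def pick_one_qubo(costs, penalty):
    """Build an upper-triangular QUBO for: choose exactly one option, minimize cost.

    Comes from cost.x + penalty * (sum(x) - 1)**2 with the constant dropped,
    using x**2 == x for binary variables. Hypothetical example problem.
    """
    n = len(costs)
    Q = np.zeros((n, n))
    for i in range(n):
        Q[i, i] = costs[i] - penalty
        for j in range(i + 1, n):
            Q[i, j] = 2.0 * penalty
    return Q


def qubo_energy(x, Q):
    """Energy x^T Q x of a binary assignment x."""
    x = np.asarray(x)
    return float(x @ Q @ x)


def brute_force_minimum(Q):
    """Exhaustively find the lowest-energy assignment (tiny problems only)."""
    n = Q.shape[0]
    best_x, best_e = None, float("inf")
    for bits in itertools.product((0, 1), repeat=n):
        e = qubo_energy(bits, Q)
        if e < best_e:
            best_x, best_e = bits, e
    return best_x, best_e


if __name__ == "__main__":
    Q = pick_one_qubo(costs=[3.0, 1.0, 2.0], penalty=10.0)
    print(brute_force_minimum(Q))
```

The exhaustive loop visits 2^n assignments, which is why analog optimizers and classical heuristics alike aim to reach low-energy states without enumerating them.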

QCI’s Approach in Context: Who Else Is Doing This?

QCI’s entropy-based photonic computer is quite unique, but it doesn’t exist in a vacuum. Several other companies and research groups are exploring non-traditional quantum computing paradigms that share similarities or goals:

- D-Wave Systems (Quantum Annealing): D-Wave pioneered a quantum annealer that uses superconducting qubits to solve optimization problems by evolving them to a ground state. This is a closed-system analog approach (requiring cryogenic temperatures) and differs from gate-based quantum computers. QCI’s approach is conceptually akin to annealing, since both attempt to find minima of an energy landscape, but QCI uses photonic oscillators and an open, dissipative process rather than superconducting qubits.

- Coherent Ising Machines (CIMs): In the past decade, researchers (e.g. at Stanford/NTT) built optical devices where networks of lasers or optical parametric oscillators find solutions to Ising spin problems. These CIMs showed promising speed scaling on certain optimization tasks (faster convergence with problem size than some annealers). However, stability issues (e.g. maintaining phase coherence across fiber networks) limited their practicality. QCI’s design can be seen as a descendant of these photonic Ising machines, but with integrated photonic chips and added control mechanisms to improve stability and programmability.

- Xanadu and PsiQuantum (Photonic Gate Quantum Computers): Several startups are using photonics for quantum computing in a very different way from QCI. Xanadu (Canada) and PsiQuantum (US) employ photonic qubits (squeezed light or single photons) to build gate-model quantum computers that perform universal quantum logic. For instance, Xanadu demonstrated a photonic quantum advantage experiment (Gaussian boson sampling) with 216 squeezed-state modes on its Borealis machine; these are optical modes rather than qubits in the usual sense. These photonic processors still aim to maintain quantum coherence and entanglement (with error correction in the long run), unlike QCI’s analog optimizer. In short, they use light, but do not harness environmental noise; in fact they still need very precise control and sources of entangled photons.

- IonQ, IBM, and Others (Conventional Qubits): Mainstream quantum hardware companies like IonQ (trapped ions), IBM and Rigetti (superconducting circuits), Quantinuum/Honeywell (ions), etc., all focus on closed-system qubits with long coherence times. They carefully isolate qubits and correct errors, essentially the opposite philosophy of QCI. As a result, those machines require vacuum chambers or cryostats and complex error-correction schemes. IonQ’s trapped-ion approach, for example, uses electromagnetic traps for ions in near-perfect vacuum – a stark contrast to QCI’s room-temperature photons in a chip.

- LightSolver (Photonic “Quantum-Inspired” Computing): QCI is not alone in claiming that optical systems can tackle optimization faster than classical computers. LightSolver, an Israeli startup, built a “Laser Processing Unit” that uses coupled lasers and interference to solve hard optimization problems, marketed as quantum-inspired rather than truly quantum. LightSolver’s purely optical solver also runs at room temperature with no special cooling, and the company similarly touts the ability to scale with low power. They reported instances of outperforming classical algorithms on specific combinatorial problems by using an all-optical setup. The key difference: LightSolver explicitly states their device is not a quantum computer (no entanglement, no qubits), whereas QCI maintains that their entropy computing leverages quantum dynamics in an open system. In practice, both approaches target similar applications – they are specialized machines for optimization – using photonics and analog processing.

In summary, no major competitor is doing exactly what QCI does, but there are parallels. QCI’s use of dissipation as a computational resource is a rare approach (most quantum efforts avoid dissipation). The closest analogies are quantum annealers (like D-Wave) and coherent Ising machines – QCI’s twist is to combine ideas from both, implemented in photonic chips with deliberate coupling to noise. Meanwhile, other photonic computing companies (Xanadu, PsiQuantum) pursue the longer road of fault-tolerant logic gates with light, and might not directly compete with QCI’s near-term analog optimizer.

Is QCI’s Noise-Harnessing Strategy Valid?

A natural question is whether QCI’s entropy-driven paradigm really counts as “quantum computing” – and whether it can deliver on its promises. The answer is a matter of debate. On one hand, there is theoretical support for dissipative quantum computing: research has shown that open-system dynamics (with engineered dissipation) can still yield quantum speedups equivalent to closed-system approaches in certain cases. In fact, some algorithms in continuous-time quantum computing and even certain annealing processes can leverage the environment to help find solutions faster, rather than treating it as an enemy. QCI’s team points out that entanglement – often touted as the sine qua non of quantum computing – may not be strictly necessary for a quantum advantage in optimization; their device operates more on interference and gradual state selection. If their claims hold, entropy computing could sidestep the scaling challenges that plague gate-model qubit systems (decoherence, error correction, etc.), offering a more direct path to useful quantum acceleration for optimization tasks.

However, skepticism is high in the quantum tech community. Critics note that QCI has yet to demonstrate a clear advantage over classical methods on any non-trivial problem. The company’s flagship research paper (released as a preprint in 2023) described proof-of-concept experiments but did not show a decisive win against classical optimization solvers. In a public forum discussion, a physicist with a PhD reacted to QCI’s paper with sharp criticism: “They basically cook up some Hamiltonian and say physics will do the trick… In the paper, the conventional gradient descent only required 10-100× more iterations… It’s not even clear they have an advantage here. The Dirac-3 machine literally costs $1k/hr to run. Why wouldn’t I just use a GPU for a few cents?” This commentator pointed out that QCI solved only very small optimization examples (ones easily handled by classical algorithms) while claiming the ability to tackle NP-hard problems. Others have questioned whether the device is fundamentally just a classical analog computer with a fancy quantum sheen. One forum poster argued that if QCI could truly gain computation from noise in the way they claim, it might violate thermodynamic principles, calling the idea “physically impossible” and dubbing the machine “purely classical optical computing.” Such harsh skepticism has even led to comparisons with over-hyped tech fiascos; an investing community member bluntly wrote, “Forget the science… this is the next Theranos”, doubting the company’s legitimacy.

It’s important to temper these opinions with the fact that QCI’s approach is brand-new and unproven – not necessarily fraudulent. The company is publishing its results and inviting scrutiny (even if one commenter noted the research avoided the word “quantum” in the title to preempt controversy). The big question remains unanswered: Can an open-system photonic computer achieve a quantum speedup (even just a quadratic Grover-type speedup, or significant practical speedup) on meaningful problem sizes? As of 2025, no independent experiment has verified a performance advantage of QCI’s EQC over best-in-class classical algorithms. Thus, many experts remain cautiously doubtful, awaiting evidence that entropy computing isn’t just a rebranded form of analog computing. The approach is certainly innovative, but the burden of proof is on QCI to show that harnessing entropy can outpace a classical computer armed with clever algorithms.
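
For a sense of scale, the "quadratic Grover-type speedup" mentioned above can be put into numbers. The sketch below compares textbook query counts for unstructured search with a single marked item: about (N+1)/2 expected classical lookups versus roughly (π/4)·√N Grover iterations on an ideal, closed-system quantum computer. The function names are hypothetical, and the figures say nothing about what EQC achieves; they only show what a quadratic speedup would mean if one were demonstrated.

```python
import math


def classical_expected_queries(n_items):
    """Expected lookups to find one marked item by checking without repeats."""
    return (n_items + 1) / 2


def grover_iterations(n_items):
    """Approximate optimal Grover iteration count, about (pi/4) * sqrt(N)."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


if __name__ == "__main__":
    # A 20-bit search space as an illustrative size.
    n = 2 ** 20
    print("classical expected queries:", classical_expected_queries(n))
    print("Grover iterations (ideal):", grover_iterations(n))
```

A quadratic gap like this is real but modest: constant-factor overheads in hardware speed or cost can erase it at small problem sizes, which is why benchmarks on meaningful instances matter.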

Market and Industry Perspectives

Despite the technical uncertainty, market interest in QCI has surged in the past year. Quantum Computing Inc.’s stock (NASDAQ: QUBT) skyrocketed over 2,000% in 12 months, fueled by investor excitement around quantum technology and perhaps the company’s frequent press releases about new milestones. In 2024 QCI raised substantial capital (hundreds of millions of dollars) and even opened its own quantum photonic chip foundry in Arizona. This foundry, slated to be fully operational by 2025, is reportedly the first of its kind focused on thin-film lithium niobate (TFLN) photonic chips in the U.S. Having an in-house foundry gives QCI a strategic asset: they can rapidly prototype and manufacture the optical components for their EQC devices, and even offer foundry services to others working on photonic circuits. The move was seen as a bold bet that photonic computing (for quantum or otherwise) will be a growing market, and it aligns with U.S. interests in domestic photonic technology for communications and defense.

On the customer side, QCI’s current revenue remains very modest (on the order of tens of thousands of dollars per quarter, mostly from R&D contracts). However, there are signs of early adoption of their entropy computers in specialized domains. In late 2024, QCI announced its first commercial sale of a Dirac-3 device to an automotive manufacturer, calling it an example of “increasing demand” for quantum optimization tools. They also partnered with the Sanders Tri-Institutional Discovery Institute (a biomedical research consortium) to use the Dirac-3 for drug discovery simulations, providing cloud access to their machine for tackling biomolecular modeling problems. These use cases underscore QCI’s strategy: target specific, computation-heavy problems (like portfolio optimization, logistics, drug molecule folding) where an analog quantum optimizer, even if not exponentially faster, might offer value by exploring many solutions in parallel. QCI is positioning its tech as a “quantum machine for real-world applications today”, emphasizing accessibility and immediate usefulness over absolute superiority.

Within the quantum industry, opinions on QCI’s approach are mixed. Some observers are intrigued by the fresh take – noting that if nothing else, QCI broadens the design space of quantum computing by exploring photonic analog methods. The fact that QCI doesn’t directly compete with giants like IBM or Google (since QCI isn’t chasing general-purpose quantum computers) could allow it to carve out a niche market, much as D-Wave did with annealers. The company’s partnerships and the foundry indicate that parts of the tech community (and government) are willing to give this approach a try, especially for near-term problem solving. On the flip side, many analysts do not yet take QCI as seriously as other quantum firms. In investor circles, it’s often pointed out that QCI’s name is misleading – unwary traders sometimes lump QCI in with true quantum hardware leaders, but experts caution that “Quantum Computing Inc. has nothing to do with quantum computing despite the name”. In lists of “pure-play” quantum stocks, QCI is frequently excluded, with insiders focusing on IonQ, D-Wave, and Rigetti as the legitimate players. This underscores a perception that QCI’s technology might not be fundamentally quantum or that its progress is too speculative. Indeed, the stock has also attracted heavy short interest, reflecting bets that the hype will not materialize into long-term value.

In summary, the market’s view on QCI ranges from optimistic curiosity to deep skepticism. Optimists see a novel quantum-inspired technology that, if validated, could sidestep the bottlenecks of traditional qubits and find practical use cases soon. Skeptics see a small-cap company capitalizing on the quantum buzz with unproven science – potentially to be avoided until they show real results. As of 2025, QCI stands at a crossroads: it has the spotlight and ample funding, but it must convince both the scientific community and customers that entropy quantum computing is more than just an intriguing idea.

Personal Take

In my view, EQC is best understood today as an interesting analog photonic heuristic rather than a demonstrated quantum computer. Until QCI (or an independent lab) publishes peer‑reviewed, reproducible head‑to‑head results that beat state‑of‑the‑art classical solvers (e.g., Gurobi/CPLEX, large‑scale simulated annealing and simulated quantum annealing, GPU heuristics) on structured, real‑world instances at meaningful scales, with rigorous time‑to‑solution and energy‑to‑solution metrics, I will treat their “qubit” counts as variable/mode counts and their outputs as heuristic optimization. I’d also want ablations that isolate any genuinely quantum contribution. Absent that evidence, this is solid engineering, but not yet a credible path to quantum advantage.
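
For readers unfamiliar with the metrics named above, here is a minimal sketch of how time-to-solution is commonly computed when benchmarking heuristic optimizers: if one run takes a fixed time and finds the target solution with some probability, the metric is the total time needed to see that solution at least once with a chosen confidence (99% is a frequent convention). Energy-to-solution then multiplies by average power draw. The function names and the sample numbers are hypothetical and are not measurements of any device.

```python
import math


def time_to_solution(run_time_s, success_prob, target=0.99):
    """Total run time needed to hit the solution at least once with `target` confidence."""
    if not 0.0 < success_prob <= 1.0:
        raise ValueError("success_prob must be in (0, 1]")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be in (0, 1)")
    if success_prob >= target:
        # A single run already meets the confidence target.
        return run_time_s
    # Number of independent repeats R with 1 - (1 - p)**R == target.
    repeats = math.log(1.0 - target) / math.log(1.0 - success_prob)
    return run_time_s * repeats


def energy_to_solution(power_watts, tts_seconds):
    """Energy in joules spent over the time-to-solution at a given average power."""
    return power_watts * tts_seconds


if __name__ == "__main__":
    # Hypothetical solver: 0.1 s per run, 20% chance of finding the optimum.
    tts = time_to_solution(run_time_s=0.1, success_prob=0.2)
    print(f"TTS(99%): {tts:.3f} s")
    print(f"Energy at 50 W: {energy_to_solution(50.0, tts):.1f} J")
```

Reporting both numbers for the analog device and for a tuned classical baseline on the same instances is what would make a head-to-head comparison meaningful.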

Conclusion

Quantum Computing Inc.’s approach marks a fascinating departure from the orthodox roadmap of quantum computing. By leveraging photonics and even the very noise that most quantum engineers dread, QCI is exploring a path toward solving optimization problems that blurs the line between quantum and classical computing. Is this entropy-driven, open-system paradigm the next big breakthrough or a dead-end detour? It’s too early to say. The concept is backed by some theoretical insights and has produced a working prototype that functions at room temperature – an achievement in itself – yet it remains scientifically unvalidated in terms of outperforming classical methods. Other companies and researchers have tackled similar challenges with photonic or analog devices, and history shows both the promise and pitfalls of such approaches.

The coming years will be crucial for QCI: delivering clear evidence of quantum advantage (or at least a clear practical advantage) is likely the only way to convert the current curiosity into confidence. Until then, QCI’s entropy quantum computing will rightly provoke healthy skepticism even as it pushes the envelope of what a “quantum computer” can be. The idea of turning noise into an ally is a bold one – if it succeeds, it could open an entirely new branch of computing; if it fails, it will serve as a valuable experiment on the winding road to harnessing the quantum world for computation. Either way, QCI has sparked a conversation about how we define and achieve quantum computing, and whether entropy might ultimately be a resource rather than just an adversary in that quest.
