Quantum Breakthrough Slashes Qubit Needs for RSA-2048 Factoring

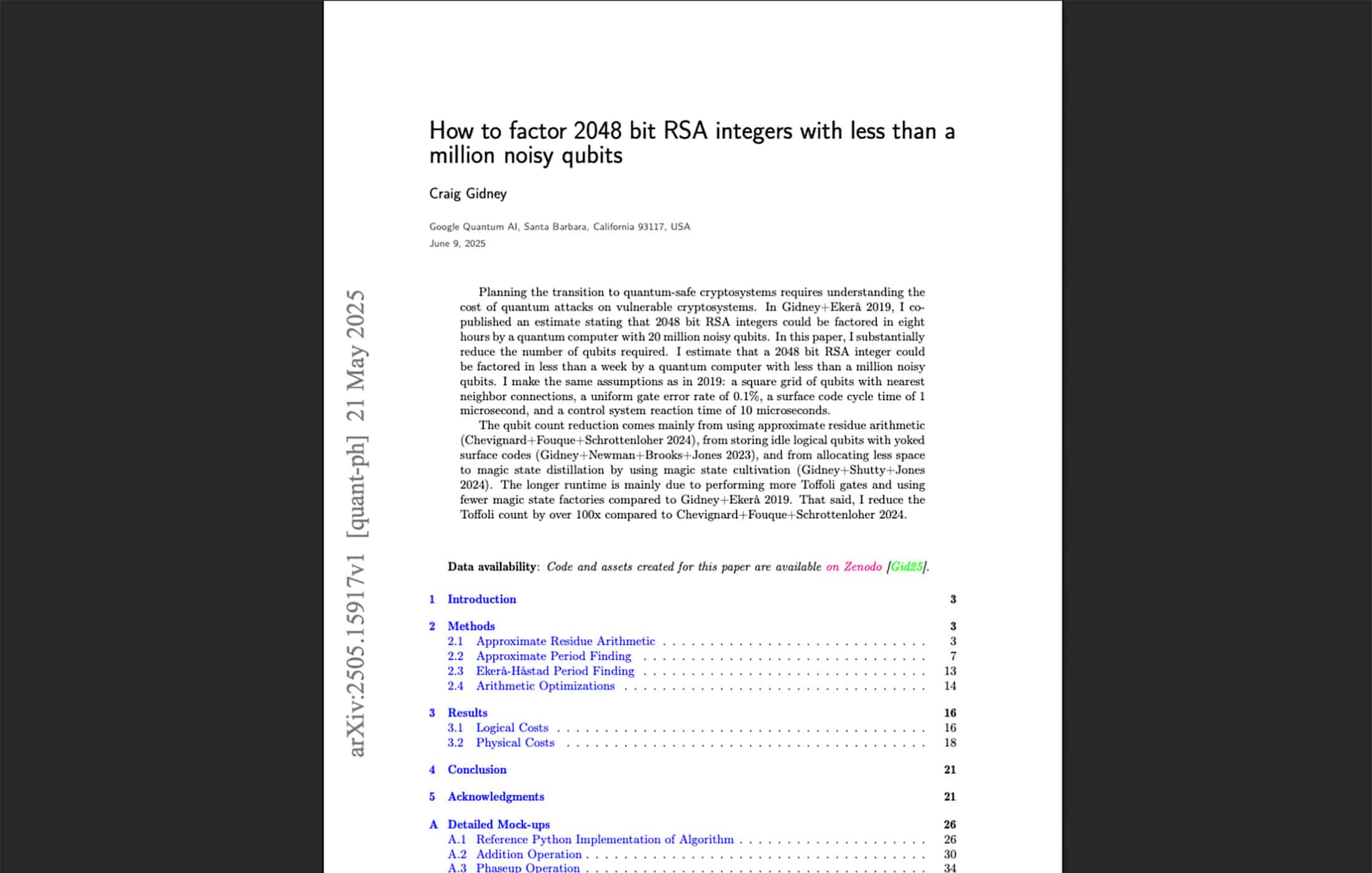

A new research preprint by Google Quantum AI scientist Craig Gidney has dramatically lowered the estimated resources needed to break RSA-2048 encryption using a quantum computer. Gidney’s May 2025 paper, “How to factor 2048 bit RSA integers with less than a million noisy qubits,” argues that a fault-tolerant quantum computer with under 1 million qubits could factor a 2048-bit RSA key in under one week. This is a stunning 20× reduction in qubit count compared to Gidney’s own 2019 estimate, which required ~20 million qubits and about 8 hours of runtime for the same task. The new approach trades a longer runtime for far fewer qubits, signaling major algorithmic and error-correction advances in quantum factoring.

The Paper

What Changed?

The drastic qubit reduction comes from three main innovations introduced in the 2025 paper:

Approximate Residue Arithmetic

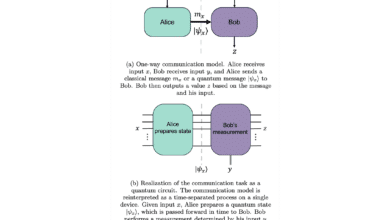

Instead of performing exact modular exponentiation (the core operation in Shor’s factoring algorithm) with huge quantum registers, Gidney employs a method of approximate modular arithmetic. Developed by Chevignard, Fouque, and Schrottenloher in 2024, this technique computes the exponentiation in small pieces, using much smaller quantum registers and tolerating tiny errors. (For more about the Chevignard et al. paper, see my previous post “RSA-2048 Within Reach of 1730 Qubits. Or is it?”) In essence, the quantum computer doesn’t need to hold the entire 2048-bit number at once. By allowing a controlled amount of approximation (which can be corrected or accounted for later), the algorithm avoids the old “one qubit per bit” bottleneck and recycles qubits across multiple steps. This revives qubit recycling, an idea from the 1990s in which the same quantum bits are reused sequentially rather than all being needed at once. The result is a large saving in the logical qubits required for the calculation, albeit at the cost of more operations.
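To make the “small pieces” idea concrete, here is a purely classical sketch of residue arithmetic. It is an illustration under stated assumptions, not Gidney’s circuit: a large product is computed separately modulo several small primes and then recombined with the Chinese Remainder Theorem. The primes, the function names (`to_residues`, `mul_residues`, `crt_combine`) and the toy inputs are illustrative choices that do not come from the paper, and the approximation step the quantum algorithm relies on is left out.

```python
from math import prod

# Small pairwise-coprime moduli (illustrative; not the paper's choice).
PRIMES = [10007, 10009, 10037, 10039, 10061]


def to_residues(x, moduli=PRIMES):
    """Represent x by its remainders modulo each small prime."""
    return [x % p for p in moduli]


def mul_residues(a, b, moduli=PRIMES):
    """Multiply piecewise; every intermediate value stays below its small modulus."""
    return [(ra * rb) % p for ra, rb, p in zip(a, b, moduli)]


def crt_combine(residues, moduli=PRIMES):
    """Recombine residues into the unique value below the product of the moduli."""
    total = prod(moduli)
    x = 0
    for r, p in zip(residues, moduli):
        partial = total // p
        # pow(partial, -1, p) is the modular inverse (Python 3.8+).
        x += r * partial * pow(partial, -1, p)
    return x % total


a, b = 123456789, 987654321
product = crt_combine(mul_residues(to_residues(a), to_residues(b)))
print(product == a * b)
```

Each piece only ever handles numbers of about 14 bits, yet the recombined result matches the full product, because the true product is smaller than the product of the moduli. That is the sense in which no single register has to hold the whole number.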

Yoked Surface Codes for Storage

Gidney’s method also tackles the overhead of quantum error correction by packing idle qubits more densely. When a logical qubit isn’t actively computing, it can be stored in a high-density encoding using a “yoked” surface code. This recent 2023 technique basically layers an additional error-correcting code to triple the storage density of inactive qubits. In practical terms, it means a million physical qubits can store many more logical qubits when they’re waiting their turn, without losing error protection. This innovation, credited to Gidney, Newman, Brooks, and Jones (2023), frees up hardware space so that fewer total physical qubits are needed to keep the algorithm’s qubits error-corrected. It’s a bit like compressing memory: more data (qubits) can be reliably stored in the same hardware footprint.

Magic State Cultivation

The third key improvement is a new way to generate the essential quantum resource states for computation. Magic states are special quantum states needed to perform non-Clifford operations (like the Toffoli or CCZ gates used in Shor’s algorithm), which the surface code cannot execute directly in a fault-tolerant way. Previously, factories for these states used magic state distillation, a very resource-hungry process. Gidney’s paper instead uses magic state cultivation, a 2024 Google Quantum AI innovation. This method gradually “grows” a high-fidelity magic state from noisy ones, rather than distilling many rough states into one. It drastically reduces the number of extra qubits and time needed to supply Toffoli gates for the algorithm. In fact, magic state cultivation makes producing a clean T/CCZ state almost as cheap as a regular operation, cutting down the vast overhead that distillation would require. By shrinking the “factory” space for generating these gates, the overall qubit count is lowered and more of the device can be devoted to the computation itself.

Thanks to these advances, the new factoring approach uses far fewer qubits and even manages to reduce the total gate count compared to earlier low-qubit methods. Notably, a 2024 algorithm by Chevignard et al. achieved low qubit usage but at the cost of an astronomically high gate count (~$$2\times10^{12}$$ Toffoli gates). Gidney optimized this, cutting the necessary number of Toffoli gates by over 100× relative to that 2024 approach. The 2025 design requires on the order of only a few billion Toffoli gates (approximately $$6.5\times10^9$$ by one estimate) instead of trillions. This is only about 2× more operations than the 2019 method, a huge improvement in the time–space trade-off. The net effect: factoring RSA-2048 now appears technically feasible with ~1 million qubits in roughly a week, rather than utterly impractical resource counts.
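The gate-count comparison can be checked with a few lines of arithmetic. This sketch uses only the approximate figures quoted above. The 2019 count is not stated directly in this post, so it is back-derived here from the “about 2×” remark and should be read as an implied value, not a quoted one.

```python
# Toffoli-gate counts quoted in the text above (approximate).
toffoli_chevignard_2024 = 2e12
toffoli_gidney_2025 = 6.5e9

reduction = toffoli_chevignard_2024 / toffoli_gidney_2025

# "About 2x more than 2019" implies a 2019 count of roughly half the 2025 figure.
implied_toffoli_2019 = toffoli_gidney_2025 / 2

print(f"2024 -> 2025 reduction: about {reduction:.0f}x")
print(f"Implied 2019 Toffoli count: about {implied_toffoli_2019:.2e}")
```

With these inputs the reduction comes out at roughly 300×, comfortably above the “over 100×” stated in the text.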

Assumptions and Runtime

It’s important to note that Gidney’s estimates rely on the same hardware assumptions used in 2019, just applied to the new optimized algorithm. The hypothetical quantum computer is assumed to have a 2D grid layout of qubits with only nearest-neighbor connectivity (no exotic long-range links). Each physical gate is assumed to have an error rate around $$1/1000$$ (0.1% error probability), which is roughly on par with or slightly better than today’s best quantum hardware fidelity. The error-correction uses the surface code, with a cycle time of 1 μs per round of error correction, and a control system feedback delay of about 10 μs. In plain terms, the model envisions a very fast, error-corrected superconducting or similar quantum processor where operations and corrections happen on the order of a million per second. These are aggressive but not implausible specs for future machines – current lab quantum processors operate in the ~10–100 μs gate times and achieve error rates near 0.1–1% in best cases, though only on tens or hundreds of qubits so far.

Under those assumptions, Gidney projects that factoring a 2048-bit number would take on the order of a few days of runtime, which he conservatively rounds up to “less than a week” to account for contingencies. This timeframe accounts for executing billions of quantum gate operations, including error-correction overhead and repeat trials to amplify the success probability. By comparison, the 2019 estimate was about 8 hours, but that faster time assumed a much larger quantum computer that could run many operations in parallel. The new design opts to use fewer “factories” in parallel (to cut qubit count), which lengthens the runtime. Still, one week vs. 8 hours is a reasonable trade if it means needing 20× fewer qubits – especially because qubits (hardware) are much harder to come by than additional time. A week is well within practicality for a cryptographically relevant attack (and could potentially be sped up with more parallelization if extra qubits were available).
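As a rough sanity check on the runtime, the quoted figures can be turned into an average throughput budget. This is a back-of-the-envelope sketch, not the paper’s runtime model: it ignores parallelism, repeated trials, and how Toffoli gates are actually scheduled, and simply asks how many error-correction cycles a week contains at the assumed 1 μs cycle time.

```python
SECONDS_PER_DAY = 24 * 60 * 60

cycle_time_s = 1e-6      # surface-code cycle time assumed in the text
toffoli_count = 6.5e9    # approximate Toffoli count quoted earlier
runtime_days = 7         # upper bound: "less than a week"

runtime_s = runtime_days * SECONDS_PER_DAY
cycles_available = runtime_s / cycle_time_s

# Average rate needed if the gates were spread evenly over the whole week.
min_toffoli_rate = toffoli_count / runtime_s
# Error-correction cycles available per Toffoli under the same even spread.
cycles_per_toffoli = cycles_available / toffoli_count

print(f"Cycles in a week: {cycles_available:.3e}")
print(f"Average Toffoli rate needed: {min_toffoli_rate:,.0f} per second")
print(f"Cycles available per Toffoli: {cycles_per_toffoli:.0f}")
```

Under these assumptions the machine needs to sustain on the order of ten thousand Toffoli gates per second on average, with a budget of roughly 90 error-correction cycles per gate.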

Media Reception and Q-Day Buzz

The quantum and security communities have taken notice of this milestone. Coverage by tech media highlighted that quantum computers “could crack RSA codes with far fewer resources than previously thought”. Headlines emphasized the drop from 20 million to ~1 million qubits, suggesting that Q-Day – the day a quantum computer breaks public-key crypto – might arrive sooner than anticipated. Observers pointed out that some companies (and government roadmaps) aim to build million-qubit quantum processors by around 2030. If such a machine materializes on that timeline, the ability to factor RSA-2048 in a week could indeed be within reach, potentially accelerating the urgency for post-quantum cryptography. That said, Gidney himself is careful to note that today’s quantum computers are nowhere near this scale – current devices have at best a few hundred to a few thousand noisy qubits, not all of them error-corrected. His paper is a theoretical projection, not a demonstration, and it remains a preprint (not yet peer-reviewed).

In the broader crypto community, the reaction has been mixed: excitement at the technical tour-de-force, tempered by reminders that this doesn’t equate to RSA being cracked today. Some security experts used the news as a call to action, echoing the paper’s suggestion that planning for quantum-safe cryptography should proceed with urgency. At the same time, skeptics caution against hype, noting that achieving a million-qubit, low-error quantum computer is still a monumental challenge. In short, Gidney’s work generated buzz about an impending quantum threat, but also reinforced the existing consensus that migrating to post-quantum encryption is the prudent course – ideally before large quantum machines come online.

Personal Opinion

Does This Breakthrough Change the Urgency?

In an optimistic-yet-grounded view, Gidney’s factoring feat underscores the urgency of transitioning to quantum-safe cryptography, but it shouldn’t induce panic. The truth is, we in the industry have already been urging migration to post-quantum algorithms – this research simply puts an exclamation point on those warnings. The paper itself explicitly supports the timeline proposed by NIST’s post-quantum standards: deprecate vulnerable crypto by 2030 and eliminate it by 2035. Not because we know a quantum RSA-breaker will exist by then, but because “attacks always get better”. History has shown that both algorithms and hardware tend to improve faster than initially expected. Gidney’s work is a prime example: in just a few years, the estimated resources for quantum factoring fell by an order of magnitude. For cybersecurity practitioners, the lesson is clear – don’t bank on RSA-2048 staying safe until 2035 or beyond simply because early estimates looked infeasible. Preparing now by adopting post-quantum cryptography (PQC) is a wise risk management strategy, especially for data that needs to remain secure for a decade or more. The concept of “harvest now, decrypt later” attacks means adversaries could be stockpiling encrypted data today with the intent to decrypt it once a quantum computer is available. This new research signals that the timeline to “later” might be moving up, so the window to safely implement PQC could be tighter than we thought.
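One common way to reason about “harvest now, decrypt later” exposure is Mosca’s inequality, which this post does not use explicitly: if the time data must stay secret plus the time needed to migrate exceeds the time until a capable quantum computer exists, some data is exposed. The sketch below assumes that framing. The function name `exposed_years` and all input values are hypothetical placeholders, not predictions.

```python
def exposed_years(shelf_life_years, migration_years, years_until_quantum):
    """Years of exposure under Mosca's inequality; a positive value means data is at risk."""
    return max(0, shelf_life_years + migration_years - years_until_quantum)


# Hypothetical inputs: data must stay secret for 10 years, migration takes 5,
# and a cryptographically relevant quantum computer arrives in 10.
print(exposed_years(10, 5, 10))

# Short-lived data with a quick migration is not exposed under the same timeline.
print(exposed_years(2, 3, 10))
```

The useful property of this framing is that a shorter estimate for `years_until_quantum` directly increases exposure, which is why a result like Gidney’s matters for planning even though no such machine exists today.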

Are the Assumptions Realistic Given Today’s Hardware?

From a hardware perspective, Gidney’s scenario is both ambitious and surprisingly concrete. The assumed error rate (0.1% per gate) and operation speed (1 μs cycles) are at the cutting edge of today’s quantum experiments, but not sci-fi. Superconducting qubit platforms, like Google’s own, have demonstrated two-qubit gate fidelities on the order of 99.5–99.9% (i.e. ~0.1% error) in laboratory settings. And 1 μs cycle times are within reach for superconducting or possibly photonic systems (ion traps are slower per gate but very high fidelity). So in terms of quality, the assumptions represent the likely capabilities of quantum hardware in the next generation. The real stretch is quantity: scaling from 100 or 1000 qubits today to a million. That’s a leap of three to four orders of magnitude, demanding huge engineering advances in fabrication, cryogenics, control electronics, and error correction infrastructure. Companies like IBM, Google, and Intel have sketched roadmaps to reach a million qubits by the 2030s, but those roadmaps are speculative and face many hurdles (circuit footprint, crosstalk, yield, etc.). So while nothing in Gidney’s vision flagrantly defies known physics, it is very much a best-case scenario for the mid-2030s. It assumes everything – hardware scaling, noise reduction, and software – progresses steadily with no show-stopper.

Crucially, the paper does not claim RSA-2048 can be cracked with current tech; it assumes a fully error-corrected quantum computer, which itself requires many physical qubits per logical qubit (on the order of thousands of physical qubits to make one low-error “logical” qubit at the given 0.1% noise rate). So the million-qubit figure already factors in heavy error correction overhead. In practice, building even a small fault-tolerant quantum computer is an ongoing research effort. The first logical qubit with error correction was only recently demonstrated. Now we’re talking about ~20,000 logical qubits (which might be roughly what a 1-million physical qubit machine could support) operating in concert for days. Achieving that will likely require new breakthroughs in qubit architecture (e.g. modular or layered designs, more efficient error-correcting codes, etc.). So, while Gidney’s assumptions are reasonable targets for quantum engineers, they are by no means a given. We should view this as a motivating goalpost: it tells hardware teams what performance they need to strive for to make quantum codebreaking feasible. And conversely, it tells security teams that if those hardware goals are met, our classical cryptography would be in jeopardy.
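The physical-per-logical overhead can be estimated with a short sketch. A rotated surface code patch of distance d uses d² data qubits plus d² − 1 measurement qubits. The logical error formula below is a widely used rule of thumb, and its threshold (`p_threshold`) and `prefactor` values are assumptions made for illustration, not numbers from Gidney’s paper. Routing space and magic-state factories are not counted.

```python
def physical_qubits_per_logical(distance):
    """Rotated surface code patch: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1


def logical_error_per_cycle(p_phys, distance, p_threshold=1e-2, prefactor=0.1):
    """Rule-of-thumb scaling; threshold and prefactor are assumed, not from the paper."""
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)


for d in (15, 21, 25, 27):
    n = physical_qubits_per_logical(d)
    p_l = logical_error_per_cycle(1e-3, d)
    print(f"d={d}: {n} physical qubits, ~{p_l:.0e} logical error per cycle")
```

At the assumed 0.1% physical error rate, a distance around 25 costs about 1,250 physical qubits per logical qubit before any routing or factory overhead, which is the scale the paragraph above refers to.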

The bottom line: the assumptions are at the edge of what many experts consider achievable in ~10 years. It’s neither immediate (so don’t toss out RSA tomorrow in a panic) nor infinitely distant. As one quantum insider quipped, “It’s not tomorrow, but it’s also not never.” In other words, the threat went from “maybe in our grandchildren’s time” to “maybe in our time,” and that’s a significant shift for planning purposes.

Takeaways for Security Professionals

For those in cybersecurity who aren’t quantum specialists, one might wonder how directly this academic-sounding breakthrough translates into action items. Here are a few key lessons:

- Quantum Threat Trajectory: The trajectory is unmistakable – quantum attacks on RSA are getting closer. In 2012, estimates said a billion qubits would be needed. By 2019, it was 20 million. Now it’s a million. The resource barrier is dropping by orders of magnitude roughly every half-decade. While it’s true that hardware progress is much slower (we haven’t jumped orders of magnitude in qubit count yet), the continual algorithmic improvements mean we can’t rely on earlier comfort margins. Don’t assume “too many qubits required” will hold true for long. As Gidney wryly notes, every encryption scheme eventually faces better attacks; quantum RSA-breaking is following that rule.

- PQC Transition is Non-Negotiable: If anyone was on the fence about whether to prioritize migrating to post-quantum cryptography, this is a clear signal. We now have a concrete (if challenging) blueprint for how a large quantum computer could break RSA-2048 in a matter of days. Combined with the “store now, decrypt later” risk, the safe course for any organization is to begin deploying PQC well before such a quantum computer appears. The good news is that PQC algorithms (like CRYSTALS-Kyber and CRYSTALS-Dilithium, standardized by NIST as ML-KEM and ML-DSA) are available today. They may not need to be universally deployed this very second, but crypto agility – the ability to swap out algorithms quickly – should be part of your roadmap now. Think of it as preparing for a coming software update: you want to be ready to patch your cryptographic systems before the adversary’s hardware catches up.

- Keep an Eye on Quantum Advances (Both Hard and Soft): Cyber leaders should track not just hardware announcements (e.g. a new 1000-qubit chip), but also the algorithmic side of quantum computing. Gidney’s paper shows that pure theoretical research can radically alter the threat landscape. It’s not all about engineering bigger machines; sometimes someone comes up with a smarter way to use those machines. This means collaborations between cryptographers and quantum algorithm experts are crucial. Regularly consult sources that monitor quantum algorithm progress (academic journals, NIST reports, or industry analyses) – the next breakthrough might not make headlines in the mainstream press but could matter for your risk assessments.

- Quantum-Safe Doesn’t Mean Quantum-Immune to All: It’s worth noting that RSA is just one vulnerable algorithm – albeit the most iconic one. The same quantum advancements that threaten RSA also threaten other public-key schemes like elliptic-curve cryptography (ECC), which would fall to Shor’s algorithm as well. Gidney’s techniques could likely be adapted to attack ECC with fewer qubits too (ECC keys are shorter, but require similar periodic arithmetic). Digital signatures and key exchanges widely used today (TLS, code signing, cryptocurrency wallets) often rely on these primitives. So the imperative is broad: ensure that not only encryption, but also authentication mechanisms are transitioned to quantum-safe alternatives in time. Conversely, symmetric cryptography (AES, SHA) is not broken by quantum in the same way – it’s affected by Grover’s algorithm but that is a more modest threat (doubled key lengths suffice). Security teams should focus on the asymmetric cryptography in their systems first and foremost, as these are the crown jewels a quantum computer targets.
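The “doubled key lengths suffice” remark in the last bullet follows from Grover’s quadratic speedup: searching a k-bit key space takes on the order of 2^(k/2) quantum evaluations instead of 2^k. A minimal sketch of that idealized rule is below. It ignores error-correction overhead and Grover’s poor parallelization, both of which make the real attack harder than this suggests, and the function name `grover_effective_bits` is an illustrative choice.

```python
def grover_effective_bits(key_bits):
    """Grover search needs ~2**(k/2) evaluations, so effective strength is about k/2 bits."""
    return key_bits // 2


for name, bits in [("AES-128", 128), ("AES-192", 192), ("AES-256", 256)]:
    print(f"{name}: ~{grover_effective_bits(bits)}-bit security against Grover search")
```

Under this rule AES-256 retains roughly 128-bit strength, which is why moving to longer symmetric keys is considered sufficient while RSA and ECC need to be replaced outright.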

The Pace of Quantum Optimizations: A Moving Target

One striking aspect of this story is how rapidly the algorithmic frontier is advancing. It’s reminiscent of the early days of classical computing, where initial algorithms for a problem were often vastly optimized over time. In quantum computing’s context, we’ve seen a cascade of improvements for factoring: from Beauregard’s circuit in 2003 to Zalka, then to Fowler and others in the 2010s, then Gidney & Ekerå in 2019, and now Gidney again in 2025 – each iteration finding ways to cut overhead. This highlights an important point: quantum algorithmic efficiency is a moving target. Even if hardware stagnated, our understanding of how to use quantum computers more efficiently can leap forward unpredictably. Oded Regev’s 2023 work is another example, proposing alternative factoring methods. Likewise, improvements in error correction (like quantum LDPC codes or new decoders) could suddenly make a given number of physical qubits more powerful than we thought.

For cybersecurity planners, the takeaway is to build in safety margins. Don’t assume that an algorithm which appears out of reach in 2025 will stay out of reach by 2035. In the span of about 6 years, Gidney’s own estimate went from 20 million qubits to 1 million. He openly states that under the current model he doesn’t see another 10× reduction without changing assumptions – but that doesn’t preclude different approaches or unforeseen shortcuts. Maybe someone will find a clever way to use mid-circuit measurements or alternative qubit connectivity to shave resources; maybe a new algorithm (or a variant of Shor’s) will emerge from academic circles. It’s wise to expect the unexpected optimization. The pace of quantum progress isn’t just about qubit counts doubling; it’s also about the creativity in reducing those counts and gate operations.
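The size of each drop is easy to quantify from the estimates quoted in this article. The sketch below computes the reduction factor between successive estimates and the average number of orders of magnitude shaved per year. With only three data points this describes the past and is not a forecast.

```python
from math import log10

# (year, estimated physical qubits) as quoted in this article.
estimates = [(2012, 1e9), (2019, 2e7), (2025, 1e6)]

# Reduction factor between each pair of consecutive estimates.
factors = [q0 / q1 for (_, q0), (_, q1) in zip(estimates, estimates[1:])]

for ((y0, _), (y1, _)), factor in zip(zip(estimates, estimates[1:]), factors):
    per_year = log10(factor) / (y1 - y0)
    print(f"{y0} -> {y1}: {factor:.0f}x fewer qubits "
          f"(~{per_year:.2f} orders of magnitude per year)")
```

Both intervals land near a quarter of an order of magnitude per year, even though the techniques behind each reduction were quite different.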

On the flip side, it’s not all doom and gloom. The same innovation that yields better attacks also yields better defenses. For example, the magic state cultivation technique Gidney used doesn’t just help factoring – it improves the cost of any quantum algorithm needing lots of T-gates, including those for chemistry or materials science. This means future quantum computers might be able to do more with fewer qubits across the board. And on the defensive side, the post-quantum algorithms chosen by NIST were selected with a margin against quantum cryptanalysis – but researchers are continuously analyzing them too. In short, we’re in a technological race where both the attackers (quantum algorithms) and defenders (post-quantum crypto) are evolving.

In summary, the new finding that 2048-bit RSA can be cracked with <1 million qubits in about a week is a landmark for quantum computing and a wake-up call for cybersecurity. It doesn’t mean instant disaster – but it does mark the ticking of a clock. The race between quantum codebreakers and cryptographers has entered a new phase, and the finish line (Q-Day) appears closer on the horizon.
