Quantinuum’s Helios Quantum Computer Demonstrates Quantum Advantage

9 Nov 2025 – Quantum computing has reached a new milestone with Quantinuum’s Helios system – a 98-qubit trapped-ion quantum computer that has demonstrated beyond-classical performance on both benchmarking tests and a real-world simulation task.

Helios Architecture: 98 Qubits with Record Fidelity

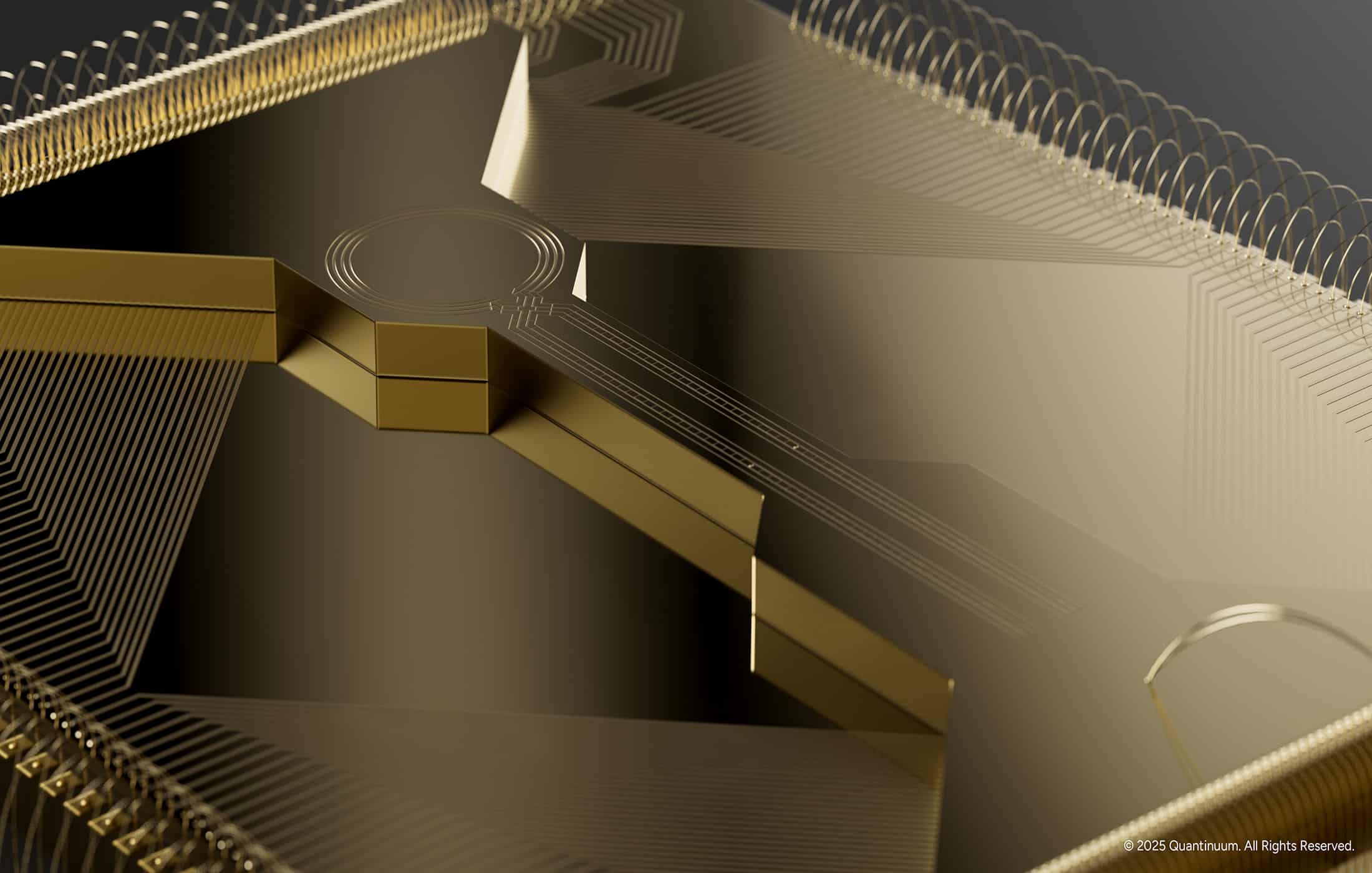

Helios represents the third generation of Quantinuum’s quantum charge-coupled device (QCCD) trapped-ion architecture. Unlike fixed two-dimensional qubit layouts in superconducting processors, Helios uses mobile atomic qubits shuttled through a trap with a four-way “X” junction. Qubits (single barium-137 ions) can be stored in memory regions and moved into one of 8 quantum logic zones for gates. This design enables all-to-all connectivity: any pair of qubits can interact by transporting ions, rather than being limited to nearest neighbors. The use of barium ions – instead of ytterbium used in earlier H-series models – allows higher gate fidelities due to improved laser stability and cooling transitions. Helios’s control system can make real-time decisions on ion transport and gate application, orchestrating complex programs with parallel operations in different zones.

Helios nearly doubles the qubit count of its predecessor (the H2, 56 qubits) and dramatically boosts performance. It has 98 physical qubits with an average single-qubit gate fidelity of 99.9975% and a two-qubit gate fidelity of 99.921% across all pairs – the highest reported for any commercial quantum processor. These error rates, just under $$10^{-3}$$ for two-qubit operations, are record-breaking and were confirmed through rigorous component-level benchmarks. For example, randomized benchmarking measured a two-qubit gate error around $$8\times 10^{-4}$$ per gate (consistent with ~99.92% fidelity) and single-qubit errors in the $$10^{-5}$$ range. Such low error rates enable deeper circuits and even allow experimenting with small-scale error correction. Indeed, Helios can encode logical qubits with only two physical qubits per logical qubit (a 2:1 ratio), achieving a “better than break-even” error-corrected qubit – an encouraging sign for future scalability.
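To make the link between the quoted fidelities and error rates concrete, here is a minimal Python sketch. The fidelity figures come from the text; the helper names and the assumption that gate errors are independent and simply multiply are illustrative simplifications, not Quantinuum's benchmarking method.

```python
import math

def error_rate(fidelity_percent: float) -> float:
    """Convert an average gate fidelity in percent to an error probability."""
    return 1.0 - fidelity_percent / 100.0

# Fidelities quoted in the text above.
single_qubit_error = error_rate(99.9975)  # about 2.5e-5
two_qubit_error = error_rate(99.921)      # about 7.9e-4

def gates_until_fidelity(target: float, per_gate_error: float) -> int:
    """Smallest gate count n with (1 - e)^n <= target.

    Assumes independent, uncorrelated gate errors (a simplification).
    """
    return math.ceil(math.log(target) / math.log(1.0 - per_gate_error))

# How many two-qubit gates fit in a circuit before the estimated
# circuit fidelity falls to one half?
half_life_gates = gates_until_fidelity(0.5, two_qubit_error)
```

Under these assumptions the circuit fidelity halves after somewhat under a thousand two-qubit gates, which gives a rough sense of why sub-$$10^{-3}$$ error rates matter for deep circuits.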

Another key architectural advance is the junction ion trap itself. Helios’s trap features a rotatable ion storage ring connected to upper and lower linear “legs” via an X-junction. Qubits are loaded and swapped between the ring (which acts as a random-access memory) and the linear segments (which feed into logic zones). This junction allows efficient routing of ions without increasing control wiring complexity, paving the way to maintaining full connectivity even as qubit counts grow.

Why does this architecture matter? In practical terms, Helios’s design translates to high operational parallelism and scalability. While only 16 qubits at a time can be manipulated in the logic zones (eight 2-qubit gates in parallel), the fast ion transport between zones means the device can implement large circuits involving dozens of qubits by time-multiplexing without sacrificing fidelity. The separation of storage and processing regions also reduces crosstalk and allows continuous cooling of idle qubits, which helps maintain coherence. Thanks to these innovations, Helios “sets a new state-of-the-art in digital quantum computers” and is delivering performance at a scale (nearly 100 qubits) previously limited to noisier platforms.
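The time-multiplexing idea can be sketched in a few lines of Python. The figure of eight parallel two-qubit gates is from the text; the function names, the pairing of qubits, and the decision to ignore ion transport time are assumptions made for illustration, and the real compiler's scheduling is certainly more involved.

```python
import math

LOGIC_ZONES = 8  # from the text: eight two-qubit gates can run in parallel

def rounds_for_layer(num_two_qubit_gates: int, zones: int = LOGIC_ZONES) -> int:
    """Number of sequential rounds needed to execute one layer of gates."""
    return math.ceil(num_two_qubit_gates / zones)

def batch_gates(pairs, zones: int = LOGIC_ZONES):
    """Split a list of disjoint qubit pairs into batches of at most `zones` gates."""
    return [pairs[i:i + zones] for i in range(0, len(pairs), zones)]

# Hypothetical layer in which all 98 qubits are paired up once (49 gates).
pairs = [(2 * i, 2 * i + 1) for i in range(49)]
batches = batch_gates(pairs)
rounds = rounds_for_layer(len(pairs))
```

In this toy schedule a full 98-qubit layer takes seven rounds, with the last round using only one of the eight zones.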

Random Circuit Sampling and Mirror Benchmarking

To quantify Helios’s capabilities, the team conducted random circuit sampling (RCS) benchmarks – the same task Google used in 2019 to claim quantum supremacy. In a typical RCS experiment, the quantum computer generates bitstrings from the output of a randomly chosen quantum circuit. Verifying quantum advantage requires showing that no classical supercomputer can sample from that output distribution in a feasible amount of time. Google’s original approach relied on cross-entropy benchmarking (XEB), where the fidelity of the quantum circuit is estimated by comparing measured outputs to classically computed probabilities for those outputs. However, classical simulation becomes intractable beyond about 50 qubits or moderate circuit depth – exactly the regime where quantum advantage emerges. This poses a catch-22: how do you verify the quantum computer’s result without being able to classically compute it?
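For readers who want to see what cross-entropy benchmarking computes, the linear XEB estimator is commonly written as $$F_{\mathrm{XEB}} = 2^n \langle p(x_i) \rangle - 1$$, where $$p(x_i)$$ is the ideal probability of each measured bitstring. The sketch below is a toy under stated assumptions: a random normalized state stands in for the output of an ideal random circuit, and all names are hypothetical. It is not the code used in any of the experiments discussed here.

```python
import random

def random_state_probs(n_qubits, rng):
    """Outcome probabilities of a random state (stand-in for an ideal random circuit)."""
    dim = 2 ** n_qubits
    # Squared magnitudes of complex Gaussian amplitudes, then normalized.
    weights = [rng.gauss(0, 1) ** 2 + rng.gauss(0, 1) ** 2 for _ in range(dim)]
    total = sum(weights)
    return [w / total for w in weights]

def linear_xeb(probs, samples):
    """Linear XEB: dim * mean ideal probability of the observed samples, minus 1."""
    dim = len(probs)
    mean_prob = sum(probs[x] for x in samples) / len(samples)
    return dim * mean_prob - 1.0

rng = random.Random(0)
probs = random_state_probs(12, rng)
outcomes = range(len(probs))

ideal_samples = rng.choices(outcomes, weights=probs, k=20000)  # perfect device
noise_samples = rng.choices(outcomes, k=20000)                 # fully depolarized device

f_ideal = linear_xeb(probs, ideal_samples)  # close to 1
f_noise = linear_xeb(probs, noise_samples)  # close to 0
```

The catch described above shows up in the first argument to `linear_xeb`: the list `probs` has $$2^n$$ entries and must come from a classical simulation, which is exactly what becomes infeasible at scale.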

Helios’s team employed a clever solution known as mirror benchmarking to bypass that roadblock. The idea is to run random circuits that are structured to “mirror” themselves, so that the outcome is known without full classical simulation. In practice, they execute a random sequence of gates followed immediately by its approximate inverse. Concretely, an RCS circuit of depth d layers is followed by a reversed circuit of d layers, with some randomization applied to prevent error cancellation. If there were no errors, the mirrored circuit would return the qubits to their initial state deterministically. In reality, the final state deviates due to noise, but the probability of returning to the correct state (the return probability) serves as a direct measure of the circuit’s fidelity. This method provides an experimental fidelity estimate for deep, large-qubit circuits without heavy classical computation. It essentially trades the need for classical verification for the ability to self-verify quantum circuits by reversing them.
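The mirror idea can be illustrated with a small state-vector simulation. This is a toy sketch, not the Helios protocol: it uses four qubits, CZ gates, a crude error model (a random bit flip after some two-qubit gates), and it omits the extra randomization the text mentions. All function names are hypothetical.

```python
import cmath
import math
import random

X = [[0, 1], [1, 0]]

def random_u(rng):
    """Random single-qubit unitary Rz(a) Ry(b) Rz(c)."""
    a, b, c = (rng.uniform(0, 2 * math.pi) for _ in range(3))
    cos, sin = math.cos(b / 2), math.sin(b / 2)
    return [[cmath.exp(-1j * (a + c) / 2) * cos, -cmath.exp(-1j * (a - c) / 2) * sin],
            [cmath.exp(1j * (a - c) / 2) * sin, cmath.exp(1j * (a + c) / 2) * cos]]

def dagger(u):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(u[0][0]).conjugate(), complex(u[1][0]).conjugate()],
            [complex(u[0][1]).conjugate(), complex(u[1][1]).conjugate()]]

def apply_1q(state, u, q):
    bit = 1 << q
    for i in range(len(state)):
        if not i & bit:
            a0, a1 = state[i], state[i | bit]
            state[i] = u[0][0] * a0 + u[0][1] * a1
            state[i | bit] = u[1][0] * a0 + u[1][1] * a1

def apply_cz(state, q1, q2):
    mask = (1 << q1) | (1 << q2)
    for i in range(len(state)):
        if (i & mask) == mask:
            state[i] = -state[i]

def random_layers(n, depth, rng):
    """Layers of random single-qubit gates followed by CZs on a random pairing."""
    ops = []
    for _ in range(depth):
        ops += [("u", q, random_u(rng)) for q in range(n)]
        qubits = list(range(n))
        rng.shuffle(qubits)  # arbitrary pairing, mimicking all-to-all connectivity
        ops += [("cz", qubits[k], qubits[k + 1]) for k in range(0, n - 1, 2)]
    return ops

def mirror(ops):
    """Append the inverse circuit: reversed order, each gate inverted (CZ is self-inverse)."""
    inverse = [("u", op[1], dagger(op[2])) if op[0] == "u" else op for op in reversed(ops)]
    return ops + inverse

def return_probability(ops, n, error_prob, shots, rng):
    """Average probability of ending in the all-zeros state."""
    total = 0.0
    for _ in range(shots):
        state = [0j] * (2 ** n)
        state[0] = 1 + 0j
        for op in ops:
            if op[0] == "u":
                apply_1q(state, op[2], op[1])
            else:
                apply_cz(state, op[1], op[2])
                if rng.random() < error_prob:  # toy noise: bit flip on one gate qubit
                    apply_1q(state, X, rng.choice(op[1:3]))
        total += abs(state[0]) ** 2
    return total / shots

rng = random.Random(7)
circuit = mirror(random_layers(n=4, depth=3, rng=rng))
ideal = return_probability(circuit, 4, 0.0, 1, rng)     # 1 up to rounding
noisy = return_probability(circuit, 4, 0.05, 200, rng)  # noticeably below 1
```

Without errors the mirrored circuit returns to the initial state exactly, so any shortfall in `noisy` is attributable to the injected errors; no classical simulation of the output distribution is needed to interpret it.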

Mirror benchmarking was applied to random circuits on Helios spanning its full 98 qubits. Each circuit layer consisted of random two-qubit gates (with a random connectivity pattern taking advantage of Helios’s arbitrary coupling) interleaved with random single-qubit rotations. By varying the number of layers, the team could observe how fidelity decays with circuit depth. As expected, fidelity decayed exponentially with depth, indicating that errors compound per gate roughly in line with the component error rates. Importantly, even at the largest depth tested, Helios retained a small but non-zero fidelity, meaning it was indeed successfully executing some of the most complex circuits ever run on a quantum computer.
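The exponential decay can be sketched with a simple model and a log-linear fit. The two-qubit fidelity is the figure quoted earlier in this article; the assumption that each layer contains 49 two-qubit gates, the neglect of single-qubit, transport, memory, and measurement errors, and the synthetic data points are all illustrative choices, not Helios measurements.

```python
import math

TWO_QUBIT_ERROR = 1 - 0.99921  # from the quoted 99.921% two-qubit fidelity
GATES_PER_LAYER = 98 // 2      # assumption: every qubit joins one two-qubit gate per layer

def predicted_fidelity(depth: int) -> float:
    """Mirror circuit with `depth` forward layers plus `depth` reversed layers."""
    return (1 - TWO_QUBIT_ERROR) ** (GATES_PER_LAYER * 2 * depth)

def fit_decay(depths, fidelities):
    """Least-squares fit of log F = log A + d * log f; returns (A, f)."""
    ys = [math.log(f) for f in fidelities]
    n = len(depths)
    mean_x, mean_y = sum(depths) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(depths, ys))
             / sum((x - mean_x) ** 2 for x in depths))
    return math.exp(mean_y - slope * mean_x), math.exp(slope)

# Synthetic data generated from the model itself, not experimental results.
depths = [2, 4, 8, 16]
fidelities = [predicted_fidelity(d) for d in depths]
amplitude, per_depth_fidelity = fit_decay(depths, fidelities)
```

On real data the same fit would be applied to measured return probabilities; comparing the fitted per-depth fidelity with the value implied by component error rates is one way to check that errors compound as expected.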

Crucially, the Helios RCS study also estimated the classical simulation cost for those same circuits. Using state-of-the-art tensor network algorithms, the researchers estimated how many FLOPs (floating-point operations) and how much memory a supercomputer would need to replicate the sampling task. The results, summarized in Figure 3, are striking. As circuit depth grows, the classical cost skyrockets – even under optimistic assumptions about memory and parallelization – eventually exceeding what our best supercomputers could handle within the age of the universe. By the highest depths run on Helios, drawing a single random sample via simulation would take on the order of $$10^{10}$$ years on a top supercomputer (even assuming ~1 exaFLOP/s of sustained performance). In terms of energy, one would require fantastical levels of power to match Helios’s output: Quantinuum reported that a classical machine drawing samples at Helios’s rate would need more power than the Sun – indeed, more than all the stars in the visible universe combined! In contrast, Helios accomplished the task in a matter of minutes using about as much electricity as a single server rack. This astronomical gap between quantum and classical capabilities is a clear marker of quantum advantage.
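A quick back-of-the-envelope calculation shows the scale of these numbers. The $$10^{10}$$ years and 1 exaFLOP/s figures are taken from the paragraph above; the brute-force state-vector memory estimate is added only for a sense of scale and assumes 16 bytes per complex amplitude. The study itself used tensor network estimates, which this sketch does not attempt to reproduce.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def years_to_flops(years: float, flops_per_second: float = 1e18) -> float:
    """Total floating-point operations performed in `years` at a sustained rate."""
    return years * SECONDS_PER_YEAR * flops_per_second

def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store every amplitude of an n-qubit state (brute force)."""
    return 2 ** n_qubits * bytes_per_amplitude

flops_per_sample = years_to_flops(1e10)  # roughly 3e35 operations
memory_98_qubits = statevector_bytes(98) # roughly 5e30 bytes
```

Both figures sit many orders of magnitude beyond any existing machine, which is why more refined classical methods, rather than brute force, are the relevant comparison.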

In summary, RCS mirror benchmarking allowed the Helios team to demonstrate quantum advantage in a rigorous way, by showing Helios can generate highly complex quantum states that are essentially impossible to simulate classically in any reasonable time. The fidelity of these operations, while decaying with depth, was high enough to be measurable – a testament to Helios’s extraordinary accuracy. This moves beyond Google’s 2019 result: Google had achieved beyond-classical sampling with 53 superconducting qubits, but at such low fidelity that verifying the result required heroic classical effort on smaller instances. Helios not only scales to nearly double the qubits, but does so with a fidelity that can be directly inferred via mirroring. By the RCS benchmark, Helios has pushed “further into the quantum advantage regime than any system before it”.

Quantum Advantage in Practice: Simulating Superconductivity

Random circuit benchmarks are impressive, but they are essentially synthetic tests. Of equal importance is whether a quantum computer can solve practical problems beyond the reach of classical computing. Helios made a splash here as well: it was used to simulate a challenging physics problem in quantum materials, achieving a world-first result. Specifically, researchers ran large-scale simulations of the Fermi-Hubbard model to probe phenomena related to high-temperature superconductivity. The Fermi-Hubbard model is a foundational model of interacting electrons in a lattice, believed to capture the essence of superconducting behavior in cuprates and other materials. Classical computational methods struggle with it because the model’s quantum state space grows exponentially with system size.

Using a novel fermionic encoding and Helios’s all-to-all gate flexibility, the team simulated a Hubbard model on a $$6\times6$$ lattice – mapping to 72 qubits representing electrons, plus up to 18 ancilla qubits for readout, totaling 90 qubits in use. This is an enormous simulation: the full quantum state resides in a $$2^{72}$$-dimensional Hilbert space (more than $$4.7\times10^{21}$$ basis states), far beyond exact diagonalization or brute-force classical simulation. Even sophisticated classical algorithms like tensor network methods typically cannot handle a 6×6 Hubbard model with real-time dynamics, especially not when long-range observables are needed. Analog quantum simulators (like ultracold atom experiments) have studied the Hubbard model in certain regimes, but they are limited in measurements – typically only able to measure particle densities or simple correlators. Helios’s digital quantum simulation, by contrast, allowed arbitrary state preparation, long dynamical evolutions, and flexible measurements including the “off-diagonal” observables that signal superconductivity.
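The qubit and state-space counts above follow from simple arithmetic, sketched here in Python. The lattice size and ancilla count are from the text; the restriction to a half-filled sector with equal numbers of spin-up and spin-down electrons is an added assumption, included only to show that the space stays enormous even after exploiting particle-number symmetry.

```python
import math

LATTICE_SITES = 6 * 6    # 6x6 lattice from the text
SPIN_STATES = 2          # spin-up and spin-down orbital per site
ANCILLA_QUBITS = 18      # "up to 18" in the text

system_qubits = LATTICE_SITES * SPIN_STATES     # 72
total_qubits = system_qubits + ANCILLA_QUBITS   # 90
full_dimension = 2 ** system_qubits             # about 4.7e21

# Assumption for illustration: half filling with 18 up and 18 down electrons.
half_filled_dimension = math.comb(LATTICE_SITES, 18) ** 2  # about 8.2e19
```

Even the symmetry-restricted sector has on the order of $$10^{19}$$ basis states, which is consistent with the claim that exact classical treatment is out of reach.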

The payoff was significant. As reported in a recent scientific publication, the Helios experiment successfully measured superconducting pairing correlations in three different scenarios – something no previous platform had achieved. In one case, they prepared a half-filled 6×6 Hubbard model ground state and then simulated a laser pulse (an oscillating field) applied to it, analogous to experiments that induced transient superconductivity in materials. Helios was able to track the system’s non-equilibrium dynamics under this perturbation and observed a notable rise in η-pairing correlations – a mathematical signature that electrons were forming Cooper pairs out of equilibrium. In another regime, they prepared a doped “checkerboard” Hubbard model and detected d-wave pairing correlations in its approximate ground state, and also observed s-wave pairing in a two-layer (bilayer) Hubbard model relevant to nickelate superconductors. These observations constitute direct evidence that Helios can create and probe complex many-electron quantum states that exhibit superconductivity-like behavior.

The ability to see non-zero off-diagonal pairing correlations is a breakthrough because it proves a quantum computer can reliably produce and measure subtle quantum orders that elude classical detection. In practical terms, this means researchers can now use quantum computers as a kind of “quantum laboratory” to investigate open problems in condensed matter physics. For high-temperature superconductors, questions like how a laser pulse induces superconductivity, or what conditions favor pairing, can be explored by simulating various scenarios on the quantum computer – something Helios enables with fine control over all aspects of the simulation. As the blog announcing this result put it, “Helios offers a new level of control and insight… researchers can explore scenarios that are completely inaccessible to real materials or analog simulators”. This is an early glimpse of quantum advantage for useful tasks: not only has Helios done something a supercomputer could not (simulate a 72-qubit entangled system in full detail), but it also delivered new scientific insight (confirming the presence of pairing correlations) that advances the understanding of superconductivity.

It’s worth noting that these simulations pushed Helios near its limits – using most of its qubits and requiring very low error rates to maintain coherence through the circuit. The achievement was made possible by algorithmic innovations (like a more compact fermion-to-qubit encoding that reduces overhead) and by Helios’s high fidelity and all-to-all circuit capability, which kept the required circuit depth manageable. The result was a real-world experiment at a scale “so large that no amount of classical computing could match it”. Quantinuum describes it as “a system so large that its full quantum state spans over $$2^{72}$$ dimensions”, beyond the reach of any classical workflow. This firmly establishes that Helios’s quantum advantage is not just a parlor trick with random numbers, but a tool for exploring uncharted scientific territories.

Claiming “Quantum Advantage” – and How It Compares to Google’s Result

Quantinuum’s announcements have touted Helios’s accomplishments as entering the quantum advantage regime in a meaningful way. The term “quantum advantage” generally means a quantum computer performing a task that is infeasible for any classical computer. Google’s 2019 quantum supremacy experiment was the first famous example, but since then, the bar has been raised: not only do we want beyond-classical performance, we want it for tasks that are verifiable or useful. So how does Helios stack up, and how does it compare to Google’s latest result?

On the benchmarking front, Helios’s RCS demonstration is a classic quantum supremacy-style result, but with important improvements. Google’s 2019 Sycamore processor sampled 53-qubit random circuits of depth ~20, claiming it would take thousands of years on a supercomputer to replicate. However, that experiment’s usefulness was limited – it basically produced random bits, and skeptics noted the outputs weren’t directly verifiable beyond heavy statistical tests. Helios in 2025 nearly doubled the qubits (98) and achieved similar or greater circuit depth while maintaining enough fidelity to measure meaningful signal via mirror circuits. The computational gap reported (power of all stars in the universe vs a rack of equipment) even exceeds Google’s claims (“10,000 years” for the 2019 experiment, or “10 septillion years” in some later descriptions). In essence, Helios strongly reinforces the point that as qubit counts and fidelity grow, random circuit sampling remains a clear quantum advantage benchmark – and one that any would-be competitor must now at least match.

That said, Google’s more recent milestone (2025) was the demonstration of a verifiable quantum advantage using a new algorithm called Quantum Echoes. In that experiment, Google’s team (using a 70+ qubit “Willow” superconducting chip, later scaled to 100+ qubits) measured an out-of-time-order correlator (OTOC) – a specific physics quantity related to quantum chaos. The brilliance of their approach is that the OTOC is an expectation value (like an average measurement) rather than a random sample, and it remains consistent across runs. This means it can be cross-checked on smaller instances or even analytically in some cases. Google essentially ran a forward-and-backward random circuit (echoing a similar mirroring concept) with a tiny perturbation in between, and showed that the resulting measurement from their 100+ qubit system could not be reproduced classically, yet had a definite value that could be verified in principle. They dubbed this “verifiable quantum advantage” – a step closer to useful quantum computing, since the result is not just random bits.

Comparing Helios to that: in spirit, both Helios’s simulation and Google’s OTOC experiment involve running complex circuits that would overwhelm classical computers, and both leverage a forward/backward evolution structure. Helios’s superconductivity simulation produced specific observables (pairing correlations) that are scientifically interpretable, which is analogous to Google’s OTOC being a concrete metric of chaos. Both experiments required superb hardware performance – Google’s used 103 qubits with error mitigation to get a meaningful signal, while Helios used up to 90 qubits with error rates low enough to detect subtle correlations. Helios’s advantage claims are arguably broader: RCS shows raw computational power, and the Hubbard model simulation shows domain-specific utility. Google’s claim, meanwhile, is that they have an algorithm (Quantum Echoes) that one can trust as producing a correct, verifiable result beyond classical reach. In terms of scale, Helios’s 98 qubits are on par with Google’s 70-100 qubits; both are pushing towards 100-qubit-level demonstrations.

It’s also worth noting that other forms of quantum advantage have been claimed (for example, Xanadu’s photonic processor demonstrated advantage in boson sampling tasks). But those photonic experiments, like Gaussian Boson Sampling, similarly suffer from being hard to verify and not yet tied to practical use. What we see in 2025 is a convergence: multiple quantum computing platforms are consistently performing tasks no classical computer can, and doing so in more controlled or useful ways than the initial supremacy experiments. Helios’s achievements contribute strongly to this narrative, especially by showing that a trapped-ion system – traditionally slower in clock speed but higher in fidelity – can reach the advantage regime and even facilitate real scientific discovery. As one Quantinuum scientist put it, “Currently, this is easily the most powerful quantum computer on Earth”, reflecting the confidence that Helios’s all-around performance (qubit count and fidelity) is unmatched.

In short, Helios’s quantum advantage demonstrations complement Google’s: Helios underscores raw performance and practical simulation capability, while Google underscores algorithmic verifiability and chaotic dynamics. Both are essential steps toward general-purpose quantum computing that meaningfully outperforms classical computing.

Implications for Quantum Computing and Cryptography

For the broader quantum computing field, Helios’s success is a proof-point that the field is steadily progressing on both axes needed for useful quantum computers: increasing qubit quantity and maintaining qubit quality. Achieving nearly 100 qubits with ~99.9% fidelities was not guaranteed a few years ago – errors could have scaled out of control. Helios shows that engineering advances (such as better ion traps, better lasers, and real-time control systems) can maintain performance at scale. This bodes well for the future: larger devices (hundreds of qubits) are on the horizon, and if they can keep similar error rates through techniques like modular QCCD architecture, we may continue to see quantum advantage in higher and higher impact problems.

From an industry perspective, Helios is positioned as an enterprise-grade quantum computer. During its early-access phase, companies like JPMorgan Chase and SoftBank tested it on “commercially relevant research”. While details are sparse, such use cases likely include complex optimization, quantum chemistry simulations for materials or pharmaceuticals, and generating certified random numbers for cryptography. Notably, Quantinuum’s own Quantum Origin service (a QRNG for cryptographic keys) can benefit from the RCS outputs of devices like Helios to produce provably unpredictable numbers. In fact, the Helios team alludes to protocols for certified randomness generation that Helios enables – presumably leveraging the idea that only a quantum device could have produced certain random strings, thereby providing a certification of true randomness for security applications.

Now, an important question for cybersecurity leaders: Does Helios bring us closer to “Q-Day,” the day when quantum computers can break widely used cryptography? The short answer: yes, but indirectly and not immediately. Helios’s accomplishments demonstrate significant progress in quantum computing, but they have not yet threatened cryptographic algorithms like RSA or AES. Breaking RSA-2048, for example, would require running Shor’s factoring algorithm on a quantum computer with thousands of logical qubits (roughly on the order of 4,000 error-corrected qubits for the number itself). In terms of today’s physical qubits, due to error correction overhead, that might mean millions of physical qubits are needed. Current machines like Helios are roughly four orders of magnitude away in physical qubit count, and they operate in the NISQ (noisy intermediate-scale quantum) regime without full error correction. Helios’s 98 qubits could factor only very small numbers if running Shor’s algorithm, and errors would quickly accumulate for any non-trivial key size.
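How large the gap looks depends on which count is compared, as this small Python sketch shows. The 98-qubit and roughly 4,000-logical-qubit figures come from the paragraph above; taking one million as the lower end of “millions of physical qubits” is an assumption made purely for illustration.

```python
import math

HELIOS_QUBITS = 98
LOGICAL_QUBITS_RSA2048 = 4_000     # rough figure from the text
PHYSICAL_QUBITS_ASSUMED = 1_000_000  # assumption: lower end of "millions"

def orders_of_magnitude(current: float, required: float) -> float:
    """Base-10 logarithm of the ratio between required and current counts."""
    return math.log10(required / current)

gap_logical = orders_of_magnitude(HELIOS_QUBITS, LOGICAL_QUBITS_RSA2048)
gap_physical = orders_of_magnitude(HELIOS_QUBITS, PHYSICAL_QUBITS_ASSUMED)
```

Measured against logical qubits the gap is between one and two orders of magnitude, but those would have to be error-corrected qubits; measured against physical qubits it is about four.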

However, Helios does bring Q-Day closer in a broader sense: it proves that quantum hardware is rapidly improving and tackling classically hard tasks. Each leap in qubit count and fidelity shortens the timeline to a cryptographically relevant quantum computer (CRQC). Five years ago, 50 qubits was front-page news; today we’re approaching 100 qubits with much better reliability. If this trend continues (and especially if error correction “break-even” milestones keep improving, as Helios has hinted), we might see prototype CRQCs within years. For instance, if a future Helios successor or another platform can integrate a few thousand physical qubits with error correction such that, say, a dozen logical qubits are stable, that could implement small instances of Shor’s algorithm or Grover’s search as a starting point.

In the meantime, the recommendation for CISOs and security professionals remains unchanged but ever more urgent: prepare your organizations for a post-quantum world. The fact that a quantum computer can complete a task that would take “the power of all the stars in the visible universe” to match classically should dispel any notion that quantum machines are merely theoretical. Helios’s quantum advantage in simulating physics doesn’t directly translate to cracking encryption, but it validates the quantum computing field writ large. It is a reminder that the risk of Q-Day is not science fiction – it is a matter of when, not if.

Conclusion

Quantinuum’s Helios has established itself as one of the most powerful quantum computers to date, delivering high-fidelity operations at nearly 100 qubits and crossing important thresholds of quantum advantage. Through RCS mirror benchmarking, Helios proved it can outperform any classical supercomputer on a computational task, and through an ambitious Hubbard model simulation, it became a tool for new scientific discovery – marking the first observation of superconducting correlations on a quantum platform. These achievements highlight how far quantum computing has come: from speculative theory to machines that can do things no classical machine can.

For cybersecurity and IT leaders, Helios is a milestone that should be celebrated for its technological prowess and also noted as a harbinger. While not a threat to encryption by itself, it is part of a fast-moving progression. The quantum advantage it demonstrates is a stepping stone on the path to the cryptographic advantage a future quantum computer could hold over today’s protocols.
