Oxford Achieves 10⁻⁷-Level Qubit Gate Error, Shattering Quantum Fidelity Records

Physicists at the University of Oxford have set a new world record for quantum logic accuracy, achieving single-qubit gate error rates at the $$10^{-7}$$ level (about $$1.5\times10^{-7}$$), meaning fidelities of roughly 99.99998%. The breakthrough, reported in a study published in Physical Review Letters in June 2025 (following an arXiv preprint that I discussed in January and am now updating), marks the lowest error ever recorded for any quantum computing platform. “As far as we are aware, this is the most accurate qubit operation ever recorded anywhere in the world,” said Professor David Lucas of Oxford’s Dept. of Physics. “It is an important step toward building practical quantum computers that can tackle real-world problems.”

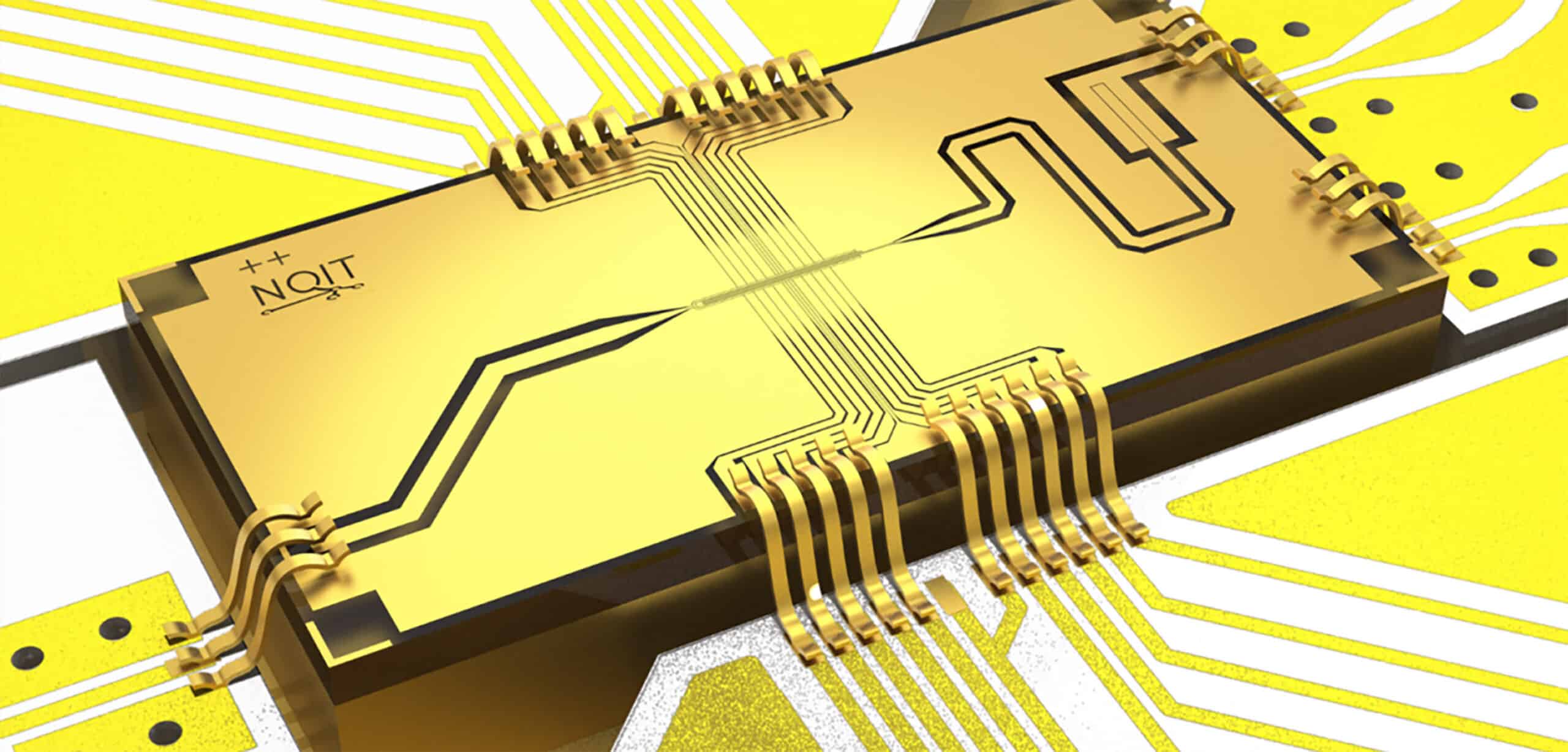

The Oxford experiment used a single trapped ion ($$^{43}$$Ca⁺) as a qubit, leveraging a hyperfine “clock” transition known for its long coherence time. Uniquely, the team controlled the ion’s quantum state with microwave pulses delivered through a chip-integrated resonator, rather than the laser beams conventionally used in trapped-ion systems. This all-electronic control – implemented on a microfabricated surface-electrode trap operated at room temperature with no magnetic shielding – offers exceptional stability and is cheaper and easier to scale than laser-based gating. As a result, the qubit’s environment was extraordinarily noise-free: calibration errors were suppressed below $$10^{-8}$$ (negligible) and the ion’s coherence time reached about $$70~\text{s}$$, allowing the researchers to execute millions of operations with only vanishingly small drift or decoherence.

To measure the gate fidelity, the Oxford team employed rigorous randomized benchmarking techniques. They applied long sequences of random Clifford gates on the ion and fitted the decay of state fidelity to extract an average error per gate of about $$1.5\times10^{-7}$$. In other words, only about 1 in 6.7 million single-qubit operations would fail, an error rate of 0.000015%. For perspective, a person is more likely to be struck by lightning in a given year (~1 in 1.2 million) than this qubit is to suffer a gate error. The Clifford gate sequences in the benchmark lasted up to tens of thousands of gates, confirming the infrequent errors with high statistical confidence. Notably, state preparation and measurement (SPAM) errors on this setup are on the order of $$10^{-3}$$ (0.1%) – orders of magnitude higher than the gate error – underscoring that the single-qubit logic is no longer the limiting factor in the system’s accuracy.
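The decay fit described above can be sketched in a few lines. This is a minimal illustration, not the Oxford team's analysis code: it assumes the standard zeroth-order randomized-benchmarking model $$P(m) = B + A\,p^{m}$$ with the asymptote fixed at $$B = 0.5$$ for a single qubit, the usual conversion $$r = (1 - p)/2$$ from decay parameter to average error per gate, and noiseless synthetic data. The function names, sequence lengths, and SPAM amplitude are hypothetical.

```python
import math

def rb_survival(m, p, a=0.4995, b=0.5):
    """Zeroth-order RB model: survival probability after m random gates.
    The amplitude a < 0.5 stands in for SPAM error (assumed value)."""
    return b + a * p ** m

def fit_error_per_gate(lengths, survival, b=0.5):
    """Linear least-squares fit of ln(P - b) = ln(a) + m * ln(p).
    Returns the average error per gate r = (1 - p) / 2 (single qubit)."""
    ys = [math.log(s - b) for s in survival]
    n = len(lengths)
    mean_x = sum(lengths) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in lengths)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(lengths, ys))
    slope = sxy / sxx               # estimate of ln(p)
    return -math.expm1(slope) / 2   # (1 - p) / 2, computed stably

# Synthetic data generated from the error rate quoted in the article.
true_r = 1.5e-7
p_true = 1 - 2 * true_r
lengths = [1, 1000, 5000, 10000, 20000, 50000]
data = [rb_survival(m, p_true) for m in lengths]

r_fit = fit_error_per_gate(lengths, data)
print(f"fitted error per gate: {r_fit:.3e}")
print(f"about 1 error in {1 / r_fit / 1e6:.1f} million gates")
```

On this idealized data the fit recovers the input error of $$1.5\times10^{-7}$$, or roughly one failure in 6.7 million gates. Real data carries shot noise, which is why the benchmark needs very long sequences to resolve such a slow decay. Note also that the SPAM amplitude only rescales the curve and does not affect the fitted decay rate, which is what lets the method measure a gate error far smaller than the SPAM error.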

In achieving this record fidelity, the researchers mapped out all significant error sources. Qubit decoherence from residual phase noise was identified as the dominant contribution, given the finite $$T_2 \approx 69~\text{s}$$ coherence time of the ion. Smaller errors came from population leakage out of the qubit states and slight measurement imperfections over long sequences. Fast control errors (amplitude or frequency mis-calibrations of the microwaves) were effectively eliminated: automated calibration routines kept control errors below $$10^{-8}$$. Even at the fastest gate speed tested – a 4.4 μs single-qubit pulse – the error per gate was only ~$$2.9\times10^{-7}$$. This is a remarkable 20× speed-up compared to the team’s previous record gate (which was 88 μs long) while still achieving an error well below $$10^{-6}$$. Across the full range of gate durations from 35 μs down to 4 μs, the error stayed in the $$10^{-7}$$–$$10^{-6}$$ band, indicating a favorable speed-fidelity tradeoff now accessible with their microwave-driven ion technology.

Importantly, this result surpasses all prior single-qubit performance milestones. The previous best error rate was about 1 in 1 million ($$1\times10^{-6}$$, or 0.0001%), achieved by the same Oxford group in 2014. Over the past decade, other platforms have improved steadily – for example, superconducting qubits in labs reached ~99.998% fidelity ($$2\times10^{-5}$$ error) in 2025 using a fluxonium device, and advanced ion-trap systems from industry have demonstrated ~99.999% single-qubit fidelity (about $$10^{-5}$$ error) in regular operation. Nonetheless, Oxford’s $$1.5\times10^{-7}$$ error rate represents nearly an order of magnitude leap beyond the “six nines” fidelity level, approaching a “seven nines” regime that no other qubit to date has attained. Even leading superconducting processors from IBM and Google typically report ~99.9% single-qubit fidelities (∼$$1\times10^{-3}$$ error), and neutral-atom qubits have been around ~99.5% fidelity ($$5\times10^{-3}$$ error) for single-site operations. Trapped-ion machines were already known for the highest gate fidelities – e.g. Quantinuum’s commercial ion systems recently hit 99.9% (three nines) fidelity for two-qubit gates, implying single-qubit gates in those systems are above 99.99% – but the Oxford result sets a new absolute benchmark across all platforms. “This record-breaking result represents nearly an order of magnitude improvement over the previous benchmark,” notes the Oxford press release, adding that it “opens the way for future quantum computers to be smaller, faster, and more efficient” by drastically reducing error-correction overhead.

The achievement was enabled not just by brute-force stability of the ion qubit, but by careful engineering. The use of microwave-driven gates (instead of lasers) is cited as a key advantage: “Electronic control is much cheaper and more robust than lasers, and easier to integrate in ion trapping chips.” Moreover, the experiment ran at room temperature in a normal laboratory environment – no cryogenics or magnetic shielding – which bodes well for practicality. The Oxford group, which previously spun out its expertise into the company Oxford Ionics in 2019, has been a pioneer in ion-trap quantum computing for years. Their latest result solidifies trapped ions’ reputation for ultra-high fidelity control. To perform useful calculations on a quantum computer, millions of operations will need to be run, which means that if the error rate is too high, the final result will be meaningless. Hitting the $$10^{-7}$$ error level is a significant confidence boost that single-qubit operations can be essentially “perfect” for practical purposes.
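To make the "millions of operations" point concrete, here is a rough sketch of how the chance of an error-free run depends on the per-gate error. It assumes independent, uncorrelated gate errors and no error correction, so the probability that all $$N$$ gates succeed is $$(1-\varepsilon)^{N}$$. The gate count of one million and the two comparison error rates are illustrative choices, not figures from the paper.

```python
import math

def success_probability(error_per_gate, n_gates):
    """Probability that n_gates independent gates all succeed: (1 - eps)^n.
    Uses log1p so that very small error rates are handled accurately."""
    return math.exp(n_gates * math.log1p(-error_per_gate))

n_gates = 1_000_000  # illustrative circuit size (assumption)
for eps in (1e-3, 1e-5, 1.5e-7):
    prob = success_probability(eps, n_gates)
    print(f"eps = {eps:.1e}: P(no error in {n_gates:,} gates) = {prob:.3f}")
```

Under these assumptions a million-gate run is hopeless at $$10^{-3}$$ or even $$10^{-5}$$ error per gate, while at $$1.5\times10^{-7}$$ it completes without a single error about 86% of the time.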

Despite the celebration, the researchers emphasize that this is only part of the larger challenge of building a scalable quantum computer. The next hurdle is two-qubit (entangling) gates, which are inherently more error-prone. In the best demonstrations to date, two-qubit gates have error rates on the order of $$5\times10^{-4}$$ (around 1 in 2000 operations go wrong) – more than three orders of magnitude higher than the Oxford single-qubit gates. Reducing two-qubit errors is “crucial to building fully fault-tolerant quantum machines,” the Oxford team notes. Still, this new single-qubit milestone provides a foundation: it means that single-qubit rotations can be essentially taken off the list of worries, allowing engineers to focus on multi-qubit coupling, state preparation, and readout errors. The Oxford researchers carried out the experiments as part of the UK Quantum Computing and Simulation Hub. Their work, titled “Single-qubit gates with errors at the 10⁻⁷ level,” appears in Phys. Rev. Lett. 134, 230601 (2025) and is available via arXiv:2412.04421 for those interested in the technical details.

My Analysis

Why 10⁻⁷ Errors Matter for Fault-Tolerance

Achieving physical gate errors in the $$10^{-7}$$ range is far more than an academic accolade – it directly impacts the roadmap to fault-tolerant quantum computing. Quantum error correction (QEC) theory tells us that if physical error rates can be pushed below a certain threshold (often quoted around $$10^{-2}$$ to $$10^{-3}$$ for many codes), then logical qubits with arbitrarily long lifetimes become feasible by encoding information across many physical qubits. However, the lower the physical error rates, the less overhead (extra qubits and gate operations) is required to maintain a given logical accuracy. In practical terms, a single logical operation on a fault-tolerant quantum computer requires far more physical operations when the physical error is $$10^{-3}$$ than when it is $$10^{-7}$$. Higher-performing qubits will lead to lower overhead requirements for implementing error correction, as one researcher noted in the context of recent advances.

In the case of the Oxford ion-trap experiment, the single-qubit gates are so accurate ($$1.5\times10^{-7}$$ error) that other error sources now dominate. This is actually a welcome development: it means one can treat basic one-qubit flips or rotations as effectively error-free in a quantum circuit, focusing QEC efforts on the harder problems of two-qubit entangling gates, memory decoherence, and readout errors. For instance, Oxford’s data showed SPAM (state preparation and measurement) error around $$1\times10^{-3}$$ – nearly four orders of magnitude larger than the gate error. Similarly, two-qubit gates on ion traps (even the best-in-class) are still at least $$10^{-4}$$ to $$10^{-3}$$ error per gate. In a full algorithm, those higher-error operations would be the bottleneck; there’s little point worrying about $$10^{-7}$$ infidelity from single-qubit rotations if your entangling gates are three or four orders worse. Thus, the Oxford result shifts the frontier: attention can now concentrate on closing the fidelity gap for two-qubit gates and other system-level errors. The good news is that techniques learned here – such as robust microwave control, dynamical decoupling, better calibration, and error budgeting – can inform improvements in multi-qubit gates as well.
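A toy error budget illustrates the bottleneck argument. The error rates below are the ones quoted in this article; the gate counts describe a purely hypothetical small circuit and are assumptions chosen only for illustration. To first order, each class of operation contributes (number of operations) × (error per operation) to the expected number of faults.

```python
# Hypothetical circuit: (operation count, error per operation).
# Counts are illustrative assumptions; error rates are those quoted in the text.
budget = {
    "single-qubit gates": (1000, 1.5e-7),
    "two-qubit gates": (100, 5e-4),
    "SPAM": (1, 1e-3),
}

# First-order expected number of faults per operation class.
expected = {name: count * eps for name, (count, eps) in budget.items()}
total = sum(expected.values())
shares = {name: value / total for name, value in expected.items()}

for name, share in shares.items():
    print(f"{name:>20}: {expected[name]:.2e} expected faults ({share:.1%} of total)")
```

Even with ten times as many single-qubit gates as entangling gates, the single-qubit contribution in this sketch is a fraction of a percent of the total, while the two-qubit gates account for almost all of it.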

Lower physical error rates translate into exponentially lower logical error rates once error correction is applied. For example, surface-code QEC (used by Google and others) requires physical error below ~1% to start suppressing errors. If a quantum computer operates barely below that threshold, it might need thousands of physical qubits per logical qubit to achieve, say, $$10^{-15}$$ logical error probability. But if physical errors are orders of magnitude below threshold (like $$10^{-7}$$, which is 100,000× smaller than a 1% threshold), the required code size can shrink drastically. In fact, with error rates in the $$10^{-7}$$ range, even a relatively small distance code could in principle push logical errors down to the $$10^{-15}$$–$$10^{-18}$$ level (suitable for lengthy computations). This directly reduces the number of physical qubits and gate operations needed. The Oxford team highlighted that by reducing the error per gate, “the number of qubits required [for error correction] and consequently the cost and size of the quantum computer itself” are reduced. In short, ultra-high fidelity hardware can be traded for less redundancy – a smaller quantum computer can do the job of a much larger, noisier one.
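The scaling can be sketched with a widely used rule of thumb for the surface code, $$p_L \approx A\,(p/p_{th})^{\lfloor (d+1)/2 \rfloor}$$, where $$d$$ is the code distance. Everything numerical here is an assumption for illustration: the prefactor $$A = 0.03$$, the threshold $$p_{th} = 10^{-2}$$, the qubit count $$2d^2 - 1$$ of a rotated surface code patch, and above all the premise that every operation (two-qubit gates and measurement included) has the same error $$p$$, which is not yet true of any hardware, Oxford's included.

```python
def logical_error(p, d, p_th=1e-2, prefactor=0.03):
    """Heuristic logical error rate for a distance-d surface code (assumed model)."""
    return prefactor * (p / p_th) ** ((d + 1) // 2)

def min_distance(p, target, p_th=1e-2, prefactor=0.03):
    """Smallest odd code distance whose heuristic logical error meets the target."""
    d = 3
    while logical_error(p, d, p_th, prefactor) > target:
        d += 2
    return d

def physical_qubits(d):
    """Rotated surface code patch: d^2 data qubits plus d^2 - 1 measure qubits."""
    return 2 * d * d - 1

target = 1e-15
for p in (1e-3, 1e-7):
    d = min_distance(p, target)
    print(f"p = {p:.0e}: distance {d}, about {physical_qubits(d)} physical qubits per logical qubit")
```

Under these assumptions the sketch gives distance 27 (about 1,457 physical qubits per logical qubit) at $$p = 10^{-3}$$, against distance 5 (49 qubits) at $$p = 10^{-7}$$. The exact numbers depend heavily on the assumed constants, but the gap of well over an order of magnitude in overhead is the point.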

To appreciate the stakes, consider cryptographic challenges like factoring an RSA-2048 key (the basis of much of today’s security). The most-cited estimate, by Gidney and Ekerå (2021), suggested that around 20 million physical qubits (with error rates around $$10^{-3}$$) might be needed to factor a 2048-bit RSA number in about 8 hours. This staggering number comes from the overhead of error correction on “noisy” qubits. More recent circuit optimizations have lowered the theoretical logical qubit count (e.g. to ~1,730 logical qubits), but if each logical qubit still requires thousands of physical qubits, the total hardware scale remains enormous. Now imagine we had qubits as reliable as Oxford’s: error $$\sim10^{-7}$$ or better. Such qubits could likely be operated with much shorter error-correcting codes – perhaps each logical qubit might only need tens or hundreds of physical qubits instead of thousands. While a precise recalculation is complex, it is clear that fewer qubits and fewer cycles would be required. The net effect is that a cryptographically relevant quantum computer (CRQC), whose arrival is sometimes dubbed “Q-Day”, might need orders of magnitude fewer physical qubits if those qubits are of Oxford-level quality. In other words, improving fidelity can accelerate timelines by lowering the bar for the quantity of qubits needed. As a simple thought experiment: if one could magically make two-qubit gates as clean ($$\sim10^{-7}$$ error) as these single-qubit gates, the resource estimates for breaking RSA-2048 would shrink dramatically, potentially from millions of qubits down to mere hundreds of thousands or even fewer (plus a lot of runtime) – bringing such feats closer to feasibility.

Of course, we are not fully there yet. The Oxford experiment dealt with one qubit; scaling to many qubits while maintaining $$\sim10^{-7}$$-level fidelity is an open challenge. Two-qubit gates in ion traps (and other platforms) must be improved to comparable levels for the full benefits to manifest. The best reported two-qubit gates in trapped ions are now around 99.9% fidelity, achieved by Quantinuum using laser-driven logic on a commercial system, and similar ~99.9% two-qubit fidelities have been demonstrated in small-scale superconducting circuits (e.g. MIT’s 2024 two-qubit fluxonium experiment reached 99.92%). These results are encouraging – crossing the “three nines” barrier for entangling gates has long been viewed as a key threshold for practical QEC. In fact, Quantinuum’s team noted that at 99.9% two-qubit fidelity, many error-correcting codes start working effectively. Still, 0.1% error is four orders of magnitude higher than 0.00001% ($$\sim10^{-7}$$). It will require sustained innovation in gate design, noise isolation, and perhaps novel techniques (like squeezed error-modes or more complex pulse shaping) to push entangling gates toward the $$\sim10^{-7}$$ realm. Additionally, SPAM errors need reduction – high-fidelity quantum computing demands not only near-perfect gates but also high-precision qubit initialization and measurement. Techniques like better optical detection, error mitigation, and cross-entropy verification can help, but ultimately new hardware (e.g. superconducting single-photon detectors for ion readout, or advanced quantum non-demolition measurements) may be needed to bring measurement error down to the $$\sim10^{-5}$$ or $$\sim10^{-6}$$ level to complement gate fidelities.

Quality vs. Quantity: A Hardware Strategy Debate

The Oxford accomplishment spotlights a fundamental strategic question in quantum engineering: Is it more effective to improve the quality of qubits, or to simply increase the quantity and rely on error correction? This question underpins different approaches taken by leading quantum computing efforts. On one hand, groups like Google and IBM have aggressively scaled up the number of qubits on chip (into the hundreds, and aiming for thousands), accepting that physical error rates around $$10^{-3}$$ or $$10^{-4}$$ may be just below threshold, and planning to use error correction across many qubits to reach reliability. Google’s 105-qubit “Willow” processor, for instance, recently demonstrated distance-5 and distance-7 surface codes that suppressed logical errors by roughly half each time the code distance increased by two, a key proof-of-concept that their physical qubits were operating below the threshold error rate. That approach is akin to “brute-force redundancy” – you tolerate fairly noisy operations, but you have so many of them and layered correction that the computation survives.

On the other hand, the Oxford result (and similar efforts by NIST and others in the ion trap community) represents a push for ultra-low error per qubit even at small scale. This “fidelity-first” philosophy argues that scaling up a faulty qubit is a dead end – better to perfect a qubit’s performance now, such that when you do scale up, you need far fewer extras to correct errors. As Oxford’s press statement put it, “By drastically reducing the chance of error, this work significantly reduces the infrastructure required for error correction”, enabling future quantum computers to be “smaller, faster, and more efficient.” The trade-off is that pursuing extreme fidelity often requires more engineering time per qubit (fine-tuning controls, materials, etc.), and sometimes slower gates. Trapped ions, for example, have spectacular coherence but relatively slow gate speeds (microseconds to milliseconds), whereas superconducting qubits are fast (nanoseconds) but decohere quickly. Some experts believe we will need a balance: qubit counts must grow and error rates must shrink hand-in-hand. Indeed, IBM’s recent 156-qubit “Heron R2” processor emphasizes both improved qubit coherence and better gate calibrations, which brought two-qubit error rates down from ~0.5% to 0.3% on that platform. IBM is effectively trying to incrementally improve fidelity as it scales, rather than waiting for a revolutionary low-error design. Similarly, Google’s prototype error-corrected logical qubit was achieved not by improving physical fidelity drastically, but by carefully orchestrating 49 physical qubits into a distance-5 logical qubit that, for the first time, showed a lower error rate than its smaller distance-3 counterpart.

The new record from Oxford suggests that quantum hardware can reach error rates once thought “astronomical” in their smallness – reinforcing that improving physical qubit quality is a viable path. It’s worth noting that certain quantum algorithms (especially those for chemistry or materials) might run on the so-called “NISQ” devices (noisy intermediate-scale quantum) if only the qubits were a bit less noisy. In that sense, extremely high fidelity could make some quantum applications feasible without full-blown error correction. Moreover, some quantum architectures (like modular ion traps or photonic cluster states) envision networking high-fidelity modules rather than monolithically fabricating thousands of qubits. In those cases, each module must have error rates as low as possible, because the cost to error-correct within a module is high. Oxford’s use of microwaves and integrated traps is aligned with a modular, scalable vision – for instance, the spin-out Oxford Ionics is pursuing modules that communicate via photonic links but maintain superb local gate fidelities. High fidelity at the module level could reduce the number of required modules or links.

Ultimately, the “quality vs quantity” dichotomy is somewhat false – a practical quantum computer will need both many qubits and high fidelity. The real question is one of emphasis and timing. The Oxford team’s achievement moves the needle, showing that pushing for fidelity beyond the usual ~$$10^{-3}$$ regime can yield big dividends. It demonstrates that error rates are not stuck at 0.1% – with clever control (and perhaps a bit of luck in a quiet device), we can get to the 0.00001% scale. This will motivate other groups to target similar feats on two-qubit gates and multi-qubit systems. If those efforts succeed, the reliance on massive overhead (millions of qubits) might lessen, potentially accelerating the timeline to solving certain problems. As Professor Lucas noted, millions of operations across many qubits will be needed for useful algorithms, “and although error correction can fix mistakes, it comes at the cost of requiring many more qubits”. Every factor of 10 reduction in error means a significant reduction in that cost.

In summary, Oxford’s record-breaking $$10^{-7}$$ error rate is a milestone for quantum computing that resonates well beyond a single-qubit demo. It provides a proof-point that fault-tolerance at scale is attainable if we relentlessly improve physical qubit performance. It also sets a new benchmark for all hardware platforms to aspire to. The challenge ahead will be bringing all components of the quantum computing stack to comparable levels of reliability. With single-qubit gates approaching perfection, the spotlight will increasingly turn to two-qubit gates, measurement, and crosstalk errors. But for now, the community has clear cause to celebrate: a new global benchmark in qubit control that takes us one step closer to viable quantum machines.
