Unpacking the “Modular Quantum Factoring” Hype: Why RSA Isn’t Dead Yet

Table of Contents

Introduction: Concern Over a Quantum “Breakthrough”

I’ve been inundated with messages asking about a recent paper titled “A Modular, Adaptive, and Scalable Quantum Factoring Algorithm.” On social media and even some press articles, this paper has been touted as a breakthrough – with claims that it dramatically reduces qubit requirements and possibly signals an imminent threat to RSA encryption. Understandably, CISOs in my network are alarmed, wondering if Q-Day (the day a quantum computer breaks RSA) just got pulled closer.

I want to set the record straight.

What the Paper Proposes: Shallow, Modular Phase Estimation

At its core, Shukla and Vedula’s paper tackles one part of Shor’s famous quantum factoring algorithm: the quantum phase estimation (QPE) routine (often implemented via a Quantum Fourier Transform) used to find the period of a function as the key step in factoring.

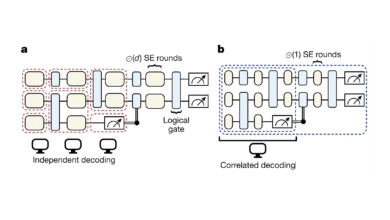

In standard Shor’s algorithm, phase estimation requires a large phase register (on the order of twice the number of bits of the integer you want to factor) and a deep, coherent quantum circuit – something far beyond today’s Noisy Intermediate-Scale Quantum (NISQ) hardware. The new paper’s idea is to break this phase estimation into smaller, shallow modules (or “windows”) that use only a few qubits at a time and can be run sequentially, with classical post-processing to combine the results. In other words, instead of one big monolithic circuit, they propose many bite-sized circuits.

How it works: Each module is a small QPE circuit operating on, say, 3 or 4 qubits (the paper claims as few as three or four phase qubits suffice for their examples). These circuits handle just a segment of the phase information. After running one module and measuring it, you move on to the next module. By doing this in a “windowed” fashion, the algorithm drastically reduces the number of qubits needed at one time for the phase estimation part.

Importantly, however, the authors acknowledge that the number of qubits in the work register (the part of the quantum computer that performs the modular exponentiation on the number to be factored) is not reduced by this method. In their own words, the approach “allows for a reduction in the size of the phase (or counting) register … down to a small, fixed block size of only a few qubits … while leaving the work register requirement unchanged”. This means the heavy lifting – all the qubits and gates needed to implement the modular arithmetic for large numbers – remains just as costly as in regular Shor’s algorithm.

The novel twist is how the results of these small quantum blocks are stitched together classically. The paper introduces an “overlap mechanism” between blocks: essentially, the blocks are designed to have overlapping bits of the phase so that consecutive modules share some information. You can imagine each module yielding a fragment of the phase (like a few bits of the binary expansion of the number’s period), and the next module’s fragment overlaps with the previous fragment. This overlap provides redundancy – a form of error-checking and alignment to make sure that the fragments correspond to a consistent solution. If two adjacent fragments agree on the overlapping section, chances are they belong to the same “phase band” (the same underlying period); if they don’t agree, the classical algorithm knows that combination of fragments was inconsistent and can discard it. The authors liken this to matching color bands: if each possible eigenphase in the quantum algorithm is a distinct color, each block reveals a short segment of a color band. The overlap lets you ensure you’re stitching together segments of the same color, rather than ending up with a mismatched multi-color result.

Another clever aspect is the use of a phase offset (or “exponent offset”) for each successive window. Instead of always starting each QPE module in the exact same state, they increment the exponent used in the modular exponentiation for each block – effectively targeting different bits of the phase in each window. This is somewhat like choosing a non-standard initial state or basis for the later modules, rather than the naive approach of always starting from scratch. By doing so, they home in on different portions of the phase bit string. Combined with a “carry-aware” stitching algorithm, this helps align the outputs of each window in the right positions when reconstructing the full phase. The overlap provides error redundancy: if a block produced a spurious result due to noise, it likely won’t consistently overlap with its neighbors, so the classical post-processing can detect and eliminate such outliers.

In summary, the paper claims an approach that can perform Shor’s algorithm’s phase estimation with far fewer qubits in the quantum circuit at any given time. The trade-off is that you need to run more circuits sequentially and do some smart classical post-processing to combine the pieces. It’s a bit like breaking a long calculation into chunks that only require a small calculator each time, then piecing together the answer.

The authors present numerical simulations (for factoring small numbers like 221, which has a known period 16 in their example) to show that the method can indeed recover the correct result using overlapping windows of 3-4 qubits. It sounds great – if it could scale up as advertised.

What’s Novel and Clever Here?

To give credit where it’s due, several elements of this proposal are innovative:

Modular “shallow” circuits

By limiting quantum operations to small blocks, the method is more NISQ-friendly. Shallow circuits suffer less from decoherence, and needing only ~3 qubits for the QFT/phase-estimation part (instead of potentially thousands) is an appealing idea.

This could, in principle, make it easier to factor very small numbers on real hardware, or to incorporate parallelism (multiple small quantum processors working on different blocks in parallel).

Overlap mechanism

The idea of overlapping the blocks (sharing a few qubits or a few bits of the phase between sequential runs) is quite clever. This overlap creates a redundancy that can be used to “tie” the independent quantum runs together, almost like having a jigsaw puzzle where each piece shares a bit of picture with the next piece so you know how they connect.

In theory, this maintains a vestige of coherence across the entire algorithm through classical means. The authors describe how this overlap enables a “robust reconstruction of phase information” despite each block being measured separately.

Error redundancy and classical stitching

By having overlapping segments and multiple candidate outputs from each block, the classical post-processing can cross-verify and eliminate inconsistent results. This redundancy could make the algorithm more tolerant to noise in any single run – a desirable feature for NISQ-era machines.

Essentially, if one block’s outcome was wrong, it likely won’t line up correctly in the stitching process, so the correct global solution can still be identified from the sea of candidates. The paper reports using a “carry-aware stitching” algorithm that checks overlaps and discards mismatched combinations, which is a novel approach to assemble the phase bits.

Non-standard initial states (Exponent offsets)

Unlike the traditional Shor’s algorithm, where each phase estimation uses the same starting state (a uniform superposition over all possible exponents), the authors introduce an exponent offset parameter for each block. In plainer terms, each block is not looking at the exact same aspect of the problem – later blocks start with the quantum state already “rotated” to focus on a different window of phase bits.

This adaptive targeting of different segments of the phase is an interesting twist, and it builds on the authors’ earlier work on “adaptive and windowed phase-estimation” techniques. Essentially, they prepare each subsequent QPE module in a tailored way rather than the standard approach, which is indeed a novel idea in the context of factoring algorithms.

These ideas show creative thinking and may well find use as subroutines or optimizations in future quantum algorithms (for phase estimation or amplitude estimation in other contexts). However, the big question that industry experts immediately asked was: Does this actually scale up for large, cryptographically relevant integers? Or are these advantages only apparent for small toy problems?

Expert Criticisms from the SciRate Community

Within days of the paper’s release, a spirited discussion unfolded on SciRate (a site for discussing arXiv preprints). Notably, Craig Gidney (a quantum computing researcher at Google, known for his work on quantum algorithms and error correction) and Martin Ekerå (a researcher in post-quantum cryptanalysis) raised serious objections. Here I’ll summarize their key critiques (which I share), along with direct comments from the SciRate thread:

Breaking Global Coherence Loses the Signal

Shor’s algorithm works by creating a delicate quantum interference pattern that makes the correct period stand out from an exponentially large space of possibilities. Gidney argued that by chopping the phase estimation into independent pieces (and thus measuring and discarding the quantum state after each block), you risk destroying the very interference that carries the information. In his words: “Shor’s algorithm produces states that have exponentially huge numbers of likely outputs. Small subsections of the output will be exponentially close to uniformly random. So sampling small parts of the output is equivalent to sampling a uniformly random number… If you throw out the quantum state like they’re suggesting, all you’re left with is a random number.”

In essence, each small block by itself just yields what looks like random noise – unless there is a mechanism to maintain coherence across blocks. The only reason techniques like qubit-recycling QFT work in other contexts is that they do preserve a global quantum state throughout the process. In this proposal, after each module the state is measured (collapsed), so the worry is that nothing useful carries forward. Gidney’s immediate take was blunt: “I think it’s wrong,” he said of the paper’s core claim.

Exponential Number of Eigenstates (“Colors”) Problem

In the factoring problem, the quantum state isn’t a single eigenstate but rather a superposition of many outcomes. Gidney and others pointed out that the authors’ method, as initially described, only clearly works if the quantum state fed into phase estimation is a single eigenstate of the unitary operation (i.e. in a trivial case where the answer is sort of already known). In Shor’s algorithm you have a superposition of many different states (often on the order of $$2^n$$ possible values, where n is number of bits). One SciRate commenter illustrated this using a “colors” analogy: each distinct eigenphase corresponds to a different color band, and with many colors present, each small window might pick up a fragment of any of them. Gidney pressed the question: what happens when there are $$2^{2048}$$ possible eigenstates (for a 2048-bit RSA number)? He noted that with so many “colors,” “you’ll de-facto never see the same color twice” in separate runs. That means the overlap mechanism might not ever get a chance to latch onto a consistent color across blocks, because every run yields a fragment of a basically unique state. The worry is that the stitching method could break down completely at large scale.

In the thread, after some debate, even the supportive commenters conceded that the method had only been explicitly shown for at most a superposition of two eigenstates (in the authors’ prior work on amplitude estimation). Extending it convincingly to a superposition of extremely many states remains an open question.

In summary, it’s unclear whether the overlap-and-stitch trick can recover useful information when faced with the full complexity of Shor’s algorithm, where many eigenphases contribute.

Need for Large Block Size (No Free Lunch on Qubits)

Martin Ekerå’s critique honed in on a theoretical requirement: to get any information out of an order-finding procedure, the resolution of your phase estimation window must be fine enough to distinguish the actual period. He argued that if the order (period) is $$r$$, then each independent run needs a phase register of size > $$\log_2 r$$ qubits to capture enough information. If you arbitrarily restrict each block to a tiny size (like 3 or 4 qubits) regardless of how large r is, you are essentially running a quantum circuit that can’t possibly resolve the period – and the outcome of that run will be uniform random noise (no information). Ekerå backed this up with simulation examples from the paper’s own data. For instance, the authors factored $$N=221$$ (with period $$r=16$$) using four 3-qubit windows. Ekerå notes that 16 is a small power of two, which is a special easy case. He re-simulated the authors’ circuits and observed that whenever the block size was smaller than $$\log_2 r$$, the measurement distributions were uniform (no peaks) – except in special cases where the period happened to align nicely with a power of two. For a less special case like $$N=161$$ (order $$r=66$$, which is not a power of two), running the same small-window approach yielded uniform distributions in every window – essentially a failure to get any signal.

His conclusion: unless you allow the window size to grow with the problem (roughly proportional to $$\log_2 r$$), the approach doesn’t scale. The authors had suggested $$m_{\max}$$ (max window size) could be as low as 3 or 4 even for large problems, which Ekerå flatly calls implausible for general cases. This undercuts the headline claim that only a constant number of qubits are needed for phase estimation regardless of the number size – in truth, that appears to hold only for very small or special orders.

Efficiency of “Stitching” and Lack of Proof

Another objection is the computational cost of the classical stitching process. If each small block yields many candidate bits (many “top candidates” for that window of phase), by the end you could have an exponentially large list of possibilities to stitch together. Ekerå expressed skepticism that the stitching can be efficient at scale, “since there are exponentially many frequencies” to consider in general. The paper did not provide a rigorous complexity analysis showing that their classical post-processing won’t blow up exponentially (it’s presumably heuristic, aided by the overlap constraints).

Additionally, experts pointed out that the authors did not supply a formal proof that the method succeeds with high probability in the general case – they provided simulations for small examples and intuitive arguments. Given how tricky quantum algorithms can be, the community is rightly cautious: without a proof (or at least much larger experimental evidence), it’s hard to believe this method will scale to RSA-sized numbers.

As Gidney noted, the authors’ theoretical proof seemed to cover only the trivial scenario of starting with an eigenstate, not the full superposition. So there is a gap in the argument when it comes to scalability and correctness for large n.

Context of Prior Work

Finally, it’s worth noting that the idea of reducing qubit counts for phase estimation is not entirely new. Techniques like qubit recycling, iterative phase estimation, and various optimized order-finding routines have been studied for decades.

Ekerå observed that the authors did not cite some of the seminal prior research on windowed phase estimation and recycling in Shor’s algorithm. In fact, previous methods have already brought down the qubit count for phase estimation to roughly n (from 2n) in some cases by trading more runs or assuming some classical help. The new paper’s approach is “complementary” in the sense that it focuses purely on the phase estimation layer (and the authors themselves acknowledge that their method could be combined with other optimizations for the arithmetic layer). But experts remain unconvinced that this new angle overcomes the known theoretical barriers.

To sum up the community feedback: interesting idea, but heavy skepticism about its practical impact. As one commenter put it, the proposal might be a “small ingredient” in future improvements, but on its own it doesn’t change the fact that factoring large numbers on quantum hardware is enormously demanding. After extensive back-and-forth on SciRate, the consensus among experts is that RSA is not in immediate danger from this approach.

No Imminent Threat to RSA from This Paper

After reviewing the paper and the critiques, my opinion is firmly aligned with the skeptics. There is no quantum revolution or imminent RSA-breaking technique to worry about in this work. The method is clever in parts and might inspire further research (for example, on hybrid quantum-classical algorithms or error mitigation strategies), but it does not overcome the fundamental scaling challenges of Shor’s algorithm.

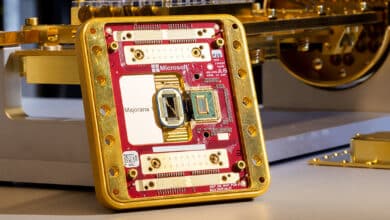

Even if we assume the phase estimation could be done in smaller pieces, the fact remains that to factor, say, a 2048-bit RSA number, you would still need to implement huge modular exponentiation operations on a quantum computer. That requires thousands of logical qubits and error-corrected gates for the work register – a mountain that this paper doesn’t attempt to move.

In other words, the bottleneck for breaking RSA is not just the phase estimation; it’s the entire circuit depth and qubit count needed for the modular math. This proposal leaves that part “unchanged”. So the overall order-of-magnitude resources (qubits * time) are still far beyond current technology.

Additionally, nothing in the paper shortcuts the need for quantum error correction when scaling to large problems. Reducing the phase register might save a linear factor of qubits, but if you still need millions of physical qubits for error correction of the work register, the timeline for a cryptographically relevant quantum computer remains essentially the same.

For CISOs and security professionals, the takeaway is: don’t believe the hype without verification. This paper does not signal an early arrival of Q-Day. We should of course continue to monitor genuine advances in quantum algorithms and invest in post-quantum cryptography migration in a sensible timeframe. But this particular result, despite some misleading social media chatter, isn’t a game-changer.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.