Zuchongzhi 2.0: China’s Superconducting Quantum Leap

Hefei, China (June 2021) – A team of Chinese physicists has unveiled Zuchongzhi 2.0, a 66-qubit superconducting quantum computing prototype. Announced by the CAS Center for Excellence in Quantum Information, the machine builds on its predecessor (Zuchongzhi 1.0) with more qubits and higher fidelity, achieving the milestone known as quantum computational advantage (or “quantum supremacy”) in a programmable device. In a benchmark test, Zuchongzhi 2.0 solved a problem in just over an hour that researchers estimate would take the world’s fastest supercomputer at least eight years to crack. That speedup, on the order of tens of thousands of times faster than classical computing for this task, places Zuchongzhi 2.0 at the forefront of the quantum computing race. It also makes China the first nation to reach quantum advantage on two technological platforms (photonic and superconducting), underlining the country’s rapid strides in this high-stakes field.

From Zuchongzhi 1.0 to 2.0: Scaling Up a Quantum Processor

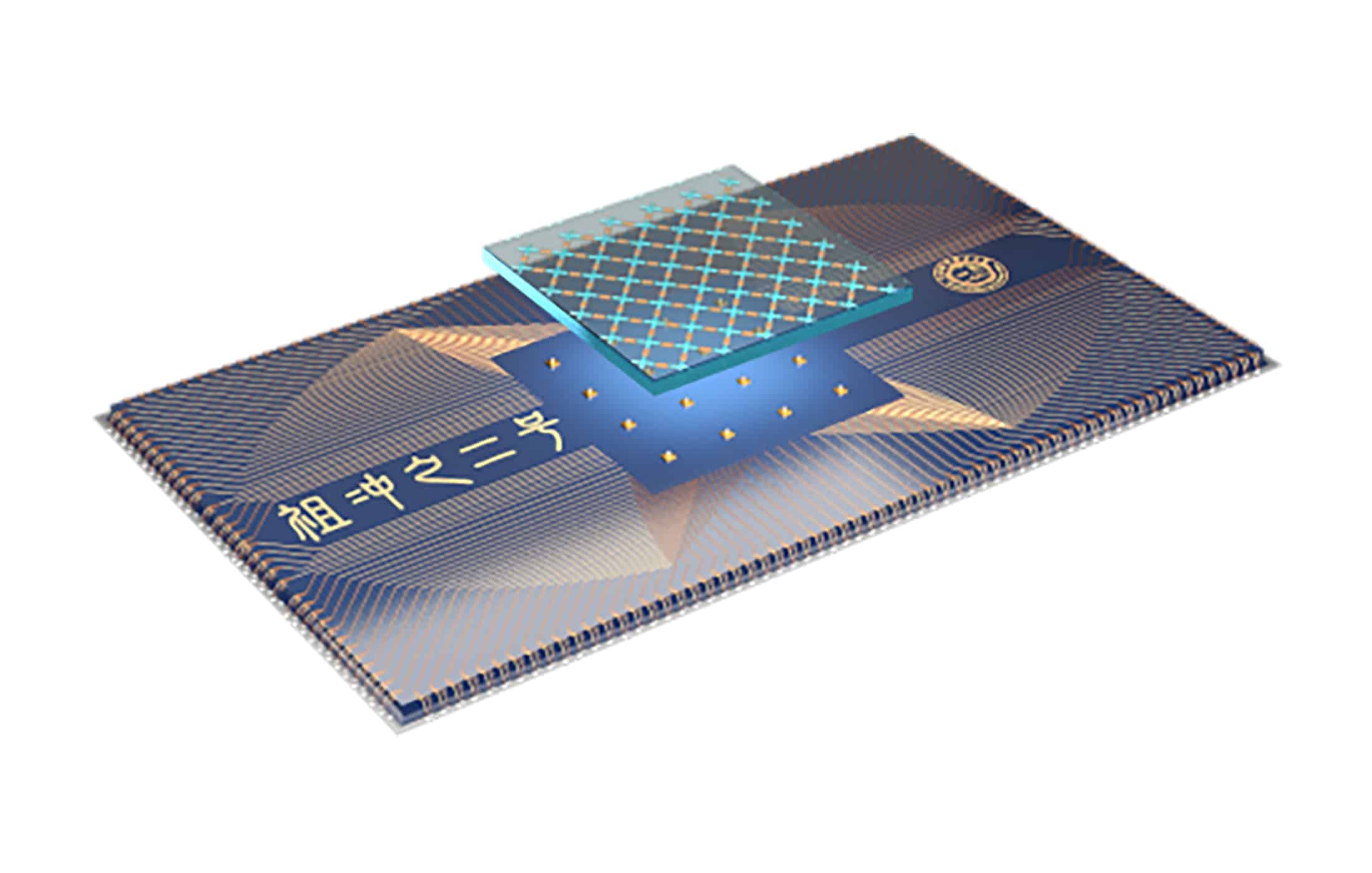

Zuchongzhi 2.0 is the second-generation superconducting quantum processor developed by a team led by Pan Jianwei at the University of Science and Technology of China (USTC) and the Chinese Academy of Sciences. It is named after Zu Chongzhi, a 5th-century Chinese mathematician famed for calculating pi with record-breaking precision. The first version (Zuchongzhi 1.0), introduced in early 2021, featured 62 qubits and demonstrated advanced quantum control (such as two-dimensional quantum walks) but stopped short of outperforming classical computers. Zuchongzhi 2.0, by contrast, ups the ante to 66 functional qubits arranged in a two-dimensional lattice, with a network of 110 tunable couplers linking them. This expanded, programmable architecture allowed the researchers to attempt a much more complex computation than ever before on a Chinese superconducting device.
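The qubit and coupler counts quoted above can be cross-checked with a small lattice sketch. The code below assumes a staggered layout of 11 rows by 6 columns in which each qubit couples to up to four diagonal neighbors; that geometry is an assumption for illustration and is not stated in this article, and the function name is hypothetical. Under that assumption the count of nearest-neighbor links comes out to 110.

```python
from collections import Counter

# Assumed geometry (not stated in the article): 11 rows x 6 columns, staggered.
ROWS, COLS = 11, 6


def staggered_lattice_couplers(rows: int, cols: int) -> list[tuple[int, int]]:
    """Return nearest-neighbor qubit pairs for a staggered 2D lattice.

    Qubit index = row * cols + col. Each qubit links to the qubit in the same
    column of the next row and to one diagonal neighbor in the next row; the
    diagonal side alternates with row parity.
    """
    edges = []
    for r in range(rows - 1):
        for c in range(cols):
            q = r * cols + c
            edges.append((q, (r + 1) * cols + c))
            # Diagonal partner: shift right on even rows, left on odd rows.
            dc = c + 1 if r % 2 == 0 else c - 1
            if 0 <= dc < cols:
                edges.append((q, (r + 1) * cols + dc))
    return edges


couplers = staggered_lattice_couplers(ROWS, COLS)

# Count how many couplers touch each qubit.
degree = Counter()
for a, b in couplers:
    degree[a] += 1
    degree[b] += 1

print("qubits:", ROWS * COLS)
print("couplers:", len(couplers))
print("max couplers per qubit:", max(degree.values()))
```

A plain rectangular grid of the same size would have 115 links instead, so the staggered arrangement is the one consistent with the 110 couplers reported.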

Under the hood, Zuchongzhi 2.0’s design emphasizes both scale and precision. Each of its 66 qubits is a transmon (a superconducting circuit element) that can be entangled with its neighbors via adjustable couplers, effectively enabling or disabling interactions as needed. The team implemented a flip-chip layering technique: one chip contains the qubit array and couplers, while a second chip carries the control wiring, bonded face-to-face. This 3D integration reduces interference and crowding from control lines, a crucial engineering advance for scaling up qubit counts. The researchers also improved qubit coherence and readout fidelity – reporting an average readout accuracy of about 97.8% in the upgraded “Zuchongzhi 2.1” system – ensuring that the larger processor could still operate with high precision. These refinements gave Zuchongzhi 2.0 the stability and controllability needed to tackle a computational challenge beyond the reach of classical supercomputers.

Demonstrating Quantum Advantage on a 66-Qubit Chip

The flagship achievement of Zuchongzhi 2.0 was a demonstration of quantum computational advantage using a randomized computing task. The team ran a random quantum circuit sampling experiment – essentially instructing the 66-qubit processor to apply a series of random operations (gates) on a subset of qubits and sampling the output probabilities. In practice, they programmed a circuit involving 56 of the qubits, with a depth of 20 cycles of operations. This type of task is designed to be easy for a quantum computer but extraordinarily hard to simulate on a classical machine, because the quantum circuit generates a complex, essentially random output distribution across an immense state space.
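To make the idea concrete, here is a toy random circuit sampler in plain Python. It is only a sketch under stated assumptions: it uses 5 qubits on a line, random single-qubit rotations, and CZ gates as a stand-in two-qubit gate, none of which is claimed to match the gate set or layout of the actual experiment. The helper `state_vector_bytes` (a hypothetical name) also shows why brute-force simulation gets out of hand: a full 56-qubit state vector at 16 bytes per amplitude needs 2^60 bytes.

```python
import cmath
import math
import random


def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector: 2**n complex amplitudes."""
    return (2 ** n_qubits) * bytes_per_amplitude


def random_1q_gate(rng: random.Random):
    """Random single-qubit unitary in the U(theta, phi, lam) form."""
    theta = rng.uniform(0.0, math.pi)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    lam = rng.uniform(0.0, 2.0 * math.pi)
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -cmath.exp(1j * lam) * s],
            [cmath.exp(1j * phi) * s, cmath.exp(1j * (phi + lam)) * c]]


def apply_1q(state, qubit, u):
    """Apply a 2x2 unitary to one qubit of the state vector, in place."""
    bit = 1 << qubit
    for i in range(len(state)):
        if not i & bit:
            a, b = state[i], state[i | bit]
            state[i] = u[0][0] * a + u[0][1] * b
            state[i | bit] = u[1][0] * a + u[1][1] * b


def apply_cz(state, q1, q2):
    """Apply a controlled-Z: flip the sign where both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    for i in range(len(state)):
        if i & mask == mask:
            state[i] = -state[i]


def sample_random_circuit(n_qubits, cycles, shots, seed=0):
    """Run a toy random circuit and sample bitstrings from its output."""
    rng = random.Random(seed)
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j  # start in |00...0>
    for cycle in range(cycles):
        for q in range(n_qubits):
            apply_1q(state, q, random_1q_gate(rng))
        # Alternate which neighboring pairs interact from cycle to cycle.
        for q in range(cycle % 2, n_qubits - 1, 2):
            apply_cz(state, q, q + 1)
    probs = [abs(a) ** 2 for a in state]
    outcomes = rng.choices(range(len(state)), weights=probs, k=shots)
    return [format(i, f"0{n_qubits}b") for i in outcomes], probs


bitstrings, probs = sample_random_circuit(n_qubits=5, cycles=8, shots=10)
print(bitstrings)
print(f"56-qubit state vector: {state_vector_bytes(56) / 2**60:.0f} EiB")
```

The quantum processor produces such bitstrings directly by running the circuit and measuring. A classical simulator has to track, or cleverly avoid tracking, an exponentially large set of amplitudes. Practical classical attacks use tensor-network methods rather than a full state vector, so the memory figure is only an intuition for the scale involved.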

The results decisively favored the quantum approach. Zuchongzhi 2.0 completed the 56-qubit, 20-cycle random circuit sampling in about 1.2 hours, generating a set of measured bitstrings that sample the target distribution. By contrast, the researchers estimated that simulating the same process on TaihuLight (one of the world’s most powerful supercomputers) would require at least 8 years of number-crunching. In terms of computational complexity, this experiment was 2–3 orders of magnitude more demanding than the landmark 2019 demonstration on Google’s 53-qubit Sycamore processor. “Our work establishes an unambiguous quantum computational advantage…infeasible for classical computation in a reasonable amount of time,” the team wrote, heralding the result.
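The implied speedup follows directly from the two runtimes quoted above. The short calculation below uses only those figures (about 1.2 hours versus at least 8 years). Because the classical estimate is a lower bound, the resulting ratio is a lower bound too. The variable names are illustrative.

```python
import math

HOURS_PER_YEAR = 365.25 * 24

quantum_hours = 1.2   # runtime reported for the quantum processor
classical_years = 8   # lower-bound estimate for the classical supercomputer

classical_hours = classical_years * HOURS_PER_YEAR
speedup = classical_hours / quantum_hours

print(f"classical estimate: {classical_hours:,.0f} hours")
print(f"speedup (lower bound): {speedup:,.0f}x")
print(f"orders of magnitude: {math.log10(speedup):.1f}")
```

On these numbers the wall-clock advantage is in the tens of thousands, which is between four and five orders of magnitude.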

How It Stacks Up Against Google, Jiuzhang, and IBM

- Google’s Sycamore (2019): Google was first to claim quantum supremacy, using a 53-qubit superconducting chip to perform a random circuit sampling task in 200 seconds. They initially estimated the task would take 10,000 years on a classical supercomputer, though subsequent algorithmic improvements showed it could be done in days on large clusters. Zuchongzhi 2.0’s sampling task was significantly harder: USTC researchers pegged it at $10^2$ to $10^3$ times more computationally complex than Sycamore’s experiment. In other words, Zuchongzhi’s 56-qubit circuit generated a distribution even more daunting for classical algorithms to simulate, extending the quantum advantage gap. While Google’s feat relied on one narrow benchmark, the Chinese team’s success reinforced that the quantum speedup holds even as problem size grows – a critical validation amid debates spurred by IBM and others about classical simulation tricks.

- USTC’s Jiuzhang 1.0 (2020): Before Zuchongzhi, Pan Jianwei’s team achieved quantum advantage with a very different technology: photonics. Their Jiuzhang 1.0 optical quantum computer used laser-generated photons and a complex interferometer to do Gaussian boson sampling, detecting 76 photons and obtaining results ~$10^{14}$ times faster than a classical supercomputer could. This was a specialized but pivotal experiment, showcasing quantum primacy in a photonic system. In 2021, alongside Zuchongzhi 2.0, the team also unveiled Jiuzhang 2.0 with 113 detected photons, pushing the photonic advantage to an astonishing $10^{24}$-fold speedup over classical computation. Zuchongzhi 2.0’s accomplishment is analogous – a different physical platform (superconducting circuits) tackling a different hard sampling problem – but equally significant. Taken together, Jiuzhang and Zuchongzhi made China the first country to demonstrate quantum advantage in both of the two leading paradigms for quantum computing.

- Zuchongzhi 1.0 (early 2021): The immediate precursor to Zuchongzhi 2.0 was a 62-qubit superconducting prototype simply called “Zu Chongzhi.” Debuted in May 2021, it implemented a smaller-scale programmable quantum walk and other tests. However, it didn’t quite surpass classical capabilities; think of it as a stepping stone that proved the hardware design. Researchers learned from Zuchongzhi 1.0’s performance and quickly iterated. The jump to Zuchongzhi 2.0 (sometimes dubbed “2.1” after final refinements) brought four more qubits into play and significantly improved fidelity (for example, readout accuracy rose to nearly 98% from a lower figure in the earlier version). This upgrade was key to successfully running the large 56-qubit sampling circuits without excessive error buildup. As Prof. Lu Chaoyang of USTC noted, Zuchongzhi 2.1 achieved quantum advantage “for the first time,” whereas the earlier 62-qubit device had not.

- IBM’s Superconducting Systems (pre-2021): IBM, another leader in quantum computing, had been steadily advancing its superconducting qubit processors but with a different focus. By 2020 IBM had a 65-qubit chip (codenamed Hummingbird) available on its cloud, and in 2021 it announced a breakthrough 127-qubit processor called Eagle. Unlike Google and USTC, IBM did not attempt a “quantum supremacy” sampling experiment with these devices. Instead, IBM emphasized quantum volume (a holistic performance metric) and improving error rates. In fact, only a fraction of IBM’s 65 qubits could be used reliably at once in 2020 tests due to fidelity limitations. The company was pursuing a longer-term road map: the Eagle chip introduced a new multi-layer wiring approach (separating control and qubit layers, similar to Zuchongzhi’s strategy) to maintain stability as qubit counts grow. IBM claimed Eagle was the first circuit impossible to simulate classically, but did not publish a specific benchmark like random circuit sampling to quantify this. In short, by late 2021 IBM had the largest qubit count on a single chip, whereas Zuchongzhi 2.0 held the record for actually demonstrating a computational task beyond classical reach. Both approaches underscored different aspects of progress in the field – IBM targeting future fault-tolerance, and USTC seizing a near-term computational milestone.

Technical Breakthroughs and Why They Matter

The success of Zuchongzhi 2.0 rested on several technical advances that carry significance beyond this one experiment. First, the tunable coupler architecture is a notable innovation in superconducting qubit design. By inserting extra qubit-like circuits between data qubits, engineers can modulate the interaction strength with fast electronic pulses. This means qubits can be strongly coupled when performing two-qubit logic gates, then effectively decoupled (isolated) during other operations to reduce cross-talk. Tunable couplers helped Zuchongzhi 2.0 achieve high-fidelity operations across a large 66-qubit array – a challenging balance of connectivity versus noise. The approach is “compatible with surface-code error correction” the team noted, meaning the layout could, in the future, host error-correcting protocols by arranging qubits in a 2D grid with nearest-neighbor links. In the broader context, such an architecture is paving the way toward scalable quantum computers where thousands or millions of qubits might be controlled in a modular, switchable network.

Another key achievement was maintaining coherence and control precision at scale. Quantum advantage demonstrations are extremely sensitive to errors; each additional qubit and each layer of gates increases the difficulty of staying ahead of classical simulation (since too many errors would make the quantum output easy to mimic by noise). The Zuchongzhi 2.0 team implemented careful calibrations and error mitigation techniques to maximize fidelity. They reported that the “whole system behaved as predicted when the system size grows from small to large,” indicating minimal introduction of new error modes as they ramped up to 56 qubits. In practice, single-qubit gate fidelities on Zuchongzhi were on the order of 99.9%, and two-qubit gates around 99.5% (comparable to Google’s Sycamore), with improved readout reducing measurement mistakes. These incremental improvements matter greatly: because errors compound across hundreds of gates, even a few tenths of a percentage point in gate fidelity can be the difference between a successful quantum advantage experiment and one that fizzles due to noise. The technical lessons from Zuchongzhi 2.0 thus inform how researchers might reach even larger problem sizes or run early quantum algorithms: the device demonstrated that one can scale up the number of qubits and circuit depth in tandem, provided the error rate per operation is pushed low enough.
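A rough way to see why small fidelity differences matter is the simple multiplicative error model sketched below, in which the overall circuit fidelity is approximated as the product of every gate and readout fidelity. The fidelity values are the approximate figures quoted in this article. The gate counts are illustrative assumptions (one single-qubit gate per qubit per cycle, and a hypothetical 500 two-qubit gates), not numbers taken from the experiment, and the function name is likewise hypothetical.

```python
def estimated_circuit_fidelity(n_1q, n_2q, n_readout,
                               f_1q=0.999, f_2q=0.995, f_readout=0.978):
    """Approximate circuit fidelity as a product of per-operation fidelities.

    Assumes errors are independent, so fidelities simply multiply.
    """
    return (f_1q ** n_1q) * (f_2q ** n_2q) * (f_readout ** n_readout)


N_QUBITS = 56
N_CYCLES = 20
N_1Q = N_QUBITS * N_CYCLES  # assumption: one single-qubit gate per qubit per cycle
N_2Q = 500                  # hypothetical two-qubit gate count, for illustration only

baseline = estimated_circuit_fidelity(N_1Q, N_2Q, N_QUBITS)
# Same circuit, but with two-qubit gates half a percentage point worse.
degraded = estimated_circuit_fidelity(N_1Q, N_2Q, N_QUBITS, f_2q=0.990)

print(f"estimated fidelity at 99.5% two-qubit gates: {baseline:.4%}")
print(f"estimated fidelity at 99.0% two-qubit gates: {degraded:.4%}")
print(f"ratio: {baseline / degraded:.1f}x")
```

Under these assumptions, the half-point drop in two-qubit fidelity cuts the estimated circuit fidelity by roughly an order of magnitude. The experiment then needs correspondingly more samples to distinguish its output from noise.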

Finally, Zuchongzhi 2.0’s accomplishment has experimental validation value. It showed that two independent platforms (superconducting circuits and photonic systems) can both hit the quantum advantage regime, each with very different physical mechanisms. This cross-platform success makes it harder for skeptics to argue that quantum advantage was a one-off quirk or mere theoretical proposal. In the words of a Physics review, the USTC results “push experimental quantum computing to far larger problem sizes, making it much harder to find classical algorithms and computers that can keep up.” Such validation builds confidence that we are indeed at the onset of the “quantum computational supremacy” era, and not simply missing a classical shortcut that undercuts the quantum gains. It also points the way forward: to stay ahead, quantum hardware will need to continue growing in scale and quality, which is exactly the path being pursued.

Broader Impact and What’s Next

Zuchongzhi 2.0’s breakthrough, while primarily a scientific milestone, carries wider implications for the tech industry and cybersecurity – though with important caveats. In the near term, this 66-qubit machine will not be breaking RSA encryption or solving practical business problems; the random circuit sampling task it excelled at is more of a proof-of-concept than a useful application. However, the demonstration is a strong proof of feasibility. It confirms that quantum computers can indeed vastly outperform classical supercomputers on certain well-defined problems. This adds urgency to global R&D investments in quantum technology. Competing nations and companies are keenly aware that leadership in quantum computing could eventually translate to advantages in materials science, drug discovery, optimization, and yes, cryptography. For example, Chinese research institutions have poured resources into both superconducting qubits (like Zuchongzhi) and photonic systems (Jiuzhang), while U.S. tech giants (Google, IBM, Intel) and a growing cadre of startups are developing their own quantum chips. The quantum race is thus as much collaborative as it is competitive – each new milestone spurs others to up the ante.

In terms of cybersecurity, quantum advantage is a distant early warning, not an immediate threat. The algorithms run on Zuchongzhi 2.0 are not the type that break codes; they are essentially artificial math problems crafted to showcase raw computing power. Breaking modern encryption (e.g. via Shor’s algorithm for factoring) would require a general-purpose quantum computer with thousands of error-corrected qubits, far beyond the noisy 66-qubit NISQ-era machine demonstrated here. Experts project it will take many more years of research to reach that stage. Nonetheless, the trajectory set by devices like Zuchongzhi 2.0 underscores why the cybersecurity world is paying attention. Governments and companies are already developing post-quantum cryptography in anticipation of future quantum code-breaking, precisely because the hardware is improving steadily. Each quantum advantage experiment, be it Google’s or USTC’s, signals that the theoretical power of quantum computing is being realized step by step.

Meanwhile, the practical applications of Zuchongzhi-class machines are starting to be explored. The USTC team suggests that processors with tens of high-quality qubits could be used as research tools – for example, to simulate quantum many-body physics or to run primitive algorithms in quantum chemistry and machine learning that exceed what classical heuristics can do. These are early forays into useful quantum computing, beyond the flashy “stunt” computations for supremacy. Zuchongzhi 2.0, having shown it can maintain quantum coherence over a relatively large circuit, provides a platform to test such near-term applications. As Zhu Xiaobo (one of the lead researchers) put it, after reaching quantum advantage, their work is entering a “second stage” focused on fault-tolerant quantum computing and near-term applications such as quantum machine learning and quantum chemistry. In other words, the journey continues from simply proving quantum computers can outpace classical ones, to making quantum computers that are broadly useful.