Harvard & MIT’s Continuous 3,000-Qubit Breakthrough: A New Era of Quantum Operation


16 Sep 2025 – Harvard and MIT researchers have unveiled a quantum computing system with more than 3,000 qubits that can operate continuously for hours without a restart. Described in a new Nature paper, "Continuous operation of a coherent 3,000-qubit system," the demonstration is the first time a quantum machine of this scale has run steadily rather than in short bursts. Using neutral atoms as qubits, the team kept the array of 3,000+ qubits alive and coherent for over two hours (in principle it could run indefinitely) by rapidly replacing any atoms lost from the trap. Over the 2-hour run, more than 50 million atoms were cycled through to replenish the qubit array.

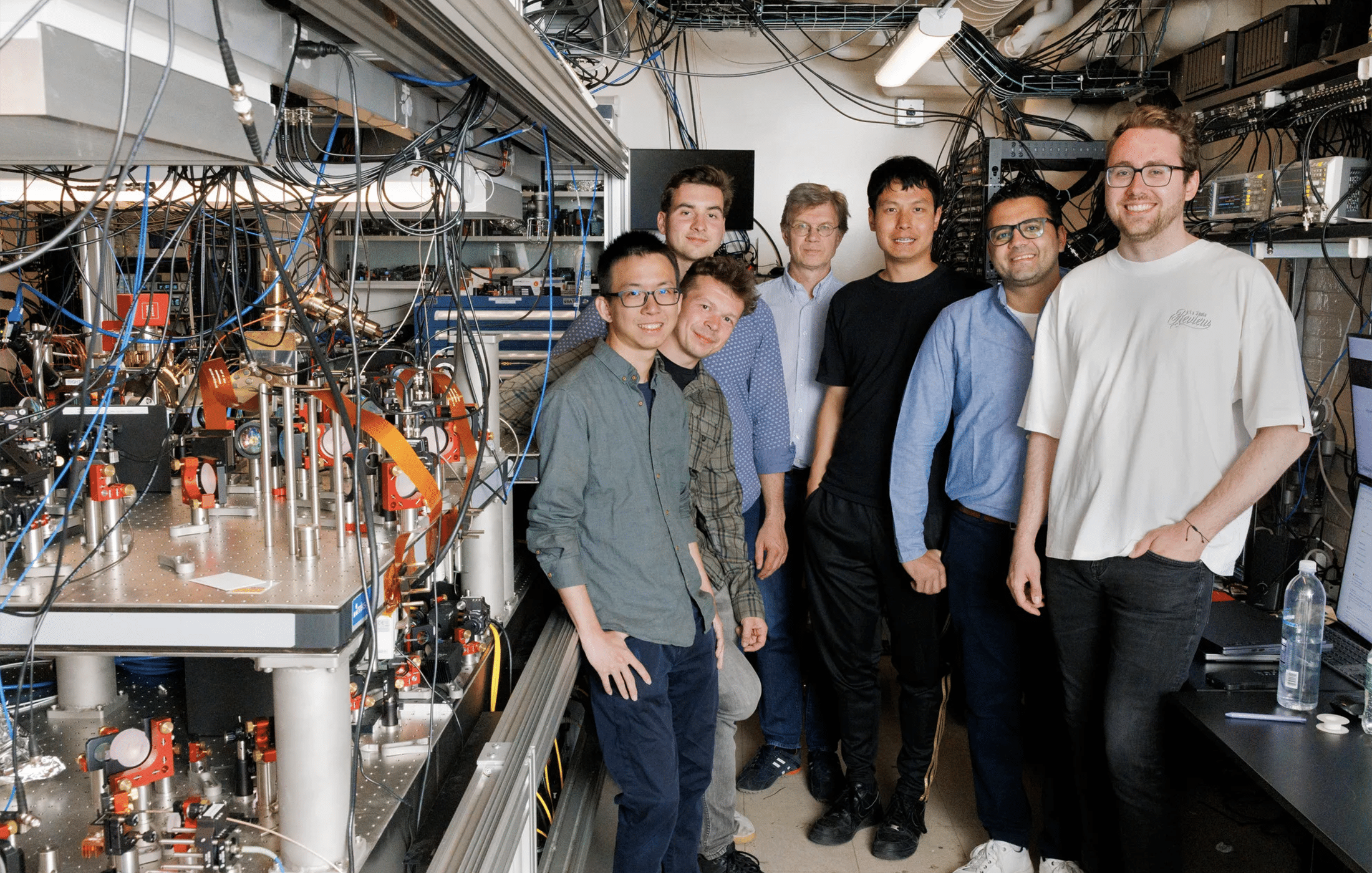

This achievement, led by Mikhail Lukin’s group at Harvard in collaboration with MIT and QuEra Computing, overcomes a long-standing hurdle in quantum hardware and is a significant step toward scalable, fault-tolerant quantum computers.

Key Achievements of the 3,000-Qubit Demo

- Continuous Qubit Reloading: “We’re showing a way where you can insert new atoms as you naturally lose them without destroying the information that’s already in the system,” said team member Elias Trapp. The new system uses high-speed optical “conveyor belts” and tweezers to reload up to 300,000 atoms per second into the array (roughly 30,000 initialized qubits per second), far outpacing the loss rate. This is roughly two orders of magnitude faster than previous neutral-atom loading methods, enabling near-steady-state operation.

- Long-Duration Operation: By continuously refreshing lost qubits, the researchers maintained a 3,000+ qubit array for over 2 hours. Prior experiments were limited to single shots – run a circuit briefly, then stop and reinitialize – because atoms would inevitably escape traps. This new setup clears that barrier: the machine could, in principle, run indefinitely (limited only by laser stability and other practical factors), a first for a quantum platform of this size.

- Preservation of Coherence: Crucially, fresh atoms can be added without disturbing the quantum states of the atoms already in the array. The team demonstrated that they could continuously refill qubits prepared either in a known spin state or in a coherent superposition (a qubit that is 0 and 1 at once) while preserving the coherence of neighboring qubits. In other words, the delicate quantum information in the system survived the refilling process, a key requirement for stable quantum operation.

- Zoned Architecture for Stability: The experiment employs a novel three-zone architecture: a cold atom reservoir, a preparation zone for cooling and initializing qubits, and a storage zone where the large qubit array resides in isolation. This physical separation, enabled by dual optical lattices acting as conveyor belts, prevents the act of loading new atoms from perturbing the stored qubits. The storage zone’s qubits are essentially shielded from the disturbance of incoming atoms, allowing concurrent operations in different zones.

- Path to Fault-Tolerance: By solving the atom loss problem, this continuous-operation scheme lays groundwork for implementing quantum error correction (QEC) in neutral-atom systems. QEC requires many sequential operations and the ability to replace or “reset” qubits on the fly during computation – something this experiment makes possible. The team estimates their current reloading rate (30,000+ fresh qubits per second) is sufficient to support a quantum computer with ~10,000 physical qubits running error-correcting algorithms continuously. With modest improvements (e.g. scaling to 80,000 reloads/sec and higher gate fidelities), hundreds of error-corrected logical qubits could be sustained with error rates around $$10^{-8}$$ – an encouraging outlook for truly large-scale processors.
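The achievements above all hinge on one condition: reloading must outpace loss. The toy simulation below illustrates that balance. It is a sketch of our own, not the team's model; the per-step loss probability and the per-step supply limit are hypothetical parameters chosen only to contrast the two regimes.

```python
import random


def simulate_filling(n_sites, loss_prob, reload_capacity, steps, seed=0):
    """Toy model of a tweezer array that loses atoms and is refilled every step.

    n_sites:         number of storage sites (the experiment used ~3,000)
    loss_prob:       hypothetical per-step probability that an atom is lost
    reload_capacity: hypothetical max number of fresh atoms delivered per step
    Returns the filling fraction recorded after each step.
    """
    rng = random.Random(seed)
    occupied = n_sites  # start from a fully loaded array
    history = []
    for _ in range(steps):
        # Every occupied site independently loses its atom with probability loss_prob.
        lost = sum(1 for _ in range(occupied) if rng.random() < loss_prob)
        occupied -= lost
        # Refill vacancies, but never faster than the supply line allows.
        occupied += min(n_sites - occupied, reload_capacity)
        history.append(occupied / n_sites)
    return history


# Pulsed-style operation (no reloading) versus continuous reloading with ample supply.
no_reload = simulate_filling(3000, 0.01, reload_capacity=0, steps=100)
with_reload = simulate_filling(3000, 0.01, reload_capacity=100, steps=100)
print(f"no reload:   {no_reload[-1]:.2f}")
print(f"with reload: {with_reload[-1]:.2f}")
```

Without reloading, the array drains roughly as 0.99 raised to the number of steps; once the per-step supply comfortably exceeds the ~30 atoms lost on average, the array stays full.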

Overcoming the Atom Loss Barrier

In the world of neutral-atom quantum computers, atom loss has been a notorious limiting factor. These systems use ultra-cold atoms trapped in arrays by laser beams as qubits. Over time, atoms tend to escape or get ejected from their traps due to background gas collisions, heating, or occasional operation errors. Even a tiny loss rate meant that experiments could only run in a “pulsed” mode: perform a sequence of operations quickly before too many qubits vanish, then pause to reload the traps for the next run. This start-stop process severely limited circuit depth (how many operations you can do in a single algorithm run). It also made it impossible to do the repeated cycles of error detection and correction that a fault-tolerant quantum computer requires.
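Why pulsed operation caps circuit depth follows from a short calculation. Assuming (our assumption, not a figure from the paper) that each atom is lost independently with an exponential lifetime, the expected time until the first atom disappears shrinks in proportion to array size. The 60-second lifetime below is a hypothetical placeholder.

```python
import math


def prob_no_loss(n_atoms, duration_s, lifetime_s):
    """Probability that none of n_atoms is lost during duration_s,
    assuming independent exponential lifetimes of lifetime_s each."""
    return math.exp(-n_atoms * duration_s / lifetime_s)


def mean_time_to_first_loss(n_atoms, lifetime_s):
    """The earliest of n independent exponential losses is itself exponential
    with rate n / lifetime, so its mean is lifetime / n."""
    return lifetime_s / n_atoms


LIFETIME_S = 60.0  # hypothetical single-atom trap lifetime
for n in (50, 256, 3000):
    print(n, mean_time_to_first_loss(n, LIFETIME_S), prob_no_loss(n, 1.0, LIFETIME_S))
```

With these numbers a 3,000-atom array expects its first loss after about 20 ms, so a one-second run is virtually certain to lose atoms; replacing them on the fly is the only way to keep such an array whole.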

The Harvard-MIT team attacked this problem head-on by essentially building a quantum machine that refuels itself in real-time. Instead of the usual approach of running until the qubits decay or drop out, they continuously replace any lost atoms with new ones while the processor is running. The concept sounds straightforward but is immensely challenging in practice – any disturbance from adding a new atom could disrupt the fragile quantum states of the others.

How did they do it? The setup uses two intersecting optical lattice transport beams (acting like conveyor belts made of light) to deliver a steady supply of laser-cooled rubidium-87 atoms from an off-site “source” (a magneto-optical trap, or MOT) into the main chamber. Upon arrival, these atoms are loaded into a 2D grid of optical tweezers in the preparation zone, where they are cooled and arranged into defect-free positions (filling any vacancies) and initialized into the desired qubit state. Then, the prepared qubits are transferred into the adjacent storage zone – a much larger tweezer array holding the 3,000+ qubits that participate in the computation or simulation. All of this happens while, in a separate part of the apparatus, the existing stored qubits are evolving under quantum operations or just idling in superposition. Essentially, the machine can be loading new qubits in one region while calculations continue in another.
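The source-to-storage pipeline described above can be sketched as a small data model. Everything here is hypothetical: the class and method names are ours, qubit states are plain strings, and the physics (cooling, rearrangement, light shielding) is abstracted away. What it captures is the invariant the paragraph describes: refilling touches only empty storage sites.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ZonedArray:
    """Hypothetical three-zone model: source/reservoir -> preparation -> storage."""
    storage_size: int
    reservoir: int = 0                                          # atoms delivered by the conveyor belts
    prep: List[str] = field(default_factory=list)               # initialized qubits awaiting transfer
    storage: List[Optional[str]] = field(default_factory=list)  # None marks an empty site

    def __post_init__(self):
        if not self.storage:
            self.storage = [None] * self.storage_size

    def deliver(self, n: int) -> None:
        # Optical-lattice transport brings n fresh atoms from the source.
        self.reservoir += n

    def prepare(self, batch: int) -> None:
        # Cool and initialize up to `batch` atoms into the state "0" in the prep zone.
        take = min(batch, self.reservoir)
        self.reservoir -= take
        self.prep.extend(["0"] * take)

    def transfer(self) -> None:
        # Move prepared qubits into empty storage sites only; occupied sites are untouched.
        for i, site in enumerate(self.storage):
            if site is None and self.prep:
                self.storage[i] = self.prep.pop()

    def lose(self, indices: List[int]) -> None:
        # Model atoms escaping their traps.
        for i in indices:
            self.storage[i] = None


arr = ZonedArray(storage_size=4)
arr.deliver(4)
arr.prepare(4)
arr.transfer()
arr.storage[1] = "+"  # pretend qubit 1 was rotated into a superposition
arr.lose([0, 2])      # two atoms escape
arr.deliver(2)
arr.prepare(2)
arr.transfer()
print(arr.storage)    # -> ['0', '+', '0', '0']
```

The superposed qubit at site 1 is never read or rewritten during the refill, which is the software analogue of the spatial separation between the preparation and storage zones.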

A key innovation is that the loading process is decoupled from the storage array. There is no direct line-of-sight or resonant light hitting the area where the quantum information is stored. Techniques like “light shielding” – using carefully tuned laser light to shift energy levels – protect the stored qubits from stray photons when new atoms are brought in. By partitioning the system into a supply line and a storage zone, the researchers ensured that adding an atom in one place doesn’t knock out an atom’s quantum state elsewhere. Mikhail Lukin, the project’s senior author, highlighted that this continuous operation and rapid replacement ability “can be more important in practice than a specific number of qubits”. In other words, 3,000 qubits that you can keep alive as long as needed are far more useful than, say, 10,000 qubits that only last a few seconds.

This dramatic improvement in quantum uptime is what makes the new result a potential turning point. It shifts the emphasis from maximizing qubit count to maintaining qubit reliability and longevity, which are just as crucial for any serious computation. A headline-grabbing qubit count means little if those qubits decohere or disappear almost immediately. Here, the researchers addressed that problem directly, delivering qubits that not only exist in large numbers but also remain usable for hours.

Preserving Quantum Coherence Mid-Operation

Achieving continuous operation would be moot if the act of reloading qubits destroyed the quantum state of the whole system. Impressively, the Harvard/MIT team showed that quantum coherence can be preserved even as new atoms are added. They performed tests where some qubits in the storage zone were prepared in a superposition state (a delicate quantum combination of 0 and 1), and then additional atoms were injected and moved into neighboring sites. The outcome: the original qubits retained their superposition state, evidencing that no collapse or decoherence was induced by the refilling process.
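What "retained their superposition" means can be made concrete with a Ramsey-style coherence measure: average the phase factor exp(i·phi) of a superposed qubit over many shots. This sketch assumes, purely for illustration, that any disturbance from reloading would appear as random Gaussian phase kicks of width sigma; it is not the team's analysis. With no kicks the coherence stays at 1, and with kicks it falls toward exp(-sigma²/2).

```python
import cmath
import math
import random


def ramsey_coherence(sigma, shots=20_000, seed=1):
    """Magnitude of the shot-averaged phase factor <exp(i*phi)> for a qubit in
    (|0> + |1>)/sqrt(2). phi is a hypothetical Gaussian phase kick of width sigma
    standing in for disturbance caused by the reloading process."""
    rng = random.Random(seed)
    total = 0j
    for _ in range(shots):
        phi = rng.gauss(0.0, sigma)
        total += cmath.exp(1j * phi)
    return abs(total / shots)


print(ramsey_coherence(0.0))                          # undisturbed: 1.0
print(ramsey_coherence(0.5), math.exp(-0.5**2 / 2))  # disturbed: close to exp(-sigma^2/2)
```

In this picture, the experimental result corresponds to the first case: the measured coherence of the stored qubits did not drop when neighbors were reloaded.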

This result is vital because it means the machine’s qubits are effectively “hot-swappable.” You can lose one, slot in a new one, and keep computing without resetting the whole system. The fresh qubits can even be loaded already initialized in a certain state or entangled as needed, and they join the register without disturbing the others. It’s akin to replacing a burnt-out light bulb in a circuit without turning the power off – and having the rest of the lights flicker only minimally or not at all.

In technical terms, the experiment demonstrated a form of mid-circuit qubit replacement that is loss-tolerant. This builds on recent progress by other groups who have shown pieces of the puzzle in smaller systems. For instance, in 2023, a University of Wisconsin team managed to measure and remove individual atoms from a 48-atom array without disrupting the coherence of the remaining atoms. That was a proof-of-concept that one could perform “mid-circuit” operations (like measurements or qubit resets) in a neutral atom setup. Now, the Harvard/MIT result takes it to the next level: not only removing or measuring a qubit mid-operation, but re-inserting a new qubit and doing so at scale, thousands of times over.

To preserve coherence, several tricks were employed. The replaced atoms were introduced in a benign internal state and only later rotated to the desired qubit states once they were securely in the storage zone and isolated. Timing was synchronized such that quantum gate operations on stored qubits did not coincide with moments of reservoir transfer that could introduce noise. And as mentioned, the use of a “shielding” laser during loading prevented resonant scattering off the storage qubits’ transitions. Thanks to these measures, the team reports no observable interference between the reloading process and the quantum coherence of the computational qubits – a major win for quantum engineering.
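The timing rule in the paragraph above (never transfer while gates run) amounts to a simple interval-scheduling constraint. The sketch below greedily places fixed-length transfer windows in the gaps between gate windows. The timeline, units, and greedy policy are hypothetical; the paper's actual control sequence is not reproduced here.

```python
def schedule_transfers(gate_windows, transfer_duration, n_transfers, horizon):
    """Greedily place transfer windows in the gaps between gate windows.

    gate_windows: sorted, non-overlapping (start, end) times when gates act on
                  stored qubits (hypothetical units, e.g. ms).
    Returns up to n_transfers (start, end) windows, none overlapping a gate
    window and none extending past horizon.
    """
    scheduled = []
    t = 0.0
    # A zero-length sentinel window at the horizon closes the last gap.
    for start, end in list(gate_windows) + [(horizon, horizon)]:
        # Fill the idle gap [t, start) with back-to-back transfers.
        while len(scheduled) < n_transfers and t + transfer_duration <= start:
            scheduled.append((t, t + transfer_duration))
            t += transfer_duration
        if len(scheduled) == n_transfers:
            break
        # Skip over the gate window itself.
        t = max(t, float(end))
    return scheduled


gates = [(1.0, 3.0), (5.0, 6.0)]
result = schedule_transfers(gates, transfer_duration=1.0, n_transfers=4, horizon=10.0)
print(result)  # -> [(0.0, 1.0), (3.0, 4.0), (4.0, 5.0), (6.0, 7.0)]
```

A greedy earliest-fit policy is the simplest choice; a real controller would also weigh how long each vacancy can stay open before it hurts the computation.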

Implications: Toward Scalable, Fault-Tolerant Quantum Computers

Continuous operation of a large qubit array is more than just a stunt – it directly addresses some of the biggest roadblocks on the path to practical quantum computers. One of those is implementing quantum error correction (QEC) reliably. QEC schemes (like the popular surface code) work by encoding one logical qubit’s information into many physical qubits and performing frequent rounds of error syndrome measurements and corrections. This requires qubits that can undergo many repetitive operations and remain available throughout the process. If qubits vanish or decohere too quickly, the error correction loop breaks down.
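The idea of encoding one logical qubit in many physical qubits can be illustrated with the simplest code, a distance-d repetition code decoded by majority vote. This is a deliberate simplification: it protects against bit flips only, whereas the surface code handles both bit and phase errors. The lesson carries over, though: when the physical error rate p is low enough, adding qubits suppresses the logical error rate, and above threshold it makes things worse.

```python
from math import comb


def repetition_logical_error(p, d):
    """Probability that majority voting over an odd number d of copies fails,
    assuming each copy flips independently with probability p."""
    if d % 2 == 0:
        raise ValueError("use an odd distance so majority vote has no ties")
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range((d + 1) // 2, d + 1))


for p in (0.01, 0.6):
    print(p, [repetition_logical_error(p, d) for d in (1, 3, 5)])
```

At p = 0.01 the logical error falls from 1% (d = 1) to about 3×10⁻⁴ (d = 3) and about 10⁻⁵ (d = 5); at p = 0.6 it rises with d. Repeated rounds of this kind are exactly what atom loss used to interrupt.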

By solving qubit loss, the Harvard/MIT approach essentially supplies the quantum computer with an effectively unlimited reservoir of new qubits or fresh states to use as needed. Need to reset an ancilla qubit? Grab a new atom from the reservoir. Lost a qubit in your code block? Here’s a replacement on the fly. In the researchers’ words, “high-rate qubit replenishment provides a direct solution” to the problem of QEC cycles being hampered by atom loss. Their Nature paper quantifies this: with a gate operation taking ~1 ms on their platform, a reload flux of ~15,000 atoms/sec would suffice to sustain a 10,000-qubit processor running QEC, a rate well below the ~300,000 atoms/sec they achieved. In other words, the system already operates in a regime that can support on the order of 10,000 physical qubits continuously, which is the scale where error-corrected logical qubits start to become feasible.
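A flux estimate like this can be sanity-checked with a steady-state balance: replacements per second must at least equal losses per second, i.e. number of qubits times per-cycle loss probability divided by cycle time. This linear model is our simplification, not the paper's derivation. Running it backwards on the figures above (10,000 qubits, ~1 ms per operation, ~15,000 replacements per second) shows the per-cycle loss such a flux could absorb.

```python
def required_reload_rate(n_qubits, loss_per_cycle, cycle_time_s):
    """Replacements per second needed so losses never accumulate (steady state)."""
    return n_qubits * loss_per_cycle / cycle_time_s


def implied_loss_per_cycle(reload_rate, n_qubits, cycle_time_s):
    """Invert the balance: the per-qubit, per-cycle loss a given flux can absorb."""
    return reload_rate * cycle_time_s / n_qubits


p_loss = implied_loss_per_cycle(15_000, 10_000, 1e-3)
print(f"implied loss per qubit per 1 ms cycle: {p_loss:.2%}")  # about 0.15%
print(required_reload_rate(10_000, p_loss, 1e-3))               # recovers ~15,000 per second
```

Under this model, the 30,000+ fresh qubits per second cited earlier would leave roughly a factor-of-two margin at that loss level.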

Looking at the broader quantum computing landscape, this result aligns with a trend of tackling the quality, not just quantity, of qubits. Industry leaders and research teams are pouring effort into reducing error rates and extending coherence times so that quantum processors can perform deeper algorithms. For example, Google’s Quantum AI group recently showed that increasing the size of their superconducting qubit error-correcting code (from distance-3 to distance-5) actually lowered the logical error rate, hinting that larger systems can outperform smaller ones when QEC is in play. And as I covered in June, Microsoft’s Quantum team proposed new “4D” error-correcting codes that promise to encode logical qubits with far fewer physical qubits than today’s 2D surface codes. Those codes, if realized, could significantly cut down the overhead needed for fault tolerance – but they still assume you have a stable hardware platform to run on. Continuous-operation architectures like the Harvard/MIT neutral atom system might be just what’s required to host such advanced QEC codes. With more robust qubit retention and on-demand replenishment, the hardware begins to meet the theoretical assumptions of long-lived qubits that error-correction theory relies on.

Notably, the Nature paper suggests that with reasonable improvements (scaling the reload rate to ~80k qubits/sec and two-qubit gate fidelities to ~99.9%), one could support on the order of several hundred logical qubits with error rates around $$10^{-8}$$ on this platform. That would be a game-changer – hundreds of logical qubits is territory for meaningful algorithms in chemistry, cryptography, and beyond, all running on a physically realizable machine. It’s a striking projection, and while it assumes progress on multiple fronts (faster loading, better gates, etc.), it shows a clear roadmap: improve the pipes (reload speed) and the plumbing (fidelity) a bit more, and this architecture could handle bona fide fault-tolerant computing. In effect, the experiment bridges a gap between current quantum prototypes and the big dream of a logical-qubit-based processor. We’re not there yet, but you can see the path forward.
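Projections of this kind rest on how the logical error rate falls with code distance. A widely used surface-code heuristic is p_L ≈ A·(p/p_th)^((d+1)/2). The sketch below uses it to find the smallest odd distance that reaches a target logical error rate, and counts physical qubits per logical qubit for a rotated surface code (d² data qubits plus d² - 1 measurement qubits). The prefactor A = 0.03, threshold p_th = 1%, and physical error p = 0.1% (in line with ~99.9% two-qubit fidelity) are illustrative assumptions, not values from the paper.

```python
def logical_error(p, d, p_th=1e-2, prefactor=0.03):
    """Heuristic surface-code logical error: A * (p / p_th) ** ((d + 1) / 2).
    p_th and prefactor are illustrative assumptions."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)


def smallest_distance(p, target, p_th=1e-2, prefactor=0.03, d_max=99):
    """Smallest odd code distance whose heuristic logical error meets the target."""
    if p >= p_th:
        raise ValueError("above threshold: increasing distance does not help")
    for d in range(3, d_max + 1, 2):
        if logical_error(p, d, p_th, prefactor) <= target:
            return d
    raise ValueError("target not reachable within d_max")


def physical_per_logical(d):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


d = smallest_distance(p=1e-3, target=1e-8)
print(d, physical_per_logical(d))  # -> 13 337
```

Changing p_th, A, or the target shifts the answer substantially, which is why such projections depend so heavily on assumptions about gate quality and the choice of code.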

This development also has implications for quantum networking and sensors, as hinted by the authors. A continuously running atomic array could serve as a high-duty-cycle atomic clock or a quantum sensor that doesn’t require downtime for resetting, improving stability and bandwidth. In quantum networks, a steady stream of fresh qubits could be used to generate entangled links between distant nodes at high rates. The general theme is clear: any quantum technology that suffers from duty cycle limits or resource depletion stands to benefit from a “continuously refreshed” design.

The Big Picture and Next Steps

From my perspective, this achievement feels like a watershed moment for neutral-atom quantum computers. For a while, superconducting qubits and trapped ions grabbed most of the limelight in the race to scalable QCs, each making strides in error correction and integration. Neutral atoms had shown great promise in terms of qubit count – e.g. 50, 100, even 256 atom arrays were demonstrated for specific tasks – but the knock was that you couldn’t run them for long or reuse them easily. With this new result, that knock is no longer valid. 3,000 coherent qubits running continuously puts neutral-atom platforms firmly back in the conversation for the long haul.

What’s next for this line of work? The team plans to apply the continuous architecture to actually perform computations and error correction cycles. Until now, they’ve proven the machine’s stability by essentially keeping the qubits in simple states or mild entanglement while reloading. The natural next step is to run a complex algorithm or a sequence of gate operations for hours, demonstrating not just quantum memory but quantum processing in continuous mode. We may soon see results on quantum simulations or small error-correcting code benchmarks (like a repetition code or a surface code logical qubit) running on this system. In fact, Lukin’s group had another Nature paper recently on a reconfigurable 2D array for simulating quantum magnets, and yet another on new error-corrected architecture ideas – indicating they are already pushing on the computational front as well. It wouldn’t be surprising if they attempt to marry those ideas with this continuous loading capability: imagine a continuously operating, reconfigurable, error-corrected 3,000-atom quantum computer. That’s the vision down the road.

In summary, the continuous 3,000-qubit neutral atom system is a major milestone that tackles a less flashy but absolutely essential aspect of quantum scaling: keeping the computer running. It shows that quantum processors can have endurance, not just a sprint. There’s a long road ahead to fault-tolerant quantum computing, but innovations like this give a tangible sense that we’re getting there step by step. As I often emphasize on PostQuantum.com, it’s not just about qubit count – it’s about coherence, error rates, and architecture. This breakthrough hits all those notes, demonstrating an increase in qubit quantity and quality simultaneously.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.