Google Announces “Verifiable Quantum Advantage” on Willow Quantum Chip

News Summary

22 Oct 2025 – Google Quantum AI has unveiled a major milestone in quantum computing: its Willow superconducting quantum processor has achieved the first-ever “verifiable quantum advantage”. In an announcement, Google’s researchers introduced a new algorithm called Quantum Echoes that leverages out-of-time-order correlators (OTOCs) – essentially quantum “echo” signals – to probe chaotic dynamics on a 65-qubit subsystem of the 105-qubit Willow chip.

The key result is that Willow produced measurable quantum signals that no existing classical supercomputer can feasibly simulate, demonstrating a computation beyond classical reach. Certain data points from the Quantum Echoes experiment would take an estimated 3+ years each to simulate on Frontier, one of the world’s fastest supercomputers, whereas the quantum chip needed only a couple of hours of measurement for each.

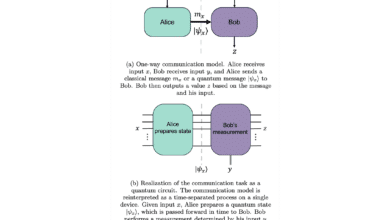

According to Google, the Quantum Echoes algorithm ran ~13,000× faster on the quantum hardware than the best-known classical simulation on a top supercomputer. Crucially, unlike Google’s 2019 “quantum supremacy” experiment (which involved sampling random bits with no straightforward way to verify each result), this new algorithm produces a deterministic, verifiable outcome that can be repeated and cross-checked on any sufficiently advanced quantum computer.

In other words, the output here is a stable quantum observable (the OTOC value) rather than a one-off random string, making it possible to confirm the quantum computer’s answer by rerunning the experiment and comparing results. This gives the achievement a more practical bent. Google’s team reports that the Quantum Echoes results matched theoretical expectations and are reproducible, marking the first time a quantum computation has surpassed classical supercomputers while yielding a checkable result. The research is documented in a Nature paper titled “Observation of constructive interference at the edge of quantum ergodicity”, and Google has emphasized that the techniques could “pave the way” for tackling real-world problems like Hamiltonian learning in chemistry and materials science.
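To make the "rerun and compare" idea concrete, here is a minimal sketch of how two independent runs of the same observable can be cross-checked. Everything in it is illustrative: the value `TRUE_VALUE`, the shot counts, and the function name `estimate_observable` are my own assumptions, and the "devices" are just seeded random number generators standing in for repeated ±1 measurements.

```python
import math
import random

def estimate_observable(true_value, shots, seed):
    """Average of simulated +/-1 outcomes whose mean equals true_value."""
    rng = random.Random(seed)
    p_plus = (1 + true_value) / 2          # probability of a +1 outcome
    total = sum(1 if rng.random() < p_plus else -1 for _ in range(shots))
    mean = total / shots
    stderr = math.sqrt((1 - mean ** 2) / shots)  # standard error of the mean
    return mean, stderr

TRUE_VALUE = 0.12  # hypothetical expectation value, not a number from the paper

# Two independent "runs" (different seeds stand in for different devices).
a, err_a = estimate_observable(TRUE_VALUE, 100_000, seed=1)
b, err_b = estimate_observable(TRUE_VALUE, 100_000, seed=2)

combined = math.hypot(err_a, err_b)        # uncertainty of the difference
print(f"run A = {a:.4f} +/- {err_a:.4f}")
print(f"run B = {b:.4f} +/- {err_b:.4f}")
print("agree within 5 sigma:", abs(a - b) <= 5 * combined)
```

The point of the sketch is only that an expectation value converges to the same number on every run, so two estimates can be compared within error bars. A sample of random bitstrings offers no such single number to compare.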

In a companion demonstration of real-world application, Google applied the same Quantum Echoes technique as a kind of “molecular ruler.” In collaboration with researchers at UC Berkeley, the team used Willow to simulate NMR (nuclear magnetic resonance) spin-echo experiments that determine molecular geometry. This proof-of-principle, described in a second paper (“Quantum computation of molecular geometry via many-body nuclear spin echoes”), involved running the OTOC-based algorithm on two molecules (one with 15 atoms, another with 28) and comparing against laboratory NMR data. The quantum-derived results not only matched traditional NMR measurements but also revealed extra structural information that NMR alone couldn’t easily provide. By essentially “reversing time” in the quantum simulation of molecular spin dynamics, the Quantum Echoes approach was able to amplify subtle signals corresponding to inter-atomic distances.

Google points to this as an early glimpse of a useful application: quantum processors augmenting NMR spectroscopy to better map molecular structures (which could aid drug discovery, materials design, etc.).

Together, these results – the physics benchmark in Nature and the NMR use-case – form the core of Google’s claim that it has reached a new milestone where a quantum computer outperforms classical ones in a verifiable way.

(For full technical details, see the Nature publication on Quantum Echoes (OTOC) and the companion paper on molecular geometry via spin echoes.)

My Analysis

How General or “Useful” Is This Quantum Advantage?

Google’s “verifiable quantum advantage” claim raises the question: is this a broad breakthrough or a relatively narrow, contrived scenario?

It’s a bit of both. The Quantum Echoes algorithm is purpose-built to push the quantum hardware into an intractable regime – it deals with highly chaotic quantum circuits and uses a clever time-reversal trick to amplify a measurable signal.

In that sense, the experiment solves a very specialized task: measuring higher-order OTOCs on random circuits. This isn’t a “useful application” in the everyday sense; you won’t use OTOCs to balance your checkbook or speed up web browsing. Some skeptics might argue the task is tailored to show advantage rather than to solve a pressing real-world problem – much like the 2019 random circuit sampling was a contrived benchmark.
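For readers who want to see what an "echo" measurement looks like, here is a toy sketch on 4 qubits using NumPy. It is not Google's circuit or algorithm: I use a Haar-random unitary as a stand-in for a chaotic circuit, and I compute only a simple first-order echo (evolve forward, perturb one qubit, evolve backward, measure another qubit) rather than the higher-order OTOCs in the paper. The names `op_on_qubit`, `random_unitary`, and `echo_signal` are my own.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def op_on_qubit(op, qubit, n_qubits):
    """Embed a single-qubit operator into the full n-qubit space."""
    full = np.array([[1.0 + 0j]])
    for k in range(n_qubits):
        full = np.kron(full, op if k == qubit else I2)
    return full

def random_unitary(dim, rng):
    """Haar-random unitary via QR (assumed stand-in for a chaotic circuit)."""
    z = rng.standard_normal((dim, dim)) + 1j * rng.standard_normal((dim, dim))
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))  # fix column phases so the result is Haar-distributed

def echo_signal(U, B, M, psi):
    """Evolve forward with U, apply perturbation B, evolve backward, measure M."""
    phi = U.conj().T @ (B @ (U @ psi))
    return float(np.real(np.vdot(phi, M @ phi)))

n = 4
rng = np.random.default_rng(0)
U = random_unitary(2 ** n, rng)

psi = np.zeros(2 ** n, dtype=complex)
psi[0] = 1.0                       # start in |0000>
M = op_on_qubit(Z, 0, n)           # measured qubit
B = op_on_qubit(X, n - 1, n)       # perturbation on a distant qubit

perfect_echo = echo_signal(U, np.eye(2 ** n), M, psi)  # no perturbation
perturbed_echo = echo_signal(U, B, M, psi)
print(perfect_echo, perturbed_echo)
```

With no perturbation the backward evolution undoes the forward one exactly and the measured value returns to 1. With the perturbation in place, how far the signal drops reflects how much the dynamics have spread information from the perturbed qubit to the measured one.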

However, the Google team deliberately chose OTOCs because they’re not just random bits; they’re physically meaningful observables relevant to quantum chaos, with potential applications in molecular spectroscopy, materials physics, and learning unknown quantum dynamics.

In fact, demonstrating OTOC measurements could be directly useful for Hamiltonian learning, essentially letting a quantum computer characterize the internal interactions of a system (like a molecule or material) that would be prohibitively hard to simulate classically.

So while Quantum Echoes is a fairly esoteric algorithm, it represents a class of techniques that scientists actually care about in physics and chemistry. It’s not Shor’s algorithm or a prime factorization tool – it won’t crack RSA – but it’s more purposeful than the previous supremacy experiments.

In short, the achievement is narrowly focused, but not useless. It demonstrates a new capability (probing quantum chaos verifiably) that could indeed be a stepping stone toward broader applications. Many breakthroughs in computing start with contrived demos, so this milestone, while targeted, expands the repertoire of what quantum computers can do in a verifiable way.

Scrutinizing the “Quantum Advantage” Claim

Google has been careful to back up its claims with evidence, likely mindful of the scrutiny that greeted its 2019 announcement of quantum supremacy. So, are the claims justified by the data?

From what’s been released, Google’s case appears strong. The team showed that simulating their quantum circuits by brute force would require tracking an astronomically large quantum state (on the order of $$2^{65}$$ complex probability amplitudes), which is utterly infeasible with today’s classical memory and processing.
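To put that state size in perspective, here is a back-of-the-envelope memory estimate. It assumes a naive dense state-vector simulation with each amplitude stored as a double-precision complex number (16 bytes); that storage format is my assumption, and smarter classical methods such as tensor networks do not store the full vector, so treat this as an illustration of scale rather than of Google's actual cost model.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a dense state vector: 2**n amplitudes, 16 bytes each (assumed)."""
    return (2 ** n_qubits) * bytes_per_amplitude

total = statevector_bytes(65)
exbibytes = total / 2 ** 60  # 1 EiB = 2**60 bytes

print(f"{total:.3e} bytes = {exbibytes:.0f} EiB")
# prints "5.903e+20 bytes = 512 EiB"
```

Each additional qubit doubles the figure, which is why brute-force simulation stops being an option long before 65 qubits.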

They also report benchmarking nine different classical algorithms and finding that all of them struggle to reproduce the Quantum Echoes results within a reasonable time. Perhaps the most striking figure: a single second-order OTOC data point from the experiment (involving 65 qubits and deep random circuits) was estimated to take 3.2 years to simulate on Frontier, one of the world’s fastest supercomputers, whereas Willow needed only about two hours to measure it.

Google also highlighted a headline speedup of 13,000× over classical algorithms for this task. All of this suggests a clear gap between what the quantum hardware did and what classical computing can do today.
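As a rough consistency check on the two headline numbers, the snippet below divides the estimated classical runtime by the claimed speedup. It assumes the 3.2-year estimate and the 13,000× figure refer to the same data point, which is my reading rather than something stated explicitly here; `implied_quantum_hours` is just a helper name I made up.

```python
HOURS_PER_YEAR = 365.25 * 24

def implied_quantum_hours(classical_years: float, speedup: float) -> float:
    """Quantum runtime implied by a classical estimate and a speedup factor."""
    return classical_years * HOURS_PER_YEAR / speedup

hours = implied_quantum_hours(3.2, 13_000)
print(f"{hours:.2f} hours")
# prints "2.16 hours"
```

So under that assumption the quantum side of the comparison is on the order of a couple of hours per data point, which is still a dramatic gap against years of supercomputer time.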

That said, caution is warranted. It’s wise to remember the lesson from 2019: after Google’s Sycamore processor famously ran a beyond-classical random circuit experiment, IBM researchers proposed clever ways to simulate that “supremacy” task much faster than Google initially estimated. Google had claimed a 10,000-year classical runtime, but IBM argued it could be done in about 2.5 days on a large supercomputer with improved algorithms and ample disk space. In the end, Google’s result was still beyond what anyone had actually done classically, but the gap wasn’t as vast as first advertised.

This time around, Google seems to have done more homework on pre-empting classical counterarguments: they explicitly explored multiple simulation strategies and even acknowledged in their blog post the need to identify “fundamental obstacles” that classical algorithms face when trying to mimic these quantum interference effects. The complex many-body interference in the OTOC signals, where contributions carry probability amplitudes that can be negative and so cancel rather than simply add up, is highlighted as a key classical difficulty.

Still, it’s possible that in the coming weeks some computer scientist might find a shortcut or a specialized algorithm to simulate certain aspects of the Quantum Echoes protocol more efficiently than we expect. The claim of quantum advantage will surely be scrutinized by experts, as it should be.

Notably, this experiment pushed the quantum hardware to its limits: Google reportedly had to collect on the order of one trillion measurements over the course of the project to distill the OTOC signals from the noise. That speaks to how demanding the experiment was, and it’s a reminder that the quantum advantage here is not a wide margin in an absolute sense – rather, it’s just enough of a margin that classical methods (so far) can’t keep up.

In my view, Google’s claims are credible given the evidence, but we’ll need to see if any classical breakthroughs or independent validations occur. As of now, the scientific paper has passed peer review at Nature, indicating that at least a group of experts found the results convincing. I’ll be watching in the days ahead for commentary from quantum computing researchers; we can expect lively discussions on whether this truly qualifies as a decisive “advantage” and how hard it might be for classical simulations to catch up.

Broader Implications and Outlook for Quantum Computing (and Cryptography)

Does this move the field forward in a meaningful way? In many respects, yes. Technologically, it shows that Google’s latest hardware can run a complex algorithm at scale with enough precision to get a useful result, something not demonstrated before at this qubit count. The Willow chip, with 105 high-quality qubits, managed to execute a time-reversal experiment that required fine control and high fidelity across all 65 qubits involved. This gives confidence that quantum devices are steadily improving; it’s not just more qubits, but better qubits.

From a proof-of-concept standpoint, having a repeatable quantum computation that beats classical is a big psychological and practical step. It addresses the criticism that quantum supremacy demonstrations were “sampling junk” with no verification or value. Here we have a verified beyond-classical result that also relates to a real physical question (how information scrambles in a quantum system, or how we might deduce molecular structure from NMR signals).

It’s still a far cry from general-purpose quantum computing, but it expands the frontier of tasks quantum computers can do that classical ones effectively cannot.

From a more cautious angle, we should note that quantum advantage in this context does not mean quantum computers are now universally superior for most tasks. It’s one specific algorithmic domain. Classical computers remain far better for the vast majority of problems. But every new domain where quantum pulls ahead is a milestone. This latest result will likely energize research into other near-term quantum algorithms that could be verifiable and useful. It also validates Google’s investment in scaling up superconducting qubit technology – the fact that they ran an algorithm involving 65 entangled qubits with repeated forward/backward evolution underscores significant progress in hardware stability and error mitigation.

Many experts will be weighing in soon on the significance of Google’s “Quantum Echoes” experiment. The coming days will likely bring detailed analyses from academics and industry rivals dissecting the methodology and implications. We’ll probably hear debates on questions like: What counts as a real application? Was this just a physics experiment of niche interest, or does it hint at broader utility? Could a different approach simulate the result classically after all? As a follower of the field (and having covered the 2019 Sycamore milestone in depth), my perspective is that this 2025 achievement feels more substantive in application scope than the 2019 one, but also that we should avoid hyperbole. In 2019, initial talk of “quantum supremacy” was later softened to “quantum advantage” after classical improvements narrowed the gap. Google itself now prefers the term beyond-classical or verifiable quantum advantage rather than “supremacy.” It’s a recognition that these milestones are incremental – important, but not absolute dominations of classical computing.

Finally, the question my network always asks me – “what does this mean for cryptography and the quest for a Cryptographically Relevant Quantum Computer (CRQC)?” In short: nothing immediate.

This experiment doesn’t implement Shor’s algorithm or break any encryption; it wasn’t about factoring numbers or computing discrete logarithms. It was a physics experiment, not a code-breaking one. So we shouldn’t rush to change our cryptographic protocols based on this news alone.

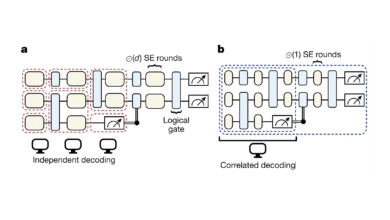

That said, any quantum computing advance raises the question of how it fits into the longer-term roadmap toward a CRQC. Google’s own roadmap, for example, lists a series of milestones from demonstrating basic quantum advantage (Milestone 1, achieved in 2019) to implementing a prototype error-corrected qubit (Milestone 2, around 2023) to eventually building a large-scale error-corrected quantum computer.

The Willow chip’s verifiable advantage demo is an achievement along that path – it shows scaling and error reduction paying off in a tangible way. Notably, Google achieved error rates around 0.1% or better per gate on Willow, which was crucial for this experiment’s success. For cryptographically relevant tasks, we’d need error-corrected quantum circuits running for millions or billions of operations, something still out of reach.
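A quick calculation shows why that gap matters. The sketch below assumes a deliberately simple error model of my own choosing: every gate fails independently with the same probability (0.1%), and there is no error correction. Real devices are more complicated, so read the numbers as orders of magnitude only.

```python
import math

def no_error_probability(gate_error: float, n_gates: int) -> float:
    """Chance that none of n_gates fails, assuming independent, identical errors."""
    return math.exp(n_gates * math.log1p(-gate_error))

for n_gates in (1_000, 1_000_000, 1_000_000_000):
    p = no_error_probability(1e-3, n_gates)
    print(f"{n_gates:>13,d} gates -> P(no error) = {p:.3g}")
```

At a thousand gates the chance of an error-free run is still about 37%, which is workable with repetition and error mitigation. At a million gates it is effectively zero, which is why cryptographically relevant circuits need error-corrected logical qubits rather than just slightly better physical ones.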

Google’s team openly acknowledges that much work remains: getting to a useful CRQC will require “orders-of-magnitude improvement in system performance and scale, with millions of components to be developed and matured”. In other words, this 2025 milestone doesn’t suddenly make Q-day (quantum decryption day) imminent. It’s an encouraging sign of progress, but not a paradigm shift for cryptography yet.
