Google Claims Breakthrough in Quantum Error Correction

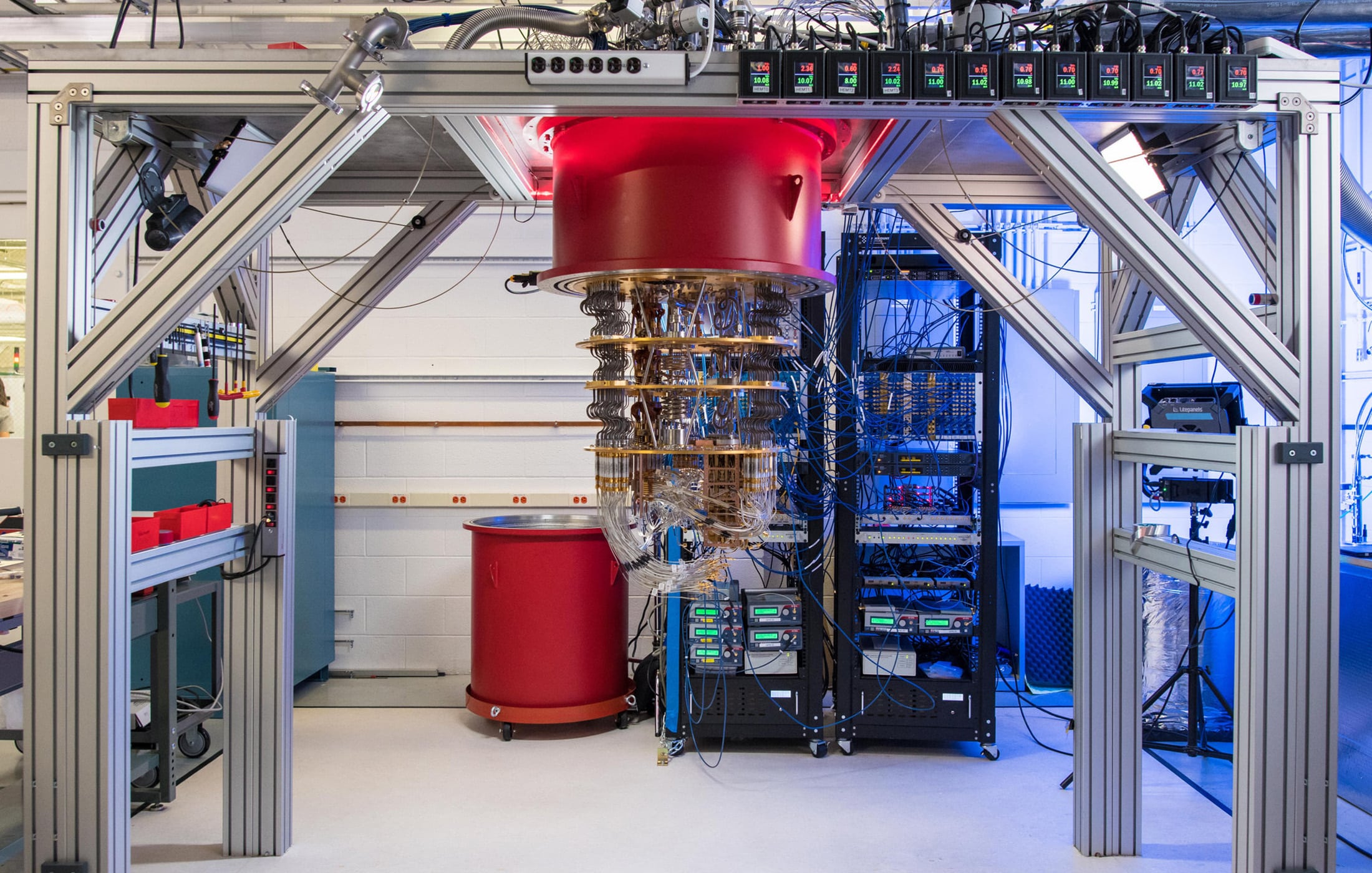

Feb 2023 – Quantum computers hold the promise of tackling problems beyond the reach of classical machines, but they are notoriously fragile. Even tiny disturbances can introduce errors into quantum computations. To build a fault-tolerant quantum computer – one that can reliably run long computations – scientists have to keep these errors in check. Google’s Quantum AI team just reported in Nature a major milestone toward that goal: they demonstrated that making a quantum error-correcting code larger reduced the error rate of an encoded (“logical”) qubit.

In other words, by using more physical qubits in a clever way, they managed to suppress errors better than ever before – the first time this has been achieved in any quantum computing platform. This result, published in Nature as “Suppressing quantum errors by scaling a surface code logical qubit,” is a significant step toward scalable, error-free quantum computing.

A First Step Toward Fault-Tolerant Quantum Computing

For today’s quantum processors, errors are inevitable – qubits can randomly flip or lose their delicate quantum state due to noise. The idea of quantum error correction (QEC) is to counteract these errors by encoding one “logical” qubit of information across many physical qubits. In theory, the more physical qubits used in the code, the more protected the logical information becomes. This is akin to how you might repeat an important message multiple times over a noisy phone line: if you send the bit string “111” instead of a single “1,” the receiver can take a majority vote to infer the correct bit even if one copy gets flipped to “0”. Similarly, a logical qubit is a redundancy scheme – it spreads quantum information out so that if some of the parts (physical qubits) go wrong, the error can be detected and corrected from the remainder.
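The phone-line analogy can be sketched in a few lines of Python. This is a purely classical toy, not a quantum code: the function names and the 10% flip probability are illustrative assumptions, chosen only to show how a majority vote over three copies beats sending a single bit.

```python
import random

def encode(bit, copies=3):
    """Repeat one classical bit `copies` times, e.g. 1 -> [1, 1, 1]."""
    return [bit] * copies

def noisy_channel(bits, p, rng):
    """Flip each bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]

def majority_vote(bits):
    """Decode by taking the most common value (odd number of copies assumed)."""
    return 1 if sum(bits) > len(bits) // 2 else 0

def failure_probability_3(p):
    """Exact chance the 3-copy vote is wrong: two flips or all three flips."""
    return 3 * p**2 * (1 - p) + p**3

if __name__ == "__main__":
    rng = random.Random(0)   # fixed seed so the run is repeatable
    p = 0.1                  # assumed per-bit flip probability
    trials = 100_000
    failures = sum(
        majority_vote(noisy_channel(encode(1), p, rng)) != 1
        for _ in range(trials)
    )
    print(f"single bit error rate:       {p:.4f}")
    print(f"simulated 3-copy error rate: {failures / trials:.4f}")
    print(f"exact 3-copy error rate:     {failure_probability_3(p):.4f}")
```

With a 10% chance of flipping each bit, the vote fails only when two or more copies flip, which happens 2.8% of the time. Quantum codes cannot copy states like this, but the same redundancy idea carries over.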

The big catch, however, is that adding more qubits also means adding more potential sources of error. If each physical qubit is error-prone, simply using more of them could make things worse rather than better. The key requirement is that the base error rate of the physical hardware must be low enough so that the benefits of redundancy outweigh the added noise. This threshold criterion has long been known in theory: if the error probability per operation is below a certain value (roughly on the order of 1% for the surface code), then a large enough error-correcting code will see lower logical error rates as it grows. If the hardware is above this error threshold, adding qubits won’t help – it would be like trying to build a reliable raft out of leaky buckets. Until now, quantum hardware has been just on the wrong side of that threshold, so small error-correcting codes provided no advantage over a single qubit. Google’s new result is exciting because it shows that their latest processor crossed this threshold: for the first time, a bigger code actually performed better than a smaller one.
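A classical analogy makes the threshold idea concrete. The sketch below computes the exact failure probability of a majority vote over n noisy copies of a bit. It is only an illustration: in this toy model the threshold sits at a 50% flip probability, far from the roughly 1% figure quoted for the surface code, and the function name is an assumption of this example.

```python
from math import comb

def majority_failure(p, n):
    """Probability that more than half of n independent copies flip (n odd)."""
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

# Below the toy threshold (p = 0.1) more copies help;
# above it (p = 0.6) more copies make things worse.
for p in (0.1, 0.6):
    rates = [majority_failure(p, n) for n in (1, 3, 5)]
    trend = "improves" if rates[-1] < rates[0] else "gets worse"
    print(f"p={p}: n=1,3,5 -> {[round(r, 4) for r in rates]} ({trend})")
```

The same qualitative picture applies to quantum codes: redundancy pays off only when each component is reliable enough to begin with.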

What Google Achieved: A Bigger Code, Fewer Errors

Google Quantum AI’s experiment focused on a specific QEC scheme called the surface code (more on this below). They built a new superconducting quantum processor with 72 physical qubits and used it to run two versions of a surface code memory: a distance-5 code that spanned 49 physical qubits, and a smaller distance-3 code using 17 qubits. The 5 and the 3 are the code distance, essentially the size of the code, which determines how many errors it can correct. After carefully calibrating and running many rounds of error-correction cycles on both codes, the team measured the resulting logical error rates. The larger, distance-5 code achieved a logical error rate of about 2.9% per cycle, slightly better than the ~3.0% per cycle of the smaller distance-3 code. In other words, using 49 qubits to encode a single logical qubit gave a lower error rate than using 17 qubits did.
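The qubit counts follow a simple pattern. Assuming the standard square surface-code patch, a distance-d code uses d × d data qubits plus one fewer measure qubits. A small sketch (the function name is illustrative) reproduces the 17 and 49 quoted above:

```python
def surface_code_qubits(distance):
    """Qubit budget for one square surface-code patch of the given distance."""
    data = distance**2         # qubits that hold the encoded information
    measure = distance**2 - 1  # qubits that run the parity checks
    return {"data": data, "measure": measure, "total": data + measure}

for d in (3, 5):
    print(d, surface_code_qubits(d))
```

For distance 3 this gives 9 data and 8 measure qubits (17 total), and for distance 5 it gives 25 data and 24 measure qubits (49 total).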

At first glance, a 2.914% vs 3.028% error rate per cycle doesn’t sound like a dramatic difference. But in the context of quantum error correction, this modest improvement is a watershed moment. It is the experimental proof-of-concept that increasing the size of the code (adding more qubits) can actually suppress errors, rather than amplify them. As the Google researchers noted, a larger code outperforming a smaller one is “a key signature of QEC” – a fundamental benchmark that quantum computers must hit on the road to practical, fault-tolerant operation. Ever since Peter Shor first proposed quantum error-correcting codes in 1995, scientists have been striving to see a logical qubit improve as more physical qubits are devoted to protecting it. Google’s result is the first definitive demonstration of that scaling benefit in any real quantum processor, marking an essential milestone for the field.
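To put the two numbers side by side, here is the arithmetic as a short sketch. The variable names are illustrative; the ratio of error rates between successive code distances is often written Λ in the error-correction literature.

```python
eps_d3 = 0.03028  # logical error per cycle, distance-3 code
eps_d5 = 0.02914  # logical error per cycle, distance-5 code

# Relative reduction in logical error when going from distance 3 to 5.
relative_improvement = (eps_d3 - eps_d5) / eps_d3

# Suppression ratio: values above 1 mean the larger code is better.
suppression_ratio = eps_d3 / eps_d5

print(f"relative improvement: {relative_improvement:.2%}")
print(f"suppression ratio:    {suppression_ratio:.3f}")
```

The relative improvement comes out just under 4%, and the ratio sits only slightly above 1, which is why the result is best read as a crossing of the break-even line rather than a large gain.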

Of course, 2-3% error per cycle is still far too high for running useful algorithms; practical quantum computing will require error rates orders of magnitude lower (perhaps $$10^{-6}$$ or less). But achieving even a slight logical error reduction by going from distance 3 to distance 5 shows that the hardware is operating in the right regime. It means the team has tamed the physical errors to a point where the error correction is net beneficial. This gives confidence that further scaling to larger codes and improving the qubit quality will continue to push the logical errors down. In short, the experiment provides a “proof in hardware” that the principles of quantum error correction actually work when implemented on a chip – a necessary step on the long journey to a scalable quantum computer.
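A rough calculation shows why a few percent per cycle is too much. The sketch below assumes a simplified model in which the logical qubit flips independently with probability ε in every cycle; under that assumption the chance of ending up flipped after n cycles is (1 − (1 − 2ε)^n) / 2. The function name and the choice of 25 cycles are illustrative.

```python
def accumulated_logical_error(eps_per_cycle, cycles):
    """Probability of a net logical flip after `cycles` independent cycles."""
    return 0.5 * (1.0 - (1.0 - 2.0 * eps_per_cycle) ** cycles)

for eps in (0.02914, 1e-6):
    total = accumulated_logical_error(eps, 25)
    print(f"eps={eps:g} per cycle -> {total:.6f} after 25 cycles")
```

At 2.914% per cycle the stored state is wrong nearly 40% of the time after only 25 cycles, while at one in a million per cycle the accumulated error stays around 0.0025%.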

What Is a Surface Code and How Does It Work?

So, what exactly is the surface code that Google used, and how does it suppress errors? The surface code is currently one of the most promising quantum error-correcting codes, favored by many research groups (including Google’s) because of its relative tolerance to error and its suitability for 2D chip layouts. It belongs to the family of topological codes, which means the logical information is stored in a global, distributed way across a surface (in this case, a grid of qubits), rather than in any one physical qubit. The “distance” of the code (d) is basically the width of this grid. A larger distance means a larger patch of qubits and, in principle, the ability to correct more errors: specifically, a distance-d surface code can typically handle up to ⌊(d-1)/2⌋ simultaneous errors on different qubits. For example, a distance-5 code can correct up to two errors occurring anywhere in the code, whereas a distance-3 code can correct only one.

The surface code works by arranging qubits on a checkerboard-like grid and repeatedly checking for inconsistency (parity) among them, rather than trying to directly copy or clone quantum information (which is impossible due to the no-cloning theorem). In Google’s layout, there are two types of qubits: data qubits that hold the actual quantum information (these are the ones that collectively encode the logical qubit), and measure qubits interspersed between them whose sole job is to detect errors. Imagine the data qubits sitting on the vertices of a checkerboard, with measure qubits sitting in the center of each square. Each measure qubit is responsible for a stabilizer check on its four neighboring data qubits, kind of like asking “Do all my neighboring data qubits have the same combined state as expected?” without actually revealing the state of those data qubits. Some measure qubits are designated to check for bit-flip errors, and others check for phase-flip errors, covering the two types of quantum errors that can happen. Because these measurements only tell you whether an error occurred (an odd parity) or not (even parity), they don’t disturb the encoded quantum information itself.
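The parity-check idea can be mimicked with a classical toy model. The sketch below tracks only which data qubits have suffered a bit-flip and reports the parity each bit-flip check would see. The layout is a hypothetical distance-3 patch chosen for illustration, not Google's actual chip layout; note that checks on the edge of the patch touch two data qubits rather than four.

```python
# Data qubits of a distance-3 patch, indexed row by row:
#   0 1 2
#   3 4 5
#   6 7 8
# Hypothetical layout: each tuple lists the data qubits one bit-flip check touches.
BIT_FLIP_CHECKS = [(0, 1, 3, 4), (4, 5, 7, 8), (3, 6), (2, 5)]

def syndrome(flipped, checks=BIT_FLIP_CHECKS):
    """One parity bit per check: 1 if an odd number of its qubits flipped."""
    return tuple(sum(q in flipped for q in check) % 2 for check in checks)

print(syndrome(set()))  # no errors: every check reports even parity
print(syndrome({4}))    # center qubit flipped: both four-qubit checks fire
print(syndrome({2}))    # corner qubit flipped: only the edge check fires
```

Each check reveals only a parity, never the value of an individual data qubit, which is what lets a real device run these measurements without disturbing the encoded state.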

By doing these stabilizer checks across the whole patch of qubits, any single physical qubit error (or even a few of them) will cause a noticeable pattern of “error flags” in the measurements. The pattern of which stabilizers report errors (often called the syndrome) lets the system infer where the error likely occurred. It’s analogous to finding a typo in a crossword puzzle: you might not know immediately which letter is wrong, but the fact that certain words don’t line up gives you clues to pinpoint it. The surface code decoder collects the pattern of these error signals and decides which qubit (or qubits) must have flipped, then corrects them (or equivalently, keeps track of the error without collapsing the computation). This way, the logical qubit – the global encoded state across the whole grid – can survive many cycles of errors as long as they are not too frequent or too correlated.
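A minimal lookup-table decoder shows how a syndrome can be turned into a correction. This is a toy under stated assumptions: it uses a hypothetical distance-3 layout, handles bit-flips only, assumes perfect measurements, and is not the decoder used in the experiment. The names `LOGICAL_ROW`, `build_lookup`, `decode`, and `is_harmless` are inventions of this sketch.

```python
# Data qubits indexed row by row: 0 1 2 / 3 4 5 / 6 7 8 (hypothetical layout).
BIT_FLIP_CHECKS = [(0, 1, 3, 4), (4, 5, 7, 8), (3, 6), (2, 5)]
LOGICAL_ROW = (0, 1, 2)  # odd number of leftover flips here = logical flip

def syndrome(flipped):
    """One parity bit per check: 1 if an odd number of its qubits flipped."""
    return tuple(sum(q in flipped for q in check) % 2 for check in BIT_FLIP_CHECKS)

def build_lookup(num_data=9):
    """Map each syndrome to the first single-qubit flip that produces it."""
    table = {syndrome(set()): frozenset()}
    for q in range(num_data):
        table.setdefault(syndrome({q}), frozenset({q}))
    return table

def decode(flipped, table):
    """Apply the suggested correction and return whatever flips remain."""
    correction = table.get(syndrome(flipped), frozenset())
    return set(flipped) ^ correction

def is_harmless(residual):
    """True if the leftover flips trip no check and leave the logical bit alone."""
    no_flags = not any(syndrome(residual))
    even_on_row = sum(q in residual for q in LOGICAL_ROW) % 2 == 0
    return no_flags and even_on_row

table = build_lookup()
# Every single bit-flip is corrected, possibly up to a harmless leftover pattern.
print(all(is_harmless(decode({q}, table)) for q in range(9)))
# Two flips can fool the decoder into completing a chain across the patch.
print(is_harmless(decode({0, 3}, table)))
```

Some different errors share a syndrome (for example flips on qubits 0 and 1), yet correcting either one works because the leftover pattern does not affect the logical state. The two-flip case illustrates why a distance-3 code is only guaranteed to fix one error.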

Crucially, the larger the code (higher d), the more errors it can tolerate. A distance-d surface code has a kind of built-in redundancy: the logical qubit’s information is entangled across d×d data qubits in such a way that you’d need errors on a chain of d qubits in a row to change the logical state without tripping any check. Errors on fewer qubits than that always leave a trace in the syndrome, and the decoder can reliably correct them as long as no more than ⌊(d-1)/2⌋ occur at once. This is why increasing the code distance should exponentially suppress the logical error rate – each step up in distance makes it dramatically less likely for an uncorrectable chain of errors to slip through. However, this theoretical benefit only materializes if the base error rate of each qubit is below the threshold. If the qubits are too noisy, then error signals (syndrome flips) will be so frequent and widespread that the decoder gets confused. It would be like trying to find a single typo when half the letters are erratic – adding more letters (qubits) in that case won’t help at all.
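This exponential suppression is often summarized with a heuristic scaling law. The symbols here are assumptions of this note rather than quantities defined in the article: $p$ is a typical physical error rate, $p_{\mathrm{th}}$ is the threshold, $A$ is a constant of order one, and $\varepsilon_d$ is the logical error per cycle at odd distance $d$.

$$
\varepsilon_d \approx A \left( \frac{p}{p_{\mathrm{th}}} \right)^{(d+1)/2},
\qquad
\Lambda \equiv \frac{\varepsilon_d}{\varepsilon_{d+2}} \approx \frac{p_{\mathrm{th}}}{p}
$$

The exponent $(d+1)/2$ is the smallest number of errors that can fool the decoder. When $p < p_{\mathrm{th}}$ the ratio $\Lambda$ exceeds 1 and every increase in distance divides the logical error by that factor; when $p > p_{\mathrm{th}}$ the same formula says larger codes do worse.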

Crossing the Error Threshold: Why Google’s Experiment Is Novel

The novelty of Google’s 2023 result lies in proving that their hardware is good enough to make a larger code actually worthwhile. Previous experiments with small surface codes (distance-3, etc.) had shown they could detect errors, but they did not definitively show lower error rates than using fewer qubits, because the physical error rates were still too high. In this new work, Google’s team invested several years into improving every aspect of their quantum chip’s performance to tip the scales in favor of error correction. They designed a third-generation Sycamore processor with better qubit coherence (each qubit stays stable longer), higher-fidelity operations, and lower cross-talk between qubits running in parallel. They also implemented fast, high-fidelity readout and reset of qubits and refined their calibration algorithms to reduce errors from drifting parameters. In essence, they squeezed the physical error per operation down to around the threshold region (~1% or below in many cases). This herculean hardware effort was necessary so that the overhead of using more qubits (which introduces more places for errors to occur) would be offset by the error-correcting capability of the code. As the team put it, “introducing more qubits also increases the number of error sources, so the density of errors must be sufficiently low” for logical performance to improve with scale. By achieving that low error density, they were able to see the coveted crossover point where a 49-qubit logical qubit outperforms a 17-qubit one.

Another novel aspect of the experiment is the sheer scale of the code itself. Running a distance-5 surface code with 49 qubits (plus additional ancilla qubits for certain calibrations or leakage removal, as noted in the paper) is the largest realization of a quantum error-correcting code to date on a superconducting platform. The researchers had to orchestrate 25 cycles of simultaneous operations on this sizeable patch of qubits, performing dozens of delicate two-qubit gates and measurements in each cycle, all timed and tuned to avoid triggering cascades of errors. They also employed advanced decoding algorithms to interpret the syndrome data. The fact that the logical error rate remained lower for the bigger code indicates that all these pieces – qubits, gates, measurements, decoder – were functioning in concert below the threshold. It’s a bit like building a complex machine with many components and verifying that adding more components doesn’t break the machine’s overall performance. That had never been conclusively shown in quantum computing until now.

Implications and Future Outlook

Google’s demonstration of a working logical qubit that gets better with scale is a pivotal proof-of-principle for quantum computing. It tells us that fault-tolerant quantum computing is achievable in practice, not just in theory. With this result, we know that as qubits get less error-prone (through engineering improvements) and as we are able to marshal more of them into error-correcting schemes, we can expect the logical error rates to continue dropping. In the experiment, going from distance 3 to distance 5 gave roughly a 4% relative reduction in logical error per cycle (from 3.028% to 2.914%). In the future, going to distance 7, 9, and beyond should suppress errors even further – potentially by larger factors each time if the exponential error reduction regime is fully realized. In fact, the Google team has indicated that with a distance-17 surface code (which would involve hundreds of physical qubits for one logical qubit) and sufficiently low physical error rates, they project logical error probabilities on the order of 1 in a million per cycle. Reaching such ultra-low error rates is essential for running long quantum algorithms.
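A back-of-the-envelope sketch gives a feel for the gap between today's result and that target. It assumes, purely for illustration, that each increase of the distance by 2 divides the logical error by the same constant factor. This is not the Google team's projection method, and the function names are inventions of this example.

```python
def required_suppression_factor(eps_start, d_start, eps_target, d_target):
    """Constant per-step factor needed to go from eps_start to eps_target."""
    steps = (d_target - d_start) / 2  # distance grows in steps of 2
    return (eps_start / eps_target) ** (1 / steps)

def physical_qubits(distance):
    """Data plus measure qubits in one square surface-code patch."""
    return 2 * distance**2 - 1

factor = required_suppression_factor(0.02914, 5, 1e-6, 17)
print(f"needed factor per step: {factor:.2f}")
print(f"measured factor (d=3 to d=5): {0.03028 / 0.02914:.2f}")
print(f"qubits for one distance-17 patch: {physical_qubits(17)}")
```

Under this assumption the per-step factor would need to be somewhere between 5 and 6, compared with about 1.04 measured in the experiment, which is why lower physical error rates matter as much as larger codes. A single distance-17 patch would use 577 physical qubits.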

There are still many challenges ahead. So far, Google’s experiment encoded one logical qubit and measured it as a memory (they prepared a state and checked how well it survived over many cycles). The next steps will involve performing logical operations on encoded qubits and coupling multiple logical qubits together – all while maintaining error suppression. Each of those steps will introduce new opportunities for errors to creep in. Moreover, achieving useful quantum computing will require not just one logical qubit but thousands, perhaps millions of them, all working in tandem with error correction. This is why researchers talk about needing millions of physical qubits to do something like break modern encryption with Shor’s algorithm – the vast majority of those qubits will be devoted to error correction overhead, keeping a smaller number of logical qubits on track.

The encouraging news is that Google’s milestone suggests a clear path forward. It validates the approach laid out in the quantum computing roadmaps: continue to incrementally improve qubit quality and scale up the error-correcting codes. As long as each generation of hardware can beat the error threshold with some margin, increasing the code size will yield exponentially decreasing logical error rates, unlocking longer and more complex computations without failure. In the coming years, we can expect to see distance-7 and higher surface codes demonstrated, likely with even more impressive error suppression. Each new distance will test the limits of the hardware and the creativity of engineers in qubit design, fabrication, and control software.

In summary, Google’s 2023 surface code experiment is a landmark because it showed error correction at scale actually works. A logical qubit built from 49 entangled qubits behaved better than one built from 17 qubits, giving a glimpse of the promised land of fault tolerance. It’s a bit like the moment in early aviation when engineers first added a second wing and found the biplane could actually fly more stably – a validation that adding complexity (done right) leads to better performance, not worse. There is much work remaining to take quantum computers from this one logical qubit prototype to a full-blown error-corrected quantum machine. But the fundamental physics and engineering principle has now been demonstrated.

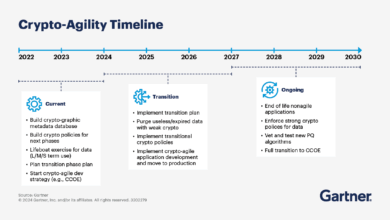
