Caltech’s 6,100-Qubit Optical Tweezer Array: A Quantum Leap in Scale and Coherence


24 Sep 2025 – In a new quantum computing milestone, Caltech physicists have created the largest qubit array ever assembled: 6,100 atomic qubits held in place by laser “tweezers.” This far exceeds previous neutral-atom arrays, which contained only hundreds of qubits. Even more impressive, these thousands of qubits were kept in a fragile quantum state (superposition) for about 13 seconds – nearly ten times longer than prior systems. Equally crucial, the team could manipulate individual qubits with 99.98% accuracy, showing that scaling up didn’t come at the expense of quality.

The work was announced in a Caltech blog post and published in Nature on September 24, 2025 as an accelerated article preview titled “A tweezer array with 6100 highly coherent atomic qubits”. The paper demonstrates a viable path toward the error-corrected quantum computers scientists have long envisioned. Useful quantum computers will likely require hundreds of thousands of qubits, because qubits are notoriously fragile and error correction needs many of them working redundantly. Caltech’s breakthrough, combining record quantity and quality in qubits, is a significant step toward that goal.

What Was Achieved: 6,100 Qubits with Record Coherence and Fidelity

The Caltech team’s accomplishment can be summed up in a few record-breaking metrics:

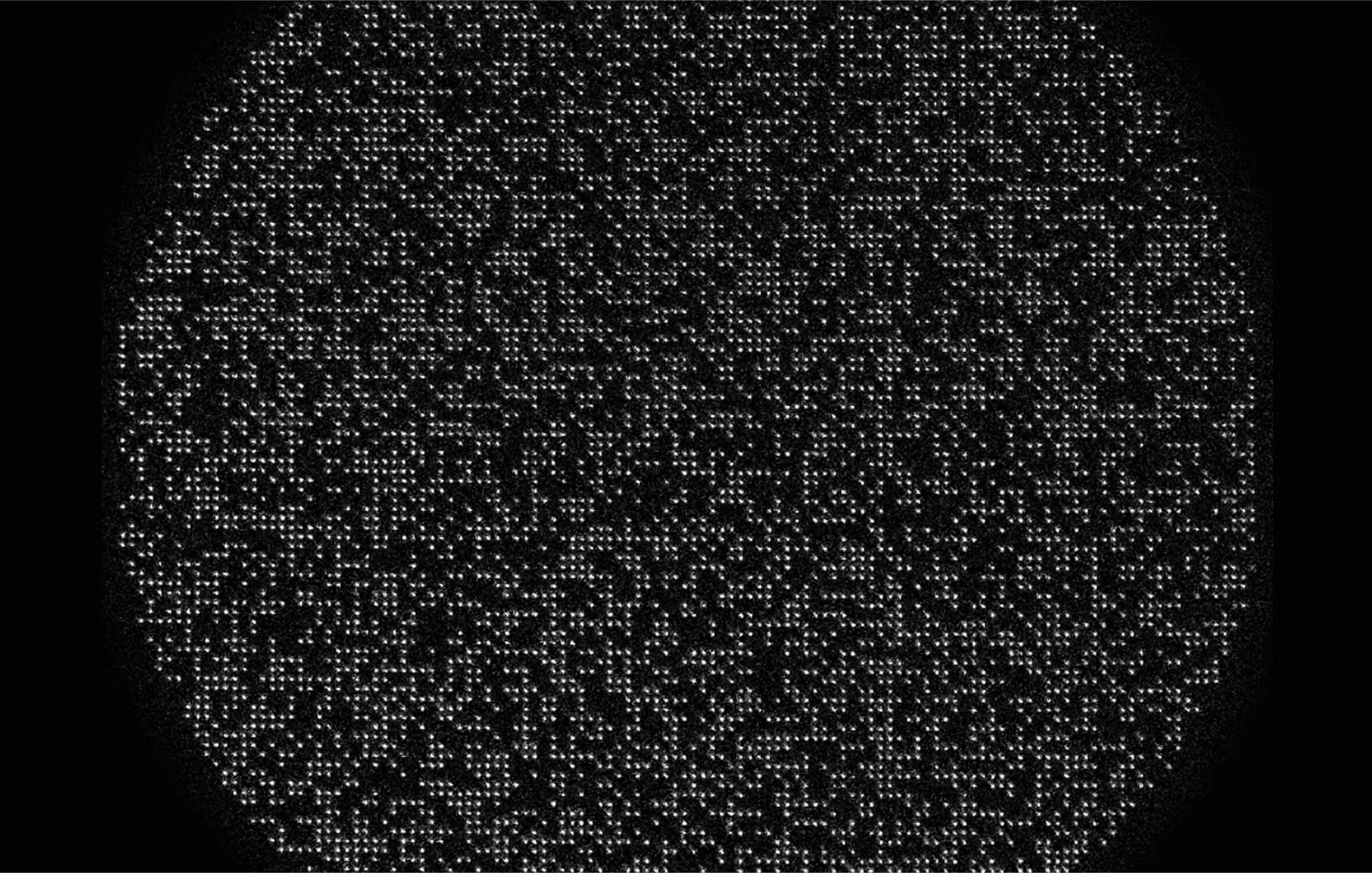

- Largest Qubit Array to Date: 6,100 neutral-atom qubits arranged in a grid of laser traps (optical tweezers). This leapfrogs previous experiments of this kind, which managed only on the order of hundreds of atoms, and even recent attempts of ~1,000 atoms that lacked full quantum control.

- Long-Lived Quantum States: Each cesium atom qubit remained in a superposition state for up to ~12.6 seconds – a coherence time roughly 10× longer than earlier similar systems (which were under 2 seconds). This 12+ second coherence is a new record for hyperfine-based qubits in an optical tweezer array, meaning the qubits can “hold” quantum information far longer before it dissipates.

- High Operational Fidelity: The researchers could individually control and read out these qubits with 99.98% to 99.99% accuracy, nearly perfect by quantum standards. At 99.98%, only about 1 in 5,000 operations fails, a key indicator of quality. They also achieved an imaging fidelity above 99.99%, meaning they can measure the atoms with virtually no errors.

- Stable Trap and Transport: The atoms were held in a vacuum chamber with a trapping lifetime of ~23 minutes, an eternity in quantum computing. The team also moved atoms across hundreds of micrometers while keeping them in superposition. In other words, they could shuttle qubits across the array while preserving their coherence, a bit like rearranging pieces on a chessboard while each piece is delicately balanced on edge. This ability to transport qubits is a hallmark of neutral-atom platforms, and here it was achieved with coherence intact.
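To see why per-operation fidelity matters so much at this scale, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that errors are independent and that every operation has the same fidelity; real error models are more structured than this.

```python
def error_rate(fidelity: float) -> float:
    """Per-operation error probability implied by a fidelity (1 - F)."""
    return 1.0 - fidelity

def ops_per_failure(fidelity: float) -> float:
    """Average number of operations between failures, i.e. 1 / error rate."""
    return 1.0 / error_rate(fidelity)

def all_succeed(fidelity: float, n_ops: int) -> float:
    """Probability that n_ops independent operations all succeed (naive model)."""
    return fidelity ** n_ops

if __name__ == "__main__":
    f = 0.9998  # operation fidelity quoted in the article
    print(f"about 1 failure per {ops_per_failure(f):,.0f} operations")
    # One operation on each of the 6,100 qubits, assuming independent errors
    print(f"P(all 6,100 operations succeed) = {all_succeed(f, 6100):.3f}")
```

Under this naive model, applying one operation to every qubit in the array goes through without a single error only roughly 30% of the time, which is why per-operation fidelity, and eventually error correction, matters so much as arrays grow.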

Maintaining both long coherence and high fidelity in a large system is extremely challenging. Usually, more qubits mean more opportunities for error and decoherence (like trying to keep thousands of plates spinning at once). The Caltech team overcame this by operating at room temperature in a very high-quality vacuum (which underpins the 23-minute trap lifetime) and using sophisticated laser and control techniques to isolate the atoms from noise. The result is a kind of quantum hardware that is both scalable and stable, a combination that has been elusive until now.

Bottom line – it’s not just a lot of qubits, but a lot of good qubits.

From Hundreds to Thousands: Breaking the Scale Barrier

Scaling up to thousands of qubits is not just an incremental improvement – it’s a game-changer for the field. For comparison, most cutting-edge quantum processors today have qubit counts in the tens or low hundreds. Superconducting qubit chips (like those from IBM and Google) are just crossing the few-hundred qubit range, and trapped-ion systems typically hold on the order of 50-100 qubits in a single device.

Neutral atoms in optical tweezers were already considered promising for scalability, but until this work, no one had demonstrated coherent control over a multi-thousand-qubit array. Earlier optical tweezer experiments managed tens or hundreds of atoms with full control, and only recently had some reached around 1,000 atoms without demonstrating them as coherent qubits.

In other words, large arrays existed in principle, but they weren’t operating as a quantum computer’s building blocks (no superposition or individual operations on each atom). The Caltech team’s 6,100-atom array is the first to combine extreme scale with genuine qubit behavior at each site.

What does having thousands of qubits enable that hundreds did not? For one, it moves quantum computing into a regime where we can start exploring quantum error correction with enough qubits (more on that shortly). It also means we can tackle more complex quantum simulations and computations, as there are more “quantum bits” to represent information. Additionally, having so many atoms available allows for flexible connectivity. A unique advantage of neutral-atom qubits is that any atom in the array can potentially interact with any other, not just its nearest neighbors. Atoms can be made to interact via laser-induced Rydberg states or by physically moving them together. In fact, the Caltech setup allows atoms to be rearranged on the fly: they demonstrated moving atoms by hundreds of micrometers (a significant distance relative to the array) while those atoms remained in superposition. This is like sliding a quantum bead along an abacus without disturbing its state – an ability that hardwired solid-state qubits (like those on a chip) don’t typically have.

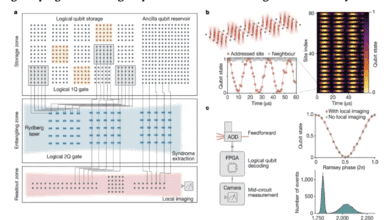

By splitting one laser into 12,000 traps to hold 6,100 atoms, the researchers also showcased an engineering triumph. It’s non-trivial to control that many laser beams and keep them stable. The image above, with thousands of pinpricks of light, attests to the level of control – each of those 6,100 points had to be precisely positioned and focused. Indeed, it looks almost like a starry night captured on a screen, except every “star” is actually a single atom in a lab.
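The ratio of 12,000 traps to 6,100 atoms is consistent with stochastic loading, where each tweezer ends up holding a single atom only about half the time. The article does not describe the loading process, so treat that as an assumption. The sketch below computes the implied fill fraction from the article's two numbers and simulates independent per-trap loading (a simplified, hypothetical model) to show how much the atom count fluctuates from shot to shot.

```python
import random
import statistics

N_TRAPS = 12_000          # tweezer count, from the article
N_ATOMS_REPORTED = 6_100  # atoms held, from the article

def implied_fill_fraction(n_atoms: int, n_traps: int) -> float:
    """Fraction of traps occupied, given atom and trap counts."""
    return n_atoms / n_traps

def simulate_shot(n_traps: int, p_load: float, rng: random.Random) -> int:
    """Atoms loaded in one shot if each trap independently holds one atom with probability p_load."""
    return sum(rng.random() < p_load for _ in range(n_traps))

if __name__ == "__main__":
    p = implied_fill_fraction(N_ATOMS_REPORTED, N_TRAPS)
    rng = random.Random(1)  # fixed seed for reproducibility
    shots = [simulate_shot(N_TRAPS, p, rng) for _ in range(100)]
    print(f"implied fill fraction: {p:.3f}")
    print(f"simulated atoms per shot: {statistics.mean(shots):.0f} +/- {statistics.stdev(shots):.0f}")
    # Binomial prediction for the shot-to-shot spread
    print(f"binomial std: {(N_TRAPS * p * (1 - p)) ** 0.5:.0f}")
```

In this model the shot-to-shot spread is only roughly 55 atoms, so a fixed trap count yields a fairly predictable number of qubits; turning a random occupancy pattern into a chosen layout is where the ability to move atoms becomes useful.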

Manuel Endres, the Caltech professor leading the project, summed up the significance: “This is an exciting moment for neutral-atom quantum computing… We can now see a pathway to large error-corrected quantum computers. The building blocks are in place.” In other words, reaching 6,100 coherent qubits suggests that the fundamental pieces needed for scaling up quantum machines are here. The long-standing question had been: can we get both more qubits and better qubits at the same time? This experiment shows that we can – it’s no longer a choice between quantity and quality.

Why Does Scale Matter? Toward Quantum Error Correction

Qubits are error-prone. They can lose their quantum state (decohere) or undergo faulty operations due to noise. In classical computers, we combat errors with redundancy, for example error-correcting codes in memory or simply keeping copies of bits. In quantum computing, we can’t just copy qubits willy-nilly – the no-cloning theorem forbids making identical copies of an arbitrary unknown quantum state. Instead, we use a strategy called quantum error correction (QEC), which distributes quantum information across multiple physical qubits so that if some of them go astray, the error can be detected and corrected using the others. This process is very resource-intensive: a single “logical” qubit (an error-corrected qubit that behaves reliably) may require dozens or even thousands of physical qubits working in concert.
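A classical analogy makes the redundancy argument concrete. The sketch below uses an n-bit repetition code with majority voting, the simplest code that corrects bit flips. It is not the code the Caltech team proposes, and real quantum codes must also handle phase errors and extract error information without reading out the encoded state directly. Assuming each copy flips independently with probability p, the logical error rate is the probability that more than half the copies flip.

```python
from math import comb

def logical_error_rate(p: float, n: int) -> float:
    """Probability that majority voting over n copies (n odd) returns the wrong bit,
    assuming each copy flips independently with probability p."""
    if n % 2 == 0:
        raise ValueError("use an odd number of copies so the majority vote is unambiguous")
    k_min = n // 2 + 1  # flips needed to outvote the correct copies
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))

if __name__ == "__main__":
    p = 1e-3  # illustrative physical error rate (assumption)
    for n in (1, 3, 5, 7):
        print(f"n={n}: logical error rate ~ {logical_error_rate(p, n):.2e}")
```

Each additional pair of copies suppresses errors further, but only while p is below 1/2. Quantum codes have an analogous threshold, which is why redundancy across many high-quality physical qubits pays off.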

That’s why having thousands of qubits available is so significant. Researchers estimate that a useful, general-purpose quantum computer likely needs on the order of a million physical qubits to do highly complex tasks with full error correction. Even for nearer-term demonstrations of QEC, we’re talking thousands of qubits to encode a handful of logical qubits with the necessary redundancy. Until now, no experimental platform had the sheer numbers to even attempt QEC at the scale of thousands of qubits. The Caltech result changes that. As graduate student Elie Bataille noted, “The next big milestone for the field is implementing quantum error correction at the scale of thousands of physical qubits, and this work shows that neutral atoms are a strong candidate to get there.” The array of 6,100 atoms could, for example, be conceptually divided into regions that each act as an error-correcting code, or used to encode a few logical qubits with a very robust code.
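To put 6,100 qubits in perspective, here is a rough count of how many logical qubits could fit if the array were tiled with rotated surface-code patches. The surface code is an assumption chosen because it is the most widely studied QEC code; the article does not say which code the team would use. A distance-d rotated surface code uses d² data qubits plus d² − 1 measurement qubits. The count ignores qubits needed for routing, shuttling zones, or operations between patches, so it is an upper bound.

```python
def physical_per_logical(d: int) -> int:
    """Physical qubits in one distance-d rotated surface-code patch: d^2 data + (d^2 - 1) ancilla."""
    return 2 * d * d - 1

def max_logical_qubits(n_physical: int, d: int) -> int:
    """Upper bound on logical qubits if patches are packed with no other overhead."""
    return n_physical // physical_per_logical(d)

if __name__ == "__main__":
    N = 6_100  # array size from the article
    for d in (3, 5, 7, 9, 11):
        print(f"d={d:2d}: {physical_per_logical(d):4d} physical per logical "
              f"-> at most {max_logical_qubits(N, d)} logical qubits")
```

A distance-d code corrects up to (d − 1)/2 errors, so the trade-off runs from a few hundred weakly protected logical qubits (d = 3) down to a couple of dozen strongly protected ones (d = 11).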

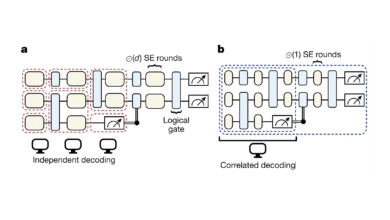

In fact, the authors outline a “zone-based” quantum computing architecture for their array. The idea is to partition the large array into zones that can process information in parallel and then connect via shuttling atoms between zones. They demonstrated the key ingredients of this: moving qubits around (pick-up and drop-off operations) while preserving coherence. It’s akin to having modules on a chip that you can link by sending qubits between them as needed – except here the modules and links are all made of mobile atoms and laser beams. By characterizing these moves with a technique called interleaved randomized benchmarking, they verified that transport didn’t degrade the qubits significantly. This modular, movable approach could make error correction more efficient, as you can bring qubits together for multi-qubit operations (like syndrome measurements in error-correcting codes) and then spread them out to avoid unwanted interactions.
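Interleaved randomized benchmarking compares two decay curves: a reference sequence of random Clifford gates, and the same sequence with the operation under test (here, a transport move) inserted after each gate. Both survival curves are fit to A·p^m + B, and the standard estimate of the interleaved operation's error is r = (d − 1)(1 − p_int/p_ref)/d, with d = 2 for a single qubit. The sketch below generates noiseless synthetic data from made-up decay constants (not the paper's values) and recovers the error with a simple log-linear fit, assuming B = 0.5 is known; a real analysis would fit A, p, and B jointly and report uncertainties.

```python
import math

def synthetic_survival(lengths, p, a=0.5, b=0.5):
    """Ideal RB survival probabilities a * p**m + b for each sequence length m."""
    return [a * p**m + b for m in lengths]

def fit_decay(lengths, survival, b=0.5):
    """Estimate p by linear regression of log(survival - b) against m (assumes b is known)."""
    xs = list(lengths)
    ys = [math.log(s - b) for s in survival]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return math.exp(slope)

def interleaved_error(p_ref, p_int, dim=2):
    """Standard interleaved-RB estimate of the error of the interleaved operation."""
    return (dim - 1) * (1 - p_int / p_ref) / dim

if __name__ == "__main__":
    lengths = [1, 50, 100, 200, 400, 800]
    P_REF, P_INT = 0.9996, 0.9992  # illustrative decay constants (assumptions)
    p_ref = fit_decay(lengths, synthetic_survival(lengths, P_REF))
    p_int = fit_decay(lengths, synthetic_survival(lengths, P_INT))
    print(f"estimated error per interleaved operation: {interleaved_error(p_ref, p_int):.2e}")
```

The ratio p_int/p_ref isolates the extra decay caused by the interleaved operation, which is what lets this method attribute an error rate to the transport step alone rather than to the whole gate sequence.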

All told, having “quantity and quality” together opens the door to actually using error correction rather than just talking about it. With thousands of high-coherence, high-fidelity qubits, one can start experimenting with encoding logical qubits and running small algorithms in a fault-tolerant way. This will be the crucial next step to prove that a quantum computer can scale beyond the toy-problem stage. The Caltech team is already looking ahead to entangling their 6,100 qubits as the next milestone, because entanglement between qubits is necessary to do any non-trivial computation or error correction routine. So far, the array has been used to store qubit states and move them around, but not yet to perform entangling operations across the whole system. The researchers plan to achieve that in the near future. Given that entanglement has been shown in systems of even larger numbers of particles in other contexts, there’s optimism that it’s feasible here too. If and when they succeed, we’ll have a fully programmable quantum simulator/computer with thousands of qubits on our hands – something that was science fiction not long ago.

Does This Bring Us Closer to Q-Day?

Whenever a quantum computing breakthrough like this happens, it raises the question of “Q-Day.” Q-Day (or Y2Q) is the hypothetical day when a quantum computer is built that’s powerful enough to break our current cryptographic systems – for example, cracking the RSA encryption that secures much of the internet. Cybersecurity analysts worry about this because quantum algorithms (like Shor’s algorithm) could factor large numbers super-polynomially faster than the best known classical methods, undermining public-key encryption.
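To make the threat concrete: Shor's algorithm reduces factoring N to finding the order r of a base a modulo N (the smallest r with a^r ≡ 1 mod N). Only that order-finding step needs a quantum computer; the rest is classical number theory. The toy sketch below brute-forces the order classically, which takes exponential time and is exactly the part a large error-corrected quantum computer would speed up, so it only works for tiny N such as 15.

```python
from math import gcd

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (brute force; the step Shor's algorithm does quantumly)."""
    if gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_postprocess(a: int, n: int):
    """Classical part of Shor's algorithm: turn the order of a mod n into factors of n, if lucky."""
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: retry with a different a
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None  # a^(r/2) is -1 mod n: retry with a different a
    return gcd(x - 1, n), gcd(x + 1, n)

if __name__ == "__main__":
    print(shor_postprocess(7, 15))  # order of 7 mod 15 is 4, giving factors 3 and 5
```

For RSA-2048 the modulus has about 617 decimal digits, far beyond any brute-force order search, which is why the quantum order-finding step, and the error-corrected hardware to run it, is the whole game.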

So, does the achievement of 6,100 high-quality qubits materially move up the timeline for Q-Day?

The short answer: It’s an important step, but we’re not there yet. Caltech’s 6,100 qubit device is a huge leap in scale, but it’s not (at this stage) a fully operational quantum computer capable of running complex algorithms like Shor’s. In fact, as noted, these atoms have not even been entangled with each other yet for computation – they’ve demonstrated the “memory” of qubits and the ability to move them, but not the multi-qubit logic operations needed for something like factoring a number.

To threaten RSA-2048 encryption, experts estimate a quantum computer would need on the order of thousands of logical qubits and an error-corrected operation sequence running for many hours or days. That, in turn, could require millions of physical qubits if error rates are not dramatically improved. No current quantum system is anywhere close to those requirements. For example, a recent analysis by Google Quantum AI suggests that breaking a 2048-bit RSA key in under a week would still require roughly a million qubits with very low error rates, even with improved algorithms – a sharp reduction from earlier estimates of ~20 million, but still far above 6,100. Moreover, that hypothetical machine would need to run continuously for five days with error-corrected operations throughout, something far beyond today’s capabilities (the Caltech array can maintain coherence for 13 seconds, not days).
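The size of the gap can be quantified using only the figures quoted above (about a million qubits, roughly five days of runtime, about 13 seconds of coherence). The runtime comparison is only a rough proxy: in an error-corrected machine, individual physical qubits do not need to stay coherent for the whole computation, because errors are continually detected and corrected.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def scale_gap(required: float, available: float) -> float:
    """How many times larger the requirement is than what is available."""
    return required / available

if __name__ == "__main__":
    qubits_needed, qubits_demo = 1_000_000, 6_100  # figures quoted in the article
    runtime_s = 5 * SECONDS_PER_DAY                 # ~5-day run, as quoted
    coherence_s = 13                                # ~13 s coherence, as quoted
    print(f"qubit gap:   ~{scale_gap(qubits_needed, qubits_demo):.0f}x")
    print(f"runtime gap: ~{scale_gap(runtime_s, coherence_s):,.0f}x")
```

The qubit count is the smaller of the two gaps (a factor of about 160), which is one reason a demonstrated path to scale draws so much attention; the sustained, error-corrected operation is the harder part.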

However, the significance of this breakthrough for Q-Day is that it underscores a trend: the quantum computing world is rapidly checking off the necessary milestones on the path to a cryptography-breaking machine. We now have a plausible hardware platform that can scale to tens of thousands of qubits. We have seen quantum error correction codes demonstrated on small scales, and with 6,100 atoms, scaling up those codes becomes conceivable. Algorithmic advances (like the Google study mentioned above) are continually lowering the qubit counts and time needed for attacks. And just recently, other research has reported gate fidelities as high as 99.99999% (one error in 10 million) on certain platforms; if error rates that low can be reached across all operations, they would hugely reduce the overhead for error correction.
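Why would such low error rates matter so much for overhead? A commonly quoted rule of thumb for the surface code is that the logical error rate scales roughly as p_L ≈ 0.1·(p/p_th)^((d+1)/2), with threshold p_th around 1%. Both the prefactor and the threshold are assumptions here (they depend on the noise model and decoder), and the model presumes every operation reaches the given error rate, not just one gate type. The sketch finds the smallest code distance that meets a hypothetical target logical error rate and the resulting patch size.

```python
def logical_error(p: float, d: int, p_th: float = 1e-2, prefactor: float = 0.1) -> float:
    """Rule-of-thumb surface-code logical error rate per round (heuristic, not exact)."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)

def min_distance(p: float, target: float, p_th: float = 1e-2) -> int:
    """Smallest odd distance whose heuristic logical error rate is at or below target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below threshold")
    d = 3
    while logical_error(p, d, p_th) > target:
        d += 2
    return d

if __name__ == "__main__":
    target = 5e-13  # illustrative target logical error rate (assumption)
    for p in (1e-3, 1e-4, 1e-7):
        d = min_distance(p, target)
        print(f"p={p:.0e}: d={d}, ~{2 * d * d - 1} physical qubits per logical qubit")
```

Under these assumptions, dropping the physical error rate from 10⁻³ to 10⁻⁷ shrinks the required distance from 23 to 5, cutting each logical qubit from about a thousand physical qubits to a few dozen.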

All these pieces are like parts of a puzzle coming together. I previously predicted that a quantum computer capable of breaking RSA-2048 will likely exist by around 2030 if current trends hold. Admittedly, it was a somewhat bullish prediction that assumed we’d have certain breakthroughs. But with the papers released just this week, such as this one and the Transversal Algorithmic Fault Tolerance (AFT) paper I also covered today, my prediction is looking increasingly realistic.

So Caltech’s 6,100-qubit array does raise the threat level slightly: it shows that one big hurdle – having enough qubits – might be overcome sooner than many expected. It is a proof-of-concept that you can assemble thousands of controllable qubits, which is a prerequisite for attacking cryptography. That said, a lot of work remains between this experiment and a code-breaking quantum computer. The atoms will need to be entangled and made to perform universal quantum gates reliably. The system would likely need orders of magnitude more qubits (or at least an integrated multi-array network of such devices) to run something like Shor’s algorithm with full error correction. And it must do so faster than decoherence or noise can spoil the process. None of these are trivial leaps.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.