Capability B.2: Syndrome Extraction (Error Syndrome Measurement)


This piece is part of a ten‑article series mapping the capabilities needed to reach a cryptanalytically relevant quantum computer (CRQC). For definitions, interdependencies, and the Q‑Day roadmap, begin with the overview: The CRQC Quantum Capability Framework.

(Updated in Nov 2025)

(Note: This is a living document. I update it as credible results, vendor roadmaps, or standards shift. Figures and timelines may lag new announcements; no warranties are given; always validate key assumptions against primary sources and your own risk posture.)

Introduction

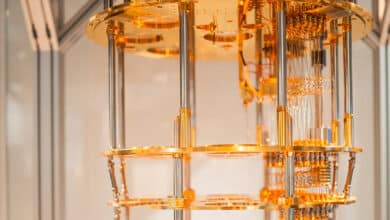

Quantum syndrome extraction – also called error syndrome measurement – is the process of measuring collective properties of qubits to detect errors without destroying the encoded quantum information. It is essentially the sensor mechanism of a quantum error-correcting code (Capability B.1), analogous to measuring parity checks in a classical error-correcting code. In a stabilizer code (the leading framework for quantum error correction, introduced by Gottesman in the late 1990s), the code’s state is defined by a set of multi-qubit parity constraints (stabilizers). Syndrome extraction means measuring those stabilizers’ values to see if an error has occurred, but crucially without revealing any information about the logical data. In other words, we learn where an error happened, not what the encoded qubit’s value is.
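To make the "learn where, not what" point concrete, here is a minimal state-vector sketch in plain Python (no quantum SDK assumed). It measures the two-qubit parity Z₀Z₁ of a state α|00⟩ + β|11⟩ by entangling an ancilla through two CNOTs, the standard textbook parity-check circuit. The function names and qubit ordering are illustrative choices, not taken from any particular experiment. The ancilla outcome is the same for every α and β, so it reveals nothing about the encoded amplitudes, yet it flips deterministically when a bit-flip error hits one data qubit.

```python
import math


def _mask(qubit: int, n: int) -> int:
    """Bit mask for `qubit` in an n-qubit basis index (qubit 0 is the most significant bit)."""
    return 1 << (n - 1 - qubit)


def apply_x(state, qubit, n):
    """Pauli X (bit flip) on `qubit`: swaps the amplitudes of paired basis states."""
    out = [0j] * len(state)
    for i, amp in enumerate(state):
        out[i ^ _mask(qubit, n)] = amp
    return out


def apply_cnot(state, control, target, n):
    """CNOT: flips `target` in every basis state where `control` is 1."""
    out = [0j] * len(state)
    for i, amp in enumerate(state):
        j = i ^ _mask(target, n) if i & _mask(control, n) else i
        out[j] = amp
    return out


def zz_syndrome(alpha, beta, flipped_qubit=None):
    """Measure Z0 Z1 on alpha|00> + beta|11> with ancilla qubit 2 (initially |0>).

    Returns (probability that the ancilla reads 1, final 3-qubit state vector).
    """
    n = 3
    state = [0j] * 2**n
    state[0b000] = alpha  # data |00>, ancilla |0>
    state[0b110] = beta   # data |11>, ancilla |0>
    if flipped_qubit is not None:  # optional bit-flip error on a data qubit
        state = apply_x(state, flipped_qubit, n)
    state = apply_cnot(state, 0, 2, n)  # ancilla accumulates the parity of qubit 0 ...
    state = apply_cnot(state, 1, 2, n)  # ... and of qubit 1
    p_one = sum(abs(a) ** 2 for i, a in enumerate(state) if i & _mask(2, n))
    return p_one, state


for alpha, beta in [(1 / math.sqrt(2), 1 / math.sqrt(2)), (0.6, 0.8)]:
    p_no_error, _ = zz_syndrome(alpha, beta)
    p_error, _ = zz_syndrome(alpha, beta, flipped_qubit=0)
    print(f"alpha={alpha:.3f}: P(anc=1) no error {p_no_error:.2f}, after X on qubit 0 {p_error:.2f}")
```

Both amplitude choices print 0.00 without an error and 1.00 after the injected bit flip, and in the error-free case the data amplitudes come out untouched.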

This capability is critical for fault-tolerant quantum computing: it provides the real-time “heartbeat” of error correction cycles, allowing errors to be identified and corrected continuously before they accumulate. If syndrome extraction fails or lags, errors slip past and can rapidly snowball into logical faults, causing the quantum computation to crash.

At a high level, syndrome extraction works by entangling data qubits with dedicated ancilla (syndrome) qubits, then measuring the ancillas. The measurement outcomes – a stream of classical syndrome bits – indicate the parity of certain qubit groupings. A change in a given parity (often called a syndrome flip) between successive rounds signals that some qubit in that group suffered an error. By design, these parity measurements are quantum non-demolition: they do not collapse the logical state of the data qubits. For example, in a surface code (a popular 2D stabilizer code), one continuously measures X-type and Z-type parity checks on patches of four qubits; a flip in one of those check results tells the decoder that an error likely hit a nearby qubit, without revealing any information about the logical 0/1 encoded in the surface code qubits.
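Decoders usually work with these round-to-round changes (often called detection events) rather than with raw syndrome bits. A minimal sketch, assuming the data start in a code state whose syndrome is all zeros and that each round is a list of 0/1 stabilizer outcomes (the function name is illustrative). A data error that flips a stabilizer shows up as a single detection event followed by a persistently changed syndrome, whereas a one-off measurement error produces a pair of events in consecutive rounds.

```python
def detection_events(rounds):
    """XOR each round of syndrome bits with the previous round.

    Assumes the round before the first one was all zeros (a freshly prepared code state).
    """
    previous = [0] * len(rounds[0])
    events = []
    for current in rounds:
        events.append([a ^ b for a, b in zip(current, previous)])
        previous = current
    return events


# Three stabilizers measured over four rounds.
data_error = [[0, 0, 0], [0, 0, 0], [0, 1, 0], [0, 1, 0]]  # qubit error before round 2 flips check 1
meas_error = [[0, 0, 0], [0, 0, 1], [0, 0, 0], [0, 0, 0]]  # check 2 misread once, in round 1

print(detection_events(data_error))  # one event: round 2, check 1
print(detection_events(meas_error))  # two events: rounds 1 and 2, check 2
```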

This ability to “watch for errors” while preserving quantum superpositions is what makes quantum error correction possible. Syndrome extraction typically must be repeated in rapid, ongoing cycles throughout the computation – effectively setting the clock speed of a fault-tolerant quantum processor. Each cycle might be on the order of a microsecond in superconducting qubit platforms, and would need to run hundreds of billions of times in a large-scale computation (e.g. on the order of 10¹¹ cycles for a cryptography-breaking run spanning several days).
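The cycle count is simply run length divided by cycle time. A quick check, assuming a 1 µs cycle and a few example run lengths (the specific durations are illustrative):

```python
SECONDS_PER_DAY = 86_400


def cycles_needed(days: float, cycle_time_s: float = 1e-6) -> float:
    """Number of QEC cycles in a run of `days` at a fixed cycle time (default 1 µs)."""
    return days * SECONDS_PER_DAY / cycle_time_s


for days in (1, 3, 7):
    print(f"{days} day(s) at 1 µs per cycle: {cycles_needed(days):.1e} cycles")
```

A one-day run comes to about 8.6 × 10¹⁰ cycles and a three-day run to about 2.6 × 10¹¹, i.e. a few hundred billion cycles for a multi-day computation.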

Achieving fast, reliable, and scalable syndrome extraction is therefore a cornerstone capability on the road to a cryptographically relevant quantum computer (CRQC).

Foundations of Syndrome Extraction in QEC

The concept of measuring an error syndrome without collapsing quantum data was first laid out in the pioneering quantum error correction (QEC) proposals of the mid-1990s. In 1995, Peter Shor introduced the first QEC code, showing that by encoding a qubit into nine physical qubits one could detect and correct single-qubit errors – provided you measure certain multi-qubit parity checks (syndromes) instead of the qubits directly. Shor’s scheme entailed using an ancilla “cat state” to probe the parity of data qubits, extracting error information while leaving the superposition intact.

Shortly after, Andrew Steane presented the $$[[7,1,3]]$$ code (7 physical qubits encoding 1 logical qubit, correcting any 1 error) which also relies on measuring syndromes using ancilla qubits and cleverly chosen circuits to avoid disturbing the encoded state.

These early theoretical works established the principle that ancillary syndrome measurements are essential to QEC, and showed it is possible in theory to perform such measurements fault-tolerantly (i.e. in a way that keeps errors from spreading).

The stabilizer formalism (Gottesman 1997) further generalized how to design syndrome measurements for any QEC code. In stabilizer codes, the “stabilizers” are simply the parity-check operators – typically tensor products of Pauli matrices – that define the code space. Measuring each stabilizer yields a 0 or 1 syndrome bit indicating whether the parity of that group of qubits is even or odd, that is, whether an odd number of errors that anticommute with that stabilizer has occurred. A string of all-zero outcomes means no error is detected, while any 1s pinpoint which stabilizer checks have flipped, information the decoder uses to infer the error’s location. Importantly, all valid code states yield the same stabilizer results (e.g. “even parity”); thus these measurements reveal no logical information.
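For CSS codes such as Steane’s $$[[7,1,3]]$$ code, this parity-check picture is literal. The Z-type stabilizers are the rows of the classical [7,4,3] Hamming parity-check matrix, so the syndrome of a single X error is the binary index of the affected qubit. A minimal sketch of that lookup, tracking only X errors (Z errors are handled the same way by the X-type stabilizers, which use the same matrix); the helper names are hypothetical:

```python
# Rows of the [7,4,3] Hamming parity-check matrix: column j (0-based) is the binary form of j + 1.
# For the Steane code these rows give the supports of the three Z-type stabilizers.
H = [[((j + 1) >> (2 - r)) & 1 for j in range(7)] for r in range(3)]


def syndrome(x_error_qubits):
    """Syndrome bits from the Z-type stabilizers for X errors on the given qubits."""
    return [sum(H[r][q] for q in x_error_qubits) % 2 for r in range(3)]


def locate_single_error(bits):
    """Read the syndrome as a binary number; 0 means no error detected."""
    index = bits[0] * 4 + bits[1] * 2 + bits[2]
    return None if index == 0 else index - 1


print(syndrome({4}), locate_single_error(syndrome({4})))  # [1, 0, 1] 4
print(syndrome(range(7)))  # logical X on all seven qubits: [0, 0, 0]
```

The second line is the point made above: a logical operator commutes with every stabilizer, so measuring the stabilizers leaves the encoded value hidden.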

The theory of fault tolerance (Shor 1996; Gottesman 1998) developed methods like ancilla verification and gate gadget protocols to ensure that even the syndrome extraction circuits themselves don’t introduce correlated errors that could corrupt the encoded qubit. These foundational papers kicked off a vast research effort into how to best perform syndrome extraction in various physical platforms and codes.
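One reason the extraction circuit needs such care is that a single fault on an ancilla can spread to several data qubits through the entangling gates. A minimal sketch, assuming a weight-4 X-type stabilizer measured with the ancilla (prepared in |+⟩) as the control of four CNOTs, one per data qubit, and tracking only the X part of the error (a CNOT copies an X on its control onto its target); the function is illustrative:

```python
def propagate_ancilla_x_fault(fault_after: int, n_data: int = 4):
    """Data-qubit X errors caused by one X fault on the ancilla mid-circuit.

    The ancilla controls CNOTs onto data qubits 0..n_data-1 in order; the fault
    strikes after `fault_after` of those CNOTs. Returns a 0/1 list per data qubit.
    """
    data_x = [0] * n_data
    ancilla_x = 0
    for step in range(n_data):
        if step == fault_after:
            ancilla_x = 1          # the single fault
        data_x[step] ^= ancilla_x  # CNOT copies an X on the control onto the target
    return data_x


for k in range(4):
    print(k, propagate_ancilla_x_fault(k))
```

A fault after two CNOTs leaves the weight-2 error [0, 0, 1, 1] (a "hook" error) from a single fault; a fault after one CNOT gives a weight-3 error that is equivalent, up to the stabilizer itself, to a weight-1 error. Techniques such as verified ancillas, flag qubits, and careful gate ordering exist to keep such faults within what the code can correct.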

On the experimental side, demonstrating syndrome extraction has been a long-standing challenge. Early prototypes of QEC in the late 1990s were done in NMR systems (liquid-state nuclear magnetic resonance) – essentially ensemble quantum computers – which showed basic error detection, but without true real-time syndrome readout (and not in a scalable architecture).

The first bona fide syndrome measurements on quantum hardware came in the 2000s and early 2010s as lab technology advanced. Notably, in 2011 a team at Innsbruck led by Rainer Blatt achieved the first repeated QEC cycles in a trapped-ion system. They used three ion qubits in a phase-flip code (a simple repetition code) along with an ancilla, and performed up to three consecutive error-correction cycles, periodically measuring the syndrome ancilla and resetting it to catch and correct introduced phase-flip errors. This was a landmark because it proved errors could be detected and fixed “on the fly” multiple times in a running quantum register, rather than just one-off error detection.

Around the same time, in 2012, Yale researchers led by Robert Schoelkopf demonstrated the first quantum error correction in a solid-state system (superconducting transmon qubits). They implemented a three-qubit code (correcting single bit-flips) by encoding a qubit across two data qubits and one ancilla qubit; the ancilla was measured to obtain the error syndrome and then used to correct the error on the fly. This experiment showed that superconducting circuits – an “artificial atom” platform – could perform a nondestructive parity measurement and use it to preserve quantum information. These achievements were basic (handling only one type of error and just a few rounds), but they validated the core idea of syndrome extraction outside of purely theoretical proposals.
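The logic of these three-qubit codes fits in a few lines. A minimal sketch, assuming independent bit flips with probability p on each qubit and perfectly measured syndromes Z₀Z₁ and Z₁Z₂ (an idealization the real experiments did not enjoy). It enumerates all error patterns, applies a lookup-table correction, and returns the exact logical failure probability:

```python
from itertools import product

# Syndrome (Z0Z1, Z1Z2) -> qubit to flip back; None means "no correction".
LOOKUP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correction_fails(errors):
    """Apply the lookup-table correction; True if a logical bit flip remains."""
    syndrome = (errors[0] ^ errors[1], errors[1] ^ errors[2])
    residual = list(errors)
    qubit = LOOKUP[syndrome]
    if qubit is not None:
        residual[qubit] ^= 1
    return residual == [1, 1, 1]  # the only other possible residual is [0, 0, 0]


def logical_error_rate(p):
    """Exact failure probability, summed over all 2**3 bit-flip patterns."""
    total = 0.0
    for errors in product((0, 1), repeat=3):
        weight = sum(errors)
        if correction_fails(errors):
            total += p**weight * (1 - p) ** (3 - weight)
    return total


for p in (0.1, 0.01):
    print(p, logical_error_rate(p), 3 * p**2 - 2 * p**3)
```

The two columns agree up to floating-point rounding: the encoded error rate is 3p² − 2p³, which is below p whenever p < 1/2. That is the basic argument for syndrome-based correction.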

Progress Through Key Demonstrations

Research into syndrome extraction has dramatically accelerated in the past decade, with multiple hardware platforms and error-correcting codes showing incremental progress. Below are some key milestones and demonstrations illustrating how this capability has matured:

2011 (Trapped Ions, Innsbruck)

As mentioned, Schindler et al. implemented repetitive syndrome extraction for a phase-flip code on three trapped-ion qubits. They showed that using a high-fidelity ancilla measurement and fast qubit reset, they could detect and correct errors over three successive cycles, extending the effective coherence of the qubit. This was the first evidence that continuous error correction cycles were feasible in principle on real hardware.

2014 (Trapped Ions, Innsbruck)

Nigg et al. realized a $$[[7,1,3]]$$ color code (a more complex QEC code) using 7 trapped ions, performing syndrome measurements for both bit-flip and phase-flip error detection on a single round. This demonstrated the ability to extract multiple stabilizers’ syndromes in parallel and detect arbitrary single-qubit errors, a stepping stone toward fault tolerance.

While the experiments were still one-shot (not yet repeated indefinitely), they proved that small stabilizer code “patches” could be realized and the syndrome information used to identify errors.

2015 (Superconducting Qubits, IBM)

Córcoles et al. at IBM reported a milestone experiment: a 2×2 lattice of superconducting transmon qubits performing simultaneous syndrome extraction for a distance-2 surface code tile. In this Nature Communications paper, they measured a pair of stabilizers (one “Z-type” parity check and one “X-type”) on a 4-qubit circuit using two ancilla qubits. This setup is essentially a primitive unit of the surface code, and they were able to detect arbitrary single-qubit errors in a non-demolition manner, preserving an encoded two-qubit entangled state despite error injections. The significance was showing that parallel stabilizer measurements could happen in a superconducting device without catastrophic cross-talk, and that the error syndrome stream (two bits per cycle) reliably indicated where errors occurred while the logical entanglement remained intact. It addressed a piece of the “fault-tolerant syndrome extraction” challenge by showing QND measurements on a small planar code. However, it was still an error-detection code (they caught errors but did not correct them in real time, instead verifying via post-processing) and only ran for a few cycles in a row.

2018-2020

Throughout the late 2010s, both academic and industry labs pushed syndrome extraction further. For instance, researchers implemented small Bacon-Shor codes (a type of subsystem code) on 9 or 13 qubits in ion traps and superconducting devices, demonstrating fault-tolerant syndrome measurement circuits. A 2019 experiment on a 4-qubit superconducting device showed autonomous syndrome extraction via continuous feedback, and others demonstrated flag qubits (extra ancillas that catch ancilla errors) to maintain fault tolerance during extraction. These efforts incrementally improved measurement fidelity and showed pieces of the full QEC workflow (e.g. preparing logical states, doing a syndrome measurement, and even applying correction operations conditioned on the syndrome).

2021 (Trapped Ions, Duke/UMD/IonQ)

A collaboration led by Laird Egan and Chris Monroe demonstrated fault-tolerant control of a logical qubit using 13 trapped ions and the [[9,1,3]] Bacon-Shor code. This was a breakthrough because they implemented fault-tolerant syndrome extraction circuits that significantly reduced error rates at the logical level. They showed that by using verified ancilla preparation and cross-checks (a fault-tolerant measurement design), the error syndromes could be extracted with much lower risk of inducing additional errors. In comparing their fault-tolerant syndrome measurement to a simpler (non-FT) method, the logical error rates were markedly lower. In fact, they achieved an average logical state preparation and measurement error of just 0.6% – far better than without error correction – and even performed a few logical gate operations with error rates (~0.3%) below the physical qubits’ error rates. Although their syndrome extraction was not yet in a fully continuous feedback loop (corrections were applied in post-processing), this experiment showed that quantum error correction can actually suppress errors when done in a fault-tolerant way. It was an encouraging validation that the whole syndrome extraction and decoding procedure can work in a real device to enhance reliability, not just catch errors.

2022 (Superconducting Qubits, ETH Zurich)

In 2022, a team led by Andreas Wallraff used a 17-qubit superconducting circuit to demonstrate repeated quantum error correction in a distance-3 surface code. They encoded one logical qubit into 9 data qubits and had 8 ancilla qubits continuously measuring the stabilizers (four X-type and four Z-type parity checks) in a rapid cycle of 1.1 microseconds per round. They ran the error-correction loop for up to 50 cycles in sequence, decoding the syndromes using a minimum-weight perfect matching (MWPM) algorithm, and showed that the logical qubit’s state could be preserved with about 97% fidelity per cycle (≈3% error per cycle). This was a major step: fast, repetitive syndrome extraction on a two-dimensional code operated near the threshold. In their experiment, every 1.1 µs cycle produced 8 syndrome bits, which were processed (in this case, by an offline decoder) to correct errors; a logical error rate of only ~3% per cycle indicated that the physical error rates were approaching the regime where scaling up the code could exponentially suppress errors. The team noted that this ~3% figure, which covers both bit-flip and phase-flip errors, applies to runs post-selected to discard cases in which a qubit leaked out of the computational subspace.

This ETH Zurich result showed fast, parallel syndrome extraction on a larger patch of qubits than ever before, and crucially, that they could handle both X and Z error syndromes simultaneously at high speed. It provided a taste of what a “heartbeat” QEC cycle might look like in a future large quantum computer.

2023 (Superconducting, IBM)

IBM’s quantum team demonstrated multi-round subsystem QEC on their 127-qubit processor, using a heavy-hexagon code (a subsystem code that combines surface-code and Bacon-Shor features, adapted to IBM’s heavy-hexagon qubit connectivity). In this experiment, Sundaresan et al. ran several rounds of syndrome extraction on a distance-3 heavy-hexagon logical qubit, with real-time processing in the loop. They used a combination of matching decoders and a maximum likelihood decoder to interpret syndromes, and importantly they performed real-time feedback: after each 0.85 µs cycle, they conditionally reset the syndrome qubits based on the measurement outcomes before proceeding to the next cycle. This conditional reset (a simple form of real-time correction) is an ingredient for a truly autonomous QEC system. The reported logical error rates per cycle in this experiment were on the order of a few percent (around 4% per syndrome round for Z errors, and ~8% for X in their particular device, when using post-selection to remove detected leakage). While the logical error was still above the physical qubit error (~1% or so), the key accomplishment was executing multiple rounds with active feedback and verifying that any single fault in the circuit could be corrected by the code (thus meeting the basic definition of fault-tolerance). It was also a demonstration of integrating classical and quantum processes: the system had to read out 13 syndrome and flag qubits, process that classical information in under a microsecond, and feed back a result to reset qubits – highlighting the interplay between syndrome extraction speed and decoding electronics.

2023 (Superconducting, Google)

Perhaps the most publicized syndrome extraction milestone to date came from Google’s Quantum AI team in 2023. They reported a distance-5 surface code logical qubit that outperformed a distance-3 code, the first definitive experimental proof of the error-correcting “threshold” principle in a scalable code. In their Nature paper, Google researchers used 49 physical qubits (25 data + 24 ancillas) to run a distance-5 surface code for up to 25 cycles of error correction. The syndrome extraction involved 24 simultaneous parity measurements per cycle, executed via an optimized sequence of two-qubit gates and ancilla readouts, with a cycle time of around a microsecond. The result: the distance-5 code beat an average over smaller distance-3 codes both in logical error probability over 25 cycles and in logical error per cycle, reaching about 2.914% per cycle versus about 3.028% per cycle for distance-3.

This narrow but clear improvement with a larger code is a “critical step towards scalable quantum error correction” – it validates that increasing the code size (and thus performing more syndrome measurements on more qubits) can actually lower the logical error rate, as theory predicts. Achieving this required extremely precise syndrome extraction: every one of the 24 stabilizer measurements had to be done with enough fidelity that the benefit of extra redundancy wasn’t lost. Google’s team optimized the circuit (using a variant called the “XZZX” surface code to balance X and Z error rates) and tamed crosstalk and leakage sufficiently to realize a slight gain. While the improvement was modest (a 4% relative reduction in error rate), it was a watershed moment showing that the whole pipeline – fast parallel syndrome measurements, decoding, and logical error tracking – can work at scale.
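The size of that gain is usually expressed as the suppression factor Λ, the ratio of the logical error at distance d to that at distance d + 2. A quick calculation with the two reported figures, 3.028% and 2.914%:

```python
eps_d3 = 0.03028  # reported logical error, distance-3 surface code
eps_d5 = 0.02914  # reported logical error, distance-5 surface code

suppression = eps_d3 / eps_d5            # Lambda: > 1 means the bigger code is better
relative_reduction = 1 - eps_d5 / eps_d3

print(f"Lambda = {suppression:.3f}")                     # about 1.04
print(f"relative reduction = {relative_reduction:.1%}")  # about 3.8%, the "4%" quoted above
```

In the idealized scaling picture Λ is roughly p_th/p (threshold over physical error rate), so a Λ this close to 1 means the device was only just below threshold; large suppression per step in distance needs physical error rates well below it.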

It also highlighted new challenges: for example, they observed that leakage (qubits getting stuck in non-computational states) caused correlated errors across cycles, underscoring the need for syndrome extraction to handle such non-idealities (possibly via leakage-reduction circuits or additional syndrome bits to detect leakage).

2025 (Superconducting, Caltech)

Very recently, in 2025, a group from Caltech (O. Painter et al.) demonstrated an alternative approach combining bosonic qubits with a small stabilizer code. They concatenated bosonic “cat” qubits (which have an intrinsic bias against certain errors) into a repetition code of distance 5, using ancilla transmons for syndrome extraction. In each QEC cycle, the ancillas measured parity checks between neighboring bosonic modes to catch phase flips, while the cat-qubit encoding passively suppressed bit flips. This hybrid approach still relies on rapid syndrome measurements, but benefits from longer-lived bosonic states. Notably, they achieved a logical error per cycle of ~1.65% for the distance-5 concatenated code, compared to ~1.75% for distance-3: a slight improvement as the code grew, indicating performance below the threshold.

This suggests that with clever engineering (like biasing the error channels and optimizing measurement), syndrome extraction can be efficient enough to yield net error suppression with relatively low overhead. It’s an exciting development that broadens the toolkit for syndrome measurement – showing that not only conventional two-level qubits but also multi-level bosonic systems can feed into fast stabilizer measurements.

Across these and other experiments, the trajectory is clear: researchers have progressed from single-round, few-qubit error detection to multi-round, dozens-of-qubits error correction. The fidelity of syndrome extraction has improved steadily (though it still has a long way to go), and the speed has reached roughly 1 µs per cycle in superconducting platforms.

We have seen syndrome extraction not just in surface codes, but also in other promising codes like the Bacon-Shor subsystem code (used in ion traps and IBM’s heavy-hexagon variant), the color code (in trapped ions), and tailored repetition or “XZZX” codes for biased noise. Each code has its own stabilizer measurement circuits, but they share the common challenge of needing many ancilla qubits and fast, repeated readout.

Notably, trapped-ion systems have achieved high measurement accuracy too (though typically with slower cycle times ~tens of microseconds), and even neutral atom arrays have begun exploring error syndrome measurements (e.g. a 2022 experiment used Rydberg atom arrays to detect phase-flip errors on a small qubit ensemble).

Superconducting qubits currently lead in raw speed of syndrome extraction, whereas trapped ions lead in measurement fidelity; how these will converge in a large-scale machine remains an open question.

Current Status and Challenges

Despite the impressive progress, today’s syndrome extraction capability is still far from what a full-scale cryptographically relevant quantum computer will demand. Present experiments have realized on the order of 10-50 consecutive QEC cycles (or in a couple of cases, up to a few hundred) before the process is manually stopped or the qubits decohere. This corresponds to at most a few milliseconds of sustained QEC operation in trapped-ion setups, or a few tens of microseconds in superconducting setups. In contrast, a CRQC might need hundreds of billions of cycles run in succession (imagine a 1 µs cycle running non-stop for days) to execute a long algorithm like Shor’s factoring on large numbers.

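We are therefore roughly 8-10 orders of magnitude away in terms of continuous operation duration (Capability D.3): several days is about 10⁸ times longer than a few milliseconds and about 10¹⁰ times longer than a few tens of microseconds. No current hardware can run autonomous error correction for more than a tiny fraction of the required time.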

In one illustrative benchmark, Google’s 2023 surface code experiment ran 25 cycles in a single shot – about 25 µs of protected operation. Extending that to even 1 second (10⁶ cycles) is far beyond the state of the art; it will require much better qubit stability and infrastructure to supply continuous cooling, gating, and readout without interruption.

Syndrome extraction speed and fidelity are key limiting factors. The cycle time in superconducting qubits is presently around 1 µs for a distance-3 code (about 1.1 µs in the ETH Zurich experiment and 0.85 µs in IBM’s). Achieving ~1 µs cycle time required significant engineering: fast local two-qubit gates (on the order of 30-50 ns each), nearly simultaneous operations across the chip, and high-bandwidth readout resonators and amplifiers to distinguish qubit states quickly.

Pushing this below 1 µs will be tough and might involve faster gate schemes (like flux pulses or improved microwave gates), or perhaps novel hardware like cryogenic classical processors closer to the qubits to reduce latency.

The fidelity of each syndrome measurement is also constrained – currently in the range of 95-99% for superconducting qubits’ readout. Any error in a syndrome bit (a false indication or a missed real error) can confuse the decoder. Experimenters often repeat trials many times and post-select or statistically analyze the results, but in a real CRQC there is no do-over – the syndrome info must be correct in real time. A missed syndrome is effectively an undetected error, which will propagate. Today’s best demonstrations still see 1-5% chance of error per syndrome measurement, which is only just around the threshold for some codes.
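To see why even a 1% syndrome error rate matters, consider how often at least one bit in a round is wrong. A rough sketch, assuming independent readout errors with probability q on each of the 24 stabilizer measurements of a distance-5 surface code round (real readout errors are neither independent nor uniform):

```python
def p_round_has_bad_bit(n_checks: int, q: float) -> float:
    """Probability that at least one of n_checks syndrome bits is misread."""
    return 1 - (1 - q) ** n_checks


for q in (0.01, 0.05):
    print(f"q = {q:.0%}: P(some bit wrong in a 24-check round) = {p_round_has_bad_bit(24, q):.3f}")
```

At q = 1% roughly one round in five contains a false flag (0.214), and at 5% most rounds do (0.708). A decoder therefore cannot trust any single snapshot, which is why surface-code decoders typically match detection events across many rounds and treat measurement errors as a separate, time-like error type.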

Crosstalk is another issue: when you perform hundreds of measurements in parallel (as a large code would), the measurements can inadvertently disturb neighboring qubits or qubits sharing readout electronics. This has been observed as “correlated errors” or unexplained syndrome flips beyond simple models. Solving this may require redesigned readout hardware (e.g. isolating resonators, better frequency multiplexing, or using error-mitigating readout protocols).

Another challenge is qubit reset. After you measure an ancilla, it is left in whatever state the measurement returned, which may be the excited state |1⟩; it must be reset to a known state (usually |0⟩) before the next cycle begins. In an ideal syndrome extraction, this reset happens rapidly and with high fidelity.

Some platforms, like superconducting qubits, use active feedback to do fast reset (for example, a brief microwave pulse or engineered dissipation); IBM’s 2023 experiment demonstrated conditional reset of syndrome qubits in real time. Even so, resets can fail or be slow, causing a lag in the cycle or leaving residual excited-state population behind, including population in states outside the computational subspace (known as leakage).

Trapped ions typically reset by optical pumping, which can be slower (hundreds of microseconds), making it harder to do rapid cycles – although their trade-off is that the ion memories are very stable, so slower cycles can still be effective.

For solid-state qubits, 0.1-1% probability of a failed reset or leakage per cycle is another error source that the syndrome extraction mechanism must handle (often by including extra “flag” measurements to detect a leaked qubit, as in the ETH and IBM studies).

Scaling up syndrome extraction also puts enormous demand on the classical processing (the decoder, Capability D.2). Every cycle produces a torrent of syndrome bits (for a million-qubit machine, up to roughly a million bits per microsecond!). These must be routed to decoders, processed, and a correction decision returned – all in real time to keep up with the next cycle. If the decoder can’t keep up, a backlog of syndrome data would render the corrections too late to be useful. This interdependency is why syndrome extraction is often cited in the same breath as decoder hardware. The faster you make the QEC cycle, the faster your decoder must run. Research is ongoing into ultra-fast decoding algorithms (like renormalization methods, parallelizable matching decoders, or analog decoding with specialized hardware) to meet this challenge. Some proposals even suggest doing part of the decoding in a distributed, asynchronous way so that not every syndrome needs to be processed within one cycle tick.
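The backlog problem shows up clearly in a toy queue model. A minimal sketch, assuming a single decoder that handles rounds strictly in order with a fixed time per round (real systems use windowed and parallel decoding, so this is only illustrative):

```python
def backlog(n_rounds: int, cycle_us: float, decode_us: float) -> int:
    """Rounds not yet decoded when round n_rounds has just been measured.

    Round k (1-based) becomes available at time k * cycle_us; the decoder
    starts it once both the data and the decoder are free.
    """
    decoder_free_at = 0.0
    finish_times = []
    for k in range(1, n_rounds + 1):
        start = max(decoder_free_at, k * cycle_us)
        decoder_free_at = start + decode_us
        finish_times.append(decoder_free_at)
    now = n_rounds * cycle_us
    return sum(1 for t in finish_times if t > now)


for decode_us in (0.8, 1.2):
    print(f"decode {decode_us} us/round, 1 us cycle:",
          [backlog(n, 1.0, decode_us) for n in (10, 100, 1000)])
```

With decoding faster than the cycle, only the round just measured is ever pending; when decoding is 20% slower than the cycle, the queue grows by about one round every six cycles, without bound.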

Likewise, the hardware’s qubit connectivity (Capability B.4) can impose additional overhead on syndrome extraction. If the architecture has limited connectivity (e.g. a 2D grid), stabilizer measurements may need extra steps such as SWAP gates or sequential ancilla operations to reach all the required qubits. This serializes parity checks and slows down each QEC cycle, adding error opportunities and effectively throttling the “heartbeat” clock speed. Conversely, a platform with more flexible connectivity can perform many parity checks in parallel, sustaining a faster cycle time.
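A back-of-the-envelope model shows how SWAP overhead stretches the cycle. It assumes four layers of two-qubit gates per surface-code cycle on native nearest-neighbor connectivity, a 40 ns two-qubit gate (within the 30-50 ns range quoted above), and that each SWAP costs three two-qubit gates; the single-qubit, readout, and reset durations are placeholder values, not figures from any specific device:

```python
def cycle_time_ns(swap_layers: int = 0,
                  two_qubit_layers: int = 4,
                  t_two_qubit: float = 40.0,
                  single_qubit_layers: int = 2,
                  t_single_qubit: float = 20.0,
                  t_readout: float = 500.0,
                  t_reset: float = 100.0) -> float:
    """Rough duration of one syndrome-extraction cycle in nanoseconds.

    Each SWAP layer is assumed to decompose into three two-qubit-gate layers.
    All default durations are illustrative placeholders.
    """
    gate_layers = two_qubit_layers + 3 * swap_layers
    return (single_qubit_layers * t_single_qubit
            + gate_layers * t_two_qubit
            + t_readout + t_reset)


for swaps in (0, 1, 2):
    print(f"{swaps} SWAP layer(s): {cycle_time_ns(swaps):.0f} ns per cycle")
```

In this model readout dominates, so each SWAP layer adds a modest 120 ns (15% of the 800 ns baseline); the larger penalty is that every extra gate is another opportunity for an error.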

Regardless, the syndrome rate effectively sets the clock for the entire quantum computer, and the classical control system has to match it. This is an unusual demand because it requires classical electronics operating at blistering speeds (GHz-range logic) while also interfacing at cryogenic temperatures in many designs. It’s a major engineering frontier.

Finally, maintaining high fidelity over long durations (Capability D.3) remains unproven. Running 10¹¹ QEC cycles without a serious failure will require rock-solid stability of the qubits, the measurements, and the control systems. Drifts in calibration, component heating, radiation hits, etc., over hours or days could all lead to a syndrome extraction failure at scale. Right now, even keeping a superconducting processor stable for a few hours (without recalibration) is challenging.

This hints that significant work on infrastructure – from cryogenics to software calibration – is needed so that syndrome extraction can run as a turnkey “background process” relentlessly. In essence, we’ll need to demonstrate quantum error correction (Capability B.1) as a stable practice, not just a short-lived experiment.

No group has yet shown an error-corrected logical qubit that actually improves in reliability over arbitrarily long times; we see improvements over milliseconds or seconds at best. Achieving that over days is the next mountain to climb.

Interdependencies

Syndrome extraction is deeply intertwined with other CRQC capabilities. It fundamentally depends on high-quality physical qubits operating below error thresholds (Capability B.3) so that error syndromes reliably indicate real faults. It also relies on fast qubit connectivity (Capability B.4) to perform multi-qubit stabilizer measurements in parallel – limited connectivity can force sequential syndrome checks, slowing the “QEC heartbeat” and increasing idle errors. In turn, real-time decoding (Capability D.2) must keep pace with the flood of syndrome bits; a slow decoder would render even perfect extraction moot.

Conversely, continuous operation (Capability D.3) of a quantum computer over days is only feasible if syndrome extraction runs reliably nonstop without introducing too much overhead. Improvements in syndrome measurement fidelity or speed directly benefit other capabilities – for instance, better syndrome readouts relax the load on decoders and help maintain below-threshold error rates. In short, syndrome extraction links the quantum and classical worlds in the CRQC stack, and progress in this area both leverages and enables advances in high-fidelity qubits, connectivity, decoders, and long-term stability.

Why This Matters for CRQC

In the context of a cryptographically relevant quantum computer, syndrome extraction is arguably the make-or-break capability. A large-scale quantum computer (capable of breaking RSA or simulating complex chemistry beyond classical reach) will consist of millions of physical qubits incessantly undergoing error correction. The syndromes are the real-time diagnostics that tell the machine where errors are happening so that corrections (or compensations) can be applied. If those diagnostics fail even briefly, errors will accumulate faster than they can be corrected, and the logical qubits will quickly decohere (losing the quantum information).

To put it simply: missed or late syndromes = undetected errors = logical qubit death. The fault-tolerance threshold theorem, which underpins the whole promise of scalable quantum computing, assumes that error syndromes are obtained and processed faster than errors propagate. If syndrome extraction can’t keep pace, that assumption breaks down. For a CRQC tasked with, say, breaking encryption, this means the computation would never finish successfully unless syndrome extraction is rock-solid. The machine’s effective error rate would creep above threshold, leading to a logical failure before the end.
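A rough budget shows how strict "rock-solid" has to be. Assuming logical failures are independent from cycle to cycle, a run of N cycles succeeds with probability (1 − p_L)^N, where p_L is the total logical failure probability per cycle across the whole machine. Solving for p_L gives the sketch below (the cycle counts and the 90% success target are illustrative choices):

```python
import math


def max_failure_per_cycle(n_cycles: float, target_success: float) -> float:
    """Largest per-cycle logical failure probability p_L with (1 - p_L)**n_cycles >= target."""
    # p_L = 1 - exp(ln(target) / N); expm1 keeps precision when p_L is tiny.
    return -math.expm1(math.log(target_success) / n_cycles)


for n in (1e9, 1e11):
    print(f"{n:.0e} cycles, 90% success: p_L <= {max_failure_per_cycle(n, 0.9):.1e}")
```

For 10¹¹ cycles the budget is about one logical failure per 10¹² cycles for the entire machine, many orders of magnitude below the percent-level per-cycle logical errors of the experiments described above.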

Syndrome extraction also essentially dictates the speed of computation. Each logical operation in a fault-tolerant quantum computer is typically broken into many rounds of syndrome measurement (for example, a logical T-gate might require state distillation which in turn needs many QEC cycles (Capability C.2), or a logical memory operation might need continuous QEC in the background). The faster one can do these cycles, the higher the quantum computer’s clock rate. Today’s ~1 µs cycle time in superconducting qubits serves as a rough benchmark; if that could be improved to, say, 100 ns, it would significantly speed up algorithms – but that would demand even more from the hardware and decoder.

Conversely, if syndrome extraction is slow, the quantum computer idles waiting for error checks, and decoherence can eat away at the qubits during the downtime. For example, if qubit connectivity (Capability B.4) limitations force stabilizer checks to be performed sequentially rather than in parallel, it would further slow the QEC ‘heartbeat’ cycle and increase idle time. By contrast, stronger connectivity lets error syndromes be measured simultaneously across the device, minimizing wait times and keeping all qubits busy (thus boosting throughput). Thus, syndrome extraction performance is directly tied to both the reliability and the throughput of a quantum computer.

Moreover, syndrome measurement fidelity feeds into the error correction threshold (Capability B.3). The “threshold” is a certain error rate below which adding more qubits (in a bigger code) reduces the logical error exponentially. If readout errors (syndrome errors) are too high, they count toward the overall error budget. For example, if each stabilizer measurement has 5% chance of being wrong, and you have to do hundreds of them per logical operation, the effective logical error rate might not ever get low enough even if you add more qubits – because the measurement errors themselves form a floor. This is why improving readout fidelity and minimizing cross-talk is crucial: it widens the gap between the physical error rate and the threshold. In other words, cleaner syndrome signals give the decoder a fighting chance to correct mistakes without being confounded by false alarms.
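The threshold behavior described here is often summarized by the heuristic p_L ≈ A·(p/p_th)^((d+1)/2), where p is the physical error rate (with measurement errors folded in), p_th the threshold, and d the code distance. A minimal sketch, with A = 0.1 and p_th = 1% as assumed illustrative constants rather than values for any real device:

```python
def logical_error(p: float, d: int, p_th: float = 0.01, prefactor: float = 0.1) -> float:
    """Heuristic logical error per cycle: prefactor * (p / p_th) ** ((d + 1) / 2)."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)


for p in (0.005, 0.015):  # half the assumed threshold, then 1.5x above it
    rates = [logical_error(p, d) for d in (3, 5, 7)]
    print(f"p = {p:.1%}:", ", ".join(f"{r:.4f}" for r in rates))
```

Below threshold each step up in distance divides the logical error by about p_th/p; above it, adding qubits makes things worse. Syndrome errors raise the effective p, which is exactly how they eat into that margin.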

Lastly, syndrome extraction is where quantum hardware meets classical computing most intensively: every cycle produces a fresh batch of syndrome bits that must be read out, shipped to a decoder and acted upon, over and over, for the whole computation. It is a test of the entire system’s integration: qubit quality, control electronics, classical software, and even things like wiring and cryogenics. If any part of this chain underperforms (e.g., a bottleneck in sending syndrome bits to the decoder, or a qubit readout line that is too noisy), the whole fault-tolerance scheme can fail. So, focusing on syndrome extraction forces researchers to consider the total architecture. It’s not just a qubit problem or a software problem – it’s both, simultaneously. As a result, pushing this capability forward tends to drive advancements across the board: better qubit readout designs, faster FPGA/ASIC-based decoders, more efficient error-correcting codes (Capability B.1), and new techniques like real-time qubit reset and calibration. Each of these contributes to a more viable CRQC.
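A quick estimate shows why that classical data path matters. The sketch assumes, for illustration, that about half of the physical qubits are syndrome ancillas (roughly the ratio in a rotated surface code) and that each ancilla yields one bit per cycle. The one-million-qubit machine and 1 µs cycle are hypothetical round numbers, and the function name is illustrative.

```python
# Illustrative sketch; qubit count, ancilla fraction and cycle time are assumptions.

def syndrome_bits_per_second(n_physical: int, cycle_time_s: float,
                             ancilla_fraction: float = 0.5) -> float:
    """Raw syndrome data rate: one bit per ancilla per QEC cycle (assumption)."""
    return n_physical * ancilla_fraction / cycle_time_s


rate = syndrome_bits_per_second(1_000_000, 1e-6)
print(f"~{rate / 1e9:.0f} Gbit/s of raw syndrome data")
```

Under these assumptions the machine streams about 500 Gbit/s of raw syndrome bits, continuously, before any framing or protocol overhead. That stream has to be carried out of the cryostat or decoded inside it.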

In summary, without high-rate, high-fidelity syndrome extraction, a large quantum computer cannot maintain the delicate balance needed for error-corrected operation. It would be like trying to fly a plane with a broken radar – you’d be essentially flying blind to the errors buffeting the system. All the other quantum breakthroughs (more qubits, better gates, etc.) would fall flat if we couldn’t continuously monitor and correct errors. That’s why syndrome extraction is often called the “heartbeat” of a quantum computer – it beats steadily, cycle after cycle, carrying the vital information (syndromes) that keeps the quantum information alive.

Outlook – Closing the Gap and Future Developments

Looking ahead, the community anticipates several key developments to bolster syndrome extraction toward CRQC levels:

Order-of-Magnitude Scaling in Cycles

We expect experiments in the coming years to move from the ~10-100 consecutive QEC cycles reported in most published experiments to thousands, then millions, as a matter of routine. Sustaining 1,000+ cycles (~1 ms of operation on superconducting platforms) with stable logical error rates would be a notable milestone, likely requiring improvements in qubit coherence and automated calibration to prevent drift over that timescale. Some theorists are proposing techniques like “quantum firmware” that could self-correct low-level drifts even as QEC runs. In ion traps, where coherence times are longer, we may see attempts at second-long QEC sequences, though at slower cycle rates. Continuous runs of around a million cycles (the 1-second mark on superconducting hardware) have only begun to appear in a few experiments; making such runs routine on any platform, and then stretching them by further orders of magnitude, is one of the early milestones on the way to long-duration stability (Capability D.3).
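The cycle counts above are simple arithmetic: number of cycles = run time ÷ cycle time. The sketch below makes the conversion explicit and also checks the multi-day figure of $$10^{11}$$–$$10^{12}$$ cycles that a CRQC would need. The 1 µs cycle is the article’s superconducting benchmark; the 1 ms cycle is a hypothetical slower platform chosen only for contrast, and the helper names are illustrative.

```python
# Illustrative sketch; helper names and the 1 ms cycle time are hypothetical.
SECONDS_PER_DAY = 86_400


def cycles_in(duration_s: float, cycle_time_s: float) -> float:
    """Number of QEC cycles that fit into a run of `duration_s` seconds."""
    return duration_s / cycle_time_s


def days_for(n_cycles: float, cycle_time_s: float) -> float:
    """Wall-clock days needed to execute `n_cycles` QEC cycles back to back."""
    return n_cycles * cycle_time_s / SECONDS_PER_DAY


print(f"{cycles_in(1e-3, 1e-6):,.0f}")  # 1,000 cycles in 1 ms at a 1 us cycle
print(f"{cycles_in(1.0, 1e-6):,.0f}")   # 1,000,000 cycles in 1 s at a 1 us cycle
print(f"{cycles_in(1.0, 1e-3):,.0f}")   # 1,000 cycles in 1 s at a hypothetical 1 ms cycle
print(f"{days_for(1e11, 1e-6):.1f} to {days_for(1e12, 1e-6):.1f} days")
```

The last line shows that $$10^{11}$$ to $$10^{12}$$ cycles at 1 µs correspond to roughly 1.2 to 11.6 days of continuous operation.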

Faster and Better Measurements

There is a concerted push to make qubit measurements both faster and more accurate. Techniques under exploration include quantum-limited amplifiers with higher bandwidth (so the qubit state can be distinguished in e.g. 100 ns instead of 500 ns), and physical measurement schemes that don’t require waiting for a resonator to ring down. For instance, researchers are looking at ultrafast qubit readouts using advanced amplifier technology (Josephson traveling wave parametric amplifiers, etc.) and perhaps even single-flux quantum (SFQ) logic to quickly latch readout results at cryogenic temperature.
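Why faster amplifiers help can be seen in a toy readout model. The sketch assumes that the two qubit states produce Gaussian signals whose separation-to-noise ratio (SNR) grows as the square root of integration time, that a midpoint threshold is used, and that a crude t/(2*T1) penalty captures the qubit relaxing mid-measurement. The SNR values, T1 = 100 µs and function names are all hypothetical. It scans for the integration time that minimizes error for a baseline amplifier and for one with twice the SNR per unit time.

```python
# Toy readout model; all parameters and function names are hypothetical.
import math
from typing import Tuple


def misassignment(t_ns: float, snr_at_100ns: float) -> float:
    """Overlap error for two Gaussian signals with a midpoint threshold.

    Toy assumption: separation/noise grows as sqrt(t), so
    SNR(t) = snr_at_100ns * sqrt(t / 100 ns).
    """
    snr = snr_at_100ns * math.sqrt(t_ns / 100.0)
    return 0.5 * math.erfc(snr / (2.0 * math.sqrt(2.0)))


def readout_error(t_ns: float, snr_at_100ns: float, t1_us: float = 100.0) -> float:
    """Toy error for a qubit in |1>: overlap error plus a crude t/(2*T1) decay term."""
    return misassignment(t_ns, snr_at_100ns) + t_ns / (2.0 * t1_us * 1_000.0)


def best_integration_time(snr_at_100ns: float) -> Tuple[int, float]:
    """Scan 50-3000 ns in 10 ns steps and return (time, error) at the minimum."""
    times = range(50, 3001, 10)
    best = min(times, key=lambda t: readout_error(t, snr_at_100ns))
    return best, readout_error(best, snr_at_100ns)


for label, snr in (("baseline amplifier", 2.0), ("2x SNR per unit time", 4.0)):
    t, err = best_integration_time(snr)
    print(f"{label}: best ~{t} ns, error ~{err:.4f}")
```

In this toy model, doubling the SNR per unit time moves the optimum from roughly a microsecond to roughly 300 ns and lowers the error at the same time, which is the combined speed and fidelity gain that better amplifiers aim for.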

Another avenue is mid-circuit readout in alternative qubit types: for example, in silicon spin qubits, measurement is currently quite slow (on the order of 100 µs), but new ideas like integrating single-electron transistors or optical coupling could speed that up by 10× or 100×. Any such improvement would directly feed into faster syndrome cycles.

Error Mitigation in Extraction

Even while hardware improves, researchers will use clever error-mitigation schemes to handle imperfect syndromes. One idea is to use repeated measurements of the same stabilizer within a cycle (if time permits) to “double check” a syndrome and greatly reduce the odds of a false reading.
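The “double check” idea is essentially a repetition code on the measurement itself. Under the idealized assumption that repeated readouts fail independently with probability q and add no new errors to the data (real hardware only approximates both), a majority vote over r readouts fails with the binomial tail probability computed below. The function name is illustrative.

```python
# Illustrative sketch; assumes independent readout errors and no added data errors.
from math import comb


def majority_error(q: float, r: int) -> float:
    """Probability that a majority vote over r independent readouts is wrong,
    when each readout is wrong with probability q (idealized assumption)."""
    if r < 1 or r % 2 == 0:
        raise ValueError("use an odd number of repetitions")
    return sum(comb(r, k) * q**k * (1 - q) ** (r - k) for k in range(r // 2 + 1, r + 1))


for r in (1, 3, 5):
    print(f"{r} readout(s): false-reading probability {majority_error(0.05, r):.5f}")
```

With q = 5%, three votes cut the false-reading rate to about 0.7% and five to about 0.12%, at the cost of lengthening each cycle.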

Another is decoding across both space and time, where the decoder knows the measurement error rate and uses inference to tell readout glitches from genuine data errors (e.g., a syndrome bit that flips in one round and flips back in the next, with no accompanying change in neighboring checks, is more likely an ancilla or readout error than a data error). Flag qubits and syndrome interleaving will also become more common – small extra measurements designed to catch ancilla failures that would otherwise spread into multi-qubit data errors and ruin a code.
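The flip-and-flip-back signature can be made concrete with detection events, the XOR of each syndrome round with the previous one, which is the input most space-time decoders work from. The sketch below uses a small 1D layout of checks (as in a repetition code, where check i compares qubits i and i+1) and a deliberately simple, hypothetical heuristic for spotting isolated readout errors. A real decoder, such as minimum-weight perfect matching, weighs all explanations jointly rather than applying a rule like this. The function names are illustrative.

```python
# Illustrative sketch; the heuristic and function names are hypothetical.
from typing import List, Tuple

Rounds = List[List[int]]


def detection_events(history: Rounds) -> Rounds:
    """XOR each syndrome round with the previous one (round -1 assumed all zeros)."""
    prev = [0] * len(history[0])
    events = []
    for rnd in history:
        events.append([a ^ b for a, b in zip(rnd, prev)])
        prev = rnd
    return events


def isolated_time_pairs(events: Rounds) -> List[Tuple[int, int]]:
    """Hypothetical heuristic: flag (round, check) when the check fires in two
    consecutive rounds while its neighbors stay quiet in both rounds, the
    typical footprint of a single syndrome readout error."""
    flagged = []
    n_checks = len(events[0])
    for t in range(len(events) - 1):
        for s in range(n_checks):
            if not (events[t][s] and events[t + 1][s]):
                continue
            neighbors = [n for n in (s - 1, s + 1) if 0 <= n < n_checks]
            if all(events[r][n] == 0 for r in (t, t + 1) for n in neighbors):
                flagged.append((t, s))
    return flagged


# Readout error: check 2 misreads once in round 1, then returns to 0.
readout_glitch = [[0, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
# Data error: the qubit shared by checks 1 and 2 flips before round 1 and stays flipped.
data_flip = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 1, 1, 0]]

print(isolated_time_pairs(detection_events(readout_glitch)))  # [(1, 2)]
print(isolated_time_pairs(detection_events(data_flip)))       # []
```

The readout glitch leaves two detection events stacked in time at one check, while the data error leaves two events side by side in space in a single round: that is the distinction a measurement-aware decoder exploits.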

All of these effectively make syndrome extraction more robust by design, without needing perfection from the hardware.

Integrated Decoder Hardware

We will likely see special-purpose decoder hardware (Capability D.2) co-designed with the quantum processor. This could mean classical co-processors (FPGAs or ASICs) sitting cryogenically next to the qubits, crunching syndrome data with sub-microsecond latency. Already, some experiments feed syndrome bits to an FPGA to decide a qubit reset in real time. Next, more of the decoding logic (like the full matching algorithm) might be implemented in hardware to output correction commands on the fly. There’s even talk of using analog or neuromorphic chips for decoding, or harnessing light-speed optical communication to shuttle syndrome data rapidly out of the cryostat. The upshot is that the classical side won’t be running on a generic computer – it will be an integral part of the quantum machine, optimized for speed and parallelism.
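Why decoder speed matters so much is captured by the well-known decoder backlog argument: if decoding a round takes longer than producing one, unprocessed syndromes pile up without bound. The sketch below simulates a single sequential decoder under that simple model. The 1 µs cycle and the 0.8 µs and 1.2 µs per-round decode times are hypothetical, and the function name is illustrative.

```python
# Illustrative backlog model; timings and the function name are hypothetical.

def decoder_lag_us(n_rounds: int, cycle_us: float, decode_us: float) -> float:
    """How far (in us) a single sequential decoder trails the last syndrome round.

    Simple model: round k becomes available at (k + 1) * cycle_us and takes
    decode_us to process; the decoder handles rounds strictly in order.
    """
    finish = 0.0
    for k in range(n_rounds):
        arrival = (k + 1) * cycle_us
        start = max(arrival, finish)  # wait for new data or for the previous round
        finish = start + decode_us
    return finish - n_rounds * cycle_us


for decode in (0.8, 1.2):
    lags = [decoder_lag_us(n, 1.0, decode) for n in (1_000, 10_000)]
    print(f"decode {decode} us/round: lag after 1k rounds {lags[0]:.1f} us, "
          f"after 10k {lags[1]:.1f} us")
```

A decoder that keeps up trails by a constant 0.8 µs however long the run lasts. One that is only 20% too slow falls behind by about 0.2 µs per round, so after a million rounds (one second) it would trail by roughly 0.2 s, which is why decoder throughput must at least match the cycle rate.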

Higher-Distance Codes & New Codes

In terms of the quantum codes themselves, we expect to see larger surface codes (distance 7, 9, and beyond) demonstrated in the coming years, each one requiring more qubits and more syndrome measurements – feeding directly into the scaling behavior (Capability B.3). Every time the distance increases, it’s a test of whether syndrome extraction can scale (since a distance-7 code has roughly double the stabilizers of distance-5, etc.). Google’s 2024 experiment, in which a distance-7 code beat distance-5 in logical error rate, was an important validation of the approach; repeating that feat at each higher distance will strengthen the case further and also stress-test the syndrome readout system under larger loads.
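The qubit and stabilizer counts behind that scaling are easy to tabulate. The sketch assumes the rotated surface-code layout with one measurement ancilla per stabilizer, which gives d^2 data qubits and d^2 - 1 stabilizers. Other layouts and extra helper qubits change the totals, and the function name is illustrative.

```python
# Illustrative sketch; assumes a rotated surface code with one ancilla per stabilizer.

def rotated_surface_code(d: int) -> dict:
    """Qubit counts for a distance-d rotated surface code (one ancilla per check)."""
    if d < 3 or d % 2 == 0:
        raise ValueError("expected an odd distance >= 3")
    data = d * d
    stabilizers = d * d - 1  # split evenly between X-type and Z-type checks
    return {"distance": d, "data": data, "stabilizers": stabilizers,
            "total_qubits": data + stabilizers}


for d in (3, 5, 7, 9):
    print(rotated_surface_code(d))
```

Each step from d to d + 2 adds 4(d + 1) syndrome measurements per cycle (24, 48 and 80 stabilizers at d = 5, 7 and 9), so the readout and decoding load grows quadratically with distance.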

Beyond surface codes, there’s growing interest in quantum LDPC codes (which keep each parity check small but connect qubits over longer distances, promising much lower qubit overhead at scale) and fusion-based or measurement-based quantum computing (where syndrome extraction is done via entangling measurements between resource states). These may require extracting more complex syndromes (like multi-qubit parity across non-local groups) but fewer of them.

Each code family (surface, color, LDPC, subsystem codes, etc.) that shows experimental promise gives an alternate path – for instance, some LDPC codes might tolerate slightly slower cycle times if they require fewer rounds of measurements. It’s an active area of research: which codes and extraction circuits yield the best trade-off between speed, fidelity, and overhead.

Automation and Stability

To run QEC for days, the system will need self-monitoring and healing (Capability D.3). We might see autonomous calibration routines that adjust readout thresholds or pulse shapes on the fly if they detect a drift in syndrome error rates.

There’s also the concept of “QEC heartbeat monitoring” – essentially having the system report its own syndrome statistics to an external dashboard so operators can intervene if something looks off (for example, if one stabilizer is misbehaving consistently, indicating a bad qubit or bad readout line). In time, one imagines a mature quantum computer will have layers of diagnostics, much like a server farm has logging and error reporting, to ensure the error-correction cycles run smoothly.

This kind of operational maturity is still futuristic, but the seeds are in today’s experiments, which compare measured syndrome rates to simulations to check whether any unmodeled errors are occurring.
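A toy version of such monitoring counts detection events per stabilizer over a window and flags any stabilizer whose rate stands far above the device-wide median. The threshold factor of 3, the example counts and the function name are made up for illustration; a production system would use proper statistics and drift models.

```python
# Illustrative sketch; the flagging rule, factor and counts are hypothetical.
from statistics import median
from typing import List


def flag_misbehaving(event_counts: List[int], n_rounds: int, factor: float = 3.0) -> List[int]:
    """Return indices of stabilizers whose detection-event rate exceeds
    `factor` times the median rate across all stabilizers (hypothetical rule)."""
    rates = [count / n_rounds for count in event_counts]
    reference = median(rates)
    return [i for i, rate in enumerate(rates) if rate > factor * reference]


# Hypothetical counts from 10,000 rounds; stabilizer 3 fires about 4x as often.
counts = [100, 110, 95, 400, 105]
print(flag_misbehaving(counts, 10_000))  # [3]
```

Comparing against the median rather than the mean keeps a single bad stabilizer from dragging the reference rate up and hiding itself.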

Given all these developments, a reasonable prediction is that within the next 5 years, laboratories will demonstrate a logical qubit that can last indefinitely (for as long as you run the QEC) with error rates significantly below a single physical qubit’s error. That will likely involve on the order of 100-1000 physical qubits in a code, cycle times ~1 µs or faster, and advanced decoding.

From there, the task becomes largely one of engineering scale: replicating that unit many times and knitting them together for computation, which of course loops back to syndrome extraction because now those multiple logical units will need to coordinate and not interfere via cross-talk (e.g. thousands of syndrome measurements all happening in parallel across a chip). It’s a daunting scaling challenge, but the roadmap is becoming clearer as each piece – faster readouts, better decoders (Capability D.2), higher fidelity operations – clicks into place.

How to Track Progress in Syndrome Extraction

For professionals and enthusiasts keen to follow this specific capability, there are a few ways to stay up-to-date:

Watch Key Metrics in Research Papers

When new QEC experiments are published (often in journals like Nature, Science, or PRX Quantum), look at metrics such as “logical error per syndrome cycle,” the number of cycles executed, the code distance, and the time per cycle. For example, the 2022 ETH Zurich paper reported a logical error of about 3% per 1.1 µs cycle on a distance-3 surface code, and the 2023 Google paper reported a logical error of about 2.9% per cycle for distance-5 versus 3.0% for distance-3, measured over 25 cycles. These numbers are a shorthand for syndrome extraction performance. Over time, seeing those error rates drop and cycle counts rise is a clear sign of progress. Many of these papers are available on the arXiv preprint server even before journal publication (look in the quant-ph section for terms like “quantum error correction,” “surface code,” “fault-tolerant” etc.).
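Per-cycle and cumulative numbers are easy to confuse when reading these papers. A standard model assumes that each cycle independently flips the logical state with probability ε, so after n cycles the logical error is $$P(n) = \tfrac{1}{2}\left[1 - (1 - 2\varepsilon)^{n}\right]$$. The sketch below converts in both directions, using the 2.9%-per-cycle and 25-cycle figures above purely as an illustration. The function names are illustrative.

```python
# Illustrative sketch of the independent-flip model; function names are hypothetical.

def cumulative_logical_error(eps: float, n_cycles: int) -> float:
    """Logical error after n cycles if each cycle flips the logical state w.p. eps."""
    return 0.5 * (1.0 - (1.0 - 2.0 * eps) ** n_cycles)


def per_cycle_logical_error(p_total: float, n_cycles: int) -> float:
    """Invert the model above: recover eps from the error after n cycles."""
    return 0.5 * (1.0 - (1.0 - 2.0 * p_total) ** (1.0 / n_cycles))


p25 = cumulative_logical_error(0.029, 25)
print(f"after 25 cycles: {p25:.3f}")  # about 0.388
print(f"recovered per-cycle: {per_cycle_logical_error(p25, 25):.4f}")  # 0.0290
```

So a 2.9% per-cycle rate already amounts to roughly a 39% chance of a logical error after only 25 cycles, which is why per-cycle error must fall by orders of magnitude before runs of millions of cycles become useful.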

Follow Industry and Lab Announcements

Companies at the forefront – notably IBM, Google Quantum AI, IonQ, and Quantinuum (Honeywell) – and academic labs such as Delft, MIT, and Innsbruck often announce major milestones via press releases or blog posts. IBM and Google in particular have research blogs where they discuss achieving, say, a record number of QEC cycles or a new readout method. These tend to be written in accessible language and give a good sense of the practical challenges being overcome. Keeping tabs on those outlets (or following the researchers on Twitter/X and LinkedIn) can provide early hints of what’s coming. For instance, when Google achieved its distance-5 scaling result, it released a blog post in tandem with the Nature article, breaking down the significance in a more digestible way.

Conferences and Workshops

The field of quantum error correction has its own dedicated conference (“QEC” conference series, held roughly annually) where the latest experimental and theoretical results are shared. Watching talks or reading abstracts from these events can give insight into the state-of-the-art. Likewise, the American Physical Society (APS) March Meeting each year has sessions on quantum computing where hardware teams present their progress – often including updates on multi-round error correction and syndrome measurement techniques. Many of these talks are recorded or summarized on social media by attendees.

Technology Roadmaps

Initiatives like the U.S. National Quantum Initiative, EU Flagship, or companies’ roadmaps sometimes explicitly mention fault-tolerance milestones. For example, IBM’s quantum roadmap has a goal of achieving a “quantum advantage with error-corrected qubits” in the coming years, and they occasionally update their status in public presentations. By reading those roadmaps, you can see what targets are set for syndrome extraction (like “implementing dynamic circuits by 2023” – which relates to real-time syndrome processing). As these milestones get checked off, it’s a direct indicator of progress.

Hands-on Experimentation (for the Curious)

While you can’t yet run a full QEC cycle on public cloud quantum services easily (because it requires intricate timing and conditional logic), platforms like IBM’s cloud service (formerly the IBM Quantum Experience) are starting to offer mid-circuit measurement and feedback capabilities on some devices. Interested readers with programming skills can actually try small-scale syndrome extraction routines – for example, implementing a repetition code of distance 3, measuring an ancilla mid-circuit, and using a conditional gate to correct an error. IBM’s Qiskit textbook and tutorials include examples of quantum error correction circuits. Running these on a real backend (even with only a few qubits) can give a firsthand feel for the challenges (you might notice the ancilla measurement error rates and delays). Monitoring the outcomes as you vary timing or insert errors can be an instructive mini version of what the big labs are doing at scale. It’s a way to track progress by experiencing the current limits of hardware.
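Before touching hardware, the logic of that distance-3 repetition-code exercise can be rehearsed in plain Python. Because this code only guards against bit flips and its checks are Z-parities, the whole syndrome loop can be simulated classically. On a real backend, the two parity bits would come from mid-circuit ancilla measurements and the fix from conditional gates (in Qiskit, for example, via `QuantumCircuit.if_test`). This is a sketch of the idea, not IBM’s tutorial code: the error probabilities are free, hypothetical parameters and the function names are illustrative.

```python
# Classical sketch of one round of the 3-qubit bit-flip code; names are hypothetical.
import random
from typing import Dict, List, Optional, Tuple

# Syndrome (Z0Z1, Z1Z2) -> index of the qubit to flip back (None: no correction).
LOOKUP: Dict[Tuple[int, int], Optional[int]] = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def measure_syndrome(bits: List[int], rng: random.Random, q_meas: float) -> Tuple[int, int]:
    """Parities of neighboring data bits, each misread with probability q_meas."""
    s1 = bits[0] ^ bits[1] ^ (rng.random() < q_meas)
    s2 = bits[1] ^ bits[2] ^ (rng.random() < q_meas)
    return s1, s2


def run_round(p_flip: float, q_meas: float, rng: random.Random) -> int:
    """Encode logical 0, apply random bit flips, extract the syndrome once,
    correct, and return the logical value read out by majority vote."""
    bits = [0, 0, 0]
    for i in range(3):
        if rng.random() < p_flip:
            bits[i] ^= 1
    target = LOOKUP[measure_syndrome(bits, rng, q_meas)]
    if target is not None:
        bits[target] ^= 1
    return int(sum(bits) >= 2)


def logical_error_rate(p_flip: float, q_meas: float = 0.0,
                       trials: int = 50_000, seed: int = 1) -> float:
    """Fraction of seeded Monte Carlo trials that end in a logical 1."""
    rng = random.Random(seed)
    failures = sum(run_round(p_flip, q_meas, rng) for _ in range(trials))
    return failures / trials


for q in (0.0, 0.05):
    print(f"p_flip=1%, q_meas={q:.0%}: logical error ~{logical_error_rate(0.01, q):.4f}")
```

Adding a 5% syndrome readout error raises the logical error rate even though the data-qubit flip rate is unchanged, because a misread syndrome can steer the correction onto the wrong qubit. This mirrors the ancilla measurement errors you would notice on real hardware.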

Community resources

The quantum computing community on forums and Q&A sites (like the Quantum Computing Stack Exchange) sometimes discusses recent breakthroughs in error correction. There are also newsletters (for instance, the Quantum Weekly, or more specialized newsletters focused on quantum error correction, where available) that compile recent papers and developments. Subscribing to those can ensure you don’t miss a notable syndrome extraction achievement. Additionally, websites like Quantum Computing Report or The Quantum Insider often cover major announcements in layman-friendly terms.

By keeping an eye on these channels, one can observe, year by year, how the syndrome extraction capability inches forward – through incremental increases in cycles, reductions in error rates, and novel techniques demonstrated. In a sense, the progress in syndrome extraction is the barometer of progress toward fault-tolerant quantum computing itself. Each time the record for sustained error-corrected operation is broken, or a new code is implemented with success, we are witnessing the gap to a CRQC closing a bit more.

Conclusion

Syndrome extraction may not always grab headlines with flashy quantum algorithms, but it is the unsung hero that will determine when quantum computing truly comes of age. As we’ve explored, it originated from fundamental ideas in the 90s and has steadily grown into a sophisticated operational process in modern quantum processors. From the first demonstrations of a single error detection to today’s multi-qubit, multi-round error correction feats, the journey highlights how theory and engineering must go hand-in-hand. The latest achievements – whether it’s a distance-5 surface code showing improvement, or real-time feedback resetting ancillas on a large chip – show that syndrome extraction is meeting key milestones of speed and reliability, albeit at small scales.

The road ahead is still long: we need orders of magnitude more cycles, more qubits, and better fidelity to reach a true CRQC. But the path is visible. Incremental innovations in hardware (faster, cleaner measurements), in architecture (better codes and decoders), and in systems engineering (integrating the whole feedback loop) will continue to push this capability forward. For cyber professionals and quantum enthusiasts tracking the field, syndrome extraction is a perfect lens through which to understand the state of quantum computing. It forces one to ask, “Can we keep the qubits alive and well, no matter what noise comes in?” – a question whose answer is being written year by year in lab results and papers.

In a future not too far off, if someone proclaims the realization of a fully error-corrected qubit that can run for days, it will be because all the pieces of syndrome extraction fell into place: high-speed measurements, error rates far below threshold, robust decoders, and seamless quantum-classical orchestration. That will be the moment the heartbeat of the fault-tolerant quantum computer beats strong and steady, opening the door to the era of practical quantum computing. Until then, each incremental improvement in this capability is worth watching, because it’s bringing that remarkable goal into clearer focus.

Technology Readiness Assessment (Nov 2025)

As of late 2025, Capability B.2 (Syndrome Extraction) is demonstrated in basic form but not yet at scale (TRL ~4). Researchers have run repeated error syndrome measurement cycles on small quantum codes – on the order of $$10^6$$ continuous cycles (around one second of operation) in some experiments – but this is still far from the ~$$10^{11}$$–$$10^{12}$$ cycles (multiple days) that a CRQC would require.

In practice, simple syndrome loops have been validated in the lab (proving that we can detect errors and feed them to decoders in real-time), yet we have not achieved the speed and sustained reliability needed for fault-tolerant operation over days. Because syndrome extraction sets the “heartbeat” of the error-correction process, its maturity directly affects the Quantum Operations Throughput of a system. Improving this capability means pushing readout fidelity and speed to extreme levels while maintaining stability over long runs.

Key milestones to watch include faster and lower-noise qubit readouts integrated with real-time decoding, demonstrations of error-correction cycles running continuously for much longer durations, and scalable architectures for parallel syndrome measurement on thousands of qubits. Progress on those fronts will indicate that syndrome extraction is closing the gap toward the performance needed to keep errors at bay in a full-scale CRQC.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.