How You, Too, Can Predict Q-Day (Without the Hype)

Introduction

For three decades, Q-Day has been “just a few years away.” A surprising amount of ink, and vendor marketing, has been spilled on it. In my case, the first pitch hit my desk roughly 25 years ago, when I was in a CISO role and a vendor tried to sell me “quantum‑resistant” crypto based on supposed “insider NSA information that quantum computers are imminent.” You can imagine how that went.

For years we’ve had two loud camps: vendors promising an imminent quantum apocalypse, and skeptics insisting large‑scale quantum computers would never arrive. Decision‑makers were understandably confused, and desensitized by the endless drumbeat of “almost there.” That skepticism is now dangerous. The mainstream consensus has shifted: cryptographically relevant quantum computers (CRQCs) are coming within the planning horizons of major enterprises and governments. Standards are finalized, government programs are in motion, and national agencies have issued migration roadmaps.

At the same time, the noise level has risen. Resource estimates for breaking RSA change as new algorithms and error‑correction methods appear; hardware roadmaps are aggressive; and “qubit counts” get thrown around without context. People repeat rules of thumb from years ago as if the field weren’t moving weekly. My goal here is to cut through that and show you how to form your own, defensible Q-Day estimate – one tailored to your cryptography, systems, and risk tolerance.

I also publish my own predictions, and have been doing so for almost 15 years; my current estimate places CRQC around 2030 (±2 years), based on converging hardware and algorithmic trends. But I don’t want you to take my date on faith. I want to show you how to make your own call.

(Note: This step-by-step guide is based on my capability-driven framework for predicting Q-Day. I’ve detailed the full rationale and nuances of that approach in a separate explainer, “The CRQC Quantum Capability Framework.” Here, I distill that methodology into a simpler form that anyone can apply.)

The simple mental model: three dials that actually matter

Counting physical qubits by itself is misleading. In my capability-driven methodology, we focus on nine core technical capabilities (B.1–D.3), plus one cross-cutting engineering capability (E.1: Engineering Scale & Manufacturability), which I compress into three critical ‘dials’ that determine cryptographic breakability. To break RSA you need error‑corrected logical qubits, long and reliable operation depth, and enough throughput to finish within an attack‑relevant time window.

I previously proposed (and recently updated) a crypto‑centric way to track these three dials – the CRQC Readiness Benchmark – which rolls them into a single progress score calibrated to “RSA‑2048 factored in about a week.” (Why a week? Because that’s a practical, nation‑state‑relevant runtime for a high‑value attack. If you insist on “hours,” you’ll get a later date.) The benchmark tracks progress toward a CRQC only; it deliberately sets aside other questions, such as when quantum computers will offer an advantage on other kinds of practical problems.

The three dials:

- Logical Qubit Capacity (LQC): how many logical (error‑corrected) qubits you can run in parallel.

- Logical Operations Budget (LOB): how many reliable logical gate operations (circuit depth) you can execute before the computation fails.

- Quantum Operations Throughput (QOT): how many logical ops per second you can sustain (clock speed × parallelism).

In my benchmark, a score near 1.0 roughly corresponds to the capability to break RSA‑2048 in ~one week. A back‑of‑envelope version looks like:

Score ≈ (LQC / 1000) × (LOB / 10¹²) × (QOT / 10⁶)

You can read the full rationale and caveats in my explainer. And you can play with the parameters yourself with my CRQC Readiness Benchmark (Q-Day Estimator) tool.
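As a minimal sketch, the back‑of‑envelope formula can be written as a few lines of Python. The function and constant names here are my own illustrative choices, not the estimator tool's actual code, and the example machine is hypothetical.

```python
# Baselines taken from the back-of-envelope formula above:
# a score of ~1.0 roughly corresponds to RSA-2048 in about a week.
LQC_BASELINE = 1_000   # logical qubits
LOB_BASELINE = 1e12    # reliable logical operations
QOT_BASELINE = 1e6     # logical operations per second


def crqc_score(lqc: float, lob: float, qot: float) -> float:
    """Composite score: the product of the three normalized dials."""
    return (lqc / LQC_BASELINE) * (lob / LOB_BASELINE) * (qot / QOT_BASELINE)


if __name__ == "__main__":
    # Hypothetical machine (illustrative numbers only):
    # 100 logical qubits, 1e9 reliable ops, 1e4 logical ops/sec.
    print(f"score = {crqc_score(100, 1e9, 1e4):.1e}")
```

Because the score is a product, a large shortfall on any single dial drags the whole score down, however strong the other two are.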

Why these dials? Because they map cleanly to both sides of the problem:

- Algorithms & resource estimates (e.g., optimized versions of Shor) tell you target logical qubits and total gate counts for factoring RSA‑2048. Gidney & Ekerå’s well‑known analysis is one landmark (20M physical qubits to crack RSA‑2048 in hours at specific error rates); newer work suggests fewer physical qubits with longer runtimes as techniques improve.

- Hardware roadmaps (IBM, Google, others) now publish milestones in terms of logical qubits and operations – exactly what you need to map to the dials. IBM’s public plan, for example, targets ~200 logical qubits and ~100M logical operations by 2029 (Starling), and ~2,000 logical qubits and ~1B operations by ~2033 (Blue Jay). That’s the right kind of signal to watch.

How to build your own Q-Day estimate (in 7 steps)

Step 1 — Define “broken” for your risk posture.

Pick the cryptosystem and runtime that matters to you. Many of us focus on RSA‑2048 in ≤1 week as a practical threshold for a serious adversary; you can choose tighter (hours) or looser (months), but be consistent. (My CRQC Readiness Benchmark uses the ≈one‑week target.)

Step 2 — Choose a resource‑estimation baseline.

Select an algorithmic estimate for factoring RSA‑2048: e.g., Gidney & Ekerå (2019), and/or more recent updates (a 2025 preprint suggests <1M physical qubits for <1 week under certain assumptions). This sets the logical qubit count and logical operation count targets. Don’t lock into a single paper; run a sensitivity analysis across the conservative and optimistic ends.

Step 3 — Translate estimates into the three dials.

- Map resource estimates to LQC (logical qubits required).

- Convert the total gate count and error‑correction assumptions into LOB (reliable depth).

- Convert cycle times and parallelism into QOT (ops/sec). This is where a tool helps (more below). My rule of thumb: if any one dial is dramatically short, your date is optimistic.
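The translation in Step 3 can be sketched roughly as follows. This is a deliberate simplification under my own assumptions: it treats required throughput as total logical operations divided by the runtime window, and ignores how parallelism and error‑correction overhead are actually structured. The names `DialTargets` and `dial_targets` are hypothetical.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass
class DialTargets:
    lqc: float  # logical qubits required
    lob: float  # reliable logical operations required
    qot: float  # logical ops/sec needed to finish inside the runtime window


def dial_targets(logical_qubits: float,
                 total_logical_ops: float,
                 runtime_days: float) -> DialTargets:
    """Turn a resource estimate plus a runtime window into three dial targets."""
    if runtime_days <= 0:
        raise ValueError("runtime_days must be positive")
    # Simplifying assumption: throughput = total ops / wall-clock seconds.
    qot = total_logical_ops / (runtime_days * SECONDS_PER_DAY)
    return DialTargets(lqc=logical_qubits, lob=total_logical_ops, qot=qot)


if __name__ == "__main__":
    # Illustrative inputs: ~1,000 logical qubits, ~1e12 ops, one-week window.
    print(dial_targets(1_000, 1e12, runtime_days=7))
```

With those illustrative inputs, the required throughput comes out at a little over 10⁶ logical ops/sec. Shrinking the window to hours, or stretching it to months, changes only the QOT target, which is why the runtime you chose in Step 1 matters so much.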

Step 4 — Anchor to public roadmaps.

Use vendor roadmaps to sanity‑check the near‑term trajectory. IBM has put numbers in public: ~200 logical qubits / 10⁸ ops in 2029; ~2,000 logical / 10⁹ ops ~2033. Google is publicly demonstrating below‑threshold error correction progress (“Willow”) and publishing a scaling roadmap focused on logical qubits. Put these points on your dials timeline.
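One hedged way to put those roadmap points on the dials is to express each as a fraction of the Score ≈ 1.0 baseline. The sketch below uses only the IBM figures quoted above. Throughput (QOT) is left out because the quoted roadmap numbers do not state it, and the dictionary layout and function names are my own.

```python
# Baseline from the back-of-envelope calibration (Score ~ 1.0).
BASELINE = {"lqc": 1_000, "lob": 1e12}

# IBM's public targets as quoted in the text.
ROADMAP = {
    2029: {"lqc": 200, "lob": 1e8},     # Starling
    2033: {"lqc": 2_000, "lob": 1e9},   # Blue Jay
}


def dial_ratios(point: dict) -> dict:
    """Fraction of the baseline reached on each dial (1.0 means the dial is met)."""
    return {dial: point[dial] / BASELINE[dial] for dial in BASELINE}


def shortest_dial(point: dict) -> str:
    """Name of the dial that is furthest below its baseline."""
    ratios = dial_ratios(point)
    return min(ratios, key=ratios.get)


if __name__ == "__main__":
    for year, point in sorted(ROADMAP.items()):
        print(year, dial_ratios(point), "shortest:", shortest_dial(point))
```

Against this particular baseline, the operations budget is the short dial at both roadmap points. Keep in mind that the baseline itself moves whenever a new resource estimate lowers the requirements, so rerun the comparison after changing Step 2.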

Step 5 — Use two free tools to pressure‑test your dates.

- My CRQC Readiness Benchmark & Q-Day Estimator: adjust LQC/LOB/QOT and growth assumptions; see when the composite score crosses 1.0 (≈RSA‑2048 in a week). It’s built to be transparent and tweakable.

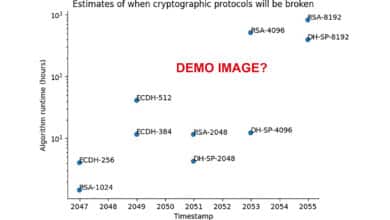

- QTT (Quantum Threat Tracker) by Cambridge Consultants and the University of Edinburgh: an open‑source model that estimates when various schemes fall, combining quantum resource estimation with hardware growth assumptions. Its default demo is intentionally conservative (you’ll see dates like RSA‑1024 ≈2047 / RSA‑2048 ≈2051), but the key is you can change the inputs to match your assumptions and see the impact. Using both gives you a useful pessimistic/optimistic band.

Even though there is an overlap between the two approaches, I believe you should use both. For a more in-depth comparison, see: “CRQC Readiness Benchmark vs. Quantum Threat Tracker (QTT).”

Step 6 — Calibrate to your environment’s risk and data lifetimes.

If you hold data with long confidentiality needs (health, defense, trade secrets, PII, keys that protect archives), you face harvest‑now‑decrypt‑later risk today. Your date should skew earlier than the median and your program should already be underway; multiple agencies explicitly advise preparing now.

Step 7 — Recompute quarterly.

Treat this like threat intel: update when there’s a new algorithmic improvement (e.g., resource reductions), a major error‑correction milestone, or a credible hardware breakthrough/roadmap revision. DARPA’s Quantum Benchmarking Initiative exists precisely to produce application‑centric metrics (“utility scale”), which is the right direction for all of us.

A quick, simplified walk‑through (with pointers)

If you want the skinny version of my method before diving in:

- Pick a target: RSA‑2048 in ≤1 week.

- Pick baseline resources: Start with Gidney‑Ekerå (2019) to set a conservative bar; run a sensitivity check with the 2025 update (fewer qubits, longer runtime).

- Map to dials: Suppose you need on the order of ~1,000 logical qubits and ~10¹¹-10¹² reliable logical operations at ≥10⁶ ops/sec to hit the one‑week mark; that’s roughly where my “Score ≈ 1.0” calibration comes from. Then compare to IBM’s stated targets for 2029/2033 to see how close a leading vendor claims they’ll be.

- Run my estimator: Start with default baseline (LQC₀=1000, LOB₀=10¹², QOT₀=10⁶), set today’s values, choose a growth factor that matches your belief (e.g., 2-3×/year composite), and read the crossing year.

- Cross‑check with QTT: Load the repo or docs, reproduce the default timeline (late 2040s/2050s), then increase hardware growth and update algorithmic modules per recent papers; watch how RSA‑2048 moves earlier. This shows how sensitive your Q-Day is to assumptions – a crucial board conversation.
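The "read the crossing year" step boils down to compound growth. A minimal sketch, assuming a single constant composite growth factor per year: this is my simplification for illustration, not the estimator's actual implementation, and the starting score and start year in the example are hypothetical.

```python
import math


def crossing_year(score_today: float, annual_growth: float, start_year: int) -> float:
    """Year at which score_today * annual_growth**t first reaches 1.0."""
    if score_today <= 0:
        raise ValueError("score_today must be positive")
    if score_today >= 1.0:
        return float(start_year)      # already at or past the threshold
    if annual_growth <= 1.0:
        return math.inf               # no growth: the score never crosses
    years = math.log(1.0 / score_today) / math.log(annual_growth)
    return start_year + years


if __name__ == "__main__":
    # Hypothetical starting score of 1e-6; compare several growth beliefs.
    for growth in (2.0, 3.0, 10.0):
        year = crossing_year(1e-6, growth, start_year=2025)
        print(f"{growth:>4.1f}x/year -> {year:.1f}")
```

Running it with a few growth factors side by side makes the sensitivity obvious: the crossing year depends on the logarithm of the gap divided by the logarithm of the growth rate, so modest changes in assumed growth shift the date by years.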

Why the dates differ so much in the wild

- People fixate on physical qubits instead of logical qubits + error rates + throughput.

- They use outdated resource estimates and miss algorithmic speedups; e.g., moving from “20M noisy qubits for 8 hours” to “<1M noisy qubits for <1 week” massively changes the hardware bar.

- They ignore throughput. Even with enough logical qubits, gates/second can make the difference between “one week” and “several months,” which matters operationally.

- They treat roadmaps as PR or gospel. Treat them as anchor points with error bars. IBM’s milestones are unusually quantifiable (logical‑qubit counts and operation budgets), making them useful for modeling; still, validate and triangulate.

- They conflate “Q-Day” with migration timing. Migration has to start before Q-Day. Governments are already telling you to inventory crypto and plan the move now.

Where the official posture is today (signals you can cite to your board)

- Standards are real: NIST’s first PQC standards are finalized (ML‑KEM, ML‑DSA, SLH‑DSA).

- Mandates exist: U.S. OMB M‑23‑02 orders federal inventories and migration planning; CISA/NSA/NIST issued joint “prepare now” guidance; CISA published an automation strategy for crypto discovery.

- National roadmaps set end‑states: UK NCSC’s migration timeline targets full PQC deployment by 2035 with intermediate milestones (2028, 2031).

- Benchmarks are becoming application‑centric: DARPA’s Quantum Benchmarking Initiative focuses on “utility‑scale” capability by the early 2030s.

All of this reinforces a simple reality: quantum readiness is a now‑problem, regardless of whether your Q-Day is 2029, 2032, or 2038. And yes, the migration will be enormous, likely the largest IT/OT transformation in history.

Tools you can use—today

- CRQC Readiness Benchmark (explainer): How the three dials work and how to read vendor claims with a crypto‑breaking lens.

- Q-Day Estimator (interactive): Adjust LQC, LOB, QOT, and growth assumptions; see when your score crosses ~1.0. Ideal for tabletop exercises with your architects.

- QTT – Quantum Threat Tracker (open source): Run the model, swap in newer algorithmic resource estimates, and tune hardware growth to your belief; produces timelines across RSA/ECC variants. (See also Cambridge Consultants’ project background with the UK energy operator.)

- CRQC Readiness vs. QTT (comparison): Why the two approaches diverge and how to use both in tandem.

- The CRQC Quantum Capability Framework (explainer): The full methodology behind this step-by-step guide.

My current view (and how I’d defend it)

Based on today’s public roadmaps and the pace of algorithmic & error‑correction improvements, I put CRQC around 2030 ±2 years. That aligns with a scenario where (a) multiple vendors demonstrate O(10²-10³) logical qubits with deep, reliable circuits, (b) throughput reaches ~10⁶-10⁷ logical ops/sec, and (c) total reliable ops are in the 10¹¹-10¹² range – enough to land an RSA‑2048 break in the “days to a week” window. See my detailed analysis for the full argument and uncertainty bands.

If you want a more conservative plan, adopt QTT’s cautious defaults as your outer bound and my benchmark‑driven estimate as your inner bound. Operate to the earlier date if your data has a long secrecy lifetime or if you’re in critical infrastructure. (Regulators explicitly recommend preparing now, harvest‑now‑decrypt‑later is a present‑day risk, and public timelines leave little slack.)

Practical next steps for CISOs, architects, and policymakers

- Inventory cryptography (protocols, libraries, key sizes, lifetimes). You’ll need a CBOM‑style view to plan the migration – this is literally mandated in some jurisdictions.

- Classify by secrecy lifetime and upgrade lead time; anything where (secrecy lifetime + migration time) ≥ the years remaining until your Q-Day estimate gets priority (classic HNDL calculus).

- Pilot NIST PQC in controlled domains (ML‑KEM/ML‑DSA/SLH‑DSA) and build crypto‑agility (rotate, swap, hybridize where appropriate).

- Vendor governance: require roadmaps and attestations; align contracts to PQC milestones (e.g., UK style “done by 2035” endpoint).

- Tabletop the Q-Day model quarterly using my estimator + QTT, refreshing inputs with new roadmaps, error‑correction results, and algorithmic papers.

- Mind your OT/IoT: long‑lived devices and constrained environments will define your critical path—and your residual risk.
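The prioritization rule in the list above (secrecy lifetime plus migration time compared against the time left until your Q-Day estimate) is simple enough to run over an inventory. A rough sketch, where the `Asset` fields, function names, and example systems are all hypothetical placeholders for whatever your crypto inventory actually records:

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    secrecy_lifetime_years: float  # how long the data must stay confidential
    migration_years: float         # lead time to move the system to PQC


def exposure(asset: Asset) -> float:
    """Secrecy lifetime plus migration lead time, in years."""
    return asset.secrecy_lifetime_years + asset.migration_years


def prioritize(assets: list[Asset], years_to_q_day: float) -> list[Asset]:
    """Assets whose exposure reaches the time left to Q-Day, most exposed first."""
    flagged = [a for a in assets if exposure(a) >= years_to_q_day]
    return sorted(flagged, key=exposure, reverse=True)


if __name__ == "__main__":
    # Hypothetical inventory entries, for illustration only.
    inventory = [
        Asset("patient-records archive", secrecy_lifetime_years=25, migration_years=3),
        Asset("web session tokens", secrecy_lifetime_years=0.01, migration_years=1),
        Asset("OT firmware signing", secrecy_lifetime_years=10, migration_years=6),
    ]
    for asset in prioritize(inventory, years_to_q_day=5):
        print(asset.name, exposure(asset))
```

Rerun the list with both your inner and outer Q-Day bounds; the assets that are flagged under both are the ones to start on first.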

A final note

Two things move the date: (1) algorithmic breakthroughs that lower requirements; (2) engineering breakthroughs that raise logical‑qubit counts, depth, and throughput. In the last 18-24 months we’ve seen meaningful movement on both fronts (Google’s below‑threshold error‑correction, IBM’s quantified logical‑qubit targets, new factoring resource estimates). Dates will shift; your process needs to adapt with them.
