Post-Quantum Cryptography (PQC) Standardization – 2025 Update

Table of Contents

The Quantum Threat and Need for PQC

Modern public-key cryptography, including RSA, Diffie-Hellman, and elliptic-curve schemes like ECDSA, relies on mathematical problems (factoring, discrete logarithms) that are believed intractable for classical computers. However, quantum computers pose an existential threat to these systems. In 1994, Peter Shor discovered a quantum algorithm that can factor large integers and solve discrete logs in polynomial time, meaning a sufficiently powerful quantum computer could break RSA, DSA, ECDSA, and similar cryptosystems almost instantly. In theory, a cryptographically relevant quantum computer (CRQC) could derive a 2048-bit RSA private key from its public key in mere hours and break 256-bit ECC in a similar timeframe.

While timelines are debated, many experts anticipate that a powerful quantum computer capable of breaking current crypto could be built within a decade or so. The U.S. NSA and other government agencies are not taking chances – planning to switch to quantum-resistant cryptography by the early-to-mid 2030s. Notably, symmetric algorithms (AES, SHA-2, etc.) are less vulnerable. For symmetric encryption (like AES), Grover’s quantum algorithm provides a quadratic speedup against key searches; doubling key sizes (e.g., using AES-256) suffices to counter this. Cryptographic hash functions (like SHA-2) also face quantum speedups, including specialized algorithms that attack collision resistance more effectively. While NIST assesses that current standards like SHA-256 remain secure based on the high practical cost of these attacks, stronger variants (e.g., SHA-384) are often recommended for long-term assurance. The real urgency is replacing public-key algorithms for encryption, key exchange, and digital signatures, which cannot be made quantum-safe with minor tweaks. This is where Post-Quantum Cryptography (PQC) comes in – a set of new cryptographic algorithms based on math problems believed resistant to both classical and quantum attacks. In response to the looming threat, a worldwide effort has been underway to develop and standardize PQC so that our secure communications and data remain safe in the quantum era.

I’ve been writing about PQC standards for a while, but in this article I will try to recap all the important aspects of the PQC standardization and provide an update as of March 2025.

NIST’s PQC Standardization Process

To drive this transition, in 2016 the U.S. National Institute of Standards and Technology (NIST) launched an open PQC standardization project. After a global call for submissions, 82 candidate algorithms from 25 countries were received. Over multiple evaluation rounds involving the international cryptographic community, NIST winnowed these down to a handful of finalists.

In July 2022, after three rounds of analysis, NIST announced its first set of PQC algorithms selected for standardization. These include one key-establishment (encryption) scheme – CRYSTALS-Kyber – and three digital signature schemes – CRYSTALS-Dilithium, FALCON, and SPHINCS+. Kyber (a lattice-based Key Encapsulation Mechanism, or KEM) was chosen as the primary replacement for Diffie-Hellman and RSA key exchange, thanks to its strong security and excellent performance. For signatures, NIST selected the lattice-based Dilithium as the primary general-use algorithm, with FALCON (another lattice/NTRU-based scheme) as an alternative for cases where smaller signatures are needed, and SPHINCS+ (a hash-based scheme) to provide a non-lattice “backup” in case lattices are ever compromised. This marked a major milestone: the first quantum-resistant public-key algorithms poised to become standards. NIST’s selection decisions were documented in detail in an official report (NIST IR 8413). Following the announcement, NIST began drafting standards for each algorithm in collaboration with the submitters.

By August 2023, draft standards for Kyber, Dilithium, and SPHINCS+ (FIPS 203, 204, 205) were released.

By August 2024 these standards were finalized. Together with the FIPS designation, each standard now received a generic name as well:

- CRYSTALS-Kyber is standardized as ML-KEM – Module Lattice KEM is covered in FIPS 203.

- CRYSTALS-Dilithium is standardized as ML-DSA – Module Lattice Digital Signature Algorithm and covered in FIPS 204.

- SPHINCS+ as SLH-DSA – Stateless Hash-based DSA and covered in FIPS 205.

- FALCON’s standard (to be called FN-DSA, for FFT NTRU-based DSA) will be covered in FIPS 206 and was still in draft at the time of writing. A draft FIPS 206 was expected in late 2024 and is likely to be finalized in 2025.

These standards include full specifications and implementation guidance, and NIST has urged industry to begin integrating them “immediately” into products and protocols. From now on in this post, we will use the standardized names of the algorithms – ML-KEM, ML-DSA and SLH-DSA – just to get myself, and the readers, used to new names.

NIST did not stop with those four algorithms. Recognizing the need for algorithmic diversity and future backups, NIST continued the process with a Fourth Round focusing on additional KEMs that use different hardness assumptions. In the 2022 announcement, NIST advanced four extra finalists for further study: two code-based encryption schemes (BIKE and HQC), the classic code-based scheme Classic McEliece, and an isogeny-based KEM called SIKE. The goal was to potentially standardize one or two of these as alternatives to lattice-based encryption. However, events unfolded quickly – in July 2022, using a single-core PC, researchers Castryck and Decru were able to recover SIKE’s private key in ~1 hour for the Level-1 parameter set, exploiting a weakness in the underlying elliptic-curve isogeny problem. NIST promptly removed SIKE from consideration. The remaining code-based KEMs underwent intense scrutiny through 2023-2024, including performance benchmarking in protocols (TLS, etc.) and examination of cryptanalytic security. By early 2025, NIST announced that HQC (Hamming Quasi-Cyclic) had been selected for standardization to augment the KEM portfolio. HQC showed a good balance of security and performance among the code-based options and will become an additional NIST-approved KEM (expected to be finalized as a standard by 2026-2027). BIKE, while promising, was not chosen (it is somewhat slower in key generation/decapsulation and, as discussed later, can perform better only in very constrained network conditions). Classic McEliece, though widely regarded as extremely secure, was also not standardized in this round – largely because its huge public keys (hundreds of kilobytes or more) make it impractical for broad use. NIST has decided not to standardize it as part of the current Round 4, but it has hinted that McEliece could still be standardized in the future as a specialized option, but for now it remains on the bench due to lack of deployment interest (few applications can handle megabyte-size public keys easily).

In summary, as of 2025 NIST’s PQC selections consist of five primary algorithms: ML-KEM, ML-DSA, SLH-DSA, FALCON (to be called FN-DSA), and HQC with others potentially added as “backups” in coming years.

Another major piece of NIST’s effort is a new evaluation round for additional digital signature schemes. By the end of the third round in 2022, all the signature finalists apart from ML-DSA/FN-DSA (lattice) and SLH-DSA (hash) had either been broken or deemed unviable, leaving a somewhat narrow set of assumptions (mostly lattices) in the signature portfolio. To diversify, NIST issued a fresh call in Aug 2022 for post-quantum signature proposals, explicitly encouraging schemes not based on lattices (or offering substantially improved performance). This resulted in 50 submissions by the June 2023 deadline, of which 40 met all requirements and were accepted for evaluation. Throughout late 2023 and 2024, NIST and the cryptographic community examined these candidates; by October 2024, NIST down-selected to 15 second-round signature candidates (14 were announced, plus one merged scheme) for further analysis. These encompass a wide range of cryptographic ideas beyond lattices. For example, there are code-based signature candidates such as CROSS and LESS that build on error-correcting code assumptions, an isogeny-based signature (SQIsign) leveraging hard problems in supersingular isogeny graphs, several schemes based on multivariate quadratic equations (e.g. variants of the Unbalanced Oil-and-Vinegar approach, such as MAYO, Rainbow-like proposals), and zero-knowledge proof based signatures often called “MPC-in-the-Head” schemes (like Mirath which merged two submissions, and FAEST which is an improved variant of the Picnic strategy using AES). NIST published a report on the first round of this “on-ramp” signature process (NIST IR 8528), explaining the selection of the Round 2 candidates. The evaluation of these new signatures is expected to span multiple rounds and several years – analogously to the original PQC contest – with the goal of standardizing one or more additional signature algorithms that offer security from different assumptions (and possibly smaller signatures or faster verification in certain scenarios). As of early 2025, this effort is in Round 2, with no winners declared yet. However, NIST has stated that any future signature standards from this group will be considered “backup” options – the primary recommended signature for most applications remains ML-DSA (with SLH-DSA and FN-DSA as alternatives). In other words, organizations should not wait for these new signatures to be finished; the advice is to start deploying the already standardized algorithms now, and swap in any new ones later only if needed.

In summary, NIST’s PQC standardization has delivered a first wave of quantum-resistant algorithms and is actively working on a second wave. Next, we will take a closer look at the algorithms already selected as standards, the ones still in the pipeline, and how they compare to the classical cryptography they aim to replace.

NIST PQC Security Categories

As part of its post-quantum cryptography (PQC) standardization, NIST introduced five security strength categories (often labeled Levels 1-5) to classify the robustness of candidate algorithms. For the full description of categories, see “NIST PQC Security Strength Categories (1–5) Explained“. I am using PQC levels and classical equivalents interchangeably below. So for reader’s reference, the summary table is here:

| Category | Symmetric Security (bits) | Classical Public-Key Equivalent† (RSA/ECC) | Reference Attack (baseline) |

|---|---|---|---|

| 1 | ~128-bit (e.g. AES-128) | RSA-3072; ECC P-256 (approx. 256-bit curve) | Key search on a 128-bit block cipher |

| 2 | ~128-bit (collision context) | – See note [1] | Collision search on a 256-bit hash |

| 3 | ~192-bit (e.g. AES-192) | RSA-7680; ECC P-384 (384-bit curve) | Key search on a 192-bit block cipher |

| 4 | ~192-bit (collision context) | – See note [1] | Collision search on a 384-bit hash |

| 5 | ~256-bit (e.g. AES-256) | RSA-15360; ECC P-521 (521-bit prime curve ~256-bit sec) | Key search on a 256-bit block cipher |

Table 1: NIST PQC Security Categories and Classical Equivalents

† These RSA and ECC key sizes are commonly cited benchmarks for equivalent classical security. For instance, breaking AES-128 (128-bit key) is considered roughly as hard as factoring a 3072-bit RSA key or solving the elliptic curve discrete log on a 256-bit NIST curve.

[1]: Categories 2 and 4 target hash collision resistance at the 128-bit and 192-bit levels, respectively, rather than a symmetric key search. This distinction exists because certain attacks (especially on digital signatures or hash-based designs) are limited by how hard it is to find any two inputs with the same hash output, as opposed to guessing a specific secret key. NIST treated collision search separately to ensure such scenarios are covered. Notably, NIST assumes the ordering 1 < 2 < 3 < 4 < 5 in strength; for example, a brute-force collision on SHA-256 (Category 2) is expected

NIST’s Chosen PQC Algorithms (First-Wave Standards)

ML-KEM (Previously CRYSTALS-Kyber, Standardized in FIPS 203) – KEM – Encryption/Key Exchange

ML-KEM is a lattice-based Key Encapsulation Mechanism designed for encryption and key exchange. ML-KEM’s security is based on the hardness of the Learning With Errors (LWE) problem over module lattices – essentially a problem of solving noisy linear equations in a high-dimensional vector space, believed to be resistant to both quantum and classical attacks (for a more technical write up, see my post “Inside NIST’s PQC: Kyber, Dilithium, and SPHINCS+“). ML-KEM comes with three parameter sets (ML-KEM-512, 768, 1024) targeting different security levels (~AES-128, AES-192, AES-256 respectively). NIST suggested ML-KEM-768 as the primary recommendation for general use (this corresponds to NIST PQC Level 3 security, which is designed to be analogous to the classical Security Strength Category 3, roughly equivalent to 192-bit symmetric security). In practical deployment, ML-KEMwill replace algorithms like RSA, Diffie-Hellman, and elliptic-curve Diffie-Hellman (X25519, P-256 ECDH) for establishing shared secrets over a network.

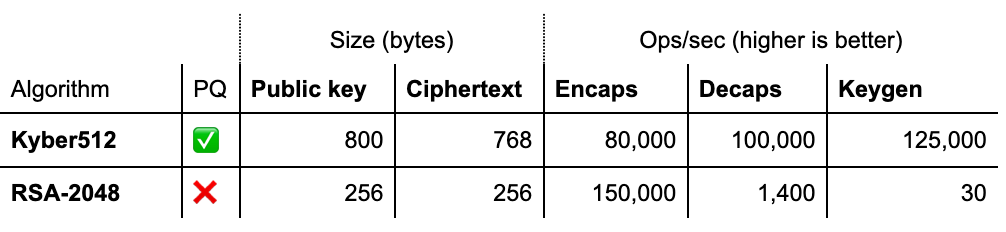

One of ML-KEM’s big advantages is its efficiency – both in performance and in the size of keys/ciphertexts. ML-KEM uses relatively small lattice dimensions (n=256 and modest module rank) with operations amenable to optimizations. As a result, encryption or encapsulation with ML-KEM can be extremely fast. In fact, optimized implementations can perform a ML-KEM-768 encryption in on the order of ~104 CPU cycles. Decryption (decapsulation) is more efficient than RSA-2048. Table 1 below compares ML-KEM at its Category-1 parameter (ML-KEM-512) with RSA-2048 in terms of key sizes and operations per second on a reference platform:

As shown above, a ML-KEM public key is a few hundred bytes (ML-KEM-512: 800 bytes; ML-KEM-768’s is ~1184 bytes) – larger than an RSA-2048 public modulus (256 bytes) but still quite compact in absolute terms (kilobytes, not megabytes). ML-KEM ciphertexts (around 768-1568 bytes depending on parameter) are also larger than an RSA ciphertext (256 bytes for RSA-2048), but the overhead is not prohibitive for most protocols. In exchange, ML-KEM is dramatically faster: in Table 1, ML-KEM-512 decapsulation was measured around tens of thousands ops/sec vs only ~1.4k ops/sec for RSA-2048 decryption (Although, this recent paper “Performance Analysis and Industry Deployment of Post-Quantum Cryptography Algorithms” shows ML-KEM-512 “only” about three times faster than RSA-2048). This is because RSA’s private-key operation is very slow (involving a big exponentiation), whereas ML-KEM’s decap is mainly polynomial multiplication and vector operations in a small dimension. ML-KEM key generation is also many orders faster than RSA keygen (which involves generating large primes). The only operation where RSA shines is encryption (since RSA encryption with a small public exponent is trivial), but even there ML-KEM is within an order of magnitude. In a TLS handshake context, the overall latency difference between ML-KEM key exchange and classical ECDH is small – experiments by Cloudflare and others showed PQC key exchanges add only ~1-2ms to handshakes in ideal conditions. In poor network conditions (high latency or loss), the slightly larger sizes of PQC ciphertexts could cause a minor delay, but generally ML-KEM performs extremely well in networking scenarios (as also documented in the NIST report “NIST IR 8545 – Status Report on the Fourth Round of the NIST Post-Quantum Cryptography Standardization Process.”)

From a security standpoint, ML-KEM’s lattice basis provides not only quantum resistance but also a comfortable security margin and flexibility. It was designed with a Fujisaki-Okamoto transform to be CCA-secure (secure against active attacks), and its parameters were chosen to minimize decryption failures to a negligible probability. Notably, ML-KEM has no known weaknesses after years of public cryptanalysis in the NIST process. All this made ML-KEM the unanimous choice as the “main” post-quantum KEM: NIST recommends it for essentially all applications that need public-key encryption or key agreement. In NIST’s words, ML-KEM offers “strong security and excellent performance” and is expected to “work well in most applications”. It will effectively replace algorithms like RSA-OAEP, DH/ECDH, and ElGamal in the coming years as standards like TLS, IPsec, and others adopt it for negotiating session keys.

In summary, ML-KEM (previously: CRYSTALS-Kyber; standardized as FIPS 203 ) is the workhorse post-quantum encryption scheme: it brings quantum safety with only modest increases in key sizes and, in many cases, faster speeds than the classical algorithms it replaces. Its integration into protocols is already underway (e.g. hybrid TLS 1.3 modes testing ML-KEM with X25519). If you currently rely on RSA/ECC for encryption or key exchange, ML-KEM (or HQC, discussed later, for certain use-cases) will be the quantum-resistant alternative going forward.

ML-DSA (Previously CRYSTALS-Dilithium; Standardized in FIPS 204) – Digital Signatures

For digital signatures, NIST’s primary choice is ML-DSA, another lattice-based scheme from the CRYSTALS family. ML-DSA is based on the hardness of the SIS/LWE problems over module lattices, making it cousins with ML-KEM in terms of mathematical foundation (both use structured lattices, but for different primitives). ML-DSA is essentially a lattice-based Fiat-Shamir with aborts scheme – it produces signatures by sampling random vectors, performing a hidden lattice calculation, and then using a Fiat-Shamir hash to make the process non-interactive. Without diving into the math, the key point is that ML-DSA inherits lattice cryptography’s strengths: strong security assumptions and efficiency. NIST chose ML-DSA as the main post-quantum digital signature algorithm (standardized as FIPS 204), with a recommendation that it will be suitable for the majority of applications that need signatures.

Security: ML-DSA was picked for its robust security and simpler design. It has no structure that has succumbed to cryptanalysis so far – in contrast, alternate signature finalists like Rainbow (multivariate equations) were badly broken before the process concluded. By using lattice assumptions that are well-studied, ML-DSA carries a high confidence level. It offers multiple parameter sets (ML-DSA-2, -3, -5 corresponding to NIST security levels 2, 3, 5) so implementers can choose the appropriate security vs. size trade-off.

Size: The trade-off with lattice signatures is that the signatures and keys are larger than classic ones. A ML-DSA public key is on the order of 1-2KB, and signatures are a few kilobytes (exact figures depend on the security level). For example, the FIPS 204 standard defines parameter sets named ML-DSA-44, -65, -87 (roughly Level2/3/5). At the lowest level, ML-DSA-44 (approx Level 2) has a public key of 1,312 bytes and signature of 2,420 bytes. The highest level, ML-DSA-87 (Level 5), has a 2,592-byte public key and a 4,595-byte signature. These are substantially larger than ECDSA or RSA signatures – for comparison, a typical ECDSA signature (secp256k1 curve) is ~71-73 bytes and the compressed public key is 33 bytes. In other words, a ML-DSA signature can be ~30-50× the size of an ECDSA signature, and public keys ~40× larger. This sounds huge, but in absolute terms a few kilobytes is still very workable for most systems (TLS certificates today often carry 2KB RSA keys and 256B signatures; swapping in 2KB keys and 2-3KB signatures is not a deal-breaker in most cases, though it does increase bandwidth and storage needs for signature-heavy applications like blockchains). One should plan for higher network and storage overhead if using ML-DSA extensively – e.g., blockchain transactions will “bloat” in size compared to ECDSA-based ones. However, these sizes were deemed the best balance given current knowledge; other PQC signatures with smaller sizes were either broken or come with other downsides.

Performance: On the upside, ML-DSA is quite fast. Signing and verification operations involve matrix-vector multiplications and hashing, which can be optimized with efficient arithmetic and parallelism. In benchmarks, ML-DSA can outperform classic algorithms in certain operations. For instance, verifying ML-DSA signatures is typically much faster than verifying RSA or even ECDSA signatures of comparable security. One study showed that ML-DSA verification on a modern CPU was ~20× faster than ECDSA P-521 verification (and even several times faster than Ed25519). Signing speed is also competitive: at lower security, ML-DSA signing is a bit slower than Ed25519 (as expected), but at higher security (where ECDSA gets very slow), ML-DSA can actually be faster. For example, ML-DSA at level 5 (ML-DSA-87) was shown to sign in ~75 microseconds vs ECDSA P-521 taking ~438 microseconds on the same platform. Even ML-DSA’s level 3 was about 2× faster to sign than ECDSA-384 in one test. Verification differences are even more pronounced: ML-DSA can verify thousands of signatures per second, which is especially useful in scenarios like certificate chain validation, code signing checks, etc. It’s important to implement ML-DSA carefully (in constant time, using approved parameter sets) to maintain these security and performance characteristics, but many optimized libraries (in C, Rust, etc.) exist and are being audited.

Use cases: ML-DSA is positioned as the general-purpose quantum-safe signature algorithm. It will replace RSA and ECDSA/EdDSA schemes in applications ranging from TLS server certificates to code signing, document signing, and identity authentication. Already, Google and others have tested ML-DSA in TLS and found it workable, though one challenge is the larger certificate sizes if many ML-DSA signatures are chained (leading to larger TLS handshake sizes). NIST’s guidance is that ML-DSA should be the default choice for post-quantum signatures in most situations. Only if its size or lattice-based structure is problematic should one look to the alternatives (SLH-DSA and FN-DSA).

In summary, ML-DSA (previously: CRYSTALS-Dilithium) provides strong security from lattice problems and good performance, at the cost of larger signatures and keys. Its design is simple and has been extensively vetted, and it is expected to be the primary digital signature standard in a post-quantum world, supplanting our current RSA/ECDSA infrastructure.

SLH-DSA (Previously SPHINCS+; Standardized in FIPS 205) – Digital Signatures

Rounding out NIST’s first cohort of PQC standards is SLH-DSA, a very different kind of signature scheme. SLH-DSA is a stateless hash-based signature. Unlike ML-DSA and FN-DSA, which rely on the hardness of lattice problems, SLH-DSA builds its security solely on the assumed collision resistance and preimage resistance of underlying hash functions (like SHA-256). In essence, SLH-DSA uses Merkle trees and a few other techniques to aggregate many one-time hash-based signatures (such as Winternitz one-time signatures) into a single scheme that can sign arbitrarily many messages without needing to maintain state (hence “stateless”). The big appeal of SLH-DSA is its extremely conservative security assumption: if our hash functions remain secure (even against quantum attacks, which for hashes only gives a quadratic speedup at best), then SLH-DSA signatures remain secure. There are no new algebraic assumptions at all – it’s “just hashes.” This gives a lot of confidence; hash-based signatures are among the oldest forms of digital signatures and are well understood. NIST explicitly wanted a non-lattice backup, and SLH-DSA serves that role: it provides diversity in our cryptographic toolkit, ensuring we’re not betting everything on lattices. If a devastating quantum (or even classical) attack were found against all structured lattice schemes in the future, SLH-DSA would still be standing, since its security is orthogonal to those mathematics.

However, this robustness comes with significant costs in performance and size. Size: SLH-DSA signatures are very large. Even at NIST’s Category 1 security (the smallest parameter set), a SLH-DSA signature is around 7,856 bytes (~7.7 KB). For higher security levels, signatures can be tens of kilobytes (e.g., one variant goes beyond 40 KB per signature). The public key in SLH-DSA is fortunately small (just 32 to 64 bytes, basically a seed for the hash functions), but that hardly matters when each signature is huge. This is an inherent consequence of hash-based designs: you end up computing and including many hash outputs in each signature. Speed: Likewise, SLH-DSA is orders of magnitude slower than lattice or multivariate schemes. Signing involves computing thousands upon thousands of hashes (to traverse Merkle trees and generate WOTS+ one-time signatures). Verification also involves a lot of hashing (though typically faster than signing). In practice, SLH-DSA might be too slow for high-volume systems – it could take several milliseconds or more to produce one signature on a typical CPU (versus microseconds for ML-DSA) and similar effort to verify, depending on parameters. These drawbacks mean SLH-DSA is not intended to be a drop-in replacement for everyday use.

So why include it at all? Because of its aforementioned resilience. NIST selected SLH-DSA primarily as a safety net – a confidence-inspiring fallback option in case our number-theoretic cryptosystems (lattices, etc.) falter. It’s the “insurance policy” in the PQC portfolio. Potential use-cases for SLH-DSA are those where maximum security assurance is required and performance is secondary. For example, a root certificate that only signs rarely could use SLH-DSA to ensure even if all else fails, that root of trust remains secure. Extremely long-term signatures that must remain valid for decades might consider SLH-DSA due to its simple foundation. Additionally, SLH-DSA is stateless, which avoids the risk of state misuse that plagued earlier hash-based schemes (like if you reused a one-time key in XMSS or LMS, security is lost – SLH-DSA doesn’t require keeping track of used keys). This makes it easier to implement correctly in practice when you only occasionally sign something.

NIST’s standardization of SLH-DSA (as FIPS 205) is a forward-looking move to hedge bets. It ensures that not all PQ signatures depend on lattices. It’s worth noting that SLH-DSA was the only third-round finalist signature that wasn’t lattice-based or broken, so in some sense NIST’s hand was forced to choose it to have diversity. The cryptographic community generally applauds having SLH-DSA as an option, but it’s understood that it will see limited deployment. Most software will prefer ML-DSA or FN-DSA due to efficiency, using SLH-DSA only in specialized cases.

To summarize, SLH-DSA provides high-assurance digital signatures based only on hash functions. It produces very large signatures and is slow, so it’s not aimed at general use, but it’s an invaluable backup in our toolkit. If one desires “trust no one’s math, only trust SHA-256,” SLH-DSA is the answer. NIST’s inclusion of it ensures that even in a scenario where all advanced math fails, we have a last line of defense in digital signatures.

FN-DSA (Previously FALCON; To Be Standardized in FIPS 206) – Digital Signatures

Alongside ML-DSA, NIST selected FN-DSA (Fast Fourier Lattice-based Compact Signatures over NTRU) as a second lattice-based signature to be standardized (as FN-DSA in FIPS 206 and for consistency, I’ll keep calling it FN-DSA). FN-DSA was chosen specifically to address use-cases where signature size is at a premium. Indeed, FN-DSA’s signatures are significantly smaller than ML-DSA’s – a FN-DSA-512 signature is about 666 bytes, with a public key around 897 bytes (FN-DSA-1024 uses 1280 bytes). These sizes are far smaller than ML-DSA’s multi-kilobyte outputs. In fact, FN-DSA comes close to the compactness of current ECC. This makes FN-DSA attractive for protocols that need to transmit many signatures or work in bandwidth-constrained environments (for example, certificate chains in protocols like DNSSEC, which have strict size limits, or devices that broadcast signed data over networks with small MTUs).

FN-DSA achieves its compact size by using a different lattice construction: it’s based on NTRU lattices and uses Gaussian sampling and FFT-based operations. Technically, FN-DSA is more complex than ML-DSA. One of its creators famously described FN-DSA as “by far, the most complicated cryptographic algorithm” to implement correctly among the PQC finalists. The scheme relies on floating-point arithmetic (FFT) or careful fixed-point emulation to sample from a discrete Gaussian distribution over a lattice – an area where it’s easy to introduce side-channel leaks or implementation errors. Constant-time, side-channel-resistant FN-DSA implementations are non-trivial and require significant care in handling numeric precision and timing. This complexity is the trade-off for FN-DSA’s high efficiency and small outputs. In verification, FN-DSA is extremely fast (even faster than ML-DSA in fact, since it uses a very optimized FFT-based verification process). Signing with FN-DSA is less efficient than verification and is where the complexity mainly lies (generating a FN-DSA signature involves solving a closest vector problem in a lattice with Gaussian noise, which is intensive). Nonetheless, with good implementations, FN-DSA signing can still be done fairly quickly on modern hardware (albeit not as fast as ML-DSA signing).

NIST’s strategy is to recommend ML-DSA as the default signature scheme and use FN-DSA in niche applications that need its smaller signature size. In practice, we might see FN-DSA used in specific layers of infrastructure: for example, root certificate authorities could use FN-DSA to sign certificates so that the signatures in certificate chains are smaller (making client TLS handshakes lighter). Also, IoT devices or smart cards that need post-quantum signatures might prefer FN-DSA if transmitting a 2KB ML-DSA signature is problematic. Another plausible use is in blockchain or cryptocurrency systems – there has been interest in FN-DSA for post-quantum cryptocurrencies because its signature size is relatively small (important for on-chain storage). That said, the complexity of implementation means developers must be very careful. It’s expected that only well-vetted libraries will be used for FN-DSA to avoid pitfalls in the Gaussian sampling process.

To sum up, FN-DSA (still FALCON until standardization is finalized) provides an alternative lattice signature that is highly compact and fast, at the cost of implementation difficulty. It doesn’t replace ML-DSA outright but complements it. If signature size needs to be as small as possible (and one is willing to handle the more complex implementation), FN-DSA is the go-to choice. NIST wisely included FN-DSA to ensure we’re not solely dependent on one lattice approach and to cover scenarios where every byte counts.

Recap of First-Wave Algorithms

In summary, NIST’s first standardized PQC algorithms consist of one KEM (ML-KEM) and three signature schemes (ML-DSA, FN-DSA, SLH-DSA). ML-KEM and ML-DSA are expected to be the workhorses for most systems (and indeed have been recommended as such). FN-DSA provides a tighter, albeit more complex, signature option for niche requirements, and SLH-DSA provides a highly secure (but heavy) alternative to cover our bases. These algorithms were finalized into FIPS standards by 2024 (FN-DSA is still pending), and organizations worldwide are now testing and rolling out implementations. With these tools, we can begin replacing RSA, DSA, ECDH, ECDSA, EdDSA, etc., in our protocols to ensure long-term security against quantum attackers.

Additional PQC Algorithms in the Pipeline (Second-Wave Candidates)

While the above algorithms form the initial standards, NIST’s PQC project has also been evaluating additional candidates to further enrich and future-proof the post-quantum cryptographic landscape. The focus has been on two areas: (1) alternate KEMs based on different hard problems (to supplement ML-KEM), and (2) new digital signature schemes for broader diversity and possibly better performance in certain metrics. Here we discuss the notable candidates and likely future standards.

Alternate KEMs: Code-Based Encryption (HQC, BIKE, Classic McEliece) and Others

In the fourth round of the NIST process, the primary competition was among code-based KEMs. Code-based cryptography (pioneered by Robert McEliece in 1978) uses error-correcting codes – typically problems related to decoding random linear codes – as the hard mathematical underpinning. These have stood the test of time; McEliece’s original scheme has never been broken in over 40 years (with appropriate parameters). The finalists in this category were:

- HQC (Hamming Quasi-Cyclic): A KEM based on the hardness of decoding random quasi-cyclic codes with some structure. HQC was designed to have relatively efficient implementations while leveraging coding theory security. It has somewhat large parameters but with the benefit of simpler operations (mostly XORs and polynomial arithmetic mod 2).

- BIKE (Bit Flipping Key Encapsulation): Another quasi-cyclic code-based KEM that uses a variant of the McEliece/Niederreiter framework with iterative decoding (bit-flipping) algorithms. BIKE and HQC have similar security assumptions and both were under consideration as the potential “general-purpose non-lattice KEM”.

- Classic McEliece: The entrant based directly on McEliece’s original scheme (using binary Goppa codes). Classic McEliece was a finalist due to its superb security track record – it’s a very conservative design believed to resist even future quantum algorithmic advances. Its major drawback is the huge public key sizes (hundreds of kilobytes up to a megabyte). McEliece ciphertexts, however, are small (a few hundred bytes) and decapsulation is extremely fast, making it an interesting trade-off for certain uses.

- (SIKE was also in Round 4 but as discussed, it was cracked and removed.)

After extensive evaluation, NIST announced in March 2025 that HQC will be standardized. This decision was due to a combination of security and performance factors. Both HQC and BIKE are grounded in similar hardness assumptions (decoding linear codes with some structure), but HQC provided more straightforward security proofs and had fewer unanswered questions regarding possible structural attacks. Additionally, in performance comparisons, HQC was generally faster in cryptographic operations (encapsulation/decapsulation) than BIKE – albeit at the cost of larger key and ciphertext sizes. Specifically, benchmarks showed that BIKE’s decapsulation could be 5-7× slower than HQC’s, and BIKE’s keygen 6-10× slower, whereas HQC’s ciphertexts were about 3× larger than BIKE’s. In network simulations, HQC leads to faster handshakes when network conditions are good, but BIKE might have an edge in extremely constrained networks due to its smaller data footprint. On balance, NIST felt HQC would be the more practical choice for broad usage, and it gives a complementary assumption (code-based) to lattice-based ML-KEM. Thus, HQC is likely to be the next FIPS-approved KEM, augmenting ML-KEM in the standards. It’s anticipated that HQC will be recommended as a backup or alternative – for example, in environments where one wants to hedge against lattice cryptanalysis, or simply to have a second choice in case of patents (NIST checked that HQC’s implementation is not encumbered – any relevant patents are licensed for worldwide use, avoiding potential adoption hurdles).

What about Classic McEliece? NIST acknowledged that Classic McEliece is “widely regarded as secure” and in fact has the longest history of surviving cryptanalysis. However, they also noted that “NIST does not anticipate it being widely used due to its large public key size” and thus did not select it at this time. NIST left open the possibility to standardize McEliece “at the end of the fourth round” if they deem it necessary. Given that HQC was chosen, it seems NIST might hold off on McEliece unless some new need arises. Still, it’s worth understanding Classic McEliece’s parameters: for example, one variant at ~128-bit security (mceliece348864) has a public key of ~261,120 bytes (~256 KB!) and a ciphertext of 128 bytes. At higher security (mceliece8192128), the public key is over 1.3 million bytes (~1.3 MB). These huge keys make it unwieldy for many protocols (imagine sending a 1 MB public key in a TLS handshake – not practical), and also raise storage concerns on constrained devices. For specialized cases (like securing very long-term stored data where you can afford large static keys), McEliece remains an option. But for mainstream use, HQC’s keys (e.g. ~2-7 KB public key, depending on security level) are far more palatable, even if its ciphertexts are larger (~4-14 KB).

It’s important to note that code-based schemes generally have larger key sizes than lattice schemes. When considering secret keys for these code-based schemes, it is crucial to distinguish between the operational key used during decapsulation and the compact representation (seed) used for long-term storage. Due to their underlying mathematical structure (quasi-cyclic codes), both HQC and BIKE can generate their required cryptographic material deterministically from much smaller seeds. HQC benefits from a particularly compact seed (~40 bytes at Level 1), which is advantageous for constrained storage environments, whereas BIKE’s seed is larger (~281 bytes). However, the operational private keys – the expanded form required for efficient decapsulation – are significantly larger, measuring thousands of bytes for both schemes. In this operational form, HQC’s key (approx. 4.5 KB) is notably larger than BIKE’s (approx. 3.1 KB). When comparing the data transmitted during an exchange, BIKE offered significant advantages: much smaller ciphertexts (≈1.5 KB at Level 1 vs. HQC’s ≈4.5 KB) and smaller public keys (≈1.5 KB vs. HQC’s ≈2.2 KB). The trade-offs between BIKE and HQC were therefore quite nuanced, balancing BIKE’s lower bandwidth requirements against HQC’s faster cryptographic operations and smaller storage footprint. Ultimately, the consensus was that HQC’s performance benefits outweighed its larger size in most realistic scenarios.

Outside of code-based, NIST’s Round 4 also initially included SIKE (isogeny-based KEM), as mentioned. SIKE was attractive for its extremely small keys and ciphertexts and its completely different math (supersingular elliptic curve isogenies). However, the break of SIKE in 2022 by Castryck & Decru was a game-changer. They found a classical attack on the underlying SIDH problem that rendered SIKE insecure (a key recovery in 62 minutes on a single core). This was a reminder that even seemingly hard problems can sometimes fall suddenly; it reinforced NIST’s cautious approach. After SIKE’s fall, there is currently no isogeny-based KEM considered viable for standardization (though research continues into patches and different isogeny approaches, none have yet proven secure and efficient enough). NIST formally removed SIKE from the process, so we do not expect any isogeny algorithm in the NIST standards in the near term.

Summary of KEM backups: NIST will standardize HQC as the only Round 4 KEM (code-based) to complement ML-KEM (lattice-based). Together, they cover two very distinct hardness assumptions. Classic McEliece remains a highly secure outlier that might be standardized if a niche demand arises. BIKE will not be standardized, having lost out to HQC. SIKE was eliminated due to a break. With ML-KEM + HQC (and possibly McEliece) as KEMs, users will have a menu of quantum-safe key exchange methods: one can choose lattice for efficiency or code-based for its long-term confidence and different trade-off profile. For most, ML-KEM will suffice, but HQC provides an important alternative, especially for organizations that desire crypto agility or have concerns about relying on one family of math.

New Signature Candidates: Seeking Diversity and Efficiency

As discussed in the NIST process overview, a new wave of signature schemes is under evaluation to augment ML-DSA (Previously CRYSTALS-Dilithium); SLH-DSA (Previously SPHINCS+); FN-DSA (Previously FALCON). The motivation is to explore cryptographic ideas beyond lattices and hashes, in hopes of finding signatures that are maybe smaller, faster, or at least not dependent on lattice security. While these are not finalized yet, it’s worth noting the main categories and some promising candidates from the submissions:

- Code-Based Signatures: These use error-correcting code assumptions to make signatures. Historically, code-based signatures (like the old CFS signature from 2001) had very large public keys, which made them impractical. New designs like CROSS (Codes + RSA-like Structured Objects) and LESS (Linear Equivalence Signature Scheme) claim to have improved size or security trade-offs using structured codes. They are in Round 2, indicating some viability. If a code-based signature can be made efficient, it would pair nicely with HQC/McEliece assumptions.

- Multivariate Quadratic Signatures: These are based on the MQ problem – solving multivariate quadratic equations over a finite field. This was the basis of Rainbow, and other schemes (GeMSS, etc.). Multivariate signatures often have fast signing and small signatures, but tend to have huge public keys and have suffered many attacks. In the new submissions, schemes like MAYO, MQOM, UOV variants (e.g., QR-UOV) are present. Rainbow’s failure was a caution, but maybe a tweaked MQ scheme could survive. The Round 2 candidate MAYO is notable; it merges ideas from UOV with additional structure to try to resist known attacks. If any multivariate scheme holds up, it could offer an alternative with potentially short signatures (Rainbow, for instance, had ~ few hundred byte signatures).

- Isogeny-Based Signatures: Despite SIKE’s failure for KEM, there is an isogeny-based signature called SQIsign in Round 2. SQIsign is built on a different isogeny problem (quotienting by 2-torsion, etc.) and is an evolution of a scheme that is significantly faster than old identification schemes but still somewhat experimental. It has extremely small public keys and signatures (on the order of a few hundred bytes) if it’s secure. However, isogeny cryptography is in a delicate spot after 2022 – confidence is a bit shaken. If SQIsign survives analysis, it could be standardized, giving us a very compact signature option that’s not lattice/hash. Time will tell if it holds up to cryptanalysis.

- MPC-in-the-Head (Zero-Knowledge) Signatures: These are like the Picnic signature that was an alternate in the main competition. They work by proving knowledge of a secret key through a zero-knowledge proof that is made non-interactive. They rely only on symmetric primitives (block ciphers, hashes) for security. Picnic was not chosen by NIST (because SLH-DSA offered a similar trust basis with better overall parameters). New entrants like Mirath (a merger of two ZK-based schemes MIRA and MiRitH) and PASTA and Falcon-MPC (not to be confused with FN-DSA, this is different) were submitted. In Round 2, Mirath advanced. FAEST (which uses AES as part of the proof) also advanced as a symmetric/ZK scheme. These schemes often have moderate signature sizes (tens of KB, similar to SLH-DSA or a bit smaller) and slow verification, but they offer non-lattice security. NIST may eventually standardize one if it significantly outperforms SLH-DSA or offers some advantage.

- Lattice-Based (beyond ML-DSA/FN-DSA): NIST was open to lattice submissions that significantly outperform ML-DSA/FN-DSA. One such attempt is HAWK, a lattice signature that uses different techniques (perhaps ring-LWE with different trade-offs). HAWK made it to Round 2, indicating it might offer something novel. However, given ML-DSA’s strength, a new lattice scheme would need to be much smaller or faster to justify adding – which is challenging.

At the time of writing, NIST has 14 signature candidates in Round 2 (having eliminated 26 of the original 40 in Round 1). By mid-to-late 2025, we might see a down-select to Round 3 candidates, and a final selection perhaps by 2026-2027. NIST expects to choose perhaps a couple of these new signature schemes for standardization, to serve as backups alongside ML-DSA, SLH-DSA, and FN-DSA. In particular, a general-purpose signature that is not lattice-based is highly desired (to hedge against any future breakthroughs in lattice cryptanalysis). So, a lot of eyes are on the code-based and multivariate tracks – if CROSS or MAYO or another such scheme emerges unbroken and with acceptable efficiency, that could be a strong contender for standardization. We could also see a “Picnic 2.0” style ZK signature like Mirath or FAEST standardized if they prove efficient enough, since their security relies only on AES and hashes which are very well trusted.

For now, though, these are all candidates. None have yet reached the maturity or confidence level of the first-wave algorithms. Until they do, ML-DSA, SLH-DSA, and FN-DSA remain the only game in town for quantum-safe signatures. Organizations planning their PQC migration likely won’t wait for these; they’ll implement the known standards and keep an eye on the outcome of NIST’s ongoing signature project. Still, it’s reassuring that NIST is cultivating a pipeline of new options – this will ensure that in the long term, we’re not limited to just one or two kinds of signatures.

Other Efforts: Symmetric Key and Hash Standards

It’s worth mentioning that outside the public-key algorithms, standardization bodies are also considering tweaks to symmetric algorithms and hash functions in a post-quantum context. For symmetric ciphers, Grover’s algorithm provides a quadratic speedup (by brute forcing in √N time), effectively halving the security level; the guidance is to double key lengths (e.g., use AES-256 instead of AES-128). For hash functions, the situation is nuanced. Quantum algorithms like Brassard-Høyer-Tapp (BHT) theoretically speed up collision finding more significantly (O(2N/3) complexity). However, these attacks require massive amounts of quantum memory. NIST’s analysis considers the overall computational cost, assessing that SHA-256 still provides adequate security (NIST Level 2). NIST therefore standardized AES-256 and SHA-384 years ago. So there isn’t an urgent need for new symmetric primitives for PQC (we can just use bigger versions of existing ones). However, interestingly, China’s call for PQC algorithms (discussed next) explicitly covers cryptographic hash functions and block ciphers as well, i.e. it’s broader in scope than NIST’s was. This suggests some initiatives might explore new symmetric designs optimized for post-quantum performance or assurance. The general consensus though is that our current symmetric crypto, if used with sufficient parameters, remains sound against quantum attacks (with the caveat that key lengths and hash outputs must be increased to compensate for these quantum threats).

NIST, for its part, has also standardized hash-based signatures (stateful ones like XMSS and LMS) in a separate effort, though those are mainly targeted to specialized use (e.g., in certain governmental or hardware contexts) and have not seen widespread adoption due to state management issues. SLH-DSA was chosen specifically because it’s stateless and more practical for general use than those earlier hash-based schemes.

Overall, the PQC standardization beyond public-key algorithms is relatively quiet – the big focus is on KEMs and signatures. Symmetric crypto and hashes only needed minor adjustments, not new standards (as of now).

Comparing PQC with Classical Cryptography (Practical Considerations)

As organizations start adopting these post-quantum algorithms, a natural question arises: How do they compare to our classical algorithms in practice? We’ve touched on some comparisons above, but here we consolidate the key differences in terms of security, performance, and operational impacts.

Security Assumptions and Confidence

Classical public-key crypto relies on RSA (factoring) or discrete log (finite fields or elliptic curves) – problems that could be easily solved by Shor’s algorithm on a quantum computer. In contrast, the chosen PQC algorithms rely on problems like lattice LWE (ML-KEM, ML-DSA, FN-DSA), hash function hardness (SLH-DSA), error-correcting code decoding (HQC/McEliece), etc., none of which are known to be breakable by quantum algorithms any faster than by classical attacks. In fact, for many of these problems, not even sub-exponential classical algorithms are known – they appear to be much harder than RSA/ECC at comparable parameter sizes. That said, PQC is newer and the assumptions haven’t had as many decades of scrutiny as RSA/DLog. Lattice cryptography, in particular, enjoys a lot of academic confidence (some lattice problems even have worst-case to average-case reductions), but they are young relative to RSA. Thus, in terms of confidence level: RSA/ECC have the benefit of simplicity and history, whereas lattices, codes, etc., bring a diversity of assumptions that are believed quantum-safe but will continue to be studied. Including SLH-DSA (hash-based) in the mix gives an extremely conservative option since hash functions like SHA-256 are very well analyzed and unlikely to be fundamentally broken. NIST has deliberately balanced performance with caution by picking a spread of algorithms (lattice for speed, hash for caution, code as a backup, etc.).

Key Sizes and Bandwidth

One of the most noticeable differences is that PQC algorithms generally have larger public keys, secret keys, and ciphertexts/signatures than the classical algorithms they replace. We saw concrete numbers: e.g., ML-DSA signatures are 2-3 KB vs ECDSA’s ~64 bytes; ML-KEM-768 public keys are ~1184 bytes vs RSA-3072’s ~384 bytes. In key exchange, the TLS handshake using ML-KEM will carry roughly an extra kilobyte or two compared to an ECDH handshake, and a ML-DSA-signed certificate will be a few KB larger than an ECDSA one. These increases will impact bandwidth and storage to some degree:

- Certificates: X.509 certificates with PQC will have larger SubjectPublicKeyInfo and signature fields. A chain of multiple certificates each 2-5 KB in signatures can add tens of KB to the TLS handshake. Protocols may need to adjust size limits or use compression. Efforts are underway to possibly define new certificate formats or compression schemes (e.g., TLS Certificate Compression) to mitigate this.

- Network packets: Some protocols (like DNSSEC, which has UDP packet size limits) might have issues. For example, a DNSSEC response carrying a 2 KB signature might exceed typical UDP 512-byte limits, forcing more TCP fallback. Similarly, IoT protocols that assume very small message overhead might need to account for bigger sizes.

- On-Chain and On-Device Storage: In blockchain systems, if one were to migrate to PQC signatures, block sizes and UTXO sizes would grow. On constrained devices that need to store keys, a 1 KB public key vs a 32 byte one is an increase but likely manageable given modern flash sizes.

Overall, the bandwidth hit, while not negligible, is not catastrophic for most modern systems (we routinely stream megabytes of data; a few extra KB in a handshake is usually fine). But it does mean architects should plan for increased message sizes and perhaps test the performance in high-latency networks (where larger handshake messages can slightly increase handshake completion times, especially if additional round trips are needed for fragmentation). Studies have shown that in good networks, PQC handshakes are only a few milliseconds slower, but in very lossy networks, the larger sizes can exacerbate delays. Techniques like pipelining, early data, or just using robust transport can alleviate this.

To put numbers on it, NIST’s summary of sizes is useful: the “first 15” algorithms had various sizes, but ultimately, the standardized ones have keys/signatures on the order of KB. Most of the Round 4 KEM candidates had public keys between 1-7 KB and ciphertexts 1-15 KB. By comparison, RSA keys are measured in tens or hundreds of bytes, and ECC keys in tens of bytes. So yes, PQC will generally use more bandwidth.

One notable exception is that FN-DSA’s sizes are surprisingly small – an 897-byte public key and 666-byte signature. That’s one reason FN-DSA is kept around: it shows PQC can be competitive even in size with older schemes (FN-DSA’s sig is only ~2.5× the size of a 256-bit RSA sig). And if SQIsign (isogeny signature) or some new scheme pans out, we could get signatures in the few hundred byte range, which would be great – but that’s speculative for now.

To succinctly answer: PQC algorithms do tend to have larger size overhead than classical ones. Expect keys and signatures/ciphertexts to be anywhere from 5× to 20× (or more) larger, depending on the algorithm. This is one of the key trade-offs for quantum safety. Fortunately, computing power, storage, and bandwidth have increased to a point where these larger sizes are usually acceptable.

Computational Performance

In terms of raw computation, many PQC algorithms are quite efficient – in some cases more efficient than the classical algorithms:

Key Generation

PQC keygen can vary. ML-KEM keygen is fast (just picking some random polynomials) – tens of thousands of ops/sec, much faster than RSA keygen which is an involved process of finding large primes (only ~30 ops/sec in the comparison). ML-DSA keygen is also trivial (sample random matrices/vectors). FN-DSA keygen is heavy (solving NTRU, which is slower). Generally, lattice-based schemes have cheap keygen; RSA/ECC keygen is slower.

PQC keygen can vary. ML-KEM keygen is fast (just picking some random polynomials) – tens of thousands of ops, much faster than RSA keygen which is an involved process of finding large primes (only ~30 ops/sec in the comparison). ML-DSA keygen is also efficient (sample random matrices/vectors). FN-DSA keygen is heavy (solving NTRU, which is slower). Generally, lattice-based schemes have cheap keygen. RSA keygen is very slow due to the requirement of finding large primes. ECC keygen is significantly faster than RSA – while the private key is just a random number, generating the corresponding public key requires a computationally intensive elliptic curve scalar multiplication, making it slower than lattice-based key generation.

Encryption/Encapsulation

ML-KEM encapsulation (encryption) involves polynomial multiplications, which are fast. It can be done in a few microseconds, comparable to or slightly slower than an ECC scalar multiplication. RSA encryption with e=65537 is extremely fast too (a millisecond or less even on small devices). So at worst, ML-KEM encap is in the same ballpark as classical encryption; at best, with optimized code, it’s almost as fast or faster. For example, our earlier table shows ML-KEM-512 encap ~80k ops/sec vs RSA-2048 encap 150k ops/sec – so RSA still wins on raw encryption speed, but ML-KEM was within 2×, which is great given the increased security. For decapsulation (decryption), ML-KEM far outstrips RSA (100k vs 1.4k ops/sec), meaning handling incoming connections with ML-KEM is way easier on the CPU than with RSA.

Similarly, ECDH vs ML-KEM: one X25519 operation is fast (~50k ops/sec on a core), whereas ML-KEM decap can reach 100k ops/sec – so actually ML-KEM is faster than X25519 in decapsulation; encapsulation was ~80k vs X25519 ~50k, so also faster. So lattice KEMs are extremely fast and lightweight on CPU (plus they vectorize well, etc.). Code-based KEMs like HQC are slower than ML-KEM but still on the order of maybe thousands of ops/sec (HQC decap measured in IR 8545 was maybe in 0.7 to 1.4 million cycles, which is like 1-2k ops/sec on a 3GHz CPU – not bad, though slower than ML-KEM’s 100k/sec). Classic McEliece decap is even faster (because it’s a simple linear algebra operation). So performance-wise, none of the KEMs are too slow for practical use, except SIKE was slow (but it’s gone).

Signing

ML-DSA signing is relatively fast, but not as fast as ECDSA or EdDSA at low security. Ed25519 can sign ~500k per second on a core; ML-DSA-II might sign ~200k per second (rough estimates from that Go benchmark). At higher security, ML-DSA’s advantage grows – ECDSA-384 dropped to ~76k/sec while ML-DSA-III was ~116k/sec in one test. So for moderate security, we pay maybe a 3× slowdown in signing vs Ed25519. Verification is the opposite: ML-DSA verifies extremely fast (multiple times faster than ECDSA/EdDSA). This is beneficial for server workloads that verify many signatures (like TLS servers verifying client certs, or software updaters verifying code signatures).

FN-DSA signing is slower (~50k ops/sec or less, plus requiring heavy math) and FN-DSA verification is very fast (similar to ML-DSA’s ballpark). SLH-DSA signing is very slow (maybe a few hundred signatures per second on a core, due to thousands of hashes), and verification also relatively slow – thus not suitable for high-frequency signing.

Verification

As noted, lattice sigs verify blazingly fast. Hash-based (SLH-DSA) verify speed is moderate (lots of hashes, but those can be parallelized somewhat). RSA verify is quite fast (public exponent is small usually), ECDSA verify is moderate (requires an EC scalar multiplication or two). In many scenarios, verifying PQC sigs will not be the bottleneck; it’s the size that’s the concern. For instance, a TLS server can handle verifying ML-DSA client signatures easily – the network transfer of that signature likely costs more time than the actual verify computation.

Overall system impact

Many researchers have done prototype integrations (Cloudflare in TLS, AWS in S2N, etc.) and found that the overall impact of switching to PQC can be modest in computational terms. A 2020 study by Cisco showed post-quantum TLS handshakes were only 1-2 ms slower than classical ones in good conditions. Cloudflare reported that hybrid X25519+ML-KEM handshakes were “hard to distinguish by eye” from normal ones in speed. The bigger impact was on packet size, not CPU load, in those tests. So CPU-wise, most devices that can handle RSA/ECC today can handle lattice-based PQC easily. In fact, they might even see reduced CPU for private-key ops (like a server doing many handshakes might find ML-KEM less CPU-intensive than RSA, shifting the bottleneck to network I/O).

Side-channel considerations

One performance-related consideration is that some PQC algorithms require constant-time implementations to avoid side-channel leaks, which can sometimes mean using bit operations or table lookups carefully. For example, implementing ML-DSA or FN-DSA in pure constant time may incur some overhead (though typically still fine). Classic algorithms also needed this (e.g., RSA blinding, etc.). So no major change there except ensuring new code is also hardened.

| Algorithm | Type | Public Key Size (Bytes) | Ciphertext/Signature Size (Bytes) | Security Level (Equivalent) | Performance Notes |

|---|---|---|---|---|---|

| ML-KEM-768 (Kyber) | KEM | 1,184 | 1,088 | AES-192 | Faster decapsulation than RSA; ~80k ops/sec encapsulation |

| ML-DSA-65 (Dilithium) | Signature | 1,952 | 3,293 | NIST Level 3 | Verification ~20x faster than ECDSA; larger than ECC |

| FN-DSA (FALCON, draft) | Signature | 897 (Level 1) | 666 (Level 1) | NIST Level 1 | Compact but complex implementation; fast verification |

| SLH-DSA (SPHINCS+) | Signature | 32 (small) | 7,856 (small) | NIST Level 1 | Slow signing (~hundreds/sec); conservative hash-based |

| HQC-192 | KEM | ~4,500 (approx.) | ~9,000 (approx.) | AES-192 | Balanced speed; larger than ML-KEM but diverse assumption |

In summary, compute demands of PQC are generally quite manageable. Lattice-based schemes in particular are very fast, often comparable to or faster than classical crypto in many operations. The outlier is hash-based schemes (SLH-DSA) which are slow – but those likely won’t be heavily used in performance-sensitive paths. If anything, organizations might notice a drop in RSA decryption CPU usage when moving to ML-KEM, at the expense of slightly more work generating signatures (if using ML-DSA vs ECDSA). But in most real-world scenarios, this is negligible in the grand scheme (network and I/O latency dominate). So compute is not a blocker for PQC – the algorithms were specifically chosen for their excellent performance as well as security. The bigger adjustments will be handling the larger sizes and ensuring system-level compatibility.

Replacing Classical Algorithms: What Goes Where

To be explicit about which PQC algorithms replace which classical ones:

RSA Encryption (e.g., RSA-OAEP)

Replace with ML-KEM (or HQC). Any scenario where you encrypt a symmetric key with an RSA public key (like sending an email in S/MIME, or encrypting a small secret) should transition to using a KEM like ML-KEM. This includes things like key transport in TLS (if that were used), or in hybrid encryption in protocols.

Diffie-Hellman / ECDH (Key Agreement)

Replace with ML-KEM (or HQC). In protocols like TLS 1.3, IKEv2, etc., where we use ECDH (X25519, secp256r1, etc.) to agree on a shared secret, we will instead use a KEM. TLS 1.3, for instance, might advertise a ML-KEM-768 “group” and do a KEM encapsulation to the server’s public key rather than a scalar multiplication. The end result is the same kind of shared secret, but achieved via a KEM flow rather than Diffie-Hellman. As an aside, KEMs are non-interactive so they fit into a Diffie-Hellman slot nicely (client sends an encapsulated key, server decaps and responds).

RSA Signatures (e.g., RSA-PSS)

Replace with ML-DSA (or FN-DSA, SLH-DSA). Any place you do an RSA signature – code signing, certificate signing, document signing (PDFs), etc. – you would use a PQC signature. ML-DSA would be the likely default for most. FN-DSA could be used if signature size is a concern (e.g., certificate authorities might choose FN-DSA to keep certificates small). SLH-DSA could be used if you want to avoid number-theoretic assumptions completely, but you pay in size.

ECDSA/EdDSA Signatures

Replace with ML-DSA/FN-DSA/SLH-DSA similarly. For instance, Bitcoin and many cryptocurrencies use ECDSA or Schnorr (EdDSA) for signing transactions – a PQC migration there could use ML-DSA or FN-DSA (there’s active research on PQC for blockchain; FN-DSA is attractive due to short sigs in that context). WebAuthn and other authentication protocols that use Ed25519 could migrate to ML-DSA or FN-DSA too.

DSA (Discrete Log Signatures)

This is legacy now, but any DSA usage would migrate to PQC signatures as well.

Symmetric algorithms

Not replaced, just maybe strengthened – AES-128 -> AES-256, 3DES (if anyone still uses it) -> AES-256, SHA-256 -> SHA-512 (or SHA3). NIST has already been advising longer keys; the U.S. government’s CNSA suite requires at least AES-256 and SHA-384 for “post-quantum” robustness, even before PQC algorithms are deployed.

One area to note is hybrid schemes: During the transition, many standards bodies (IETF, etc.) are recommending hybrid modes – i.e., use a classical algorithm and a PQC algorithm in parallel. For example, do an ECDH and a ML-KEMexchange and mix the keys, or sign with both ECDSA and ML-DSA on a certificate. This provides security against classical and quantum adversaries in the interim (since PQC is new, maybe one is more comfortable combining it with tried-and-true algorithms until confidence builds). Eventually, the classical part can be dropped once PQC is fully trusted and required. This hybrid approach is a temporary strategy (the German BSI, US NSA, and others have recommended it in certain contexts). It does incur even more overhead (two of everything), but it’s a pragmatic approach for early adopters.

Implementation and Integration Challenges

Replacing crypto primitives in real-world systems is not just about speed and size. There are practical integration issues too:

Standards and Protocol Support

Protocols need to be extended to support new algorithm identifiers, new parameter sizes, etc. We need updates to TLS (there’s already RFC drafts for PQC in TLS 1.3), S/MIME, JWT, VPN protocols, etc. Many of those are in progress, but until finalized and widely implemented, using PQC might mean custom configurations.

Software and Hardware Support

Crypto libraries like OpenSSL, BoringSSL, libsodium, etc., are adding PQC. OpenSSL 3.0+ already includes some post-quantum algorithms in an “provider” module (like an OQS provider). WolfSSL, Microsoft’s SSPI, and others have test implementations. Hardware support (in HSMs, smart cards) is lagging – today’s HSMs mostly don’t do ML-DSA or ML-KEM yet, though vendors are planning it. This might be a bottleneck for adoption in high-security contexts that rely on HSMs for key management.

Side-Channel and New Failure Modes

Implementing PQC securely requires care. For instance, lattice algorithms involve sampling small random values – if not done in constant time, could leak info via timing or power analysis. There have already been some side-channel attacks on early implementations (e.g., one on a FrodoKEM implementation via power analysis). Developers need to follow recommended implementations and harden them. Also, some PQC schemes have unique failure modes – e.g., ML-DSA (Fiat-Shamir) will abort and retry occasionally if some checks fail; if an implementation were to leak whether an abort happened via a timing channel, that could leak the secret key. Proper implementations avoid that, but it’s a new thing devs must be aware of. Similarly, making sure to use the exact parameter sets and not “optimize” them incorrectly is crucial (one must not, say, reduce a security parameter to make signatures smaller – that could be dangerous). NIST’s standards are precise about parameters and even rename algorithms to fix the versions (ML-DSA-65, etc. are fixed sets).

Infrastructure changes

Certificate authorities will need to issue PQC certificates. A whole new PKI might emerge or existing ones will migrate. Key lengths in ASN.1 structures need to accommodate bigger keys (some old code might assume signatures < 1024 bytes and break if they see 2000 bytes, etc.). These are the kinds of things that testing and pilot deployments are discovering.

Overall, while PQC algorithms are solid technically, the ecosystem migration is a multi-year effort. Entities like the U.S. government via OMB memorandum have deadlines (e.g., inventory systems by 2023, test migration by 2025, complete transition by 2035). Ensuring factual correctness and up-to-date info is critical in this phase, as many will rely on standards documentation and scholarly articles to guide their implementations.

Global PQC Standardization Efforts and Differences

While NIST’s process has been the focal point of global PQC standardization (and de facto, many countries intend to follow NIST’s recommendations), it’s not the only effort. Other nations and organizations have their own PQC programs. Here we highlight a few, notably China’s initiative, and briefly mention others like Europe and Russia.

China’s PQC Standardization Initiative

China historically has maintained its own national cryptographic standards, independent of Western standards. For example, China uses the SM series algorithms (SM2 ECC, SM3 hash, SM4 block cipher) domestically instead of RSA/AES. It is therefore not surprising that China is also pursuing its own post-quantum standards. In February 2025, news emerged that China (through the Institute of Commercial Cryptography Standards, ICCS, under the state Cryptography Administration) launched a global call for post-quantum algorithm proposals. This Chinese PQC project appears to parallel NIST’s, but with some differences in scope and process:

- Scope: China’s call covers not only public-key encryption and signatures, but also cryptographic hash functions and block ciphers that are quantum-resistant. This is interesting, as it suggests China might consider new symmetric primitives (though, as noted, current ones just need longer keys). It could be an attempt to build a complete domestic crypto suite that’s quantum-safe, possibly aiming to replace SHA-2/SM3 with a new hash if they find a better one, etc.

- Process Transparency: NIST’s process has been very open, with public conferences, open forum discussions, and published evaluation criteria. China’s process is described as more opaque by experts. The Chinese approach to standardization is likely less publicly transparent – we might not know all submissions or see all analyses published in English, for instance. However, ICCS did invite international participation in their call, indicating they want to be seen as open and global. Whether non-Chinese researchers will actually submit is unclear, but the invitation is there.

- Motivations – Backdoors and Trust: A speculated reason for China’s independent move is concern over potential “back doors” in US-led standards. This stems from historical distrust (e.g., the NSA’s involvement in Dual_EC or speculation about curves with backdoors). Chinese authorities likely prefer algorithms that they have vetted or designed, to ensure they aren’t built with any hidden weaknesses favoring the West. Conversely, there is also external speculation that China might want to incorporate its own covert access into encryption standards it designs – though any algorithm that’s public and globally evaluated would be hard to backdoor without detection. In any case, the goal is technological self-reliance: China wants to reduce dependence on NIST/Western crypto and be a leader in the field on its own terms.

- Timeline: The ICCS call was open as of early 2025, with guidelines for hash function proposals already released (public comment by March 15, 2025). This suggests China is in the early stage of collecting algorithms, similar to where NIST was in 2017. It might be a few years before we hear of Chinese selections. It’s plausible they might adopt algorithms similar to NIST’s if they trust them (Moody noted China previously selected algorithms “similar to those chosen by NIST” in the past; indeed, China’s SM2 is an ECC algorithm much like ECDSA). They could, for example, end up standardizing something like ML-KEM or a variant of it under a different name if they deem it secure. Alternatively, Chinese researchers might have their own lattice or code-based schemes to promote. There have been Chinese contributions in the NIST contest (e.g., the “Dragonfly” signature was by Chinese authors, etc.), so certainly the expertise is there.

- Cooperation vs Competition: NIST has said they will “monitor China’s efforts” and wouldn’t rule out incorporating strong Chinese-developed algorithms into NIST’s framework if they offer improvements. This is an interesting point: cryptography isn’t strictly a zero-sum nationalistic game – if China invents a really good algorithm, the world could benefit from using it (assuming it’s indeed good). On the flip side, if NIST’s algorithms become global standards, China might still adopt them unofficially or dual-standardize them (for instance, implement them for compatibility with global systems, even if not their official national standard).

In short, China’s PQC effort underscores a potential split where multiple standards could emerge. However, the algorithms might not differ drastically – if China finds NIST’s picks secure, they might just standardize “their own version” of them (perhaps with different parameters or implementations). On the other hand, they might come up with distinct algorithms. One example: Chinese researchers have proposed variants of lattice schemes (like “Round5” in the NIST contest had some Chinese contributors, etc.). They might favor certain designs or exclude others.

One should also note that standardization can have political ramifications: if, say, Chinese standards become widely adopted in the Belt and Road Initiative countries, and Western standards elsewhere, we could see a bifurcation in cryptographic ecosystems (similar to how some countries use SM2/SM4 vs RSA/AES). That would complicate interoperability. Ideally, there will be convergence, but it’s too early to tell. The ICCS encouraging international submissions is somewhat promising – it means China wants to be seen as an international player, not just a closed shop.

From a technical perspective, the main difference might end up being in naming and acceptance rather than completely different mathematics. But we won’t know until the Chinese process picks its candidates and we see if they’re novel or known algorithms.

Other International Efforts

- Russia and others: Like China, Russia tends not to trust US standards and do their own. Russia has a tradition of using its GOST algorithms. It’s likely Russia will create or is creating its own PQC algorithms too, though information is scarce. They did send a couple of submissions to NIST (e.g., a hash-based signature submission from Russia, IIRC), but if geopolitical isolation continues, Russia may push its own standards for domestic use.

- Europe: The EU hasn’t run an independent competition, largely aligning with NIST. However, European researchers were heavily involved in NIST’s process (many algorithms had European co-authors, e.g., a lot of the lattice schemes came from EU teams). Bodies like ETSI and ENISA are working on implementation guidelines and migration strategies, but not separate algorithms. One notable European-led initiative is the “PQCrypto” series of conferences and some projects funded by EU (like PQCRYPTO project in the past) which fed into the NIST process. So Europe’s approach is collaborative. Some European countries’ agencies (e.g., Germany’s BSI) have already endorsed using NIST’s selected algorithms (even ahead of formal standardization) for future-proofing communications.

- ISO/IEC: The ISO working group for cryptography (SC27) tends to standardize algorithms that have matured in other venues. It’s likely ISO will take NIST’s finalized algorithms and issue ISO standards for them as well, which helps countries that prefer ISO standards. There might be some modifications (for example, different hashing options or parameter generalizations).

- National Schemes: Besides China and Russia, other nations might have their own takes. For instance, Japan’s CRYPTREC might evaluate PQC for use in Japanese e-government. So far, Japan seems aligned with NIST (many Japanese researchers were part of NIST submissions). Similarly, South Korea’s algorithms (KCDSA, etc.) might have PQC analogues or they’ll adopt NIST ones.

- Industry Consortia: The IETF is actively working on standards for using PQC in Internet protocols. For example, there are active IETF drafts exploring how to integrate hybrid key exchange (e.g., combining ECDH and ML-KEM) into the main TLS 1.3 handshake. Separately, the Hybrid Public Key Encryption (HPKE) standard (RFC 9180), a general framework utilized in features like TLS Encrypted Client Hello, has been officially extended (via RFC 9580) to support standardized PQC KEMs like ML-KEM. The CA/Browser Forum (which governs browser certificate requirements) will have to allow PQC algorithms in certificates. The Cloud Security Alliance has a PQC working group giving migration advice. In telecom, the GSMA has produced guidelines for PQC in 5G/6G. These efforts differ mainly in focus (practical deployment vs algorithm selection).

One interesting standardization twist: patents and IP. Some PQC algorithms had potential patent issues (e.g., certain code-based schemes had patents). NIST required submitters to either provide royalty-free licenses or ensure no encumbrance. This influenced choices too – an algorithm that’s patented (requiring royalties) would be a poor standard. NIST managed to avoid that with its picks (ML-KEM, ML-DSA, etc. are free to use). If any international algorithm came with IP strings attached, it might not see global adoption. So open availability is a must.

Migration and Adoption Timelines

It’s worth noting that even though NIST has picked algorithms, the real world transition is just beginning. Governments and large enterprises are drawing up roadmaps. The US government, for example, through memoranda, aims to have all federal agencies inventory their cryptographic usage and have a plan to transition to PQC by 2035. The NSA’s Suite B (which was ECC-based) has effectively been replaced by interim guidance to prepare for PQC (NSA calls it CNSA 2.0 with “algorithm agility” as a goal). The EU’s ENISA similarly advises starting migration now for critical data.

For techies and developers, the important message is: start experimenting and integrating PQC now, not later. Many libraries (OpenQuantumSafe liboqs, etc.) make it easy to prototype. You can already turn on hybrid post-quantum TLS with some builds of OpenSSL or in browsers like Chrome (Canary builds have had some PQC experiments). The transition period is unique – we have to upgrade the airplane’s engines while in flight, so to speak. We must ensure our new cryptosystems are not only secure but also interoperable and robust in practice.

Conclusion

The dawn of quantum computing has sparked an urgent cryptographic revolution. Post-Quantum Cryptography (PQC) provides the tools we need to secure our digital world against future quantum threats, and the standardization efforts to select those tools have reached major milestones. NIST’s multi-year PQC competition (2016-2022) resulted in four primary algorithms now being standardized: ML-KEM for encryption/KEM, ML-DSA for signatures (with FN-DSA and SLH-DSA as important alternatives). These schemes offer strong security against quantum attacks while remaining practical in performance – a testament to the advances in cryptography. In 2024, NIST published the first three PQC standards (FIPS 203, 204, 205) and encouraged immediate integration of these algorithms into products and protocols.