Quantum Threat Tracker (QTT) Review: Praising the Tool, Questioning the Demo

The Quantum Threat Tracker (QTT) is a newly released open-source tool by Cambridge Consultants and the Quantum Software Lab at the University of Edinburgh that aims to forecast when quantum computers will break today’s encryption. It combines quantum resource estimation with hardware development roadmaps to predict when cryptographic protocols will be broken. In other words, QTT estimates how many qubits and how much runtime are needed to crack something like RSA, then projects forward based on how quickly quantum hardware might improve.

This is a much-needed tool – it translates complex research into an interactive format for security planners without them having to understand the intricacies of quantum computing or cryptography. In many ways QTT is similar to my proposed CRQC Readiness Benchmark, but QTT is more analytical, with a systematic resource estimation framework. I have no criticism of the tool or its authors; in fact, I think QTT is a great resource that could become very useful for the community. However, I do disagree with its default assumptions and demo scenario, which paint an arguably overly conservative timeline for the “Q-Day” when encryption like RSA-2048 falls.

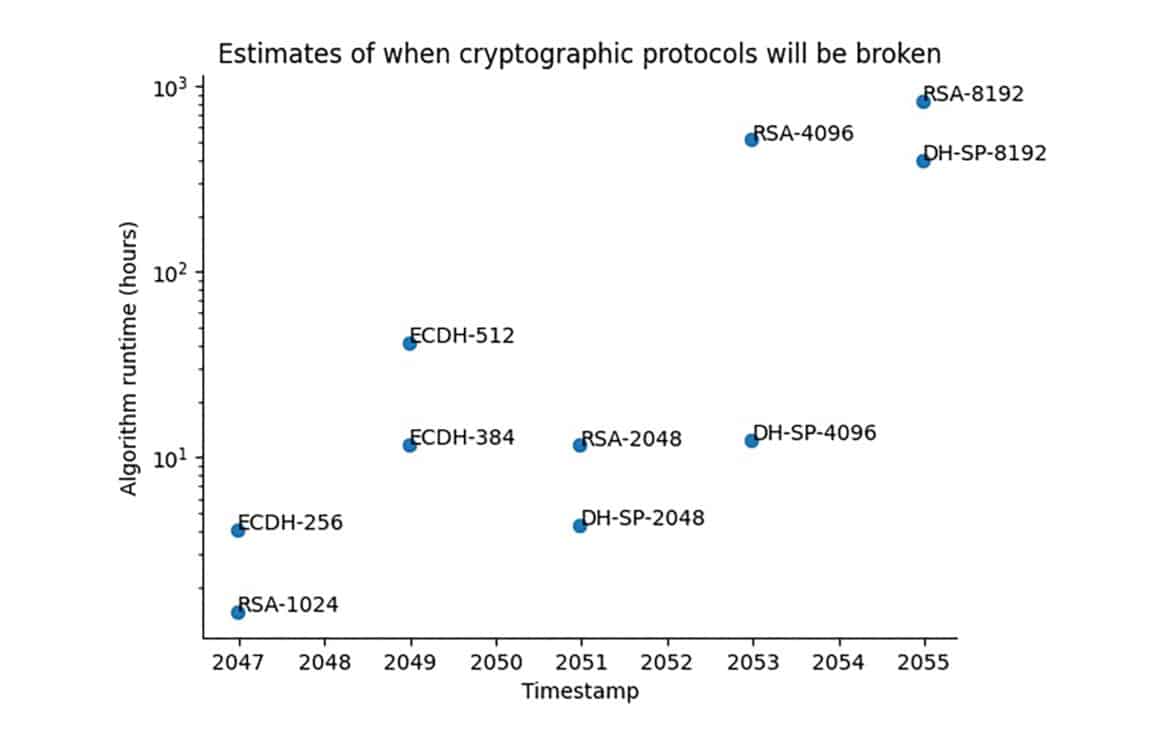

Figure: An example output from the Quantum Threat Tracker’s default settings, showing the estimated years (“Timestamp”) when various cryptographic schemes could be broken by a quantum computer. In this demo, RSA-1024 is projected to fall around 2047, and RSA-2048 around 2051 under the default roadmap assumptions. Larger key sizes (RSA-4096, RSA-8192) and analogous Diffie-Hellman schemes push even later into the 2050s. The vertical axis shows the required quantum algorithm runtime (hours) for the attack, on a logarithmic scale.

In the QTT’s demonstration output (using its default configuration), RSA-2048 is shown being broken only in the year 2051. This late date has raised eyebrows, because it differs drastically from most other predictions – including my own oft-discussed estimate that RSA-2048 will be broken around 2030. Ever since the QTT’s release, I’ve been contacted by many colleagues asking why its forecast is so far out of line with the early-2030s timeline that many of us in the industry have been signaling. Most concerning, however, was seeing this demo image included in a recent board-level awareness session for a Fortune Global 500 enterprise.

To be clear, there is nothing “wrong” with QTT’s calculations per se; the tool allows users to set their own parameters for quantum progress. The default settings, though, appear to use some very pessimistic (or ultra-conservative) assumptions. My concern is that people seem to be taking the demo output (2051 for RSA-2048) as an authoritative prediction from Cambridge/Edinburgh and getting a false sense of security. Many busy executives will see a chart like that and conclude “Oh, the experts say we have until 2051” – which would be dangerously misleading. In my opinion, many large enterprises are already late in starting their post-quantum migrations, and publications or tools that imply “no urgency until 2050+” risk giving them another excuse to procrastinate.

How QTT Predicts Cryptographic Break Timelines

The QTT produces “threat timelines” by combining quantum resource estimates with a projected hardware roadmap. In practice, QTT first calculates the quantum resources required to break a given cryptosystem (e.g. RSA-2048) – including the number of logical qubits, gate operations, and runtime – using known quantum algorithms like Shor’s and its variants (Baseline Shor, Gidney-Ekerå, Chevignard, etc.). It then assesses when those resources might become available by referencing a model of hardware progress over time. Essentially, QTT asks: “Given the improving qubit counts, error rates, and gate speeds on quantum hardware, when will a quantum computer be able to execute this attack in a practical timeframe?” The output is a year-by-year timeline showing the time-to-attack for each cryptographic target, and it highlights the year when that time-to-attack becomes short enough to render the cryptosystem insecure. For example, QTT’s demo timeline shows that RSA-2048 remains impractical to crack for decades, only dropping to a few-hour attack around the year 2051 (whereas smaller keys like RSA-1024 or ECC-256 drop earlier, in the 2040s). In other words, QTT forecasts “Q-Day” for RSA-2048 around 2051.

Internally, the QTT framework has modules for Quantum Resource Estimation (QRE) and a Lifespan Estimator. The QRE module uses analytic formulas and tools (including Microsoft’s Azure QRE and Google’s Qualtran cost models) to compute the logical qubit count and circuit depth needed for an attack. QTT includes the latest published algorithmic improvements to Shor’s algorithm. For instance, it provides options for a baseline Shor implementation, the Gidney–Ekerå 2019 optimization, and the Chevignard et al. 2024 algorithm. These algorithms differ in resource trade-offs; e.g. Gidney & Ekerå’s method significantly reduced qubit and T-gate counts (they estimated factoring 2048-bit RSA with ~6,000 logical qubits and 8 hours runtime, versus far more for naive Shor). Chevignard’s recent technique further cuts qubit requirements by processing the 2048-bit number in small chunks, bringing the needed physical qubits down from many millions to “under one million” (at the cost of a longer circuit). QTT incorporates these improvements (as different attack “models”), so it is not ignoring known algorithmic advances – it explicitly allows choosing improved algorithms like Chevignard’s to lower the resource estimates. Once QTT knows that RSA-2048 factoring will require, say, N logical qubits and T hours of operation at a certain error-corrected gate fidelity, it turns to the hardware roadmap to estimate when a machine with ≥N logical qubits and adequate coherence time will exist.
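The two-step logic described above (estimate N and T, then look up when a roadmap can supply them) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not QTT’s actual code or API: the `RoadmapPoint` and `AttackEstimate` types, the `first_feasible_year` function, and every roadmap number are hypothetical. Only the ~6,000 logical qubits and 8 hours come from the Gidney–Ekerå figures quoted above.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class RoadmapPoint:
    """One row of a hypothetical hardware roadmap (not QTT's real schema)."""
    year: int
    logical_qubits: int       # error-corrected qubits assumed available that year
    max_runtime_hours: float  # longest computation the machine is assumed to sustain


@dataclass(frozen=True)
class AttackEstimate:
    """Resource estimate for one attack: N logical qubits for T hours."""
    name: str
    logical_qubits: int
    runtime_hours: float


def first_feasible_year(attack: AttackEstimate,
                        roadmap: Iterable[RoadmapPoint]) -> Optional[int]:
    """Return the earliest roadmap year that meets both requirements, else None."""
    for point in sorted(roadmap, key=lambda p: p.year):
        enough_qubits = point.logical_qubits >= attack.logical_qubits
        enough_time = point.max_runtime_hours >= attack.runtime_hours
        if enough_qubits and enough_time:
            return point.year
    return None


# Purely illustrative roadmap values; these are assumptions, not QTT defaults.
roadmap = [
    RoadmapPoint(2030, 100, 1.0),
    RoadmapPoint(2035, 1_000, 10.0),
    RoadmapPoint(2040, 4_000, 24.0),
    RoadmapPoint(2045, 10_000, 100.0),
]

rsa2048 = AttackEstimate("RSA-2048, Gidney-Ekerå figures", 6_000, 8.0)
print(first_feasible_year(rsa2048, roadmap))  # 2045 for this made-up roadmap
```

The point of the sketch is that the forecast year is entirely a function of the two inputs: swap in a different roadmap or a cheaper attack estimate and the answer moves accordingly.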

Hardware Trend Extrapolation and Growth Assumptions

A key modeling piece is how QTT extrapolates quantum hardware capability growth. The user (or QTT by default) provides a hardware roadmap – essentially a timeline of projected hardware parameters (years with corresponding qubit counts, error rates, etc.). If no detailed roadmap is given, QTT must rely on historical trends or simple growth rates. In practice, QTT’s default approach appears to assume a steady exponential growth in available logical qubits per year – roughly analogous to a “quantum Moore’s Law,” but at a conservative pace. For example, based on QTT’s output, it seems to be assuming that fully error-corrected logical qubit counts will double every few years (or grow by a fixed percentage annually). This yields a gradual climb from essentially 0 logical qubits today (since current devices are NISQ-only) to only a few thousand logical qubits by mid-century. That pace is slower than the most bullish industry roadmaps. Notably, QTT’s forecast of RSA-2048 being breakable ~2051 implies that the model expects it to take ~25+ years for quantum hardware to reach the necessary scale. By contrast, if one assumed the aggressive hardware scaling some companies predict (e.g. fully error-corrected million-qubit systems by the 2030s), one would project a much earlier break date. QTT’s longer timeline reveals its conservative hardware extrapolation: it likely bases growth on demonstrated progress to date (which has been slower and linear at the logical-qubit level) rather than on promised breakthroughs.

In concrete terms, QTT may define the roadmap in terms of physical qubits and error rates improving at a fixed rate. For instance, it might assume the number of physical qubits in the largest machine grows by, say, ~10–20× every decade (roughly consistent with going from ~50 qubits in 2017 to ~1,000 qubits in 2027). Coupled with a modest improvement in error rates, this translates to only a handful of logical qubits by the late 2020s, a few dozen by the 2030s, and so on – pushing the era of hundreds or thousands of logical qubits out to the 2040s or later. Under such assumptions, RSA-2048 stays safe for quite a while. This methodology is evidence-based but possibly outdated. It extrapolates past trends (where qubit counts were low and error-corrected qubits nonexistent) and doesn’t fully account for potential inflection points – for example, the advent of modular scaling, better qubit architectures, or a massive funding influx, any of which could accelerate progress beyond the historical rate. In short, QTT’s hardware model is a cautious single-exponential growth curve, whereas some in the industry expect super-exponential gains or at least a faster exponential (sometimes called “Neven’s law”). QTT does allow the roadmap to be customized, but its default predictions use a rather flat trajectory for the coming decades.
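To see how strongly the assumed growth factor drives the result, here is a small sketch of a single-exponential extrapolation. It is only an illustration of the kind of curve discussed above, not QTT’s roadmap model: the `year_reaching` helper, the ~50-qubits-in-2017 starting point, and the candidate growth factors are all assumptions chosen for the example.

```python
import math


def year_reaching(target_qubits: float, start_year: int,
                  start_qubits: float, factor_per_decade: float) -> float:
    """Year at which a constant-factor-per-decade curve reaches target_qubits."""
    if target_qubits <= start_qubits:
        return float(start_year)
    decades = math.log(target_qubits / start_qubits) / math.log(factor_per_decade)
    return start_year + 10.0 * decades


# Assumed starting point: ~50 physical qubits in 2017 (illustrative only).
for factor in (10, 20, 100):
    for target in (1_000_000, 20_000_000):
        year = year_reaching(target, 2017, 50, factor)
        print(f"{factor:>3}x per decade -> {target:>10,} physical qubits around {year:.0f}")
```

With these inputs, a 10×-per-decade curve does not reach one million physical qubits until around 2060, while a 100×-per-decade curve gets there in the late 2030s. The same arithmetic with a different exponent produces forecasts decades apart, which is why the default roadmap matters so much.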

Treatment of Quantum Error Correction and Overhead

Quantum Error Correction (QEC) is central to QTT’s resource estimates – and it’s one area where assumptions dramatically impact timelines. The QTT explicitly acknowledges that running Shor’s algorithm will require error-corrected qubits and that QEC introduces significant overhead in physical qubit count and runtime. In its modeling, QTT uses a particular QEC scheme (likely the surface code) with specific parameters (physical gate error rates, code distance, etc.) to translate logical qubit requirements into physical qubit demands and runtime slowdown. If QTT’s default error rate is, say, ~$$10^{-3}$$ per gate (typical of today’s superconducting qubits), achieving a logical error rate low enough for a multi-hour Shor’s algorithm might require hundreds or thousands of physical qubits per logical qubit. This overhead can be enormous – Gidney & Ekerå’s oft-cited estimate of ~6,000 logical qubits for RSA-2048 turned into ~20 million physical qubits when assuming surface-code error correction at a physical error rate of ~$$10^{-3}$$. QTT appears to use similarly pessimistic QEC overhead factors. In other words, its model likely assumes no revolutionary improvements in error-correcting codes beyond the standard surface code, and no drastic reduction in physical error rates in the near term. The consequence is that the “effective” qubit count grows much more slowly (since achieving even one logical qubit might need thousands of physical qubits until error rates improve).

Importantly, QTT may ignore or underweight recent QEC advances. For example, in late 2024 Oxford researchers showed single-qubit gate fidelities of 99.999985% (only 1 error in 6.7 million operations). Such a low error rate, if sustained and matched in two-qubit gates, would shrink the QEC overhead dramatically, potentially cutting the number of physical qubits per logical qubit by an order of magnitude or more. Likewise, novel QEC codes and decoding techniques could reduce overhead. If QTT’s model hasn’t incorporated these developments, it could be using an outdated error-per-gate and overhead assumption (e.g. assuming ~$$10^{-3}$$ with the surface code, instead of ~$$10^{-5}$$ or better, or more efficient codes). This would push its projected CRQC dates later. In summary, QTT errs on the side of high physical-to-logical qubit ratios – a prudent choice a few years ago, but possibly overly pessimistic given recent progress. The project’s documentation itself stresses that QEC is crucial but costly, which aligns with the idea that they built in a substantial overhead factor. If those overhead factors don’t account for, say, next-generation QEC or hardware with intrinsically lower error (ion traps, topological qubits, etc.), the timeline will indeed stretch out longer than it might in reality.
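The sensitivity of QEC overhead to the physical error rate can be illustrated with a common textbook heuristic for the surface code: logical error per round ≈ A·(p/p_th)^((d+1)/2), with roughly 2d² physical qubits per logical qubit at code distance d. The sketch below is an assumption-laden illustration, not QTT’s model: the prefactor A = 0.03, the threshold p_th = 10⁻², the target logical error of 10⁻¹², and the function names are all chosen for the example.

```python
def surface_code_distance(p_phys: float, target_logical_error: float,
                          p_threshold: float = 1e-2,
                          prefactor: float = 0.03) -> int:
    """Smallest odd distance d whose heuristic logical error meets the target.

    Uses the rule of thumb p_L ~ prefactor * (p_phys / p_threshold) ** ((d + 1) / 2).
    The constants are assumed, order-of-magnitude values.
    """
    if p_phys >= p_threshold:
        raise ValueError("physical error rate must be below the threshold")
    d = 3
    while prefactor * (p_phys / p_threshold) ** ((d + 1) / 2) > target_logical_error:
        d += 2  # surface-code distances are conventionally odd
    return d


def physical_per_logical(distance: int) -> int:
    """Rough count of data plus measurement qubits for one surface-code patch."""
    return 2 * distance * distance


for p in (1e-3, 1e-4, 1e-5):
    d = surface_code_distance(p, target_logical_error=1e-12)
    print(f"p = {p:.0e}: distance {d}, ~{physical_per_logical(d)} physical per logical")
```

Under these assumed constants the sketch gives distances of 21, 11, and 7, i.e. roughly 882, 242, and 98 physical qubits per logical qubit: about an order of magnitude between a 10⁻³ and a 10⁻⁵ error rate. It counts only the code patches themselves; published totals such as the ~20 million figure also budget for overheads this sketch leaves out.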

Inclusion (and Omission) of Algorithmic Improvements

On the algorithm side, QTT is fairly up-to-date to a point. It includes major known improvements to quantum factoring and discrete log algorithms up through 2024. For instance, it implements Chevignard et al. (2024), which is a recent circuit optimization that reduced the qubit footprint for RSA factoring by roughly 10× (using an approximate number arithmetic approach). It also includes the full Gidney & Ekerå (2019) algorithm, which was previously the gold-standard optimized Shor’s method. By toggling between Baseline Shor, Gidney-Ekerå, and Chevignard in the QTT, one can see the effect of algorithmic advances on required resources. Thus, QTT does account for improvements in Shor’s algorithm that have already been published. This is important because ignoring these would yield far longer timelines (e.g. a 2016-era estimate might have said RSA-2048 needs billions of qubits, implying no threat this century!). QTT instead recognizes that RSA-2048 in theory only needs a few thousand logical qubits (thanks to algorithmic optimizations) – the challenge is acquiring those under realistic error rates.

However, QTT cannot foresee future algorithmic breakthroughs beyond the current literature, and this is another reason it may project later dates than more bullish forecasts. The project appears to treat Chevignard’s 2024 algorithm as the latest word. But quantum algorithms often improve in unpredictable leaps rather than steady increments. For example, in 2025 a Google Quantum AI researcher (Craig Gidney) claimed an additional ~20× reduction in the physical qubits needed to run Shor’s algorithm through clever circuit optimizations, bringing the requirement for RSA-2048 down to “<1 million noisy qubits” with a runtime of under a week. If QTT was not updated with that specific result (or similar advances from late 2024/early 2025), its resource estimates might be too high. In short, QTT assumes the current state-of-the-art algorithms (circa 2024) and no dramatic new math. By contrast, some recent analyses posit that continued algorithmic optimizations, even minor ones, could shave another factor of 2×–5× off the requirements by 2030. QTT’s static set of algorithms might thus omit potential future improvements (such as better quantum RAM techniques, alternative factorization algorithms, etc.), which more aggressive forecasts may be banking on.

That said, even with known algorithms, QTT’s definition of “broken” might be stricter. The project tracks the time-to-attack for each year. It might only declare RSA-2048 truly “insecure” when a quantum computer can break it in, say, hours instead of weeks. This nuance can make QTT’s “break date” later than others. For example, Gidney’s 2025 estimate suggests a machine with ~$$10^6$$ qubits could factor RSA-2048 in ~7 days. If one expects million-qubit machines by 2035, that implies Q-Day ~2035 (with a one-week runtime). But QTT’s timeline might wait until the runtime is down to ~hours or minutes, which might not happen until that machine has more qubits or faster gates a decade or two later. Quantum attacks will initially take tens or hundreds of hours even after the first CRQC appears, meaning early on you could mitigate risk by frequently rotating keys. QTT explicitly highlights this “time-of-attack” aspect. Therefore, its forecast of 2051 for RSA-2048 likely corresponds to a very low attack time (perhaps <24 hours), whereas other forecasts might label RSA-2048 “broken” as soon as it’s possible at all, even if it takes days or weeks. This conservative criterion delays QTT’s predicted break year relative to more optimistic takes.
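The effect of the “broken” criterion can be shown with a toy model. Assume, purely for illustration, that the first CRQC appears in 2035 with a one-week (168-hour) attack time, as in the example above, and that attack time then halves every two years. The `break_year` function and the two-year halving period are hypothetical assumptions, not values taken from QTT.

```python
import math


def break_year(first_year: int, first_runtime_hours: float,
               threshold_hours: float, halving_years: float) -> int:
    """First year in which the attack runtime is at or below the threshold.

    Assumes runtime halves every `halving_years` after the first feasible attack.
    """
    if first_runtime_hours <= threshold_hours:
        return first_year
    halvings_needed = math.log2(first_runtime_hours / threshold_hours)
    return first_year + math.ceil(halvings_needed * halving_years)


# Same hypothetical machine, three different definitions of "broken".
for label, threshold in (("one week", 168.0), ("one day", 24.0), ("one hour", 1.0)):
    year = break_year(2035, 168.0, threshold, halving_years=2.0)
    print(f"'broken' means attack in under {label}: {year}")
```

In this toy model the same hardware trajectory yields 2035, 2041, or 2050 depending only on whether “broken” means a week, a day, or an hour, which is the kind of gap that can separate QTT’s demo date from forecasts that count the first feasible attack.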

Conservative Inputs vs. Industry Roadmaps – Why QTT Projects a Later Q-Day

In summary, QTT’s projection of ~2051 for RSA-2048 (significantly later than many industry experts anticipate) can be traced to a combination of cautious modeling assumptions and possibly outdated inputs:

- Hardware Growth Rate: QTT likely assumes a steady but modest growth in quantum hardware capability – perhaps extrapolating the last decade of progress forward. This might equate to qubit counts growing on the order of ~10× per decade and incremental error reduction. It does not seem to incorporate the more ambitious roadmaps that foresee rapid scaling by the late 2020s. For instance, IBM has publicly announced a plan for a 100,000-qubit quantum “supercomputer” by 2033, and companies like IonQ now claim they can reach ~2 million physical qubits (~40,000–80,000 logical qubits) by 2030. Such figures, if even partially realized, would enable cracking RSA-2048 far earlier than 2050. QTT’s default roadmap appears to neglect these aggressive commitments – perhaps deeming them too speculative. By using more conservative numbers (e.g. assuming only thousands of qubits in the 2030s instead of millions), QTT naturally pushes the CRQC date out. In effect, QTT’s long timelines may stem from “overly pessimistic” hardware assumptions that lag behind the latest quantum industry targets.

- QEC Overhead and Physical Qubit Needs: The project likely uses a high overhead factor for error correction, meaning it might assume that each logical qubit demands thousands of physical qubits at practical error rates. If so, even a device with 1 million physical qubits (which some predict by ~2030) would yield on the order of only ~hundreds of logical qubits – not yet enough to run Shor’s algorithm on 2048-bit RSA (which might need a few thousand logical qubits plus ancillary qubits). QTT’s 2051 date suggests they expect it will take until mid-century to have, say, >4,000 logical qubits available simultaneously with long enough coherence to perform the factorization. This can be due to using dated error-rate assumptions (e.g. $$10^{-3}$$) and not fully accounting for breakthroughs like the Oxford 2024 fidelity record or better codes. In contrast, some forecasts assume error correction overheads will shrink (via error rates ~$$10^{-4}$$–$$10^{-5}$$ or new codes), meaning fewer physical qubits per logical qubit. That optimism shortens the timeline. QTT’s more pessimistic QEC parameters directly contribute to later attack dates.

- Lack of Recent Breakthrough Integration: QTT may not yet reflect the flurry of advances from late 2023 to today that accelerated the quantum timeline. As my recent analysis noted, “researchers have slashed (physical) qubit requirements for factoring RSA-2048 from millions to under one million, demonstrated gate fidelities beyond the threshold for effective QEC, and laid hardware roadmaps for large-scale fault-tolerant quantum computers by the end of this decade”, all suggesting a much earlier RSA threat around 2030. These three areas – algorithmic efficiency, error correction performance, and hardware scale – have all seen rapid improvements. If QTT omits or underestimates any of these (for example, by using the pre-2025 status quo), its predictions would indeed be on the late side. Other industry forecasts incorporate more aggressive assumptions: e.g. expecting continued algorithmic gains (perhaps another 2–10× speedup on top of Chevignard), assuming error correction will use more efficient codes or even different qubit modalities (like topological qubits from Microsoft’s scheme, which promise scaling without increasing error rates), and trusting that big players will hit their roadmap milestones for qubit counts. QTT’s model appears to omit those optimistic assumptions by design – it sticks closer to proven trends and currently demonstrated capabilities, yielding a later “break” date.

- Risk Posture (Conservative Criterion): Finally, QTT might be intentionally conservative because its purpose is to inform risk for critical infrastructure (indeed it was funded for energy sector threat analysis). It may prefer to under-promise and over-deliver – i.e. not declare an earlier Q-day unless truly confident. Meanwhile, many forecasts by quantum computing firms or some cryptographers might err on the side of urgency (to motivate action, they highlight the soonest plausible date). QTT’s forecast being “significantly later” than others doesn’t necessarily mean it’s wrong – it reflects a different weighting of assumptions. It extrapolates hardware growth observed so far, assumes no miracle jumps, and uses high confidence (worst-case) parameters for error correction. The downside is that it could indeed be overly conservative if the pace of innovation accelerates in reality.
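The arithmetic behind the QEC overhead point in the list above can be written out explicitly. As a rough sketch, assuming a uniform overhead ratio $$r$$ of physical qubits per logical qubit (the symbol $$r$$ and the sample ratios are illustrative, not QTT parameters), the number of logical qubits a machine yields is:

$$
n_{\text{logical}} \approx \frac{N_{\text{physical}}}{r}, \qquad \frac{10^{6}}{5000} = 200, \qquad \frac{10^{6}}{2000} = 500, \qquad \frac{10^{6}}{1000} = 1000
$$

Read the other way, supplying 4,000 logical qubits at an assumed ratio of $$r = 2000$$ would take $$4000 \times 2000 = 8 \times 10^{6}$$ physical qubits, which is why a million-qubit device does not by itself imply a broken RSA-2048 under high-overhead assumptions.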

Taken together, QTT’s default scenario appears not to fully incorporate these cutting-edge developments. That’s understandable – the tool was likely developed over many months, and some breakthroughs (like Gidney’s May 2025 result) happened just recently. The great thing is that QTT is configurable: one can plug in updated numbers for qubit requirements or a faster hardware growth rate and see the timeline shift earlier. I suspect that if we reran QTT with, say, “<1 million qubits needed for RSA-2048” and “1,000 logical qubits by ~2032” as inputs, we’d get a Q-Day estimate in the early 2030s instead of 2051. In fact, that aligns with what many experts are already thinking.

The Early-2030s Consensus (and Why 2051 Feels Too Late)

It’s important to put QTT’s conservative demo in context: most recent roadmaps and expert assessments converge on the early 2030s as the likely time frame for cryptographically relevant quantum computers. My own analysis – which I’ve updated continually as new data emerges – currently predicts Q-Day around 2030. I’m not alone in that view:

Government and Industry Roadmaps

The timelines coming out of government agencies and industry coalitions reflect a sense of urgency before 2035. The European Commission’s coordinated roadmap calls for all high-risk systems (critical infrastructure, finance, government, etc.) in the EU to transition to post-quantum encryption by the end of 2030. Similarly, Canada has set targets around 2030-2031 for securing critical systems. The U.S. initially talked about 2035 as a target for full PQC migration, but many experts (myself included) argued 2035 was too late given the threat trajectory. Indeed, NIST’s post-quantum cryptography program advises that vulnerable crypto be deprecated by 2030 and eliminated by 2035. These dates are not picked arbitrarily – they’re based on risk assessments that a quantum attacker could emerge in the early 2030s. Even the UK’s National Cyber Security Centre (NCSC) has recommended that critical infrastructure plan for quantum threats on a time frame “between now and 2031”, effectively implying that by 2031 one should assume quantum breaches are possible. All of these roadmaps point to the next 5–10 years as the crucial window to get quantum-safe.

Expert Surveys

Various annual surveys of quantum experts also show consensus gravitating to the 2030s. Very few credible experts now say “beyond 2040.” In short, the risk is no longer on some distant horizon; it’s within the planning horizon of current IT projects. The average opinion is that we’ll have an RSA-breaker sometime in the early-to-mid 2030s, and we should act accordingly.

My Prediction (~2030) and Its Rationale

As mentioned, I have publicly forecasted 2030 as the year a quantum computer will break RSA-2048. That is even a bit more aggressive than some, but I moved it up from 2032 after seeing the trio of breakthroughs in 2025 (the improved algorithm, the error-correction milestone, and IBM’s hardware roadmap). Those three together essentially check the boxes of what’s needed: a feasible algorithmic recipe, a path to build the machine, and a reduction in errors. If we simply execute on what has already been demonstrated (even if no new discoveries were made after 2025), a 2032 timeline for RSA’s fall is entirely plausible. And with continued incremental progress, 2030 starts to look more likely than not. This is why seeing a 2051 estimate in the QTT demo is jarring – it’s as if we’re looking at an analysis from 5+ years ago, before the recent acceleration in the field.

Given this broader consensus, I worry that QTT’s default demo (if taken at face value) could send the wrong signal. 2051 is an outlier compared to what most of the quantum community now believes. We should not treat 2051 as a “deadline” by which to finish migration – we should treat it as a worst-case (or best-case depending on your point of view) late scenario. The prudent approach (and the one regulators are increasingly adopting) is to assume Q-Day might hit in the early 2030s and to start getting ready now. The risk of waiting is huge: even if the breakthrough machine isn’t here until 2035, data that is sensitive for years or decades (think health records, state secrets, intellectual property) is at risk today from adversaries who can harvest encrypted data now and decrypt it as soon as the quantum capability comes online. So, any complacency over a “2051” notion could be very dangerous.

A Note to the QTT Authors: Mind the Power of First Impressions

I have one friendly, but sharper, critique for the QTT team. Publishing a screenshot that shouts “RSA‑2048 broken in 2051” in the project’s GitHub read‑me and LinkedIn launch posts was a risky communications choice. The target audience for this tool – CISOs, security program owners, policy makers – rarely has the time (or technical appetite) to tweak command‑line parameters. When they see a polished chart with the University of Edinburgh’s name on it, they will instinctively treat the date as an academic verdict, not a configurable placeholder. That single picture is already circulating in board decks as evidence that “experts at the University of Edinburgh say we have 25 years.” The authors have done nothing technically wrong, yet they underestimated how sticky first impressions are in executive circles. A safer launch would have used a neutral dummy timeline (e.g., “XYZ‑Demo Only”) or splashed a bold disclaimer: “Default assumptions = pessimistic; configure before quoting.” In the era of screenshot culture, the demo you choose becomes the message. I hope future releases swap in a more representative scenario or at least flag the demo as illustrative, not prescriptive – because, like it or not, that one JPEG is now shaping enterprise risk posture far beyond GitHub.

Conclusion: A Valuable Tool – If Used Wisely

The Quantum Threat Tracker is a welcome addition to the cybersecurity toolkit. The fact that Cambridge Consultants and Edinburgh’s Quantum Software Lab made it open-source means anyone can play with scenarios and stress-test their crypto policies. My critique is not of the tool itself or the hard work of its creators – it’s simply a caution about the default scenario that’s been showcased. I strongly encourage readers (and especially decision-makers who see the QTT charts) to understand the assumptions behind any Q-Day prediction. If you try QTT, go ahead and adjust the parameters: plug in a faster development curve or the latest algorithmic advances and see how the projected date jumps earlier. This will give you a range of possible futures, not just the rosiest one where we have until 2050.

Overall, I remain positive about QTT – it has the potential to educate and inform on quantum risks in a quantitative way, which is much needed. Hopefully the tool will be frequently updated as research evolves (perhaps future versions will include the 2025 Gidney result and newer hardware data by default). In the meantime, it’s on us as practitioners to inject the latest knowledge when interpreting Q-Day forecasts. My stance aligns with what the QTT authors themselves likely intended: don’t use any single prediction in isolation. Instead, use tools like QTT to explore why a prediction is what it is, and what happens if you change the underlying assumptions.

(I admit my look at the QTT was quick, so I welcome any clarifications or corrections from the QTT team or other experts. The goal here is to spark a constructive discussion. If the developers of QTT have insights into the default assumptions or plan to update them, I’m all ears – this kind of dialogue will help everyone reach a better understanding of when we truly need to worry about RSA-2048’s demise.)