Microsoft Unveils New 4D Quantum Error-Correcting Codes

Introduction

Microsoft Quantum’s researchers have introduced a new family of four-dimensional (4D) geometric quantum error-correcting codes that promise to dramatically outperform today’s standard 2D surface codes. Published in a new preprint “A Topologically Fault-Tolerant Quantum Computer with Four Dimensional Geometric Codes” (arXiv:2506.15130v1) and an accompanying Microsoft blog post, these novel 4D LDPC codes (low-density parity-check codes) achieve 1,000-fold lower error rates and require far fewer physical qubits per logical qubit than the best existing approaches.

In other words, by leveraging higher-dimensional structures, Microsoft claims it can reach the ultra-low error levels needed for reliable quantum computing with a small fraction of the qubit overhead previously thought necessary. The new codes can correct errors in a single shot (one round of detection and recovery), potentially speeding up quantum computations and simplifying hardware design. If validated, this could be a significant milestone on the road to scalable fault-tolerant quantum computing (FTQC).

How 4D Codes Outperform Surface Codes

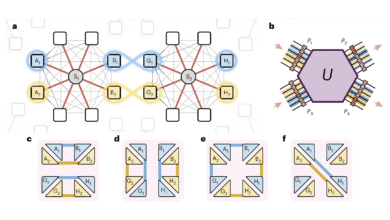

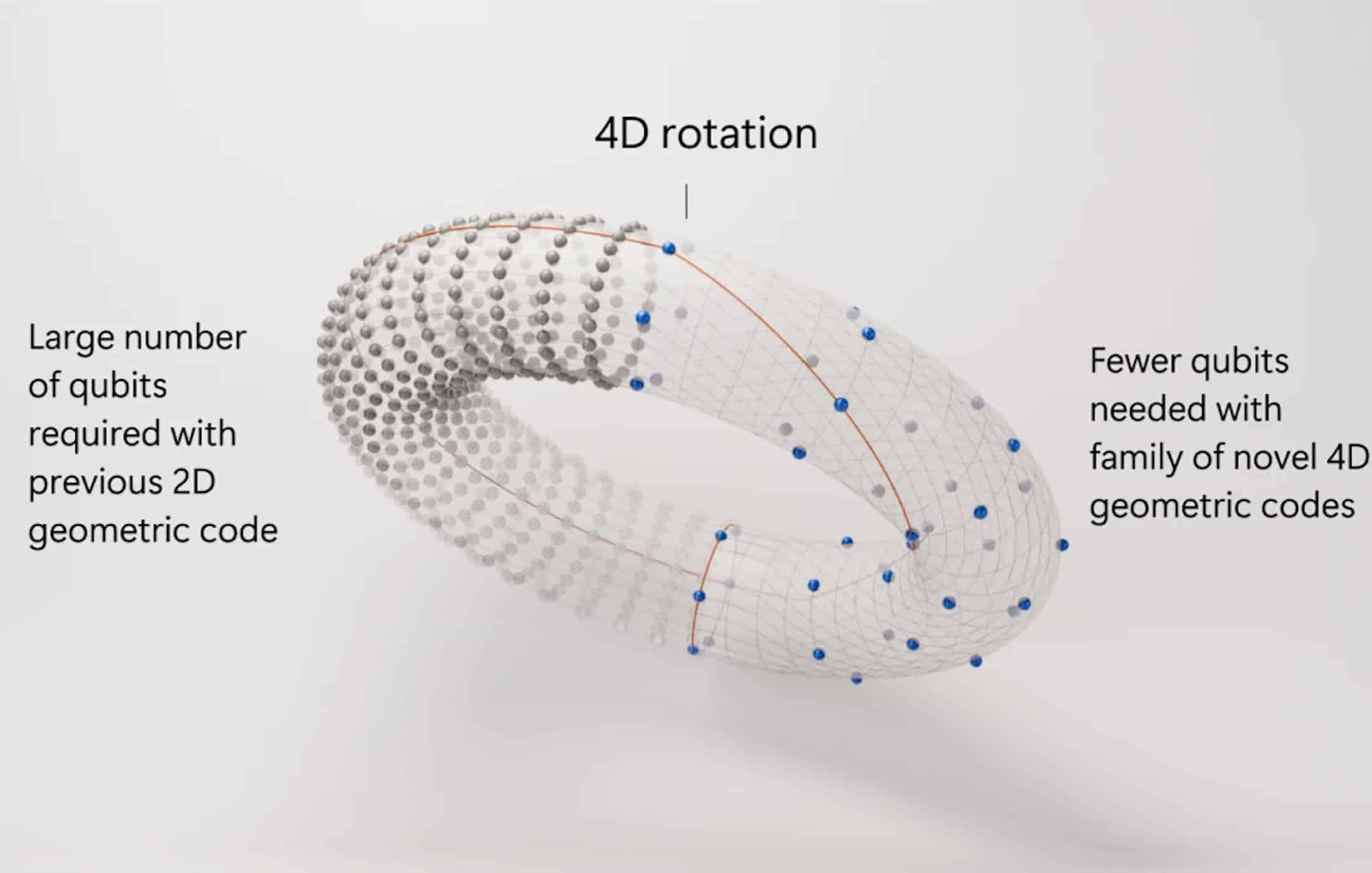

Today’s leading quantum error-correction scheme, the surface code, arranges qubits in a 2D grid with local checks, typically needing hundreds or thousands of physical qubits to encode a single “logical” qubit at useful fidelity. By contrast, Microsoft’s 4D geometric codes use a lattice in four dimensions (imagine a cube interconnected through an extra spatial dimension – essentially a 4D hypercube or tesseract structure) to pack information more densely and catch errors more efficiently. The core idea is that in a higher-dimensional topology, there are additional pathways to encode and protect quantum information. This extra geometric connectivity helps suppress correlated errors (e.g. multiple qubit errors that might sneak through a purely 2D code) and enables more robust error detection in one cycle. In simpler terms: the code “wraps” qubits in a 4D structure (like a torus extended with a twist in a fourth dimension) so that errors are less likely to spread unchecked, and any error that does occur can be identified with minimal redundancy.

According to Microsoft, “rotating the codes in four dimensions” yields roughly a 5× reduction in the number of physical qubits required per logical qubit. For example, one of the team’s 4D codes (dubbed the “Hadamard” code) encodes 6 logical qubits into just 96 physical qubits while still being able to correct up to 3 errors (code distance 8). That’s an encoding rate far higher than the 2D surface code, which typically might need ~1000 physical qubits for 1 logical qubit of similar error tolerance. Simulations indicate these 4D codes have exceptionally high error thresholds: at a physical error rate of 0.1% (10⁻³), the Hadamard code achieved a logical error rate of ~10⁻⁶ per cycle – a 1,000× error suppression that vastly outperforms standard surface codes under similar conditions. In fact, the pseudo-threshold (the point at which adding error correction starts paying off) can approach ~1% error rate in some configurations. Such a high threshold means that even relatively noisy qubits could be stabilized by these codes, which is crucial for making fault-tolerant quantum computing feasible without exotic hardware improvements.
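To make the numbers above concrete, here is a minimal Python sketch of the arithmetic behind the 96-qubit, 6-logical-qubit, distance-8 Hadamard code. The `CodeParams` helper is hypothetical (it is not from Microsoft's paper or any library), and the surface-code baseline assumes a rotated surface code with d × d data qubits per logical qubit, ignoring ancilla qubits. This is a same-distance comparison, so it will not match the ~1000-qubit figure quoted above, which presumably reflects a much larger distance chosen to hit a target logical error rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodeParams:
    """Hypothetical helper holding the [[n, k, d]] parameters of a code."""
    n: int  # physical (data) qubits
    k: int  # logical qubits
    d: int  # code distance

    @property
    def rate(self) -> float:
        """Encoding rate k/n: logical qubits per physical qubit."""
        return self.k / self.n

    @property
    def correctable_errors(self) -> int:
        """A distance-d code corrects up to floor((d - 1) / 2) errors."""
        return (self.d - 1) // 2

    @property
    def physical_per_logical(self) -> float:
        return self.n / self.k


# Parameters quoted in the article for the "Hadamard" code.
hadamard = CodeParams(n=96, k=6, d=8)

# Assumption: rotated surface code at the same distance, data qubits only.
surface = CodeParams(n=8 * 8, k=1, d=8)

print(hadamard.rate)                  # 0.0625
print(hadamard.correctable_errors)    # 3
print(hadamard.physical_per_logical)  # 16.0
print(surface.physical_per_logical / hadamard.physical_per_logical)  # 4.0

# Error suppression quoted in the article: physical 1e-3 -> logical ~1e-6.
print(round(1e-3 / 1e-6))
```

Under these assumptions the saving at equal distance comes out at 4×, in the same ballpark as the roughly 5× Microsoft quotes; the exact factor depends on which code in the family is used and on how ancilla qubits are counted.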

Another key advantage is single-shot error correction. Traditional surface code error correction requires multiple rounds of measurements and feedback, which slow computations and increase overhead. Microsoft’s 4D codes instead allow errors to be corrected in a single round of syndrome measurement. This is possible because the 4D structure provides enough information in one go to pinpoint errors, rather than needing to accumulate syndrome data over time. The result is faster error correction with lower latency and simpler control logic. Universal quantum operations are also supported: the Microsoft team demonstrated a complete set of logical gates within the 4D code framework. In other words, these codes aren’t limited to just storing qubits – they can actually execute quantum algorithms on the encoded logical qubits, a necessary feature for a full-fledged quantum computer.
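For readers unfamiliar with the term, a “syndrome” is just the pattern of parity checks that an error trips. The toy below uses the classical [7,4] Hamming code, not Microsoft's 4D code, to show how one round of parity checks can locate a single flipped bit. It is only an analogy: it assumes the checks themselves are read out perfectly, whereas the point of single-shot quantum codes is that one round still suffices when the syndrome measurements are noisy too. The function names are my own.

```python
# Parity-check matrix of the classical [7,4] Hamming code.
# Column j (1-indexed) is the binary representation of j, so a single
# bit-flip at position j produces a syndrome that spells out j.
H = [
    [0, 0, 0, 1, 1, 1, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [1, 0, 1, 0, 1, 0, 1],
]


def syndrome(error):
    """Return H * error (mod 2): one parity bit per check."""
    return [sum(h * e for h, e in zip(row, error)) % 2 for row in H]


def locate_single_error(s):
    """Read the syndrome as a binary number: the flipped position (0 = none)."""
    return s[0] * 4 + s[1] * 2 + s[2]


error = [0, 0, 0, 0, 1, 0, 0]  # flip bit 5 (1-indexed)
s = syndrome(error)
print(s, locate_single_error(s))  # [1, 0, 1] 5
```

Each row of `H` touches only a few bits, which is the “low-density parity-check” idea in miniature; the quantum versions apply the same principle with stabilizer measurements instead of classical parity bits.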

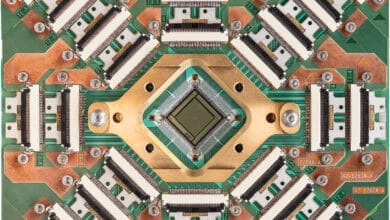

Crucially, this is not just a theoretical exercise: Microsoft designed the 4D codes with real hardware in mind. The codes are especially suited for machines that don’t have the connectivity limitations of a chip-based 2D grid. Platforms like trapped-ion chains, neutral atom arrays, or photonic qubit networks (where any qubit can, in principle, interact with any other via reconfigurable links) can implement the long-range entanglement these LDPC codes require. Unlike the strictly local neighbor-to-neighbor operations of a surface code, a 4D code can thrive on hardware that allows entangling distant qubits easily. Microsoft’s blog post notes the new codes are already available in their Azure Quantum platform for simulation and use, and they’ve been tested in small-scale experiments. Notably, in a separate preprint (“Repeated ancilla reuse for logical computation on a neutral atom quantum computer”) the team even demonstrated the ability to replace lost atoms on the fly in a neutral-atom quantum computer – injecting fresh atoms into a trap array mid-computation without disturbing the encoded logical qubits. This addresses a practical challenge (qubit loss) and shows the single-shot 4D code concept working in a prototype setting. All told, the 4D geometric codes appear to maintain the strong topological protection of surface/toric codes while massively improving encoding efficiency and error-handling speed. It’s a compelling recipe that could cut the cost (in qubits and time) of achieving reliable quantum computing.

Implications: A Boost for Quantum Timelines and Q-Day Predictions

The introduction of high-dimensional quantum codes comes at a very interesting moment. In the past few weeks alone, the quantum field has seen a series of breakthroughs that collectively accelerate the timeline for a cryptographically relevant quantum computer (CRQC) – i.e. a machine capable of breaking today’s public-key encryption. In fact, I just revisited my own “Q-Day” forecast (the projected date when quantum computers can crack RSA-2048) and moved it up from 2032 to around 2030 to reflect three major developments: an algorithmic improvement in factoring published in May by Craig Gidney; a physical qubit fidelity milestone published by a team from Oxford; and IBM’s recently announced roadmap to a fault-tolerant machine.

Now Microsoft’s 4D code announcement adds yet more fuel to this optimistic (or pessimistic, if you are in cyber) outlook. It directly tackles the error-correction overhead in a new and different way. If we can cut the qubits-per-logical-qubit by 5× (as Microsoft suggests) and simultaneously reduce the required error-correction cycles thanks to single-shot decoding, the entire quantum computer needed for RSA factoring shrinks in complexity. In other words, Microsoft’s 4D codes seem to slot right into the blueprint for a 2030-era CRQC: they attack the problem from the error correction side, complementing the advances from others.
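To see why the two savings compound, here is a rough back-of-the-envelope sketch in Python. Everything in it is an illustrative assumption rather than a result from Microsoft's paper: the baseline of 1000 physical qubits per logical qubit is a placeholder, the surface code is assumed to need about d rounds of syndrome measurement per logical cycle (a common rule of thumb for a distance-d code), and the single-shot code is assumed to need exactly one.

```python
def spacetime_volume(qubits_per_logical: float, rounds_per_cycle: int) -> float:
    """Qubit-rounds spent per logical qubit per logical cycle."""
    return qubits_per_logical * rounds_per_cycle


d = 8                      # code distance, matching the Hadamard example
baseline_qubits = 1000.0   # placeholder physical qubits per logical qubit
qubit_saving = 5.0         # the ~5x qubit reduction Microsoft quotes

# Assumption: the surface code repeats syndrome measurement ~d times per cycle.
surface = spacetime_volume(baseline_qubits, rounds_per_cycle=d)

# Assumption: the single-shot code needs one round and 5x fewer qubits.
single_shot = spacetime_volume(baseline_qubits / qubit_saving, rounds_per_cycle=1)

reduction = surface / single_shot
print(reduction)  # 40.0
```

The point is not the specific figure but the shape of the argument: a qubit saving and a time saving multiply rather than add, so even modest gains on each axis shrink the overall machine substantially.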

From my perspective, this development further solidifies the case for a 2030 Q-Day. In that recent analysis, I noted that even if no new scientific breakthroughs occurred after mid-2025, just executing on the discoveries already in hand (the three above) would likely be enough to meet my previous 2032 timeline. But of course breakthroughs will continue – and here we have one in error correction arriving just as I clicked “Publish” on my post.

How Big of a Breakthrough Is 4D Coding?

It’s worth putting Microsoft’s 4D geometric codes in context. Over the past few years, there have been numerous notable improvements in quantum error correction and fault tolerance techniques, each chipping away at the daunting overhead problem. We had several exciting improvements over the last two years (e.g. “yoked” surface codes, magic state factories, etc.). Each of these advances is important, but generally they were incremental. By contrast, Microsoft’s 4D codes seem to represent a more fundamental leap – a new family of codes with inherently different scaling properties. We’re not just squeezing a few percent better efficiency; we’re potentially looking at an order-of-magnitude reduction in qubit overhead for the same target error rate. The last time we saw something of comparable impact was perhaps IBM’s introduction of quantum LDPC codes in 2024, which similarly promised ~10× better qubit efficiency than surface codes. Microsoft’s 4D codes are very much in the same spirit, like a high-dimensional realization of quantum LDPC, but with the added twist of geometric rotation in four dimensions to optimize the lattice. In technical terms, both efforts show that topological protection need not be confined to 2D surface/toric codes; by exploiting additional connectivity, we can get much more bang for our qubit buck.

So how significant is this 4D code breakthrough relative to past milestones? Potentially quite significant, though we should remain measured. On paper, achieving ~1% threshold and single-shot operation with a fivefold qubit savings is a huge deal – it attacks the scalability problem at its core. It means that if you have, say, a thousand physical qubits of decent quality, you might encode on the order of 10–20 logical qubits with these codes (as opposed to 1 or 2 logical qubits with surface code). That could accelerate experimental progress, allowing small fault-tolerant logic circuits to be tested with far fewer resources. It also suggests that a future million-qubit quantum computer could have a logical qubit count in the many hundreds or low thousands, which is exactly the regime needed for classically intractable problems like breaking RSA, simulating complex chemistry, etc. By comparison, without such codes, a million physical qubits might only net tens of logical qubits if using older methods – not enough for those applications.

However, we must recognize that these gains, while real, will take time to realize in hardware. The Microsoft paper is at the stage of simulations and theoretical analysis (though with some early experimental validation). Translating 4D codes into practical use will require qubit technologies that can perform many-qubit entangling operations across the code’s lattice without introducing new errors. This is non-trivial; even if neutral atoms and ions offer the connectivity, they still have finite gate fidelities and crosstalk that need to be tamed when doing complex LDPC parity checks. The decoding algorithms for LDPC codes can also be demanding, though Microsoft notes that their simulations tried both single-shot decoders and multi-round decoders with success. It’s encouraging that parallel decoders (like belief-propagation or other efficient classical decoding methods) can work with 4D codes – this has long been a selling point of LDPC codes in classical error correction, and it seems to carry over to quantum LDPC as well. By contrast, surface codes often rely on computationally heavy decoders (like minimum-weight perfect matching) that can become slow as the system grows. So there’s a plausible argument that 4D codes could be not only a better code, but also easier to decode at scale, enabling real-time error correction as the system grows.

The big question is whether 4D geometric codes will replace 2D surface codes as the default choice for future quantum architectures. It’s early to say, but the momentum is certainly building behind LDPC and high-dimensional codes. IBM’s shift away from surface codes in its roadmap was a strong signal. Now Microsoft – which for years had focused on more exotic approaches like topological Majorana qubits – is also heavily investing in quantum error correction for “conventional” qubits, and they’ve chosen the high-dimensional route as well. In the near term, some platforms with limited connectivity (e.g. fixed superconducting qubit lattices) might stick with surface codes, because those codes map naturally onto a 2D grid and are relatively well understood experimentally. Surface codes have the advantage of simplicity and years of research; we know how to implement a distance-3 or distance-5 surface code on small patches of qubits. By contrast, implementing a 4D code even of modest distance might require a more complex entanglement layout that hasn’t been tried yet. So in the next 1–2 years, surface codes will likely continue as the testbed for small-scale demonstrations of logical qubits, while 4D codes remain a cutting-edge concept to be proven out.

Looking a bit further ahead, though, if the promised advantages hold up, 4D codes (and quantum LDPC codes in general) could very well become the standard for large-scale machines. The appeal of needing 5× fewer qubits (or equivalently, packing 5× more logical qubits into the same hardware) is just too strong to ignore in the long run. Moreover, certain emerging hardware might only be practical with such codes. Microsoft’s approach is notable for targeting those platforms (ion traps, neutral atoms, photonics) which naturally have all-to-all or long-range gate capability. In those systems, the historical reason to use surface codes (which need only local gates) vanishes, and a code that takes advantage of connectivity to reduce overhead makes perfect sense. We might even see hybrid approaches: maybe surface codes handle local noise on each module or chip, and LDPC/4D codes knit together the modules at the higher level – a multi-layer error correction scheme. The field is quite actively exploring such possibilities.

In summary, Microsoft’s 4D geometric codes represent an exciting and innovative leap in the quest for fault-tolerant quantum computing. This announcement, delivered with both a scholarly preprint and a splashy blog post, has the feel of a milestone. For cybersecurity professionals, the takeaway is that the timeline to large-scale quantum computers is shrinking (now almost weekly!). Each technical barrier that falls – be it algorithmic complexity, physical qubit fidelity, or error-correcting efficiency – brings quantum attackers closer. Of course, in practice there is a long engineering road ahead to integrate these advances into a full system. But with giants like IBM and Microsoft openly confident about cracking the fault-tolerance challenge by the latter 2020s, the burden of proof has shifted.
