IBM Unveils “Nighthawk” and “Loon” Quantum Chips: Milestones Toward Quantum Advantage and Fault Tolerance


13 Nov 2025 – At its annual Quantum Developer Conference on November 12, 2025, IBM announced two significant advances as part of an updated quantum computing roadmap: IBM Quantum Nighthawk and IBM Quantum Loon. The two new processors mark major steps toward IBM’s goals of practical quantum advantage by 2026 and fault-tolerant quantum computing by 2029.

Nighthawk is a 120-qubit processor built to run more complex quantum circuits than IBM’s previous chips, while Loon is an experimental chip designed to test the hardware building blocks of error-corrected quantum computation.

IBM’s November 12 Roadmap Update: Two Chips, Two Big Shifts

IBM’s latest announcements reinforce its aggressive quantum computing roadmap. Nighthawk and Loon represent two complementary thrusts in IBM’s strategy: one geared toward near-term quantum advantage on real-world problems, and the other toward long-term fault tolerance through error correction. IBM’s Jay Gambetta (IBM Research Director) emphasized that progress on all fronts – software, hardware, fabrication, and error correction – is essential to deliver truly useful quantum computing. IBM positions itself as unique in tackling all of these in parallel, and the November 12 update showcased milestones in each.

Key confirmed details from the announcement include:

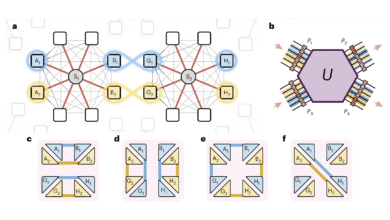

- IBM Quantum Nighthawk: a 120-qubit superconducting processor with a new square lattice topology and 218 tunable couplers connecting qubits. This increased connectivity (over 20% more couplers than the previous-generation Heron chip) lets users run circuits about 30% more complex than before while maintaining low error rates. Nighthawk is slated for cloud access to IBM Quantum users by end of 2025. Importantly, it’s designed as IBM’s workhorse for demonstrating quantum advantage (i.e. solving certain problems better than any classical computer) as early as next year.

- IBM Quantum Loon: an experimental processor (currently in fabrication) that for the first time integrates all the key hardware components needed for fault-tolerant quantum computing. Loon will validate a new architecture for high-efficiency quantum error correction, incorporating features like multi-layer routing on chip, extra-long couplers (IBM calls them “c-couplers”) that link distant qubits, and fast qubit reset circuits. In short, Loon is a testbed for IBM’s quantum Low-Density Parity Check (qLDPC) error-correcting code scheme. It’s not a general-purpose device yet, but it demonstrates the path toward a future large-scale fault-tolerant quantum machine.

IBM also projected timelines associated with these developments. The company reaffirmed its goal of “quantum advantage by end of 2026” – expecting the first verified quantum advantage results to be confirmed by the community within the next year or so – and a viable fault-tolerant quantum computer by 2029. The Nighthawk and Loon chips are key stepping stones toward those milestones, addressing different challenges on the roadmap.

IBM Quantum Nighthawk: Built for Quantum Advantage in 2026

IBM Quantum Nighthawk is IBM’s most advanced quantum processor to date, purpose-built to tackle more complex algorithms with higher fidelity. This 120-qubit chip introduces a square lattice layout in which each interior qubit is coupled to its four nearest neighbors, using 218 tunable couplers in total. By comparison, the previous-generation Heron processor had 176 couplers in a heavy-hexagonal layout, so Nighthawk’s design boosts inter-qubit connectivity by over 20%.

Thanks to the denser web of couplers, Nighthawk can run larger circuits with less communication overhead. IBM reports that quantum programs can be about 30% more complex (in terms of gate count and connectivity) on Nighthawk than on its predecessor while maintaining low error rates. In practical terms, this means developers can attempt more ambitious problems before hitting the error-rate wall. The architecture is optimized to execute circuits with up to 5,000 two-qubit gates (entangling operations), a significant step up in computational heft.
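To see why the two-qubit gate count matters, here is a deliberately crude model: if every two-qubit gate fails independently with probability ε, the chance that a circuit of G gates runs with no gate error at all is (1 - ε)^G. The per-gate error rate of 10⁻³ in the loop is a hypothetical round number, not a published Nighthawk figure, and the model ignores the error mitigation IBM relies on, so treat the output as a sense of scale only.

```python
import math

def circuit_success_estimate(two_qubit_gates: int, error_per_gate: float) -> float:
    """Probability that no two-qubit gate fails, assuming independent gate errors."""
    return (1.0 - error_per_gate) ** two_qubit_gates

def max_error_per_gate(two_qubit_gates: int, target: float) -> float:
    """Largest per-gate error rate that keeps the estimate at or above `target`."""
    return 1.0 - target ** (1.0 / two_qubit_gates)

HYPOTHETICAL_ERROR = 1e-3  # illustrative only, not a device specification

for gates in (5_000, 7_500, 10_000, 15_000):
    p_ok = circuit_success_estimate(gates, HYPOTHETICAL_ERROR)
    budget = max_error_per_gate(gates, math.exp(-1))  # error rate that keeps the estimate at 1/e
    print(f"{gates:>6} gates: success ~{p_ok:.1e}, per-gate budget for 1/e ~{budget:.1e}")
```

The inverse calculation shows roughly why each step on the roadmap (5,000 → 7,500 → 10,000 gates) demands better gates: under this model, keeping the success estimate near 1/e requires the per-gate error to shrink roughly as 1/G.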

Some key specifications and capabilities of Nighthawk include:

- Qubit count and topology: 120 superconducting qubits arranged in a square lattice. Interior qubits connect to their four nearest neighbors, for 218 couplers in total, enabling more direct interactions (fewer SWAP gates needed). This improves circuit efficiency and fidelity on complex entanglement patterns.

- Circuit complexity: Supports quantum circuits ~30% more complex than what was possible on IBM’s Heron processor, while keeping error rates low. Higher connectivity reduces the need for swapping qubits around, which in turn reduces error accumulation on deep circuits.

- Gate count milestone: Can reliably execute circuits with up to 5,000 two-qubit gates today. IBM plans to incrementally improve the Nighthawk family so that by 2026 it can handle circuits of ~7,500 two-qubit gates, and ~10,000 by 2027. By 2028, clustering multiple Nighthawk-based units with long-range couplers could allow 15,000 two-qubit gates on 1,000+ effective qubits – a scale approaching the realm needed for truly industrial applications.

- Availability: IBM expects to deliver Nighthawk access to users via its cloud platform by end of 2025. This means developers and researchers worldwide will soon be able to run experiments on this new processor.
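IBM gives two numbers for Nighthawk: 120 qubits and 218 couplers on a square lattice. Assuming the lattice is a complete rectangular grid (IBM has not published the exact layout, so this is an assumption), a short search shows that a 10 × 12 grid is the only shape that fits both numbers, and it also shows why only interior qubits have four neighbors: corner and edge qubits have two or three. The `swaps_needed` helper uses a simplified routing model (move one qubit along a shortest path until the two are adjacent) purely to illustrate why higher connectivity cuts SWAP overhead; production compilers use far more sophisticated routing.

```python
from collections import Counter, deque

def grid_edges(rows: int, cols: int) -> list[tuple[int, int]]:
    """Nearest-neighbor couplers of a rows x cols square lattice (qubit id = r * cols + c)."""
    edges = []
    for r in range(rows):
        for c in range(cols):
            q = r * cols + c
            if c + 1 < cols:
                edges.append((q, q + 1))      # coupler to the right-hand neighbor
            if r + 1 < rows:
                edges.append((q, q + cols))   # coupler to the neighbor below
    return edges

def dimensions_matching(qubits: int, couplers: int) -> list[tuple[int, int]]:
    """Every full rows x cols grid (rows <= cols) with exactly these qubit and coupler counts."""
    return [(r, qubits // r) for r in range(1, qubits + 1)
            if qubits % r == 0 and r <= qubits // r
            and len(grid_edges(r, qubits // r)) == couplers]

def degree_histogram(edges: list[tuple[int, int]]) -> dict[int, int]:
    """Map 'number of neighbors' -> 'number of qubits with that many neighbors'."""
    degree = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return dict(sorted(Counter(degree.values()).items()))

def swaps_needed(edges: list[tuple[int, int]], n: int, a: int, b: int) -> int:
    """SWAPs to bring distinct qubits a and b next to each other: BFS distance minus one."""
    adj = {q: [] for q in range(n)}
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    dist = {a: 0}
    queue = deque([a])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist[b] - 1

edges = grid_edges(10, 12)
print(dimensions_matching(120, 218))     # [(10, 12)]
print(degree_histogram(edges))           # {2: 4, 3: 36, 4: 80}
print(swaps_needed(edges, 120, 0, 119))  # 19 (opposite corners)
```

The near miss is instructive: an 8 × 15 grid also has 120 qubits but only 217 couplers, so the published counts pin down the shape only under the full-grid assumption.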

IBM explicitly tied Nighthawk to its quantum advantage timeline. The chip is “designed with an architecture to complement high-performing quantum software to deliver quantum advantage next year”. In other words, Nighthawk is the hardware platform on which IBM hopes quantum advantage experiments will materialize by the close of 2026. Rather than declaring a proprietary milestone in a paper, IBM is taking a collaborative approach: any claims of quantum advantage will be rigorously vetted by the broader community. In fact, IBM has contributed multiple candidate benchmark problems to an open Quantum Advantage Tracker it launched with partners. This tracker will allow researchers to compare quantum vs classical results on various problems in real time. IBM is inviting outside startups, academics, and enterprises to run their own code on Nighthawk (and other IBM QPUs) to test whether a quantum advantage truly holds up.

“We’re confident there’ll be many examples of quantum advantage. But let’s take it out of headlines and papers and actually make a community where you submit your code, and the community tests things” – Jay Gambetta, IBM Research Director

This philosophy, as voiced by Gambetta, underlines IBM’s strategy: to prove quantum advantage in practice, not just proclaim it. IBM is working with a cohort of startups and research groups on this, sharing Nighthawk’s capabilities and results openly so that any quantum-vs-classical claims can be verified by independent experts. If Nighthawk indeed beats classical supercomputers on certain tasks in 2026, the achievement will carry weight because it will have been validated in the open, setting a credible precedent for quantum computing’s usefulness.

It’s also worth noting that IBM isn’t only focused on raw qubit count with Nighthawk, but also on software and workflow improvements to harness those qubits effectively. Alongside the new processor, IBM announced updates to its Qiskit quantum SDK and runtime: a new execution model with finer control, a C++ API for high-performance computing integration, and dynamic circuit capabilities that improved accuracy by 24% in runs on more than 100 qubits. These upgrades mean developers can better optimize circuits for Nighthawk’s architecture and incorporate classical HPC resources for error mitigation. Taken together, the Nighthawk hardware and the software stack advances aim to provide a platform where quantum algorithms can run faster, more accurately, and at a scale that crosses the quantum advantage threshold.

IBM Quantum Loon: Stepping-Stone to Fault-Tolerant Quantum Computing

While Nighthawk targets near-term performance, IBM Quantum Loon is all about the longer-term goal: a fault-tolerant quantum computer. IBM described Loon as a proof-of-concept processor demonstrating “all the key processor components needed for fault-tolerant quantum computing”. In essence, Loon is IBM’s test vehicle for the quantum error correction technologies that will eventually allow quantum computers to scale without being crippled by errors.

The Loon chip introduces a novel quantum processor architecture with several breakthrough hardware features, including:

- Long-range “c-couplers”: Unlike the short nearest-neighbor couplers on current chips, Loon implements long-range c-couplers that can physically link distant qubits on the same chip. This is critical for quantum LDPC error-correcting codes, which require qubits to interact in complex patterns not limited to adjacent neighbors. IBM had previously demonstrated long couplers in isolation; Loon is the first processor designed to integrate them with the other fault-tolerance components.

- Multi-layer wiring: Loon’s design uses multiple high-quality routing layers in the chip fabrication to route signals and couplers above/below the qubit plane. By going vertical with wiring (akin to 3D chip architecture), IBM can connect qubits that are far apart without interference, something not possible with a single-layer 2D layout. This is analogous to layering additional metal interconnects in classical CPUs to make more complex circuits.

- Qubit reset gadgets: The processor includes built-in fast reset technology that can quickly return qubits to their ground state between computations. Rapid qubit reset is essential in error correction cycles, where qubits must be reused frequently to measure error syndromes and apply corrections.

- High connectivity and qubit count: IBM has not publicly stated the exact qubit count for Loon, but it’s expected to be on the order of a hundred qubits (reports suggest around 112 physical qubits on Loon). More importantly, each qubit can have six or more connections thanks to the new couplers, and the layout is a larger lattice designed to support logical qubits encoded across many physical qubits. The chip is larger and more complex than Nighthawk in terms of on-chip features and wiring layers.

In summary, Loon is architected to implement quantum error correction codes (specifically IBM’s quantum LDPC “bivariate bicycle” codes) directly in hardware. By combining all these features, IBM intends to demonstrate that a superconducting quantum chip can incorporate the necessary primitives for error correction: long-range entanglement, parallel syndrome measurement, fast decoding, and qubit reuse. “Loon is a device built to demonstrate a path to fault-tolerant quantum computing,” Gambetta noted. He explained that it requires many more layers of metal and couplers than a regular processor, but that it forms IBM’s default path to building fault-tolerant machines by 2029.
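To make “quantum LDPC bicycle code” concrete, the sketch below builds the check matrices of the [[144,12,12]] “gross” code from IBM researchers’ 2024 bivariate bicycle code paper, using the published polynomials A = x³ + y + y² and B = y³ + x + x² with ℓ = 12 and m = 6, where x and y are cyclic shifts on an ℓ × m torus. It is offered as a representative member of the family; the announcement does not say that Loon implements exactly this instance. The script checks three things: the X-type and Z-type checks commute (as they must for a valid code), every check touches exactly six qubits (which is where the six-way connectivity comes from), and 144 data qubits encode k = 12 logical qubits.

```python
def poly_rows(ell: int, m: int, terms: list[tuple[int, int]]) -> list[int]:
    """Rows of the sum of monomials x^a y^b (mod 2) as integer bitmasks.

    x shifts the first torus coordinate (range ell), y shifts the second (range m).
    """
    rows = [0] * (ell * m)
    for i in range(ell):
        for j in range(m):
            for a, b in terms:
                col = ((i + a) % ell) * m + (j + b) % m   # cyclic shift by a in i, by b in j
                rows[i * m + j] ^= 1 << col               # addition mod 2
    return rows

def transpose(rows: list[int], ncols: int) -> list[int]:
    """Transpose a bitmask matrix with `ncols` columns."""
    out = [0] * ncols
    for r, bits in enumerate(rows):
        for c in range(ncols):
            if bits >> c & 1:
                out[c] |= 1 << r
    return out

def gf2_rank(rows: list[int]) -> int:
    """Rank over GF(2) by Gaussian elimination on integer bitmasks."""
    pivots: dict[int, int] = {}   # leading bit -> basis row with that leading bit
    for v in rows:
        while v:
            top = v.bit_length() - 1
            if top not in pivots:
                pivots[top] = v
                break
            v ^= pivots[top]      # clears the leading bit, so the loop terminates
    return len(pivots)

ELL, M = 12, 6
N = ELL * M                                         # 72 checks of each type
A = poly_rows(ELL, M, [(3, 0), (0, 1), (0, 2)])     # A = x^3 + y + y^2
B = poly_rows(ELL, M, [(0, 3), (1, 0), (2, 0)])     # B = y^3 + x + x^2
HX = [a | (b << N) for a, b in zip(A, B)]           # H_X = [A | B]
HZ = [bt | (at << N) for bt, at in zip(transpose(B, N), transpose(A, N))]  # H_Z = [B^T | A^T]

n_data = 2 * N
k = n_data - gf2_rank(HX) - gf2_rank(HZ)
commute = all(bin(x & z).count("1") % 2 == 0 for x in HX for z in HZ)
check_weights = {bin(row).count("1") for row in HX + HZ}
print(n_data, k, commute, check_weights)            # 144 12 True {6}
```

Adding one check qubit per stabilizer (72 X checks plus 72 Z checks) brings the total to 288 physical qubits for 12 logical qubits, which is the kind of encoding efficiency IBM is counting on qLDPC codes to deliver.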

As of the announcement, IBM said Loon is “almost out of fabrication, and will be assembled by the end of the year”. This suggests that the first Loon chips are being produced on 300mm wafers (more on IBM’s fabrication shift below) and will soon undergo testing. IBM did not commit to when external users might access Loon; it’s likely a research device for now, not aimed at running commercial workloads. The purpose is to validate that these new hardware elements function together as expected.

One of the most significant achievements related to Loon is IBM’s progress in real-time quantum error decoding. Alongside the processor, IBM announced a fast error decoder that takes quantum error syndrome data and outputs corrections in less than 480 nanoseconds, which IBM says is roughly 10× faster than other recent approaches in the industry. The decoder runs on a classical FPGA (field-programmable gate array) and uses a custom algorithm called Relay-BP, which IBM researchers adapted from belief-propagation decoding, a message-passing technique long used to decode classical LDPC codes in telecommunications. IBM reported that this speed lets the decoder keep up with quantum error correction cycle times.
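Belief propagation is easiest to see on a toy example. The sketch below is textbook syndrome-based min-sum BP, not Relay-BP: IBM’s paper describes further modifications that this omits, and plain BP is known to struggle on many quantum LDPC codes, which is part of why such variants exist. Given a parity-check matrix `H`, a measured syndrome and a prior error probability `p`, checks and bits exchange log-likelihood messages until the hard-decision guess reproduces the syndrome. The five-bit repetition code is a hypothetical stand-in chosen because its Tanner graph is a tree, where BP is well behaved; for a CSS quantum code, the same routine would run on each check matrix separately.

```python
import math

def min_sum_decode(H, syndrome, p, max_iter=20):
    """Syndrome-based min-sum BP. Returns an error guess, or None if it fails to converge.

    Assumes every check (row of H) touches at least two bits.
    """
    n_checks, n_vars = len(H), len(H[0])
    prior = math.log((1 - p) / p)   # log-likelihood ratio: positive favors "no error"
    checks_of = [[c for c in range(n_checks) if H[c][v]] for v in range(n_vars)]
    vars_of = [[v for v in range(n_vars) if H[c][v]] for c in range(n_checks)]
    v2c = {(v, c): prior for v in range(n_vars) for c in checks_of[v]}
    for _ in range(max_iter):
        # Check-to-bit: product of signs times smallest magnitude, flipped if the syndrome bit is 1.
        c2v = {}
        for c in range(n_checks):
            for v in vars_of[c]:
                others = [v2c[(u, c)] for u in vars_of[c] if u != v]
                sign = -1.0 if syndrome[c] else 1.0
                for x in others:
                    sign *= 1.0 if x >= 0 else -1.0
                c2v[(c, v)] = sign * min(abs(x) for x in others)
        # Beliefs and hard decision: a negative belief means "this bit flipped".
        belief = [prior + sum(c2v[(c, v)] for c in checks_of[v]) for v in range(n_vars)]
        guess = [1 if b < 0 else 0 for b in belief]
        if all(sum(H[c][v] * guess[v] for v in range(n_vars)) % 2 == syndrome[c]
               for c in range(n_checks)):
            return guess
        # Bit-to-check: total belief minus the message that came from that same check.
        v2c = {(v, c): belief[v] - c2v[(c, v)] for v in range(n_vars) for c in checks_of[v]}
    return None

H = [[1 if v in (c, c + 1) else 0 for v in range(5)] for c in range(4)]  # 5-bit repetition code
print(min_sum_decode(H, [0, 1, 1, 0], p=0.1))   # [0, 0, 1, 0, 0]: the middle bit flipped
```

Each iteration is a fixed pattern of local additions, sign products and minimums over a sparse graph, which is the property that makes BP-style decoders a natural fit for FPGA hardware.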

Impressively, this milestone was achieved a full year ahead of IBM’s own schedule. In practical terms, such decoding speed is crucial: fresh syndrome data arrives every error-correction cycle, and if the decoder cannot process it at least as fast as it is produced, a backlog builds up and a fault-tolerant quantum computer can’t function. IBM’s early success here removes a critical bottleneck. IBM has released a detailed paper on the decoder for the community, which shows its focus on not just qubit hardware, but also the classical co-processing required to make quantum error correction viable.
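The throughput argument can be made concrete with a toy queue. Assume, hypothetically, that one round of syndrome data arrives every 1,000 ns (an illustrative round number, not a Loon specification) and that a single decoder handles rounds in order. A decoder that needs 480 ns per round never falls behind, while a hypothetical decoder ten times slower accumulates a backlog that grows with every round, so the computation would stall for longer and longer waiting on corrections.

```python
def backlog(rounds: int, cycle_ns: int, decode_ns: int) -> int:
    """Syndrome rounds still undecoded after `rounds` QEC cycles.

    Idealized model: one round arrives every `cycle_ns`, and a single decoder
    processes rounds in order, taking `decode_ns` per round.
    """
    elapsed_ns = rounds * cycle_ns
    decoded = min(rounds, elapsed_ns // decode_ns)  # cannot decode rounds that haven't arrived
    return rounds - decoded

CYCLE_NS = 1_000  # hypothetical syndrome-cycle time, for illustration only
for decode_ns in (480, 4_800):
    waiting = [backlog(r, CYCLE_NS, decode_ns) for r in (1_000, 10_000, 100_000)]
    print(f"decoder at {decode_ns} ns/round -> backlog {waiting}")
```

The slow decoder’s queue grows linearly with the number of rounds, so the problem gets worse the longer the computation runs, no matter how good the qubits are.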

By combining the Loon hardware features with the high-speed decoder, IBM now claims to have demonstrated “the cornerstones needed to scale qLDPC codes on high-speed, high-fidelity superconducting qubits”. In other words, they have the pieces in place – on paper and in prototype form – to start building a truly fault-tolerant quantum module. The next steps will be assembling these pieces and showing that an encoded logical qubit on Loon (or its successors) indeed has significantly lower error rates than a physical qubit. If that works, IBM will then replicate and scale up the design into larger systems (codenames in IBM’s roadmap, like Kookaburra in 2026, likely build on Loon’s concepts).

Scaling Up: Manufacturing and Roadmap Outlook

Beyond the chips themselves, IBM’s announcement highlighted an often underappreciated factor in quantum hardware: fabrication technology. IBM revealed that it has transitioned its quantum chip production to an advanced 300mm semiconductor fabrication facility at the Albany NanoTech Complex in New York. This is the same state-of-the-art fab where leading classical 7nm/5nm chips have been made. The move to 300mm wafers (from older 200mm processes) is already paying dividends for IBM:

- They have doubled the speed of quantum processor development, “cutting the time needed to build each new processor by at least half”. Faster iteration means quicker learning and improvement.

- They achieved a 10× increase in physical chip complexity, allowing far more intricate designs (like Loon’s multi-layer routing) to be realized reliably. The precision of modern lithography and tooling enables features that were previously impractical.

- They can now fabricate multiple designs in parallel on one wafer, exploring different architectures concurrently. This flexibility accelerates R&D, for example by testing new coupler structures or materials without waiting in line behind a single-process flow.

In short, IBM’s investment in cutting-edge fabrication is a competitive advantage. Mark Horvath, an analyst at Gartner, noted that IBM’s error-correcting chip approach (with additional couplers and circuitry on-chip) is “very clever” but makes the hardware much harder to build. By partnering with the Albany NanoTech fab, IBM ensured it had the manufacturing prowess to attempt this hard route. Gambetta himself cited access to the “same chipmaking tools as the most advanced factories in the world” as key to realizing Loon’s design. This suggests that IBM’s vertical integration – from theory to chip design to fabrication – is enabling it to hit ambitious engineering milestones that might stump others who lack such facilities.

Looking at the roadmap ahead, IBM’s plan remains extremely ambitious yet, so far, largely on track. The company has consistently delivered new quantum processors roughly on the timeline outlined in its multi-year roadmap (often announced at the annual November conferences). For instance, the 127-qubit Eagle (2021) and 433-qubit Osprey (2022) chips were delivered as promised. In 2023 IBM unveiled the 1,121-qubit Condor, but it shifted its focus to the smaller, higher-quality Heron processor and a modular approach, a strategic move to prioritize quality over raw qubit count. Now with Nighthawk and Loon in 2025, IBM is demonstrating that scaling isn’t just about qubit count – it’s about architectural innovation and error mitigation.

The near-term marker to watch will be quantum advantage by 2026. IBM is not alone in chasing this – competitors like Google, Quantinuum, and others have all declared they’re targeting useful quantum advantage in the next couple of years. IBM’s differentiator is its insistence on “verified” advantage. By setting up community benchmarks and encouraging external validation, IBM is guarding against quantum hype and ensuring that any claimed advantage truly stands up to scrutiny. If successful, this could solidify IBM’s credibility and leadership in the field. We may see, by late 2026, published results where an IBM quantum processor (likely Nighthawk or its successor) solves a practical problem that no classical supercomputer can match within reasonable time or cost – and that claim will be openly vetted.

Following that, the 2027–2029 horizon is about moving from quantum-advantage prototypes to fault-tolerant quantum computing. IBM explicitly states the goal of a large-scale fault-tolerant machine by 2029. Achieving that means demonstrating quantum error correction actually working: logical error rates that keep falling as more physical qubits are devoted to each encoded qubit. The Loon chip is the first hardware embodiment of that plan. If Loon (2025) and its successors (perhaps a 2026 chip codenamed Kookaburra, etc.) prove out the error correction approach, IBM could start building out modules containing hundreds or thousands of physical qubits that act as a handful of logical qubits. Those modules would then be the building blocks of a truly scalable quantum computer.

Reaching fault tolerance by 2029 will require not just one breakthrough but a sustained cadence of improvements in qubit count, quality, and error correction overhead. IBM’s confidence in this date suggests they have internal milestones mapped out year-by-year – and notably, they have been hitting many of them early. For example, the real-time decoder arrived one year ahead of schedule, and even the Nighthawk chip’s capabilities appear to slightly exceed what was expected in this timeframe. IBM’s track record so far lends some credibility to the 2029 target, although significant challenges remain.

Why IBM’s Approach Is Significant

I have been following IBM’s quantum roadmap closely, and I remain highly optimistic about their progress. IBM’s November 12 announcements reinforce a pattern we’ve seen over the past few years: IBM consistently meets or exceeds its quantum development milestones, and it does so by tackling problems holistically. Instead of just chasing qubit numbers, IBM is innovating across the entire stack – from chip architecture and fabrication to software and algorithms. This comprehensive approach is arguably why IBM continues to hit targets that some in the industry doubted were possible on such tight timelines.

What stands out to me is the engineering discipline and transparency IBM is bringing to quantum computing. The introduction of Nighthawk, a chip explicitly built to achieve quantum advantage, shows IBM’s confidence that they’re on the cusp of crossing a fundamental threshold. But rather than declaring victory prematurely, IBM is effectively saying: “We think we can get there, but we’ll let the results speak for themselves – and anyone can verify them.” This is a refreshing stance in a field that has seen its share of overhyped claims. IBM’s community-driven validation (via the quantum advantage tracker and open collaboration with startups like Algorithmiq and BlueQubit) is both a scientific and a PR masterstroke. If and when quantum advantage is demonstrated on Nighthawk, it won’t just be IBM telling us – it will be a broader ecosystem confirming it. That kind of validation can accelerate acceptance of quantum computing’s value.

From a technical perspective, the Nighthawk design also exemplifies IBM’s co-design philosophy: the hardware was developed hand-in-hand with improvements in software (compilers, error mitigation) and problem selection (those candidate advantage experiments). IBM isn’t just throwing more qubits out there; it’s ensuring those qubits work better together and do something useful. The 30% complexity boost with Nighthawk, for example, might sound like an incremental gain, but it can make the difference between a toy problem and a practically interesting one. Real-world optimization or simulation tasks often hit a wall on current quantum processors due to limited circuit depth. Nighthawk’s extra connectivity and gate count expand that envelope. In my view, this could unlock new experiments in quantum chemistry or machine learning that simply weren’t feasible before. It’s these qualitative leaps – solving a problem that couldn’t be solved at all previously – that define quantum advantage.

On the IBM Loon side, I’m particularly impressed by how rapidly IBM is pushing toward fault tolerance. Just a couple of years ago, “fault-tolerant quantum computing” sounded like a distant dream, something for the 2030s. IBM is betting it can pull that timeline in to 2029, and crucially, it’s backing the bet with tangible progress: a chip that embodies the required features, and a decoding solution that already hits performance targets. By building long-range couplers and multi-layer quantum chip fabrication into real hardware now, IBM is essentially de-risking the long-term goal. Each of those could have been a show-stopper if it didn’t pan out. For instance, if long couplers proved unreliable or too noisy, IBM’s whole qLDPC approach might falter. If they work as intended on Loon, that piece of the puzzle will be much closer to solved. There’s a lot more to do, of course – scaling to thousands of qubits with the long-range connectivity that qLDPC codes require is enormously challenging – but IBM is systematically working through the fault-tolerance to-do list.

I also want to highlight IBM’s use of the 300mm fab and advanced manufacturing as a game-changer. This doesn’t grab headlines like qubits and algorithms do, but it’s arguably one of IBM’s strongest advantages. Quantum hardware development has often been slowed by fabrication yield issues and limited access to top-tier processes. IBM leveraging a cutting-edge semiconductor fab (Albany) gives it a leg up in both speed and quality. The doubling of R&D speed and tenfold complexity increase reported are not just numbers – they mean IBM can try bold ideas (like complicated coupler networks, 3D integration, new materials) and get results fast. In conversations about quantum roadmaps, I often say that manufacturability will be a key differentiator. Here, IBM is demonstrating exactly that. Not every player has this level of integration between research and fab. In my opinion, this could make IBM one of the first to actually build the large-scale devices others only theorize about.

Comparatively, IBM’s strategy contrasts with some competitors who have showcased impressive one-off experiments. Google, for instance, made waves with its 2019 “quantum supremacy” demo, and more recently has been exploring new qubit technologies. But IBM’s focus on an incremental, integrative roadmap – steadily improving qubits, connectivity, error rates, software, and verification in concert – feels more sustainable and geared to real-world utility. IBM is not just aiming for a momentary quantum supremacy demonstration; it’s aiming for a broad quantum advantage that is useful and reproducible across a range of problems, and ultimately a fault-tolerant machine that can run any algorithm reliably. This approach may not produce the flashiest headlines at every step, but as a technologist, I find it very credible and exciting. It’s the kind of progress that will convince businesses and governments to start investing in quantum solutions, because they can see a clear path to scalability and robustness.

In conclusion, IBM’s Nighthawk and Loon announcements signal that the era of practical quantum computing is drawing nearer. The company’s ability to execute on its roadmap – often beating its own deadlines – gives me confidence that quantum advantage, and eventually true fault tolerance, might arrive sooner than many expect. There are certainly big challenges ahead (for example, integrating thousands of qubits and managing the complexity of error correction software), but IBM is checking off critical milestones one by one. As someone bullish on IBM’s quantum efforts, I see these developments as validation that their strategy is working. By the end of 2026, we’ll likely have seen at least one real problem where quantum outperforms classical (verified by the community) on IBM hardware. And by decade’s end, if IBM continues this trajectory, we might witness the debut of a first-generation fault-tolerant quantum computer – a moment that would truly be historic for computing.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.