Quantinuum’s Breakthrough Sets Course for Fault-Tolerant Quantum Computing by 2029

29 Jun 2025 – Quantinuum announced a significant technical breakthrough: the company claims to have overcome the “last major hurdle” on the path to scalable, universal fault-tolerant quantum computers. In a press release accompanying two new research papers, Quantinuum declared itself the first to demonstrate a fully error-corrected universal gate set, meaning it can perform all types of quantum operations (Clifford and non-Clifford) with errors detected and corrected in real time. A universal fault-tolerant gate set is widely regarded as an essential precursor to building large-scale quantum machines, and Quantinuum asserts that it now has a “de-risked” roadmap to deliver a fully fault-tolerant quantum computer (code-named Apollo) by 2029.

In other words, they believe all the requisite ingredients for fault-tolerance are now in hand, enabling a transition from today’s noisy prototypes to “utility-scale” quantum computers by the end of the decade.

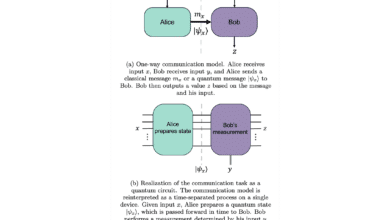

Quantinuum’s announcement centers on results from two experiments, documented in companion papers on arXiv. Both involve generating high-fidelity “magic states” – special quantum resource states used to implement non-Clifford gates fault-tolerantly – and using them to perform logical operations. Non-Clifford gates (like the T-gate, a $$\pi/8$$ rotation) are notoriously challenging to realize in quantum error-correcting codes, yet they are required for universal quantum computation (they’re the “magic” ingredient that, when added to the easier Clifford gates, unlocks algorithms that classical computers can’t efficiently simulate).
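To make the role of a magic state concrete, here is a minimal single-qubit sketch of textbook magic state injection using NumPy. It is an unencoded toy, not Quantinuum's encoded protocol: the function `inject_t` and the test state `psi` are illustrative names chosen here. It shows how consuming one |T⟩ state, plus a CNOT, a measurement, and a Clifford correction, reproduces the action of a T-gate.

```python
import numpy as np

# Single-qubit gates. T is the pi/8 rotation (non-Clifford); S = T^2 is Clifford.
T = np.diag([1, np.exp(1j * np.pi / 4)])
S = np.diag([1, 1j])

# CNOT with the data qubit (first tensor factor) as control and the
# magic-state ancilla (second tensor factor) as target.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)

# The T-type magic state |T> = (|0> + e^{i pi/4}|1>) / sqrt(2).
MAGIC_T = np.array([1, np.exp(1j * np.pi / 4)]) / np.sqrt(2)


def inject_t(psi, outcome):
    """Apply T to `psi` by consuming one magic state, for a given ancilla outcome."""
    joint = CNOT @ np.kron(psi, MAGIC_T)      # entangle data with the magic state
    branch = joint.reshape(2, 2)[:, outcome]  # project the ancilla onto |outcome>
    branch = branch / np.linalg.norm(branch)
    if outcome == 1:                          # Clifford fix-up when the ancilla reads 1
        branch = S @ branch
    return branch


def overlap(a, b):
    """|<a|b>|, which equals 1 when two states agree up to a global phase."""
    return abs(np.vdot(a, b))


psi = np.array([0.6, 0.8j])  # arbitrary normalized test state (illustrative)
for m in (0, 1):
    print(m, round(overlap(T @ psi, inject_t(psi, m)), 12))
```

Everything in the circuit other than the magic state itself is a Clifford operation or a measurement, which is why the quality of the magic state largely determines the quality of the resulting non-Clifford gate.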

The press release emphasizes that without high-fidelity magic states (or an equivalent method for non-Clifford gates), a quantum computer “is not really a quantum computer” in the full sense. Until now, the non-Clifford gate has been a weak link – prior demonstrations of error-corrected qubits could perform Clifford operations with low error, but the T-gate step remained too error-prone to be useful. Quantinuum’s new results claim to close this gap. Below, we summarize each paper’s achievement and why it marks a turning point for fault tolerance.

Breakthrough #1: High-Fidelity Logical Non-Clifford Gate on H1-1

The first paper, titled “Breaking even with magic: demonstration of a high-fidelity logical non-Clifford gate”, showcases a novel, low-overhead protocol for magic state preparation implemented on Quantinuum’s H1-1 trapped-ion system. The H1-1 is a 20-qubit device, and the team used just 8 physical qubits to encode and fault-tolerantly prepare two magic states (|H+⟩ states) in a small [[6,2,2]] error-detecting code. This approach adapts so-called “0-level” distillation ideas to a tiny code, meaning they verify the magic state with ancilla qubits and discard it if a parity check indicates an error. By doing so, they achieve an extraordinarily high fidelity without the typical large overhead of multi-round distillation.

Key results from Paper 1:

- Record Magic-State Fidelity: The encoded magic states were produced with an infidelity of roughly $$7\times10^{-5}$$ (i.e. 99.993% fidelity). To reach this fidelity, about 14.8% of attempts were discarded (due to detection of an error in the verification step). This is an order of magnitude better error rate than any previously published magic state on any platform, setting a new state-of-the-art benchmark.

- “Break-Even” Logical Gate: Using these high-quality magic states, the team executed a non-Clifford two-qubit gate – a controlled-Hadamard (CH) gate – fault-tolerantly on two logical qubits. The resulting logical gate error was ≤$$2.3\times10^{-4}$$, significantly lower than the error of the same gate performed directly on physical qubits (physical CH gate infidelity ≈$$1\times10^{-3}$$). In other words, the error-corrected version outperformed the unprotected hardware gate, a landmark event. This is the first time a logical operation has beaten the physical error rate for a non-Clifford gate, essentially achieving “break-even” or better for a complex quantum gate.

- Low Overhead & Scalability: The experiment used only 8 physical qubits for two logical magic states, an extremely compact implementation. The authors report that their protocol can be concatenated (stacked in multiple layers of error correction) to reach even higher fidelities on larger codes. Simulations indicate that on a [[36,4,4]] code (with higher distance), the approach could reach magic state error rates on the order of $$10^{-10}$$ (or even $$10^{-14}$$ with slightly improved physical gate fidelity). This suggests the method would scale well with hardware improvements, keeping the overhead relatively low while driving error rates down into the regime needed for very large computations.
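The figures in these bullets can be turned into two quick back-of-the-envelope numbers: how many preparation attempts are needed on average per accepted magic state, and by what factor the logical CH gate beats the physical one. The sketch below assumes attempts are independent with a constant discard probability; the function names are illustrative.

```python
# Figures quoted in the bullets above (Paper 1, H1-1).
DISCARD_RATE = 0.148       # fraction of attempts rejected by the verification step
LOGICAL_CH_ERROR = 2.3e-4  # reported upper bound on the logical CH gate error
PHYSICAL_CH_ERROR = 1e-3   # approximate physical CH gate infidelity


def attempts_per_accepted_state(discard_rate):
    """Mean number of attempts until one is accepted (geometric distribution)."""
    return 1.0 / (1.0 - discard_rate)


def break_even_ratio(physical_error, logical_error):
    """Values above 1 mean the logical gate outperforms the physical gate."""
    return physical_error / logical_error


attempts = attempts_per_accepted_state(DISCARD_RATE)
ratio = break_even_ratio(PHYSICAL_CH_ERROR, LOGICAL_CH_ERROR)
print(f"attempts per accepted magic state: {attempts:.3f}")
print(f"physical / logical CH error ratio: {ratio:.2f}")
```

Because the logical error is quoted as an upper bound, the ratio printed here is a lower bound on the improvement.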

In accessible terms, Paper 1 demonstrated a way to generate the “hard” part of universal quantum computing (the non-Clifford operation) with quality better than the bare hardware can do alone. By encoding a pair of qubits in a small error-detecting code and checking for errors (discarding when necessary), the Quantinuum team showed they can suppress the error probability quadratically: because the code detects any single fault and the attempt is then discarded, an error survives only when at least two faults occur, so the accepted-state error scales as $$O(p^2)$$ in the physical error rate $$p$$. The payoff was a cleaner-than-hardware magic state that enabled a fully fault-tolerant controlled-Hadamard gate.
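The quadratic scaling can be illustrated with a toy counting model. This is not the paper's noise model; it makes three assumptions: each of `n_locations` circuit locations fails independently with probability `p`, any single fault is detected and the run discarded, and (pessimistically) every run with two or more faults is accepted with an error. The value of 20 locations is hypothetical.

```python
def accepted_error_bound(p, n_locations):
    """Upper bound on the error rate among accepted runs in the toy model."""
    p_none = (1 - p) ** n_locations                         # no faults: accepted, correct
    p_one = n_locations * p * (1 - p) ** (n_locations - 1)  # one fault: detected, discarded
    p_multi = 1 - p_none - p_one                            # two or more: assumed undetected
    return p_multi / (p_none + p_multi)


N_LOCATIONS = 20  # hypothetical number of fault locations in the circuit

for p in (1e-3, 5e-4, 2.5e-4):
    print(f"p = {p:.2e}  ->  accepted error <= {accepted_error_bound(p, N_LOCATIONS):.3e}")
```

Halving `p` cuts the bound by roughly a factor of four, which is the signature of an $$O(p^2)$$ dependence.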

Practically, this is a big deal: it proves that incorporating error correction can yield a net improvement in performance for an operation that’s critical to quantum algorithms. It’s a strong validation that quantum error correction isn’t just a theoretical exercise, but can beat the physical noise when done right. As the authors note, single-qubit gates on H1-1 already had extremely high fidelity (error of ~$$10^{-5}$$), so there was no room to “beat” those yet. But for this two-qubit, magic-state-enabled operation, the crossover was achieved, a first in the industry. This result removes one of the last technical roadblocks to assembling a full fault-tolerant gate set out of logical qubits.

Breakthrough #2: Magic-State via Code-Switching on H2-1 (Reed-Muller to Steane Code)

The second paper, “Experimental demonstration of high-fidelity logical magic states from code switching,” took a complementary approach to producing a T-type magic state, using a technique called code switching. This experiment ran on Quantinuum’s newer H2-1 trapped-ion system, which offers more qubits than H1-1 (the experiment used 28 of them, as detailed in the key results below). The team collaborated with researchers at UC Davis to demonstrate, for the first time, a fault-tolerant code switch between two quantum error-correcting codes: the 15-qubit quantum Reed-Muller (qRM) code and the 7-qubit Steane code. In simple terms, they prepared a magic state in one code where the T-gate is easy to implement (the qRM code has a transversal T-gate), and then switched the encoded state into another code (the Steane code) that supports the rest of the universal gate set (Clifford operations, error correction, etc.). This approach had been proposed as an alternative to brute-force magic state distillation, but had never been experimentally shown between two different codes until now.

Key results from Paper 2:

- High-Fidelity Logical T-State: By leveraging code switching, the team prepared a logical |T⟩ magic state in the 7-qubit Steane code (distance 3) with an error rate well below the physical level. The measured infidelity of the output state was at most ~$$5.1\times10^{-4}$$ (i.e. about 99.95% fidelity). Importantly, this logical error was 2.7× lower than the highest physical error rates of the device (in H2-1, two-qubit gate errors and state preparation/measurement (SPAM) errors were on the order of $$1\times10^{-3}$$). In other words, the quantum processor operated below the pseudo-threshold, meaning the error-correction actually suppressed errors compared to bare operations. Achieving a magic state with higher fidelity than any underlying physical operation is a landmark validation of this fault-tolerant method.

- Post-Selection and Success Rate: The protocol included a verification step (“pre-selection”) similar in spirit to the first paper – if certain checks failed, the attempt was discarded to ensure no bad magic state propagates. The reported success probability was ~82.6% on average, meaning roughly 17% of runs were discarded. This moderate overhead in repeat attempts is a reasonable price for the gain in fidelity. (The team also showed that with additional error-detection during decoding, they could push infidelity even lower to ~$$1.5\times10^{-4}$$, at roughly the same success rate.) All of these figures represent record performance for an encoded magic state in a distance-3 code, exceeding prior experiments by over a factor of 10 in error reduction.

- Resource Efficiency – 28 Qubits, Not Hundreds: Notably, this code-switching magic state was achieved with only 28 physical qubits on the H2-1 processor (22 data qubits across the two codes plus 6 ancilla for checks, reused in stages). Previous methods to get a similar fidelity magic state with error correction (e.g. using brute-force distillation or larger codes) would have required many more qubits (hundreds of qubits were estimated). This experiment demonstrates a much more qubit-efficient route to high-quality magic states, an important consideration for near-term quantum hardware which is still limited in qubit count.

- Full Universal Gate Set Realized: With the successful generation of a high-fidelity T-state, the Steane code’s logical qubit now has all pieces for universality. Quantinuum notes that they had already shown fault-tolerant Clifford operations, state preparation, and measurements in this 2D color code (Steane) in prior work; adding the non-Clifford magic state “completes a universal set of fault-tolerant computational primitives”, with each logical operation’s error rate now equal to or better than the corresponding physical error rate. In essence, they demonstrated that one can perform any quantum gate or circuit on logical qubits while continuously detecting/correcting errors, with performance on par with the raw hardware gates. This is the hallmark of a true fault-tolerant quantum computer, even if done on a small scale.

From a technical standpoint, code switching is the highlight of Paper 2. The team showed that you can bridge two different error-correcting codes in a fault-tolerant way: start with a code (15-qubit qRM) that natively supports the T-gate by a simple transversal operation, use it to produce the magic state, then transfer that state into a second code (7-qubit Steane) where it can be stored or used alongside other fault-tolerant gates. This avoids having to perform a complex T-gate or distillation inside the primary code directly. The experiment is a proof-of-concept that such hybrid techniques can dramatically lower the overhead. Achieving ~0.05% logical error rates with only a few dozen qubits is remarkable – it suggests that even small quantum computers can reach logical fidelities that surpass the physical noise, by intelligently combining codes and post-selection.

The results also provide empirical evidence that Quantinuum’s ion-trap architecture (with all-to-all connectivity and mid-circuit measurement) can handle the coordination of a fairly complex error-correction procedure (multiple ancillas, two-code interaction, etc.) without introducing prohibitive noise. All told, this is the first experimental demonstration of a fault-tolerantly prepared T-type magic state (the resource for a logical T-gate via magic state injection) whose error is below the native error scale of the hardware, achieved by switching between a 3D color code (qRM) and a 2D color code (Steane) on fully connected hardware.

Why It Matters: A Leap Toward Scalable FTQC (and Implications for CRQC Timelines)

These twin achievements mark an inflection point in the quest for fault-tolerant quantum computing (FTQC). Taken together, they show that all the core ingredients for a universal fault-tolerant quantum computer have now been individually demonstrated at high fidelity. In the broader context, quantum engineers have long been piecing together a kind of “FTQC toolkit” – prior milestones included things like logical memory that outlives physical qubit coherence, error-corrected Clifford gates (e.g. repeated QEC cycles on a small code, logical CNOT gates, etc.), and simple demonstrations of error suppression via redundancy. However, the non-Clifford logical gate was the missing piece; it’s essential for universality but notoriously resource-intensive.

Quantinuum’s work is significant because it removes this last roadblock: we now have a clear experimental path to perform any gate on encoded data with error rates that can beat the physical noise. In other words, the concept of a fully error-corrected quantum computer has moved from theory and partial prototypes into the realm of practical implementation. This builds confidence that scaling up will produce quantum processors that actually improve as they grow, thanks to error correction.

The importance to fault tolerance

By achieving a magic-state fidelity and a logical non-Clifford gate error rate better than the hardware’s own error rates, Quantinuum has effectively shown that quantum error correction works for the hardest type of operation. This is a strong validation of the entire fault-tolerance approach: it indicates that if you can get physical error rates into roughly the $$10^{-3}$$ (0.1%) range (as Quantinuum’s ion traps have) and have enough qubits to run these protocols, you can attain logical error rates around $$10^{-4}$$ or lower. It’s especially encouraging that one approach (Paper 1) did so with a mere 8 qubits, and the other (Paper 2) achieved it in a distance-3 code that corrects one error. This means we don’t necessarily need thousands of physical qubits per logical qubit to see benefit; even with dozens of physical qubits per logical qubit, we are getting error suppression. Such efficiency is critical on the road to large-scale systems. Indeed, the company noted that these innovations could reduce qubit requirements by an order of magnitude or more for magic-state production, speeding up the timeline for useful quantum applications.
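To show what “a distance-3 code that corrects one error” means in practice, here is a small classical sketch of syndrome decoding with the [7,4,3] Hamming parity-check matrix, the matrix from which the Steane code's X-type and Z-type stabilizers are both built. It handles only a single bit-flip pattern on seven qubits and is not a simulation of the experiment; the helper names are illustrative.

```python
import numpy as np

# Parity-check matrix of the classical [7,4,3] Hamming code. Column j (1-based)
# is the binary representation of j, so a single flipped bit "spells out" its
# own position in the syndrome.
H = np.array([[0, 0, 0, 1, 1, 1, 1],
              [0, 1, 1, 0, 0, 1, 1],
              [1, 0, 1, 0, 1, 0, 1]])


def syndrome(error):
    """Three parity-check outcomes for a length-7 binary error pattern."""
    return (H @ error) % 2


def locate_single_error(s):
    """Return the 0-based index of the flipped qubit, or None for a trivial syndrome."""
    position = int(s[0]) * 4 + int(s[1]) * 2 + int(s[2])
    return position - 1 if position else None


for qubit in range(7):
    error = np.zeros(7, dtype=int)
    error[qubit] = 1
    print(qubit, syndrome(error), locate_single_error(syndrome(error)))
```

Any single error is located uniquely, but two simultaneous errors produce the syndrome of some third position and would be miscorrected, which is why a distance-3 code corrects only one error at a time.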

Credibility of the 2029 timeline

Quantinuum’s public timeline – a fault-tolerant universal quantum computer by 2029 – is undeniably ambitious. However, this announcement lends it credibility. If all fundamental operations can now be done fault-tolerantly at manageable overhead, the remaining challenge is primarily one of engineering scale: integrating more qubits and more cycles while maintaining those error rates.

Quantinuum’s roadmap (as hinted in the press release) involves next-generation hardware like the upcoming “Helios” system in 2025 (projected ~100 qubits with improved error rates) and a larger “Sol” system by 2027, culminating in the “Apollo” system by 2029 which is intended to be the first fully fault-tolerant machine. This parallels similar goals by other major players – for instance, IBM has also outlined a plan for a large-scale fault-tolerant quantum computer (IBM Quantum “Starling”) by 2029, targeting on the order of 200 logical qubits and 100 million gate operations. The convergence of these roadmaps suggests a broad industry confidence that the end of this decade is a realistic target for the dawn of true fault tolerance.

Of course, significant hurdles remain in scaling up from ~20-30 qubit experiments to machines with thousands (or more) physical qubits. Issues of connectivity, yield, crosstalk, and control complexity will intensify with scale. Nonetheless, the fact that a logical gate can outperform a physical gate even at the current scale is a profound proof-of-concept. It means there’s a viable path forward: once physical error rates are below threshold, each incremental hardware improvement can translate into exponential suppression of logical errors through techniques like concatenation. In short, Quantinuum’s 2029 goal appears aggressive but not implausible, given the evidence that their architecture is already operating below the pseudo-threshold for these operations.
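The claim about concatenation can be made concrete with the textbook threshold-theorem scaling for a concatenated distance-3 code, $$p_k = p_{th}\,(p/p_{th})^{2^k}$$. This is a generic model rather than the scaling of Quantinuum's specific protocol, and the threshold value used below is a hypothetical placeholder.

```python
def concatenated_error(p_physical, p_threshold, levels):
    """Logical error after `levels` rounds of concatenation (textbook model)."""
    return p_threshold * (p_physical / p_threshold) ** (2 ** levels)


P_THRESHOLD = 1e-2  # hypothetical threshold, for illustration only

for p_physical in (1e-3, 5e-4):
    rates = [concatenated_error(p_physical, P_THRESHOLD, k) for k in range(4)]
    print(p_physical, ["%.1e" % r for r in rates])
```

In this model a twofold improvement in the physical error rate is amplified at every level of concatenation, while a physical error rate above the threshold would make each added level worse rather than better.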

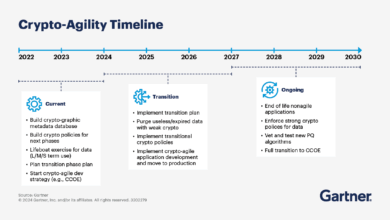

Implications for CRQC (cryptographically relevant quantum computers)

For cybersecurity professionals, one of the first concerns about a fault-tolerant quantum computer is its ability to threaten modern cryptography. A cryptographically relevant quantum computer is often defined as one capable of running Shor’s algorithm (or other quantum attacks) to break RSA/ECC cryptography within a practical timeframe. Such a feat is estimated to require on the order of a few hundred to a few thousand logical qubits with error rates low enough to execute billions of operations. The breakthroughs by Quantinuum directly feed into forecasting when this might happen. In fact, I have recently updated my estimate for “Q-Day” (the day a quantum computer breaks RSA-2048) to around 2030 ± 2 years, based on three factors: (1) algorithmic improvements (e.g. better factoring algorithms and error-correction overhead estimates), (2) rapid fidelity gains in hardware (such as the error levels of $$10^{-5}$$ to $$10^{-4}$$ now being demonstrated), and (3) firm roadmaps like Quantinuum’s and IBM’s that foresee ~100-1000 logical qubits in the early 2030s. Quantinuum’s 2025 papers directly address points (2) and (3): they provide the high fidelities and reinforce the credibility of the roadmaps. If the company indeed delivers a working fault-tolerant Apollo machine by 2029, it could very well have enough logical qubits (and low enough logical error rates) shortly thereafter to factor large integers or run other cryptographically relevant algorithms.
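To see what “error rates low enough to execute billions of operations” implies, the sketch below computes the largest per-operation logical error rate that still lets a whole computation succeed with a chosen probability. It assumes errors are independent and that any single logical error ruins the run; the target success probability of 0.5 is an illustrative choice.

```python
import math


def max_error_per_operation(num_operations, target_success):
    """Largest eps satisfying (1 - eps) ** num_operations >= target_success."""
    # Solve for eps: eps = 1 - target_success ** (1 / N), written with expm1
    # so that very small values keep their precision.
    return -math.expm1(math.log(target_success) / num_operations)


for num_operations in (1e6, 1e9, 1e10):
    eps = max_error_per_operation(num_operations, target_success=0.5)
    print(f"{num_operations:.0e} operations -> per-operation error <= {eps:.2e}")
```

Under these assumptions, a billion-operation algorithm needs logical error rates several orders of magnitude below the ~$$10^{-4}$$ level demonstrated so far, which is the gap that larger codes and concatenation are meant to close.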

It’s important to note that “fully fault-tolerant by 2029” doesn’t necessarily mean breaking RSA on New Year’s Day 2030 – the first generation of FTQC might still have limited logical qubit counts. However, it would mark the crossing of a threshold beyond which scaling is just a matter of adding more qubits and modules (much like scaling classical computing once the fundamental design is proven). In practical terms, these advances shrink the timeline for CRQC. What might have been thought to be a late-2030s or 2040s possibility now appears to be looming in the early 2030s if progress continues at this pace. For CISOs and security strategists, the take-away is that the risk horizon for quantum threats is coming into sharper focus. Quantinuum’s achievement shows that the theoretical barriers to building a quantum code-breaker are being knocked down one by one. The final barrier was high-fidelity non-Clifford operations, and now we have a roadmap to surmount it.
