Israel’s IQCC Opens as a “Quantum Data Center” Built for Interoperability, Hybrid HPC, and Sovereignty

19 Jun, 2024 – In a June 17, 2024 press release, Quantum Machines announced the opening of the Israeli Quantum Computing Center (IQCC), a new facility in Tel Aviv designed to give researchers and startups something that has been chronically scarce in quantum computing: hands-on access to serious infrastructure rather than limited cloud time on someone else’s machine.

The center is located at Tel Aviv University and was built with financial backing and support from the Israel Innovation Authority, as part of Israel’s National Quantum Initiative, according to Quantum Machines.

What’s different – and strategically important – is the IQCC’s architecture. Quantum Machines is positioning it as a hybrid quantum–classical facility that co-locates multiple quantum modalities and ties them tightly to high‑performance compute and cloud resources. In the company’s framing, this is not a single, monolithic “national quantum computer” but a scalable quantum-HPC testbed built around interoperability and modular upgrades.

A new national-scale facility with a global-access posture

Quantum Machines’ announcement describes IQCC as a “world-class research facility” intended to serve both Israel’s ecosystem and the broader global community. The company’s CEO, Itamar Sivan, argued that access itself is a competitive differentiator – pointing out that many leading quantum research facilities are closed or offer limited external access.

The opening ceremony was scheduled for June 24, 2024 as part of Tel Aviv University’s AI and Cyber Week, per the press release.

A key phrase in the press release is “open architecture.” Quantum Machines says the IQCC was designed for interoperability, modularity, and integration with HPC and the cloud, specifically so the facility can be “continuously upgraded and scaled.”

This is an important nuance: “open architecture” here is less about open-sourcing hardware designs, and more about building a facility where:

- different quantum hardware can be introduced over time,

- the control stack can speak to heterogeneous devices,

- hybrid quantum-classical workflows are treated as the default operating model.

This framing is reinforced in a later Quantum Machines blog post about launching the center, which describes IQCC as a future-looking “hybrid quantum-classical datacenter” concept – explicitly “CPU‑GPU‑QPU” – built around a unified classical control infrastructure.
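To make the "open architecture" idea concrete, here is a minimal sketch of what a device-agnostic facility layer could look like in software. It is purely illustrative: `QuantumBackend`, `SimulatedBackend`, `Facility`, and the device names are hypothetical and are not taken from Quantum Machines' control stack or any vendor API. The only figures borrowed from the press release are the 25-qubit and 8-qumode sizes.

```python
from dataclasses import dataclass
from typing import Protocol


class QuantumBackend(Protocol):
    """Hypothetical minimal interface a facility-level scheduler might require."""

    name: str
    modality: str
    capacity: int  # qubits or qumodes, depending on the modality

    def run(self, job: str) -> str: ...


@dataclass
class SimulatedBackend:
    """Stand-in device; a real adapter would call the vendor's control software."""

    name: str
    modality: str
    capacity: int

    def run(self, job: str) -> str:
        return f"{self.name} ({self.modality}) accepted job '{job}'"


class Facility:
    """Registry that lets devices be added or swapped without changing callers."""

    def __init__(self) -> None:
        self._backends: dict[str, QuantumBackend] = {}

    def register(self, backend: QuantumBackend) -> None:
        self._backends[backend.name] = backend

    def by_modality(self, modality: str) -> list[QuantumBackend]:
        return [b for b in self._backends.values() if b.modality == modality]

    def submit(self, backend_name: str, job: str) -> str:
        return self._backends[backend_name].run(job)


facility = Facility()
facility.register(SimulatedBackend("sc-1", "superconducting", 25))  # hypothetical name
facility.register(SimulatedBackend("ph-1", "photonic", 8))          # hypothetical name
print(facility.submit("ph-1", "demo"))
```

The point of the sketch is the shape, not the details: callers depend on the interface, so a new modality arrives as one more adapter rather than a redesign of everything above it.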

Three quantum systems, multiple modalities

Quantum Machines states that IQCC houses multiple quantum computers of different qubit types, and that additional processors will be added in the coming months.

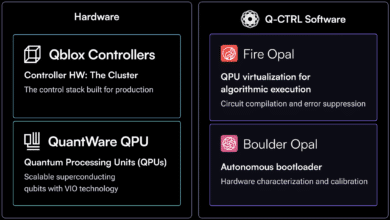

In the “technology and partners” notes within the press release, Quantum Machines highlights:

- a superconducting quantum computer with a 25‑qubit processor manufactured by QuantWare

- an 8‑qumodes photonic quantum computer by ORCA Computing

Independent reporting broadly matches the “multi-system, multi-modality” picture, though some outlets report different qubit counts and names for the superconducting systems. For example, DataCenterDynamics described three systems, including 21‑qubit superconducting systems and an 8‑qumodes photonic system, and explicitly tied one system to the center’s cryogenic testbed.

The precise naming and configuration matter less than the architectural point: IQCC is being built as a heterogeneous facility that can host multiple quantum modalities side-by-side, not a single vendor-locked stack.

The hybrid compute centerpiece: NVIDIA DGX Quantum + CUDA‑Q

A core technical claim in Quantum Machines’ announcement is that IQCC is the first site to “tightly integrate multiple types of quantum computers with supercomputers” using NVIDIA’s DGX Quantum.

Quantum Machines says the DGX Quantum system was co-developed by NVIDIA and Quantum Machines and implements CUDA‑Q (described as an open-source platform for hybrid quantum-classical computing). The press release also states the system includes an on-prem supercomputing cluster “headlined” by NVIDIA Grace Hopper superchips and also including DGX H100 systems, with connections to Amazon Web Services for remote access and additional compute.

From a technology roadmap standpoint, this is a bet on a near-term truth that many in the field increasingly accept: useful quantum computing is likely to emerge first in hybrid workflows where classical accelerators (GPUs/CPUs) and quantum devices work together. Quantum Machines makes that argument explicitly in its own commentary on the IQCC’s design.

The control-plane story: OPX and OPX1000 as the “unifier”

Quantum Machines’ identity in the market is control: hardware and software that orchestrate qubits with precise timing, measurement, and feedback. Unsurprisingly, the IQCC is built around the company’s control systems.

In the press release, Quantum Machines says the center is “tightly integrated” with its OPX control system, and also highlights its newer OPX1000 controller, designed to enable scaling to 1,000+ qubits.

Ynetnews, describing the center’s significance for Israel, similarly emphasizes Quantum Machines’ role as a control-and-monitoring provider rather than a builder of quantum computers, and notes that the center operates OPX1000 for future scaling.

The “testbed” proposition: Bring your chip, don’t build a lab

One of the most interesting parts of the IQCC concept is how explicitly it targets hardware developers.

Quantum Machines’ CTO, Yonatan Cohen, argues that chip developers previously had to build a testing setup costing “millions,” and that IQCC offers a place where researchers can “plug their chip into our testbed.”

The company’s blog expands this idea: IQCC offers cloud access for programming/testing and on‑prem access to a cryogenic testbed capable of housing and operating up to 25 superconducting qubits, explicitly using a “bring your device” approach.

That’s a meaningful shift in the quantum innovation model. Instead of every startup replicating the same expensive lab buildout (dilution refrigerator, control stack, RF chain, and operational know-how), IQCC is trying to behave like a shared advanced-infrastructure platform.

Partners and workflow software: Classiq and ParTec

Beyond hardware, IQCC is being framed as a full environment for hybrid workflows.

Quantum Machines says users will be able to leverage advanced quantum software from Classiq. It also highlights QBridge, a software solution co-developed with ParTec, to support hybrid quantum‑classical workflows.

In other words: the center is not just “some QPUs.” It’s trying to package devices + control + orchestration + scheduling into something closer to what supercomputing centers recognize as a usable platform.

Funding and national context

A national center like this is never just a technical story; it’s industrial strategy.

The Times of Israel reports IQCC was funded by the Israel Innovation Authority with an investment of NIS 100 million (about $27 million), and positioned as part of Israel’s effort to compete in the global race for practical quantum computing.

Quantum Machines itself states that the center is “poised” to help secure Israel’s “quantum independence.”

My take: Why IQCC matters far beyond Israel’s borders

IQCC is easy to cover as a ribbon-cutting story – “new center opens, partners named, GPUs and QPUs under one roof.” But I think the more important story is what kind of quantum future this facility is trying to normalize.

IQCC is QOA in disguise: a facility built like a modular system

In my upcoming deep dive on Quantum Open Architecture (QOA), I argue that quantum computing is moving from a mainframe-era mindset (sealed, vertically integrated stacks) toward a PC-like ecosystem of interoperable modules.

IQCC is a real-world expression of that, but at the data-center / facility layer rather than the single-machine layer.

Instead of betting on one “national quantum computer” provider and then waiting years for upgrades, IQCC is architected to host:

- multiple quantum modalities,

- a unified control plane,

- hybrid HPC resources,

- and a testbed that can accept new devices.

That’s QOA thinking: modularity, swap‑ability, and continuous integration – applied to quantum infrastructure.

If IQCC succeeds, it won’t just produce local research output. It could become a template for how other countries design national quantum facilities: not as a single procurement, but as a continuously upgradeable platform.

Hybrid quantum‑classical integration is becoming the real battleground

Quantum Machines and NVIDIA’s messaging is blunt: tight integration between quantum devices and AI/HPC compute is “essential” to useful quantum computing. Even if you disagree with the absolutism of that claim, the direction of travel is clear.

Why? Because many near- and mid-term quantum approaches rely on classical compute for:

- control optimization,

- calibration loops,

- error mitigation,

- variational algorithms,

- and ultimately, error correction feedback.
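To illustrate why these workloads are sensitive to the classical side of the loop, here is a toy variational loop in plain Python. It is a sketch under loud assumptions: the "QPU" is a simulated single qubit (state RY(θ)|0⟩, measured in Z), and `measure_z`, `parameter_shift_gradient`, and `minimize` are hypothetical names of mine, not CUDA‑Q or Quantum Machines APIs. Every optimizer step needs two fresh "QPU" calls, which is where round-trip latency starts to dominate on real hardware.

```python
import math
import random

rng = random.Random(7)  # fixed seed so the sketch is repeatable


def measure_z(theta: float, shots: int = 2000) -> float:
    """Simulated QPU call: prepare RY(theta)|0> and estimate <Z> from shots."""
    p_zero = math.cos(theta / 2) ** 2  # probability of reading out |0>
    zeros = sum(1 for _ in range(shots) if rng.random() < p_zero)
    return (2 * zeros - shots) / shots  # (+1 * zeros - 1 * ones) / shots


def parameter_shift_gradient(theta: float) -> float:
    """Classical step: gradient of <Z> from two shifted circuit evaluations."""
    plus = measure_z(theta + math.pi / 2)
    minus = measure_z(theta - math.pi / 2)
    return (plus - minus) / 2


def minimize(theta: float = 0.4, learning_rate: float = 0.4, steps: int = 60) -> float:
    """Gradient descent on <Z>; each iteration is one quantum-classical round trip."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta


theta_opt = minimize()
print(f"theta = {theta_opt:.3f}, exact <Z> = {math.cos(theta_opt):.3f}")
```

In this toy problem the exact expectation is cos θ, so the loop should drift toward θ ≈ π, where ⟨Z⟩ approaches −1. Calibration loops and error-correction feedback follow the same measure-compute-update pattern, only with far tighter timing budgets.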

If IQCC’s architecture achieves what it promises – low-latency “CPU‑GPU‑QPU” loops – it could meaningfully expand the class of experiments Israel-based teams can run on premises, not just in cloud sandboxes.

In other words, IQCC is trying to turn quantum computing into something that looks like a standard accelerator in a heterogeneous compute stack. That’s a big conceptual step.

The strategic angle: sovereignty is becoming an infrastructure problem, not only a hardware problem

I’ve written elsewhere about Quantum Sovereignty as a mix of technology, policy, and supply-chain control. IQCC is a sovereignty play – but not the simplistic kind.

Israel is not claiming it built the qubit technology end-to-end domestically. Instead, it is building sovereignty by controlling:

- the facility,

- access rules,

- the integration layer,

- the operational know-how,

- and the ability to host and evaluate new devices locally.

This is an underappreciated model of sovereignty: sovereignty-through-integration and operations.

In a world where the best qubits may come from different places for a long time, the ability to evaluate, integrate, secure, and operate quantum systems inside your own borders may be as strategically important as owning the qubit IP itself.

The “open testbed” is a talent and startup multiplier – and it changes what “investment” means

A recurring bottleneck in quantum ecosystems is that the barrier to building and operating hardware is brutally high – not just in capex, but in expertise and time.

IQCC’s “bring your device” and shared testbed approach (as described by Quantum Machines) is essentially a strategy to compress the time-to-experiment for startups and researchers.

That matters because in deep tech, speed compounds. The sooner more teams can run real hardware iterations, the sooner you get:

- better device ideas,

- better calibration techniques,

- better tooling,

- and more people who can credibly say they’ve operated hardware (not just written circuits for simulators).

This is how you build a durable ecosystem: not by one heroic machine, but by enabling many teams to learn faster.

The cybersecurity subtext: a quantum facility is a supply-chain and access-control problem in disguise

Let me put my risk hat on.

A center like IQCC is inherently a multi-tenant critical R&D environment. It blends:

- sensitive hardware IP (from multiple vendors),

- remote access (cloud connectivity is explicitly part of the model),

- high-value algorithm development,

- and a hybrid compute plane that includes GPUs and supercomputers.

That makes IQCC a compelling target – not because “quantum breaks crypto tomorrow,” but because quantum labs are where future advantage is incubated.

When quantum infrastructure becomes a platform for many external users, the core security challenges look familiar – and urgent:

- identity, access management, and tenant isolation

- supply-chain assurance for hardware and firmware components

- secure workflow orchestration across classical + quantum resources

- insider risk and data exfiltration controls

- provenance and integrity for “bring your device” models
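The first item on that list, tenant isolation, is the easiest to picture in code. Below is a minimal deny-by-default sketch: nothing is permitted unless a grant for that exact tenant, resource, and action exists. All names here (`Grant`, `AccessPolicy`, the tenant and resource strings) are hypothetical illustrations of mine; I have no visibility into how IQCC actually implements access control.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """One explicit permission: a tenant may perform an action on a resource."""

    tenant: str
    resource: str
    action: str


class AccessPolicy:
    """Deny-by-default policy: only exact, explicitly granted triples pass."""

    def __init__(self) -> None:
        self._grants: set[Grant] = set()
        self.audit_log: list[tuple[Grant, bool]] = []

    def allow(self, tenant: str, resource: str, action: str) -> None:
        self._grants.add(Grant(tenant, resource, action))

    def is_allowed(self, tenant: str, resource: str, action: str) -> bool:
        request = Grant(tenant, resource, action)
        decision = request in self._grants
        self.audit_log.append((request, decision))  # record every decision
        return decision


policy = AccessPolicy()
policy.allow("startup-a", "testbed/slot-1", "run")      # hypothetical tenant and slot
policy.allow("startup-b", "qpu/photonic", "submit")     # hypothetical tenant and device

print(policy.is_allowed("startup-a", "testbed/slot-1", "run"))
print(policy.is_allowed("startup-b", "testbed/slot-1", "run"))
```

A real deployment would layer this on authenticated identities, short-lived credentials, and tamper-evident logging, but the design choice worth copying is the default: an unknown request is a denied request.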

Open architecture amplifies innovation, but it also multiplies integration interfaces – and interfaces are where security stories usually begin.

If IQCC becomes the blueprint for “quantum data centers,” then we should start asking: what does zero trust mean when the “endpoint” is a dilution fridge controlled by mixed-vendor electronics and cloud APIs?

Where this leaves us

IQCC is a national milestone for Israel – but it’s also a global signal that the quantum field is starting to invest in a different kind of infrastructure: open, hybrid, modular facilities that treat quantum devices as evolving components inside a larger compute environment.

If the IQCC model proves out, I expect more countries (and more supercomputing centers) to converge on the same idea: don’t wait for the perfect monolithic quantum computer. Build the platform that can absorb whatever the next five years of quantum hardware innovation throws at you.
