Risk-Driven Strategies for Quantum Readiness When Full Crypto Inventory Isn’t Feasible

Table of Contents

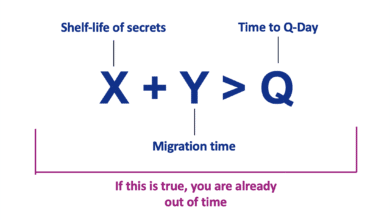

Quantum computing threatens to break or weaken today’s cryptography, putting encrypted data and secure communications at risk. Shor’s algorithm can factor the large integers behind RSA and solve the discrete-logarithm problems behind elliptic-curve cryptography, while Grover’s algorithm speeds up brute-force attacks on symmetric keys, effectively halving their bit strength. In fact, cryptography experts warn that a sufficiently advanced quantum computer could break a 2048-bit RSA key in a matter of hours or days, whereas classical supercomputers would need millions of years. This looming threat has prompted urgent action: NIST (the U.S. National Institute of Standards and Technology) has standardized new quantum-resistant algorithms and proposed deprecating quantum-vulnerable crypto (like RSA/ECC) starting in 2030, with full disallowance by 2035. In other words, organizations worldwide must transition their cryptography before these deadlines. Some analysts, including me, even predict that state actors may have quantum decryption capabilities as early as 2030, meaning the clock is ticking toward “Q-Day,” the moment when quantum computers can break current encryption.

However, preparing for the post-quantum era is easier said than done. Security regulators and industry standards almost unanimously recommend conducting a full cryptographic inventory – identifying all places where encryption, digital signatures, and key exchanges are used – as the first step toward quantum readiness. In theory, once you know every instance of vulnerable cryptography in your infrastructure, you can methodically replace or upgrade each one with quantum-safe alternatives. In practice, this comprehensive inventory is an enormous undertaking. Modern IT and OT environments are incredibly complex, and cryptography is ubiquitous – embedded in everything from web servers and databases to IoT devices and third-party software. Organizations often discover that no single tool or scan can find 100% of cryptographic instances, especially when some crypto is hardcoded or undocumented.

Moreover, performing a thorough inventory and upgrade across a large enterprise could take years, requiring code changes, protocol updates, vendor coordination, and significant expense. As one IBM study noted, over 20 billion digital devices may need to be upgraded or replaced worldwide in the next 10–20 years to implement quantum-safe encryption. Achieving complete cryptographic visibility remains the ideal – and ultimately necessary – goal, but many security leaders find it overwhelming to “boil the ocean” and inventory everything at once.

So what can be done if a full inventory isn’t feasible on time? Let’s explore a more pragmatic, risk-driven strategy for quantum readiness. By prioritizing high-risk systems and data, isolating or shielding vulnerable assets, strengthening cryptographic defenses, and layering quantum-safe protections, organizations can begin mitigating quantum threats even if a full cryptographic inventory cannot be completed upfront. The goal is to balance pragmatic risk management with long-term migration: address the most critical quantum risks first, while laying the groundwork for eventual enterprise-wide post-quantum cryptography (PQC) adoption.

(Disclaimer: whenever you diverge from the “ideal” comprehensive approach, you are accepting certain risks. Be sure you and the relevant risk owners understand and accept the risk trade-offs made by these workaround approaches. And if and when it goes wrong, please don’t blame it on me.)

The Quantum Threat vs. Traditional Approach

Today’s widely used encryption schemes (RSA, Diffie-Hellman, ECC for public-key crypto; AES, SHA for symmetric encryption and hashing) derive their security from problems that are intractable for classical computers. For instance, RSA’s security relies on the difficulty of factoring large integers, and elliptic-curve cryptography relies on discrete logarithms – tasks that would take classical computers astronomically long to solve. Symmetric ciphers like AES are secure against brute force as long as keys are sufficiently long (128-bit, 256-bit, etc.), which even the fastest classical computers cannot exhaustively search in realistic time. This has created a strong cryptographic barrier safeguarding data in transit and at rest.

Quantum computing changes the game. Shor’s algorithm, running on a future cryptographically relevant quantum computer (CRQC), can efficiently factor large integers and solve discrete log problems – meaning it could break RSA, DSA, DH, ECDH, ECDSA, and other public-key systems that underpin secure websites, VPNs, digital signatures, and more. Meanwhile, Grover’s algorithm can quadratically speed up brute-force search, effectively cutting the security of symmetric keys in half. For example, Grover’s algorithm could make an $$n$$-bit key as weak as a $$(n/2)$$-bit key: AES-128 would only provide ~64 bits of security, and AES-256 would be reduced to ~128 bits. In practice, this means using stronger symmetric keys (256-bit) and larger hashes (384-bit or 512-bit) to maintain an adequate security margin against quantum attacks. Indeed, the U.S. National Security Agency’s Commercial National Security Algorithm Suite (CNSA) already mandates AES-256 and SHA-384 for high-security systems, to ensure a quantum adversary faces on the order of $$2^{128}$$ operations – a workload deemed astronomical even for quantum computers.
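To make the arithmetic concrete: Grover’s search needs on the order of $$\sqrt{2^n} = 2^{n/2}$$ key trials for an $$n$$-bit key, whereas Shor’s algorithm runs in polynomial time no matter how large the RSA or ECC key is. The Python sketch below encodes that rule of thumb. The algorithm labels and the choice to model Shor-vulnerable schemes as 0 bits are illustrative assumptions, and the sketch ignores the large practical overheads of running Grover at scale.

```python
# Rule-of-thumb security levels against a quantum adversary:
# Grover roughly halves the bit strength of a symmetric key, while Shor breaks
# RSA and elliptic-curve schemes outright on a CRQC (modelled here as 0 bits).
SYMMETRIC_KEY_BITS = {"AES-128": 128, "AES-192": 192, "AES-256": 256}
SHOR_VULNERABLE = {"RSA-2048", "RSA-3072", "ECDH-P256", "ECDSA-P384"}


def quantum_security_bits(algorithm: str) -> int:
    """Approximate security level (in bits) against a quantum adversary."""
    if algorithm in SHOR_VULNERABLE:
        return 0  # polynomial-time attack: a bigger key does not save you
    if algorithm in SYMMETRIC_KEY_BITS:
        # Grover needs about sqrt(2^n) = 2^(n/2) trials for an n-bit key.
        return SYMMETRIC_KEY_BITS[algorithm] // 2
    raise KeyError(f"no rule for {algorithm!r}")


for name in ["AES-128", "AES-256", "RSA-3072"]:
    bits = quantum_security_bits(name)
    print(f"{name}: " + (f"~{bits} bits" if bits else "broken by Shor"))
```

Running it prints `AES-128: ~64 bits`, `AES-256: ~128 bits`, and `RSA-3072: broken by Shor`, which is the reasoning behind the AES-256 recommendation above.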

Two further critical aspects of the quantum threat are the “harvest now, decrypt later” (HNDL) and “trust now, forge later” (TNFL) attack modes. Under HNDL, adversaries (including nation-state intelligence agencies and advanced cybercriminal groups) are likely stealing encrypted data today and storing it, with the intent to decrypt it once they have a quantum capability. Encrypted files or intercepted network traffic that might be indecipherable now could become valuable intel in a decade. This especially concerns data that remains sensitive for a long time, e.g. personal data, trade secrets, intellectual property, and diplomatic or military communications. There is credible evidence that some state-sponsored actors are already harvesting troves of encrypted traffic from critical infrastructure backbones, financial networks, and government systems, anticipating that they can unlock those secrets in the future. This is why U.S. guidance such as NSM-10 explicitly calls out data “that must remain sensitive through 2035” as a top prioritization criterion for migration to PQC.

In response to these threats, governments and standards bodies are issuing urgent directives. NIST’s post-quantum cryptography project selected new algorithms (for example, CRYSTALS-Kyber for key exchange and CRYSTALS-Dilithium for digital signatures, among others) and in August 2024 published the first final standards based on them: FIPS 203 (ML-KEM, derived from Kyber), FIPS 204 (ML-DSA, derived from Dilithium), and FIPS 205 (SLH-DSA, derived from SPHINCS+). A NIST draft guidance document (NIST IR 8547) proposes that quantum-vulnerable algorithms offering only 112-bit security, such as RSA-2048, be deprecated after 2030, and that all quantum-vulnerable public-key algorithms, including ECC P-256, be disallowed after 2035, effectively setting a deadline for organizations to transition. This timeline is not arbitrary: 2030 has been cited by many experts, me included, as a conservative estimate of when quantum computers might realistically begin to threaten 2048-bit RSA. In parallel, the U.S. Office of Management and Budget (OMB) issued Memo M-23-02 (November 2022) instructing federal agencies to prioritize their cryptographic inventory efforts on high-impact systems and sensitive data, with the goal of mitigating as much quantum risk as feasible by 2035. Other national strategies echo similar timelines: for example, the UK’s National Cyber Security Centre (NCSC) released a PQC migration roadmap with milestones at 2028 (planning and initial inventory), 2031 (early migrations for high-priority systems), and 2035 (complete transition). In short, the world is rallying to replace our current crypto arsenal before quantum computers arrive, but the challenge is massive, and time is not on our side.

A Pragmatic, Risk-Driven Approach

Given the practical challenges, organizations may need to begin their quantum-readiness journey with a risk-driven approach rather than a theoretically perfect one. The essence of this strategy is to focus limited resources where they matter most: addressing the highest quantum-vulnerability risks first and implementing interim safeguards for the rest. Even the U.S. government’s guidance recognizes the need for prioritization. For example, the federal memo mentioned above directs agencies to inventory high-impact systems, high-value assets (HVAs), and any systems containing data that must remain sensitive through 2035 before worrying about less critical systems. In other words, not all cryptographic assets pose equal risk, so a sensible plan is to triage and tackle them in order of importance. Below, I outline a sample phased risk-driven approach:

1. Identify High-Risk Systems and Data

Begin with a quantum risk assessment across your IT and OT environments. This doesn’t require knowing every algorithm in use; rather, it means surveying your systems, applications, and data to gauge which would have the most damaging impact if their cryptography were compromised by a quantum adversary. Key factors to consider include:

Sensitivity of Data

What data would be most devastating in the wrong hands? Prioritize systems protecting highly sensitive information – e.g. personal customer data, financial transactions, intellectual property, healthcare records, classified or national security data, etc. For example, a bank’s payment processing system or a government agency’s secure communication platform might rank as top priority. Data whose confidentiality or integrity is mission-critical should get quantum-safe protection first. If you handle information that would cause major harm if decrypted, flag those systems now.

Longevity of Security Needed

Quantum attacks pose a unique “harvest now, decrypt later” threat: attackers can steal encrypted data today and store it until they have a quantum computer to decrypt it in the future. Therefore, identify data that needs to remain confidential for many years. If your organization handles information that must stay secure into the 2030s and beyond (e.g. health records, long-term intellectual property, state secrets), those systems are higher risk. Regulators explicitly call out this criterion: if data recorded today will still be sensitive in 2035, the system handling it should be prioritized for post-quantum migration. For instance, archives of patient medical histories, or design blueprints for a product still a decade from market, should be among the first protected.

Besides “harvest now, decrypt later,” you should also consider “trust now, forge later” (TNFL), the integrity analogue to HNDL. TNFL warns that digital signatures we rely on today (for software updates, contracts, identities, and device commands) could be forged by future quantum adversaries, collapsing trust anchors and creating cyber‑physical, regulatory, and liability exposure.

Public Exposure and Threat Likelihood

Consider which cryptographic systems are exposed to potential attackers. Internet-facing applications using vulnerable crypto (public websites, APIs, VPN gateways, etc.) have a higher likelihood of being targeted or passively intercepted, compared to closed internal systems. Also weigh which systems are likely already under adversary surveillance. High-value targets (critical infrastructure, telecom networks, financial clearing houses, government networks) may have nation-state threat actors sniffing their traffic today. Indeed, security experts believe that nation-state APT groups are actively engaging in long-term espionage, intercepting and hoarding encrypted traffic from such targets. So, prioritize strengthening cryptography for externally exposed channels and high-value data links sooner rather than later. For example, ensure your public-facing web services, external VPN tunnels, and any sensitive data feeds that traverse untrusted networks are high on the quantum readiness list – those are prime candidates for “harvest now, decrypt later” attacks.

Known Weaknesses or Outdated Crypto

Identify any systems still using especially weak or deprecated cryptographic algorithms, as these represent “low-hanging fruit” for both classical and quantum attackers. For instance, any straggling use of RSA-1024, SHA-1, 3DES, or other obsolete algorithms should be high on the list to fix or isolate. Those algorithms are already on borrowed time against classical attackers, and a quantum adversary would only make matters worse. AES-128 is not obsolete in the same sense, but under Grover’s algorithm it would offer only about 64-bit security, so it belongs on the upgrade list as well. Remediating these yields an immediate security win. One large organization following a PQC roadmap scanned hundreds of applications and immediately flagged instances of 1024-bit RSA keys and 128-bit ciphers that needed urgent replacement. Upgrading to stronger classical crypto can buy time and provide interim security. For example, moving from AES-128 to AES-256 doubles the key length, which maintains an effective 128-bit security level even against Grover’s algorithm. Likewise, migrating any 2048-bit RSA keys to 3072 or 4096-bit RSA (or to elliptic curves like P-384) raises the quantum attack bar slightly: it’s not quantum-proof, but it forces an attacker to have a larger quantum computer to break it. The U.S. NSA’s CNSA guidance already requires 3072+ bit RSA or P-384 curves for sensitive systems, alongside the symmetric upgrades. While these measures are not substitutes for true PQC, they are pragmatic hardening steps that reduce your exposure in the interim.
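A sketch of how these triage rules could be applied to inventory findings, in Python. The `Finding` record, the obsolete-algorithm list, and the thresholds (RSA below 3072 bits, curves below P-384, AES below 256 bits, echoing the CNSA levels cited in this paragraph) are assumptions to adapt to your own policy.

```python
from typing import NamedTuple, Optional


class Finding(NamedTuple):
    algorithm: str     # e.g. "RSA", "ECC", "AES", "SHA-1", "3DES"
    key_bits: int = 0  # key size (curve size for ECC); 0 if not applicable


# Hypothetical list of algorithms to replace regardless of the quantum timeline.
OBSOLETE = {"SHA-1", "3DES", "DES", "RC4", "MD5"}


def remediation(f: Finding) -> Optional[str]:
    """Suggest an interim action for one inventory finding (None = no flag)."""
    alg = f.algorithm.upper()
    if alg in OBSOLETE or (alg == "RSA" and f.key_bits < 2048):
        return "replace now: weak even against classical attacks"
    if alg == "AES" and f.key_bits < 256:
        return "upgrade to AES-256 (~128-bit security under Grover)"
    if alg == "RSA" and f.key_bits < 3072:
        return "interim: move to RSA-3072+ or P-384; schedule PQC migration"
    if alg == "ECC" and f.key_bits < 384:
        return "interim: move to P-384; schedule PQC migration"
    if alg in {"RSA", "ECC"}:
        return "schedule PQC migration (Shor-vulnerable at any size)"
    return None


for finding in [Finding("RSA", 1024), Finding("AES", 128), Finding("RSA", 4096)]:
    print(finding, "->", remediation(finding))
```

Note that even the strongest RSA and ECC settings still map to a PQC migration item: the interim upgrades only buy time.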

By combining these factors, you can triage which systems need attention first. Document your findings in a “quantum risk register” or as an extension of your existing cybersecurity risk register. Many organizations find it useful to assign a quantum risk rating (High/Medium/Low) to each major system, reflecting a combination of the data sensitivity, exposure level, and cryptographic strength. This helps communicate to stakeholders where immediate mitigations are needed. Crucially, it also justifies focusing efforts (and budget) on a manageable subset of systems initially, rather than attempting an all-at-once overhaul. This step mirrors much of the government guidance: focus on your most sensitive systems first.
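One way to keep ratings consistent is to compute them from the factors above plus the 2035 sensitivity horizon. This Python sketch uses 1-to-3 scales, a +2 bump for long-lived data, and High/Medium/Low cut-offs that are all arbitrary assumptions; the system names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SystemRisk:
    name: str
    sensitivity: int      # 1 = low .. 3 = crown-jewel data
    exposure: int         # 1 = isolated internal .. 3 = internet-facing
    crypto_weakness: int  # 1 = PQC/strong symmetric .. 3 = deprecated algorithms
    sensitive_until: int  # year after which the protected data no longer matters

    def score(self, horizon_year: int = 2035) -> int:
        total = self.sensitivity + self.exposure + self.crypto_weakness
        if self.sensitive_until >= horizon_year:
            total += 2  # long-lived data: "harvest now, decrypt later" exposure
        return total

    def rating(self) -> str:
        s = self.score()
        if s >= 9:
            return "High"
        if s >= 6:
            return "Medium"
        return "Low"


register = [
    SystemRisk("payments-api", 3, 3, 2, 2040),
    SystemRisk("hr-archive", 3, 1, 2, 2060),
    SystemRisk("intranet-wiki", 1, 1, 2, 2026),
]
for system in sorted(register, key=lambda s: s.score(), reverse=True):
    print(system.name, system.score(), system.rating())
```

Here the internet-facing payments API scores 10 (High), the internal but long-lived HR archive 8 (Medium), and the short-lived wiki 4 (Low). Keeping the weights explicit makes it easy to defend, or revise, the ordering in front of stakeholders.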

2. Perform a Focused Crypto Inventory (Where It Counts)

With high-risk systems identified, narrow the cryptographic inventory effort to these priority areas first. Rather than scanning everything everywhere, start by mapping the cryptography in your most critical systems and applications. For example, if your analysis flags the corporate VPN and customer-facing web portal as top priorities, perform targeted discovery on those: What cryptographic libraries and protocols do they use (e.g. OpenSSL, TLS 1.2/1.3 with which ciphersuites)? Are they using RSA or ECDHE for key exchange? What certificate authorities (CAs) and certificates are involved? What are the key lengths and algorithms for any data at rest encryption on those systems? By drilling down into a limited set of systems, you can get a clear picture of their cryptographic posture relatively quickly.

Performing a focused inventory like this is far more tractable than an enterprise-wide discovery, and it yields immediate actionable insights. You’ll pinpoint exactly which algorithms need replacement (or which software components need upgrades) on those high-impact systems. One practical tip is to leverage any automated tooling you have (or can acquire) to scan code, binaries, and configurations for cryptographic usage – but target the scan to the critical applications identified in Step 1. Many modern tools (often leveraging concepts like a Cryptography Bill of Materials, or CBOM) can output a report of the algorithms, key lengths, and libraries a given application uses. This helps ensure you don’t overlook a hardcoded cipher or a bundled third-party library within the application. It essentially produces an inventory of crypto components for that system, which is invaluable for planning the remediation. (In fact, NIST and industry groups have championed integrating crypto discovery into the software BOM process; a CBOM provides exactly this kind of detail to aid PQC migration.)
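For the code-level part of a focused inventory, even a crude scanner pointed at one critical application can surface hardcoded algorithms. The Python sketch below is a hypothetical stand-in for a real discovery or CBOM tool, not a replacement: the pattern list and file suffixes are assumptions, and it only matches algorithm names in source text, so it will miss some usages and flag some false positives.

```python
import re
from pathlib import Path
from typing import Iterable, List, NamedTuple

# Hypothetical, deliberately small pattern set; real tools also inspect
# binaries, configs, certificates, and dependency manifests.
PATTERNS = {
    "RSA": re.compile(r"\bRSA\b", re.IGNORECASE),
    "ECC": re.compile(r"\bECDSA\b|\bECDHE?\b|\bsecp\d{3}r1\b|\bprime256v1\b", re.IGNORECASE),
    "SHA-1": re.compile(r"\bSHA-?1\b", re.IGNORECASE),
    "MD5": re.compile(r"\bMD5\b", re.IGNORECASE),
    "DES/3DES": re.compile(r"\b(?:3DES|DESede|TripleDES|DES)\b", re.IGNORECASE),
}


class Hit(NamedTuple):
    path: str
    line: int
    label: str


def scan_text(path: str, text: str) -> List[Hit]:
    """Report every line that mentions a quantum-vulnerable or weak algorithm."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append(Hit(path, lineno, label))
    return hits


def scan_tree(root: str,
              suffixes: Iterable[str] = (".py", ".java", ".go", ".c", ".conf")) -> List[Hit]:
    """Scan only the files of one critical application, not the whole estate."""
    suffixes = tuple(suffixes)
    hits = []
    for p in Path(root).rglob("*"):
        if p.is_file() and p.suffix in suffixes:
            hits.extend(scan_text(str(p), p.read_text(errors="ignore")))
    return hits


sample = (
    "key = rsa.generate_private_key(public_exponent=65537, key_size=2048)\n"
    "h = hashlib.sha1(data).hexdigest()\n"
)
for hit in scan_text("app.py", sample):
    print(hit)
```

In practice you would call something like `scan_tree("/srv/payments-api")` (a hypothetical path) for each application flagged in Step 1 and feed the hits into that system’s inventory entry.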

In performing this limited-scope inventory, give special attention to key infrastructure components that can introduce widespread risk. For instance, PKI and certificate systems are often linchpins: if your root CA, internal PKI, or identity federation system is using quantum-vulnerable algorithms, that risk cascades across the organization (since it underpins trust for many other services). Similarly, IoT devices or embedded systems might use hardcoded RSA/ECC that is difficult to update later, so cataloging their cryptographic status early is important. By scoping your discovery to critical areas, you can much more quickly establish a partial cryptographic inventory that covers perhaps the top 10–20% of systems that carry 80–90% of the quantum risk. This then forms the foundation of your migration roadmap. Even a partial inventory focused on high-value systems can be turned into a concrete action plan that significantly reduces your risk exposure and satisfies many examiners.

3. Prioritize and Plan Incremental Migration

With a clear view of where your highest risks lie, develop an incremental migration plan focusing on those areas. The plan should lay out which systems to tackle first, what quantum-safe solutions or mitigations to apply, and the timeline and dependencies for each. For example, you might designate Phase 1 to upgrade the cryptography in your external network gateways (VPNs, TLS terminators) and internal PKI, Phase 2 to address all public-facing web applications and APIs, Phase 3 for secondary internal applications and IoT devices, and so on. This phased approach aligns with guidance from standards bodies. The UK NCSC, for instance, suggests a multi-year roadmap with milestones: discovery and initial planning by 2028, early migration of the highest-priority services by 2031, then broadening out to full coverage by 2035. By planning in phases, you ensure that the most urgent vulnerabilities get mitigated first, and you also create breathing room to adjust as standards and technologies evolve.

Importantly, communicate this prioritized roadmap to senior leadership to set expectations and secure support. Emphasize that this is a risk-based prioritization – you are addressing the worst quantum-exposure risks immediately, rather than waiting until you can do everything perfectly. This approach resonates with business stakeholders because it aligns with treating quantum security as a business risk issue: we’re protecting the “crown jewels” first. It can help justify budget and resources, as executives will see a clear plan that protects critical assets and keeps the organization ahead of regulatory mandates. (Increasingly, regulators are expecting to see such plans. For example, U.S. federal agencies must report their progress on PQC migration planning and funding annually.)

As you implement the plan, remain flexible and monitor developments in the PQC landscape. NIST published its first PQC standards in 2024, but the landscape is still evolving (e.g. new algorithms like HQC being added, or refined guidance on hybrid modes). Crypto-agility, the capacity to swap out cryptographic components without massive disruption, should be a guiding principle. Many organizations are, in parallel, modernizing their crypto infrastructure: upgrading libraries to versions that support PQC (even if not turned on yet), building test environments for post-quantum algorithms, and training engineers on PQC concepts. These efforts will pay dividends as they shorten the response time if, say, you need to pivot to a different algorithm or integrate a new standard down the line.
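Crypto-agility is largely an architectural habit: code asks a central policy for “the current algorithm for this purpose” and records which algorithm produced each output, so a later swap becomes a configuration change. The Python sketch below illustrates the pattern with standard-library hash functions, because PQC algorithms are not in the standard library; the policy names are hypothetical, and the same indirection would apply to KEMs and signature schemes.

```python
import hashlib
import hmac

# Hypothetical central policy: callers name a purpose, never an algorithm.
POLICY = {"integrity": "sha384"}
# Algorithms still accepted when verifying older data during a transition.
ACCEPTED = {"sha256", "sha384", "sha512", "sha3_512"}


def tagged_digest(data: bytes, purpose: str = "integrity") -> str:
    """Hash with the currently configured algorithm and record which one was used."""
    alg = POLICY[purpose]
    return f"{alg}:{hashlib.new(alg, data).hexdigest()}"


def verify_tagged_digest(data: bytes, tagged: str) -> bool:
    """Verify using whichever (still accepted) algorithm the tag names."""
    alg, _, expected = tagged.partition(":")
    if alg not in ACCEPTED:
        return False  # retired or unknown algorithm: fail closed
    return hmac.compare_digest(hashlib.new(alg, data).hexdigest(), expected)


old = tagged_digest(b"firmware image")
POLICY["integrity"] = "sha3_512"  # the "swap": a config change, not a code change
new = tagged_digest(b"firmware image")
print(old.split(":")[0], new.split(":")[0], verify_tagged_digest(b"firmware image", old))
```

The final line prints `sha384 sha3_512 True`: new outputs use the new algorithm while data tagged under the old one still verifies until you remove it from `ACCEPTED`.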

In summary, by the end of this risk-driven prioritization phase, you should have: (a) a list of high-risk systems and their crypto inventory; (b) a phased roadmap for how you will address them and eventually cover the rest; and (c) interim goals (perhaps yearly or quarterly milestones) to measure progress. This approach ensures that quantum readiness isn’t an amorphous, impossible project, but a structured program where each step tangibly reduces risk.

Mitigation Strategies for At-Risk Systems

While executing the above risk-based plan, organizations should deploy a toolkit of mitigation strategies to protect systems that cannot be immediately upgraded or fully inventoried. These compensating controls help reduce quantum risk in the interim, buying time until full PQC migration is achieved. Think of this as shoring up the defenses of still-vulnerable areas so that even if they remain on classical crypto for a few more years, they’re harder to exploit or eavesdrop on. Below are several key strategies:

Isolate and Shield Vulnerable Systems

One straightforward risk mitigation is to isolate the systems that use vulnerable cryptography, especially if you cannot upgrade them quickly. By isolation, I mean using network architecture and access controls to minimize exposure of those systems to potential attackers. If, for example, an older database is using RSA-based encryption that can’t easily be changed, ensure that database is not directly accessible from the internet or high-risk network segments. Place it in a segmented network zone with strict firewall rules, so only specific application servers can communicate with it – ideally over encrypted channels that are themselves more secure. This way, it’s far less likely that an adversary could intercept its traffic or gain direct access to attempt cryptographic attacks. Essentially, if you can’t immediately fix the cryptography, reduce the attack surface around it.

Isolation can also mean limiting which protocols and clients are allowed to interact with a system, thereby preventing adversaries from exploiting weak crypto handshakes. Many organizations, in response to both classical and quantum threats, have been turning off or blocking obsolete cryptographic protocols at their boundaries. For instance, disabling older SSL/TLS versions (TLS 1.0 and 1.1) and disallowing ciphersuites that use RSA key exchange or old ciphers is a wise move. Most modern browsers and services have moved to TLS 1.2+ with ephemeral Diffie-Hellman key exchanges, which provide Perfect Forward Secrecy (PFS). By enforcing this (for example, configuring your web servers or VPN concentrators to reject any non-PFS ciphers), you ensure there’s no long-term key that an attacker can steal and later use to decrypt past traffic. In a quantum context, PFS is still vulnerable (a quantum computer could solve the discrete log of an ECDH exchange), but it removes one easy attack vector – an attacker can no longer simply record traffic and later compromise a server’s RSA private key to decrypt it. All sessions would have used ephemeral session keys that are not stored long-term. Thus, enforcing PFS buys some safety and also aligns with best practices. As a specific example, many enterprises have already phased out static RSA handshakes entirely; TLS 1.3, which is PFS-only, is strongly recommended wherever feasible.
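As a minimal illustration of enforcing PFS at a server, here is a configuration sketch using Python’s `ssl` module: TLS 1.2 is the floor, and TLS 1.2 suites are restricted to ECDHE key exchange with AEAD ciphers, so static-RSA key transport cannot be negotiated. TLS 1.3 suites always use ephemeral (EC)DHE and are not affected by `set_ciphers()`. The certificate paths are placeholders.

```python
import ssl
from typing import Optional


def make_pfs_server_context(certfile: Optional[str] = None,
                            keyfile: Optional[str] = None) -> ssl.SSLContext:
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse SSL 3.0, TLS 1.0 and TLS 1.1 outright.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # For TLS 1.2: only ECDHE key exchange with AES-GCM or ChaCha20-Poly1305,
    # so no long-term RSA key can decrypt recorded sessions.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)  # placeholder paths
    return ctx


ctx = make_pfs_server_context()
for suite in ctx.get_ciphers():
    print(suite["protocol"], suite["name"], suite.get("kea"))
```

Setting `ctx.minimum_version = ssl.TLSVersion.TLSv1_3` instead gives the TLS 1.3-only posture recommended above, wherever all clients support it.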

Another tactic is deploying application-layer proxies or gateways in front of legacy systems as cryptographic shields. These proxies can act as a translator or broker, speaking secure protocols to the outside even if the backend is stuck on something weaker. For example, suppose you have an IoT endpoint or an older application that only supports a deprecated cipher. You could place a gateway that terminates a modern, strong TLS 1.3 connection from the client, and then connects to the legacy system separately. To the outside world, the communication is protected with (say) an ECDHE AES-256-GCM cipher, and only within the secure enclave behind the proxy does it downgrade to the weaker cipher for the legacy device. This effectively firewalls the weak crypto, containing it to a controlled internal link. An analogy is how enterprise email gateways perform TLS encryption: they decrypt inbound email at the edge and then re-encrypt it when forwarding internally. Here, you’re doing the same concept for any protocol – creating a secure wrapper at the boundary. While this doesn’t eliminate the weakness (the internal leg is still vulnerable if an attacker penetrated that far), it removes the weak link from direct exposure on untrusted networks.
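To make the gateway pattern concrete, here is a Python `asyncio` sketch of a TLS-terminating relay: it accepts only TLS 1.3 from clients and forwards the decrypted bytes to a legacy backend over a separate inner connection (plaintext here; a legacy-TLS client context could be passed to `open_connection` instead). The addresses and certificate file names are hypothetical, and a production gateway would also need timeouts, logging, and access control.

```python
import asyncio
import ssl
from typing import Optional

# Hypothetical addresses: the listener faces untrusted networks; the backend
# sits in an isolated segment that only this gateway can reach.
LISTEN_HOST, LISTEN_PORT = "0.0.0.0", 8443
BACKEND_HOST, BACKEND_PORT = "10.0.5.20", 8080


def make_frontend_context(certfile: Optional[str] = None,
                          keyfile: Optional[str] = None) -> ssl.SSLContext:
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3  # strong crypto on the outer leg
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)
    return ctx


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then close the destination."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


async def handle_client(client_r: asyncio.StreamReader,
                        client_w: asyncio.StreamWriter) -> None:
    try:
        backend_r, backend_w = await asyncio.open_connection(BACKEND_HOST, BACKEND_PORT)
    except OSError:
        client_w.close()
        return
    # Inner leg: plaintext (or weak legacy crypto) confined to the isolated zone.
    await asyncio.gather(pipe(client_r, backend_w), pipe(backend_r, client_w),
                         return_exceptions=True)


async def main(certfile: str, keyfile: str) -> None:
    server = await asyncio.start_server(handle_client, LISTEN_HOST, LISTEN_PORT,
                                        ssl=make_frontend_context(certfile, keyfile))
    async with server:
        await server.serve_forever()


# asyncio.run(main("gateway.crt", "gateway.key"))  # placeholder file names
```

The design choice is deliberate separation: the only hop exposed to untrusted networks is the TLS 1.3 listener, and the weak leg exists only between the gateway and the backend inside the segmented zone.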

Isolation also extends to access management. Under a zero-trust mindset, assume that at some point an attacker will get onto your network, so add extra controls around legacy cryptosystems. Implement strict identity verification and device authentication for any user or system that tries to access a sensitive application – even inside the perimeter. Use multi-factor authentication and continuous monitoring so that even if encryption were broken, an attacker would additionally need valid credentials (which hopefully trigger alerts if abused). In short, don’t rely solely on encryption for security. If you can’t fully trust the encryption, compensate with layered defenses: limit access pathways, require authentication at multiple steps, and closely monitor interactions with the vulnerable system.

Add Quantum-Safe Layers (“Encapsulate” Communications)

While working toward replacing vulnerable algorithms within systems, another approach is to encapsulate those systems’ communications inside an additional quantum-safe tunnel. Think of this as “encryption on top of encryption”: an outer layer that is quantum-resistant, protecting the inner (possibly weaker) layer. This technique can be implemented with emerging quantum-safe network solutions. For instance, some vendors offer quantum-safe VPNs or appliances that perform a post-quantum key exchange to create a secure tunnel between two sites, within which you run your normal TLS or IPsec traffic. The outer tunnel uses newly standardized PQC algorithms (such as NIST’s CRYSTALS-Kyber, standardized as ML-KEM in FIPS 203, for key establishment, or hybrid modes that combine classical and PQC key exchange) so that even if an attacker records the traffic, they cannot later break the outer layer, even with a quantum computer, because it is designed to resist quantum attacks. This effectively “buys time” for the inner legacy encryption: even if the inner TLS or SSH or database encryption might be broken later, an eavesdropper would first have to break the outer PQC wrapper, which is not known to be breakable by quantum attacks.
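The “hybrid mode” idea can be sketched in a few lines: derive the tunnel key from both a classical and a post-quantum shared secret, so an attacker has to break both exchanges. This Python sketch implements HKDF-SHA256 (RFC 5869) with the standard library and uses a simple concatenate-then-KDF combiner. The random byte strings are placeholders for real ECDH and ML-KEM outputs (the standard library has no ML-KEM), and real protocols, such as hybrid key exchange in TLS 1.3, define their own exact combining rules.

```python
import hashlib
import hmac
import os

HASH_LEN = 32  # SHA-256 output size in bytes


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a PRK, then expand it."""
    if length > 255 * HASH_LEN:
        raise ValueError("HKDF output too long")
    prk = hmac.new(salt or bytes(HASH_LEN), ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def hybrid_session_key(classical_secret: bytes, pq_secret: bytes, context: bytes) -> bytes:
    # Both secrets feed one KDF, so (under standard assumptions about HKDF) the
    # key stays unpredictable as long as either exchange remains unbroken.
    return hkdf_sha256(classical_secret + pq_secret, salt=b"", info=context, length=32)


# Stand-ins only: real values would come from an ECDH exchange and an ML-KEM
# decapsulation performed by a PQC-capable library.
ecdh_secret = os.urandom(32)
mlkem_secret = os.urandom(32)
key = hybrid_session_key(ecdh_secret, mlkem_secret, b"site-a<->site-b tunnel v1")
print(key.hex())
```

The `context` label binds the derived key to one specific tunnel, so the same pair of secrets cannot silently be reused for a different purpose.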

A concrete example is Quantum Xchange’s Phio TX system (note: I’m not endorsing any specific products when I mention them. Just illustrating concepts). It’s essentially an overlay network that delivers encryption keys via a quantum-safe method, parallel to your existing channels. In one deployment mode, Phio TX creates a separate, PQC-protected AES tunnel that parallels the normal TLS connection. The TLS session keys are supplemented (or even replaced) by keys delivered out-of-band through this secure channel. An eavesdropper tapping the line would see the AES-encrypted outer tunnel, for which the key exchange was done via a PQC algorithm or even quantum key distribution (QKD). Thus, the content (including the inner TLS handshake and data) is inaccessible without breaking the PQC layer. In effect, the solution makes the traditional encryption “quantum-safe” by wrapping it in an outer shield. If an adversary tries harvest-and-decrypt, they face the outer layer’s protection. Products like this often advertise that they can make your existing network “quantum-ready” without waiting – and indeed, it’s a form of defense-in-depth for crypto.

Organizations can start adopting such quantum-safe overlay tunnels for particularly sensitive links. For example, if you have two data centers replicating sensitive data, and currently rely on classical VPN encryption, you could deploy a quantum-safe tunneling appliance at each end to add that extra layer. Similarly, when setting up new dedicated network links or inter-office connections, consider solutions that support PQC-based key exchanges. Early adopters in industry (banks, cloud providers, telecoms) have already piloted PQC in TLS libraries and VPN products. Public experiments with hybrid post-quantum key exchange date back to Google’s CECPQ1 (2016) and the Google/Cloudflare CECPQ2 trial (2019), and by 2023–2024 companies like Cloudflare, Google, AWS, and IBM were piloting or deploying hybrid post-quantum TLS 1.3, while standards for incorporating PQC into protocols continue to mature. If you keep an eye on these developments, you can leverage them in your environment as they become stable. The goal is that even if an application’s own crypto isn’t yet quantum-resistant, the channel it communicates over can be made quantum-resistant, greatly mitigating the “harvest now, decrypt later” risk for data in transit.

Beyond tunnels, consider “cryptographic gateways.” These are specialized proxies or middleware that handle cryptographic operations on behalf of less-capable endpoints. A gateway could, for instance, sit in front of an IoT device that only speaks RSA, and handle a post-quantum TLS handshake with the client, then feed the IoT device the symmetric key through a secure local link. Or a gateway might accept a connection from a legacy client using classical TLS, and re-initiate it to a server using a PQC cipher suite (translating in the middle). This intermediary approach is recommended by experts as a compatibility bridge: implementing PQC in a layered manner, using intermediaries, can allow you to introduce quantum-safe algorithms without waiting for every single application and device to support them natively. In essence, gateways and tunnels act as quantum-safe “translators”, allowing you to protect data in transit now, even if the end systems will only be upgraded later.

One caution: when adding layers of encryption or intermediary steps, be mindful of performance and latency, as well as any regulatory considerations (e.g. you might need to ensure the gateway meets certain cryptographic module standards if it’s handling keys). Test these solutions in a pilot before broad rollout. But from a risk perspective, layering encryption is a robust strategy – it’s similar to how we layer defenses in other domains (firewalls, IDS, etc.), except here we layer ciphers.

Enforce Strong Cryptographic Policies and Monitoring

Another important aspect of a risk-driven approach is adapting your security monitoring and policies to the quantum threat. While we might not have specific “quantum attack” alerts yet (since no one is actively decrypting traffic with a quantum computer at this moment), we can bolster our telemetry and defenses in ways that anticipate quantum-related tactics:

Monitor for Unusual Bulk Data Egress

A hallmark of “harvest now, decrypt later” campaigns is the theft of large quantities of encrypted data. Attackers might quietly exfiltrate databases, file servers, or enormous sets of network traffic logs, then bide their time. Your SOC should already be watching for large data exfiltration, but it’s worth tuning those systems to be extra sensitive to unusual encrypted data flows. For example, if you suddenly observe an internal process or user account exporting gigabytes of data (especially if it’s encrypted archives or database dumps), that should raise a red flag. According to cybersecurity stats, over 70% of ransomware attacks now include data exfiltration before the data is encrypted in place; attackers are already in the habit of stealing data for leverage. For quantum threats, the motivation is different (future decryption rather than extortion), but the behavior might look similar. Implement Data Loss Prevention (DLP) rules and anomaly detection to catch things like “user X usually transfers ~100 MB a day, but today transferred 50 GB of data from a sensitive share.” While not every large transfer is malicious, err on the side of caution. It’s far easier to investigate and find it was a backup job than to miss it and discover years later that your entire customer database was siphoned. The key is to detect and stop the harvesting phase if at all possible, since you won’t have an opportunity to stop the decryption phase once quantum capabilities are available.
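The per-user baseline check described above (“usually ~100 MB a day, today 50 GB”) is simple to prototype before encoding it in a SIEM or DLP rule. A Python sketch, assuming you can export daily outbound byte counts per account; the account names, the median baseline, the 10x multiplier, and the 1 GB floor are all assumptions to tune.

```python
from statistics import median
from typing import Dict, List

GB = 1_000_000_000


def flag_bulk_egress(history: Dict[str, List[int]], today: Dict[str, int],
                     multiplier: float = 10.0, floor: int = 1 * GB) -> List[str]:
    """Return accounts whose egress today far exceeds their own baseline.

    history maps account -> past daily outbound byte counts;
    today maps account -> bytes sent today.
    """
    flagged = []
    for account, sent in today.items():
        past = history.get(account, [])
        baseline = median(past) if past else 0  # median resists one-off spikes
        if sent > max(baseline * multiplier, floor):
            flagged.append(account)
    return flagged


history = {"user-x": [100_000_000] * 30, "backup-svc": [60 * GB] * 30}
today = {"user-x": 50 * GB, "backup-svc": 62 * GB}
print(flag_bulk_egress(history, today))  # ['user-x']
```

Comparing each account with its own history is what keeps the nightly 60 GB backup job quiet while the user who normally moves 100 MB trips the alert.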

Keep Tabs on Cryptographic Systems and Assets

Treat your cryptographic keys and certificates as the high-value targets they are. Monitor your certificate authorities (public or private) for any suspicious activity, such as unauthorized issuance of certificates (which could indicate someone attempting a man-in-the-middle attack with a fake cert) or unusual access to CA signing keys. If an attacker can steal your private CA key or trick your PKI, they might not need to break the crypto; they could simply issue themselves valid credentials. Also, log and watch the usage of encryption libraries and modules. If an internal system suddenly starts using an outdated SSL library or an insecure cipher suite (perhaps due to a misconfiguration or downgrade attack), generate alerts. Modern SIEMs can be tuned to flag handshake anomalies, such as a client and server negotiating a cipher that's below your policy standard. Even if you temporarily allow a weak algorithm for compatibility, make sure you have visibility whenever it's used. Over time you want to eliminate those exceptions, but during the transition, visibility is vital. Consider tagging systems in your CMDB with their crypto risk level (from Step 1) and then monitoring for any changes to, or access to, those systems.
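
The handshake-anomaly check can be illustrated with a small audit over handshake logs. This sketch assumes a hypothetical log record with `host`, `version` and `cipher` fields, using OpenSSL-style names like those Python's `ssl` module reports. The policy itself (TLS 1.2 or later, forward secrecy on TLS 1.2, no legacy ciphers) and the substring matching are illustrative assumptions, not a SIEM rule language. The output is a per-host count, which gives you visibility into temporarily allowed weak algorithms.

```python
from collections import Counter

# Illustrative policy; adjust to your own standard.
ALLOWED_VERSIONS = {"TLSv1.2", "TLSv1.3"}
# Crude substring markers for legacy ciphers in OpenSSL-style names.
WEAK_MARKERS = ("NULL", "EXPORT", "RC4", "DES", "MD5")


def violations(record):
    """List the policy violations for one handshake log record (hypothetical format)."""
    reasons = []
    version, cipher = record["version"], record["cipher"]
    if version not in ALLOWED_VERSIONS:
        reasons.append(f"protocol {version} below policy")
    # OpenSSL names TLS 1.2 forward-secret suites with an ECDHE- or DHE- prefix.
    if version == "TLSv1.2" and not cipher.startswith(("ECDHE-", "DHE-")):
        reasons.append("no forward secrecy (non-ephemeral key exchange)")
    if any(marker in cipher for marker in WEAK_MARKERS):
        reasons.append("weak cipher")
    return reasons


def audit(records):
    """Count violations per (host, reason) so weak usage stays visible."""
    counts = Counter()
    for rec in records:
        for reason in violations(rec):
            counts[(rec["host"], reason)] += 1
    return counts


logs = [
    {"host": "app01", "version": "TLSv1.3", "cipher": "TLS_AES_256_GCM_SHA384"},
    {"host": "legacy-erp", "version": "TLSv1.2", "cipher": "AES128-GCM-SHA256"},
    {"host": "legacy-erp", "version": "TLSv1.2", "cipher": "AES128-GCM-SHA256"},
    {"host": "printer7", "version": "TLSv1", "cipher": "DES-CBC3-SHA"},
]

for (host, reason), n in sorted(audit(logs).items()):
    print(host, reason, n)
```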

Intrusion Prevention with Crypto Enforcement

Many next-gen firewalls and intrusion prevention systems (IPS) have features to enforce cryptographic policies on the fly. For instance, if you have an enterprise proxy or firewall that can inspect TLS handshakes (via trusted CA certs or in a TLS termination mode), you can configure it to block disallowed algorithms. You might set a rule: “Deny any SSL/TLS connection that tries to use RSA key exchange or any cipher below 128-bit strength.” This means even if a legacy server or rogue device tries to communicate with an insecure cipher, the connection won't be allowed. You're essentially “virtually patching” cryptographic weaknesses by making them non-negotiable on your network. Similarly, ensure only strong protocols are enabled: no SSH v1, no older IPsec transforms, etc. If you can't outright disable these on the endpoints, an IPS can sometimes detect and block them. As PQC algorithms become standardized in protocols, you can update these policies to allow them (or even require them for certain high-security communications). In the meantime, focus on eliminating known-weak crypto. A practical example: a company configured its network monitors to block any TLS handshake not using TLS 1.3 or TLS 1.2 with PFS, effectively banning the old RSA handshakes. This way, even if a server were misconfigured, an active attacker couldn't exploit it to force a weak cipher without setting off alarms or being blocked. Keep in mind that PFS protects past sessions against later theft of a server's private key; it does not protect recorded traffic against a future quantum attacker, because the ephemeral (EC)DH exchange itself is vulnerable to Shor's algorithm. Closing that gap requires PQC or hybrid key exchange.
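
Firewall and IPS rule syntax is vendor-specific, but the same "TLS 1.3, or TLS 1.2 with PFS only" rule can be expressed wherever you control a TLS endpoint or a TLS-terminating proxy. The sketch below uses Python's standard `ssl` module. The function name `make_pfs_only_context` and the exact OpenSSL cipher string are example choices, not a recommended baseline for every environment.

```python
import ssl


def make_pfs_only_context(server_side=False):
    """Build a TLS context that refuses TLS < 1.2 and non-PFS TLS 1.2 suites."""
    protocol = ssl.PROTOCOL_TLS_SERVER if server_side else ssl.PROTOCOL_TLS_CLIENT
    ctx = ssl.SSLContext(protocol)
    # Reject SSLv3, TLS 1.0 and TLS 1.1 outright.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # TLS 1.2: only ephemeral ECDHE key exchange with AEAD ciphers.
    # TLS 1.3 suites are configured separately by OpenSSL and always use
    # ephemeral key exchange, so set_ciphers() does not affect them.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20:!aNULL")
    return ctx


ctx = make_pfs_only_context()
for c in ctx.get_ciphers():
    print(c["protocol"], c["name"], c["kea"], c["strength_bits"])
```

Listing the enabled suites with `get_ciphers()` is a quick way to confirm that no static-RSA (`kx-rsa`) suites or sub-128-bit ciphers survived the policy before you deploy it.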

Continuous Discovery and Auditing

Finally, institute an ongoing process to update your cryptographic inventory and risk assessment as you progress. The risk-driven approach is not a one-time, set-and-forget exercise; it requires revisiting and refining. Each time you mitigate a high-risk area, move down the list to the next priority. If new systems come online or you undergo digital transformation projects, include crypto review as part of that process. (For example, if you're adopting a new SaaS platform, ask the vendor what crypto algorithms it uses and whether it has a PQC transition plan. If you're developing new software, build in crypto-agility from day one.) You should integrate quantum readiness checks into architecture reviews, procurement criteria, and vendor management. Some organizations have started adding contract language that requires vendors to upgrade to PQC within a certain timeframe once standards stabilize. Also, take advantage of industry collaboration: many sectors have working groups or information-sharing on quantum migration challenges (e.g. the GSMA Post-Quantum Telco Taskforce for telecommunications). Learn from peers and share tools or techniques (some open-source tools for scanning crypto in code have emerged, for instance). Over time, these incremental efforts (monitoring, enforcement, and continuous inventory) will shrink the unknown areas and give you increasing confidence in your quantum readiness posture.
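
Code-scanning tools for crypto discovery largely start from pattern matching over source trees. The sketch below shows that starting point. The pattern table, the extension list and `scan_tree` are hypothetical. Regex matching will flag comments and miss cryptography buried in binaries, dependencies or runtime configuration, so treat it as a way to seed the continuous inventory, not as a complete discovery tool.

```python
import os
import re
import tempfile

# Illustrative patterns for quantum-vulnerable public-key algorithms.
PATTERNS = {
    "RSA": re.compile(r"\brsa\b", re.IGNORECASE),
    "ECC (ECDSA/ECDH)": re.compile(
        r"\b(ecdsa|ecdhe?|secp256[kr]1|prime256v1|ed25519|x25519)\b", re.IGNORECASE
    ),
    "DSA": re.compile(r"\bdsa\b", re.IGNORECASE),
    "Diffie-Hellman": re.compile(r"\b(diffie[-_ ]?hellman|dh_generate\w*)\b", re.IGNORECASE),
}
EXTENSIONS = (".py", ".java", ".go", ".c", ".cpp", ".js", ".ts", ".conf", ".yaml")


def scan_tree(root, extensions=EXTENSIONS):
    """Return (path, line_number, algorithm_label) for every pattern hit under root."""
    findings = []
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            if not name.endswith(extensions):
                continue
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8", errors="ignore") as fh:
                for lineno, line in enumerate(fh, start=1):
                    for label, pattern in PATTERNS.items():
                        if pattern.search(line):
                            findings.append((path, lineno, label))
    return findings


# Demo on a throwaway directory containing one sample file.
with tempfile.TemporaryDirectory() as tmp:
    sample = os.path.join(tmp, "client.py")
    with open(sample, "w", encoding="utf-8") as fh:
        fh.write("from cryptography.hazmat.primitives.asymmetric import rsa\n")
        fh.write("timeout = 30\n")
        fh.write("CURVE = 'secp256r1'\n")
    demo_findings = [(os.path.basename(p), n, label) for p, n, label in scan_tree(tmp)]

print(demo_findings)
```

Running a scan like this in CI and diffing the results against the last run is one simple way to catch new quantum-vulnerable usage as systems come online.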

Building Toward Full Quantum Readiness

By following a risk-driven approach, an organization can make tangible progress on quantum readiness without being paralyzed by the enormity of a full inventory and migration. You will have secured your most sensitive assets against the looming quantum threat and put compensating controls around the weaker points. This not only reduces actual risk, but also buys time for the organization to eventually tackle the harder, long-tail issues. It’s important to recognize that the end-state does need to be a comprehensive migration to quantum-resistant cryptography across the board – regulators and standards bodies worldwide expect no less by the early 2030s. The difference with a risk-driven approach is that you are sequencing and staging that migration smartly, rather than attempting it all at once.
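
One widely used heuristic for sequencing that migration is Mosca's inequality. If x (how long data must stay confidential) plus y (how long it takes to migrate the system) exceeds z (the years until a cryptographically relevant quantum computer exists), that data is already exposed to harvest-now-decrypt-later. The sketch below ranks systems by the margin x + y − z. The system names, their estimates and the 5-year value for z are purely illustrative assumptions, not forecasts.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    shelf_life_years: float  # x: how long the data must stay confidential
    migration_years: float   # y: estimated time to move this system to PQC


def prioritize(systems, years_to_crqc):
    """Rank systems by Mosca margin x + y - z; a positive margin means exposed now."""
    scored = [
        (s.name, s.shelf_life_years + s.migration_years - years_to_crqc)
        for s in systems
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical estimates, for illustration only.
systems = [
    System("customer-records-db", shelf_life_years=10, migration_years=3),
    System("payment-gateway", shelf_life_years=2, migration_years=2),
    System("internal-wiki", shelf_life_years=1, migration_years=1),
    System("hr-archive", shelf_life_years=25, migration_years=4),
]

for name, margin in prioritize(systems, years_to_crqc=5):
    status = "EXPOSED" if margin > 0 else "ok for now"
    print(f"{name:22s} margin={margin:+.0f}  {status}")
```

Because z is uncertain, it is worth rerunning the ranking with a pessimistic and an optimistic value; systems that stay exposed under both belong at the front of the queue.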

Crucially, a risk-focused strategy should be coupled with efforts to increase crypto-agility and preparedness. While you mitigate immediate risks, also invest in modernizing your crypto infrastructure to make it more adaptable. This includes steps like: deploying libraries and hardware modules that can support new algorithms (even if not used yet), refactoring applications to centralize cryptographic functions (so they can be updated in one place), and establishing a governance framework for cryptographic changes. Frameworks like the Crypto Agility Risk Assessment Framework (CARAF) specifically emphasize developing the ability to swap out cryptographic components with minimal disruption. The NIST National Cybersecurity Center of Excellence (NCCoE) has a project on Migration to Post-Quantum Cryptography that provides example implementations for crypto-agility and hybrid solutions. By engaging with such resources, you can ensure that as new PQC standards emerge or existing ones are refined, your organization can adopt them relatively smoothly. For example, you might initially implement hybrid cryptographic modes (combining a classical algorithm with a PQC algorithm) in certain places to gain familiarity and ensure compatibility. Some early adopters are experimenting with hybrid certificates (certificates that contain both a classical and a PQC public key) so that either can be used to verify signatures. These kinds of interim steps not only reduce risk (by adding quantum resistance) but also build institutional knowledge and capability for the eventual full transition.
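
To make "hybrid cryptographic modes" concrete, here is a simplified key combiner. It concatenates a classical shared secret and a PQC shared secret and derives a single session key with HKDF-SHA256 (RFC 5869), implemented here with the standard `hmac` and `hashlib` modules. This is a minimal sketch under that assumption. Standardized hybrid schemes, such as the hybrid TLS key-exchange drafts, define their own exact construction and labels, so `combine_hybrid_secrets` and the context string are hypothetical. Random bytes stand in for the real X25519 and ML-KEM outputs, since neither is in the Python standard library.

```python
import hashlib
import hmac
import os

HASH = hashlib.sha256
HASH_LEN = HASH().digest_size  # 32 bytes


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """RFC 5869 extract step; an empty salt becomes HashLen zero bytes."""
    return hmac.new(salt or b"\x00" * HASH_LEN, ikm, HASH).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """RFC 5869 expand step: T(i) = HMAC(PRK, T(i-1) | info | i)."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested output too long for HKDF")
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), HASH).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_hybrid_secrets(classical_ss: bytes, pqc_ss: bytes,
                           context: bytes = b"example hybrid kex v1") -> bytes:
    """Derive one 32-byte key from both secrets (concatenate, then HKDF).

    Assuming HKDF behaves as a sound KDF, the output stays secret as long as
    at least one of the two input secrets does.
    """
    prk = hkdf_extract(b"", classical_ss + pqc_ss)
    return hkdf_expand(prk, context, 32)


# Placeholders for, e.g., an X25519 shared secret and an ML-KEM shared secret.
classical_ss = os.urandom(32)
pqc_ss = os.urandom(32)
session_key = combine_hybrid_secrets(classical_ss, pqc_ss)
print(session_key.hex())
```

Keeping the combiner in one function mirrors the crypto-agility advice above: if the construction or the PQC algorithm changes, only this function needs to change.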

It’s also worth highlighting that many improvements made in the name of quantum readiness yield security benefits today. Eliminating obsolete crypto, enforcing stricter network segmentation, improving key management monitoring, and achieving crypto-agility are all best practices independent of the quantum threat. They protect against present-day threats as well (like cryptographic failures, insider threats, and classical computing advances). Thus, your quantum readiness program can be framed as part of overall cyber resilience enhancement. The NCSC noted that a successful PQC migration will be underpinned by fundamentals like good asset management, clear visibility into systems and data, and actively managed supply chains – essentially pillars of sound cybersecurity governance. In other words, preparing for PQC is an opportunity to also clean house in terms of cryptographic hygiene and architecture.

To recap, a sensible strategy in the face of quantum uncertainty is: acknowledge the ideal, but start with the feasible. By doing the above, you create a quantum readiness program that is both strategic and practical.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.