Cryptographic Stack in Modern Interbank Payment Systems

Preface

For years I’ve been knee‑deep in cryptographic inventories – pulling threads through hardware roots of trust, HSM clusters, TLS meshes, signing keys, and the odd “mystery cipher” that no one remembers deploying. That work taught me a simple truth: in real systems, cryptography isn’t a feature or a system that can be easily analyzed and replaced. It’s the substrate. And it’s so layered, inherited, and embedded that no single person can see all of it at once.

This article is part of a series in which I map those layers in real-life systems – not to impress with complexity, but to make it visible and therefore manageable. I began with telecommunications, tracing how a modern network encrypts and authenticates everything from SIM‑based identity to roaming gateways and service‑mesh APIs. Today I’m turning to financial services, where cryptography is even more pervasive: from the customer app and bank core, through SWIFT and correspondent links, to central‑bank settlement and the audit trails that make value movements non‑repudiable.

This one is personal. I spent years managing massive projects to redevelop and replace payments infrastructure. I was the project manager for the redevelopment and replacement of the UK national payments infrastructure in 2006. I led Bank of America’s payments development teams. After the notorious 2016 SWIFT breach, I secured SWIFT systems for some of the largest global banks. The experience left me with deep respect for the invisible trust fabric that keeps money moving – HSMs and key ceremonies, mutual TLS and message‑level signing, tokenization at rest and layered encryption in flight, all stitched together across institutions and jurisdictions. My aim here isn’t to catalogue every primitive or standard; it’s to show how many distinct trust anchors and controls a single cross‑border payment can traverse – and why “do we know all our crypto?” is not a rhetorical question but an operational obligation.

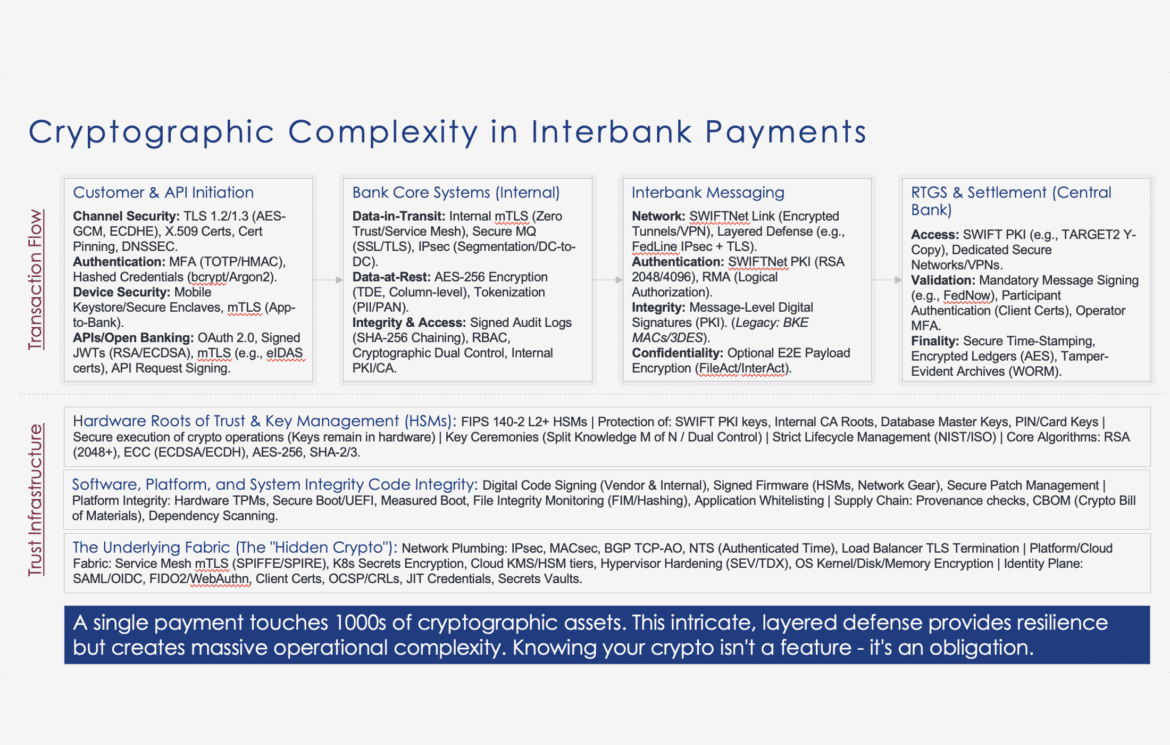

Below is a somewhat idealized example of an interbank payment use case, based on multiple engagements. Certain parts of it vary in implementation from one bank to another, but I believe it is a good overall representation.

Introduction

International interbank payments rely on multiple layers of classical cryptography to ensure security from end to end. When a user initiates a cross-border transfer at their local bank, cryptographic mechanisms protect the transaction at every stage – from the customer’s online banking session, through the bank’s internal systems, across the SWIFT interbank messaging network, to settlement in a central Real-Time Gross Settlement (RTGS) system.

This post (or maybe a whitepaper? it ended up being much longer than I anticipated) provides a comprehensive (but not exhaustive!), layered overview of these cryptographic protections, illustrating how confidentiality, integrity, authentication, and non-repudiation are achieved without the use of post-quantum cryptography. I examine each actor and system in the payment chain – the end-user, the commercial banks, the SWIFT network, and the central bank infrastructure – and detail the security techniques in place.

1. Customer-to-Bank Channel Security

Secure Communication (TLS): When a customer uses online or mobile banking to initiate a payment, the connection to the bank is protected by robust transport encryption. Banks enforce HTTPS with modern TLS (Transport Layer Security) to encrypt data in transit, preventing eavesdropping or tampering by attackers. This means that account details, payment instructions, and any personal data the user enters are scrambled using algorithms like AES within the TLS protocol, ensuring only the user’s device and the bank’s server can decrypt the information. The TLS channel also provides server authentication (and sometimes client authentication) via X.509 certificates, so the user’s app/browser verifies it’s communicating with the genuine bank server.
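On the client side, most of this protection comes from sensible TLS library defaults. The sketch below uses Python's standard `ssl` module to show what a client should insist on: certificate-chain verification, hostname verification, and no protocol older than TLS 1.2. The helper names, port and timeout are illustrative assumptions. They are not taken from any bank's actual app code.

```python
import socket
import ssl
from typing import Optional, Tuple


def make_client_context() -> ssl.SSLContext:
    """Client TLS context that verifies the server and rejects legacy protocols."""
    # create_default_context() loads the system trust store and turns on
    # certificate-chain verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse SSLv3, TLS 1.0 and TLS 1.1 even if the server offers them.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def describe_session(host: str, port: int = 443) -> Tuple[Optional[str], Optional[tuple]]:
    """Connect to `host` and report the negotiated TLS version and cipher suite."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        # server_hostname enables SNI and is checked against the certificate.
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version(), tls.cipher()
```

Against a modern banking endpoint, `describe_session` would typically report `TLSv1.3` and an AES-GCM suite. The exact result depends on what the server negotiates.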

User Authentication and MFA: The customer typically must authenticate with strong credentials. Banks store customer passwords securely (hashed and salted in the database) so that even if the database is compromised, the original passwords cannot be easily recovered. Beyond passwords, multi-factor authentication is employed to add cryptographic assurance of the user’s identity. One-time password (OTP) tokens or mobile authenticator apps generate login codes based on cryptographic algorithms (e.g. TOTP uses HMAC-SHA1/SHA256 with a secret key and time). Many banks issue hardware tokens or smartphone apps that contain a secret key (sometimes stored in a secure element of the device) to compute OTPs – a form of cryptographic challenge that an attacker cannot easily duplicate. Some banks use SMS OTP (less secure), but many have moved to app-based or token-based codes that rely on classical crypto algorithms (HMAC or AES in challenge-response tokens). For high-value transactions or new payees, users may be required to digitally sign the transaction with a PIN-protected OTP or a challenge/response code from their token, providing an additional cryptographic check that the authorized user is approving the specific payment.
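The TOTP mechanism mentioned above is small enough to show in full. This sketch follows RFC 4226 (HOTP) and RFC 6238 (TOTP) using only Python's standard library. The 30-second step, six digits and ±1-step drift window are common defaults, not requirements of any particular bank. The key used in the tests is the RFC's published test seed, not a real credential. Note that many vendor hardware tokens and challenge/response devices use proprietary algorithms instead.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6, digest=hashlib.sha1) -> str:
    """RFC 4226 HOTP: HMAC over an 8-byte big-endian counter, then truncate."""
    mac = hmac.new(key, struct.pack(">Q", counter), digest).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30,
         digits: int = 6, digest=hashlib.sha1) -> str:
    """RFC 6238 TOTP: HOTP with counter = floor(unix_time / step)."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits, digest)


def verify_totp(key: bytes, submitted: str, at: Optional[float] = None,
                window: int = 1, step: int = 30, digits: int = 6) -> bool:
    """Accept a code from the current step or +/- `window` steps (clock drift)."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        candidate = totp(key, now + drift * step, step, digits)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(candidate, submitted):
            return True
    return False
```

A production verifier would also record the last accepted time step for each user. Otherwise a captured code could be replayed within its validity window.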

Channel Integrity and Phishing Protection: TLS not only encrypts the channel but also ensures message integrity – any alteration in transit would be detected by the TLS MAC or AEAD cipher. Users are encouraged to verify the bank’s certificate or use the official banking app to thwart man-in-the-middle attacks with fake sites. For added security, modern banking apps often perform certificate pinning, which checks that the server’s certificate or public key matches a value built into the app. The combination of TLS encryption, certificate-based server authentication and user-side MFA creates a secure tunnel from the user to the bank. The initial payment instruction therefore enters the banking system confidentially and from a verified identity. Even if the user’s network is untrusted (e.g. public Wi-Fi), strong TLS prevents attackers from sniffing or modifying the transaction data. This is typically TLS 1.2 or 1.3 with AES-GCM at 128- or 256-bit key lengths and ECDHE key exchange.
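Certificate pinning can be sketched the same way. This minimal example assumes that pins ship with the app as hex SHA-256 fingerprints of the whole DER-encoded leaf certificate. Many production apps pin the hash of the SubjectPublicKeyInfo instead, because that pin survives certificate renewal with the same key. SPKI pinning needs an X.509 parser beyond Python's standard library. The function names here are hypothetical.

```python
import hashlib
import hmac
import socket
import ssl
from typing import Iterable


def fingerprint_sha256(der_cert: bytes) -> str:
    """Hex SHA-256 of a DER-encoded certificate."""
    return hashlib.sha256(der_cert).hexdigest()


def matches_pin(der_cert: bytes, pinned: Iterable[str]) -> bool:
    """True if the certificate matches any pinned fingerprint (current or backup)."""
    actual = fingerprint_sha256(der_cert)
    return any(hmac.compare_digest(actual, pin.lower()) for pin in pinned)


def connect_pinned(host: str, pinned: Iterable[str], port: int = 443) -> ssl.SSLSocket:
    """Normal chain and hostname validation first, then the pin check on top."""
    ctx = ssl.create_default_context()
    sock = socket.create_connection((host, port), timeout=10)
    try:
        tls = ctx.wrap_socket(sock, server_hostname=host)
    except Exception:
        sock.close()
        raise
    der = tls.getpeercert(binary_form=True)
    if der is None or not matches_pin(der, pinned):
        tls.close()
        raise ssl.SSLError(f"certificate pin mismatch for {host}")
    return tls
```

Shipping at least one backup pin matters. If the bank rotates its certificate and the app knows only the old fingerprint, customers are locked out until they install an app update.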

Secure Mobile Banking Applications: In mobile banking, additional cryptographic measures protect data. Apps often use the mobile OS’s keystore or Keychain to securely store cryptographic keys (like an OAuth token or device binding key). They may establish a mutually authenticated TLS (mTLS) session with the bank – where the app presents a client certificate or a token derived from one, adding a second layer of authentication beyond just user login. Sensitive information on the device (e.g. cached account balances or transaction history) is usually encrypted by the app using keys only accessible when the device is unlocked. Thus, if a phone is lost, an attacker cannot read cached data without the phone’s PIN/biometric (this leverages device encryption provided by iOS/Android). In summary, cryptography at the customer layer centers on TLS encryption, digital certificates, hashed credentials, and OTP/MFA algorithms, ensuring a secure starting point for the payment.

2. Cryptography Inside the Bank’s Core Systems

Once the payment instruction reaches the bank, it enters the core banking environment – which is segmented into front-office, mid-office, and back-office systems. These internal systems leverage cryptographic protections for both data in motion (APIs, internal messaging) and data at rest (databases, logs).

Data-in-Transit (Internal APIs and Messaging): Modern banks often have a service-oriented or microservice architecture, where the core banking system, fraud detection, customer information system, etc., communicate via internal APIs or message queues. These internal channels are secured by encryption and authentication just like external ones. Banks use industry-standard protocols (e.g. HTTPS or secure message queues) for internal service calls. For example, RESTful API calls between microservices are protected by TLS even within the data center to ensure that even an insider or malware cannot sniff sensitive data on the internal network. Many banks implement mutual TLS for service-to-service communications – each service has its own X.509 certificate (often issued by the bank’s internal CA) to authenticate to other services, ensuring only authorized services can connect. This creates an internal zero-trust environment where trust is established cryptographically rather than by network location. If legacy systems use message queues (IBM MQ or JMS), those channels are configured to use SSL/TLS encryption for data in transit. In some cases, IPsec VPN tunnels protect data flows between different network segments or between the bank’s offices and its data center. The use of “defense in depth” is common – for instance, an internal API call carrying payment data might be encrypted at the application layer (HTTPS/TLS), and the connection between data centers might also be encrypted at the network layer via IPsec, adding redundant protection. All these ensure that as the payment details traverse various internal systems (core banking to the payments hub, etc.), they remain confidential and unaltered.
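For service-to-service mutual TLS, the policy lives on the server side. The sketch below uses Python's `ssl` module to demand a client certificate issued by an internal CA, then applies an allow-list to the caller's identity. Several details are assumptions for illustration: the file paths, the service names, and identifying services by certificate common name. Many service meshes identify workloads through a URI in the Subject Alternative Name instead, for example SPIFFE IDs.

```python
import ssl
from typing import Iterable, Optional


def make_mtls_server_context(ca_file: Optional[str] = None,
                             cert_file: Optional[str] = None,
                             key_file: Optional[str] = None) -> ssl.SSLContext:
    """Server context for service-to-service mTLS inside the bank."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Demand a client certificate; handshakes without a valid one are rejected.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        # Trust only the bank's internal CA for client certificates.
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file:
        # This service's own certificate and private key.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


def common_name(peer_cert: dict) -> Optional[str]:
    """Pull the subject CN out of the dict returned by SSLSocket.getpeercert()."""
    for rdn in peer_cert.get("subject", ()):
        for attr, value in rdn:
            if attr == "commonName":
                return value
    return None


def is_authorized_service(peer_cert: dict, allowed: Iterable[str]) -> bool:
    """Authentication proves who is calling; this allow-list decides whether they may."""
    name = common_name(peer_cert)
    return name is not None and name in set(allowed)
```

Keeping authorization separate from authentication means that a valid internal certificate alone is not enough to reach a sensitive service.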

Data-at-Rest Encryption: Banks protect stored sensitive information using encryption and tokenization. Core banking databases contain account balances, personal customer data (PII), and payment records. It is common to encrypt databases or specific fields using strong symmetric ciphers (AES-256 is an industry standard for database encryption). Encryption at rest can be done at multiple levels: full-disk or file-system encryption ensures that if someone obtains the storage media, they cannot read the data without the key; database-level or column-level encryption applies crypto to particular sensitive columns (like account numbers, national IDs, or cryptographic keys) within the DB. Many banks implement tokenization for highly sensitive data. For example, a credit card number or an internal account number might be stored in tokenized form: it is replaced by a random token that maps to the actual value in a secure vault. The actual PAN (primary account number) or account number is therefore absent from most database records. Only the token is present, and it is useless to a thief without access to the vault. This technique is widely used to protect card data and is expanding to other personal data fields, in line with data protection regulations. The FedNow system (as an example of a modern financial service) explicitly notes that data in its environment is encrypted at rest and that certain sensitive data elements are tokenized, reflecting a broader industry practice.
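Tokenization is easily confused with encryption, so a toy vault helps show the difference. This sketch is an illustration only, not any vendor's product. Each sensitive value is replaced by a random token with no mathematical relationship to it. The lookup index is keyed by an HMAC, so the index itself holds no clear values. Real vaults also encrypt stored values, strictly control who may detokenize, and often issue format-preserving tokens (for example, keeping the last four digits). None of that is shown here.

```python
import hashlib
import hmac
import secrets
from typing import Dict


class TokenVault:
    """Toy token vault mapping sensitive values (e.g. PANs) to random tokens.

    Illustrative only: a real vault is a hardened, access-controlled service
    that stores clear values encrypted (often under HSM-held keys) and audits
    every detokenization request.
    """

    def __init__(self) -> None:
        # Secret key for the lookup index, so the index never holds clear values.
        self._index_key = secrets.token_bytes(32)
        self._index: Dict[str, str] = {}   # keyed hash of value -> token
        self._vault: Dict[str, str] = {}   # token -> value (encrypted in practice)

    def _fingerprint(self, value: str) -> str:
        return hmac.new(self._index_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

    def tokenize(self, value: str) -> str:
        fp = self._fingerprint(value)
        if fp in self._index:  # the same value always maps to the same token
            return self._index[fp]
        # Random token: no mathematical relationship to the original value.
        token = "tok_" + secrets.token_urlsafe(16)
        self._index[fp] = token
        self._vault[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # In production only a few authorized services may call this.
        return self._vault[token]
```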

Internal Credential and Access Protection: Within the bank, employees and system accounts access systems under strict cryptographic controls. Administrative access to core systems (like SWIFT interfaces or payment processing applications) requires multi-factor authentication. For SWIFT-connected infrastructure, this is an explicit requirement of SWIFT’s Customer Security Programme controls. Staff passwords are stored hashed (e.g. using bcrypt or PBKDF2) to prevent retrieval. Privileged actions (like releasing a large payment or amending a SWIFT transfer) often require dual control: two authorized people must cryptographically sign or approve the action. For instance, one user might create a payment batch and another user must approve it in the system. The approvals are logged with user IDs and timestamps. Sometimes a digital signature or HMAC is also stored in the transaction record to bind the approver’s identity to that action, ensuring non-repudiation within the bank. Role-based access control (RBAC) ensures that keys for specific cryptographic operations (like the key that signs SWIFT messages) are accessible only to the software or HSM processes, not to any single user. Furthermore, sensitive internal credentials (API keys, service account passwords) are often stored in secure vaults or hardware modules and encrypted at rest.
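The maker-checker flow can be sketched with an HMAC that binds each approval to the exact payment contents. The class, field names and per-user keys below are hypothetical. The bank also holds these symmetric keys, so this gives tamper evidence and attribution inside the bank. It does not give non-repudiation that a third party could verify. That would require each approver to sign with their own private key, for example on a smart card.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PaymentBatch:
    batch_id: str
    amount_minor: int   # amount in minor units (e.g. cents) to avoid float rounding
    currency: str
    beneficiary: str
    maker: str          # user who created the batch
    approvals: List[Dict[str, str]] = field(default_factory=list)

    def canonical_bytes(self) -> bytes:
        """Deterministic encoding of exactly the fields an approver signs off on."""
        body = {
            "batch_id": self.batch_id,
            "amount_minor": self.amount_minor,
            "currency": self.currency,
            "beneficiary": self.beneficiary,
            "maker": self.maker,
        }
        return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")


def approve(batch: PaymentBatch, approver: str, approver_key: bytes) -> None:
    """Checker approval: an HMAC binds the approver's key to the batch contents."""
    if approver == batch.maker:
        raise PermissionError("four-eyes rule: the maker cannot approve their own batch")
    tag = hmac.new(approver_key, batch.canonical_bytes(), hashlib.sha256).hexdigest()
    batch.approvals.append({"approver": approver, "tag": tag})


def is_releasable(batch: PaymentBatch, approver_keys: Dict[str, bytes],
                  required: int = 1) -> bool:
    """Release only if enough distinct non-maker approvals still verify."""
    valid = set()
    payload = batch.canonical_bytes()
    for entry in batch.approvals:
        key = approver_keys.get(entry["approver"])
        if key is None or entry["approver"] == batch.maker:
            continue
        expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
        if hmac.compare_digest(expected, entry["tag"]):
            valid.add(entry["approver"])
    return len(valid) >= required
```

If anyone edits the beneficiary or amount after approval, the stored tags no longer verify and the batch reverts to unapproved.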

Integrity and Logging: Banks maintain extensive audit logs for transactions and system events. Cryptography enhances the integrity and trustworthiness of these logs. Some institutions implement cryptographically signed audit logs. Each log entry or block of entries is hashed (using SHA-256, for example), and the hashes are chained or periodically signed with a digital signature. Any attempt to alter past log records would then be detectable, because it would break the hash chain or signature verification. Even when full log signing is not used, logs are at minimum time-stamped and kept in append-only storage. The timestamps typically come from clocks synchronized via NTP to an accurate reference such as an atomic clock. Combined with off-system backups, this gives an audit trail with reasonable assurance of integrity, although weaker than a signed hash chain. Non-repudiation within the bank is also achieved by linking user actions to cryptographic credentials – e.g. a staff member uses a smart card or software certificate to log into the payments system, so any transactions they initiate can be traced to their certificate or token ID. In case of disputes (“I didn’t approve that transfer”), the bank can produce log records or even signed approvals to resolve accountability.
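A hash-chained log is straightforward to sketch. In the minimal version below, the record fields are hypothetical. Each entry's SHA-256 covers the previous entry's hash, so changing or removing an entry breaks every later link. A chain alone cannot detect truncation of the newest entries, or a wholesale rewrite of the chain. For that reason, the latest hash (the head) should be periodically signed or copied to a separate system, as described in the paragraph above.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: Dict[str, Any]) -> str:
    """SHA-256 over the previous entry's hash plus a canonical encoding of this record."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class HashChainedLog:
    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def head(self) -> str:
        """Latest hash; signing it or shipping it off-box pins the whole history."""
        return self.entries[-1]["hash"] if self.entries else GENESIS

    def append(self, record: Dict[str, Any]) -> str:
        prev = self.head()
        digest = entry_hash(prev, record)
        # Store a copy so later changes to the caller's dict do not leak in.
        self.entries.append({"record": dict(record), "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every link; an edited, inserted or removed entry
        (other than truncation of the tail) breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry_hash(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```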

In summary, the bank’s internal environment uses AES and TLS for encryption, HMACs and digital signatures for integrity and authentication, and strict key management and access controls to confine who and what can perform sensitive operations. Cryptographic best practices like least-privilege (with cryptographically enforced access), segmentation (with encrypted channels between zones), and diligent key handling are in place to create a secure processing environment before the payment ever leaves the bank.

3. Hardware Security Modules and Key Management

Throughout the banking and payments ecosystem, Hardware Security Modules (HSMs) play a pivotal role in safeguarding cryptographic keys and performing sensitive operations. An HSM is a tamper-resistant hardware device designed to securely generate, store, and use cryptographic keys without exposing them in plain form. In a modern interbank payment system, HSMs are deployed by both banks and financial infrastructures for various purposes:

Protection of SWIFT Credentials: Every SWIFT participant (bank) has a set of PKI key pairs for identity and authentication on the SWIFT network. These private keys are stored on HSMs rather than on disk, as recommended by SWIFT. SWIFT provides HSM solutions to its members (either network-attached HSM boxes or USB token HSMs) precisely to store these PKI keys. The HSM ensures that the private key used to sign outgoing SWIFT messages or to establish secure sessions cannot be extracted or copied – all cryptographic operations happen inside the device. The SWIFT Certificate Policy mandates that subscriber private keys be generated and kept in at least FIPS 140-2 Level 2 certified HSMs (Level 3 for qualified certificates). This means the bank’s BIC identity keys for SWIFT messaging (and any other SWIFT PKI certificates) are never in the clear on a server – they are in a secure module that requires authentication (and often multi-person authorization) to use or manage.

Interbank Payment Signing and MACing: In some cases, banks use HSMs to generate payment message authentication codes (MACs) or digital signatures on transactions. For example, historically, SWIFT FIN messages between certain counterparties included a field for a message authentication code computed with a bilateral symmetric key (this was the old “Bilateral Key Exchange” system). Those symmetric keys (often 128-bit 3DES keys) were kept in HSMs, and the MACs were computed inside HSMs to prevent key exposure. Today, SWIFT has shifted to PKI-based signatures for authentication, but the principle remains: whether using symmetric or asymmetric cryptography, the sensitive keys live in HSMs. A bank’s HSM might also sign outbound payment files or SEPA transactions, if required by local schemes. Whenever a digital signature (RSA, ECDSA, etc.) is needed on a transaction or message, the operation can be offloaded to an HSM that holds the signing key, ensuring the key cannot be stolen even if the server is compromised.

Encryption Key Management: Banks also use HSMs to secure master keys for data encryption. For example, an HSM might hold the master key that encrypts a database column containing sensitive values such as account numbers or PIN-related data. The HSM can perform decrypt/encrypt operations, or release derived keys, only under strict policy. Payment systems for cards (like those dealing with PIN verification, card issuance, or ATM keys) rely heavily on HSMs. These have traditionally used Triple DES (3DES)-based PIN block encryption and verification, with AES-based formats gradually taking over. While this is tangential to interbank transfers, it exemplifies the reliance on HSMs for any critical cryptographic computation in banks. The HSMs ensure that keys like the PIN Verification Key or Card Master Key are never in plaintext in software memory. Instead, keys are organized in hierarchies. A root key (the master key) never leaves the HSM, and working keys are exchanged between HSMs in encrypted form, encrypted under a master or zone key.
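In HSMs, working keys are usually protected by wrapping them under a higher-level key, for example with AES key wrap or a key-block format such as TR-31. Python's standard library has no AES, so it cannot show that directly. The sketch below instead illustrates a related pattern: deriving independent, purpose-bound keys from a single root key with HKDF (RFC 5869). It is a substitute technique for illustration. In a real deployment the root key would never leave the HSM. The purpose labels are hypothetical.

```python
import hashlib
import hmac

HASH = hashlib.sha256
HASH_LEN = HASH().digest_size  # 32 bytes for SHA-256


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """RFC 5869 extract step: PRK = HMAC(salt, input keying material)."""
    if not salt:
        salt = b"\x00" * HASH_LEN
    return hmac.new(salt, ikm, HASH).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """RFC 5869 expand step: T(i) = HMAC(PRK, T(i-1) || info || i)."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested output too long for HKDF")
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), HASH).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_working_key(master_key: bytes, purpose: str, length: int = 32) -> bytes:
    """Derive a purpose-bound working key; different labels give independent keys."""
    prk = hkdf_extract(b"", master_key)
    return hkdf_expand(prk, purpose.encode("utf-8"), length)
```

Binding each derived key to a purpose label means that a key issued for API signing is not the key that decrypts a database column.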

Digital Certificates and CAs: Some large banks operate their own internal Public Key Infrastructure (PKI) – for issuing certificates to internal systems (for mutual TLS) or to employees. The private keys of the root and intermediate Certificate Authorities are kept in HSMs, with multi-person authorization required to use them (e.g., 3 out of 5 key custodians must each present a smartcard and PIN to activate the CA key for signing). This ensures the trust anchors for internal certificates are well protected. Even code-signing keys (for signing software deployed in the bank) might be stored in an HSM or at least a secure cryptographic appliance, to prevent malicious code signing.
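The “3 out of 5 custodians” rule is an M-of-N threshold scheme. HSM vendors implement their own schemes on smartcards, often based on Shamir secret sharing. The following is a minimal Shamir secret sharing sketch over a prime field, for illustration only. Any three of the five shares reconstruct the key, while two or fewer reveal nothing about it.

```python
import secrets
from typing import List, Tuple

# 2**521 - 1 is a Mersenne prime, comfortably larger than a 256-bit key.
PRIME = 2 ** 521 - 1

Share = Tuple[int, int]


def split_secret(secret: int, threshold: int, num_shares: int) -> List[Share]:
    """Split `secret` into `num_shares` points; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be smaller than the field prime")
    if not 2 <= threshold <= num_shares:
        raise ValueError("need 2 <= threshold <= num_shares")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def poly(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    # Share i is the point (i, poly(i)); x = 0 (the secret) is never handed out.
    return [(x, poly(x)) for x in range(1, num_shares + 1)]


def recover_secret(shares: List[Share]) -> int:
    """Lagrange interpolation at x = 0 over GF(PRIME)."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # Division via Fermat's little theorem: den^(p-2) is den^-1 mod p.
        secret = (secret + yi * num * pow(den, PRIME - 2, PRIME)) % PRIME
    return secret
```

To split a 32-byte key, convert it with `int.from_bytes(key, "big")`. After recovery, convert it back with `.to_bytes(32, "big")`.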

Key Characteristics and Algorithms: All cryptographic mechanisms in the interbank stack use classical algorithms of adequate strength. RSA (2048-bit and above) is widely used for digital signatures and certificate keys; in fact, SWIFT’s current PKI uses 2048-bit RSA for entity certificates and a 4096-bit RSA key for the Certification Authority. Many banks and regulators are also embracing elliptic curve cryptography. Examples include ECDSA or Ed25519 for digital signatures, and ECDH for key exchange in TLS (TLS 1.3 usually uses ECDHE with curves like P-256 or X25519). New systems typically use AES with 256-bit keys for symmetric encryption. Some legacy systems, especially in financial messaging contexts, still use Triple DES (168-bit keying, but only about 112 bits of effective security). 3DES has historically been prevalent in banking but is being phased out in favor of AES. SHA-256 and SHA-512 are the common hash functions for digital signatures and integrity checks. Passwords, in contrast, are hashed with deliberately slow, salted functions such as PBKDF2 (built on HMAC-SHA-256/512), bcrypt, or Argon2, which add a work factor. HMAC (Hash-based Message Authentication Code) using SHA-256 is often used internally for things like API request signing and the integrity of stored records.
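HMAC-based API request signing usually signs a canonical string built from the request, rather than the raw bytes on the wire. The layout below is a hypothetical example: method, path, timestamp and body hash, one per line. It is in the spirit of schemes such as AWS Signature Version 4 but far simpler. The five-minute replay window is likewise an assumption.

```python
import hashlib
import hmac
import time
from typing import Optional


def string_to_sign(method: str, path: str, timestamp: int, body: bytes) -> bytes:
    """Hypothetical canonical form: method, path, timestamp and body hash, newline-separated."""
    body_digest = hashlib.sha256(body).hexdigest()
    return "\n".join([method.upper(), path, str(timestamp), body_digest]).encode("utf-8")


def sign_request(secret: bytes, method: str, path: str, timestamp: int, body: bytes) -> str:
    """HMAC-SHA256 over the canonical string, hex-encoded for an HTTP header."""
    return hmac.new(secret, string_to_sign(method, path, timestamp, body), hashlib.sha256).hexdigest()


def verify_request(secret: bytes, method: str, path: str, timestamp: int, body: bytes,
                   signature: str, now: Optional[int] = None, max_skew: int = 300) -> bool:
    current = int(time.time()) if now is None else now
    # Reject stale or future-dated requests to narrow the replay window.
    if abs(current - timestamp) > max_skew:
        return False
    expected = sign_request(secret, method, path, timestamp, body)
    # Constant-time comparison so timing does not reveal a partial match.
    return hmac.compare_digest(expected, signature)
```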

Key Management Processes: Managing all these keys is itself a critical task. Banks adhere to strict key management life cycles. Keys have defined expiration or renewal intervals. Certificates for SWIFT or TLS are typically renewed every one to three years, and publicly trusted TLS certificates have shorter maximum lifetimes. When exchanging keys (e.g., in correspondent banking, if a symmetric key is used for a specific interface), banks use secure channels or encrypted out-of-band processes. Many banks follow NIST or ISO key management standards, such as NIST SP 800-57 or ISO 11568. For example, a key custodian team might handle HSM master key ceremonies. In these ceremonies, multiple people must jointly initialize or rotate the HSM master key using split knowledge and dual control, with each person holding one component of the key. This ensures that no single person ever knows the full key, reducing insider risk. Backups of keys (where allowed) are made in encrypted form, and HSMs offer mechanisms for this (like splitting a backup key among smartcards given to different custodians).
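Split knowledge is often implemented by XOR-ing key components. Each custodian generates and enters one full-length random component, and the HSM combines them internally. Any subset short of all the components is statistically independent of the final key, by the same argument that makes a one-time pad secure. The sketch below is illustrative. In particular, the check value uses SHA-256 for convenience, whereas HSMs commonly compute a key check value (KCV) by encrypting a block of zeros under the key.

```python
import hashlib
import secrets
from functools import reduce
from typing import List


def generate_components(custodians: int, key_len: int = 32) -> List[bytes]:
    """Each custodian independently generates a full-length random component."""
    return [secrets.token_bytes(key_len) for _ in range(custodians)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def combine_components(components: List[bytes]) -> bytes:
    """Master key = C1 XOR C2 XOR ... XOR Cn (done inside the HSM in practice)."""
    if len(components) < 2:
        raise ValueError("split knowledge needs at least two components")
    if len({len(c) for c in components}) != 1:
        raise ValueError("all components must have the same length")
    return reduce(xor_bytes, components)


def check_value(key_material: bytes) -> str:
    """Hypothetical 6-hex-digit check value so custodians can confirm what was loaded.
    (Real HSMs typically derive a KCV by encrypting a zero block under the key.)"""
    return hashlib.sha256(key_material).hexdigest()[:6].upper()
```

Custodians typically compare a check value for their own component and one for the combined key, so that an entry error is caught before the key goes into service.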

In summary, HSMs and strong key management are the backbone ensuring that private keys remain private and cryptographic operations are securely executed. They provide a root of trust for the entire payment system’s cryptography. Without HSMs, the strength of algorithms like RSA or AES could be undermined by key theft; with HSMs, even if attackers penetrate system defenses, they cannot extract the crown jewel keys that would allow cloning identities or decrypting large volumes of data. The consistent use of certified HSMs across the industry (e.g., SWIFT’s own HSM devices and those banks deploy) and formal processes for key handling give the global payment ecosystem a robust trust infrastructure based on classical cryptographic principles.

4. SWIFT Interbank Messaging Security

Once the payment is approved and prepared to be sent to another bank, it enters the interbank messaging realm – dominated by the SWIFT network for international transfers. SWIFT (Society for Worldwide Interbank Financial Telecommunication) provides the secure messaging services (FIN for traditional MT messages, and InterAct/FileAct for XML-based ISO 20022 messages and file transfers). The cryptographic mechanisms in SWIFT are multi-layered, addressing both the network transport and the message content.

SWIFT Secure Network and PKI: SWIFT operates a private, global network called SWIFTNet, to which member banks connect through secure gateways. A Public Key Infrastructure (PKI) underpins the security of SWIFTNet. Each institution has a SWIFTNet digital certificate (actually a pair: one for authentication/encryption and one for digital signing, under SWIFT’s PKI). These certificates are issued by SWIFT’s Certification Authority and loaded onto the bank’s SWIFT gateway (protected by an HSM as discussed). When a bank’s interface (e.g. Alliance Access or Alliance Lite2) connects to SWIFT, it uses its private key to authenticate and establish secure sessions. All SWIFTNet traffic between the bank and SWIFT is both authenticated and encrypted using this PKI system. In practice, this means that messages or files sent over SWIFT are encrypted end-to-network (between the bank’s gateway and the central SWIFT server) so that intermediate telecom providers or internet infrastructure cannot read them. The encryption uses strong protocols (SWIFT currently uses TLS or a similar mechanism under the hood of SWIFTNet Link, with mutual certificate authentication) – effectively forming a VPN tunnel between each institution and SWIFT. The use of digital certificates also ensures that only genuine, enrolled banks can connect: a rogue entity cannot impersonate a bank without the private key certified by SWIFT.

Message Authentication and Integrity: Beyond transport-level encryption, SWIFT provides message-level integrity controls. With the advent of SWIFTNet PKI, each FIN message or InterAct message can be digitally signed by the sender’s system. This signature travels with the message and is verified by the receiver (and by SWIFT as an intermediary) to ensure the message truly came from the stated sender and was not altered in transit. Under the older FIN system, a pair of banks would agree on bilateral authentication keys (symmetric keys) and SWIFT messages could include a Message Authentication Code (MAC) computed with those keys. This bilateral key exchange (BKE) system was the old method to verify correspondent identity. As of the migration to SWIFTNet Phase 2 around 2007, SWIFT introduced a unified PKI-based security model, replacing bilateral symmetric MACs with digital signatures. Now, a single security model with public-key cryptography secures all message types on SWIFT. For FIN (MT messages), SWIFTNet PKI ensures that a receiving bank can trust the sender’s identity; the sender’s SWIFT certificate (and by extension their BIC identity) is authenticated by SWIFT’s CA. In effect, FIN messages now have authentication and integrity “baked in” via the PKI – any tampering with a message in transit or any attempt to spoof a sender would be detected because the digital signature (or authentication tag) would fail to verify.

Closed User Groups and RMA: In addition to cryptographic authentication, SWIFT enforces logical controls on who can send to whom. This is done through Closed User Groups (for certain services) and the Relationship Management Application (RMA) for FIN. RMA is a security feature that requires bilateral authorization before one institution can send FIN messages to another. Each bank exchanges RMA authorization messages (secured by SWIFT, of course) that essentially whitelist the message types that the sending bank is allowed to send to the receiving bank. Even if Bank A is technically able to craft a SWIFT message to Bank B, if Bank B has not granted RMA authorization, SWIFT will not deliver the message. This prevents unsolicited or fraudulent traffic. While RMA is not a cryptographic algorithm, it leverages the security of the SWIFT network (the RMA exchange itself is an authenticated message) and acts as an application-layer control ensuring proper bilateral relationships. It’s often described as a “message security” service because it mitigates abuse of the network.
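RMA's effect on delivery can be modelled as a simple directional allow-list. The toy class below uses hypothetical BICs and shows only the filtering logic. A receiver grants a specific sender permission for specific message types, and anything outside that grant is not delivered. In the real service, the authorizations are themselves exchanged as authenticated SWIFT messages and enforced by SWIFT, not by the banks' own code.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Iterable, Tuple


@dataclass
class RmaTable:
    """Toy model of RMA-style filtering: the receiver grants the sender
    permission for specific message types; delivery is checked against it."""
    grants: Dict[Tuple[str, str], FrozenSet[str]] = field(default_factory=dict)

    def grant(self, receiver: str, sender: str, message_types: Iterable[str]) -> None:
        key = (receiver, sender)
        self.grants[key] = self.grants.get(key, frozenset()) | frozenset(message_types)

    def revoke(self, receiver: str, sender: str) -> None:
        self.grants.pop((receiver, sender), None)

    def may_deliver(self, sender: str, receiver: str, message_type: str) -> bool:
        # No grant for this direction and type -> the message is not delivered.
        return message_type in self.grants.get((receiver, sender), frozenset())
```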

SWIFTNet Link and HSM Integration: The SWIFTNet Link (SNL) is the software at the bank that interfaces with the SWIFT network. It implements the cryptographic protocols mandated by SWIFT. Notably, SNL uses the bank’s HSM to perform all cryptographic operations (signing, encryption) required for messages. For example, if a bank sends an InterAct request (an ISO 20022 message), SNL can automatically sign the message payload with the bank’s private key and/or encrypt it for the recipient, depending on the service’s requirements. SWIFT recommends end-to-end signing for request/response messages – meaning the sender signs the message content and the receiver verifies it, on top of the normal transport security. This provides non-repudiation of origin and ensures integrity at the business level. The cryptographic module in SNL handles these PKI operations such that applications using SWIFT (like the Alliance Access interface) don’t need to implement crypto themselves – they just pass the message to SNL with flags indicating whether to sign or encrypt, and the SNL+HSM takes care of it. This design centralizes and hardens cryptography at the gateway.

Encryption of Messages and Data on SWIFT: By default, SWIFT provides confidentiality for data in transit (between the bank and SWIFT, and SWIFT to the other bank). Within SWIFT’s own operations centers, messages are briefly stored (for store-and-forward delivery) and processed. SWIFT employs strong internal controls and likely encrypts data at rest in its databases as well, though details are not public. In some services like FileAct (used to send bulk files, e.g. batch payment files or securities data), SWIFT explicitly offers end-to-end encryption options: a FileAct payload can be encrypted such that only the recipient can decrypt it. InterAct (for XML messages) similarly allows optional encryption of the payload. This is particularly useful if the message contains highly sensitive information that even the SWIFT central store-and-forward server should not be able to read (in some scenarios SWIFT can operate as a pure blind router, e.g. FileAct). For most payment messages, however, SWIFT itself is a trusted intermediary that needs to read certain header fields for routing, so end-to-end encryption is used selectively (e.g., in certain closed user groups).

Non-Repudiation and Proof of Delivery: SWIFT services offer non-repudiation of emission and reception as optional features. Non-repudiation of emission means the sender cannot deny having sent a message, and non-repudiation of receipt means the receiver cannot deny having received it. Cryptographically, this is achieved through digital signatures and audit trails. For instance, SWIFT can provide digital evidence (like signed acknowledgments or archived signed messages) if there is a dispute. SWIFT’s FIN and InterAct have built-in acknowledgment messages (ACK/NACK) that are digitally authenticated, and SWIFT keeps copies of messages for some days. If needed (with proper legal process), SWIFT can confirm that message X was sent by bank A and delivered to bank B at a certain time. This capability relies on the fact that all messages are authenticated and logged with tamper-evident records, essentially creating an auditable history. Many RTGS systems that use SWIFT leverage this to maintain a robust audit of all payment instructions. TARGET2, which the Eurosystem replaced with T2 in 2023, is one example.

SWIFT Interface Security (Alliance Access/WebStation): The bank’s own SWIFT interface software has additional security layers. Alliance Access, for example, requires operator logins and can be integrated with hardware authentication (smartcards or USB tokens for operators). It also segregates duties: an Alliance Security Officer role manages the configuration and keys, while operators handle messages – this ensures no single user has control over everything. The workstation through which a user at the bank releases a SWIFT payment often uses MFA as well – e.g., a smart card certificate or an OTP token in addition to a password, as mandated by SWIFT’s Customer Security Controls Framework. The SWIFT environment within a bank is typically a segregated secure zone (often physically and logically separated from general IT). Access to this secure zone requires additional authentication and is heavily monitored. While these measures are more procedural, they intertwine with cryptography (smartcard certs, etc.) to guard the integrity of SWIFT operations. For example, the Alliance WebStation (a web interface for SWIFT) might use HTTPS with client certificates for bank staff to log in, adding a layer of cryptographic auth.

In essence, SWIFT’s messaging layer brings together network cryptography (VPN/TLS) and application-layer cryptography (digital signatures, MACs) to ensure that a payment instruction leaving Bank A arrives at Bank B securely and can be trusted. Classical cryptographic algorithms – RSA for signatures, AES/3DES for encryption, SHA for hashing – are employed throughout this process under a globally coordinated trust model (the SWIFT PKI). The result is that banks can transact with each other across continents as if on a secure dedicated line, even though the communication may traverse various carriers and the open internet in parts, all without exposing transaction data or risking undetected alteration.

5. Interbank Links and Correspondent Banking

In many cases, a payment from Bank A to Bank B might involve intermediary institutions or different networks. For example, if two banks don’t have a direct relationship, the payment could be routed via a correspondent bank (Bank C). Alternatively, some payments go through local clearing houses or alternative networks. Regardless of the path, cryptography is consistently applied to link communications to maintain security.

Correspondent Banking via SWIFT: The prevalent scenario for cross-border payments is correspondent banking, which still uses SWIFT messages as the communication method. If Bank A in country X wants to send USD to Bank B in country Y, it has two main options. In the serial method, Bank A sends a SWIFT MT103 to its USD correspondent (Bank C), which passes the payment along the chain to Bank B. In the cover method, Bank A sends the MT103 directly to Bank B. It also sends a separate MT202 COV to Bank C, which moves the funds to Bank B’s USD correspondent or to Bank B itself. Each hop is a SWIFT message, protected by the same SWIFT PKI and network encryption discussed above, so every leg of a multi-hop transaction is protected. This protection is hop-by-hop rather than end-to-end: each intermediary can read the instruction and issues its own authenticated message onward. The correspondent banks themselves are bound by SWIFT security, and each leg of the message path is cryptographically secured and authenticated. This prevents external attackers from inserting themselves into the chain or altering instructions in transit. For example, an attacker cannot intercept and change the beneficiary account number in a SWIFT message, because the change would invalidate the signature or MAC and the message would be rejected.

Domestic Interbank Networks: Some domestic payments might travel over networks other than SWIFT. For instance, SEPA (euro payments across the Single Euro Payments Area) or domestic wire systems might use proprietary networks or internet-based APIs. However, the same cryptographic principles apply. In SEPA, banks connect to clearing via secure VPNs or leased lines using TLS encryption. Emerging API-based interbank systems (for instant payments, for example) use mutual TLS and JSON message signatures. Concrete examples are the U.S. FedACH and EPN services for ACH payments: files are transmitted over secured channels and often PGP-encrypted as an extra layer. Even for check image exchange, banks use SSL/TLS tunnels or SFTP with SSH encryption to transfer data. Thus, whenever SWIFT isn’t the carrier, some other secure transport (IPsec VPN, SSL/TLS, or SSH) takes its place, and payment data does not travel in the clear. Many countries have government or central-bank-run secure networks (sometimes called “financial extranets”) that member banks connect to via routers or VPN devices; these networks enforce encryption for all traffic.

IPsec and VPN Encryption: A notable example is the U.S. Federal Reserve’s FedLine system. Banks connect to Fedwire (FedLine Direct or FedLine Advantage) through a combination of encrypted channels. One description notes that the connection uses “a dedicated device providing an IPSEC VPN tunnel” in addition to TLS (HTTPS) for the application data. This double encryption is an example of defense in depth. IPsec protects the network layer (with 256-bit AES, for instance) and TLS protects the session layer (with its own AES encryption), so even if one layer were broken, the data would remain protected. Similarly, connections to RTGS systems in various countries often use dedicated encryption appliances. These might be point-to-point circuits encrypted with AES or modern MPLS VPNs with IPsec. All aim to prevent any outsider from listening to or injecting traffic into critical payment systems.

Messaging Middleware (MQ) Channels: Banks frequently use middleware like IBM MQ or other message brokers to queue and route transactions internally and externally (for example, some banks send payment instructions to a central bank via secure MQ channels). These message queues support TLS encryption for data in transit. Where the payment system is integrated via MQ, banks configure mutual TLS authentication on the channel. Both sides present digital certificates and establish a TLS session, so the messages are encrypted on the wire. Additionally, some banks layer message-level encryption on top. For example, the payload of a message can be encrypted with a symmetric key that only the receiving end (such as the central bank’s interface) can decrypt. This is analogous to how PGP encrypts an email’s content even though the transport (SMTP) might also be TLS-encrypted.
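A mutual-TLS channel comes down to a few settings on each side. IBM MQ configures these through its own channel and key-repository attributes, so the following is only a Python analogue using the standard `ssl` module. The function name and file-path parameters are hypothetical.

```python
import ssl
from typing import Optional


def mq_channel_context(ca_file: Optional[str] = None,
                       cert_file: Optional[str] = None,
                       key_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context for a hypothetical MQ channel with mutual authentication."""
    # PROTOCOL_TLS_CLIENT enables server-certificate and hostname checks by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSLv3, TLS 1.0 and TLS 1.1
    if ca_file:
        # Trust only the CA that issued the queue manager's certificate.
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file and key_file:
        # Our own certificate and key, presented when the server requests them.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    # With no CA loaded the trust store is empty, so handshakes fail closed.
    return ctx


ctx = mq_channel_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

The design choice is to fail closed. Without an explicitly loaded private CA, the context trusts nothing, which suits a dedicated payment channel better than the operating system’s public trust store.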

SWIFT vs. Alternative Networks: SWIFT remains the dominant secure network for cross-border payments, but initiatives like SWIFT gpi and others don’t alter the cryptographic underpinnings – they are more about tracking and speed. Meanwhile, some networks like RippleNet or other fintech solutions also use classical cryptography (in those cases, typically TLS for communication and perhaps signing of API calls). If a bank were to use such a network, it would similarly rely on mutual TLS channels, API keys or certificates for authentication, and perhaps HSMs for safeguarding those keys.

In summary, whether the payment goes direct via SWIFT, or detours through a correspondent, or travels over a national system, each link in the chain is secured by encryption and authentication. The banks and infrastructures hand off the transaction like runners passing a baton, but the baton is always cryptographically wrapped – ensuring confidentiality and integrity across organizational boundaries. Attackers cannot pick it up or alter it without detection because the chain of trust (rooted in things like SWIFT’s PKI or the central bank’s security controls) persists across the hops. The receiving bank can be confident that the payment instruction it finally receives is exactly what the sending bank issued, thanks to these layered cryptographic assurances.

6. Central Bank RTGS Settlement Security

At the end of the chain, most large-value payments settle in central bank RTGS (Real-Time Gross Settlement) systems (e.g., Fedwire in the US, T2 (the successor to TARGET2) in the euro area, BOJ-NET in Japan). These systems hold the definitive ledger of central bank money moving between banks. Ensuring security here is paramount, as RTGS systems concentrate systemic value. The cryptographic mechanisms used by RTGS infrastructures are similar to, and often integrated with, those we’ve discussed, with some additional considerations for resilience and finality.

Secure Access to RTGS: Banks typically access RTGS systems either via the SWIFT network or via proprietary access channels, but in both cases, strong encryption and authentication are enforced. TARGET2 in the Eurosystem, for example, used SWIFTNet services (FIN Y-Copy for MT messages and ISO 20022 via SWIFT InterAct) until it was replaced in March 2023 by T2, which participants reach through the Eurosystem’s ESMIG gateway. Under the SWIFT-based model, all communications from participant banks to TARGET2 were secured by SWIFT’s PKI and network encryption – effectively identical to bank-to-bank SWIFT security. Many of those messages were copy messages (Y-copy): a payment message is sent to SWIFT, which forwards a copy to the central bank for settlement, and both that copy and the return confirmation are transmitted with the same PKI-based security. The ECB states unequivocally: “All external communication is encrypted by means of TLS/SSL (transport layer security)”, to prevent eavesdropping or tampering. This applies to communications between banks and the TARGET2 platform.

In the US, Fedwire historically operated on a private network; today it also allows access via internet VPN. In both cases, the Fed ensures encryption and authentication. As mentioned, FedLine (the Fed’s access service) uses layered security: a VPN (with IPsec) and application-level TLS, plus certificates and/or hardware tokens for institution authentication. Moreover, the Fed requires two-factor authentication for any operator initiating payments through FedLine. So a bank sending a Fedwire transfer uses a combination of device certificates (to establish the secure tunnel) and user credentials (with MFA) to initiate the transaction.
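Many hardware and software tokens produce their one-time codes with the TOTP algorithm (RFC 6238), which is HMAC over a time counter. The sketch below implements it with the Python standard library. The source does not say whether a given FedLine token uses exactly this scheme, so treat it as a generic illustration.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[int] = None, step: int = 30,
         digits: int = 6, digest=hashlib.sha1) -> str:
    """RFC 6238 time-based one-time password (HOTP applied to a time counter)."""
    if for_time is None:
        for_time = int(time.time())
    counter = for_time // step                     # number of elapsed time steps
    msg = struct.pack(">Q", counter)               # 8-byte big-endian counter
    mac = hmac.new(secret, msg, digest).digest()
    offset = mac[-1] & 0x0F                        # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % (10 ** digits)).zfill(digits)


# RFC 6238 Appendix B test vector (SHA-1, 8 digits, T = 59 seconds).
print(totp(b"12345678901234567890", for_time=59, digits=8))  # 94287082
```

The token and the server share the secret, so both compute the same code for the same time window. The secret itself never crosses the network.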

Digital Signatures and Message Validation: There is a trend toward explicit digital signing of payment messages in RTGS. FedNow, the Federal Reserve’s instant payment service launched in 2023, requires that all messages exchanged with participants are cryptographically signed. FedNow is not a classical large-value RTGS, since it settles retail instant payments individually, around the clock. It still indicates the direction: messages carry a signature that verifies integrity and authenticity at the application level, rather than relying only on transport security. This non-repudiation measure might make its way into other systems too. TARGET2 and other RTGS systems often rely on the underlying SWIFT validation (which ensures the BICs and MAC/signatures are correct). Some RTGS systems do not require member banks to separately sign messages because the network layer (SWIFT or a dedicated network) is trusted and authenticated. But given evolving threat landscapes, adding message-level signatures is an extra safeguard. It’s worth noting that RTGS operators could leverage SWIFT’s own non-repudiation service. For example, CHAPS in the UK (which uses SWIFT Y-Copy) could require banks to use the SWIFT PKI signature option. Each payment instruction copy would then carry a digital signature from the sending bank that the receiving central bank can verify.
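The sign-then-verify flow behind message-level signatures fits in a few lines. Python’s standard library has no signature primitives, so this sketch uses textbook RSA with a deliberately insecure toy key. It is not FedNow’s actual scheme, key format, or message structure, and the XML fragment is hypothetical.

```python
import hashlib

# Toy key built from two well-known Mersenne primes (2**127 - 1 and 2**521 - 1).
# Because the primes are public, this key has NO security; it only shows the
# sign/verify mechanics. Real systems use 2048+ bit keys with secret random
# primes, a padding scheme such as RSASSA-PSS, and keep the private key in an HSM.
P = 2**127 - 1
Q = 2**521 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (modular inverse, Python 3.8+)


def digest_int(message: bytes) -> int:
    """SHA-256 digest of the message as an integer (always smaller than N here)."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big")


def sign(message: bytes) -> int:
    """Textbook (unpadded) RSA signature over the digest: s = h^D mod N."""
    return pow(digest_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Check s^E mod N == h using only the public values (N, E)."""
    return pow(signature, E, N) == digest_int(message)


# Simplified fragment of an ISO 20022 credit transfer (hypothetical content).
msg = b'<IntrBkSttlmAmt Ccy="USD">1000.00</IntrBkSttlmAmt>'
sig = sign(msg)
altered = msg.replace(b"1000.00", b"9000.00")

print(verify(msg, sig))      # True
print(verify(altered, sig))  # False
```

Only the holder of `D` can produce a valid signature, but anyone with the public `(N, E)` can check it. That asymmetry is what gives non-repudiation, which a shared-key MAC cannot.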

Encryption of Data within RTGS: Like banks, central banks encrypt sensitive data at rest in their systems. A central bank’s RTGS will have databases of account balances (bank reserves), transaction archives, and so on. These are typically stored on highly secure systems with limited access. The data may be encrypted on disk, and backups are normally encrypted. For example, the FedNow documentation notes that in the FedNow environment, data at rest is encrypted and certain sensitive data is tokenized. It is reasonable to assume that similar practices apply in RTGS. Account numbers or participant identifiers might not need tokenization, but any personal data or extended references might. At minimum, disk encryption and strict access control protect the RTGS data. Additionally, central banks often have stringent continuity plans: data is replicated to secondary sites over encrypted links and sometimes written to WORM (write-once-read-many) media for tamper-evident storage.
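Tokenization replaces a sensitive value with a random surrogate and keeps the mapping in a protected vault. Here is a minimal in-memory sketch using a hypothetical `TokenVault` class. Production vaults are hardened, access-controlled services, often with HSM-backed storage.

```python
import secrets
from typing import Dict


class TokenVault:
    """Hypothetical in-memory token vault mapping random tokens to sensitive values."""

    def __init__(self) -> None:
        self._by_token: Dict[str, str] = {}
        self._by_value: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so one value always maps to one token.
        if value in self._by_value:
            return self._by_value[value]
        token = "tok_" + secrets.token_hex(16)  # random, so it reveals nothing about value
        self._by_token[token] = value
        self._by_value[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only authorized services should reach this call; raises KeyError if unknown.
        return self._by_token[token]


vault = TokenVault()
record = "Jane Example, 1 High Street"  # hypothetical personal data
token = vault.tokenize(record)
print(token.startswith("tok_"), vault.detokenize(token) == record)  # True True
```

Unlike encryption, a random token has no mathematical relationship to the original value. A stolen copy of the token database is useless without access to the vault.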

Time-Stamped Audit Trails: Settlement systems produce definitive records of fund transfers that must be beyond reproach. Cryptography supports this through secure logging, as discussed, and through time synchronization. Many RTGS systems use time-stamping authorities, or at least synchronized clocks, to mark transaction times, which helps prevent disputes about the order of transactions. Some operators have considered digital notarization of daily settlement data. For example, they could take a hash of the day’s transaction ledger and publish or securely archive it, so that any later alteration can be detected. It is not widely advertised, but a central bank could in principle use a hash chain or a blockchain-inspired method to lock in the integrity of historical records. The principle is that audit logs and data exports deserve the same care: when data is extracted, it can be digitally signed by the system so that recipients (like bank supervisors or investigators) can verify its authenticity.
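A hash chain makes the daily-notarization idea concrete. Each entry’s hash incorporates the previous hash, so the final value commits to the whole day’s ledger in order. Here is a minimal sketch with hypothetical entry fields and illustrative BICs. It does not describe any particular central bank’s implementation.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # fixed starting value for the chain


def chain_entries(entries: List[Dict]) -> List[str]:
    """Return the running hash after each entry: h_i = SHA-256(h_{i-1} || entry_i)."""
    hashes = []
    prev = GENESIS
    for entry in entries:
        # Canonical JSON so the same entry always serializes, and hashes, identically.
        payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
        prev = hashlib.sha256((prev + payload).encode("utf-8")).hexdigest()
        hashes.append(prev)
    return hashes


ledger = [
    {"id": 1, "from": "BANKAAAA", "to": "BANKBBBB", "amount": "1000.00"},
    {"id": 2, "from": "BANKBBBB", "to": "BANKCCCC", "amount": "250.00"},
]
daily_seal = chain_entries(ledger)[-1]  # the value to sign, publish or archive

# Rewriting an earlier entry changes every later hash, including the seal.
rewritten = [dict(ledger[0], amount="9000.00"), ledger[1]]
print(chain_entries(rewritten)[-1] == daily_seal)  # False
```

Only the final seal needs to be signed or published. Anyone holding it can later recompute the chain from the archived entries and detect an edit, deletion or reordering.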

Resilience and Failover Security: RTGS systems must be resilient (disaster recovery, etc.), and cryptography plays a role here too. For example, if an RTGS has a hot standby site, the secure communications must be maintained seamlessly in failover. Typically, banks have two sets of certificates or connections (active/passive) and the failover site’s equipment is pre-configured with the same PKI credentials or its own that are trusted. The use of HSMs in the central bank ensures that even in DR scenarios, keys are not exposed. They might use HSMs with secure cloning features to replicate keys to backup sites in a controlled way.

Participant Authentication and Authorization: Beyond the message signing, RTGS systems authenticate participant banks when they log in or send files. This can involve client TLS certificates or signed login messages. For example, a web-based interface for an RTGS (for smaller institutions) might use client-authenticated TLS – the bank’s user has a smart card or token with a certificate issued by the central bank’s CA to log into the system. Or they might use secure SSH with key pairs for file-based interfaces. These methods ensure only authorized institutions (and authorized users at those institutions) can initiate payments or view account info. Role separation is enforced: one credential might only query balances while another can initiate transfers, etc., similar to internal bank controls but now at the central system. All these authentication events are logged (often with digital signing of the login session or non-repudiation tokens).

In summary, central bank settlement systems maintain the chain of cryptographic trust. Banks communicate with them over encrypted channels (TLS/IPsec), often using the same PKI credentials they use with other systems or a dedicated PKI from the central bank. Transactions are validated and possibly signed, and every step is logged securely. As a result, final settlement (the movement of money in central bank books) enjoys the highest level of integrity and authenticity. The moment a payment is marked as settled, there is cryptographic evidence of who sent it, that it was authorized, and that it was not altered. That evidence underpins finality and non-repudiation at the core of the financial system.

7. Secure Software Development and Update Management

Underpinning all these systems is the software and hardware that implements cryptography. Ensuring the integrity of that software is itself a cryptographic challenge. Financial institutions and critical infrastructures employ rigorous update and change management procedures fortified by cryptographic techniques to prevent subversion of the system through malware or unauthorized changes.

Digital Signatures on Software and Firmware: Vendors of critical banking software (core banking systems, SWIFT Alliance, HSM firmware, database systems, etc.) digitally sign their software releases. For example, SWIFT distributes Alliance Access and SWIFTNet Link updates as signed packages – the software will verify the signature (using SWIFT’s public code-signing certificate) before installing, to ensure the update hasn’t been tampered with in transit or from an unofficial source. Operating systems and database software have similar signing (e.g., Microsoft signs Windows updates; Linux distributions sign packages). Banks will only trust patches that come from the authentic vendor and pass signature verification. Even hardware devices like HSMs only accept firmware updates if they are signed by the manufacturer; this prevents an attacker from loading a modified firmware that could leak keys. This secure update process is critical because even the strongest cryptography can be undermined by a malicious code insertion – hence ensuring code integrity via signatures is a must.

Change Management with Cryptographic Checks: When banks develop or configure software in-house, they often use hash-based integrity checks to monitor changes. For instance, a baseline checksum of critical configuration files or binaries is maintained (in a secure system), and periodic scans compute hashes of current files to detect any unexpected modification (a technique often aided by file integrity monitoring tools). Some institutions leverage TPMs (Trusted Platform Modules) or code-signing internally: e.g., requiring that any internally developed application or script be signed by a trusted developer’s code-signing key. The runtime environment then verifies the signature before execution. This is not yet universal, but the concept of only running signed code is gaining traction to prevent unauthorized code from running on sensitive systems.
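Baseline-and-rescan file integrity monitoring fits in a few lines of Python. The file name and contents below are hypothetical. A real FIM tool would store the baseline on a separate, protected system and raise an alert rather than return a list.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, Iterable, List


def sha256_file(path: Path) -> str:
    """Hash a file in chunks so large binaries don't need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_baseline(paths: Iterable[Path]) -> Dict[str, str]:
    """Record known-good hashes; in practice the baseline is stored off-host."""
    return {str(p): sha256_file(p) for p in paths}


def scan(baseline: Dict[str, str]) -> List[str]:
    """Return files that changed or disappeared since the baseline was taken."""
    changed = []
    for name, expected in baseline.items():
        path = Path(name)
        if not path.exists() or sha256_file(path) != expected:
            changed.append(name)
    return changed


# Demo with a throwaway file standing in for a critical configuration file.
with tempfile.TemporaryDirectory() as tmp:
    cfg = Path(tmp) / "routing.conf"  # hypothetical file name
    cfg.write_text("outbound_queue=PAYMENTS.OUT\n")
    baseline = build_baseline([cfg])
    before = scan(baseline)           # nothing changed yet
    cfg.write_text("outbound_queue=ATTACKER.OUT\n")
    after = scan(baseline)            # the modification is detected
    cfg_name = str(cfg)
```

Keeping the baseline off the monitored host matters. An attacker who can edit both the file and its recorded hash defeats the check.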

Environment Hardening and Whitelisting: In secure zones like the SWIFT environment, application whitelisting is sometimes used. This means only executables with specific hashes or signatures are allowed to run; everything else is blocked. Cryptography (hashes) underlies this whitelisting. By maintaining a list of approved software hashes, the bank ensures that if malware somehow gets onto the server, it won’t match an approved hash and thus can’t execute. Similarly, critical configuration files can be digitally signed by the security team – if someone tries to alter a configuration (say, to redirect SWIFT messages to a fraudulent account), the signature would break and the system could detect it or refuse to load the altered config.
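At its core, hash-based application whitelisting is a default-deny membership test. Here is a minimal sketch using a hypothetical in-code allowlist. In practice the list would itself be signed and distributed by the security team, and enforcement happens in the operating system or EDR agent, not in Python.

```python
import hashlib
from typing import FrozenSet

# Hypothetical allowlist of SHA-256 digests for approved executables.
APPROVED: FrozenSet[str] = frozenset({
    hashlib.sha256(b"approved-binary-v1").hexdigest(),
    hashlib.sha256(b"approved-binary-v2").hexdigest(),
})


def may_execute(binary: bytes, approved: FrozenSet[str] = APPROVED) -> bool:
    """Default deny: run only binaries whose exact digest is on the allowlist."""
    return hashlib.sha256(binary).hexdigest() in approved


print(may_execute(b"approved-binary-v1"))            # True
print(may_execute(b"approved-binary-v1 + implant"))  # False
```

Because the check is on the exact digest, a patched or trojanized copy of an approved program is blocked just like unknown malware. Legitimate upgrades therefore require an updated allowlist.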

Secure Boot and Hardware Root of Trust: Modern servers and devices include secure boot capabilities. This uses cryptographic signatures and hashes at the firmware/bootloader level to ensure the operating system hasn’t been tampered with. For example, UEFI Secure Boot can be enabled such that the bootloader is signed by Microsoft or the organization, and it will only load an OS kernel that is properly signed. Network devices used in interbank connectivity (like routers, firewalls, VPN appliances) also often support secure boot and signed firmware. Central banks and large financial institutions are increasingly requiring that critical hardware in the payment chain (HSMs, switches, etc.) are from trusted sources with unbroken secure supply chains.
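Secure Boot checks signatures before handing over control. Its companion, measured boot, records a hash of each stage into a TPM Platform Configuration Register (PCR) using an extend operation, so the final value attests to the whole boot chain. The sketch below simulates the extend step with SHA-256. The component names are placeholders, and a real TPM performs this in hardware and keeps an event log alongside the PCR.

```python
import hashlib
from typing import Iterable


def extend(pcr: bytes, component: bytes) -> bytes:
    """TPM-style extend: PCR_new = SHA-256(PCR_old || SHA-256(component))."""
    return hashlib.sha256(pcr + hashlib.sha256(component).digest()).digest()


def measure_boot(components: Iterable[bytes]) -> bytes:
    """Replay a boot chain into a simulated SHA-256 PCR."""
    pcr = bytes(32)  # boot-time PCRs start at all zeros after a platform reset
    for component in components:
        pcr = extend(pcr, component)
    return pcr


# Placeholder images standing in for firmware, bootloader and kernel.
good_chain = [b"firmware-v2", b"bootloader-v5", b"kernel-6.1"]
golden = measure_boot(good_chain)  # recorded when the server is provisioned

tampered = [b"firmware-v2", b"bootloader-with-implant", b"kernel-6.1"]
print(measure_boot(good_chain) == golden)  # True
print(measure_boot(tampered) == golden)    # False
```

A PCR can only be extended, never set directly. Software running after a compromised stage therefore cannot forge the expected final value.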

Controlled Updates and Patches: The process of updating systems in a bank is tightly controlled. Cryptographic checksums or signatures are used when transferring software from a staging environment to production – for instance, a hash of the new SWIFT configuration might be compared to a hash computed by a second engineer, to ensure it wasn’t altered in transit or by a rogue admin. Some banks produce a “golden hash” for each release of an internal application, and after deployment the operations team confirms the binary’s hash matches the golden hash before activating the system. This procedure is essentially a manual verification using cryptography as the yardstick.

Third-Party Components: Banks depend on numerous third-party libraries and components (OpenSSL for TLS, etc.). They manage the risk by tracking versions and verifying signatures of those components as well. Dependency management tools often fetch libraries over TLS and verify digital signatures or published checksums from vendors.

In essence, ensuring software and code integrity is a meta-layer of cryptography ensuring that the cryptographic systems themselves haven’t been compromised. A compromised software update is one of the most effective ways to break all the cryptographic protections described in the previous sections. By using digital signatures, secure hashes, and trusted boot mechanisms, the institutions add yet another ring of defense. This way, from the moment code is authored to the time it runs on production servers or devices, there are cryptographic signatures and verifications at critical points confirming that what is running is exactly what was intended by the trusted sources.

8. Application-Layer Cryptography in Open Banking and APIs

An emerging layer in international payments is the use of open APIs and financial technology (fintech) integrations – for example, when corporates directly integrate with bank systems, or when banks offer services over APIs. These use standard web protocols, which are heavily reliant on classical cryptography.

HTTPS and REST APIs: Banks expose APIs for services like payment initiation and account information (under PSD2/Open Banking in Europe, for example). These APIs use HTTPS over TLS for encryption and server authentication, just as the user-facing website does. In many cases, banks require mutual TLS for partner API connections. The consuming client (perhaps a fintech app or a corporate treasury system) must present a client-side certificate issued by the bank or a trusted CA. This ensures the bank’s API only accepts connections from authenticated machines. For instance, under PSD2 a licensed third-party provider (such as an e-money fintech) typically identifies itself to a bank’s API with an eIDAS qualified website authentication certificate (QWAC). The certificate is checked, and the TLS connection is only established if it is valid, which prevents impostor services from calling the API.
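On the bank side, requiring client certificates is a matter of server TLS configuration. Here is a minimal Python sketch using the standard `ssl` module. The function names and file parameters are hypothetical. Reading the identity from `commonName` is a simplification, since eIDAS certificates identify the provider through dedicated subject fields such as the organization identifier.

```python
import ssl
from typing import Any, Dict, Optional


def api_server_context(cert_file: Optional[str] = None,
                       key_file: Optional[str] = None,
                       client_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that rejects clients without a trusted certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # handshake fails unless the client sends a valid cert
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # the bank's server cert
    if client_ca_file:
        ctx.load_verify_locations(cafile=client_ca_file)  # CAs allowed to issue client certs
    return ctx


def client_identity(peer_cert: Dict[str, Any]) -> str:
    """Pull the subject commonName out of SSLSocket.getpeercert() output."""
    for rdn in peer_cert.get("subject", ()):
        for key, value in rdn:
            if key == "commonName":
                return value
    raise ValueError("client certificate has no commonName")


ctx = api_server_context()
# Shape of getpeercert() output for a hypothetical client certificate.
sample_peer = {"subject": ((("countryName", "GB"),), (("commonName", "fintech.example"),))}
print(client_identity(sample_peer))  # fintech.example
```

Authentication happens during the handshake, before any HTTP request is parsed. The extracted identity can then drive authorization decisions in the application.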

OAuth 2.0 and JWTs: On top of TLS, API calls often use token-based authentication and authorization. OAuth 2.0 is widely used: the client obtains an access token, often formatted as a JWT (JSON Web Token). JWTs are a compact way to convey the client’s identity and scopes. They are cryptographically signed by the issuer (typically the bank’s authorization server) using a classical algorithm: RSA with PKCS#1 v1.5 or PSS padding, ECDSA, or sometimes HMAC with SHA-256 for symmetric signing. The signature lets the bank’s APIs verify that the token was issued by their trusted authorization server and has not been altered. For example, a corporate initiating a payment via API first obtains a JWT asserting that it is allowed to do so, and the JWT might be signed with the bank’s private key. The API receiving the payment instruction checks the JWT’s signature (using the corresponding public key) and its claims to ensure the token is valid. This mechanism again uses classical cryptography (public-key signatures and hashing) to provide integrity and authenticity at the application layer.
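The signed-token check described above can be sketched with only the Python standard library for the HS256 (HMAC-SHA256) variant. The key, claim values and scope name are hypothetical. Production code should use a maintained JWT library and, for bank-issued tokens, usually an asymmetric algorithm such as RS256, PS256 or ES256.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict


def b64url(data: bytes) -> str:
    """Base64url without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _hs256(key: bytes, signing_input: str) -> bytes:
    return hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_hs256(claims: Dict[str, Any], key: bytes) -> str:
    """Authorization-server side: header.payload.signature."""
    header = b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url(json.dumps(claims).encode())
    signing_input = f"{header}.{payload}"
    return f"{signing_input}.{b64url(_hs256(key, signing_input))}"


def verify_hs256(token: str, key: bytes, required_scope: str) -> Dict[str, Any]:
    """API side: check algorithm, signature, expiry and scope before trusting claims."""
    header_b64, payload_b64, sig_b64 = token.split(".")
    if json.loads(b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # never let the token pick its own alg
    expected = _hs256(key, f"{header_b64}.{payload_b64}")
    if not hmac.compare_digest(expected, b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload_b64))
    if claims.get("exp", 0) < time.time():
        raise ValueError("token expired")
    if required_scope not in claims.get("scope", "").split():
        raise ValueError("missing scope")
    return claims


KEY = b"hypothetical-auth-server-key"
token = issue_hs256({"sub": "corp-123", "scope": "payments:initiate",
                     "exp": int(time.time()) + 300}, KEY)
claims = verify_hs256(token, KEY, "payments:initiate")

# Swapping in a payload with a wider scope breaks the signature.
h, _, s = token.split(".")
fake_payload = b64url(json.dumps({"sub": "corp-123", "scope": "admin"}).encode())
forged = f"{h}.{fake_payload}.{s}"
try:
    verify_hs256(forged, KEY, "admin")
    forged_accepted = True
except ValueError:
    forged_accepted = False
```

The verifier pins the expected algorithm instead of trusting the token’s header. Accepting whatever `alg` the token declares is a well-known source of JWT vulnerabilities.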

Request Signing: Some API standards (especially in financial services) require each request to be signed. For instance, in certain Open Banking specs or in secure fintech API exchanges, the client will sign the HTTP request (often by computing a digest of the payload and signing that with its private key, including some headers). The server will verify this signature using the client’s public key. This provides non-repudiation for the API calls – the client cannot later claim “I didn’t send that payment order,” because the order was signed with its key. This is analogous to how SWIFT signs messages, but applied to RESTful APIs. It’s another example of layering cryptography: TLS already protects the channel, but signing the request adds an extra guarantee and binds the client’s identity to the specific content of the request.
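The key step in request signing is building a canonical string that covers the method, path, selected headers and a digest of the body. The sketch below is loosely modeled on HTTP signature schemes (RFC 9421 standardizes HTTP Message Signatures, with different component names). It uses HMAC-SHA256 only as a stand-in, because the standard library lacks asymmetric signatures. It therefore shows the mechanics but not the non-repudiation property, which needs the client’s private key. Header values and paths are hypothetical.

```python
import base64
import hashlib
import hmac
from typing import Dict


def content_digest(body: bytes) -> str:
    """Base64 SHA-256 of the body, so the signature covers the payload."""
    return "sha-256=" + base64.b64encode(hashlib.sha256(body).digest()).decode("ascii")


def signing_string(method: str, path: str, headers: Dict[str, str], body: bytes) -> str:
    """Canonical text covering the method, path, chosen headers and body digest."""
    lines = [f"(request-target): {method.lower()} {path}"]
    for name in ("host", "date"):
        lines.append(f"{name}: {headers[name]}")
    lines.append(f"digest: {content_digest(body)}")
    return "\n".join(lines)


def sign_request(method: str, path: str, headers: Dict[str, str],
                 body: bytes, key: bytes) -> str:
    # Stand-in primitive: HMAC-SHA256. For non-repudiation, a real scheme signs
    # this same string with the client's private key (for example RSA-PSS or ECDSA).
    data = signing_string(method, path, headers, body).encode("utf-8")
    return base64.b64encode(hmac.new(key, data, hashlib.sha256).digest()).decode("ascii")


def verify_request(method: str, path: str, headers: Dict[str, str], body: bytes,
                   signature: str, key: bytes) -> bool:
    expected = sign_request(method, path, headers, body, key)
    return hmac.compare_digest(expected, signature)


KEY = b"hypothetical-client-key"
hdrs = {"host": "api.bank.example", "date": "Tue, 07 Jan 2025 10:00:00 GMT"}
body = b'{"amount":"100.00","currency":"EUR"}'
sig = sign_request("POST", "/payments", hdrs, body, KEY)
```

Signing the path and date as well as the body stops an attacker from replaying a valid payload against a different endpoint, or at a much later time, without detection.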

Data Encryption and Privacy: Sometimes data fields in API payloads are additionally encrypted for privacy. For example, if an API call includes very sensitive personal data, the client might encrypt that field with the server’s public key (using RSA-OAEP or an ECIES scheme). Even if logs or intermediary systems saw the JSON payload, they could not read that field without the private key. This is less common in practice, since TLS already protects the data in transit, but certain regulations might encourage double encryption for specific data elements.

Mobile and Web Client Security: For fintech apps or web clients that initiate payments, the same principles of cryptographic protection apply as described in the customer section. These apps might use platform-provided secure storage for API credentials, and they ensure that all API communications run over TLS with certificate validation. Some banks employ DNSSEC and certificate pinning to prevent man-in-the-middle attacks on API endpoints. DNSSEC adds cryptographic verification at the DNS resolution stage, while pinning makes the client reject any server certificate other than the expected ones.
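Certificate pinning reduces to comparing a hash of the server’s certificate with a small set of expected values. Here is a minimal sketch that assumes pins over the whole DER certificate. Many deployments pin the public key instead, which survives certificate renewal. In a live connection the bytes would come from `getpeercert(binary_form=True)` on an `ssl.SSLSocket`, and the pin values here are hypothetical.

```python
import base64
import hashlib
import hmac
from typing import Iterable


def pin_of(der_cert: bytes) -> str:
    """Base64-encoded SHA-256 over the DER-encoded certificate."""
    return base64.b64encode(hashlib.sha256(der_cert).digest()).decode("ascii")


def pin_matches(der_cert: bytes, pins: Iterable[str]) -> bool:
    """Accept the connection only if the certificate hashes to a pinned value."""
    actual = pin_of(der_cert)
    return any(hmac.compare_digest(actual, pin) for pin in pins)


# Hypothetical stand-ins for DER bytes; a live client would use
# tls_socket.getpeercert(binary_form=True) after the handshake.
current_cert = b"current-api-certificate-der"
backup_cert = b"backup-api-certificate-der"
PINS = {pin_of(current_cert), pin_of(backup_cert)}  # keep a backup pin for rotation

print(pin_matches(current_cert, PINS))                 # True
print(pin_matches(b"attacker-certificate-der", PINS))  # False
```

Pinning adds a check on top of normal chain validation. A certificate mis-issued by any otherwise trusted CA is still rejected, because its hash is not on the list.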

Auditing and Monitoring: Every API call in the payments context is usually logged with timestamps and some form of cryptographic correlation. For example, a transaction initiated via API might be assigned a unique ID and included in a hash chain of daily transactions for audit. At the very least, the JWT or signature provides a cryptographic link to the client identity for that call, which is preserved in audit logs.

As financial services become more open and interconnected, they continue to rely on the bedrock protocols and algorithms that have secured traditional banking. TLS, RSA, ECC, AES, HMAC – these remain at the core, now often implemented in modern frameworks and libraries. The end result is that whether a payment is initiated through a user clicking “Transfer” in their banking app or through a corporate’s automated API call, the depth of cryptographic security is equivalent. Every request is encrypted in transit, authenticated by keys or certificates, checked for integrity, and processed by systems whose software and keys are tightly guarded.

Conclusion

From the moment a customer initiates an international payment to the final settlement in central bank records, an intricate stack of classical cryptography ensures the transaction’s security. We saw how TLS and IPsec protect data on the wire at every hop (user to bank, bank to SWIFT, SWIFT to bank, bank to central bank), using ciphers like AES to prevent eavesdropping. We examined how digital signatures and message authentication codes (MACs) verify identities and integrity. Examples include a user’s OTP proving they authorized the transfer, a bank’s SWIFTNet PKI signature proving a payment instruction is genuine, and a central system’s signed acknowledgment providing non-repudiation. We highlighted the pervasive role of HSMs and key management, which keep the secrets underpinning all these cryptographic operations safe from theft or misuse.

Crucially, these mechanisms do not stand alone; they are layered and mutually reinforcing. A SWIFT payment instruction, for example, benefits from network encryption, application-layer authentication, bilateral authorization (RMA), and independent logging, all at once. If one layer were to falter, the others maintain security, a design principle known as defense in depth. Moreover, the system relies on widely vetted classical algorithms (RSA, ECC, AES, 3DES, SHA) whose security is well understood. They are kept strong by using sufficiently large key sizes and rotating keys periodically (e.g. 2048+ bit RSA keys, 256-bit AES keys), while weaker legacy options such as 3DES are being phased out.

The global financial system has built a distributed trust fabric using these cryptographic tools. Each party (customer, bank, network, central institution) trusts the data it receives because it can verify it cryptographically and knows that similar verification occurred at the previous step. This trust is what allows trillions of dollars to flow daily across the world with confidence. As we move into an era of new technologies, the classical cryptography described here remains the foundation. In anticipation of future threats (like quantum computing), institutions and standards bodies are assessing and upgrading algorithms, but as of 2025 the described framework stands strong without PQC (post-quantum cryptography). It is a remarkable orchestra of cryptographic schemes working in concert, largely invisible to users, but critical to modern international finance.

The following table summarizes the cryptographic components across the system’s layers and parties for an international payment:

Cryptographic Components Across System Layers and Parties

| Security Aspect | Customer / End-User | Commercial Bank (Sending/Receiving) | SWIFT Network | Central Bank / RTGS |
|---|---|---|---|---|
| Authentication & Identity | – User login with password (hashed and salted) – Multi-factor authentication (OTP tokens using HMAC-based or time-based codes) – Device identity via client certificates or app secrets on mobile | – Staff/admin login with MFA (hardware token or smartcard) – Internal user roles enforced by RBAC (least privilege) – Service accounts with keys or certificates for inter-service authentication | – Institution identity via SWIFT PKI certificate (BIC-based DN) – SWIFTNet mutual authentication of gateways (client/server certificates) – RMA authorization controlling counterparty access | – Bank identity via participant certificate or directory entry – Operator login via token or certificate (for manual operations) – Role separation (dealer vs. approver) with dual controls |
| Communication Encryption (in transit) | – TLS 1.2/1.3 on the user’s connection (HTTPS) – Mobile app uses TLS (often with a pinned certificate) and may add an extra encryption layer for specific sensitive fields | – Internal network encryption: TLS on APIs and message queues, IPsec VPNs between data centers – Encrypted backup links and DR replication (usually via VPN/TLS) – Bank-to-SWIFT channel encrypted (TLS over SWIFTNet Link) | – All SWIFT traffic encrypted in transit (SWIFTNet VPN over IP, using TLS/IPsec) – Optional end-to-end payload encryption (InterAct, FileAct) for user data – Secure network isolated from the public Internet (access via Alliance Gateway) | – Bank-to-RTGS communications encrypted (TLS over VPN or leased line) – Internal RTGS components communicate over secured channels – Data replication between primary and backup sites encrypted (often hardware encryption) |
| Data Encryption (at rest) | – Device storage encrypted (full-disk on phone/PC; secure enclave for keys) – Sensitive app data (credentials, tokens) stored encrypted in the OS keystore | – Core databases encrypted (tablespace or column encryption with AES-256) – File servers and disk arrays encrypted (self-encrypting drives or software FDE) – Backups encrypted (keys in HSM or key vault) – Tokenization of highly sensitive data (card numbers, etc.) to remove live PANs | – SWIFT data centers protect stored messages; DB and file encryption likely (not publicly detailed, but assumed best practice) – SWIFTNet PKI private keys stored in HSMs, never on disk | – RTGS database encryption for transaction records and account balances – Logging servers and data warehouses encrypted – Some systems may tokenize personal data (e.g. payment reference info) for privacy |
| Message Integrity & Authentication | – Transaction details shown for user confirmation (out-of-band verification for integrity) – OTP or digital signing of the transaction by the user binds their approval to the instruction – Mobile apps may sign critical requests with a device key | – Internal messages (between modules) often protected by HMAC or carried inside TLS, which covers integrity – Transactions inside core systems identified by secure IDs to prevent duplication – Audit trails linked to transaction IDs to detect any mismatch | – All messages authenticated by the sender’s SWIFT keys (digital signature or legacy MAC) – SWIFTNet PKI ensures integrity: any change in transit breaks the signature and the message is rejected – Sequence numbers and acknowledgements (ACK/NACK) used to detect missing or altered messages | – RTGS input messages validated end-to-end (via SWIFT PKI if sent over SWIFT; otherwise by the RTGS’s own authentication) – RTGS may require message signatures from participants – Strict format validation and duplicate detection to prevent manipulation |
| Non-Repudiation & Audit | – User receives a confirmation (e-receipt or notification), possibly with a transaction ID or a digital signature from the bank as proof – In a dispute, the user’s OTP or digital consent is evidence of their authorization | – Detailed audit logs of all payment actions (who, when, what) – Logs time-stamped (synchronized clocks) and often sent to a secure SIEM system – Some banks use cryptographically sealed logs (hash chains or signed daily digests) – Dual control and maker-checker processes provide procedural non-repudiation (two people saw it) | – SWIFT provides non-repudiation of emission and receipt (e.g., signed ACKs, copies of messages) – Message origination is bound to certificate identity, so the sender cannot deny it – SWIFT retains message history for a period; audit trails can be retrieved under controlled conditions | – RTGS generates irrevocable settlement records (once settled, a payment cannot be repudiated) – Central bank logs every instruction and its source (e.g. BIC, terminal ID) with timestamps – End-of-day or real-time logs may be signed or archived in tamper-proof systems for forensics – Some RTGS (e.g. newer systems) issue digitally signed settlement confirmations to participants |
| Key Management & HSMs | – A hardware token is a form of mini-HSM for OTP (the key inside is not exportable) – Mobile apps use the OS keystore (often hardware-backed, e.g. a secure enclave) for sensitive keys (e.g. client-certificate private key or token encryption key) | – Bank uses HSMs for: • SWIFT PKI private keys (signing and encryption keys) • Keys for card PIN encryption and verification (3DES/AES) • Master keys for database encryption (stored and used in HSM) • CA keys if the bank runs an internal PKI • Code-signing keys (if signing internal software) – Key rotation and lifecycle managed by a dedicated team (with split knowledge for master keys) – Strict access: admins cannot extract keys from HSMs; operations require a quorum for key ceremonies | – SWIFT’s CA and sub-CA keys reside in HSMs (FIPS 140-2 Level 3) – SWIFT issues HSM tokens/boxes to customers for their keys – Session keys for encryption are negotiated per connection (e.g. TLS keys) and discarded after use – SWIFT manages CRLs and key revocation; keys have expiring certificates that force renewal (e.g. every 2 years) | – Central bank likely uses HSMs for its critical keys: • Keys for any PKI or TLS termination of the RTGS • Signing keys for system messages or a time-stamp authority • HSM-protected database encryption keys – Master keys for RTGS (if any offline processing, such as encrypting stored data or backup tapes) guarded by multi-person control – If the RTGS runs its own DNS or other infrastructure, DNSSEC keys may also be kept in HSMs (to prevent hijacking of communications) |
| Software Integrity & Updates | – User app from official store (digitally signed by the developer and vetted); app verified on install – Desktop users rely on browser security updates (signed by vendors) | – Strict change management: signatures/hashes of software checked before production – OS patches verified (signed by Microsoft/Linux vendor) and applied in controlled windows – SWIFT software updates are signed by SWIFT; banks verify checksums – Application whitelisting on SWIFT systems (only executables with known hashes can run) – Antivirus/EDR with whitelisted cryptographic hashes to prevent code injection | – SWIFTNet and interface software undergo regular updates; SWIFT distributes these securely to banks (via a secured portal) with digital signatures to ensure authenticity – SWIFT’s own infrastructure software/firmware is maintained under change control and likely code-signed (details not public, but SWIFT follows high-assurance processes) | – Central bank RTGS software updates (often from major vendors or in-house) are PGP-signed or otherwise digitally signed – Any change to RTGS parameters (like cutoff times or configuration) is made via a secure, authenticated interface by authorized personnel – Backup images are hashed and stored, so running code can be compared and confirmed unaltered – Penetration tests and audits use hash/signature tools to verify there are no unexpected binaries on the system |

Table 1: Summary of cryptographic mechanisms at different layers: customer, bank, SWIFT network, and central RTGS. These mechanisms collectively secure a global transaction’s confidentiality, integrity, authenticity, and non-repudiation across all parties.
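The Key Management row mentions split knowledge for master keys and quorum-controlled key ceremonies. A common way to enforce split knowledge is to load a master key as several components, each held by a different custodian, with the key formed by XOR-ing the components together inside the HSM. The Python sketch below shows only that arithmetic, assuming a simple all-components-required (n-of-n) XOR scheme; the function names are hypothetical, and in a real ceremony the components are entered into the HSM so the combined key never exists in host memory.

```python
import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("components must have the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def split_key(key: bytes, custodians: int) -> list[bytes]:
    """Split `key` into `custodians` components; ALL of them are needed to rebuild it."""
    if custodians < 2:
        raise ValueError("split knowledge needs at least two custodians")
    # The first n-1 components are uniformly random and reveal nothing about the key.
    components = [secrets.token_bytes(len(key)) for _ in range(custodians - 1)]
    # The last component is key XOR (all random components), so XOR of all n equals the key.
    components.append(reduce(xor_bytes, components, key))
    return components


def combine_components(components: list[bytes]) -> bytes:
    """Recombine components (illustration only: a real HSM does this inside its boundary)."""
    if len(components) < 2:
        raise ValueError("need at least two components")
    return reduce(xor_bytes, components)


if __name__ == "__main__":
    master_key = secrets.token_bytes(32)  # demo 256-bit key, generated locally for illustration
    parts = split_key(master_key, custodians=3)
    assert combine_components(parts) == master_key
    # Any strict subset of components yields an unrelated random value, not the key.
    assert combine_components(parts[:2]) != master_key
    print("recombined OK")
```

Plain XOR splitting needs every component. Where a ceremony calls for an m-of-n quorum (any three of five smart cards, say), a threshold scheme such as Shamir secret sharing is the usual choice instead.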

Image 1: One-slide summary of interbank payments’ cryptographic complexity.
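Several cells in the Software Integrity row of Table 1 rest on the same primitive: hash a binary and compare the digest with a list of known-good values. Examples are application whitelisting on SWIFT systems and comparing running code against hashed images. A minimal Python sketch of that check follows, assuming SHA-256 and an allowlist held as a set of hex digests. The function and file names are hypothetical. Production allowlisting is enforced by the OS or EDR agent at execution time, not by a script like this.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_allowlist(known_good: list[Path]) -> set[str]:
    """Hash approved binaries (e.g., taken from a signed release) into an allowlist."""
    return {sha256_file(p) for p in known_good}


def is_allowed(path: Path, allowlist: set[str]) -> bool:
    """True only if the file's current digest matches an approved one."""
    return sha256_file(path) in allowlist


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        binary = Path(tmp) / "interface_tool.bin"  # hypothetical file name
        binary.write_bytes(b"approved build")
        allowlist = build_allowlist([binary])
        print(is_allowed(binary, allowlist))  # True
        binary.write_bytes(b"approved build + injected code")
        print(is_allowed(binary, allowlist))  # False
```

A hash allowlist only proves that a file is unchanged since it was approved. The signature check at approval time, by the vendor or SWIFT, is what establishes that the approved file was authentic in the first place.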

Each layer of the payment process implements rigorous security controls using classical cryptography, creating an overlapping and robust defense. This holistic approach is what allows international interbank payments to move swiftly (pun intended) while maintaining trust and security at every step.

This walkthrough is not exhaustive. It couldn’t be. Even in a single cross‑border payment, the cryptography you can see – TLS handshakes, SWIFT PKI, HSM‑backed signing, message authentication, tokenization, encrypted databases – is only the visible tip. Far more cryptography runs beneath and alongside it:

- Device trust anchors and firmware: TPMs/secure enclaves, UEFI/secure boot, measured boot, firmware and driver signing, OS kernel module signing.

- Operating system and virtualization: disk and memory encryption, credential guards, hypervisor hardening and confidential computing (e.g., AMD SEV, Intel TDX), code integrity policies, application whitelisting.

- Container and cloud fabric: image signing (e.g., Sigstore/cosign), admission controls, service‑mesh mTLS (SPIFFE/SPIRE), K8s secrets encryption with KMS, envelope encryption for app secrets, cloud KMS/HSM tiers.

- Network layers below and beside TLS: IPsec and MACsec, BGP session protection (TCP‑AO), DNSSEC for name integrity, NTS for authenticated time, load‑balancer and proxy TLS termination, DDoS/WAF front doors.

- Messaging, data, and storage plumbing: MQ/Kafka/Pulsar with TLS and SASL, database TDE and field‑level encryption, object‑store SSE‑KMS, backup/snapshot/archive encryption, hash‑chained or signed logs, tamper‑evident archives.

- Identity and access plane: SAML/OIDC, OAuth2/JWT signing, FIDO2/WebAuthn, client certs and mTLS, CRLs/OCSP, key rotation policies, just‑in‑time credentials from vaults.

- Payments adjacencies: EMV and card/PIN cryptography, POS and ATM key hierarchies, P2PE devices, HSM‑mediated key ceremonies across schemes and acquirers.

- Operational infrastructure: out‑of‑band management (BMC) secured channels, code‑signing for internal software, patch/package signatures, supply‑chain provenance checks, digital timestamping/notarization for evidence.

- Third parties and edges: fintech APIs, correspondent gateways, anti‑fraud and AML pipelines, observability stacks – each with their own keys, certs, and ciphers.
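One item in the list above, hash-chained logs, is simple enough to show directly. Each entry’s hash covers both its own payload and the previous entry’s hash, so altering, removing or reordering an earlier record breaks every link after it. The Python sketch below assumes SHA-256 over canonical JSON. The payload fields (`event`, `amount`) are hypothetical placeholders, not a real message format.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_HASH = "0" * 64  # conventional all-zero predecessor for the first entry


@dataclass(frozen=True)
class LogEntry:
    payload: dict
    prev_hash: str
    entry_hash: str


def entry_digest(payload: dict, prev_hash: str) -> str:
    """SHA-256 over canonical JSON of (previous hash, payload)."""
    canonical = json.dumps(
        {"prev": prev_hash, "payload": payload},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append_entry(log: list[LogEntry], payload: dict) -> LogEntry:
    """Append a new entry chained to the current tail of the log."""
    prev_hash = log[-1].entry_hash if log else GENESIS_HASH
    entry = LogEntry(payload, prev_hash, entry_digest(payload, prev_hash))
    log.append(entry)
    return entry


def verify_chain(log: list[LogEntry]) -> bool:
    """Recompute every link; an edited, removed or reordered entry breaks the chain."""
    expected_prev = GENESIS_HASH
    for entry in log:
        if entry.prev_hash != expected_prev:
            return False
        if entry.entry_hash != entry_digest(entry.payload, entry.prev_hash):
            return False
        expected_prev = entry.entry_hash
    return True


if __name__ == "__main__":
    audit_log: list[LogEntry] = []
    append_entry(audit_log, {"event": "payment_instruction", "amount": "1000.00"})
    append_entry(audit_log, {"event": "settlement_confirmed"})
    print(verify_chain(audit_log))  # True
    audit_log[0].payload["amount"] = "9000.00"  # tamper with a recorded payment
    print(verify_chain(audit_log))  # False
```

A bare chain proves only internal consistency. Anyone who can rewrite the whole log, or quietly truncate its tail, can recompute every hash. Production systems therefore anchor the latest hash externally, for example by signing it with an HSM-held key or submitting it for digital timestamping.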

Here, I deliberately teased out only the cryptography directly involved in the payment use case – the pieces a payment instruction actually touches as it travels from customer to bank, across SWIFT/correspondents, and into RTGS. But that path runs on top of thousands of other cryptographic systems in the underlying infrastructure. Each adds trust, but also surface area: more keys to inventory, more lifecycles to manage, more policies to enforce, more ways for drift and silent failure to accumulate.

Three practical takeaways:

- Treat cryptography as critical infrastructure, not a library call. Maintain a living cryptographic inventory and CBOM (crypto bill of materials): where keys live, who owns them, which algorithms/modes/lengths are in use, how rotation and revocation happen, which HSMs and KMSs guard what.

- Engineer for failure and change. Assume a layer can degrade without alarms. Build defense‑in‑depth with explicit ownership at each boundary (customer, bank, network, settlement). Automate checks for weak/legacy crypto, enforce policy as code, rotate and test keys regularly, and rehearse key compromise/failover runbooks.

- Continuously discover the “hidden crypto.” Scan dependencies, appliances, and platforms for embedded ciphers and ad‑hoc keys (proprietary modules, vendor appliances, observability tooling). Log and prove cryptographic control‑plane integrity: who issued what cert, which tokens were used, which signatures verified.
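The takeaways above (a living inventory/CBOM, automated checks for weak or legacy crypto, policy as code) fit together naturally. Once each cryptographic asset is recorded with its owner, algorithm, key length, location and rotation period, the policy becomes a function you can run on every change. The Python sketch below assumes a deliberately simplified record. The field names, the `DISALLOWED_ALGORITHMS` set and the numeric limits are hypothetical illustrations, not a recommendation or a standard CBOM format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CryptoAsset:
    """One simplified inventory (CBOM-style) record; fields are illustrative."""
    name: str
    owner: str                    # accountable team; empty string means unowned
    algorithm: str                # e.g. "RSA", "AES", "3DES", "SHA-1"
    key_bits: Optional[int]       # None if not applicable or unknown
    location: str                 # e.g. "payments HSM", "app config file"
    rotation_days: Optional[int]  # None if no rotation period is defined


# Hypothetical policy values: examples only, not a recommendation.
DISALLOWED_ALGORITHMS = {"DES", "3DES", "RC4", "MD5", "SHA-1"}
MIN_KEY_BITS = {"RSA": 2048, "AES": 128, "ECDSA": 256}
MAX_ROTATION_DAYS = 365


def check_asset(asset: CryptoAsset) -> list[str]:
    """Return policy findings for one asset (an empty list means compliant)."""
    findings = []
    if not asset.owner:
        findings.append(f"{asset.name}: no accountable owner")
    if asset.algorithm in DISALLOWED_ALGORITHMS:
        findings.append(f"{asset.name}: legacy algorithm {asset.algorithm}")
    minimum = MIN_KEY_BITS.get(asset.algorithm)
    if minimum is not None and (asset.key_bits is None or asset.key_bits < minimum):
        findings.append(f"{asset.name}: key length {asset.key_bits} below {minimum}")
    if asset.rotation_days is None or asset.rotation_days > MAX_ROTATION_DAYS:
        findings.append(f"{asset.name}: rotation period undefined or too long")
    return findings


def audit(inventory: list[CryptoAsset]) -> list[str]:
    """Run the policy over the whole inventory, e.g. as a CI step or scheduled job."""
    return [finding for asset in inventory for finding in check_asset(asset)]


if __name__ == "__main__":
    inventory = [  # hypothetical assets
        CryptoAsset("db-master-key", "dba-team", "AES", 256, "HSM cluster", 365),
        CryptoAsset("legacy-pin-key", "cards-team", "3DES", 168, "payments HSM", 730),
        CryptoAsset("partner-api-cert", "", "RSA", 1024, "API gateway", None),
    ]
    for finding in audit(inventory):
        print(finding)
```

Failing a pipeline whenever `audit()` returns findings is one way to make “policy as code” concrete. Real CBOM formats, such as the cryptographic-asset support in CycloneDX, record far more detail, including modes, protocols and certificate chains.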

This series aims to make the invisible visible – not to overwhelm, but to create the shared map you need for governance and resilience. If all you take away is that a single payment crosses many distinct trust anchors (and that each has a separate owner, policy, and failure mode), that’s enough to justify disciplined discovery and continuous assurance.

Quantum Upside & Quantum Risk - Handled

My company, Applied Quantum, helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.