Microsoft Announces Record Breaking Logical Qubit Results

Microsoft and Quantinuum have announced a major quantum computing breakthrough: the creation of the most reliable logical qubits on record, with error rates 800 times lower than those of physical qubits. In an achievement unveiled on April 3, 2024, the teams reported running over 14,000 quantum circuit trials without a single uncorrected error. This accomplishment was made possible by combining Microsoft’s innovative qubit virtualization and error-correction software with Quantinuum’s high-fidelity ion-trap hardware. The result is a dramatic leap in quantum reliability that many experts believed was still years away.

Beyond setting a record, this milestone is significant for the entire quantum ecosystem. It effectively pushes quantum computing beyond the noisy intermediate-scale quantum (NISQ) era into what Microsoft calls “Level 2 Resilient” quantum computing. In other words, the experiment showed that error-corrected qubits can outperform the underlying physical qubits by a wide margin, marking an essential step toward full fault-tolerant quantum computing (FTQC). Achieving such a low error rate for logical qubits is viewed as a critical turning point on the road to practical quantum machines. It suggests that the long-anticipated transition from today’s error-prone quantum processors to tomorrow’s robust, application-ready quantum computers is finally underway.

This breakthrough paves the way for more complex quantum computations and even hybrid supercomputers that integrate quantum processors with classical high-performance computing and AI. The news underscores a new phase in the quantum computing race, injecting fresh optimism that real-world problems solvable only by quantum means may come within reach sooner than expected.

Understanding Logical Qubits and Error Correction

Logical qubits are an essential concept for scaling quantum computers beyond the fragile performance of individual physical qubits. A logical qubit isn’t a single physical unit, but rather a virtual qubit encoded across multiple physical qubits so that errors can be detected and corrected on the fly. By entangling a group of physical qubits and spreading quantum information among them, a logical qubit stores that information redundantly. This is not a set of literal copies, which the no-cloning theorem forbids, but a shared encoding in which no single qubit holds the data on its own. This redundancy means that if one of the underlying physical qubits experiences an error (as quantum bits often do), the error can be identified and corrected using the others, rather than corrupting the overall computation. In simple terms, a logical qubit is to quantum computing what RAID is to hard drives or what error-correcting codes are to classical data: a way to use extra resources to guard against failures.
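The RAID analogy can be made concrete with the simplest classical code: three copies of a bit, decoded by majority vote. The sketch below is illustrative only. It assumes independent bit flips with probability p and ignores phase errors and the fact that quantum states cannot be copied, so it is not the code used by Microsoft and Quantinuum. It does show the core effect: at p = 1%, the encoded bit fails only about 0.03% of the time, because two flips must coincide.

```python
from itertools import product

def majority_vote_failure(p: float) -> float:
    """Closed form: a 3-copy repetition code decoded by majority vote fails
    when 2 or 3 copies flip, each independently with probability p."""
    return 3 * p**2 * (1 - p) + p**3

def majority_vote_failure_enumerated(p: float) -> float:
    """Same quantity, obtained by summing over all 8 possible flip patterns."""
    total = 0.0
    for flips in product((0, 1), repeat=3):
        k = sum(flips)  # number of flipped copies in this pattern
        if k >= 2:      # majority corrupted -> decoded value is wrong
            total += p**k * (1 - p) ** (3 - k)
    return total

for p in (0.01, 0.001):
    print(f"physical error {p:g} -> encoded error {majority_vote_failure(p):.2e}")
```

The enumerated version exists only as a cross-check on the closed form; both treat the three copies as independent.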

Why are logical qubits so crucial for large-scale, fault-tolerant quantum computing? The reason is that today’s physical qubits (the raw qubits in a device) are extremely “noisy”: they are prone to flip between 0 and 1 or lose their quantum state due to even tiny disturbances. In fact, error rates for operations on physical qubits in current machines are typically around 0.1% to 1%, i.e. between roughly 1 error per 1,000 and 1 error per 100 operations. At such rates, trying to run a long algorithm with thousands of operations or more becomes hopeless: errors accumulate and overwhelm the computation long before it finishes. This is the dilemma of the NISQ era: adding more qubits alone doesn’t help if each qubit is highly error-prone. As Microsoft notes, simply scaling up the count of noisy physical qubits “is futile” without improving error rates. Logical qubits solve this by trading quantity for quality: they bundle many imperfect qubits together in a smart way to behave as one very reliable qubit. Using reliable logical qubits is the only known path to performing long, complex computations on a quantum computer, in other words, to achieving the holy grail of a fault-tolerant quantum machine.
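A quick way to see why error rates near 1% make long algorithms hopeless is to assume each operation fails independently with probability p, so a run of N operations succeeds with probability (1 − p)^N. Independence is a simplifying assumption (real noise can be correlated), but it captures the trend. At p = 1%, about 37% of 100-operation runs finish cleanly, and fewer than 0.01% of 1,000-operation runs do.

```python
def success_probability(p: float, n_ops: int) -> float:
    """Chance that n_ops operations all succeed if each fails independently
    with probability p (independence is a simplifying assumption)."""
    return (1 - p) ** n_ops

for n_ops in (100, 1_000, 10_000):
    print(f"p = 1%, {n_ops:>6} operations: P(no error) = {success_probability(0.01, n_ops):.2e}")
```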

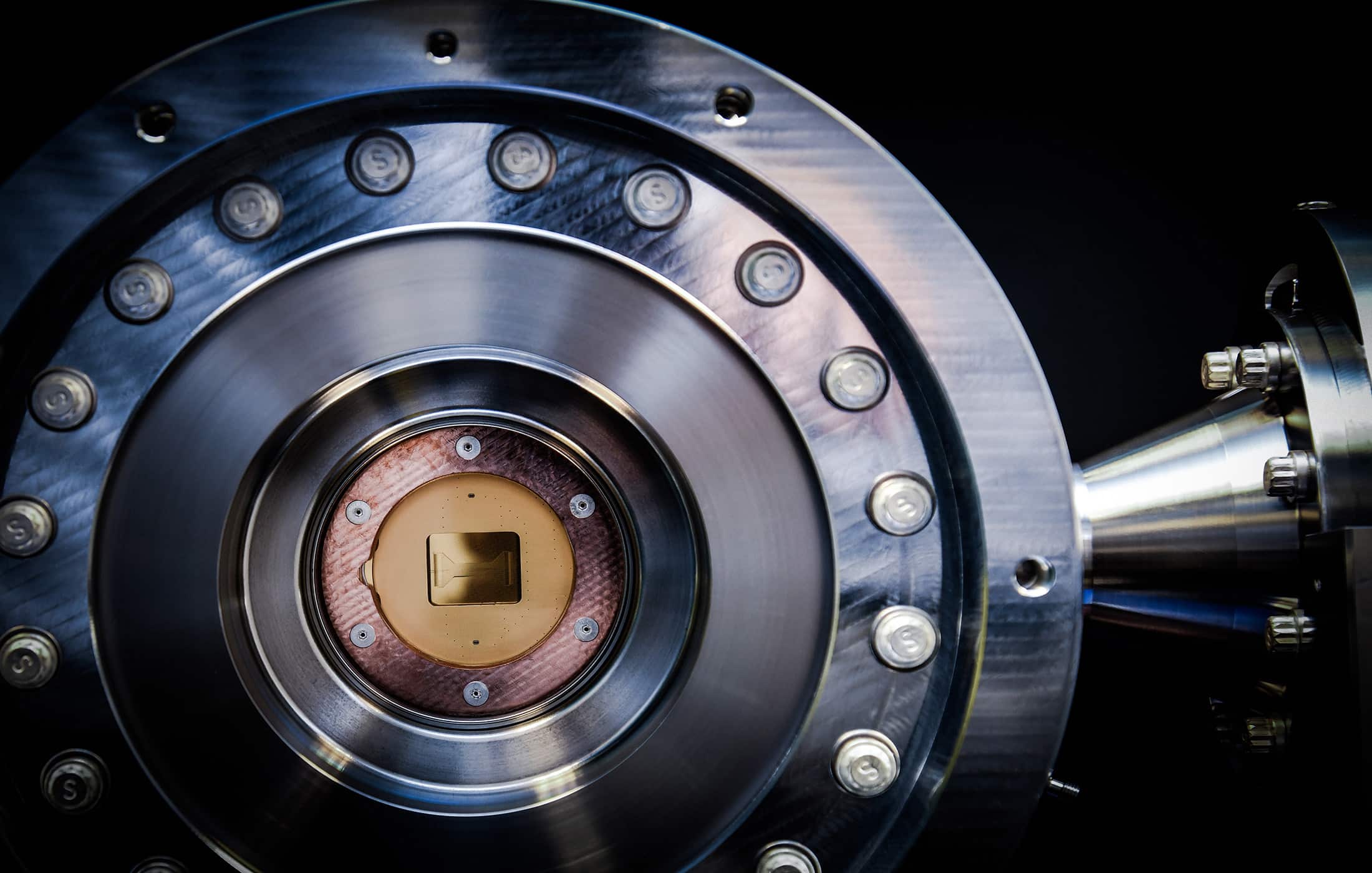

In this breakthrough, Microsoft contributed a qubit virtualization system – essentially a sophisticated software and control framework that orchestrates multiple physical qubits as one logical qubit. This system provides active error diagnostics and correction capabilities running in real-time on the quantum hardware. Quantinuum provided the hardware platform: the H2 ion-trap quantum processor, which offers unique advantages for error correction. The H2 machine has 32 qubits that are fully connected (any qubit can interact with any other), and it boasts a two-qubit gate fidelity of 99.8%, among the highest in the industry. These features are important because many error-correction codes require frequent multi-qubit operations and measurements; having high-fidelity gates and flexible connectivity greatly reduces the overhead of implementing a logical qubit. In fact, the close integration of Microsoft’s software with Quantinuum’s hardware allowed the team to dramatically reduce the resources needed for error correction. Initially, they estimated around 300 physical qubits would be required to create a few logical qubits, but by optimizing the scheme they achieved 4 logical qubits using only 30 physical qubits on H2. This 10× compression of the code – made possible by the H2’s design and Microsoft’s algorithms – challenges the long-held assumption that a useful logical qubit would need hundreds or thousands of physical qubits. It’s a prime example of hardware-software co-design: tailoring the error-correction approach to the strengths of the hardware resulted in a far more efficient implementation than expected.

However, it’s important to note that quantum error correction is famously challenging and has been a primary bottleneck in scaling quantum computers. The fundamental obstacle is the “threshold” problem: if your physical qubits are too error-prone, adding error correction will actually make things worse, not better. Every error-correcting operation can itself introduce new errors (a case of the cure being as bad as the disease), so on poor hardware, more correction simply means more errors. Only when the physical error rate falls below a certain threshold can an error-correcting code produce a net improvement in reliability. Hitting this threshold has required years of improvements in qubit quality. This is why quantum error correction has been a bottleneck: it demands an extremely low base error rate and a large number of spare qubits, both of which have only become available in the latest devices. Microsoft and Quantinuum’s experiment is strong evidence that this threshold has now been crossed on an actual system. By meeting the strict requirements for error correction (high fidelity, enough qubits, fast control), they demonstrated that the logical qubits’ error rate can be drastically lower than the physical qubits’ error rate, in this case by a factor of 800. It’s a clear validation that error correction can work in practice given sufficient qubit quality. This success eases some skepticism in the field and suggests that we can begin to scale up quantum processors in a fault-tolerant way without needing millions of qubits upfront.
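Threshold behavior is often summarized by a rough scaling law in which the logical error rate of a distance-d code behaves like a·(p/p_th)^((d+1)/2). The sketch below uses an assumed threshold p_th = 1% and an assumed prefactor a = 0.03 purely for illustration; neither value comes from the Microsoft–Quantinuum experiment. Below the threshold, enlarging the code suppresses errors. Above it, the same enlargement makes things worse, which is the “cure worse than the disease” regime.

```python
def heuristic_logical_error(p: float, d: int, p_th: float = 0.01, a: float = 0.03) -> float:
    """Rough scaling law for a distance-d code: p_L ~ a * (p / p_th) ** ((d + 1) / 2).
    p_th and a are illustrative assumptions, not measured values."""
    return a * (p / p_th) ** ((d + 1) / 2)

DISTANCES = (3, 5, 7)
for p in (0.002, 0.015):  # one physical error rate below the assumed threshold, one above
    rates = [heuristic_logical_error(p, d) for d in DISTANCES]
    verdict = "helps" if rates[-1] < rates[0] else "hurts"
    detail = ", ".join(f"d={d}: {r:.1e}" for d, r in zip(DISTANCES, rates))
    print(f"p = {p}: {detail} -> a bigger code {verdict}")
```

The formula is only a qualitative guide near and below threshold; real codes and noise models shift both constants.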

Mathematical and Technical Explanation

Achieving such a dramatic error reduction relied on advanced quantum error-correction techniques. At the heart of Microsoft’s approach is a method called “active syndrome extraction”, which is essentially the quantum equivalent of real-time error checking. In a logical qubit, you cannot simply copy the data qubits (the no-cloning theorem forbids it) or measure them directly to see whether an error occurred, because measurement would collapse their quantum state. Instead, the system uses additional (ancilla) qubits and clever circuit constructions to detect errors indirectly. These extra qubits periodically gather syndrome information, which acts like a red flag indicating that an error happened, without revealing the actual quantum data. The syndrome is then read out (a measurement in the middle of the computation) and interpreted by the control system. If an error is detected, a corresponding correction (such as a bit-flip or phase-flip on the affected qubit) can be applied, all while the logical quantum information remains intact and unobserved. This process can be repeated in cycles to continually suppress errors as they occur. In the Microsoft–Quantinuum experiment, the team successfully performed multiple rounds of active syndrome extraction on their logical qubits, diagnosing and correcting errors without destroying the encoded information. This capability is essential for running long algorithms: it is how a quantum computation can keep going for millions of operations by constantly catching and fixing glitches along the way.
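The idea of learning about errors without reading the data can be illustrated with the 3-qubit bit-flip code, modeled classically here as a simplifying assumption. Its two parity checks (bits 0 and 1, bits 1 and 2) produce a syndrome that identifies which bit flipped, and that syndrome is identical whether the encoded value is 0 or 1. In hardware, such parities would be collected by ancilla qubits and read out mid-circuit. This sketch handles only bit flips and is not the scheme used in the experiment.

```python
# Parity checks of the 3-qubit bit-flip code (classical model): bits 0&1 and bits 1&2.
CHECKS = ((0, 1), (1, 2))

# Syndrome -> index of the bit to flip back (None means no correction needed).
LOOKUP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def syndrome(bits: tuple) -> tuple:
    """Parities of the checked pairs. They depend on which bit flipped,
    not on whether the encoded value is 000 or 111."""
    return tuple(bits[i] ^ bits[j] for i, j in CHECKS)

def correct(bits: tuple) -> tuple:
    """Look up the syndrome and undo the single most likely bit flip."""
    fix = LOOKUP[syndrome(bits)]
    out = list(bits)
    if fix is not None:
        out[fix] ^= 1
    return tuple(out)

for value in (0, 1):
    codeword = (value,) * 3
    for q in range(3):  # inject one bit flip on each position in turn
        noisy = tuple(b ^ int(k == q) for k, b in enumerate(codeword))
        assert correct(noisy) == codeword
        print(f"encoded {value}, flip on bit {q}: syndrome {syndrome(noisy)}")
```

The printed syndromes repeat across the two encoded values, which is the point: the checks reveal the error location, not the data.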

By using such error-correction protocols, the researchers managed to create logical qubits of unprecedented fidelity and put them through stringent tests. One key benchmark was an entangled logical qubit experiment. They prepared pairs of logical qubits in a special entangled state and measured them in various ways to see whether any discrepancies appeared, essentially checking whether the entanglement held up perfectly or errors crept in. After repeating this procedure 14,000 times, they observed zero disagreements in the measurement outcomes between the entangled partners. In other words, every error that did occur was detected and corrected before it could show up in the final results. Zero failures in 14,000 runs does not by itself pin down the error rate, but it is consistent with the quoted logical error rate of roughly 1 in 100,000 (0.001%). For comparison, physical circuits on the same hardware had error probabilities just under 1% per operation. That gap, physical error ~1e-2 versus logical error ~1e-5, corresponds to the roughly 800× improvement being celebrated (the exact ratio depends on the precise physical rate). This goes well beyond merely crossing the error-correction threshold; it is well into the territory where genuinely useful quantum computations become conceivable. As the team noted, they have now achieved a “large separation between logical and physical error rates,” and they also demonstrated that they could correct every individual circuit error that occurred, as well as entangle multiple logical qubits together, meeting all three criteria for entering the realm of reliable quantum computing.
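To see why zero disagreements in 14,000 runs is consistent with a rate near 1 in 100,000, one can compute the chance of observing no failures at all for several per-run error rates. The sketch assumes independent runs with a fixed failure probability, which is a simplification. At 1e-5 the expected number of failures is only about 0.14, so a clean sweep is the most likely outcome (roughly 87%). At physical-like rates near 1e-2 it is essentially impossible.

```python
def prob_zero_failures(p: float, runs: int) -> float:
    """Probability that `runs` independent trials all succeed when each
    trial fails with probability p."""
    return (1 - p) ** runs

RUNS = 14_000  # number of entangled-pair trials quoted in the text
for p in (1e-2, 1e-3, 1e-4, 1e-5):
    print(f"per-run error {p:.0e}: expected failures {p * RUNS:8.2f}, "
          f"P(zero failures) = {prob_zero_failures(p, RUNS):.2g}")
```

Note that a rate of 1e-4 would still produce zero failures about a quarter of the time, which is why such a result bounds the error rate rather than measuring it precisely.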

Measuring error rates in a quantum computer is tricky, but the basic idea is to run many trials of a known circuit and see how often the outcome is wrong. In the logical qubit tests, a “wrong” outcome would be a case where an entangled pair’s measurements disagree when they should be the same. Getting zero such cases out of 14,000 trials gives a statistical upper bound on the error rate (and strong evidence that the true error rate is extremely low). The significance of an 800× improvement is profound. It means a logical qubit can run roughly 800 times as many operations as a physical qubit before an error slips through: a physical qubit with a ~1% error rate fails on average after about 100 operations, while a logical qubit at ~1e-5 could run on the order of 100,000. In practice, computations that would have been impossible on the physical qubits (due to error accumulation) can now be attempted reliably on the logical qubits. This is the essence of fault tolerance: the system can handle many operations and even multiple error events, as long as those errors are caught and fixed in time, leaving the final result unaffected.
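Two numbers in this paragraph can be checked with short calculations, sketched below under the assumption of independent trials. First, zero failures in n trials gives an exact one-sided 95% upper bound of 1 − 0.05^(1/n), which is close to the “rule of three” estimate 3/n, about 2.1e-4 for n = 14,000. Second, with independent errors at rate p, the mean number of operations up to the first failure is 1/p, so an 800× lower error rate buys roughly 800× more operations.

```python
def zero_failure_upper_bound(trials: int, confidence: float = 0.95) -> float:
    """Exact one-sided upper confidence bound on a failure probability after
    observing 0 failures in `trials` runs: solves (1 - p) ** trials = 1 - confidence."""
    return 1 - (1 - confidence) ** (1 / trials)

def mean_ops_to_first_failure(p: float) -> float:
    """Mean of a geometric distribution: expected number of operations up to
    and including the first failure, assuming independent errors at rate p."""
    return 1 / p

TRIALS = 14_000
print(f"95% upper bound: {zero_failure_upper_bound(TRIALS):.2e} "
      f"(rule of three: {3 / TRIALS:.2e})")

PHYSICAL = 1e-2  # approximate physical error rate quoted in the text
for label, p in (("physical", PHYSICAL), ("800x lower", PHYSICAL / 800)):
    print(f"{label}: ~{mean_ops_to_first_failure(p):,.0f} operations per failure")
```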

A natural question is how scalable this approach is. Thus far, the demonstration involved a handful of logical qubits (initially 4 logical qubits in the April 2024 result), so what about more complex computations requiring dozens or hundreds of logical qubits? The outlook is cautiously optimistic.

Because the error correction worked so effectively, adding more logical qubits should largely be a matter of providing more physical qubits and maintaining high fidelity. However, scaling further will require quantum hardware with significantly more physical qubits. Each logical qubit still consumes several physical qubits (in the current scheme, roughly 7–8 physical qubits per logical qubit on average, i.e. 30 physical qubits for 4 logical qubits). To get, say, 100 logical qubits, which Microsoft forecasts could deliver a “scientific advantage” in solving important problems, one might need on the order of several hundred physical qubits of similar quality, assuming the same overhead holds. And to reach ~1,000 logical qubits for a commercially advantageous quantum computer, thousands of physical qubits could be required, and likely more if lower logical error rates demand larger codes. These numbers are still well beyond today’s high-fidelity machines, most of which have between a few dozen and a few hundred qubits. The good news is that hardware is steadily improving. Moreover, Microsoft is pursuing advanced qubit designs like the topological qubit, a novel physical qubit with built-in error resistance, which could further reduce the overhead for error correction in the future.
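The overhead figures in this paragraph follow from a simple linear extrapolation of the reported 30 physical qubits for 4 logical qubits. The sketch below makes that assumption explicit. It is only a back-of-the-envelope estimate, since reaching lower logical error rates would likely require larger codes and therefore more physical qubits per logical qubit.

```python
import math

# Reported in the text: 4 logical qubits from 30 physical qubits on H2.
PHYSICAL_PER_LOGICAL = 30 / 4  # = 7.5

def physical_qubits_needed(n_logical: int, ratio: float = PHYSICAL_PER_LOGICAL) -> int:
    """Naive linear extrapolation. Assumes the per-logical overhead stays fixed,
    which larger, lower-error codes would probably not allow."""
    return math.ceil(n_logical * ratio)

for n in (100, 1_000):
    print(f"{n:>5} logical qubits -> ~{physical_qubits_needed(n):,} physical qubits")
```

At this ratio, 100 logical qubits map to 750 physical qubits and 1,000 map to 7,500, which matches the “several hundred” and “thousands” ranges above.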

In summary, the 800× error reduction achieved here is scalable in principle, but going from a dozen logical qubits to hundreds will require continued improvements in both the quantity and quality of qubits. The approach will need to be refined and extended, but no new scientific roadblocks have emerged at this level – it’s now mostly an engineering challenge of “more qubits and even lower error rates,” which is a challenge the quantum industry is actively tackling.

Comparison with Other Efforts in Fault-Tolerant Computing

Microsoft and Quantinuum’s achievement comes amid a broader push toward fault-tolerant quantum computing by many players in industry and academia. Over the past few years, companies like Google and IBM, as well as university research teams, have been steadily advancing the frontier of logical qubit performance – though each with different approaches and milestones.

Google has focused on the popular surface code error-correction scheme using its superconducting qubit processors. In 2021–2023, Google’s Quantum AI team demonstrated that a surface-code logical qubit can become more reliable as the code grows. Specifically, they showed that by increasing the number of physical qubits in the surface code (going from a distance-3 code to a larger distance-5 code), the logical error rate decreased, a clear sign of error correction working as intended. This was hailed as Google’s “Milestone 2,” the first prototype logical qubit that gets more reliable with more qubits, proving the viability of quantum error correction on their hardware. In a 2024 experiment, Google went further by demonstrating a logical qubit (as a quantum memory) that “outlasted its best physical qubit by more than double”, meaning the logical qubit’s lifetime was over 2× longer than that of any single physical qubit. In other words, error correction extended the qubit’s useful life beyond the break-even point. This was achieved by operating the code below the surface-code error threshold, an accomplishment many thought was still out of reach for superconducting qubits. Google’s experiments, however, have so far largely dealt with one logical qubit at a time (quantum memory tests without multi-logical-qubit entanglement), whereas Microsoft and Quantinuum demonstrated multiple logical qubits interacting. The goals are complementary: Google has been proving the concept of error-rate suppression with larger codes, while Microsoft and Quantinuum showed that a very low error rate is achievable with a smaller code by leveraging exceptional hardware fidelity. Both approaches are crucial steps toward full fault tolerance.

IBM, on the other hand, has been pursuing a strategy of “hardware-aware” error correction codes and rapid iterative improvements in their superconducting qubit platforms. IBM’s quantum hardware uses a heavy-hexagon qubit connectivity (a modified lattice) which they co-designed with a tailored subsystem code that is a variant of the surface code. In 2022, IBM researchers demonstrated multi-round quantum error correction on a distance-3 logical qubit encoded in this heavy-hexagon code. That experiment showed they could detect and correct errors over several cycles on real hardware – an important proof that their approach is viable. IBM has also invested in discovering new error-correcting codes that are more efficient. In 2023, IBM scientists reported new LDPC quantum codes that in theory could achieve the same error suppression with 10× fewer qubits than the surface code. The implication is that a fault-tolerant quantum computer might not require “millions of physical qubits” as earlier estimates suggested, but perhaps only hundreds of thousands or even tens of thousands, if such advanced codes are used. IBM is thus targeting a reduction of overhead through code design improvements, in parallel with improving their qubit quality. While IBM’s current experimental logical qubits have not yet reached the 800× error reduction of the Microsoft-Quantinuum result, their continual progress in both error mitigation (short-term techniques to reduce errors) and error correction shows steady momentum. IBM emphasizes co-designing the codes with the hardware – much like Microsoft did – noting that error correction and hardware development must progress hand-in-hand rather than in silos.
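The claimed 10× saving from IBM’s LDPC codes can be sanity-checked with qubit counts. The sketch compares one example of such a code, the [[144, 12, 12]] bivariate bicycle (“gross”) code, which uses 144 data qubits plus 144 check qubits to store 12 logical qubits, against 12 separate rotated surface-code patches of the same distance, each needing 2d² − 1 qubits. Matching the distance this way is a simplification (surface codes usually use odd distances), and the count covers memory only, not logical operations or decoding.

```python
def rotated_surface_code_qubits(d: int) -> int:
    """Physical qubits for one rotated surface-code patch of distance d:
    d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1

N_LOGICAL = 12
DISTANCE = 12  # chosen to match the distance of the [[144, 12, 12]] code

surface_total = N_LOGICAL * rotated_surface_code_qubits(DISTANCE)
gross_total = 144 + 144  # 144 data qubits + 144 check qubits
print(f"surface code: {surface_total} qubits, gross code: {gross_total} qubits, "
      f"ratio ~{surface_total / gross_total:.1f}x")
```

Under these assumptions the surface-code layout needs 3,444 qubits versus 288, a ratio of roughly 12×, in line with the “10× fewer qubits” claim.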

Academic groups and other quantum startups are also exploring alternative routes to fault tolerance. One notable approach is using bosonic quantum codes in hybrid superconducting systems. For instance, a 2022 experiment by Yale University and partners demonstrated a bosonic error-correcting code (encoding a qubit in states of a microwave cavity) that extended the logical qubit’s lifetime beyond that of the best physical qubit in the system, one of the first demonstrations of error correction beyond the break-even point. Instead of many physical qubits, bosonic schemes use a single high-quality resonator mode to encode the logical qubit, with an auxiliary qubit to perform syndrome measurements. This approach differs from Microsoft and Quantinuum’s (which used many distinct ion-trap qubits), but it shares the goal of improving fidelity through clever encoding. Researchers have also demonstrated small logical qubits using other codes: for example, a team implemented Shor’s code (one of the earliest QEC codes) on a trapped-ion system, showing that a 9-qubit Shor logical state could be prepared with nearly 99% fidelity. Additionally, experimentalists have begun exploring color codes and other exotic codes on different hardware platforms as alternatives to the surface code. Each technique has its own trade-offs in terms of qubit overhead, connectivity requirements, and tolerance to different error types.

In summary, Microsoft and Quantinuum’s logical qubit performance (800× error reduction, 12 entangled logical qubits) currently stands as a state-of-the-art achievement, but it exists in the context of a worldwide effort. Google’s surface-code experiments have validated that logical errors can be exponentially suppressed by increasing code size, though at a higher overhead and so far only for a single logical qubit. IBM’s research is yielding more qubit-efficient codes and demonstrating that small-distance codes can run in real time on their devices. Academic labs have hit the crucial break-even point in various systems, showing error correction is beginning to confer net benefits. The Microsoft–Quantinuum collaboration distinguishes itself by achieving an unprecedented low error rate and doing so on a platform with all-to-all connectivity (ion traps), which meant they could correct errors with fewer qubits. In contrast, Google and IBM use nearest-neighbor superconducting qubits, which usually require larger code patches to compensate for connectivity limits. Despite these differences, all paths are converging toward the same goal: qubits that are reliable enough to perform useful computations for as long as necessary. It’s likely that continued competition and collaboration among these groups will drive rapid improvements. What we see now is essentially a race to quality – who can get logical error rates down the fastest and manage to scale up at the same time. And as the 800× error reduction has shown, surprises can happen; assumptions about needing millions of qubits or many years of development are being challenged by innovative leaps in both theory and implementation.

Industry Implications and Roadmap to Practical Quantum Computing

This breakthrough solidifies Microsoft and Quantinuum’s positions as front-runners in the quest for practical, fault-tolerant quantum computing. For Microsoft, which historically did not build its own quantum hardware (instead pursuing a longer-term topological qubit approach), this achievement showcases the power of software–hardware co-design and the value of its Azure Quantum platform strategy. By partnering with Quantinuum and focusing on the “brain” of the quantum computer (the error correction and orchestration layer), Microsoft was able to leap ahead on the reliability metric without having a large processor of its own. It underscores that in quantum computing, it’s not just about qubit count; it’s about qubit quality and how intelligently those qubits are used. Quantinuum, for its part, demonstrates that its ion-trap hardware is among the best in the world, not just in raw fidelity but in being “QEC-ready”: capable of supporting complex error correction protocols in real time. This positions Quantinuum very strongly against other hardware makers; for example, IBM’s 127-qubit Eagle and 433-qubit Osprey processors still operate in the NISQ regime without full error correction, whereas Quantinuum’s much smaller 32-qubit H2 has now reached the next level of resilient computing. The collaboration highlights a possible model for the industry: specialization and partnership, with one party providing top-notch hardware and another providing the sophisticated software stack, to accelerate progress. In fact, this joint effort has been ongoing since 2019, suggesting that deep, years-long collaboration is what it takes to crack the toughest problems in quantum technology.

One immediate implication is that we may soon see early access to fault-tolerant quantum computing services. Microsoft has announced plans to integrate these reliable logical qubits into its Azure cloud offering. Azure Quantum customers will be offered priority access to this nascent fault-tolerant hardware in a preview program. This means that for the first time, end-users (researchers, enterprises, etc.) could run experiments on logical qubits in the cloud, testing algorithms that rely on error-corrected operations. It’s a significant step toward commercializing quantum error correction. The ability to program algorithms at the logical qubit level, without worrying about each physical gate error, could unlock new applications even before full-scale quantum computers arrive. For instance, Microsoft revealed that they have already performed the first end-to-end chemistry simulation using these logical qubits combined with classical high-performance computing and AI tools. This kind of hybrid workflow – where a quantum algorithm (running on logical qubits) tackles the quantum part of a chemistry problem and feeds results into classical simulations – is a preview of how near-term scientific advantage might be achieved. It shows that even a relatively small number of highly reliable qubits can start to tackle meaningful problems in domains like chemistry, materials science, and pharmacology. As more logical qubits come online, we can expect increasingly complex simulations and computations to become feasible, bridging the gap between today’s toy problems and tomorrow’s breakthrough solutions.

The collaboration also illustrates a broader point for the industry: the necessity of quantum hardware and software co-design. To reach this milestone, the hardware needed specific features (like all-to-all connectivity and fast mid-circuit measurement), and the software had to be tailored to exploit those features with maximal efficiency. Moving forward, co-design will likely become the norm. Companies are realizing that achieving fault tolerance isn’t just about one element in isolation; it requires optimizing the entire stack, from the qubit physics up to the error-correcting code and even the algorithms that will run. This 800× result is a case study in that principle: neither Microsoft nor Quantinuum could have achieved it alone with a generic approach. As Inside Quantum Technology News noted, “co-designing high-quality hardware components and sophisticated error-handling capabilities” is key to attaining reliable and scalable quantum computations. We may therefore see more partnerships akin to this one, for example Microsoft’s newly announced partnership with Atom Computing to develop a neutral-atom quantum machine integrated into Azure Quantum, aiming to combine the best of Microsoft’s software with novel hardware from others.

Is this achievement a true turning point for quantum computing? Many in the field believe it is. It’s the clearest proof yet that fault-tolerant quantum computing is not only possible in theory but achievable in practice. It undercuts the argument of skeptics who thought that error rates could never be lowered enough or that the overhead would be prohibitive. By showing that you can get a logical qubit vastly more stable than physical ones with only tens of qubits, Microsoft and Quantinuum have changed the outlook. The timeline to practical quantum computing could accelerate as a result. Goals that once seemed a decade away might now be closer at hand, assuming the approach scales. There is, of course, still a long road from a dozen logical qubits to a fault-tolerant machine with thousands of logical qubits. But the roadmap looks more attainable now. We can sketch a plausible path: continue improving ion-trap or other hardware to perhaps a few hundred qubits with 99.9% fidelity (next 2–3 years), then use those to encode on the order of 50–100 logical qubits (mid to late 2020s), at which point early “quantum advantage” for useful problems may be demonstrated. From there, industrial-scale machines of 1,000+ logical qubits (which might need ~10k physical qubits if the efficiency holds) could be envisioned in the early 2030s. This is speculative, but it’s grounded in the real performance data we now have. Notably, IBM’s 2023 error-correction roadmap likewise projects needing far fewer physical qubits than the millions once assumed, thanks to more efficient codes. In essence, the feasibility of building a quantum computer that can outperform classical machines on important tasks seems much higher after this breakthrough. Companies and governments will likely double down on their quantum investments, seeing that the elusive goal of a fault-tolerant quantum computer is coming into focus.

Impact on Cryptography and Security

Whenever quantum computing makes a leap forward, it inevitably raises the question: does this bring us closer to quantum computers that can break encryption? The ability to run Shor’s algorithm on a large-scale, error-corrected quantum computer to factor large numbers (and thus break RSA encryption) is often considered the threshold for a cryptographically relevant quantum computer (CRQC). The logical qubit advance by Microsoft and Quantinuum is a step in that direction, but we should clarify where it stands on the path to cryptography-breaking power. On one hand, demonstrating reliable logical qubits is a necessary precondition for eventually running the algorithms that threaten classical cryptography. You would need hundreds or likely thousands of logical qubits with very low error rates to factor, say, a 2048-bit RSA key. This breakthrough shows that high-fidelity logical qubits are possible, suggesting that, in time, one could scale up to the counts needed for such tasks. In that sense, it may bring a CRQC closer, because it validates the approach and could shorten the development timeline. Indeed, national security agencies and organizations like NIST keep a close eye on these milestones. NIST has published guidance proposing that industry complete the transition to post-quantum cryptography by 2035, a deadline consistent with concerns that a quantum code-breaking capability could exist in the early-to-mid 2030s. Progress like the 800× error-rate improvement reinforces the plausibility of that timeline, or even suggests that a CRQC could materialize earlier if progress accelerates further.

On the other hand, it’s important to emphasize that current quantum machines are still far from attacking modern cryptography. The experiments discussed involved only a handful of logical qubits, nowhere near the scale required to run Shor’s or other cryptographically relevant algorithms. Each logical qubit in this experiment can be seen as an incredibly stable “bit” of quantum memory or processing, but you’d need many of them working in concert to do something like factor a 2048-bit number (estimates vary, but roughly a few thousand logical qubits might be required for a full break, running for many hours). Achieving that will require successive generations of technology beyond what exists now. So while this doesn’t immediately put our encryption at risk, it is a signal that the foundational pieces of a CRQC are falling into place. Much like seeing the first transistors work reliably signaled the eventual rise of powerful computers, seeing logical qubits work reliably signals that powerful quantum computers capable of decryption feats are on the horizon.
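For a sense of scale, one textbook construction of Shor’s algorithm (Beauregard, 2003) uses 2n + 3 qubits to factor an n-bit number. Treating each of those as an error-corrected logical qubit is an assumption of this sketch. Other constructions trade qubit count against runtime, and none of these logical counts include the physical qubits spent on error correction itself, which is one reason published estimates vary.

```python
def beauregard_qubits(n_bits: int) -> int:
    """Qubit count of Beauregard's 2003 circuit for Shor's algorithm on an
    n-bit modulus: 2n + 3. Here each is treated as a logical qubit."""
    return 2 * n_bits + 3

DEMONSTRATED = 12  # entangled logical qubits mentioned in the text
needed = beauregard_qubits(2048)
print(f"RSA-2048: {needed} logical qubits, ~{needed / DEMONSTRATED:.0f}x today's 12")
```

For a 2048-bit modulus this gives 4,099 logical qubits, several hundred times more than the largest logical-qubit demonstrations described here.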

For governments, enterprises, and anyone concerned with long-term data security, the takeaway is clear: post-quantum cryptography (PQC) needs to be on the agenda now, not later. The concept of “harvest now, decrypt later” attacks – where adversaries steal encrypted data now in hopes of decrypting it in the future with a quantum computer – makes this urgent. The safer course is to assume that a CRQC could be achieved within a decade or so and to begin deploying quantum-resistant encryption algorithms well before that. In fact, the US government (through NIST) has already standardized some PQC algorithms and is urging organizations to start the transition, with guidelines suggesting all sensitive systems shift to PQC by 2035. This quantum computing breakthrough will likely add momentum to such efforts. It demonstrates rapid progress – an 800× error reduction was not anticipated so soon by many – and thus serves as a wake-up call that one should not underestimate the pace of quantum advancements. In practical terms, industries dealing with long-lived secrets (like healthcare records, state secrets, critical infrastructure) should accelerate testing and adoption of PQC. Just as the Space Race spurred technological leaps, the Quantum Race is now spurring a race in cybersecurity to be quantum-ready in time. While we can celebrate the positive implications of reliable quantum computers (for science, medicine, etc.), we must also proactively guard against their dark side by future-proofing encryption and security protocols.

Future Outlook

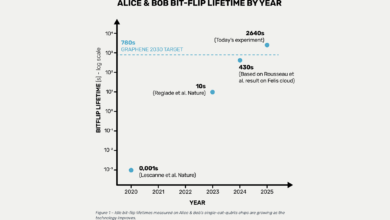

The successful demonstration of high-fidelity logical qubits is a milestone, but it’s also just the beginning of a new chapter. Experts agree that several things need to happen next to scale this achievement further. First and foremost is increasing the number of logical qubits available while maintaining low error rates. This likely means scaling up the physical qubit count and connectivity in hardware like Quantinuum’s ion traps or similar systems. We can expect to see processors with hundreds of physical qubits emerge in the coming years, whether in ion-trap form or other architectures, specifically designed with error correction in mind. Quantinuum’s roadmap includes a new machine with improved specs to support 10+ logical qubits as early as 2025. If that machine succeeds, the next goal might be 50 logical qubits, then 100, and so on. Each step will test the limits of the error-correction scheme, for instance whether crosstalk or correlated errors undermine the gains when many logical qubits operate simultaneously. Ongoing research will also focus on improving error-correction algorithms. The Microsoft team will likely refine its qubit virtualization system to make it more efficient, possibly reducing the physical qubit overhead per logical qubit even further. We may see hybrid codes or adaptive error correction, where the code adjusts on the fly depending on error patterns, to squeeze even more reliability out of the hardware.

Another key area for future work is logical qubit operations. So far, much of the focus has been on creating logical qubits and keeping them stable (quantum memory) or entangling them in simple ways. The next step is to perform a variety of quantum logic gates between logical qubits, and to do so fault-tolerantly. Executing a logical two-qubit gate (such as a controlled-NOT) so that any error occurring during the gate can still be corrected is a non-trivial extension of this work. Demonstrating a universal set of logical gates with error rates similarly suppressed by orders of magnitude will be crucial for running full algorithms. The good news is that many of the required components, such as syndrome extraction, resemble what has already been shown, although the added gates increase complexity and require more ancilla qubits. We can look forward to papers and announcements about entangling logical qubits with high fidelity and perhaps implementing small logic circuits entirely at the logical level. Indeed, Microsoft’s report of entangling 12 logical qubits suggests that it is already exploring multi-qubit logical operations across a network of logical qubits.
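To make "correctable during the gate" concrete, here is a toy Python sketch built on a textbook code, the [[7,1,3]] Steane code, chosen purely for illustration. It tracks only bit-flip (X) errors and computational-basis codewords, so it is a classical shadow of the quantum circuit rather than a full simulation. It demonstrates two facts:

- The syndrome of a single flipped qubit, read in binary, names that qubit.
- A transversal CNOT (qubit i of one block acting on qubit i of the other) copies an error into at most one qubit of the other block, so each block still holds a correctable single error.

The helper names are hypothetical.

```python
"""Toy classical sketch: Steane-code syndrome decoding and transversal CNOT.

Only X (bit-flip) errors and computational-basis codewords are tracked;
this is not a full quantum simulation.
"""

# Parity-check matrix of the [7,4,3] Hamming code underlying the Steane code.
# Column i (0-based) is the binary form of i + 1, so a single-error syndrome
# read as a binary number names the flipped qubit.
H = [
    [0, 0, 0, 1, 1, 1, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [1, 0, 1, 0, 1, 0, 1],
]


def syndrome(bits: list[int]) -> int:
    """Evaluate the three Z-type parity checks and pack them into an integer."""
    s = 0
    for row in H:
        s = (s << 1) | (sum(h * b for h, b in zip(row, bits)) % 2)
    return s


def correct(bits: list[int]) -> list[int]:
    """Flip the single bit the syndrome points to (no-op if the syndrome is 0)."""
    fixed = list(bits)
    s = syndrome(fixed)
    if s:
        fixed[s - 1] ^= 1
    return fixed


def logical_value(bits: list[int]) -> int:
    """Logical Z-basis value of a codeword: parity of all seven bits."""
    return sum(bits) % 2


def transversal_cnot(control: list[int], target: list[int]) -> tuple[list[int], list[int]]:
    """CNOT from qubit i of the control block onto qubit i of the target block."""
    return list(control), [t ^ c for c, t in zip(control, target)]


# Representatives of logical |0> and |1>: even- and odd-weight Hamming codewords.
ZERO = [0] * 7
ONE = [1] * 7

if __name__ == "__main__":
    control = list(ONE)
    control[2] ^= 1  # a single physical X error on the control block before the gate
    control, target = transversal_cnot(control, ZERO)
    # The error is copied onto qubit 2 of the target block only: one error per block.
    print("syndromes:", syndrome(control), syndrome(target))
    print("logical out:", logical_value(correct(control)), logical_value(correct(target)))
```

The transversal construction is the key design choice. Qubits within a block never interact during the gate, so one faulty qubit cannot grow into two errors in the same block. That guarantee is exactly what a distance-3 code needs in order to keep correcting.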

We should also keep an eye on other platforms attempting similar breakthroughs. It would not be surprising if, in the next year or two, a superconducting qubit platform (such as those of Google or IBM) also achieved an impressive improvement in logical error rate, perhaps not 800× at first but crossing some new threshold. As hardware improves (for example, superconducting qubits exceeding 99.9% fidelity or gaining faster feedback loops), those systems might catch up in logical qubit performance. Similarly, neutral-atom platforms (e.g., from startups such as Atom Computing or QuEra) might integrate new error-correction methods. Microsoft’s collaboration with Atom Computing aims to eventually deliver a highly reliable neutral-atom quantum machine, an indication that the range of hardware able to achieve low-error logical qubits is broadening. In the next few years, we may see leapfrog moments: one group sets a new record in logical qubit count, another in logical fidelity, and so on. Each of these will push the whole field forward.

The ultimate vision shared by many is a hybrid quantum-classical supercomputer in which on the order of 100–1000 logical qubits work in tandem with exascale classical computing and AI to solve problems that neither could solve alone. This vision will guide the next decade of development. Milestones to watch for on the way include: 1) logical error rates below $$10^{-6}$$ or $$10^{-7}$$, even better than the $$10^{-5}$$ achieved here (Microsoft cites a goal of fewer than 1 error per 100 million operations, i.e. about $$10^{-8}$$, for truly large-scale systems); 2) dozens of logical qubits running error-corrected algorithms (not just toy circuits, but algorithms such as quantum chemistry simulations or small instances of quantum optimization problems); 3) integration of logical qubits into cloud platforms, where users can program at the logical level without managing physical qubits. Each of these, when achieved, will mark a significant step toward practical quantum computing.
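A rough way to see what these error-rate milestones imply for hardware is a scaling heuristic often quoted for the surface code, $$p_L \approx A\,(p/p_{th})^{(d+1)/2}$$. Pair it with the $$2d^2 - 1$$ physical qubits of a rotated distance-$$d$$ surface-code patch. The surface code serves here only as a familiar example, not as a description of the scheme behind the result discussed in this article. The prefactor `A`, the threshold `P_TH`, and the physical error rate in the example are assumed round numbers. The sketch finds the smallest odd code distance that meets a target logical error rate and the corresponding physical qubit count.

```python
"""Back-of-the-envelope surface-code sizing (illustrative heuristic only).

Assumes p_L ~ A * (p / p_th) ** ((d + 1) / 2) for a distance-d patch and
2*d**2 - 1 physical qubits per logical qubit (rotated layout).
A and P_TH are assumed round numbers, not measured values.
"""

A = 0.1      # assumed prefactor
P_TH = 1e-2  # assumed threshold error rate


def logical_error(p: float, d: int) -> float:
    """Heuristic logical error rate per round at physical error rate p."""
    return A * (p / P_TH) ** ((d + 1) / 2)


def min_distance(p: float, target: float, d_max: int = 101) -> int:
    """Smallest odd distance whose heuristic logical error rate is below target."""
    if p >= P_TH:
        raise ValueError("physical error rate must be below threshold")
    for d in range(3, d_max + 1, 2):
        if logical_error(p, d) < target:
            return d
    raise ValueError("target not reachable within d_max")


def physical_qubits(d: int, n_logical: int) -> int:
    """Qubits for n_logical patches; ignores routing and magic-state factories."""
    return n_logical * (2 * d * d - 1)


if __name__ == "__main__":
    p = 2e-3  # assumed physical error rate
    for target in (1e-6, 1e-8):
        d = min_distance(p, target)
        print(f"target {target:.0e}: d={d}, "
              f"{physical_qubits(d, 100)} physical qubits for 100 logical qubits")
```

Under these assumptions (p = 2×10⁻³), reaching 10⁻⁶ takes distance 15 (449 physical qubits per logical qubit). Reaching 10⁻⁸ takes distance 21 (881 per logical qubit). Both figures exclude the extra qubits that routing and magic-state distillation would add. The trend matters more than the exact numbers: each further factor-of-100 improvement in logical error rate costs several more units of code distance and a quadratically growing qubit budget.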

Will similar breakthroughs follow in coming years? The safe bet is yes. Quantum computing has a competitive but also collaborative spirit – major advances often inspire parallel efforts elsewhere. We might soon see, for example, a demonstration of a logical qubit on a superconducting system with a comparable 100× or more improvement over physical qubits, or an entirely different error-correcting code (like a color code) implemented with record fidelity. The landscape of quantum hardware is also expanding, and each platform could yield its own breakthrough: trapped ions (Quantinuum, IonQ), superconducting circuits (IBM, Google, Rigetti), neutral atoms, photonics, even topological qubits if Microsoft’s long-term research pays off. Each success will bring the era of fault-tolerant quantum computing closer.

In conclusion, the Microsoft and Quantinuum achievement is a trailblazer for the quantum industry. It provides a tangible proof-point that will likely accelerate investment and research across the board. The next few years will be exciting as we witness the quest for more logical qubits with ever-lower error rates. If progress continues at this pace, we may sooner than expected enter the realm where quantum computers can tackle problems of real scientific and economic importance – and do so reliably. The journey to large-scale quantum computing is far from over, but with this 800× error reduction milestone, the destination feels closer than ever. Each new breakthrough will build on this foundation, and we can be optimistic that quantum computing’s most revolutionary applications – from discovering new materials to breaking cryptographic codes (for better or worse) – will become achievable in the not-too-distant future. The race toward fault tolerance is officially on, and the finish line, while still ahead, is now visibly in sight.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.