The Five Stages from Idea to Impact: Google’s Framework for Quantum Applications

13 Nov 2025 – Google’s Quantum AI team has unveiled a new five-stage framework to guide the development of useful quantum computing applications. Published as a perspective paper on arXiv and summarized in a Google blog post, this framework shifts focus from merely building quantum hardware to verifying real-world utility. As large-scale fault-tolerant quantum computers (FTQC) inch closer to reality, the question “What will we do with them?” looms large. Google’s framework attempts to answer this by mapping the journey from a theoretical idea to a deployed solution. It emphasizes milestones like finding hard problem instances, proving quantum advantage in practice, and engineering solutions under realistic constraints. In doing so, it provides a conceptual model of application research maturity – a roadmap not just for hardware progress, but for the algorithms and applications that will justify quantum computing’s promise.

Overview of Google’s Five-Stage Quantum Application Framework

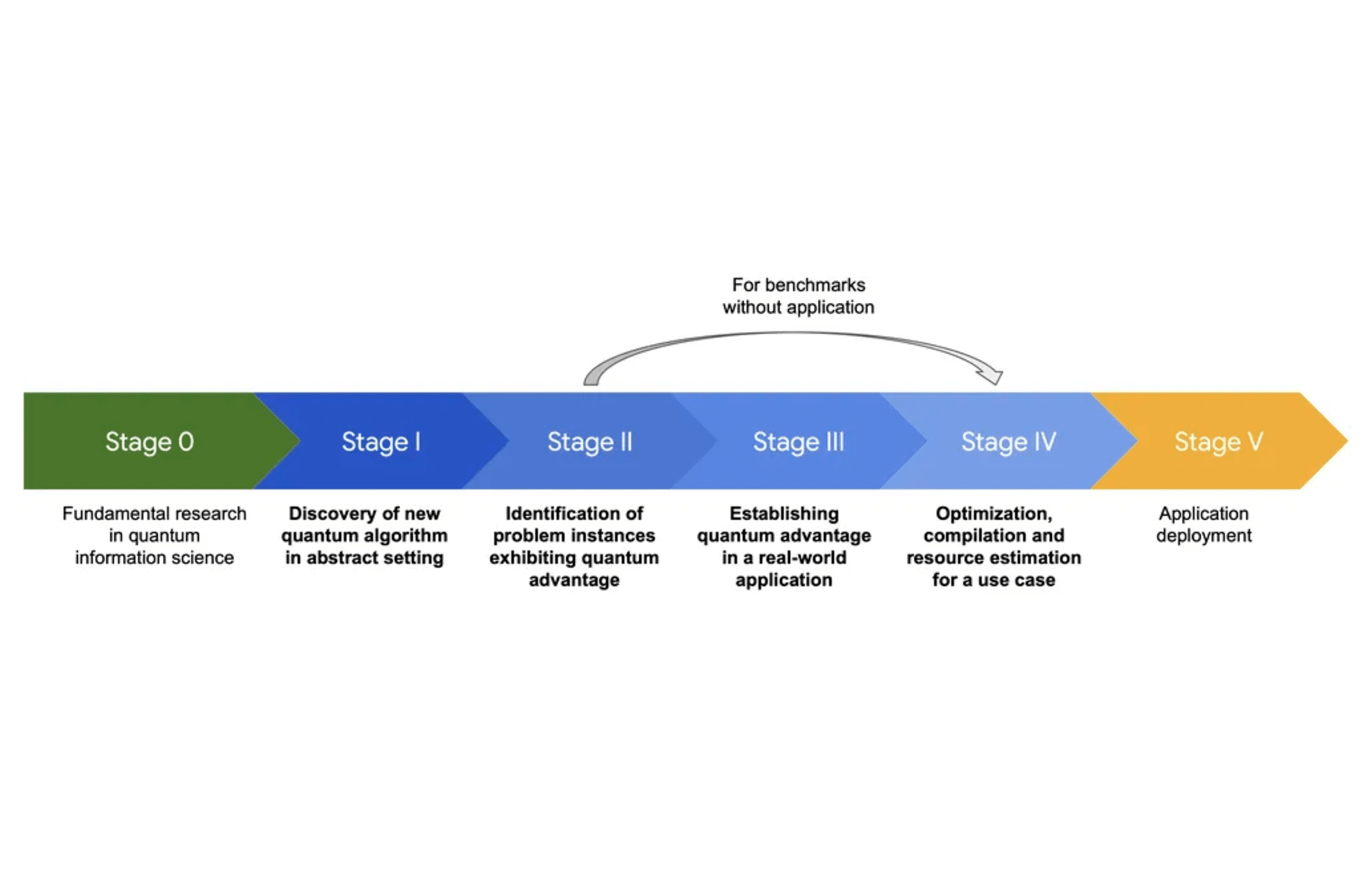

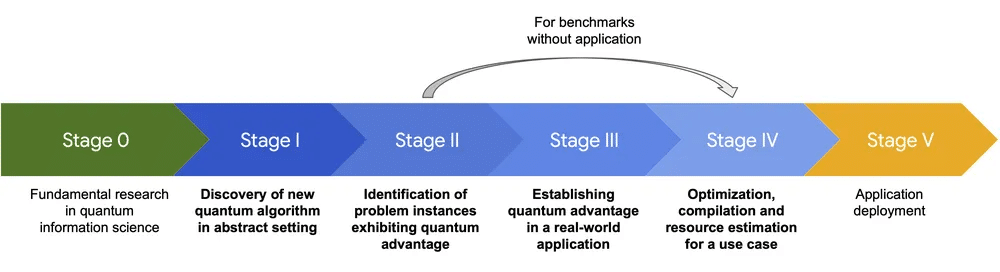

Google’s framework defines five main stages (plus a foundational “Stage 0”) that a quantum computing idea passes through on its way to real-world impact. Each stage marks a higher level of maturity for the application:

- Stage I – Discovery: Abstract algorithm ideation. A new quantum algorithm is conceived and analyzed theoretically (e.g. Grover’s search or Shor’s factoring algorithm). At this stage the algorithm might offer asymptotic speedups on paper, but its practical utility is unproven. Stage I often stems from fundamental research (“Stage 0”) in quantum information science exploring what is computationally possible (and impossible).

- Stage II – Problem Instance Identification: Find hard, verifiable instances. Here the abstract algorithm is applied to concrete problem instances to demonstrate a quantum speedup over the best known classical methods. Crucially, those instances should be verifiable – either classically (where possible) or by some quantum means – to prove the quantum advantage is real. This is often challenging: many real-world problem instances can still be solved classically, so researchers must pinpoint special cases (e.g. certain molecules, optimization inputs, or mathematical structures) that are classically intractable but tractable for the quantum algorithm. Stage II is about provable quantum advantage on specific tasks, even if the instances are contrived. Google notes this stage has been underappreciated and is currently a key bottleneck – “despite its critical role as a bridge from theory to practice, Stage II is frequently overlooked…and its difficulty underestimated”. In cryptography and quantum chemistry, however, researchers have made Stage II progress because identifying hard instances (e.g. tough encryption keys or complex molecules) is directly tied to those fields’ goals.

- Stage III – Real-World Advantage: Connect to practical use. This “so what?” stage asks whether the hard instances from Stage II actually matter in the real world. It’s not enough to win on a toy problem; the question is which real application the instance represents and whether solving it on a quantum computer creates value. For example, if Stage II showed a quantum advantage for simulating a particular molecule, Stage III asks: does simulating that molecule help with a practical goal like drug discovery or battery design? This stage often reveals a “devil in the details” problem – many algorithms that pass Stage II fail to translate into useful outcomes, either because the instances aren’t actually relevant or because domain experts and quantum scientists lack a shared language. Indeed, bridging the gap between quantum algorithms and domain-specific problems is so challenging that Google calls Stage III the biggest bottleneck today. Few algorithms outside of cryptography and quantum physics have reached Stage III so far. Shor’s algorithm is one famous example that did (it directly targets breaking real cryptosystems), and quantum simulation of materials is another area where Stage III case studies are emerging (e.g. quantum algorithms for fusion energy or battery chemistry applications).

- Stage IV – Engineering for Use: Optimize and scale. Once a quantum solution for a real problem is identified, Stage IV focuses on the practical engineering needed to run it on actual hardware. This involves detailed resource estimation, error-correcting code design, compiler optimizations, and minimizing the runtime cost. The key questions become: How many physical and logical qubits are required? How many gate operations? How long will it take? All the overhead of fault-tolerant quantum computing (FTQC) must be accounted for here. The good news is that a decade of research in this stage has dramatically reduced the estimated resources for some famous applications – for example, the number of qubits needed to factor large integers or simulate complex molecules has dropped by orders of magnitude from early estimates. (Google’s paper shows a graph of resource requirements for factoring RSA-2048 and for the FeMoco molecule’s chemistry, plummeting from billions of qubits/gates to millions, between 2010 and 2025.) Stage IV also spurs development of software tools and languages (e.g. Google’s Qualtran or Microsoft’s Q#) to automate compiling algorithms into error-corrected circuits. In short, Stage IV is where an idea is made executable on an early fault-tolerant quantum machine.

- Stage V – Deployment: Real-world deployment and integration. The final stage is when a quantum solution is fully implemented in hardware and delivering an advantage in a production setting. This means a quantum computer is not just running the algorithm, but doing so better than all classical alternatives in a way that matters to end-users or an industry workflow. As of today, Stage V remains entirely aspirational – no end-to-end quantum application has conclusively reached this point with current hardware. It represents the future moment when quantum computing moves out of the lab and into everyday use for some problem of consequence.

Framing the Road to FTQC and CRQC

Google explicitly designed this framework to help chart the path toward useful fault-tolerant quantum computing. Fault tolerance – the ability to run quantum algorithms with error correction – is essential to reach Stage V for most meaningful tasks. The framework makes clear that achieving FTQC is not just a hardware challenge but also an algorithm and application challenge. Stage IV is where FTQC enters the picture: here, algorithm developers must grapple with quantum error correction overhead and optimize their solutions for early fault-tolerant devices. Google argues that we are on the cusp of an “early fault-tolerant era” and that success will require co-designing algorithms with the architecture of error-corrected hardware in mind. Simply trying to minimize circuit depth or qubit count in isolation may be misleading; instead, algorithm researchers need to understand the nuances of error-correction (stabilizer codes, syndrome extraction, etc.) when crafting algorithms for the first generation of FTQCs. In other words, Stage IV is about ensuring that once hardware achieves a few logical qubits and gate fidelities at threshold, we actually have something valuable to run.
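To give a feel for the Stage IV questions about physical versus logical qubits, here is a minimal back-of-the-envelope sketch. It is not taken from Google’s paper: it assumes a rotated surface code, the commonly quoted heuristic that the logical error rate scales as `prefactor * (p_phys / p_threshold) ** ((d + 1) / 2)`, and illustrative constants (`p_threshold = 1e-2`, `prefactor = 0.1`). The function names are hypothetical, and real resource estimates also account for magic-state factories, routing space, and runtime.

```python
def logical_error_rate(p_phys, distance, p_threshold=1e-2, prefactor=0.1):
    """Heuristic logical error rate per code cycle (assumed scaling law)."""
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)


def min_distance(p_phys, target_logical_error,
                 p_threshold=1e-2, prefactor=0.1, max_distance=99):
    """Smallest odd code distance whose heuristic error rate meets the target."""
    if p_phys >= p_threshold:
        raise ValueError("physical error rate must be below the threshold")
    for d in range(3, max_distance + 1, 2):
        if logical_error_rate(p_phys, d, p_threshold, prefactor) <= target_logical_error:
            return d
    raise ValueError("no distance up to max_distance meets the target")


def physical_qubits(n_logical, distance):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits
    per logical qubit (factories and routing overhead are ignored here)."""
    return n_logical * (2 * distance ** 2 - 1)


if __name__ == "__main__":
    d = min_distance(p_phys=1e-3, target_logical_error=3e-10)
    print(d, physical_qubits(n_logical=100, distance=d))
```

Under these assumed constants, a physical error rate of 1e-3 and a target of 3e-10 per cycle give distance 17, or 577 physical qubits per logical qubit. The point of the sketch is only that overhead grows quadratically with code distance, which is why co-designing algorithms with the error-correcting architecture matters.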

Cryptographically relevant quantum computing (CRQC) – often defined as a quantum computer capable of breaking modern encryption like RSA – is a specific example frequently discussed in terms of these stages. Shor’s factoring algorithm essentially leapt through Stage I, II, and III in one go back in the 1990s (it was a new algorithm, targeted a concrete problem instance – large composite numbers – and had an immediate real-world application: breaking RSA). Since then, researchers have worked hard on Stage IV for Shor’s algorithm: recent estimates show that factoring a 2048-bit RSA key could be done with under a million noisy qubits (using error correction), down from billions estimated earlier. In Google’s terms, the cryptanalysis application (integer factoring) is currently at Stage IV maturity – we know what needs to be done and roughly how many qubits and operations it will cost – but we await Stage V deployment when a large enough FTQC is built. Notably, Google’s framework demands verifiable advantage in Stage II; cryptography is one domain where that’s naturally required (a factored number is easy to check). Google’s Ryan Babbush and colleagues even caution that post-quantum cryptography’s security depends on robustly searching for quantum attacks – essentially a Stage I/II effort to make sure no undiscovered quantum algorithm could threaten new encryption schemes.
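The remark that a factored number is easy to check can be made concrete with a few lines of code. The sketch below uses a hypothetical helper, `verify_factorization`, that accepts a claimed factorization only if every factor is nontrivial and the product equals the original number. It does not test primality, which is not needed to confirm that an RSA modulus has been split.

```python
from math import prod


def verify_factorization(n, factors):
    """Return True if `factors` are all nontrivial and multiply to `n`.

    Checking costs only a handful of multiplications, however hard the
    factors were to find. That asymmetry is what makes factoring a
    classically verifiable problem instance.
    """
    factors = list(factors)
    if any(f <= 1 or f >= n for f in factors):
        return False  # reject trivial "factors" such as 1 or n itself
    return prod(factors) == n


if __name__ == "__main__":
    print(verify_factorization(15, [3, 5]))   # a correct claim
    print(verify_factorization(15, [1, 15]))  # a trivial, rejected claim
```

Python integers are arbitrary precision, so the same check works unchanged for a 2048-bit modulus.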

How does this framework inform predictions of “Q-Day”, the day a quantum computer breaks RSA? It provides a structure to evaluate progress. For example, Quantinuum’s recent breakthroughs in quantum error correction can be seen as accelerating the Stage IV→V transition for cryptography. Recently Quantinuum announced it had demonstrated a fully error-corrected universal gate set (including the hard-to-error-correct non-Clifford gates) on its ion trap processors – a milestone many see as “the last major hurdle” to building scalable FTQCs. They boldly set a 2029 target for a fully fault-tolerant machine. If such hardware arrives on that timeline, Google’s framework suggests that RSA-breaking capability (a Stage V deployment of Shor’s algorithm) would soon follow, since the earlier stages for that application are essentially complete. Indeed, my previous analysis noted that Quantinuum’s advances shrink the timeline for CRQC – what might have been thought a late-2030s scenario now appears to be looming in the early 2030s if progress continues at this pace. In practical terms, once a few hundred logical qubits with low error rates are available (as several credible roadmaps anticipate by 2029 to early 2030s), cryptographers expect RSA-2048 could be cracked. Google’s framework would classify that achievement as the completion of Stage V for a cryptanalysis application – a pivotal moment for cybersecurity.

Key Contributions and Calls to Action

Beyond mapping out stages, Google’s framework contributes several strategic insights for the quantum R&D community:

- Emphasizing Verifiability and “True” Advantage: The framework sets a high bar in Stage II by insisting on verifiable problem instances where a quantum win can be definitively proven. This is a reaction to past claims of quantum advantage that were hard to validate or only held under narrow assumptions. In Google’s recent “Quantum Echoes” experiment, for example, the team demonstrated a quantum simulation that could be run forward and backward to verify the result – making it the “first example of an algorithm run on a quantum computer with verifiable quantum advantage”. Verification builds trust that a quantum solution is not a black box, and the framework suggests it’s a prerequisite to reaching practical use (Stage III and beyond). This focus on rigorous proof and validation is crucial for applications like quantum chemistry or optimization, where decision-makers will need confidence that the quantum output is correct.

- Problem-Finding as Much as Problem-Solving: The framework shines a light on problem instance identification (Stage II) as an intellectual challenge in its own right. This is somewhat analogous to “problem-finding” in research – identifying what to ask a quantum computer such that it showcases a clear win. Google argues that this bridge from theory to practice has been under-resourced. They call for funding agencies and the community to invest more in finding classically hard instances, even if they seem esoteric. Interestingly, cryptographers have always done this (seeking hard instances for encryption schemes), and the framework generalizes that approach to other domains. Verifiable and hard instances are “as much the heart of the quest for quantum advantage as Stage I [algorithm discovery]”, the authors write. In other words, a brilliant algorithm (Stage I) isn’t useful until someone finds where it actually beats everything else – a subtle point sometimes lost amid hardware hype.

- A Maturity Model for Quantum Applications: By explicitly categorizing the “research maturity” of an application idea, the five-stage model can help set realistic expectations. It becomes clear which promising algorithms are still early-stage (I/II) and which are closer to realization. For instance, Google applies the framework to three example applications in their blog: industrial chemistry simulations (which they place around Stage III), quantum factorization (Stage IV for the core algorithm, awaiting hardware), and certain optimization/ML algorithms (somewhere in Stage II). This kind of assessment can guide R&D investment. If an application is stuck in Stage II, perhaps more interdisciplinary effort (to reach Stage III) is needed; if it’s in Stage IV, maybe hardware development is the gating factor. The framework could serve as a benchmarking tool in that sense – not a numeric benchmark like “quantum volume,” but a qualitative one. Governments might even measure how many applications have been shepherded to Stage III or IV as a metric of progress beyond qubit counts. Google explicitly urges research funders to target Stages II and III in their programs, as these are the current bottlenecks.

- Algorithm-First, Then Application: A Strategic Pivot: One of Google’s recommendations is to “adopt an algorithm-first approach”. Historically, some efforts started with an industry problem and then asked “can a quantum computer help here?” – which often led to disappointment. Instead, Google suggests focusing on pushing algorithms through Stage II (proving a quantum advantage on some instance) before worrying about the exact business case. Once the algorithmic advantage is clear, they argue, one can actively search for a matching real-world application (Stage III). This approach might accelerate progress by not getting bogged down in overly broad use-case fishing at early stages. It also ties back to verifiability: an algorithm that clears Stage II with a verified advantage is a solid foundation on which to build a practical tool.

- Cross-Disciplinary “Translators” and AI Assistance: To conquer Stage III’s knowledge gap, Google highlights the need for talent and tools that span quantum computing and whatever domain (chemistry, finance, materials, etc.) the application lies in. They call for more cross-disciplinary experts – people who are conversant in quantum algorithms and in, say, drug discovery or grid optimization. These “bilingual” teams can identify real-world problems where the quirks of a quantum algorithm actually align with an industry need. Additionally, Google is “optimistic that AI could be a powerful tool” to help bridge this gap. For example, a large language model or literature-mining AI could scan through thousands of papers in chemistry and suggest where a known quantum algorithm might apply. Using AI to connect the dots between abstract quantum techniques and concrete domain problems is an intriguing idea mentioned in the paper. It’s essentially leveraging generative AI to propose Stage III mappings.
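The maturity-model idea from the list above can be sketched as a small data structure. This is an illustration only: the stage names follow the framework, and the three example placements are the approximate ones described above ("around Stage III", "somewhere in Stage II"), but the `Stage` enum, the `APPLICATIONS` table, and the `gating_factor` helper are hypothetical names. The suggested next steps for Stages I, III, and V are my reading of the stage descriptions, not Google’s wording.

```python
from enum import IntEnum


class Stage(IntEnum):
    """The five stages of the framework, ordered by maturity."""
    DISCOVERY = 1
    INSTANCE_IDENTIFICATION = 2
    REAL_WORLD_ADVANTAGE = 3
    ENGINEERING_FOR_USE = 4
    DEPLOYMENT = 5


# Approximate placements as summarized in this article (assumed, not exact).
APPLICATIONS = {
    "industrial chemistry simulation": Stage.REAL_WORLD_ADVANTAGE,
    "integer factorization": Stage.ENGINEERING_FOR_USE,
    "optimization / ML": Stage.INSTANCE_IDENTIFICATION,
}


def gating_factor(stage):
    """Return what most plausibly holds an application at `stage`."""
    return {
        Stage.DISCOVERY: "find hard, verifiable problem instances",
        Stage.INSTANCE_IDENTIFICATION: "interdisciplinary work to link instances to real use",
        Stage.REAL_WORLD_ADVANTAGE: "resource estimation and fault-tolerant compilation",
        Stage.ENGINEERING_FOR_USE: "hardware development",
        Stage.DEPLOYMENT: "none (deployed)",
    }[stage]


if __name__ == "__main__":
    # List applications from least to most mature, with their gating factor.
    for name, stage in sorted(APPLICATIONS.items(), key=lambda kv: kv[1]):
        print(f"{name}: Stage {stage.value} -> {gating_factor(stage)}")
```

Using an ordered enum makes comparisons such as `stage >= Stage.REAL_WORLD_ADVANTAGE` straightforward, which is the kind of count a funder could track alongside qubit numbers.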

In essence, Google’s five-stage framework not only diagnoses where we stand on the road to useful quantum computing, but also prescribes remedies for speeding up the journey. It urges the community to widen the pipeline in the middle stages, lest we end up with powerful quantum hardware and too few proven applications to run.

A Quick Comparison: Google vs. IBM and Others

Google is not alone in charting milestones toward quantum advantage, but its framework has a distinct flavor.

IBM, for example, has been discussing the era of “quantum utility” – defined as using today’s quantum processors to solve problems at a scale beyond brute-force classical methods (even if not provably faster than all classical approaches). In 2023, IBM researchers demonstrated a 127-qubit computation that reached an accurate result for a challenging simulation problem, something impossible by direct classical computation (relying instead on classical approximations). IBM dubbed this evidence of quantum utility, marking “the first time in history” that a quantum computer tackled a meaningful task beyond classical brute force. Notably, IBM’s definition of utility stops short of full Stage II/III quantum advantage – it doesn’t claim the quantum method beat all classical methods, only that it achieved a useful result at a scale where exact classical computation was infeasible. In Google’s framework terms, one might say IBM has demonstrated something between Stage II and Stage III on certain problems: quantum computers providing a new alternative for large-scale simulations (useful, albeit not yet outperforming the best classical algorithms). IBM expects the path to true quantum advantage will be incremental – a “growing collection of problems” where quantum beats classical will accumulate over time, rather than one big leap. This aligns well with Google’s staged model, though IBM’s messaging focuses on near-term utility (on noisy devices) whereas Google is largely painting the picture for fault-tolerant systems where clear advantage is eventually attained.

Other players like Quantinuum and academic groups have set technical benchmarks chiefly around hardware performance – e.g. achieving “three nines” fidelity (99.9% two-qubit gate fidelity) or breaking quantum volume records – and around error correction milestones. Quantinuum’s 2025 announcement of a high-fidelity logical T-gate (a non-Clifford gate) is a prime example. It was hailed as proof that all ingredients for fault tolerance are now in hand, giving credibility to timelines for a full FTQC by the end of the decade. These achievements map to Stage IV in Google’s framework: they are about reducing error rates and resource overhead for known algorithms (ensuring we can implement the algorithms when hardware scales up). Indeed, Google cites the steady progress in Stage IV resource reduction as one of the success stories so far. What the five-stage framework adds, relative to such hardware-centric roadmaps, is a complementary focus on algorithmic readiness. It implicitly asks: by the time we have, say, 100 logical qubits, will we have a Stage III application to run that truly needs them? If not, then we risk a gap where hardware outpaces our problems – a scenario Google wants to avoid by encouraging more work in Stage II and III now.

In summary, IBM’s “quantum utility” and similar milestones emphasize the hardware’s capability and the quantum computer’s performance. Google’s five-stage framework emphasizes the application’s lifecycle and the community’s capability to find and prove useful quantum algorithms. They are two sides of the same coin – and ideally, progress in both should advance in tandem. As Google’s team writes, it’s critical to match investment in hardware with algorithmic progress, providing “clear evidence of [quantum computing’s] future value through concrete applications”. The five-stage model is one way to measure that algorithmic progress in a structured, transparent way.

Outlook: A Roadmap for the Quantum Community

Google’s five-stage framework could serve as a common reference for researchers, companies, and funding agencies to discuss where we are and what’s needed next on the road to practical quantum computing. It highlights that while we’ve made impressive gains in Stage I (theory) and Stage IV (engineering), the toughest challenges now lie in the middle: translating theoretical quantum speedups into verifiable, hard problem instances (Stage II) and into valuable real-world use cases (Stage III). By identifying these weak links, the framework effectively calls for a realignment of R&D priorities. For technologists and practitioners, it suggests focusing on new algorithms and problem classes now – to have a pipeline of proven applications ready when quantum hardware crosses the fault-tolerance threshold.

Will this framework also help predict when we’ll achieve FTQC and specific milestones like CRQC? Potentially, yes – if we track how applications move through the stages. For example, if a dozen high-value problems reach Stage III by the late 2020s and a few hit Stage IV resource parity, that would be a strong indicator that useful FTQC (Stage V deployments) will soon follow once adequate hardware is online. Conversely, if hardware arrives at a million-qubit scale but most algorithms are still stuck at Stage II, that spells trouble (or at least a delay in realizing value). In this way, the framework could become a benchmarking tool for “quantum readiness.” In fact, I offer a CRQC Readiness Benchmark and Q-Day estimator that implicitly weigh hardware progress against algorithm progress, concepts very much in the spirit of Google’s stage-gated model.
