Google Quantum AI’s Surface Code Breaks the Quantum Error Threshold


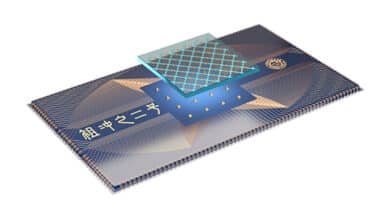

December 31, 2024 – In a landmark experiment, Google Quantum AI researchers have demonstrated the first quantum memory operating below the surface code error-correction threshold on a superconducting processor. Using two new “Willow” quantum chips of 72 and 105 qubits, the team ran surface code error-correction cycles whose encoded (logical) qubit outperformed the best physical qubits, a long-sought milestone in quantum computing. For the larger distance-7 surface code (spanning 101 physical qubits), they measured a logical error rate of only ~0.143% per cycle, about half the error of a smaller distance-5 code on the same device. This >2× error suppression for each step up in code distance (Λ ≈ 2.14) is a clear signature of below-threshold operation. In practical terms, the logical qubit’s lifetime more than doubled compared to even the best single qubit on the chip. The result, reported in Nature, marks the first time a surface code has produced a logical qubit that outlives its best physical qubit, validating a core principle of fault-tolerant quantum computing.

A Quantum Memory Beyond Break-Even

Crucially, Google’s distance-5 and distance-7 surface codes achieved “break-even” and beyond, meaning the error-corrected logical qubit survived longer than any of its constituent physical qubits. Previous surface code experiments had hinted at QEC scaling behavior, but none had crossed the point where error correction truly outpaces decoherence. The surface code, which arranges qubits in a 2D grid with nearest-neighbor entangling gates, has long been a leading candidate for quantum memory. However, it demands physical gate error rates below a critical threshold (around 1% or less) to realize exponential suppression of errors with increasing code distance. The Google team’s processors met this demanding criterion: high-fidelity transmon qubits (average two-qubit gate fidelity around 99.7%) combined with stability enhancements and faster operation cycles. Under these conditions, enlarging the code from distance 5 to 7 lowered the logical error probability per cycle from ~0.3% to ~0.14%, roughly a 2× improvement. This is the behavior expected below threshold: each increase of the code distance by two divides the logical error rate by a roughly constant factor Λ, so errors fall exponentially as the code grows.
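The scaling rule can be illustrated with a short back-of-the-envelope sketch. It uses only the rounded error rates quoted above (~0.3% at distance 5, ~0.143% at distance 7). It estimates Λ as a simple two-point ratio and extrapolates to neighboring distances, assuming Λ stays constant. The helper names are illustrative, not taken from the paper.

```python
# Approximate per-cycle logical error rates quoted in the article.
EPS = {5: 0.003, 7: 0.00143}


def suppression_factor(eps_d: float, eps_d_plus_2: float) -> float:
    """Two-point estimate of Lambda: how much the per-cycle logical error
    drops when the code distance grows from d to d + 2."""
    return eps_d / eps_d_plus_2


def extrapolate(eps_ref: float, d_ref: int, d: int, lam: float) -> float:
    """Below-threshold model eps_d = eps_ref / lam ** ((d - d_ref) / 2).
    Only distances with the same parity as d_ref are meaningful."""
    if (d - d_ref) % 2:
        raise ValueError("surface code distances are compared in steps of 2")
    return eps_ref / lam ** ((d - d_ref) / 2)


lam = suppression_factor(EPS[5], EPS[7])  # about 2.1 with these rounded inputs
for d in (3, 5, 7, 9, 11):
    # Lambda > 1 (below threshold): every +2 in distance buys a constant-factor gain.
    # Lambda < 1 (above threshold) would make larger codes worse, not better.
    print(d, f"{extrapolate(EPS[7], 7, d, lam):.2e}")
```

With these rounded inputs, the two-point estimate comes out near 2.1. The reported Λ ≈ 2.14 comes from the paper's own analysis rather than this two-number ratio, so the two should not be expected to match exactly.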

Equally significant, the logical qubit outlived the physical hardware: the distance-7 logical memory preserved quantum information 2.4±0.3 times longer than even the best individual qubit in the array. This carries quantum information beyond the natural T₁/T₂ coherence time of any single transmon, a feat often described as passing the “break-even” point. It shows that the overhead of the code (using many qubits and gates to encode one qubit) has paid off in net error reduction. In other words, the quantum error-correction cycle is removing more entropy from the logical qubit than it introduces, resulting in a net gain in coherence time.

Real-Time Decoding at Million-Cycle Scales

Another breakthrough in this experiment was a real-time decoder that keeps pace with the device’s rapid 1.1 µs cycle time. Each round of the surface code produces dozens of syndrome bits (error signals), which classical software must interpret to identify and correct errors on the fly. On the distance-5 code, Google’s real-time decoder kept up with this stream for runs of up to one million QEC cycles, with an average decoder latency of about 63 µs. This is a remarkable engineering accomplishment: previous QEC demonstrations often relied on offline decoding or much slower cycles. Here, a custom decoding pipeline was integrated with the chip to process syndromes live without pausing the experiment. More accurate but slower decoders (a neural network and an ensemble of matching decoders) were used offline for the headline distance-7 results. The result is sustained quantum memory operation over extended periods (a million 1.1 µs cycles is about 1.1 seconds) while actively correcting errors as they occur.
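“Keeping pace” is mainly a throughput requirement. If decoding a round takes longer than the 1.1 µs it takes to produce one, unprocessed syndromes pile up, and the wait grows with every cycle. The toy single-worker queue below illustrates the effect. The per-round decoding cost is a hypothetical parameter. Real decoders process windows of rounds, often in parallel, so this is not a model of Google's decoder or of how its 63 µs latency arises.

```python
CYCLE_TIME_US = 1.1  # surface code cycle time quoted in the article


def backlog_after(n_cycles: int, decode_us_per_round: float) -> float:
    """Toy single-worker model (hypothetical): one syndrome round arrives every
    CYCLE_TIME_US and the decoder spends decode_us_per_round on each, in order.
    Returns how long (in µs) the decoder is still busy after the last round arrives."""
    finish = 0.0
    for k in range(1, n_cycles + 1):
        arrival = k * CYCLE_TIME_US
        # A round cannot start before it arrives or before the previous one is done.
        finish = max(finish, arrival) + decode_us_per_round
    return finish - n_cycles * CYCLE_TIME_US


# One million cycles at 1.1 µs each is about 1.1 seconds of continuous operation.
run_seconds = 1_000_000 * CYCLE_TIME_US * 1e-6

print(backlog_after(1000, 1.0))  # keeps up: backlog stays at one round's work
print(backlog_after(1000, 1.2))  # falls behind: backlog grows with every round
```

In the second case, the backlog grows by about 0.1 µs per cycle, so over a million-cycle run the decoder would fall roughly 100 ms behind. This is why throughput, not just speed on a single round, is the hard constraint.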

Maintaining below-threshold performance at such high speed and scale required not only excellent qubit fidelity but also tackling subtle error sources. In long repetition-code tests, the team observed rare correlated error events (bursts of failures, perhaps caused by cosmic rays or other glitches) occurring roughly once every hour. At about a microsecond per cycle, an hour is roughly 3×10^9 cycles, which is why these events show up as an error rate of around 10^(-10) per cycle. Because they do not shrink as the code grows, these infrequent large errors set a floor on the logical error rate and will need to be mitigated (through shielding or error-detection strategies) as systems grow. Nevertheless, even with those events, the logical qubit showed consistent improvement over physical qubits for the first time.
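The conversion from “once an hour” to a per-cycle rate is simple arithmetic, sketched below. It assumes that the repetition-code cycles run at about the same 1.1 µs as the surface code runs. It also treats the events as rare enough that the per-cycle probability is just one over the number of cycles between events.

```python
CYCLE_TIME_S = 1.1e-6  # assumption: repetition-code cycles take about the surface code's 1.1 µs


def per_cycle_rate(mean_interval_s: float, cycle_time_s: float = CYCLE_TIME_S) -> float:
    """Per-cycle probability of a rare event that happens on average once every
    mean_interval_s seconds (valid when events are far rarer than one per cycle)."""
    cycles_between_events = mean_interval_s / cycle_time_s
    return 1.0 / cycles_between_events


cycles_per_hour = 3600 / CYCLE_TIME_S
print(f"{cycles_per_hour:.2e} cycles per hour")        # prints 3.27e+09 cycles per hour
print(f"{per_cycle_rate(3600):.1e} events per cycle")  # prints 3.1e-10 events per cycle
```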

Why It Matters

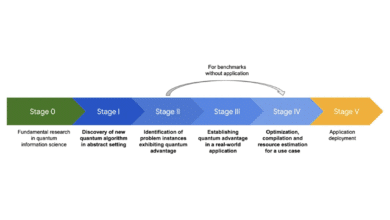

Google’s achievement is a pivotal validation of fault-tolerant quantum computing in a real device. It demonstrates that with sufficient qubit quality and architecture, one can build a quantum memory that scales favorably – larger codes yield better protection, as theory predicts. This milestone suggests that if the technology can be scaled up (to, say, thousands of qubits with similar performance), the resulting logical qubits could reach the ultra-low error rates (<10^(-10) per operation) needed for practical quantum algorithms. In the broader context, it’s the strongest evidence yet that quantum error correction is not just a theoretical idea but is actively extending quantum coherence in cutting-edge hardware.
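To make the scaling argument concrete, the sketch below extrapolates the measured distance-7 error rate (~0.143% per cycle) using the reported Λ ≈ 2.14, and asks what code distance a given target would require. This is a back-of-the-envelope model with several assumptions:

- Λ stays constant at much larger sizes.
- The correlated-error floor of about 10^(-10) per cycle has been mitigated.
- Qubit counts use the rotated surface code formula 2d² − 1. This gives 97 for d = 7, slightly fewer than the 101 qubits the experiment used, so treat it as a lower bound.

The function names are illustrative.

```python
EPS_D7 = 1.43e-3  # measured distance-7 logical error per cycle (from the article)
LAMBDA = 2.14     # reported suppression factor per +2 in code distance


def distance_for_target(target: float, eps_ref: float = EPS_D7, d_ref: int = 7,
                        lam: float = LAMBDA) -> int:
    """Smallest odd distance d >= d_ref whose extrapolated per-cycle error is
    <= target, assuming eps_d = eps_ref / lam ** ((d - d_ref) / 2) keeps holding."""
    if lam <= 1.0:
        raise ValueError("above threshold: growing the code never reaches the target")
    d = d_ref
    while eps_ref / lam ** ((d - d_ref) / 2) > target:
        d += 2
    return d


def surface_code_qubits(d: int) -> int:
    """Data plus measure qubits in a rotated surface code: d*d + (d*d - 1)."""
    return 2 * d * d - 1


for target in (1e-6, 1e-10):
    d = distance_for_target(target)
    print(f"target {target:.0e}: distance {d}, about {surface_code_qubits(d)} qubits")
```

Under these assumptions, a 10^(-6) target lands at distance 27 (1,457 qubits) and a 10^(-10) target at distance 51 (5,201 qubits) per logical qubit. This is consistent with the article's “thousands of qubits” framing.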

The experiment also showcases the readiness of superconducting qubit platforms for complex QEC tasks. Fast gate speeds (tens of nanoseconds) gave an advantage in running many cycles quickly, while improved fabrication and control kept errors low. By meeting the strict timing demands of real-time decoding and achieving hours-long stability, the Google team addressed several oft-cited hurdles on the road to large-scale quantum computers. There are still many challenges ahead – including reducing correlated errors, integrating logical operations (not just memory), and managing the immense complexity of more qubits – but a critical proof-of-concept has been delivered. As the authors note, their system’s performance, “if scaled, could realize the operational requirements of large-scale fault-tolerant quantum algorithms”.

In summary, the demonstration of a below-threshold surface code memory is a watershed moment, signaling that the quantum community is finally at the dawn of error-corrected computation.

Quantum Upside & Quantum Risk - Handled

My company, Applied Quantum, helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.