The Quantum Approximate Optimization Algorithm (QAOA) – A Beginner’s Guide

Table of Contents

Introduction: The Challenge of Combinatorial Optimization

Every day, we encounter problems that involve making a series of choices to optimize some outcome – from finding the shortest route that visits all your errands, to scheduling jobs on machines, to grouping people or objects optimally. These are combinatorial optimization problems, where the goal is to pick the best solution from a finite (but often enormous) set of possibilities. The catch is that the number of possible solutions usually grows exponentially fast as the problem size increases – a phenomenon often called the “combinatorial explosion”. For example, if you have 100 binary choices (bits) to assign, there are $$2^{100}$$ possible combinations – an astronomically large number. This exponential growth means that a brute-force search for the optimal solution quickly becomes infeasible on classical computers as the problem size grows.

Many combinatorial optimization problems of practical interest are classified as NP-hard, which informally means there is no known efficient (polynomial-time) algorithm to solve all instances exactly. In other words, as the problem gets larger, solving it exactly could take longer than the age of the universe with any algorithm we know today. Famous examples include the Traveling Salesman Problem, the Knapsack Problem, Max-Cut, job scheduling, vehicle routing, and many others. In each case, the space of possible solutions is huge, and finding the best one is computationally challenging. We often have to resort to heuristics or approximation algorithms on classical computers, which trade optimality for tractability.

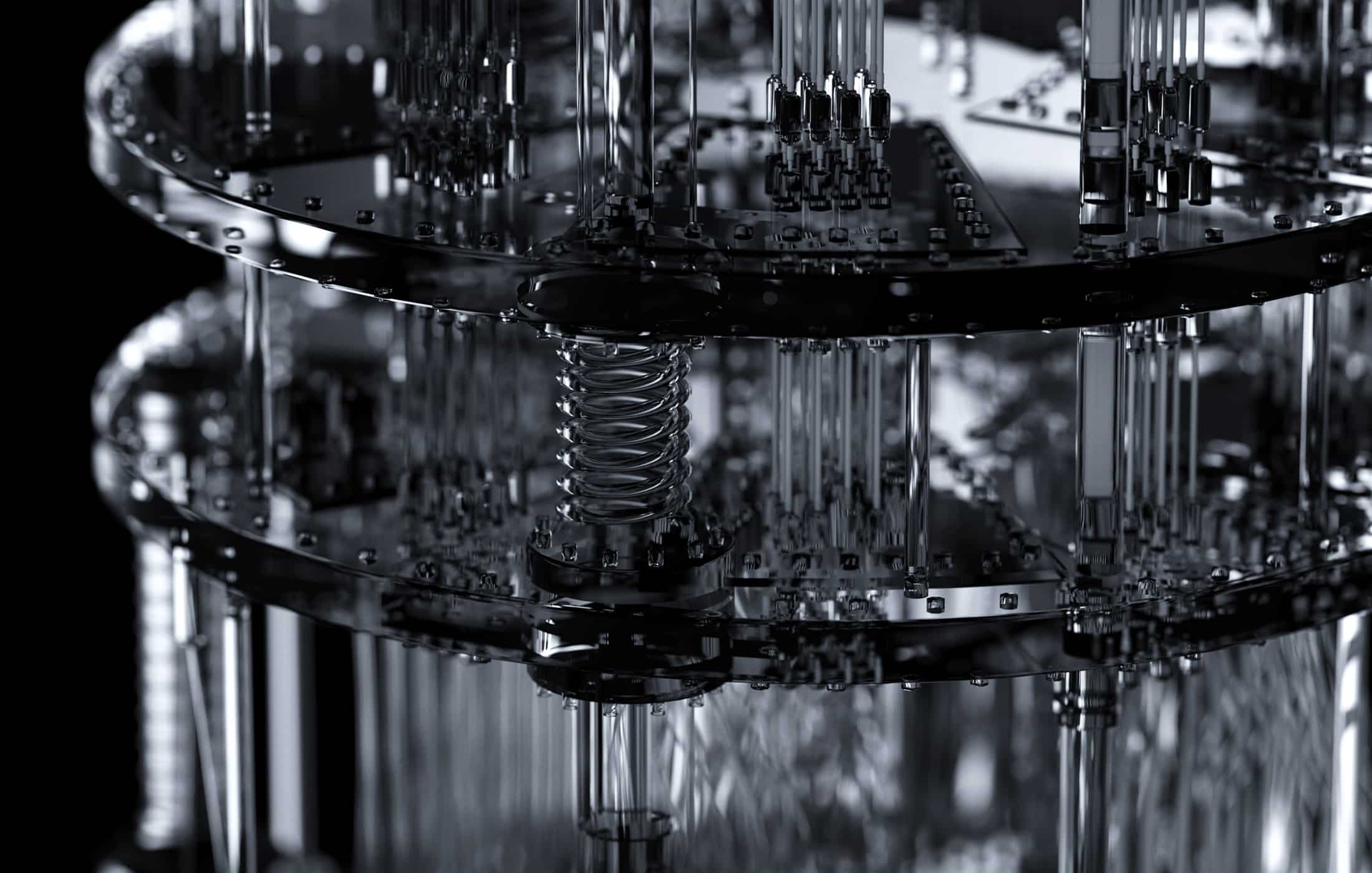

This is where quantum computing enters the scene with the hope of providing new tools for these hard problems. Quantum computers leverage phenomena like superposition (quantum bits can represent many possible states at once) and entanglement (correlations between qubits) to process information in ways classical machines cannot. A particularly promising approach for near-term quantum devices is to use variational quantum algorithms – hybrid methods that use a quantum computer to prepare parameterized quantum states and a classical computer to steer those parameters toward an optimal solution. The Quantum Approximate Optimization Algorithm (QAOA) is one of the leading examples of such a hybrid algorithm, tailored specifically for combinatorial optimization problems on noisy quantum hardware. QAOA doesn’t guarantee the optimal solution every time – as the name “approximate” suggests, it aims for good solutions – but it leverages quantum parallelism to explore the solution space in clever ways that might outperform some classical strategies.

QAOA in a Nutshell: Mixing Quantum and Classical Optimization

At its core, QAOA is a hybrid quantum-classical algorithm that constructs a special kind of quantum circuit (or “ansatz”) to represent a candidate solution, and then uses a classical optimizer to tweak that circuit for better results. It was introduced in 2014 by Edward Farhi and collaborators as an algorithm that “produces approximate solutions for combinatorial optimization problems” and that improves in quality as you increase a certain parameter p (which controls the circuit’s depth). In essence, QAOA is like a recipe with two key quantum ingredients that you alternate: one ingredient encodes the problem’s objective, and the other ingredient helps explore the search space. By alternating these ingredients p times (where p can be 1, 2, 3, …), you “cook up” a quantum state that hopefully concentrates a lot of probability on good solutions. Then you measure the quantum state to get an actual solution (a bitstring), and use classical feedback to adjust the cooking process (the angles or durations for each ingredient) to get an even better outcome. This loop repeats until we’re satisfied with the solution quality.

Let’s break that down more concretely. The two key quantum ingredients in QAOA are usually described in terms of Hamiltonians (a term from physics, but here you can think of a Hamiltonian simply as an energy function represented by an operator on the qubits):

- Cost Hamiltonian (H_C): This corresponds to the optimization problem we want to solve. We design H_C such that its lowest-energy state (ground state) encodes the optimal solution to our problem. For example, if we’re solving Max-Cut on a graph, we would set up H_C so that states (bitstrings) with many edges cut have lower energy (or higher “cost” value, depending on convention) than states with fewer edges cut. In practice, H_C is often expressed as a sum of terms for each element of the problem (each edge of a graph, each clause of a SAT formula, etc.), using Pauli Z operators to represent the binary variables. Don’t be intimidated by the term Hamiltonian – it’s basically a way to score each quantum basis state by how well it satisfies the optimization objective.

- Mixer Hamiltonian (H_M): This is a contrasting operator that does not commute with H_C and is used to “mix” or traverse the space of possible solutions. A common choice for H_M is a sum of Pauli X operators on each qubit, H_M = \sum_j X_j, which essentially tries to flip bits (since the Pauli X acting on a qubit flips it from 0 to 1 or vice versa). The role of the mixer is to ensure the algorithm can explore different candidate solutions. If you only had the cost Hamiltonian alone, you might get stuck in whatever state you started in. The mixer shakes things up.

With these two Hamiltonians in hand, QAOA proceeds as follows (per Farhi et al.’s construction ):

- Initialize the qubits in a simple starting state. A typical choice is the uniform superposition over all possible bitstrings, which you can prepare by setting each qubit to $$|0\rangle$$ and then applying a Hadamard gate so it goes to $$|+\rangle = \frac{|0\rangle+|1\rangle}{\sqrt{2}}$$. We’ll call this initial state $$|s\rangle$$. It’s an equal mix of all $$2^n$$ solutions for an n-qubit (n-bit) problem, giving the algorithm a “blank slate” of possibilities.

- Apply the cost Hamiltonian H_C for a while. In quantum computing, “applying a Hamiltonian” for a time $$\gamma$$ means applying a unitary operation $$U_C(\gamma) = e^{-i \gamma H_C}$$. You can think of this as “phase evolving” the state according to the cost function. States (bitstrings) that have higher cost (worse solutions) pick up different phases than states with lower cost. This step is sometimes called the phase separator or problem unitary, and $$\gamma$$ is a tunable parameter (often called a phase angle).

- Apply the mixer Hamiltonian H_M for a while. This is another unitary $$U_M(\beta) = e^{-i \beta H_M}$$ applied for some time $$\beta$$. If $$H_M = \sum X_j, then U_M(\beta)$$ is essentially a rotation of each qubit around the X-axis by an angle $$\beta$$. This has the effect of mixing amplitude between $$|0\rangle$$ and $$|1\rangle$$ states for each qubit – in other words, it lets the state explore different bit configurations. $$\beta$$ is another tunable parameter (often called a mixing angle).

- Repeat the above two steps p times. We choose a positive integer p, which is the number of layers (or “depth”) of the QAOA circuit. Each layer consists of a $$H_C$$ application followed by a $$H_M$$ application. After p layers, our quantum state (call it $$|\psi(\boldsymbol{\gamma}, \boldsymbol{\beta})\rangle)$$ is: $$|\psi(\boldsymbol{\gamma}, \boldsymbol{\beta})\rangle = U_M(\beta_p) U_C(\gamma_p) \;\dots\; U_M(\beta_1) U_C(\gamma_1) \;|s\rangle$$, where $$\boldsymbol{\gamma} = (\gamma_1,\dots,\gamma_p)$$ and $$\boldsymbol{\beta} = (\beta_1,\dots,\beta_p)$$ are the sets of all $$2p$$ parameters. For p=1, we just do one $$U_C$$ and one $$U_M$$; for p=2, $$U_C U_M U_C U_M$$; and so on. The higher the p, the more complex (and potentially powerful) the resulting state can be, because we’re giving the quantum circuit more opportunity to entangle qubits and sculpt the probability distribution of solutions. In fact, Farhi et al. noted that the quality of the solution improves as p is increased, in principle approaching the optimal solution as p becomes large. However, larger p also means a deeper circuit which is harder to run on real hardware due to noise, so there’s a trade-off in practice.

- Measure the quantum state to get a candidate solution. After all layers, we measure each qubit in the computational basis (|0/1〉basis). This yields a bitstring (e.g., 0101…10), which is one candidate solution to our problem. Because of how we constructed the state, the measurement outcomes are biased towards states with lower energy under $$H_C$$ (i.e. better solutions) – at least, that’s the idea if our parameters were chosen well. One can repeat the measurement many times to build up statistics or find multiple candidate solutions.

- Use a classical optimizer to tweak the parameters ($$\boldsymbol{\gamma}, \boldsymbol{\beta}$$). We need a strategy to choose the angles $$\gamma_i, \beta_i$$ that we used in those unitary operators. Initially, we might just guess some random angles or use educated guesses (maybe small angles, or angles inspired by related known results). Then, we evaluate how good the resulting state was. Typically, we can compute the expectation value of the cost Hamiltonian $$\langle \psi(\boldsymbol{\gamma}, \boldsymbol{\beta}) | H_C | \psi(\boldsymbol{\gamma}, \boldsymbol{\beta}) \rangle$$ by sampling many measurements – this expectation gives us an estimate of the average cost objective for the current quantum state. The goal is to maximize this expectation (if we set it up so high expectation = good solution quality) or minimize it (if lower energy = better, depending on formulation). A classical algorithm (like gradient descent, Nelder-Mead, COBYLA, etc.) then adjusts the $$\gamma$$ and $$\beta parameters$$ to try to improve that expectation value. We iteratively loop: run the quantum circuit with current parameters, compute the cost expectation, update parameters towards better expectation. Over time, the parameters hopefully converge to a set that yields a state with a high concentration of good solutions. Once optimized, we do a final measurement of the quantum state to output a solution.

In summary, QAOA uses the quantum computer to prepare a cleverly biased superposition of candidate answers (biased by the parameters we feed in), and a classical computer to learn which quantum bias produces the best outcomes. It’s a quantum version of “guess-and-check-and-update.” The algorithm is variational because it variationally adjusts parameters to extremize an objective (much like how variational algorithms in classical ML train parameters to minimize a cost function). One big reason QAOA is so appealing is that for a fixed p (say p=1 or p=2), the quantum circuit is relatively shallow – the depth scales linearly with p and with the number of terms in H_C. This shallow depth is crucial for near-term NISQ (Noisy Intermediate-Scale Quantum) devices, which cannot maintain coherent operations for very long. In fact, QAOA was originally proposed with the idea that it might be robust enough to run on noisy hardware while still potentially offering an advantage for optimization problems. It’s essentially a digitized, short version of adiabatic quantum optimization: instead of slowly evolving a Hamiltonian over a long time, QAOA jumps between H_C and H_M in bursts, with tunable durations, to approximate that evolution in a shorter time. (Indeed, the structure of QAOA is inspired by the Quantum Adiabatic Algorithm, with the insight that even a non-adiabatic, stepwise application can work if parameters are optimized.)

We’ve outlined the broad mechanics. Now let’s see how this plays out with a concrete example – the Max-Cut problem – which was the original problem used to showcase QAOA and is a canonical benchmark for it.

Example: How QAOA Solves Max-Cut

Max-Cut is a classic NP-hard problem that’s easy to describe: given a graph (a set of vertices connected by edges, each edge maybe having a weight), the goal is to divide the vertices into two groups such that the sum of weights of edges that cross between the groups is as large as possible. In other words, we want to “cut” as many edges (with high weight) as possible by the partition. If you assign each vertex a bit value (0 or 1) indicating which group it belongs to, an edge contributes to the cut if and only if the two endpoints have different bits. Max-Cut is interesting because it’s simple to state but, in general, hard to solve optimally. It also maps nicely onto the kind of binary optimization QAOA can handle – each vertex is a binary variable.

Formulating Max-Cut for QAOA: We need to construct a cost Hamiltonian H_C for Max-Cut. One common formulation is: for each edge (i,j) with weight w_{ij}, add a term to the cost that gives 1 (or w_{ij}) if the edge is cut and 0 if it’s not. Using qubit language, we can let the qubit’s $$|0\rangle and |1\rangle$$ states represent the two different partitions for a vertex. We introduce a binary variable z_i for each vertex i, which can take values +1 or –1 (think of z=+1 corresponding to $$|0\rangle$$ and z=-1 to $$|1\rangle$$, for example). Then one way to write the cost function is:

$$$C_{ij} = \frac{1}{2} w_{ij} (1 – z_i z_j)$$$

For a single edge, this formula yields C_{ij} = 0 if z_i = z_j (both vertices in the same group, so the edge is not cut) and C_{ij} = w_{ij} if z_i and z_j are opposite (edge is cut). Summing this over all edges gives a total cost equal to the weight of the cut (since each cut edge contributes its weight). We then translate z_i into a quantum operator. We can use the Pauli Z operator Z_i, which has eigenvalues +1 and –1 on $$|0\rangle$$ and $$|1\rangle$$ respectively, to represent the value of z_i for qubit i. So the cost Hamiltonian for Max-Cut can be written as:

$$$H_C = \sum_{(i,j)\in E} \frac{w_{ij}}{2}(I – Z_i Z_j)$$$

where I is the identity (so that I – Z_i Z_j has eigenvalue 0 if qubits are aligned and 2 if anti-aligned). Up to a constant factor, minimizing energy under this H_C corresponds to maximizing the cut value. The ground state of H_C (the lowest energy state) ideally corresponds to the optimal cut solution.

The mixer Hamiltonian for QAOA in this case (and in most cases) we choose as $$H_M = \sum_i X_i$$ (summing Pauli X on each qubit). This choice works because H_M will flip qubits between $$|0\rangle$$ and $$|1\rangle$$, allowing the state to explore different partitions of the graph.

Now, what does one round of QAOA (p = 1) do for Max-Cut? Starting from the uniform superposition $$|+\,+\,+\cdots +\rangle$$ (all qubits in $$|+\rangle$$, which is an equal superposition of all $$2^n$$ bit assignments), we apply $$U_C(\gamma) = e^{-i\gamma H_C}$$. This operation will multiply each basis state $$|z_1 z_2 \dots z_n\rangle$$ by a phase $$e^{-i\gamma C(z)}$$, where C(z) is the total cut cost for that bitstring (since the eigenvalue of H_C on that state is proportional to that cost). States with higher cut value get a different phase rotation than those with lower cut value. This is analogous to what happens in Grover’s algorithm or phase oracle algorithms: good solutions’ amplitudes get marked by a phase.

Then we apply $$U_M(\beta) = e^{-i \beta \sum X_i}$$. Because $$e^{-i \beta X_i}$$ is just a rotation of qubit i around the X-axis by angle \beta, when applied to $$|+\rangle$$ states it has the effect of partially reintroducing superposition between $$|0\rangle$$ and $$|1\rangle$$. Intuitively, $$U_M(\beta)$$ takes the phase information that was “painted” onto the amplitudes by $$U_C(\gamma)$$ and tries to turn some of that into amplitude differences (probabilities) by rotating the state. The result after $$U_C(\gamma) U_M(\beta)$$ is a complicated superposition of bitstrings where, if we chose $$\gamma$$, $$\beta$$ well, the amplitudes interfere constructively on high-cut states and destructively on low-cut states. Measuring this state will then yield a bitstring that hopefully corresponds to a good cut.

Of course, at p=1 we have only two parameters $$\gamma_1, \beta_1$$ to play with. We won’t generally hit the optimal solution in one shot for large graphs – but we might get an approximation that’s better than random. Farhi et al. showed for example that with p=1, QAOA on 3-regular graphs guarantees a cut at least 0.6924 times the optimal (about 69.24% of optimal), which was notable because it briefly beat the best-known classical approximation ratio for that specific case. (Subsequently, classical algorithms improved, as often happens; the interplay between quantum and classical algorithm advances is ongoing.) If we increase to p=2 or p=3, we add more alternating layers, introducing more parameters $$\gamma_2,\beta_2,\dots$$ and more opportunities for constructive interference toward the optimal solution. In theory, as $$p \to \infty$$, QAOA can approximate the adiabatic algorithm and reach the optimal solution (though p might need to scale with problem size in a way that could be inefficient – an active area of research).

From a circuit perspective, a QAOA circuit for Max-Cut on, say, a graph of 5 nodes would look like: start with Hadamards on all 5 qubits (to create $$|+++++\rangle$$), then for each edge, apply a two-qubit gate that corresponds to $$e^{-i\gamma (I – Z_i Z_j)/2}$$ (this can be implemented with CNOTs and single-qubit rotations in practice), then apply single-qubit X-rotations by angle \beta on all qubits. That’s one layer. Repeat that sequence p times. The depth of the circuit is basically $$O(p \times (\text{# of edges}))$$ in terms of two-qubit operations, but many of those can potentially be done in parallel if the hardware allows, since each edge’s phase gate acts on a different pair. This shallow-depth, structured circuit is a big reason why QAOA is feasible on current hardware for small instances.

After running the circuit, measuring the qubits yields one candidate cut. By repeating the circuit and measurement many times, one can estimate the expected cut weight for the current parameters $$\boldsymbol{\gamma}, \boldsymbol{\beta}$$. The classical optimizer then, for instance, nudges $$\gamma_1$$ a bit up or down and sees if the expectation improved, and similarly for $$\beta_1$$, or uses more sophisticated techniques to find better parameters. This hybrid loop continues until, say, the improvement is below a threshold or a time limit is reached. The best bitstring observed is taken as the output solution.

Even with just this one example, you can see how QAOA is problem-agnostic in its template but problem-specific in the details: the overall algorithm is the same for any combinatorial optimization task, but we plug in a different H_C for each problem (and possibly a correspondingly different optimal H_M choice if needed). Max-Cut uses a simple Ising form for H_C. If we wanted to solve a different problem, like a scheduling problem or a SAT formula, we would encode that into H_C instead (often as an Ising or QUBO model, since any NP problem can be reduced to a Boolean form). QAOA has been formulated for many such problems – graph coloring, traveling salesman (though encoding TSP can be heavy), maximum independent set, portfolio optimization, etc. The algorithmic steps remain the same.

Beyond Toy Problems: Applications of QAOA in the Wild

QAOA is not just a theoretical idea; it’s actively being explored as a tool for a variety of optimization challenges. Here we survey how QAOA is being applied or tested in different domains, from traditional optimization problems like scheduling and logistics to more unconventional uses in machine learning and quantum chemistry/physics.

- Logistics and Supply Chain Optimization: Logistics problems (like vehicle routing, supply chain management, warehouse picking optimization) are combinatorial by nature and often NP-hard. A quintessential example is the vehicle routing problem (VRP), where a fleet of trucks must deliver to a set of locations with minimal cost. Classical solutions use heuristics, but quantum algorithms promise a new approach. QAOA has been considered for route optimization by formulating VRP or related problems as QUBOs. In fact, some early experiments suggest that QAOA can find better routes than certain classical methods by exploiting quantum superposition to evaluate many possible routes in parallel. Companies like Volkswagen and DHL have dabbled in quantum computing for route optimization and scheduling, indicating real industrial interest. While full-scale logistics problems are too large for current quantum hardware, smaller instances or simplified versions have been tackled. The hope is that as quantum hardware scales, QAOA could handle sizes of practical relevance, potentially improving how goods are routed through complex supply chains.

- Job Scheduling and Manufacturing: Scheduling problems (assigning jobs to machines or tasks to time slots under constraints) are critical in manufacturing and computing (think of scheduling in factories or tasks in cloud computing). Researchers have applied QAOA to the Job Shop Scheduling Problem (JSSP), a notoriously tough optimization problem in operations research. One recent study demonstrated how to encode JSSP for QAOA and even introduced more efficient encodings that drastically reduce the number of qubits required. By using clever encoding techniques, they made it feasible to solve small instances of scheduling on current quantum processors, which usually have limited qubit counts. The results showed that QAOA could indeed find valid schedules and sometimes improve efficiency metrics like makespan (total completion time), although these were still very small scales. The fact that Siemens and other industry players co-authored such work shows the strong interest in applying QAOA to real-world scheduling optimization. These are baby steps, but important ones toward quantum-optimized manufacturing processes in the future.

- Machine Learning and AI: At first glance, machine learning might seem unrelated to combinatorial optimization, but there are overlaps. Many ML tasks involve discrete choices or can be formulated as optimization problems. For example, feature selection in a model (choosing a subset of features that gives the best predictive performance) is a combinatorial task. So is clustering data into groups (which relates to graph partitioning problems), or optimizing the structure of certain AI models. QAOA, being a combinatorial optimizer, can potentially assist in these areas. Researchers have begun exploring QAOA for tasks like community detection in networks or clustering, by encoding those as cost Hamiltonians. There’s also interest in using QAOA or its variants in quantum-enhanced ML algorithms – for instance, a QAOA-like approach could be used to train certain quantum Boltzmann machines or do discrete optimization inside a larger ML pipeline. While still in early research, one could imagine using QAOA to solve the combinatorial sub-problems that appear in ML (such as optimizing decision tree splits, selecting optimal neural network architectures, etc.). Additionally, techniques like Quantum Neural Networks or variational quantum classifiers suffer from similar training challenges as QAOA (because they also rely on tuning parameters in a quantum circuit). Insights from QAOA about navigating parameter space or avoiding barren plateaus (discussed later) could transfer to those quantum ML models. In summary, QAOA’s direct applications to machine learning are still mostly exploratory, but any place in ML that needs a discrete optimizer might benefit from QAOA down the line. Some have even envisioned hybrid approaches where a quantum optimizer (QAOA) works in tandem with classical AI, e.g., using QAOA to optimally configure a classical machine learning model – a sort of quantum bolt-on to classical AI.

- Quantum Chemistry and Materials Science: Quantum chemistry typically involves continuous optimization (finding minimum energy configurations of molecules, which is what the Variational Quantum Eigensolver (VQE) is designed for). QAOA, on the other hand, deals with discrete problems. However, there is a surprising crossover: certain chemistry or physics problems can be mapped onto spin models or combinatorial formulations. For example, finding the ground state of a complex magnetic material or a frustrated spin system can be framed as an optimization of a discrete Hamiltonian. Researchers have successfully used QAOA to approximate ground states of certain spin-lattice models in condensed matter physics. A recent study prepared the ground state of a frustrated magnet (the Shastry-Sutherland model, a 2D quantum magnet) on a small 9-qubit ion-trap quantum computer using QAOA, and it matched the expected results with high probability. This is a big deal because it shows QAOA can act as a heuristic quantum simulator for certain many-body systems, potentially finding low-energy states that are hard to get classically. In quantum chemistry, one could envision using QAOA for problems like finding the arrangement of atoms in a molecule that minimizes energy if that problem is translated into a discrete form (perhaps via a lattice model or an Ising formulation of a molecular configuration problem). While VQE remains the go-to for molecular ground states, QAOA or QAOA-inspired techniques might help with discrete aspects, like assigning spin configurations or optimizing dihedral angle configurations that are effectively discrete choices. Another angle is using QAOA in quantum error correction codes design or quantum control, which are sort of like “combinatorial chemistry” of quantum circuits – these are niche, but people have tried formulating error-correcting code design as an optimization that QAOA could tackle. Overall, the use of QAOA in chemistry and materials is nascent but shows that the algorithm is quite versatile beyond just “textbook” combinatorial problems.

- Finance and Portfolio Optimization: Portfolio optimization (deciding how to allocate investments to maximize return for a given risk, etc.) can be formulated as a binary optimization (e.g., include or exclude certain assets in a portfolio under constraints). There have been explorations of using QAOA for such financial optimizations by encoding them as Ising models. Some financial institutions and startups have tested small portfolio instances on quantum hardware using QAOA, comparing results with classical solvers. Similarly, things like option pricing or risk analysis sometimes boil down to optimization problems where QAOA could play a role. Thus far, quantum hardware isn’t at a point to solve anything beyond toy-sized finance problems, but interest is there.

- Other Domains: Practically any field with hard optimization problems is eyeing QAOA. This includes telecommunications (e.g., optimizing network configurations or error-correcting code parameters), government and defense (allocating resources, scheduling missions), energy (optimizing power grid configurations or traffic light schedules), and more. The key is always: can you encode your problem’s cost function into a qubit Hamiltonian? If yes, QAOA might be able to tackle it. The good news is there is a whole field of binary optimization (Ising or QUBO formulations) that has encoded many problems already – QAOA can readily take those formulations as input.

Now, it’s important to note that most of these applications are still in the research or proof-of-concept stage. Current QAOA experiments often involve small problem instances (like graphs with maybe 10–20 nodes, or a few jobs in a scheduling problem) – sizes that classical algorithms can handle in microseconds. The point of these experiments is not to beat classical computing yet, but to learn how QAOA behaves, how to best encode problems, and how it scales. It’s a bit like the early days of classical computing where people solved small examples on room-sized computers to prove the concept.

Let’s look at some recent achievements and milestones that show how far QAOA has come in practice:

- Google’s 23-Qubit QAOA Demonstration (2021): A team at Google ran QAOA on their 53-qubit Sycamore superconducting processor for solving MaxCut and the Sherrington-Kirkpatrick spin glass on up to 23 qubits (they limited to 23 for the non-planar problems due to mapping constraints). They reported that QAOA achieved a consistent approximation ratio independent of problem size for MaxCut instances that fit the chip’s native connectivity, and importantly, that the solution quality improved as they increased the circuit depth p (they observed better performance going from p=1 to p=3, for example). This was one of the first times a quantum algorithm’s performance clearly got better with added circuit depth on real hardware for an optimization problem – a positive sign that QAOA isn’t fundamentally stuck at very shallow depths. For more irregular graph problems that required additional swaps (non-native to the hardware), they found that performance degraded as problem size grew, but even with circuits involving “several thousand gates,” QAOA still did better than random guessing. This highlighted both the promise and the challenges: QAOA can work on hardware, but mapping problems that don’t align with hardware connectivity costs a lot of extra gates (overhead), which hurts performance in the NISQ era. The Google team suggested using QAOA not just to solve problems, but also as a benchmark for quantum processors – because it uses essentially the full stack (multiple layers of two-qubit gates, etc.) and can showcase the device’s effective computational capability on a holistic task.

- IBM’s 127-Qubit QAOA Tests (2022-2024): IBM has been pushing the envelope with larger quantum processors (127 qubits as of their “Eagle” chip, with 433-qubit and larger chips on the roadmap). Running QAOA at those scales is extremely challenging due to noise, but IBM researchers have undertaken studies to see how far one can go. In one experiment, they ran QAOA on instances using 127 qubits at p=1,2,3 and observed that up to p=2 they could still see improvement, but at p=3 the noise started to overwhelm the gains. For smaller 27-qubit setups, improvement was visible up to p=3 before plateauing. They also studied a phenomenon called parameter transferability – the idea that the optimal angles $$\gamma_i, \beta_i$$ found for a small instance might also work (or be a good starting point) for larger instances of a similar type. They found evidence of parameter concentration/transfer on real hardware: optimized angles at p=1 for one size could be applied to bigger sizes with similar results, indicating some robustness in choosing parameters. Moreover, IBM’s team developed better circuit transpiling strategies for QAOA, since connecting qubits that are far apart on a chip might require a chain of SWAP operations that add depth. By optimizing how they lay out the problem onto the heavy-hex lattice of IBM chips, they reduced circuit overhead. Even so, their studies concluded that to make QAOA truly competitive, improvements in gate fidelity and speed are needed, as well as reducing the large number of measurements (shots) required. They demonstrated a QAOA on 7- and 27-qubit problems using IBM’s Qiskit Runtime with some success, but scaling to dense problems on 127 qubits required error rates far lower than currently available (deep below the fault-tolerant threshold). These results, while showing current limitations, are valuable because they pinpoint what needs to get better as hardware evolves (fidelity, connectivity, etc.).

- Academic breakthroughs in scaling and performance: Outside of the big companies, many academic collaborations are advancing QAOA. In late 2023, a team involving national labs and universities provided numerical evidence of a potential scaling advantage of QAOA over classical algorithms for certain problems. They studied random instances of an NP-hard problem (like 8-SAT and a problem called “Low Autocorrelation Binary Sequences”) and found that in simulations, the time-to-solution for QAOA with fixed depth grew slower (in terms of exponential base) with problem size than the best known classical heuristics. For example, one report showed QAOA’s effective search time scaling as ~$$1.23^N$$ for random 8-SAT (with N variables) versus ~1.25^N for a classical solver, and even better scaling (~$$1.11^N$$) if QAOA was combined with a quantum amplitude amplification trick. While these differences might not sound huge, in exponential terms any reduction in the base is significant as N grows. They also ran some of these QAOA instances on massive parallel GPU simulators (emulating up to 40 qubits) to project behavior for larger sizes. The bottom line is that there are hints QAOA could outperform classical algorithms in the scaling sense on certain carefully chosen hard problems, if we had ideal quantum hardware. That’s a tantalizing hint of quantum advantage in the future.

- Variations and improvements of QAOA: There’s active development of improved versions of QAOA. For instance, researchers have explored “warm-start” QAOA, where a classical heuristic solution is used to initialize the quantum state in a better starting superposition (not uniform, but biased by the classical solution) and then QAOA refines it – this can speed up convergence. Another variant is Recursive QAOA (RQAOA), which interleaves QAOA rounds with fixing certain variables and reducing the problem size, effectively a divide-and-conquer strategy. RQAOA has been shown in some cases to improve the solution quality systematically for things like MaxCut as you recurse, at the cost of more measurements. There are also proposals for analog QAOA and continuous QAOA blending quantum annealing with the digital QAOA approach. Even the structure of the mixer can be adapted for problems with constraints (e.g., mixers that preserve the number of 1s for problems where that’s required). Each of these developments often comes with its own set of results and early experiments, contributing to the overall progress of QAOA.

All these achievements show that QAOA is not just a theoretical curiosity; it’s being actively tested and scaled on real devices, and it has a community pushing its boundaries. That said, we should be candid: QAOA has not yet outperformed classical algorithms on any problem of practical size. The instances where QAOA showed better scaling were still tiny (N up to 40 or so) or in simulation. On real hardware, noise and qubit limitations mean we’re far from a clear “quantum advantage.” But the research is accelerating, and each incremental advance (a slightly bigger graph solved, a slightly better approximation ratio achieved, a new trick to pick angles) is bringing that possibility closer.

Open Challenges and Research Directions

While QAOA is promising, there are several open challenges and active research questions that need to be addressed for it to reach its full potential. As someone new to quantum computing, it’s good to be aware of these – both to temper expectations and to appreciate where innovation is needed (perhaps even by you in the future!). Here are some of the key issues:

- Choosing (and Optimizing) Good Parameters: QAOA’s performance hinges on finding the “right” values of the 2p angles $$\gamma_1,\ldots,\gamma_p,\; \beta_1,\ldots,\beta_p$$. For small p, sometimes one can analytically or empirically find good values (for example, there are known optimal angles for certain symmetric cases at p=1). But in general, as p and problem size grow, the parameter landscape becomes very complex – full of local optima and flat regions. In fact, it’s been shown that optimally tuning QAOA parameters for arbitrary problems is itself an NP-hard problem in the worst case! The parameter space is continuous and high-dimensional, and standard classical optimizers can get stuck or require many function evaluations (which means many quantum circuit runs, which are expensive). Researchers are exploring various strategies here:

- Heuristics and Initialization: Using educated guesses for initial parameters (maybe extrapolating from smaller instances or lower p). As mentioned, parameter transfer is a promising idea: optimize angles on a small instance or a simplified problem, then reuse them for a larger instance. Empirical studies have observed a phenomenon called parameter concentration where optimal parameters don’t vary too much as the problem size grows for certain random problem ensembles. This suggests one could optimize on small cases and apply to big cases (at least for similar graph structures), significantly cutting down the cost of parameter search.

- Machine Learning Approaches: There’s work on using machine learning to predict good QAOA parameters. For instance, one can train a model (perhaps a neural network or regression) on a bunch of solved instances (where you brute-force or heavily optimize QAOA angles for small cases) and have it predict angles for new instances. This is like a meta-learning or transfer learning approach for QAOA. Some use clustering or unsupervised learning on parameter landscapes, or even reinforcement learning to adjust angles on the fly.

- Alternate Ansätze: A different line of attack is to modify the QAOA ansatz itself to make it easier to optimize. There are proposals like the “Multi-Angle QAOA” where instead of one global angle $$\gamma$$ for the whole cost Hamiltonian per layer, you have separate angles for each term or each part of the Hamiltonian, increasing flexibility (at the cost of more parameters). Surprisingly, this can sometimes make optimization easier or achieve better results with the same or less depth. However, more parameters also means a potentially harder classical optimization problem, so it’s a balance.

- Gradient-based methods: If one can compute or estimate the gradient of the cost expectation with respect to the angles, one could use gradient descent or more advanced algorithms. There is a well-known parameter-shift rule that allows calculation of gradients on quantum hardware by evaluating the function at shifted parameters. This works for QAOA too, but it requires multiple circuit evaluations and the “barren plateau” issue (coming next) can make those gradients tiny.

- Barren Plateaus and Local Minima: A major concern for variational quantum algorithms (VQAs) in general (including QAOA) is the phenomenon of barren plateaus. This refers to the optimization landscape being essentially flat (zero gradient) in large regions, especially as the system size grows, making it exponentially hard for a classical optimizer to find a direction for improvement. Barren plateaus can be caused by having too random or too deep of an ansatz, or by noise in the quantum circuit (noise can also induce flatness in the landscape). QAOA, by its structured nature, was initially hoped to evade barren plateaus, and indeed for low-depth QAOA on specific problems, people have observed it doesn’t suffer the severe barren plateau that, say, a random deep circuit might. However, as p increases or for certain problem classes, optimization can still get very hard. The landscape of QAOA’s cost vs. parameters can have many local optima – like a multi-peaked mountain range – and broad flat regions where gradients are nearly zero. This means an optimizer might wander aimlessly (in a barren plateau) or get stuck on a suboptimal peak (a local optimum) and not find the best parameters. To mitigate this:

- Researchers study the structure of QAOA landscapes to understand where plateaus occur and where good solutions might lie. Some results tie this to properties of the problem instance or to symmetries.

- Techniques like adiabatically adjusting parameters or layer-by-layer training (optimize 1 layer, then add second, etc.) can sometimes avoid bad regions.

- Noise reduction is key because noise-induced barren plateaus are rigorously proven: even a little noise can flatten out the landscape exponentially in some cases. Error mitigation techniques, or circuit cutting, might help to reduce this effect on near-term devices.

- Ultimately, the barren plateau issue is an ongoing research front – a lot of smart minds are trying to find ways around it, because it affects not just QAOA but essentially all variational quantum algorithms.

- Hardware Limitations and Noise: Current quantum hardware is the biggest bottleneck for QAOA’s real-world performance. QAOA circuits involve many two-qubit gates (especially for a fully connected graph problem, each layer might require a two-qubit gate for every edge or interaction). Each gate introduces some error, and today’s superconducting and trapped-ion qubits have gate error rates on the order of 0.1% to 1%. When you compose tens or hundreds of them in a circuit, the probability of the circuit working without error falls off dramatically. Additionally, qubits decohere over time – and although QAOA is relatively fast (circuits can be run in microseconds on superconducting hardware), if p is large, the circuit may become too long to maintain coherence. The result is that beyond a certain circuit depth (which is hardware and problem dependent), adding more layers p doesn’t help because noise dominates, as seen in IBM’s experiments (beyond p=2 or 3 on 127 qubits, things got worse). Moreover, devices have limited connectivity – not every qubit can directly interact with every other. If your problem graph is not aligned with the hardware connectivity graph, the compiler has to insert additional swap gates to move qubits around, which adds even more gates (hence more depth and error). This was evident in Google’s non-planar MaxCut experiment, where having to compile to a planar chip reduced performance for larger non-planar problems. Some of the steps being taken:

- Error Mitigation: Techniques like extrapolating to zero noise or probabilistic error cancellation can be applied to QAOA outcomes to mitigate errors without full fault tolerance. This can improve the expectation value estimates, though it comes with overhead.

- Problem Mapping: There’s a lot of effort in finding embeddings of problems onto hardware graphs that minimize swaps. For example, if a problem is on a dense graph, one might split it or use clever ordering to embed onto a line or heavy-hex lattice efficiently. In some cases, minor gadget transformations can turn a dense problem into a sparser one at the cost of more qubits, trading one resource for another.

- Hardware improvements: Faster gates and lower error rates directly help QAOA. If two-qubit gate fidelity goes from 99% to 99.9%, the depth you can handle increases significantly. Some hardware (like ion traps) have all-to-all connectivity which spares the need for swaps, though their gate speeds are slower than superconducting. Each hardware platform (superconducting, ion trap, photonic, etc.) has pros/cons for QAOA (connectivity, speed, coherence). There’s even thinking about analog quantum simulators implementing QAOA steps more directly (e.g., using a programmable analog Hamiltonian for H_C and H_M).

- Scaling qubit count: Obviously, to tackle bigger problems we need more qubits. But more qubits often mean more noise and control issues. The largest experiments so far are still under 30 qubits for multi-layer QAOA. Going to, say, 100 qubits with depth 5 in a meaningful way will likely require further hardware breakthroughs or error correction.

- Performance vs Classical Algorithms: A more theoretical (but important) challenge is to understand when (if ever) QAOA actually outperforms the best classical algorithms. We know it can match some classical algorithms’ performance for certain problems at low depth, and for a moment had a better approximation for a specific case (regular MaxCut) until classical research caught up. The bar is always rising because classical algorithms aren’t static targets – new heuristics or even improved CPUs can raise the baseline that QAOA must beat. For QAOA to be truly useful, we need to identify problem instances or regimes where it has an edge. It might be that QAOA excels on very structured problems where classical methods struggle, or vice versa. Recent theoretical results like the Overlap Gap Property (OGP) suggest there may be inherent limitations: basically, certain hard instances have a solution landscape geometry (with many near-optimal solutions far from each other) that might stymie low-depth QAOA, requiring depth to grow with problem size (which is impractical in NISQ). If true generally, that would curb QAOA’s advantage unless we can get to higher depth regimes (perhaps with error-corrected quantum computers in the future). On the other hand, those scaling studies on specific problems hint that there could be an advantage if we choose the right scenario. Thus, an ongoing challenge is: find the sweet spot – the combination of problem type, size, and QAOA depth where it beats everything classical within the same runtime or resources. This might involve hybridizing QAOA with classical steps (not just as an optimizer, but maybe interleaved with classical improvement steps).

- Extending QAOA’s Framework: QAOA itself is one algorithm, but its framework of alternating operators can be extended. One open direction is handling constraints more naturally. If a problem has hard constraints (say you need exactly k of the variables to be 1, or other conditions), the basic QAOA might sample some invalid solutions. There are modified mixers that conserve constraints (like mixers that only transition between valid states). Designing these problem-specific mixers and proving they help is an active area. Another extension is combining QAOA with quantum subroutines – for example, one proposal combined QAOA with Grover’s algorithm for unstructured search to amplify the success probability, which is essentially what the amplitude amplification idea in the scaling study was. The combination (sometimes dubbed QAOA+) could amplify the chance of finding the optimal after QAOA concentrates amplitude in good regions. There’s also interest in how QAOA might integrate into a larger quantum algorithm (for instance, as a part of iterative quantum algorithms or quantum game theory approaches).

- Understanding QAOA’s Limits: Finally, there’s the theoretical work to delineate what QAOA cannot do or what its limitations are without higher depth. For example, there are proofs that depth-p QAOA cannot achieve better than XYZ approximation ratio for certain problems unless p grows at least on the order of \log(n) or more. Knowing these limits helps manage expectations and guides where to focus – maybe QAOA isn’t the right tool for some problems but is for others. It also spurs development of other algorithms (e.g., the Quantum Alternating Operator Ansatz is a generalization, or entirely different approaches like quantum annealers or other variational forms).

In summary, while QAOA has shown a lot of promise, turning it into a practical optimizer for large problems will require surmounting these challenges. The good news is that each challenge is an active research front, and progress is being made. For instance, better classical parameter-setting methods are being published, hardware is steadily improving, and our theoretical understanding of QAOA’s power is deepening each year. Even issues like barren plateaus, which sound ominous, are spawning creative solutions (like restricting the ansatz or designing layer-wise training schemes).

Conclusion

The Quantum Approximate Optimization Algorithm sits at the forefront of near-term quantum algorithms, representing both the hopes and the hurdles of the NISQ era. It provides an intuitive bridge between a tough optimization problem and a quantum processor: by encoding the problem into a cost Hamiltonian and repeatedly mixing in a dash of quantum exploration, QAOA turns the quantum computer into a sort of “optimization laboratory” where good solutions can be distilled from a quantum brew of possibilities. For newcomers to quantum computing, QAOA is a fantastic case study because it encapsulates many core ideas – superposition of many candidate solutions, interference guided by an objective function, hybrid quantum-classical feedback loops – all in a relatively simple algorithmic structure.

As of 2024, QAOA stands as a benchmark and testbed for quantum optimization. It’s often the first algorithm tried on new quantum hardware to evaluate how well the machine can handle an optimization task end-to-end. It’s also a fertile playground for ideas in quantum algorithm design – many new variational concepts are tested in the context of QAOA first. Whether QAOA itself will be the algorithm that delivers a clear quantum advantage, or whether it will be an evolved descendant or a totally different approach, remains to be seen. What is clear is that QAOA has significantly advanced our understanding of how to use near-term quantum computers for useful work and has spurred a lot of innovation in the process.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.