Key Principles and Theorems in Quantum Computing and Networks

(This article, originally written in 2016, was updated in 2024 to highlight latest achievements)

Introduction

Quantum mechanics has upended our classical intuitions, revealing a world where particles can exist in multiple states at once and influence each other across vast distances. These strange phenomena are no longer just scientific curiosities—they form the foundation of quantum computing and quantum networks. In essence, quantum technologies harness effects like superposition and entanglement to process information in ways impossible for classical systems. For the cybersecurity professional, this is a double-edged sword. On one hand, quantum computers threaten to break many of today’s cryptographic algorithms by solving certain mathematical problems exponentially faster than classical machines. On the other, quantum physics offers new defenses: for example, quantum key distribution (QKD) uses the laws of physics (instead of computational complexity) to enable provably secure communication, making undetected eavesdropping fundamentally impossible.

Understanding the key principles behind these technologies is crucial for anticipating both the risks and opportunities they bring to cybersecurity. Below, I’ll introduce the core quantum-mechanical principles and theorems—each explained in accessible terms—before exploring real-world applications and security implications. From Heisenberg’s famous uncertainty principle to the mind-bending phenomenon of entanglement, these concepts are the building blocks of the quantum revolution that is now underway. Quantum-secured messages are already being sent in the real world, such as bank transfers and election results protected by QKD, even as researchers race to build more powerful quantum computers. By grasping how these principles work, cybersecurity experts can better prepare for a future where quantum technology is part of the security landscape.

Key Principles and Theorems

Heisenberg’s Uncertainty Principle

One of the most famous tenets of quantum mechanics, Heisenberg’s Uncertainty Principle, states that certain pairs of properties (called complementary or conjugate variables) cannot both be known to arbitrary precision at the same time. In simple terms, the act of measuring one property unavoidably disturbs the other. For example, in the atomic world you cannot precisely measure a particle’s position and momentum simultaneously – if you pin down the position more accurately, the momentum becomes more uncertain, and vice versa. In quantum communication, a practical example is a photon’s polarization: polarization can be measured in different bases (say, vertical/horizontal vs. diagonal). These are complementary measurements. Any attempt to observe a photon’s state in the wrong basis will irrevocably disturb it.

Heisenberg’s principle is not a limitation of our measurement tools; it’s a fundamental property of nature. This has profound implications for security. It means that quantum data can be encoded in such a way that any eavesdropper’s observation introduces a disturbance. In QKD protocols like BB84, Alice sends qubits (photons) polarized randomly in one of two bases. If Eve (an eavesdropper) intercepts and measures these qubits in the wrong basis, the uncertainty principle guarantees she cannot obtain precise information without altering the qubits’ states. This disturbance shows up as errors when Bob later measures the photons in the correct basis. Alice and Bob can compare a subset of their measurements publicly to detect Eve’s interference. In essence, the uncertainty principle ensures that observation = disturbance, which makes undetected eavesdropping impossible. As one source succinctly puts it: in quantum cryptography, any attempt to observe or measure a qubit will inherently disturb its state. This guarantee—rooted in Heisenberg’s principle—is a cornerstone of quantum security protocols.
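The eavesdropping check described above can be illustrated with a toy simulation. This is a minimal sketch, not a real QKD implementation: it assumes ideal single photons, a lossless channel, and a simple intercept-and-resend attack, and the function name `bb84_error_rate` is made up for this example.

```python
import random

def bb84_error_rate(n_photons, eavesdrop, seed=0):
    """Return the error rate in the sifted key for a toy BB84 run."""
    rng = random.Random(seed)
    sifted = 0
    errors = 0
    for _ in range(n_photons):
        alice_bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)      # 0 = rectilinear, 1 = diagonal
        bit, basis = alice_bit, alice_basis  # state of the photon in flight

        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            if eve_basis != basis:
                bit = rng.randint(0, 1)      # wrong basis: Eve's outcome is random
            basis = eve_basis                # Eve resends in the basis she measured

        bob_basis = rng.randint(0, 1)
        # Measuring in a basis other than the photon's gives a random result.
        bob_bit = bit if bob_basis == basis else rng.randint(0, 1)

        if bob_basis == alice_basis:         # sifting: keep matching-basis rounds
            sifted += 1
            errors += (bob_bit != alice_bit)
    return errors / sifted
```

Under these assumptions, the sifted key is error-free without Eve, while an intercept-and-resend attack pushes the error rate toward 25%, which is the disturbance Alice and Bob look for when they compare a sample of their bits.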

No-Cloning Theorem

In the classical world, copying data is trivial – think copy-paste or packet sniffers duplicating network traffic. Quantum information obeys very different rules. The No-Cloning Theorem, proven in 1982, says that it is impossible to create an identical copy of an arbitrary unknown quantum state. In other words, you can’t make a perfect clone of a qubit unless you already know exactly what state it’s in. If that sounds abstract, consider its practical effect: an eavesdropper cannot intercept a quantum signal, copy it, and send a replica on to the intended recipient without leaving a trace. Any attempt to duplicate a quantum state inevitably involves measuring it (or interacting with it), which by the uncertainty principle will disturb the original state. The would-be quantum “photocopier” spoils the very data it tries to copy.

This no-cloning rule has profound implications for cybersecurity. It provides a fundamental reason why quantum communications can be perfectly secure. For example, in QKD, Eve might try to copy the photons carrying the key bits, hoping to measure the copies and leave the originals untouched. The no-cloning theorem forbids this: Eve cannot clone the quantum key photons. Any interception attempt means she must interact with the photon itself, unavoidably changing it. As a result, Alice and Bob will notice discrepancies in their key checks. This is complemented by the fact that if an attacker even looks at (measures) the quantum system, the system will change in a detectable way. Thus, no-cloning ensures that quantum encryption keys cannot be illicitly copied or broadcast – a fundamentally different situation from classical keys, which in theory could be duplicated if computational barriers are overcome. (Notably, the no-cloning theorem also poses challenges in quantum computing, disallowing the “fan-out” of qubits for error checking, which makes quantum error correction more complex – more on that later.)
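To see why a universal copier fails, consider the most obvious candidate: a CNOT gate that copies a qubit onto a blank target. The sketch below (restricted to real amplitudes for simplicity, with hypothetical helper names) compares what the CNOT produces with what a true clone would look like.

```python
import math

def cnot_copy(a, b):
    """Apply CNOT to (a|0> + b|1>) with a |0> target.

    Returns amplitudes ordered as |00>, |01>, |10>, |11>.
    """
    return [a, 0.0, 0.0, b]

def ideal_clone(a, b):
    """Amplitudes of two independent copies of a|0> + b|1>."""
    return [a * a, a * b, b * a, b * b]

def fidelity(u, v):
    """Squared overlap between two real amplitude vectors."""
    return sum(x * y for x, y in zip(u, v)) ** 2

s = 1 / math.sqrt(2)
basis_fidelity = fidelity(cnot_copy(1.0, 0.0), ideal_clone(1.0, 0.0))
plus_fidelity = fidelity(cnot_copy(s, s), ideal_clone(s, s))
```

The CNOT copies the basis state |0> perfectly (fidelity 1), but for the equal superposition it produces an entangled pair rather than two copies, and the fidelity with a true clone drops to 0.5. This shows one candidate failing; the theorem itself says that no single operation works for every unknown state.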

Bell’s Theorem & Quantum Entanglement

Perhaps the most counterintuitive (and intriguing) quantum principle for non-specialists is entanglement – what Einstein famously dubbed “spooky action at a distance”. Entanglement occurs when two or more particles become deeply correlated such that the state of one instantly influences the state of the other, no matter how far apart they are. An entangled pair of qubits, for instance, can be created in a state where if one qubit is measured and found to be 0, the other qubit is guaranteed to be 1, and vice versa, even if they are on opposite sides of the planet. Importantly, before measurement, neither qubit has a definite value; they exist in a fused, joint state. This leads to the eerie situation noted by Einstein, Podolsky, and Rosen in 1935 (the EPR paradox): it seemed quantum theory allowed one particle to instantly affect another across distance, challenging the idea of locality. For decades, scientists debated whether perhaps some hidden classical variables could explain these correlations behind the scenes, avoiding the need for “spooky” nonlocal effects.

In 1964, physicist John Bell formulated Bell’s Theorem to settle this debate. Bell derived an inequality – a constraint on correlations – that any local realist theory (with hidden variables and no faster-than-light influence) must satisfy. Quantum mechanics, however, predicts that entangled particles can violate this inequality. Experiments starting in the 1970s and continually refined since have overwhelmingly shown that Bell’s inequality is violated in nature, ruling out all local hidden-variable explanations. In other words, entanglement is real and cannot be explained away by classical physics: measuring one entangled particle does indeed instantly influence its partner’s state in a way that defies classical causality (though it doesn’t allow us to send usable information faster than light). Bell’s theorem and its experimental confirmation (which earned researchers the 2022 Nobel Prize in Physics) laid the foundation for quantum information science. It proved that quantum mechanics is fundamentally incompatible with any theory of “local” hidden information – entangled particles genuinely behave as a single system, no matter the separation.

For cybersecurity and communications, quantum entanglement is a powerful resource. It means two distant parties (say, Alice and Bob) can share perfectly correlated random bits if they each possess one particle of an entangled pair. Any attempt at eavesdropping on one of the particles will disrupt the entanglement correlation, which can be detected. In fact, a QKD scheme proposed by Artur Ekert in 1991 uses entangled photon pairs and Bell’s theorem as a security check: undisturbed entangled pairs violate Bell’s inequality, so if an eavesdropper interferes, the measured correlations between Alice’s and Bob’s photons weaken and no longer violate the inequality, revealing the presence of spying. Beyond QKD, entanglement underpins other protocols like quantum teleportation (used to transfer quantum states securely) and certain forms of quantum computing. An entangled state of many qubits (a GHZ state, for example) can be part of error-correcting codes or distributed computing tasks. Summing up the magic of entanglement: two qubits can be inextricably interlinked, each mirroring the other’s state instantly, as if communicating telepathically (although, as noted above, no usable message passes between them). Exploiting this “non-local” linkage in a controlled way is at the heart of quantum networks and cryptographic applications of quantum mechanics.
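The Bell test can be made concrete with the CHSH form of the inequality, in which any local hidden-variable model gives a value S of at most 2. The sketch below assumes a spin-singlet pair, whose correlation for measurement angles a and b is -cos(a - b), and models an eavesdropper's disturbance very crudely as a `visibility` factor that scales the correlations down. That parameter is an illustrative assumption, not part of any specific protocol.

```python
import math

def correlation(theta_a, theta_b, visibility=1.0):
    """Singlet correlation E(a, b) = -V * cos(a - b) for analyzer angles in radians."""
    return -visibility * math.cos(theta_a - theta_b)

def chsh(visibility=1.0):
    """CHSH value |S| at the standard angle choice that maximizes the quantum value."""
    a, a2 = 0.0, math.pi / 2
    b, b2 = math.pi / 4, 3 * math.pi / 4
    s = (correlation(a, b, visibility) - correlation(a, b2, visibility)
         + correlation(a2, b, visibility) + correlation(a2, b2, visibility))
    return abs(s)

CLASSICAL_BOUND = 2.0
clean_value = chsh()         # undisturbed pair
noisy_value = chsh(0.5)      # heavily disturbed pair
```

With perfect visibility the value is 2√2 ≈ 2.83, above the classical bound. In this simple model the violation disappears once visibility falls below 1/√2, which is the signal that something has tampered with the pairs.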

Superposition

At the heart of quantum computing lies superposition – the principle that a quantum system can exist in a combination of multiple states at once. A classical bit is binary (0 or 1, off or on), but a quantum bit or qubit can be in a state that is simultaneously 0 and 1 (with certain probabilities) until you measure it. It’s as if you flipped a coin and it started spinning in the air – during that spin it’s not strictly heads or tails, but a blend of both. Only when it lands (analogous to a measurement) do you get a definite outcome. In the quantum realm, a better analogy is a qubit being like a wave that contains both possibilities until observation. As one description puts it, qubits are “more like spinning coins, which are undecidedly both heads and tails” until measured. This duality is superposition, and it allows qubits to hold a lot more information in combination than classical bits. Instead of encoding a single value, a qubit’s state is described by a wavefunction – essentially a set of probability amplitudes for 0 and 1. When you have multiple qubits, they can be in a superposition of all combinations of 0s and 1s. For instance, two qubits can simultaneously represent 00, 01, 10, and 11 (four states at once), and n qubits can represent $$2^n$$ states at once in a superposed state.
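The counting argument can be sketched in a few lines. The code below is a plain state-vector illustration (with assumed helper names) of an equal superposition over n qubits: it stores one amplitude per bit string, and a measurement returns a single bit string with probability equal to the squared amplitude.

```python
import math
import random

def uniform_superposition(n_qubits):
    """Amplitudes of an equal superposition over all 2**n basis states."""
    dim = 2 ** n_qubits
    return [1 / math.sqrt(dim)] * dim

def measure(state, rng=random):
    """Sample one basis state with probability |amplitude|**2 and return it as a bit string."""
    probs = [abs(a) ** 2 for a in state]
    outcome = rng.choices(range(len(state)), weights=probs)[0]
    n_qubits = len(state).bit_length() - 1
    return format(outcome, f"0{n_qubits}b")

state = uniform_superposition(3)   # 8 amplitudes for 3 qubits
sample = measure(state)            # one 3-bit string, chosen at random
```

Note the asymmetry: describing n qubits classically takes 2^n amplitudes, yet a measurement only ever yields n classical bits. This is why quantum algorithms need interference rather than simply "reading out" all the states.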

Why is this so important for computing? Because in a sense, a quantum computer can explore many possible solutions to a problem in parallel, by putting its qubits into a superposition of many states. An algorithm then carefully interferes these possibilities (using quantum gates) such that wrong answers cancel out and right answers are amplified; this step matters, because measuring a raw superposition would simply return one random candidate. The end result is that certain problems can be solved exponentially faster than by any known classical algorithm, thanks to superposition and interference. A famous example is Shor’s factoring algorithm, which uses superposition to find the period of a modular exponentiation function (a step crucial to factoring) in polynomial time, whereas the best known classical methods would take astronomically longer. Another is Grover’s algorithm for unstructured search, which provides a quadratic speedup by amplifying the amplitude of the correct answer across a superposition of all candidates.
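The "wrong answers cancel, right answers are amplified" idea can be seen in a small classical simulation of Grover's algorithm. This is a sketch on a plain list of real amplitudes, not code for quantum hardware, and the function name is an assumption for this example.

```python
import math

def grover_success_probability(n_items, marked, iterations):
    """Simulate Grover iterations and return the chance of measuring the marked item."""
    amp = [1 / math.sqrt(n_items)] * n_items    # start in an equal superposition
    for _ in range(iterations):
        amp[marked] = -amp[marked]              # oracle: flip the sign of the marked item
        mean = sum(amp) / n_items
        amp = [2 * mean - a for a in amp]       # diffusion: reflect every amplitude about the mean
    return amp[marked] ** 2

n_items = 16
best_iterations = math.floor(math.pi / 4 * math.sqrt(n_items))  # 3 for 16 items
p_start = grover_success_probability(n_items, marked=5, iterations=0)
p_end = grover_success_probability(n_items, marked=5, iterations=best_iterations)
```

For 16 items the chance of finding the marked one rises from 1/16 to roughly 0.96 after three iterations, compared with an average of about eight guesses classically. The number of iterations grows with the square root of the search space, which is the quadratic speedup.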

From a cybersecurity viewpoint, superposition is the engine of quantum algorithms that threaten classical encryption (like Shor’s breaking RSA), but it’s also what enables quantum-secure protocols (since qubits can encode secret randomness in multiple states). It’s a reminder that quantum computers don’t “speed up everything” – but for certain tasks like factoring, they transform a daunting sequential trial-and-error process into a kind of massive parallel exploration of outcomes. When we say a future quantum computer could try an absurd number of combinations rapidly, it’s superposition (managed by clever quantum logic) that makes that possible.

Quantum Decoherence

If superposition and entanglement are the superpowers of quantum information, decoherence is their kryptonite. Quantum decoherence is the process by which a quantum system loses its quantum properties (like coherence between states) due to interaction with its environment. Essentially, the delicate quantum state “leaks” information into its surroundings, and the system’s behavior transitions toward classical norms. When a qubit decoheres, its superposition collapses into a definite state (or a random mixture of states), and any entanglement it had with other qubits is destroyed. This can happen from something as tiny as a stray electromagnetic field, a collision with an air molecule, or an imperfection in the hardware. Quantum information is extremely fragile: “the slightest bump” to a fiber carrying qubits, a bit of thermal noise, or a photon from the environment can make two entangled qubits fall out of entanglement. In a quantum computer, decoherence might mean that after a short time (microseconds or milliseconds, depending on the platform), the qubits can no longer maintain the complex superposed state needed for computation – essentially the quantum data has been partially “measured” by the environment and becomes scrambled.

For quantum technologists, decoherence is the central engineering challenge. To build a useful quantum computer or a long-distance quantum network, one must fight against the relentless tendency of quantum states to decohere. This is where quantum error correction (QEC) comes into play. QEC is a suite of techniques that encode quantum information across multiple physical qubits in such a way that, even if some of them decohere or experience errors, the logical information can be recovered. It might involve entangling a cluster of qubits and using clever measurement of some ancillary qubits to infer errors on the others without directly measuring (and collapsing) the data qubits. For instance, the Shor code uses 9 physical qubits to store 1 logical qubit, allowing detection and correction of both bit-flip and phase-flip errors. The need for such redundancy is a direct consequence of decoherence and the no-cloning/measurement constraints: one cannot simply copy the quantum state to create a backup, nor measure it periodically to check for errors (since measurement would collapse it). Instead, QEC cleverly catches errors by measuring parity checks (syndromes) that don’t reveal the actual quantum information. Effective QEC is theorized to be essential for achieving fault-tolerant quantum computing – meaning quantum computers that can operate indefinitely long to run complex algorithms, by constantly correcting decoherence-induced errors in the background.
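The syndrome idea is easiest to see in the three-qubit bit-flip code, a simpler relative of the Shor code mentioned above. The sketch below tracks only classical bit values, so it captures the parity-check logic but not superpositions or phase errors; the names are illustrative.

```python
def encode(bit):
    """Repeat one logical bit across three physical bits."""
    return [bit, bit, bit]

def syndrome(block):
    """Two parity checks that compare neighbors without revealing the logical bit."""
    return (block[0] ^ block[1], block[1] ^ block[2])

# Position to flip for each syndrome; (0, 0) means no error was detected.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(block):
    """Undo a single bit flip indicated by the syndrome."""
    fixed = list(block)
    position = CORRECTION[syndrome(block)]
    if position is not None:
        fixed[position] ^= 1
    return fixed

damaged = encode(1)
damaged[2] ^= 1                 # a single bit-flip error on the third bit
recovered = correct(damaged)
```

The syndrome is the same whether the encoded bit is 0 or 1, so checking it reveals where an error occurred without revealing the data. That property is what lets the quantum version detect errors without collapsing the encoded state.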

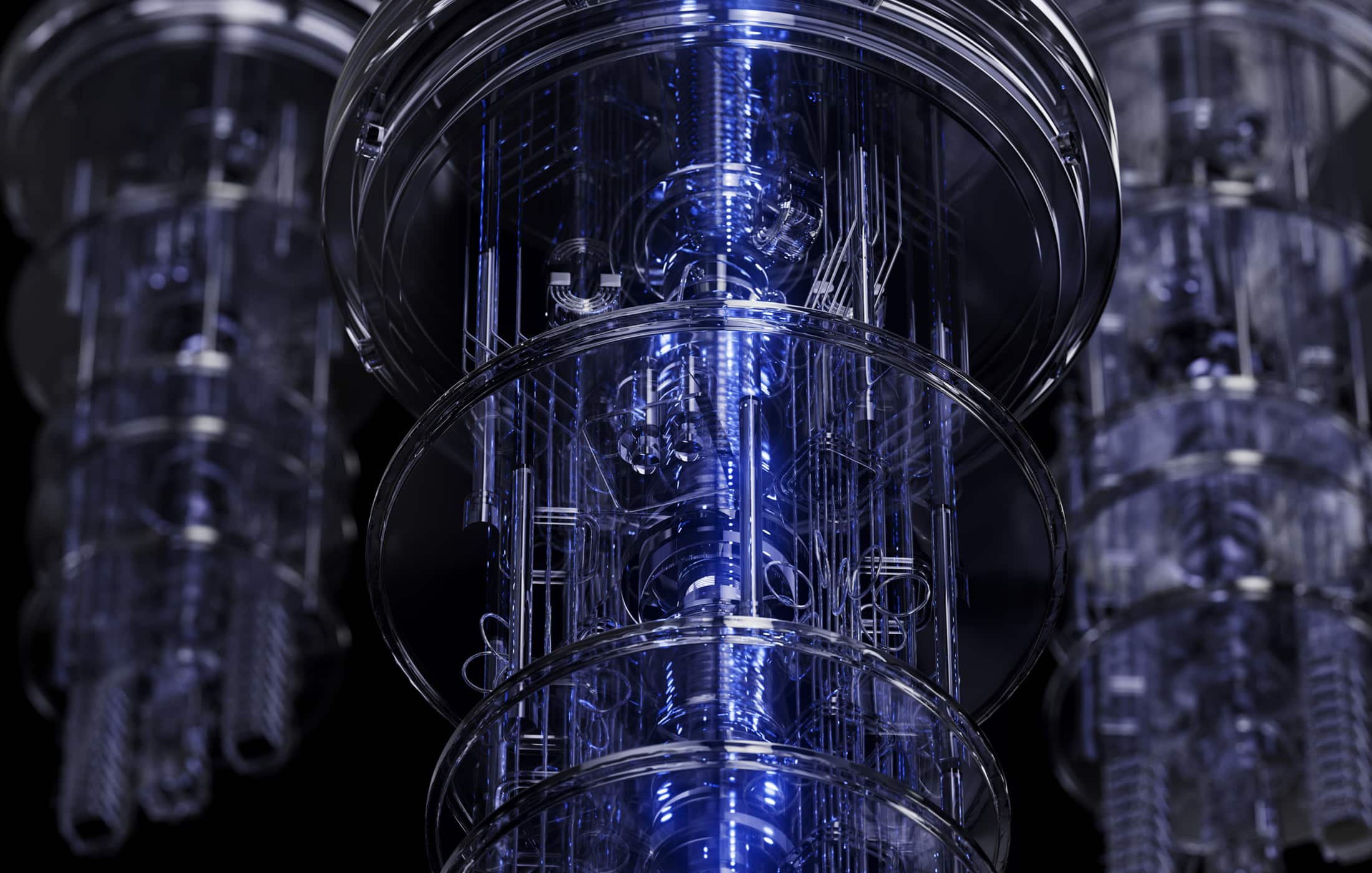

In current systems, qubit coherence times (how long they stay quantum) range from microseconds (superconducting circuits) to seconds (trapped ions) depending on the technology, and all are improving. Yet to perform thousands or millions of operations (as a cryptographically relevant algorithm might require), error rates must be drastically suppressed or corrected. From a network perspective, decoherence limits the distance quantum information can travel: photons through fiber can only go so far before losses and decoherence ruin their quantum state, which is why direct QKD links have distance limits (typically a few hundred kilometers in fiber). Quantum repeaters (discussed later) are being developed to overcome that, effectively performing entanglement swapping and purification to extend range despite decoherence. In short, decoherence represents the constant pull of the classical world on any quantum system – a tendency for Schrödinger’s cat to “choose” alive or dead when jostled. Overcoming decoherence, by isolation, cryogenics, error correction, or other tricks, is vital to making quantum computing and networks robust. Researchers describe decoherence as the interaction with environment that leads to loss of quantum information, hindering scalable quantum computing. It’s the reason quantum engineers work in ultra-cold, shielded labs, and why quantum chips are kept in dilution refrigerators at millikelvin temperatures. Every extra millisecond of coherence we can squeeze out of qubits is a victory that brings practical quantum tech closer.
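The distance limit follows from simple loss arithmetic. The sketch below assumes an attenuation of 0.2 dB/km, a typical figure for telecom fiber near 1550 nm; real links also suffer from detector noise and other effects that this ignores.

```python
def fiber_transmission(length_km, loss_db_per_km=0.2):
    """Fraction of photons that survive a fiber of the given length."""
    return 10 ** (-loss_db_per_km * length_km / 10)

survival = {km: fiber_transmission(km) for km in (50, 100, 200, 500)}
```

Under this assumption about 1% of photons survive 100 km, and only about one in ten billion survive 500 km. Because the no-cloning theorem rules out amplifying the signal along the way, the loss cannot be compensated the way it is in classical fiber links.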

Quantum Tunneling

Quantum tunneling is a phenomenon with a touch of magic: it’s as if a particle can walk through walls. More precisely, tunneling refers to a quantum particle’s ability to pass through an energy barrier that it classically shouldn’t be able to surmount. In the quantum world, particles are also waves, and if a wave can “leak” through a barrier, there is a chance the particle will pop out on the other side. This effect is responsible for many natural and technological wonders—from the nuclear fusion that powers the sun (protons tunneling through repulsive barriers) to devices like the scanning tunneling microscope. In quantum computing hardware, tunneling is often not a bug but a feature. A notable example is in superconducting qubits (used by IBM, Google, etc.): these are essentially tiny circuits where current is carried by Cooper pairs of electrons. Two superconductors separated by a thin insulator form what’s known as a Josephson junction, and the Cooper pairs can tunnel through the insulator without resistance. This quantum tunneling of paired electrons is what gives a superconducting qubit its quantum behavior. By allowing a phase difference (or current) to tunnel back and forth, the circuit can occupy discrete energy states that serve as the |0> and |1> of a qubit. In essence, the Josephson junction (enabled by tunneling) is the heart of superconducting qubits. Control pulses (microwaves) manipulate the quantum state across this junction, and readout measures the resulting state. Without tunneling, the electrons would be stuck, and the circuit would act like a normal (non-quantum) inductor or capacitor.
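A rough feel for the numbers comes from the standard wide-barrier approximation T ≈ exp(-2κL), where κ = sqrt(2m(V - E))/ħ. The sketch below applies it to an electron. It drops the prefactor of the exact rectangular-barrier formula, so treat the results as order-of-magnitude estimates; the function name and example values are assumptions.

```python
import math

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg
EV = 1.602176634e-19              # joules per electronvolt

def tunneling_probability(barrier_excess_ev, width_nm, mass=ELECTRON_MASS):
    """Approximate transmission through a barrier that exceeds the particle energy.

    barrier_excess_ev is V - E in electronvolts; width_nm is the barrier width.
    """
    kappa = math.sqrt(2 * mass * barrier_excess_ev * EV) / HBAR  # decay constant, 1/m
    return math.exp(-2 * kappa * width_nm * 1e-9)

thin = tunneling_probability(1.0, 0.5)   # 0.5 nm barrier
thick = tunneling_probability(1.0, 1.0)  # 1 nm barrier
```

For a barrier 1 eV above the electron's energy, doubling the width from 0.5 nm to 1 nm cuts the probability from roughly 6 in 1,000 to a few in 100,000. This exponential sensitivity to thickness is why tunneling matters only at nanometer scales, such as the thin insulating layer in a Josephson junction.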

Another place tunneling appears is in quantum annealers like those made by D-Wave. In these systems, qubits are represented by tiny magnetic loops, and finding the lowest energy configuration of all loops corresponds to solving an optimization problem. The qubits can tunnel between different magnetic orientations (say, representing 0 or 1), which helps them escape local energy minima and find a global minimum solution. This is sometimes called quantum tunneling-assisted search. While these annealers are a specialized form of quantum computing, they leverage tunneling to potentially solve certain problems faster by literally going through energy barriers instead of over them.

From a cybersecurity hardware perspective, one could note that tunneling sets fundamental limits and opportunities. On one hand, as classical transistors shrink, unwanted quantum tunneling can cause leakage currents (a challenge in chip design around 5nm scale and below). On the other hand, in quantum devices we intentionally use tunneling to create qubits (as in Josephson junctions) or to read them out (e.g., quantum dot qubits often use tunneling of electrons for measurement). Tunneling’s relevance in quantum networks is more indirect, but one might consider that detectors for single photons (like avalanche photodiodes or superconducting nanowires) rely on quantum effects including tunneling to amplify a one-photon event into a macroscopic signal. In summary, quantum tunneling is a vivid reminder that quantum particles don’t follow classical rules: given a chance, they’ll take shortcuts through walls. This behavior is both a tool (enabling key hardware components) and a factor to account for when designing quantum systems.

Quantum Measurement Problem

The quantum measurement problem is the conceptual puzzle of how and when quantum possibilities “collapse” into a single observed reality. While interpretations of quantum mechanics vary, the basic operational fact is: when you measure a quantum system, you get a definite outcome, and the system’s state snaps into (or is projected onto) the corresponding eigenstate. Before measurement, the system may have been in a superposition of many states; after measurement, it appears to be in just one. Schrödinger’s cat (alive and dead in superposition until the box is opened) is the famous thought experiment illustrating the paradox. For our purposes, the key point is that observation is not passive in quantum mechanics – it fundamentally changes the state of what is being observed. If you have an electron in a superposition of here and there, measuring its position will suddenly “localize” it to one place, eliminating the superposition. This collapse is seemingly random (obeying only probability rules given by the wavefunction), and quantum theory doesn’t specify a physical mechanism for it – hence a “problem” that is philosophical as well as physical.
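The operational rule (random outcome, then collapse) fits in a few lines. This is a sketch of the textbook measurement postulate for a single qubit measured in the 0/1 basis, using a hypothetical `Qubit` class; it takes no position on what collapse "really" is.

```python
import math
import random

class Qubit:
    """A single qubit with amplitudes for |0> and |1>."""

    def __init__(self, amp0, amp1):
        norm = math.sqrt(abs(amp0) ** 2 + abs(amp1) ** 2)
        self.amp0, self.amp1 = amp0 / norm, amp1 / norm

    def measure(self, rng=random):
        """Return 0 or 1 with Born-rule probabilities and collapse the state."""
        outcome = 0 if rng.random() < abs(self.amp0) ** 2 else 1
        # After measurement the superposition is gone: only the observed state remains.
        self.amp0, self.amp1 = (1.0, 0.0) if outcome == 0 else (0.0, 1.0)
        return outcome

q = Qubit(1, 1)                              # equal superposition of 0 and 1
first = q.measure()                          # random: 0 or 1
repeats = [q.measure() for _ in range(10)]   # always equal to the first result
```

The first measurement is a coin flip, but every later measurement repeats it, because the original amplitudes no longer exist anywhere. An eavesdropper who measures therefore has no way to restore the state she disturbed.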

From an information security standpoint, the collapse on measurement is less a problem and more a feature. It means you cannot observe quantum information without disturbing it. This is essentially the same point we discussed with uncertainty and no-cloning, now viewed from the angle of measurement theory. If Eve tries to measure a qubit that encodes part of a secret key, she forces that qubit into a definite state. It would be like someone checking a random combination lock – the act of testing a combination either opens it (and you know) or it doesn’t (and you’ve effectively changed the state of what you’re testing). In quantum terms, if the qubit was in a superposition, measurement yields one value and destroys the superposition, so Eve cannot put the qubit back into its original delicate state. Meanwhile, Bob’s subsequent measurement (or Alice’s test for eavesdropping) will show anomalies. As Caltech’s Science Exchange explains: with quantum keys, there is no way to “listen in” on the transmission without disturbing the photons and changing the outcomes observed by the legitimate parties. This is precisely due to the act of measurement collapsing the state. The Heisenberg uncertainty principle can be viewed as one manifestation of this (certain properties change when others are measured), and the no-cloning theorem is another (you can’t make a measurement-like interaction that copies a state without collapsing it).

In practical terms, the measurement postulate forces quantum cryptographic protocols to be designed in a very clever way. For example, in QKD, Alice and Bob can’t directly measure their quantum states during distribution to check for eavesdroppers – that would destroy the information they’re trying to send! Instead, they measure after the transmission, and then use classical communication to compare certain subsets and statistics. In quantum computing, the measurement problem means you typically don’t want to measure any qubit until the end of your computation; measuring intermediate steps would collapse the computation’s superpositions. It also means algorithms have to be designed to give the answer in a measurable form at the end (often requiring many runs to get good statistics). For a cybersecurity analogy, think of the measurement problem as the ultimate tamper-evidence mechanism: any interception or observation of the quantum data is immediately obvious and irreversible. While physicists debate what “really” happens during wavefunction collapse, engineers and security experts can treat it as a given tool—a quantum alarm system. The moment someone observes what they shouldn’t, the quantum state changes, and the intrusion is revealed. This fundamental fact underlies the trust we have in quantum cryptographic security.

Entanglement Swapping

Entanglement swapping is a technique that sounds like quantum alchemy: it allows you to take two independent entangled pairs and “swap” their entanglement such that two particles that never interacted become entangled. Why is this useful? Because it is the core idea behind quantum networks and quantum repeaters, enabling entanglement (and thus quantum correlation or secure keys) to be distributed over long distances by stitching shorter entangled links together. Here’s how it works in principle: Suppose we have two entangled pairs: pair 1 entangles particles A & B, and pair 2 entangles particles C & D. Particle B and C are at some intermediate node (let’s call it Charlie), while A is with Alice and D is with Bob, who may be far apart. Initially, A is entangled with B, and C with D. Now, if Charlie performs a special joint measurement on B and C – a Bell-state measurement – something remarkable happens: particles A and D, which have never met, become entangled as a result of that measurement. The entanglement between A-B and C-D has been “swapped,” so that now A-D share entanglement, while B-C’s entanglement is gone (consumed by the measurement result). Charlie’s measurement gives a random outcome which Alice and Bob can use as needed (and sometimes they may need to communicate that result classically to complete certain protocols like quantum teleportation).
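The swap can be checked with a small state-vector calculation. The sketch below assumes both pairs start in the Bell state (|00> + |11>)/√2 and that Charlie's measurement happens to give that same Bell state; the other three outcomes also leave A and D entangled, up to a known correction. Names are illustrative.

```python
import math
from itertools import product

S = 1 / math.sqrt(2)

def bell_pair(x, y):
    """Amplitude of |xy> in the Bell state (|00> + |11>)/sqrt(2)."""
    return S if x == y else 0.0

def swap_entanglement():
    """Project B and C onto a Bell state; return (outcome probability, state of A and D)."""
    # Joint amplitudes of A, B, C, D: pair A-B and pair C-D are independent.
    joint = {(a, b, c, d): bell_pair(a, b) * bell_pair(c, d)
             for a, b, c, d in product((0, 1), repeat=4)}
    # Charlie's Bell-state measurement: overlap of B, C with the Bell state.
    ad = {(a, d): sum(bell_pair(b, c) * joint[(a, b, c, d)]
                      for b, c in product((0, 1), repeat=2))
          for a, d in product((0, 1), repeat=2)}
    prob = sum(v * v for v in ad.values())
    norm = math.sqrt(prob)
    return prob, {key: value / norm for key, value in ad.items()}

probability, ad_state = swap_entanglement()
```

This outcome occurs with probability 1/4, and the remaining state of A and D is exactly the same Bell state, even though A and D never interacted.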

Entanglement swapping is the fundamental operation of a quantum repeater. Since direct transmission of entanglement (e.g., sending a photon) is lossy over distance, one can set up a chain of entangled segments: Alice–Charlie, Charlie–Bob. By entanglement swapping at Charlie’s node, Alice and Bob end up entangled, effectively creating a longer link out of two shorter ones. By chaining many such segments, a “giant entangled chain” can be formed across great distances. This is analogous to how classical repeaters or relays extend a signal, but with a crucial difference: a classical repeater reads and copies the signal (which would destroy quantum states), whereas a quantum repeater never directly measures the data. It only does entanglement swapping and related quantum operations, so the end-to-end entanglement (and thus security) is preserved. A nice analogy used by one source is that instead of a relay race (passing along a baton that could be intercepted), entanglement swapping is like a game of “Simon Says” across the network: each qubit in the chain mirrors the state of its neighbor, so the end nodes mirror each other as if they were directly entangled. If an outsider tries to meddle or copy the information at any point, the chain’s correlation is disrupted and the attempt is revealed – the same no-cloning, measurement-disturbance principles apply at every link.

Entanglement swapping isn’t just theoretical. It’s been demonstrated in laboratories and even in early-stage quantum network experiments. For instance, researchers have entangled photons across multiple nodes and performed swapping to extend entanglement range. In 2020, a team entangled photons between distant locations using intermediate swaps, and China’s Micius satellite experiments distributed entangled photon pairs to two ground stations more than 1,200 km apart, showing that entanglement can survive the distances a swapping-based network would need to span. Swapping is also closely related to quantum teleportation: to teleport a quantum state from Alice to Bob, the two first share an entangled pair. Alice then performs a joint (Bell) measurement on her half of the pair and the particle to be teleported, and sends the classical result to Bob, who uses it to transform his half of the pair into the input state. Entanglement swapping can be seen as teleportation in which the state being transferred is itself half of another entangled pair. From a networking perspective, entanglement swapping lets you build large entangled networks out of smaller pieces. As we create prototype quantum networks, entanglement swapping will be routinely used in quantum switches and repeaters to connect users who do not have a direct physical quantum channel. It is fair to say that without entanglement swapping, a future quantum internet would not be possible in any scalable way.

Real-World Applications and Examples

All these quantum principles aren’t just theoretical musings; they are manifesting in real technologies and experiments that are rapidly advancing. Below are some of the key applications and examples of how these principles come together in practice:

Quantum Key Distribution (QKD)

Secure communications backed by physics. QKD is arguably the most mature quantum technology in use today. It exploits the uncertainty principle and no-cloning theorem to distribute encryption keys with security guaranteed by the laws of quantum mechanics. In the widely used BB84 protocol, for example, bits are encoded in photon polarizations. If an eavesdropper intercepts the photons, their measurements (being in the wrong polarization basis half the time) disturb the states and introduce errors, alerting the legitimate parties. QKD has been successfully demonstrated over optical fiber networks and even via satellites. In fact, quantum-encrypted links are already operational in niche settings: a Swiss company famously used QKD for securing an election ballot transmission, and Chinese researchers achieved QKD between Beijing and Shanghai by using trusted nodes, as well as satellite QKD from China to Europe. Banks and government agencies in multiple countries have tested QKD for secure data links. While current QKD systems are mostly limited to distances on the order of 100–200 km in fiber (due to photon loss and no amplification), the use of satellites (like Micius) and the development of quantum repeaters promise to extend this globally.

The unique value of QKD is that its security does not rely on unproven mathematical assumptions (as RSA and others do); if set up properly, even a quantum computer-wielding adversary cannot crack it, because there is simply no secret for them to compute – any intrusion is immediately evident. This makes QKD a compelling tool for securing the most critical communications (though it’s a complement to, not a replacement for, classical cryptography in the near term, given practical constraints).

Quantum Algorithms and Cryptanalysis

The flip side: quantum computers breaking classical crypto. The superposition and entanglement principles have enabled algorithms that can solve certain problems far more efficiently than classical algorithms. The poster child is Shor’s algorithm for integer factoring. As discussed, Shor’s algorithm can factor a large number exponentially faster than the best-known classical methods. This directly threatens RSA and other public-key cryptosystems (like elliptic-curve cryptography and Diffie-Hellman key exchange) which base their security on the assumption that factoring or discrete logarithms are infeasible to compute within the age of the universe. In theory, a quantum computer of sufficient size (thousands of logical qubits, which with error correction is commonly estimated to require millions of physical qubits) could crack a 2048-bit RSA key in a matter of hours or days, an effort that would take a classical supercomputer billions of years. This is why the advent of quantum computing is often cited as a looming Y2K-like scenario for encryption.
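The link between period finding and factoring is purely classical number theory, and it can be tried on a toy example. In the sketch below the period (the multiplicative order) is found by brute force, which is exactly the step a quantum computer would replace; everything else is what Shor's algorithm does after the quantum part. The function names are assumptions for this illustration.

```python
from math import gcd

def multiplicative_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (brute force; requires gcd(a, n) == 1)."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a, n):
    """Try to split n using the order of a modulo n; return None if this a is unlucky."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared   # a already shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None                  # odd period: pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                  # trivial square root of 1: pick another a
    p = gcd(half - 1, n)
    return p, n // p

order = multiplicative_order(7, 15)   # powers of 7 mod 15: 7, 4, 13, 1
factors = factor_from_order(7, 15)
```

For n = 15 and a = 7 the period is 4, and gcd(7² - 1, 15) yields the factor 3. The brute-force loop takes time that grows exponentially with the bit length of n, and it is only this step that the quantum algorithm speeds up.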

Another important algorithm is Grover’s algorithm, which can speed up unstructured search (and by extension brute-force attacks on symmetric cryptography) quadratically. Grover’s algorithm would halve the effective key length of an N-bit symmetric key, meaning AES-256 could be attacked with effort roughly equal to $$2^{128}$$ steps, which is still huge, but AES-128 would drop to $$2^{64}$$, potentially within range of large quantum computing efforts in the future.
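The halving rule is just a square root on the size of the key space. The sketch below makes the arithmetic explicit. It counts idealized Grover iterations only and ignores the substantial overhead of running each iteration on error-corrected hardware, so it is a lower bound on effort rather than a practical estimate.

```python
import math

def grover_effective_bits(key_bits):
    """Effective security in bits against an idealized Grover search."""
    return key_bits // 2

def grover_iterations(key_bits):
    """Approximate iteration count, (pi / 4) * sqrt(2**key_bits)."""
    return (math.pi / 4) * 2 ** (key_bits / 2)

aes128_bits = grover_effective_bits(128)   # 64
aes256_bits = grover_effective_bits(256)   # 128
```

Doubling the key length restores the original security margin, which is why the usual advice for symmetric ciphers is to move to 256-bit keys rather than to replace the algorithms.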

These possibilities are driving a major effort in the cybersecurity world to develop and deploy post-quantum cryptography (PQC) – new encryption algorithms designed to be resistant to quantum attacks. In 2022, after a multi-year global competition, NIST announced a first set of PQC algorithms (built mainly on lattice problems, plus one hash-based signature scheme) to begin standardization, while several code-based candidates moved on to a further round of evaluation.

Governments and companies are urged to start transitioning to these quantum-resistant algorithms, ideally well before large quantum computers come online. Some estimates by experts suggest a “cryptographically relevant” quantum computer could possibly emerge in the next decade or two, though nobody knows for sure. This uncertainty, combined with the tactic of “harvest now, decrypt later” (adversaries saving encrypted data now to decrypt when they have a quantum computer), means proactive measures are needed. The U.S. government, for example, has set targets to phase out vulnerable cryptography by 2030. In summary, quantum algorithms exemplify both the power of quantum computing and its most dramatic cybersecurity implication: the potential obsolescence of our current public-key infrastructure. They underscore why cybersecurity professionals must pay attention to quantum developments today, not in some distant future.
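One common way to reason about this timing is the rule of thumb usually attributed to Michele Mosca: if the time data must stay secret plus the time needed to migrate exceeds the time until a cryptographically relevant quantum computer exists, the data is already at risk. A minimal sketch follows; the function name and the example numbers are hypothetical.

```python
def at_risk(shelf_life_years, migration_years, years_to_crqc):
    """Mosca-style check: True if secrecy lifetime plus migration time
    outlasts the estimated arrival of a cryptographically relevant
    quantum computer (CRQC)."""
    return shelf_life_years + migration_years > years_to_crqc

if __name__ == "__main__":
    # Hypothetical inputs, not forecasts.
    print(at_risk(shelf_life_years=10, migration_years=5, years_to_crqc=12))
    print(at_risk(shelf_life_years=2, migration_years=3, years_to_crqc=15))
```

The third input is the uncertain one, which is why organizations holding long-lived secrets tend to plan against the pessimistic end of the estimates.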

Quantum Error Correction and Computing Progress

Taming decoherence for reliable qubits. While large-scale quantum computers capable of running Shor’s algorithm on RSA-sized numbers don’t exist yet, strides are being made. Companies like IBM, Google, and startups worldwide are building devices with tens or hundreds of physical qubits, and early versions of error-corrected logical qubits. The principles of superposition, entanglement, and measurement theory all come together in this endeavor: qubits are kept in superposition to perform computations, entanglement is used both as a resource for algorithms and within error correction codes, and measurements are cleverly orchestrated (e.g., measuring ancilla qubits to detect errors without collapsing the data qubits’ state). A real-world milestone occurred in 2019 when Google’s quantum processor achieved “quantum supremacy” by performing a contrived random circuit sampling task that would have been infeasible for a classical supercomputer (though the practical usefulness of that task was limited). More relevant to security, companies have demonstrated small instances of quantum simulations (which could eventually break certain cryptographic schemes by modeling physics or chemistry problems) and are exploring quantum machine learning for pattern recognition. Each step forward is fundamentally an exercise in managing decoherence – for example, IBM’s latest quantum chips boast coherence times over 100 microseconds and advanced cryogenic engineering. There have also been demonstrations of logical qubits that can last longer than any of the individual physical qubits that compose them, thanks to error correction. While we’re still some years away from a fault-tolerant quantum computer, the rapid pace of progress suggests that what was once purely academic (like Shor’s algorithm) could become a practical threat in the not-too-distant future.
For cybersecurity experts, watching these developments is crucial: it informs when and how urgently to roll out quantum-safe encryption. On the flip side, quantum computing might also eventually aid cybersecurity by enabling new encryption methods or faster threat detection algorithms, though such applications are speculative at this stage compared to the clear threat to current cryptography.
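The idea of detecting errors without collapsing the data can be illustrated with the three-qubit bit-flip code, the simplest textbook example. The sketch below tracks a state as a dictionary from basis bit-strings to amplitudes. The representation and function names are assumptions chosen for brevity, and it handles only single bit-flip errors, not the phase errors that full codes also correct.

```python
def encode(alpha, beta):
    """Encode alpha|0> + beta|1> as alpha|000> + beta|111>."""
    return {(0, 0, 0): alpha, (1, 1, 1): beta}

def apply_x(state, qubit):
    """Apply a bit flip (Pauli X) to one qubit of the state."""
    flipped_state = {}
    for bits, amplitude in state.items():
        flipped = list(bits)
        flipped[qubit] ^= 1
        flipped_state[tuple(flipped)] = amplitude
    return flipped_state

def syndrome(state):
    """Parities q0^q1 and q1^q2, as ancilla measurements would report them.

    Every basis component gives the same parities, so reading them
    reveals where an error sits but nothing about alpha or beta.
    """
    parities = {(b[0] ^ b[1], b[1] ^ b[2]) for b in state}
    assert len(parities) == 1, "more than a single bit-flip error"
    return parities.pop()

# Which qubit to flip back for each syndrome (None means no error).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state):
    """Undo a single bit-flip error based on the syndrome."""
    qubit = CORRECTION[syndrome(state)]
    return state if qubit is None else apply_x(state, qubit)

if __name__ == "__main__":
    logical = encode(0.6, 0.8)
    damaged = apply_x(logical, 1)
    print("syndrome:", syndrome(damaged))
    print("recovered:", correct(damaged) == logical)
```

The point to notice is that `syndrome` never reads the amplitudes: the superposition survives the check, which is what makes error correction possible despite the no-cloning theorem.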

Quantum Networks and Teleportation

Towards a quantum internet. Using entanglement and entanglement swapping, researchers are building rudimentary quantum networks connecting multiple nodes. For instance, the Dutch Quantum Internet Consortium connected three nodes in a line (Delft, The Hague, etc.) with entangled links and performed entanglement swapping to establish end-to-end entanglement. In Chicago, a metropolitan quantum network testbed is being built to experiment with quantum repeaters and network protocols. China has integrated a large fiber QKD network (with trusted nodes) and satellite links to achieve QKD over 7,600 km (between Beijing and Vienna, combining ground and space channels).

Though these are early steps, they demonstrate that a quantum internet – a network capable of delivering entanglement or quantum states between any two points – is on the horizon. Quantum teleportation has been achieved between distant labs and via satellites, essentially transferring quantum states over long distances. One real-world example: in 2017, scientists teleported the quantum state of a photon from a ground station in Tibet to the Micius satellite in orbit, over distances of up to 1,400 kilometers, using entangled photons and classical communication of the Bell measurement result. Such feats show that even with today’s technology, the principles of entanglement can be utilized on a global scale. The ultimate vision is a global quantum network enabling secure communication, distributed quantum computing (linking quantum processors), and new cryptographic paradigms like device-independent security (where even the devices can be untrusted, yet security stems from fundamental quantum correlations verified by Bell tests).
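The teleportation protocol itself is compact enough to check by hand. The following sketch simulates the standard three-qubit circuit with plain Python dictionaries and, instead of sampling a random measurement, walks through all four possible Bell-measurement outcomes. The function name `teleport` and the state representation are illustrative assumptions, and the channel is taken to be noiseless.

```python
from math import sqrt

def teleport(alpha, beta):
    """Teleport alpha|0> + beta|1> from qubit 0 to qubit 2.

    Returns Bob's corrected qubit for each outcome (m0, m1) of Alice's
    measurement. Basis states are keyed as (q0, q1, q2).
    """
    s = 1 / sqrt(2)
    # Message qubit q0 alongside a shared Bell pair on (q1, q2).
    state = {}
    for q0, amp in ((0, alpha), (1, beta)):
        for b in (0, 1):
            state[(q0, b, b)] = amp * s

    # Alice: CNOT with control q0 and target q1.
    state = {(q0, q1 ^ q0, q2): a for (q0, q1, q2), a in state.items()}

    # Alice: Hadamard on q0.
    after_h = {}
    for (q0, q1, q2), a in state.items():
        for new_q0 in (0, 1):
            sign = -1 if (q0 == 1 and new_q0 == 1) else 1
            key = (new_q0, q1, q2)
            after_h[key] = after_h.get(key, 0) + sign * a * s

    results = {}
    for m0 in (0, 1):
        for m1 in (0, 1):
            # Bob's qubit, conditioned on Alice's outcome, then normalized.
            bob = [after_h.get((m0, m1, 0), 0), after_h.get((m0, m1, 1), 0)]
            norm = sqrt(abs(bob[0]) ** 2 + abs(bob[1]) ** 2)
            bob = [x / norm for x in bob]
            if m1:                      # classical bit m1 tells Bob to apply X
                bob = [bob[1], bob[0]]
            if m0:                      # classical bit m0 tells Bob to apply Z
                bob = [bob[0], -bob[1]]
            results[(m0, m1)] = tuple(bob)
    return results

if __name__ == "__main__":
    for outcome, qubit in teleport(0.6, 0.8).items():
        print(outcome, qubit)
```

Whatever Alice measures, Bob ends up with the original amplitudes once he applies the corrections, but he cannot apply them until her two classical bits arrive, which is why teleportation does not allow faster-than-light signaling.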

Each of those achievements is a direct application of the principles described: QKD uses uncertainty and no-cloning; quantum networks use entanglement and swapping; teleportation uses entanglement and the measurement-collapse process; even quantum clock synchronization uses entangled signals for precision timing. As a tangible example, national agencies have transmitted ultra-secure quantum keys to embassies and between banking centers, and companies are emerging that offer “quantum-secured communications as a service.” While still limited, these real-world uses are proof that quantum principles are not just laboratory oddities—they are enabling new capabilities in information security.

Cybersecurity Implications

The advent of quantum computing and networks carries significant implications for the field of cybersecurity. Professionals in this space must grapple with a future where the assumptions underpinning data security are fundamentally altered. Here we outline the key implications:

- Impending Threat to Classical Encryption: Most of today’s secure communications, including VPNs, HTTPS, financial transactions, and military communications, rely on cryptographic algorithms (RSA, ECC, Diffie-Hellman, etc.) that could be broken by a sufficiently powerful quantum computer. As noted, Shor’s algorithm could factor the large composite numbers (products of two primes) that secure RSA and solve the discrete log problems underlying elliptic-curve schemes, rendering nearly all public-key encryption and digital signatures insecure. The consensus in the security community is that this is not a question of “if,” but “when.” Current estimates vary, but many experts believe that within 15-20 years, and possibly sooner, attackers may have access to quantum machines capable of this feat. This has led to warnings that any data with a long secrecy requirement (health records, state secrets, intellectual property) encrypted under old algorithms could be compromised retroactively. Adversaries may already be engaging in “harvest-now, decrypt-later” attacks: intercepting and storing encrypted data now, anticipating future decryption once quantum capabilities are available. This puts a ticking clock on our existing cryptographic protections.

- Transition to Post-Quantum Cryptography: The primary defense against the above threat is to transition to post-quantum (quantum-resistant) cryptographic algorithms. These are new (or in some cases not-so-new) algorithms based on mathematical problems believed to resist quantum attack, such as lattice-based cryptography (Learning With Errors, NTRU, etc.), hash-based signatures, multivariate polynomial cryptography, and others. In 2022, NIST selected several candidate algorithms for standardization, and planning is underway globally to deploy them. This transition is a massive logistical challenge: it means updating protocols, software, hardware (like HSMs and smart cards), and certificates across the entire internet and communications ecosystem. The U.S. National Security Agency and NIST have guidelines urging organizations to inventory their cryptographic usage and prepare for migration. In fact, draft NIST transition guidance proposes deprecating legacy public-key algorithms (like RSA/ECC) after 2030 and disallowing them after 2035, highlighting the urgency. For businesses and infosec teams, quantum preparedness is becoming a part of risk management. Some companies are already testing hybrid solutions (combining classical and post-quantum keys) in TLS handshakes to ensure future-proof security. The takeaway is that quantum computing is spurring perhaps the largest overhaul of cryptographic infrastructure in decades. Cybersecurity professionals will need to understand and implement these new algorithms well before large quantum computers arrive, to avoid being caught off guard.

- New Quantum Cryptographic Tools: On the positive side, quantum mechanics offers new tools for enhancing security. Quantum Key Distribution is one such tool that’s commercially available now, providing encryption keys with security based on physics rather than math. While QKD can’t replace public-key cryptography in all situations (since it generally requires specialized hardware and direct point-to-point links or relays), it can be extremely valuable for securing critical backbone links or communications between high-value sites (data centers, government facilities) where fiber or line-of-sight links can be established. QKD’s ability to detect eavesdropping gives it a unique niche: it can ensure the confidentiality of a key exchange even in the face of unlimited computing power on the adversary’s side. Beyond QKD, there are concepts like quantum secret sharing (splitting a secret among parties such that only quantum correlations allow it to be reconstructed) and quantum random number generators (producing truly random numbers by measuring quantum processes, which can strengthen cryptographic key generation). These technologies could augment classical security systems; for example, a quantum random number generator could replace pseudorandom software generators to achieve higher entropy keys. We may also see device-independent cryptography become important: using entanglement and Bell’s theorem, users can verify that a device (even one that might be built by a malicious supplier) is producing genuine random keys or performing encryption correctly, because any deviation would break the quantum correlations. Such protocols are complex but show how quantum principles can even help address supply-chain trust issues by leaning on fundamental physics for verification.

- Evolving Threat Landscape and “Cryptographic Agility”: The coming quantum era adds another dimension to the threat landscape. Nation-state adversaries are heavily investing in quantum research, not just for offensive capabilities but also to secure their own communications. For instance, China’s large-scale QKD network and satellite QKD experiments indicate a strategic priority on quantum-secure comms. This could lead to an asymmetry: a country that deploys quantum-proof communications early might secure their secrets while potentially exploiting others that lag behind. From an offensive standpoint, intelligence agencies are certainly aware of the value of harvesting encrypted traffic now for later decryption. Organizations dealing in sensitive data should assume that anything intercepted today could be decrypted in a decade if it’s still valuable by then. Thus, protecting today’s data against tomorrow’s quantum adversary is a real concern in sectors like healthcare, finance, critical infrastructure, and defense. This all points to the importance of crypto agility – designing systems that can swap out cryptographic primitives with minimal disruption. Protocols should be flexible to adopt post-quantum algorithms or incorporate quantum key exchange when available. Monitoring advances in quantum computing is now part of strategic cybersecurity planning; for example, a sudden breakthrough in qubit technology could accelerate timelines and would need an immediate reassessment of cryptographic risks.

- Ethical and Workforce Implications: Finally, the rise of quantum tech in security will require education and perhaps new ethical guidelines. Security professionals will need at least a high-level understanding of quantum principles to make informed decisions. Training programs and certifications are already starting to include quantum-safe encryption topics. Governments may establish policies about quantum cryptography exports or the handling of quantum computers, similar to how classical cryptography was once regulated, because a quantum computer is effectively a powerful cyber weapon in the context of cryptanalysis. There’s also the question of quantum cryptography vs. post-quantum cryptography – which to use when, and how to combine them for maximum security. It’s likely not an either/or: PQC will secure most day-to-day communications because it’s software-based and doesn’t require new infrastructure, while quantum cryptography will be used in specialized high-security contexts, potentially as an extra layer of defense. Understanding the interplay of these will be part of the job for future CISOs and security architects.
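The hybrid approach mentioned in the list above, combining a classical and a post-quantum shared secret, can be sketched with nothing more than the standard library. The example below concatenates the two secrets and runs them through HKDF with SHA-256 (RFC 5869), so the session key stays safe as long as either input remains secret. The function names, the `info` label, and the placeholder byte strings are all assumptions for illustration; real protocols such as TLS define their own combiners and should be followed exactly.

```python
import hashlib
import hmac

def hkdf_sha256(ikm, salt, info, length=32):
    """HKDF (extract-then-expand) with HMAC-SHA-256, following RFC 5869."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()      # extract
    okm, block, counter = b"", b"", 1
    while len(okm) < length:                                 # expand
        block = hmac.new(prk, block + info + bytes([counter]),
                         hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]

def hybrid_session_key(classical_secret, pq_secret):
    """Derive one session key from both shared secrets (illustrative combiner)."""
    return hkdf_sha256(
        ikm=classical_secret + pq_secret,
        salt=b"\x00" * 32,              # all-zero salt, as RFC 5869 allows
        info=b"hybrid-demo",            # hypothetical context label
    )

if __name__ == "__main__":
    # Placeholder secrets standing in for, e.g., an ECDH output and a KEM output.
    ecdh_secret = bytes(range(32))
    kem_secret = bytes(range(32, 64))
    print(hybrid_session_key(ecdh_secret, kem_secret).hex())
```

Keeping the combiner behind a single function like this is also a small instance of crypto agility: either input algorithm can be swapped out without touching the code that consumes the session key.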

In short, the cybersecurity implications of quantum principles are twofold: a need to defend against the new capabilities quantum attackers will have, and an opportunity to leverage quantum effects to bolster security. The transition has already begun, with standards bodies, academics, and industry working on mitigation (PQC) and implementation (QKD, quantum randomness) strategies. Those in the security field who stay ahead of these developments will help ensure a smoother and safer migration to the post-quantum world, whereas those who ignore it may find that one day, overnight, their cryptographic shields turn to glass.

How These Principles Tie Together

As we’ve seen, the core principles of quantum mechanics – uncertainty, superposition, entanglement, and more – are deeply interwoven in the fabric of quantum computing and networking. Each principle plays a distinct role, yet they come together to enable the quantum feats that excite scientists and alarm cryptographers. Superposition and entanglement form the lifeblood of quantum computational power, allowing parallelism and nonlocal correlations that give quantum algorithms their edge. Entanglement swapping builds on these to create extended networks, linking distant parties in a web of quantum correlations that can be used for teleportation and secret-sharing across the globe. Underlying these almost magical capabilities are the sobering no-go theorems: the uncertainty principle and no-cloning theorem enforce that quantum information cannot be observed or duplicated without consequences. These ensure the security of quantum communications (any eavesdropping creates a disturbance) even as they complicate the engineering of quantum devices (you can’t copy qubits to help correct errors, you can’t measure them freely to see what’s wrong). The quantum measurement problem reminds us that at some point quantum potentials become single outcomes – a limitation for computing (randomness in results) but a boon for cryptography (collapse reveals tampering). And quantum tunneling demonstrates both the strangeness and utility of quantum physics, showing how particles can bypass classical constraints – a phenomenon harnessed in hardware and one that symbolizes the “nothing is impossible” ethos of quantum technology.

For the cybersecurity professional, the interplay of these principles means that quantum mechanics simultaneously threatens and enriches the security landscape. The very feature that makes quantum encryption so robust – that no one can measure a quantum key without disturbing it – is the flip side of what makes quantum computing so threatening to classical encryption – that a quantum computer can use superposition and interference to uncover hidden mathematical structure (like secret factors) that classical computers cannot feasibly find. It’s a grand irony: the same theory that gives us physics-secured quantum keys also gives us the tool to crack most of today’s public keys. This underscores a broader point: quantum information science is holistic. One cannot fully appreciate the impact on security by looking at one principle alone. They reinforce each other. For example, if entanglement were not a thing, there would be no Bell’s theorem and likely no powerful quantum algorithms (since entanglement is often cited as the resource that gives quantum computers extra computational oomph). If superposition did not exist, neither would uncertainty in its current form, and without uncertainty, quantum cryptography’s basic premise fails. Yet, with entanglement and superposition comes decoherence – hence the need for error correction which, interestingly, also uses entanglement (in stabilizer codes) to protect superpositions from decohering. It’s a closed loop of concepts: each quantum principle can be seen as solving one problem and creating another.

Consider a quantum network scenario: You want to share a secure key using entangled photons (entanglement). You distribute them via intermediate nodes (entanglement swapping) because direct transmission is lossy (decoherence). The no-cloning theorem means you can’t just amplify or copy photons at routers, so instead you use quantum repeaters that perform swaps through Bell-state measurements, which link the outer nodes without reading out the key itself. The uncertainty principle and measurement problem ensure that if anyone tries to intercept, the entanglement is broken and you’ll notice. Meanwhile, in the quantum computer that might sit at each network node, superposition and entanglement within the processor allow it to encrypt/decrypt or run complex protocols, but quantum error correction is employed to fend off decoherence. When a message is sent, it might be encoded in such a way (perhaps using quantum one-time pads or teleportation) that the only way to decode it is by quantum measurements that collapse the right states at the end. In this journey from theory to practice, each principle finds its place: some as enablers, others as protectors, and some as challenges to be managed.

In the broader picture of quantum technology and security, these principles together paint a vision of the future: one in which we have quantum computers as powerful problem-solvers and code-breakers, and alongside them quantum-secured channels as ultra-safe communication links. We might store our most sensitive data not just encrypted with a quantum-resistant algorithm, but also distributed across entangled quantum memories such that no single point of failure exists (reminiscent of secret sharing schemes, but quantum). Authentication might involve quantum tokens that cannot be forged or copied due to no-cloning. Even the concept of quantum zero-knowledge proofs is being explored, where one party can prove to another that they know a secret without revealing it, leveraging quantum states that lose their value if tampered with. It’s a rich tapestry, and the principles we’ve discussed are the threads.

To conclude, the landscape of quantum computing and quantum networks is an exciting frontier where physics and cybersecurity intersect. We’re witnessing the early days of this quantum revolution. As quantum hardware scales and quantum protocols move from labs to real-world deployment, security experts will need to collaborate with physicists like never before. By mastering concepts like Heisenberg’s uncertainty, Bell’s theorem, and the no-cloning rule, cybersecurity professionals equip themselves to navigate this new terrain. These principles and theorems are more than just scientific trivia; they are the rules of engagement for information in the quantum age – an age where, hopefully, our understanding of these rules allows us to unlock unprecedented computational power while still preserving (and even enhancing) the safety and privacy of information. The quantum future is both promising and challenging, but with knowledge of its foundations, we can approach it with confidence rather than fear. In the end, the strange laws of quantum mechanics might not only threaten our security; they may also ensure that our secrets stay truly safe.