RSA-2048 Within Reach of 1730 Qubits. Or is it?

A new research paper has dramatically lowered the qubit requirements for breaking some of our most critical cryptography. “Reducing the Number of Qubits in Quantum Factoring” (Chevignard, Fouque, Schrottenloher, IACR ePrint 2024/222) shows how clever quantum circuit design can factor RSA moduli or compute discrete logarithms with far fewer logical qubits than previously thought. Let’s dig into what it means for the impending “Q-Day” when quantum computers can crack current encryption.

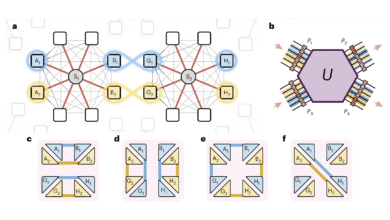

The paper’s main claim is striking: by using approximate residue arithmetic and other optimizations, one can factor an RSA-2048 number with around 1730 logical qubits – dramatically down from the ~4000–6000 logical qubits required by earlier methods. (See one of my recent posts “4,099 Qubits: The Myth and Reality of Breaking RSA-2048 with Quantum Computers” for more info on how we gt to oft-cited 4,099 qubits number.) In other words, instead of needing on the order of twice the bit-length of the RSA modulus in qubits (as older designs did), the new approach brings the requirement to roughly half the bit-length (plus some overhead). This is achieved by streamlining the modular exponentiation step in Shor’s algorithm: the authors manage to compute only the essential least significant bits of the exponentiation result on-the-fly, rather than storing the entire large number in quantum memory. By working in a Residue Number System (RNS) and truncating unneeded information, the circuit frees up qubits that would normally hold intermediate “garbage” results. It’s akin to doing long multiplication but only keeping track of the last few digits at a time – greatly reducing the scratch space needed.

The trick is that their quantum circuit doesn’t output the full $$A^x \bmod N$$ at once (as in traditional Shor); instead it computes an approximate residue – essentially the result modulo a carefully chosen small number (like $$2^r$$ for some $$r$$). This gives the least significant $$r$$ bits of the true result. Those $$r$$ bits are enough to deduce the factors or discrete log with some extra classical processing, especially if the process can be repeated. Because only a small slice of the calculation is handled per run, the algorithm uses only o(log N) extra “workspace” qubits for that slice. All other qubits are devoted to the necessary inputs/outputs, drastically shrinking the quantum memory footprint. In plainer terms, the authors found a way to compress the quantum computation’s space requirement – somewhat like using a streaming computation that processes data in chunks, rather than storing a huge data block in memory.

Another innovation is aggressive ancilla recycling. Any temporary qubits (ancillas) used during the modular arithmetic are cleaned up (uncomputed) and reused whenever possible, avoiding the accumulation of “junk” bits that would normally bloat the qubit count. The consequence of all this space-saving, however, is that the algorithm doesn’t succeed in one shot. It has a certain probability of success and typically needs to be repeated several times to reliably get the answer. For RSA-2048, the authors estimate about 40 runs on average are required. Each run produces a small piece of the puzzle (like a few crucial bits of the secret exponent or a factor), and after enough runs, the pieces can be assembled via classical post-processing (the paper builds on the Ekerå–Håstad algorithm that uses multiple quantum runs with shorter inputs) .

To put numbers on it, Chevignard et al. report that factoring a 2048-bit RSA modulus (n = 2048) can be done with ~1730 logical qubits and about $$2^{36}$$ Toffoli gates (a type of universal logic gate for quantum computers) in a single run. Because ~40 runs are needed, the total gate count comes to roughly $$2^{40.9}$$ (about 1–2 trillion operations). They performed a similar analysis for discrete logarithms in a safe-prime group of size 2048 bits (commonly used in Diffie–Hellman key exchange). To break a 224-bit secret exponent (which has ~112-bit classical security) in such a group, one would need only ~684 logical qubits, running a circuit with $$2^{32}$$ Toffoli gates about 20 times. To the authors’ knowledge, these are the lowest qubit counts ever reported for quantum attacks on cryptographic parameters still considered classically secure today.

This breakthrough did not come from nowhere – it stands on years of quantum circuit optimization research. Here’s how it compares to a couple of important milestones in quantum factoring:

- Beauregard (2003): More than two decades ago, Beauregard designed a Shor’s algorithm circuit using roughly 2n + 3 qubits (for an n-bit number). For RSA-2048, that’s about 4,100 logical qubits. The gate complexity was on the order of $$n^3 \log n$$ , which for n=2048 would be tens of billions of operations. This was a seminal space-optimized design, using a semi-classical Fourier transform to reuse a single qubit for phase accumulation, but it still required thousands of qubits for the modular multiplication itself.

- Gidney & Ekerå (2019): Fast forward to 2019, Craig Gidney and Martin Ekerå introduced new techniques (like “oblivious carry runways” for addition) that significantly cut down gate counts at the expense of using more qubits. Their design used roughly 3n logical qubits (≈6144 for RSA-2048) and about $$0.3·n^3$$ Toffoli gates. In concrete terms, they estimated ~2.5 billion Toffoli gates for RSA-2048 and projected that a quantum computer with ~20 million physical qubits could factor 2048-bit RSA in about 8 hours under reasonable error-correction assumptions. This was considered a feasible path if quantum hardware reached the million-qubit scale.

- Chevignard et al. (2024): The new 2024 approach brings the qubit count down to ~n/2 (plus lower-order terms) – about 1730 logical qubits for n=2048 – a factor of 2–3× reduction in qubits compared to prior state-of-the-art. The trade-off is a higher gate count: roughly $$2^{36}$$ (~68 billion) gates per run, and trillions in total due to repeat runs. In essence, they traded time for space: using more operations and repeated runs to minimize the quantum memory required at any one moment. The table below summarizes the trade-offs:

In summary, Chevignard and colleagues have achieved a major reduction in quantum hardware requirements for breaking RSA and Diffie–Hellman-type cryptosystems. This doesn’t mean anyone can do it today – the time (gate count) and error-corrected stability needed are still daunting – but it shifts the conversation. The research was even implemented as a classical simulation for verification, giving credibility to these resource estimates.

Implications for Q-Day and Beyond

The fact that RSA-2048 could in principle be factored with only ~1.7k logical qubits is a game-changer for the quantum timeline. Not long ago, experts were saying we’d need on the order of 4,000–6,000 logical qubits (equating to millions of physical qubits after error correction) to tackle RSA-2048, which put the threat comfortably decades away. Now, thanks to this work, the goalposts are moving.

The new paper reinforces that the number of qubits is no longer the only roadblock on the way to factoring RSA or breaking Diffie–Hellman. We now have a plausible recipe that brings the logical qubit requirement into the low thousands, which is a scale many in the industry expect could be achieved in the next decade or so. The remaining challenges are formidable but more about quality and scale than fundamental theory: we need high enough gate fidelities and error-correction to perform trillions of operations within a reasonable time, and we need hardware that can sustain perhaps millions of physical qubits to support those ~1000+ logical qubits. In essence, the bottleneck has shifted to execution time and error correction overhead. Even with only 1730 logical qubits, performing $$10^{12}$$ operations with, say, a $$10^{-3}$$ error rate per operation means a lot of error correcting layers and probably weeks of actual runtime. However, these are exactly the kind of challenges quantum engineers are actively working on – improving gate speeds, error rates, and error-correction efficiency. They’re no longer abstract “maybe someday” problems; they’re concrete technical milestones on roadmaps.

What we are witnessing is quantum computing growing up and facing the classic engineering trade-offs. Chevignard et al. took the space-efficient route: minimize qubits and accept a time penalty. These space–time tradeoffs will likely define how the first generation of cryptographically relevant quantum computers are built. For instance, an agency with virtually unlimited budget might build a large quantum computer with, say, millions of qubits to crack RSA-2048 quickly. Another might opt for a smaller device with fewer qubits that can do it more slowly (taking weeks or months). Both routes lead to the same finish line: the security assumptions underpinning RSA and similar schemes are coming down from the clouds and into the realm of practicality. It’s sobering that breaking RSA-2048 is now more a question of “how fast” and “at what cost” – a matter of engineering optimization – rather than an impossible task requiring an exponential leap in science.

But, just to be 100% clear – technical challenges are still massive and will take many years to address. RSA is not under any immediate threat. While I am excited about these algorithmic improvements, I’m aware how much more work remains.

Nevertheless, for the security community, the message is to be proactive rather than reactive. We’ve now been warned by concrete research that RSA-2048’s days are numbered. The transition to post-quantum cryptography (PQC) needs to happen before these quantum algorithms go from papers to machines. That means starting now, testing PQC solutions, and having a migration plan well before the quantum “safe-cracking” machine is switched on.

Quantum Upside & Quantum Risk - Handled

My company - Applied Quantum - helps governments, enterprises, and investors prepare for both the upside and the risk of quantum technologies. We deliver concise board and investor briefings; demystify quantum computing, sensing, and communications; craft national and corporate strategies to capture advantage; and turn plans into delivery. We help you mitigate the quantum risk by executing crypto‑inventory, crypto‑agility implementation, PQC migration, and broader defenses against the quantum threat. We run vendor due diligence, proof‑of‑value pilots, standards and policy alignment, workforce training, and procurement support, then oversee implementation across your organization. Contact me if you want help.