Quantum Computing Modalities: Superconducting Qubits


(For other quantum computing modalities and architectures, see Taxonomy of Quantum Computing: Modalities & Architectures)

What It Is

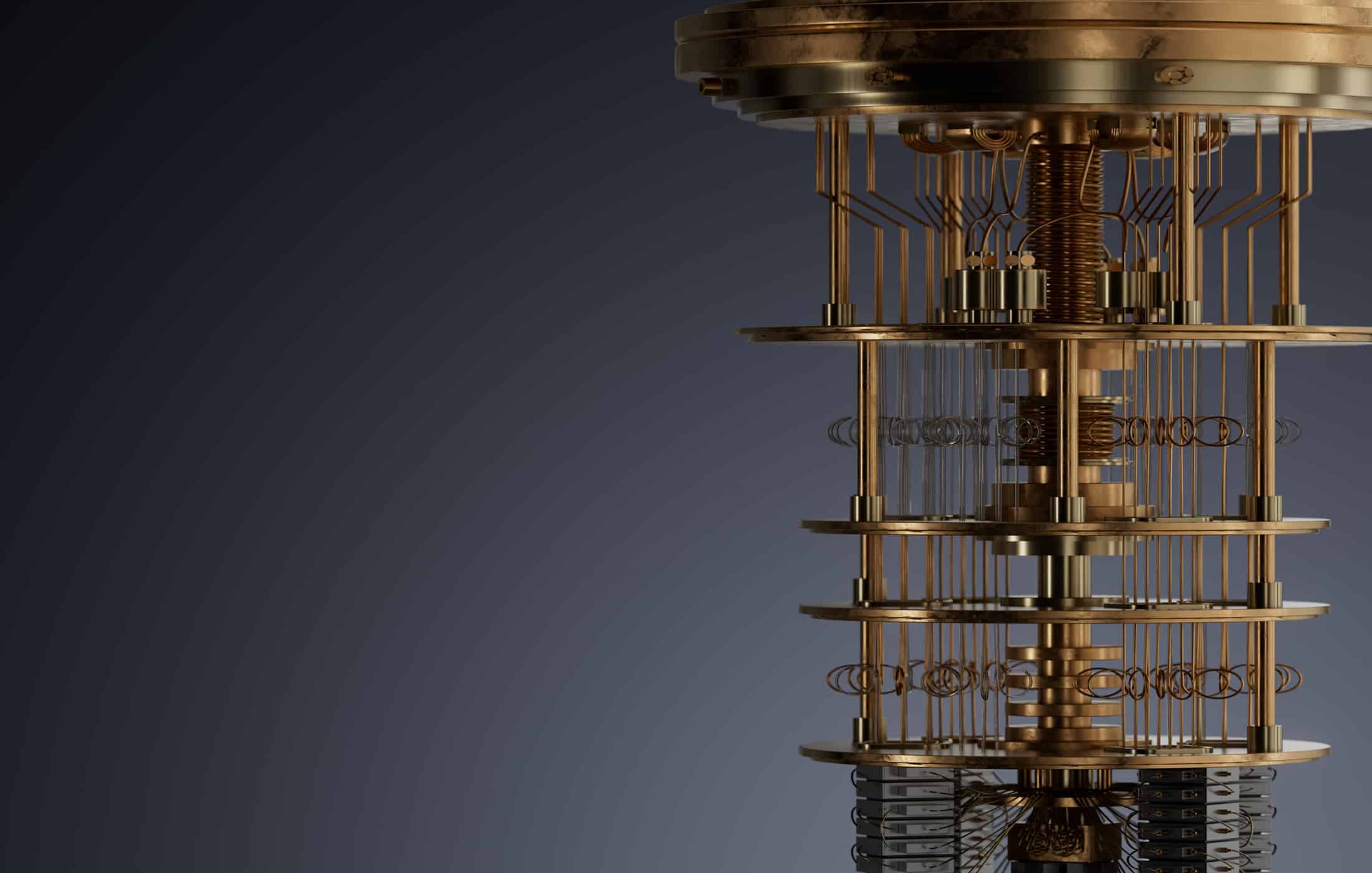

Superconducting qubits are quantum bits implemented with superconducting electrical circuits cooled to extremely low temperatures. They behave as artificial atoms with quantized energy levels: the two lowest energy states (the ground and first excited states) serve as the qubit’s 0 and 1 states. The basic circuit is an LC oscillator made from superconducting materials (such as aluminum, niobium, or tantalum) in which the linear inductor is replaced, or supplemented, by a Josephson junction, a non-linear element that introduces anharmonicity. The anharmonic energy spectrum is crucial: because the level spacings differ, a control pulse tuned to the 0→1 transition does not also drive the circuit into higher levels. Superconductivity gives zero DC electrical resistance, so currents flow without dissipating energy, which helps preserve quantum coherence in the circuit, a fundamental requirement for quantum computing. The devices are operated at ~10 mK in dilution refrigerators, far below the superconducting transition of these materials, so that thermal energy is much smaller than the qubit’s microwave photon energy and the qubit stays in its ground state until it is driven.
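To see why the devices sit near 10 mK, one can estimate a qubit’s thermal excited-state population from the Boltzmann distribution. The sketch below assumes an ideal two-level system in thermal equilibrium and a hypothetical 5 GHz transition frequency (a typical order of magnitude for transmons); real devices often show somewhat higher effective temperatures because of imperfect filtering.

```python
import math

H = 6.62607015e-34   # Planck constant (J*s)
KB = 1.380649e-23    # Boltzmann constant (J/K)


def excited_population(freq_hz: float, temp_k: float) -> float:
    """Thermal population of the upper level of an ideal two-level system.

    p1 = exp(-hf/kT) / (1 + exp(-hf/kT)) = 1 / (1 + exp(hf/kT))
    """
    x = H * freq_hz / (KB * temp_k)
    return 1.0 / (1.0 + math.exp(x))


QUBIT_FREQ_HZ = 5e9  # hypothetical qubit frequency, for illustration only

for temp_k in (0.010, 0.050, 0.100, 1.0):
    p1 = excited_population(QUBIT_FREQ_HZ, temp_k)
    print(f"T = {temp_k * 1e3:6.0f} mK  ->  excited population ~ {p1:.1e}")
```

At 10 mK the population is on the order of 1e-11, while at 1 K (still below aluminum’s superconducting transition) it approaches one half. Superconductivity alone is therefore not enough: the circuit must also be cold compared with hf/k_B, which is about 0.24 K for a 5 GHz qubit.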

Superconducting qubits are a leading approach in modern quantum computing. Tech companies and research labs worldwide have adopted the platform: IBM, Google, Rigetti, and others have built quantum chips from superconducting qubits. Over the past two decades the approach has progressed from single qubits to processors with hundreds of qubits and, in IBM’s case, more than a thousand. In 2019, Google’s 53-qubit superconducting processor famously achieved quantum supremacy, performing in 200 seconds a sampling task that Google estimated would take 10,000 years on a classical supercomputer (an estimate IBM later disputed). This milestone highlighted the relevance of superconducting qubits: they have enabled some of the largest quantum processors to date and serve as testbeds for quantum algorithms in the noisy intermediate-scale quantum (NISQ) era. Cloud services such as IBM Quantum and Amazon Braket give outside users access to superconducting processors, underscoring their prominence in the industry. In summary, superconducting qubits use low-temperature superconductivity to create robust, tunable two-level quantum systems, making them a cornerstone of today’s quantum computing research and development.

Key Academic Papers

Superconducting qubit research has been driven by a series of foundational papers and breakthroughs over the last few decades:

- Josephson Junction Qubits (1980s–1990s): The theoretical basis lies in Brian Josephson’s 1962 prediction of tunneling Cooper pairs, but a key experimental milestone came in 1985 when researchers observed quantized energy levels in a current-biased Josephson junction (Martinis, Devoret, and Clarke, 1985). The late 1990s saw the first realization of a superconducting qubit. Yasunobu Nakamura et al. (1999) demonstrated coherent control of a “charge qubit” – a small superconducting island (Cooper-pair box) where the presence or absence of a Cooper pair represented 0 or 1. This was the first evidence that a Josephson junction circuit could behave as a two-level quantum system, showing Rabi oscillations between quantum states. However, these early qubits suffered from short coherence (only nanoseconds to microseconds) and measurements that destroyed the qubit state.

- Flux and Phase Qubits (Early 2000s): Building on that breakthrough, other designs emerged. Flux qubits (Mooij et al., 1999; Friedman et al., 2000) used a superconducting loop with multiple Josephson junctions, where clockwise vs. counter-clockwise circulating currents encode |0⟩ and |1⟩. Similarly, phase qubits (Martinis et al., 2002) used the phase difference across a Josephson junction in a current-biased circuit as the qubit variable. Both demonstrated quantum coherence and tunneling in macroscopic circuits. These papers proved different modalities (charge, flux, phase) could serve as qubits, though each had specific noise sensitivities (charge qubits were vulnerable to charge fluctuations; flux qubits to magnetic noise; phase qubits to critical current noise).

- Transmon Qubit – Koch et al. (2007): A major breakthrough in coherence was the invention of the transmon qubit (Jens Koch et al., 2007). The transmon is essentially an evolved charge qubit in which a large shunt capacitor is added across the Josephson junction, dramatically reducing its sensitivity to charge noise. Houck et al. (2009) reported that transmons significantly improved coherence times. The design exploits a favorable trade-off: raising the ratio of Josephson energy to charging energy (E_J/E_C) makes the energy levels almost independent of stray offset charge, because this charge dispersion falls off exponentially, while the anharmonicity decreases only slowly (as a weak power law), so enough remains for selective qubit operations. The transmon quickly became the workhorse of the field and is used in most modern superconducting processors, including IBM’s and Google’s chips. By mitigating charge noise, it paved the way for multi-qubit devices with substantially longer coherence than earlier charge qubits.

- Circuit QED and Coupling (2004–2007): Another influential development was the integration of superconducting qubits with microwave cavities, known as circuit quantum electrodynamics (cQED). In 2004, Wallraff et al. demonstrated strong coupling between a Cooper-pair box qubit and a high-Q coplanar waveguide resonator, a direct circuit analog of cavity QED in quantum optics. Alexandre Blais et al. (2004–2007) developed the theory for using a cavity as a “quantum bus” to mediate interactions between qubits. These works established how to perform two-qubit gates and high-fidelity qubit readout using microwave photons, and they are foundational to multi-qubit superconducting architectures.

- Quantum Error Correction in Superconducting Circuits: As qubit coherence improved, researchers turned to quantum error correction (QEC). A seminal paper by Fowler, Martinis, and colleagues (2012) laid out the surface code approach, which is well suited to 2D qubit lattices like those on superconducting chips. Experimentally, the first QEC demonstrations appeared in 2015: Córcoles et al. (IBM) and Kelly et al. (Google) showed detection of single-qubit errors using small parity-check circuits. A more recent milestone came from Google Quantum AI. In 2023 it reported that a distance-5 surface code on 49 physical qubits slightly outperformed a distance-3 code, and in 2024 (“Quantum error correction below the surface code threshold,” Nature) it extended the experiment to distance 7 on its 105-qubit Willow processor, showing that each increase in code distance reduced the logical error rate, a key sign that QEC is working. That work also reported a logical qubit outliving the best physical qubit on the chip, demonstrating that logical qubits built from superconducting qubits can outperform their physical constituents as the code grows. While fully fault-tolerant quantum computing is still ahead, these contributions, from Nakamura’s first qubit to surface-code QEC, form the backbone of superconducting qubit research.

- Quantum Supremacy and Beyond (2019–Present): Arute et al. (2019) is another landmark paper, wherein Google’s Martinis group used a 53-qubit superconducting processor (“Sycamore”) to perform a random circuit sampling task to showcase quantum supremacy. This validated that superconducting qubits could be controlled at a scale and fidelity high enough to exceed what a classical computer could simulate (at least for a specialized problem). Subsequent research has focused on increasing qubit counts (IBM and others) and improving fidelity to reach quantum advantage for useful tasks. For instance, Wu et al. (2021) from USTC in China reported a 66-qubit superconducting chip (“Zuchongzhi”) performing a similar sampling task, further pushing the boundary of quantum computational power. Each of these papers – spanning fundamental physics, engineering improvements (transmon, materials), and demonstrations of algorithmic capability – marks a step in the evolution of superconducting qubits from lab curiosities to the forefront of quantum computing.
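The scaling argument behind the surface-code experiments above can be sketched numerically. A rotated surface code of odd distance d uses d² data qubits and d² − 1 measure qubits, giving 17, 49, and 97 physical qubits for d = 3, 5, 7. Below threshold, each step d → d + 2 is expected to divide the logical error rate by a roughly constant suppression factor, often written Λ. The Λ and distance-3 error values in this sketch are hypothetical placeholders chosen for illustration, not measured results.

```python
def rotated_surface_code_qubits(d: int) -> int:
    """Physical qubits in a rotated surface code: d*d data + (d*d - 1) measure qubits."""
    return 2 * d * d - 1


def projected_logical_error(d: int, eps_d3: float, lam: float) -> float:
    """Logical error per cycle at odd distance d, assuming each d -> d + 2 step
    divides the error by the same suppression factor lam (> 1 below threshold)."""
    if d < 3 or d % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    return eps_d3 / lam ** ((d - 3) // 2)


EPS_D3 = 3e-3  # hypothetical distance-3 logical error per cycle
LAMBDA = 2.0   # hypothetical suppression factor

for d in (3, 5, 7, 9, 11):
    print(d, rotated_surface_code_qubits(d), f"{projected_logical_error(d, EPS_D3, LAMBDA):.1e}")
```

If Λ were below 1 (above threshold), the same formula would predict errors growing with code size, which is why demonstrating Λ > 1 is the meaningful milestone.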

How It Works

Superconducting qubits operate on principles of circuit quantum electrodynamics and Josephson junction physics. Key technical aspects include:

Josephson Junctions as Non-Linear Elements

At the heart of every superconducting qubit is the Josephson junction: two superconductors separated by a thin insulating barrier through which Cooper pairs can tunnel. The junction behaves like a non-linear inductor with zero resistance. A circuit built only from linear inductors and capacitors is a harmonic oscillator whose energy levels are equally spaced; replacing the inductor with a Josephson junction makes the spectrum anharmonic (unevenly spaced). This is crucial for qubit operation: because the 0→1 transition frequency differs from the 1→2 frequency, the two lowest levels can be addressed as |0⟩ and |1⟩ without driving the system into higher levels. Without this non-linearity, a quantum LC circuit would remain a simple harmonic oscillator and could not be used as a two-level qubit. The junction also lets a superconducting loop carry a persistent supercurrent with a well-defined quantum phase difference. Its physics, described by the Josephson relations, together with flux quantization in superconducting loops, enables macroscopic quantum states.
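The Josephson relations make the “non-linear inductor” picture concrete. With supercurrent I = I_c sin φ and voltage V = (ħ/2e) dφ/dt, the junction acts as an inductance L_J(φ) = Φ₀/(2π I_c cos φ), where Φ₀ = h/2e, and it stores energy −E_J cos φ with E_J = ħI_c/2e. The minimal sketch below evaluates these expressions; the 30 nA critical current is a hypothetical value chosen only for illustration.

```python
import math

E_CHARGE = 1.602176634e-19      # elementary charge (C)
H = 6.62607015e-34              # Planck constant (J*s)
HBAR = H / (2 * math.pi)
PHI0 = H / (2 * E_CHARGE)       # superconducting flux quantum (Wb)


def josephson_energy_ghz(ic_amps: float) -> float:
    """E_J / h in GHz, from E_J = hbar * I_c / (2e)."""
    ej_joules = HBAR * ic_amps / (2 * E_CHARGE)
    return ej_joules / H / 1e9


def josephson_inductance(ic_amps: float, phi: float = 0.0) -> float:
    """L_J(phi) = Phi0 / (2*pi*I_c*cos(phi)) in henries.

    Unlike a linear inductor, the value depends on the phase across the junction.
    """
    return PHI0 / (2 * math.pi * ic_amps * math.cos(phi))


IC = 30e-9  # hypothetical critical current (A)
print(f"E_J/h ~ {josephson_energy_ghz(IC):.1f} GHz")
for phi in (0.0, 0.5, 1.0):
    print(f"L_J(phi={phi}) ~ {josephson_inductance(IC, phi) * 1e9:.2f} nH")
```

The growth of L_J with |φ| is the non-linearity that makes the level spacing uneven; as φ approaches π/2 the linear-inductor picture breaks down entirely.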

In summary, Josephson junctions are the standard non-dissipative, strongly non-linear circuit element available at low temperature, and hence form the basis of superconducting qubits.

Superconducting Qubit Types

Several varieties of superconducting qubits exist, all built from Josephson junction circuits but differing in how the qubit’s two states are encoded:

- Charge Qubits: These are Cooper pair boxes in which the qubit states correspond to different numbers of Cooper pairs on a small superconducting island. They are controlled via a gate voltage. Example: the original Nakamura qubit (1999) was a charge qubit. Charge qubits are relatively simple but suffer from short coherence due to charge noise (random charge fluctuations rapidly dephase the qubit).

- Flux Qubits: These use a superconducting loop interrupted by one or more Josephson junctions. The two qubit states are typically clockwise and counter-clockwise circulating currents, which correspond to two distinct magnetic flux states in the loop, and the qubit is controlled by applied magnetic flux. Flux qubits have longer coherence than early charge qubits, but flux noise (fluctuations in the magnetic environment) can still cause decoherence. An improved form is the fluxonium (Manucharyan et al., 2009), which shunts a small junction with a very large inductance (a “superinductance” built from a long array of larger junctions), yielding greater anharmonicity and longer coherence.

- Phase Qubits: These qubits encode information in the quantum phase across a Josephson junction. In practice, a phase qubit is a current-biased junction where the two lowest energy metastable states in the tilted washboard potential serve as |0⟩ and |1⟩. Phase qubits were actively developed in the 2000s (Martinis’s group) and achieved reasonable gate fidelities, but reading them out involved inducing the junction to switch to the voltage state (which was somewhat invasive). Transmon qubits largely supplanted phase qubits due to better stability.

- Transmon Qubits: The transmon is the dominant design today. It is essentially a charge qubit in which a large shunt capacitor is added across the junction to reduce the qubit’s charging energy. By design, transmons operate where the ratio of Josephson energy to charging energy (E_J/E_C) is large, typically several tens, which mitigates charge noise dramatically. The trade-off is a smaller anharmonicity, but it remains large enough for selective control of the |0⟩↔|1⟩ transition. Fixed-frequency transmons are largely insensitive to both charge and flux noise, which makes them robust; tunable transmons, made by replacing the single junction with a SQUID (two junctions in a loop) so that applied flux adjusts the effective Josephson energy, gain frequency control at the cost of some flux-noise sensitivity. Variants of the transmon include the Xmon (a planar transmon with an X-shaped capacitor, used by Google for better connectivity) and the gatemon (a transmon-like qubit whose Josephson junction is a semiconductor nanowire, allowing tunability via a gate voltage). Most large superconducting quantum processors (e.g. IBM’s 127-qubit Eagle, Google’s Sycamore) consist of transmon qubits. A more recent variant is the unimon (2022), which uses a single junction shunted by a linear inductor in a resonator and is designed for increased anharmonicity and reduced noise sensitivity.

Each qubit type operates on the same principle – manipulating the quantum states of a superconducting circuit – but with different circuit parameters to balance coherence and controllability.
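The transmon trade-off can be checked numerically with the standard charge-basis Hamiltonian H = 4E_C(n − n_g)² − E_J cos φ (the Cooper-pair-box Hamiltonian analyzed by Koch et al.), where n counts Cooper pairs, n_g is the offset charge, and cos φ couples neighboring charge states with amplitude 1/2. This is a minimal NumPy sketch; the E_C = 0.25 GHz value, the charge cutoff, and the ratios tried are illustrative assumptions.

```python
import numpy as np


def transmon_levels(ej, ec, ng=0.0, ncut=20, nlev=3):
    """Lowest eigenenergies of H = 4*EC*(n - ng)^2 - EJ*cos(phi) in the charge basis.

    Energies come out in the same units as ej and ec (here GHz).
    """
    n = np.arange(-ncut, ncut + 1)
    ham = np.diag(4.0 * ec * (n - ng) ** 2)
    # cos(phi) = (|n><n+1| + |n+1><n|) / 2 in the charge basis
    hop = -0.5 * ej * np.ones(len(n) - 1)
    ham += np.diag(hop, 1) + np.diag(hop, -1)
    return np.linalg.eigvalsh(ham)[:nlev]


def transmon_summary(ej, ec):
    """Return (f01, anharmonicity, charge dispersion of f01) for one EJ/EC ratio."""
    e = transmon_levels(ej, ec, ng=0.0)
    f01 = e[1] - e[0]
    alpha = (e[2] - e[1]) - f01
    e_half = transmon_levels(ej, ec, ng=0.5)
    dispersion = abs((e_half[1] - e_half[0]) - f01)  # spread between ng = 0 and ng = 0.5
    return f01, alpha, dispersion


EC = 0.25  # GHz, hypothetical charging energy
for ratio in (1, 10, 50):
    f01, alpha, disp = transmon_summary(ratio * EC, EC)
    print(f"EJ/EC={ratio:>3}: f01={f01:.3f} GHz, alpha={alpha:+.3f} GHz, dispersion={disp:.1e} GHz")
```

For E_J/E_C = 50, f01 should land close to the asymptotic estimate √(8E_J E_C) − E_C ≈ 4.75 GHz and α close to −E_C, while the charge dispersion is several orders of magnitude smaller than in the Cooper-pair-box regime (E_J/E_C = 1).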

Coupling Mechanisms and Microwave Resonators

To perform multi-qubit operations, superconducting qubits need to interact. Qubits are coupled either directly (capacitively or inductively) or through a resonator. A popular approach is a quantum bus resonator: a microwave cavity, often an on-chip coplanar waveguide resonator, to which two or more qubits connect. The qubits exchange virtual photons with the cavity, allowing them to interact even if they are not nearest neighbors. In this circuit QED scheme, qubits play the role of “atoms” and the resonator the role of a “photon mode,” analogous to an optical cavity QED system. By tuning qubits in and out of resonance with the cavity, entangling two-qubit gates (like controlled-NOT or iSWAP) can be implemented. For example, the cross-resonance gate (used by IBM) drives one transmon at the transition frequency of a second, coupled transmon, producing an effective ZX interaction that, combined with single-qubit rotations, yields a CNOT.
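For resonator-mediated (“quantum bus”) coupling, a common dispersive-regime estimate is that two qubits with coupling strengths g₁, g₂ to a shared resonator, each detuned by Δᵢ = ω_qᵢ − ω_r with |gᵢ| ≪ |Δᵢ|, acquire an effective exchange coupling J ≈ (g₁g₂/2)(1/Δ₁ + 1/Δ₂). When the qubits are tuned into resonance with each other, this exchange transfers an excitation in a time t = π/(2J), with J in angular units. The frequencies below are hypothetical; the sketch ignores direct qubit-qubit capacitance and higher transmon levels, and it does not model the cross-resonance gate, which works differently.

```python
def bus_exchange_coupling(g1, g2, wq1, wq2, wr):
    """Effective qubit-qubit exchange J ~ (g1*g2/2) * (1/D1 + 1/D2), Di = wqi - wr.

    All frequencies in GHz (cycles/ns). Valid only in the dispersive regime |gi| << |Di|.
    """
    d1, d2 = wq1 - wr, wq2 - wr
    return 0.5 * g1 * g2 * (1.0 / d1 + 1.0 / d2)


def iswap_time_ns(j_ghz):
    """Time for a full excitation swap: t = pi / (2 * 2*pi*|J|) = 1 / (4|J|) ns."""
    return 1.0 / (4.0 * abs(j_ghz))


# Hypothetical parameters: both qubits at 5.0 GHz, bus resonator at 7.0 GHz.
j = bus_exchange_coupling(g1=0.1, g2=0.1, wq1=5.0, wq2=5.0, wr=7.0)
print(f"J ~ {j * 1e3:.1f} MHz, swap time ~ {iswap_time_ns(j):.0f} ns")
```

With these numbers J is about −5 MHz and a full swap takes about 50 ns, inside the tens-to-hundreds-of-nanoseconds range quoted for superconducting entangling gates in the next paragraph.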

Other two-qubit gate techniques use tunable couplers or resonant interactions (e.g. bringing qubits on resonance to swap excitations). The net result is that superconducting circuits can realize entangling gates in tens of nanoseconds to a few hundred nanoseconds by appropriate coupling via capacitors, inductors, or microwave photons. Microwave resonators also serve as readout devices – each qubit is typically coupled to a readout resonator that shifts frequency depending on the qubit state. By measuring the microwave transmission or reflection, one can infer the qubit’s state without destroying it (a quantum non-demolition measurement). This circuit QED readout scheme was another key to the success of superconducting qubits.
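The state-dependent frequency shift used for readout is the dispersive shift χ. For a transmon coupled with strength g to a resonator, the standard result (Koch et al., 2007) can be written χ = g²α / (Δ(Δ + α)), where Δ = ω_q − ω_r and α is the (negative) anharmonicity; in the two-level limit (|α| → ∞) it reduces to χ = g²/Δ. In this convention the resonator frequency differs by 2χ between |0⟩ and |1⟩. Sign conventions vary between references, and the parameter values below are hypothetical.

```python
def dispersive_shift_transmon(g, wq, wr, alpha):
    """chi = g^2 * alpha / (delta * (delta + alpha)), with delta = wq - wr (all in GHz)."""
    delta = wq - wr
    return g * g * alpha / (delta * (delta + alpha))


def dispersive_shift_two_level(g, wq, wr):
    """Two-level-system limit: chi = g^2 / delta."""
    return g * g / (wq - wr)


# Hypothetical parameters (GHz): qubit 5.5, readout resonator 7.0, g = 0.1, alpha = -0.3.
chi_t = dispersive_shift_transmon(0.1, 5.5, 7.0, -0.3)
chi_2 = dispersive_shift_two_level(0.1, 5.5, 7.0)
print(f"transmon chi ~ {chi_t * 1e3:.2f} MHz, two-level chi ~ {chi_2 * 1e3:.2f} MHz")
```

The transmon’s small anharmonicity makes χ much smaller than the two-level estimate (about −1.1 MHz versus −6.7 MHz here), so the two-level formula noticeably overestimates the readout shift for a transmon.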

Coherence Times and Noise Mitigation

Early superconducting qubits had coherence times (T1 energy relaxation and T2 dephasing) on the order of nanoseconds to a few microseconds. Today, state-of-the-art transmon qubits have T1 in the 100–300 microsecond range, and T2 (with echo or dynamical decoupling) comparable or higher. This is a result of numerous improvements: better materials (e.g. using tantalum or eliminating lossy interfaces) have dramatically reduced dielectric loss; improved fabrication and surface treatments have cut down two-level system (TLS) defects; shielding and filtering have reduced electromagnetic interference. For instance, experiments replacing niobium with tantalum films achieved coherence times >0.3 milliseconds (300 µs) in planar transmons. Another group recently reported fluxonium qubits with T2 over 1 ms. These advances push decoherence further out, giving more time to perform operations.
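The relationship between these coherence times can be made explicit. Energy relaxation alone limits T2 to at most 2T1, and the remaining dephasing is often summarized by a pure-dephasing time T_φ defined through 1/T2 = 1/(2T1) + 1/T_φ. Dividing a coherence time by a gate duration gives a rough count of how many sequential operations fit before decoherence dominates. The sketch uses hypothetical values inside the 100 to 300 µs range cited above and a 50 ns gate; it is a back-of-envelope estimate, not an error model.

```python
def pure_dephasing_time_us(t1_us: float, t2_us: float) -> float:
    """Solve 1/T2 = 1/(2*T1) + 1/T_phi for T_phi (all times in microseconds)."""
    if t2_us > 2.0 * t1_us:
        raise ValueError("T2 cannot exceed 2*T1")
    rate = 1.0 / t2_us - 1.0 / (2.0 * t1_us)
    return float("inf") if rate == 0.0 else 1.0 / rate


def ops_within(coherence_us: float, gate_ns: float) -> float:
    """Rough number of back-to-back gates that fit inside one coherence time."""
    return coherence_us * 1e3 / gate_ns


T1_US, T2_US, GATE_NS = 200.0, 150.0, 50.0  # hypothetical device numbers
print(f"T_phi ~ {pure_dephasing_time_us(T1_US, T2_US):.0f} us")
print(f"~{ops_within(T2_US, GATE_NS):.0f} gate durations fit in T2")
```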

Despite these gains, decoherence and noise remain central challenges. Superconducting qubits couple to a variety of noise sources: dielectric loss from surfaces/interfaces, flux noise from magnetic impurities, photon noise from stray microwave modes, and critical current noise in junctions. Thermal photons even at millikelvin can cause qubit excitations. Mitigation techniques include: filtering and attenuating lines to prevent thermal and electronic noise from reaching the chip; enclosing qubits in superconducting shields; using “cold” attenuators and isolators for readout lines; and optimizing device geometry to minimize participation of lossy materials (the “surface participation ratio” approach). Moreover, error mitigation strategies are used in software – for example, dynamical decoupling pulses can refocus low-frequency noise, and symmetry verification or zero-noise extrapolation can reduce the impact of errors in near-term algorithms.
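Zero-noise extrapolation, one of the software techniques mentioned above, runs the same circuit at several deliberately amplified noise levels (for example by stretching pulses or inserting gate-inverse pairs) and extrapolates the measured expectation value back to zero noise. Below is a minimal sketch of the Richardson (polynomial) extrapolation step only; how the noise is amplified is out of scope, and the “measured” values are synthetic, generated from an assumed quadratic noise model.

```python
def richardson_zero_noise(scales, values):
    """Extrapolate expectation values measured at noise scale factors `scales`
    to scale 0 by evaluating the interpolating polynomial there (Lagrange form)."""
    if len(scales) != len(values) or len(set(scales)) != len(scales):
        raise ValueError("need one value per distinct scale factor")
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= s_j / (s_j - s_i)  # Lagrange basis polynomial evaluated at 0
        estimate += weight * v_i
    return estimate


def synthetic_expectation(scale):
    """Hypothetical noise model: ideal value 1.0, degraded quadratically with noise."""
    return 1.0 - 0.1 * scale + 0.01 * scale ** 2


scales = [1.0, 2.0, 3.0]
noisy = [synthetic_expectation(s) for s in scales]
print("raw (scale 1):", noisy[0])
print("extrapolated :", richardson_zero_noise(scales, noisy))
```

Because the synthetic model is exactly quadratic, three scale factors recover the ideal value of 1.0 up to rounding; real data are noisier, and the extrapolation can amplify statistical error.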

Gate Fidelities

The fidelity of single- and two-qubit gates on superconducting qubits has steadily improved. As of 2023, single-qubit gates routinely achieve >99.9% fidelity, and two-qubit gates typically reach 99%–99.5% on the best large processors. Individual lab demonstrations have gone further: a two-qubit controlled-Z gate reported by a leading group reached 99.9% fidelity, an error rate of only 1 in 1,000 operations. Achieving such performance requires precise pulse shaping (to minimize leakage out of the qubit subspace and avoid exciting parasitic modes) and calibration routines that compensate for qubit frequency drift and cross-talk. Techniques such as DRAG (Derivative Removal by Adiabatic Gate) pulses are used to reduce leakage errors. Researchers are also exploring tunable couplers that switch interactions on and off cleanly, which can make two-qubit gates more consistent. Ongoing innovations in control electronics, including cryogenic control chips placed close to the qubits, aim to reduce latency and noise, potentially enabling faster and more reliable gate operations. Overall, superconducting qubits combine fast control (gates in ~10–100 ns) with steadily improving coherence to perform a growing set of quantum operations with high fidelity, and quantum error correction is expected to suppress the remaining errors further.
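The DRAG idea can be illustrated by constructing the two pulse quadratures. To leading order, a Gaussian in-phase envelope Ω_x(t) is paired with a quadrature component proportional to its time derivative, Ω_y(t) = −β Ω̇_x(t)/α, where α is the transmon anharmonicity in angular units and β is a scale factor normally calibrated on hardware. This sketch only builds the envelopes; the parameter values are hypothetical, sign conventions vary between references and control stacks, and a production pulse would also be offset so that it starts and ends at zero.

```python
import numpy as np


def drag_pulse(duration_ns=20.0, sigma_ns=5.0, amp=1.0, anharm_ghz=-0.3,
               beta=1.0, n_points=201):
    """Gaussian in-phase envelope plus a derivative-shaped quadrature (leading-order DRAG).

    Omega_y(t) = -beta * dOmega_x/dt / alpha, with alpha = 2*pi*anharm_ghz in rad/ns.
    """
    t = np.linspace(0.0, duration_ns, n_points)
    t0 = duration_ns / 2.0
    omega_x = amp * np.exp(-((t - t0) ** 2) / (2.0 * sigma_ns ** 2))
    d_omega_x = -(t - t0) / sigma_ns ** 2 * omega_x  # analytic time derivative
    alpha = 2.0 * np.pi * anharm_ghz
    omega_y = -beta * d_omega_x / alpha
    return t, omega_x, omega_y


t, omega_x, omega_y = drag_pulse()
print(f"peak in-phase amplitude: {omega_x.max():.3f}")
print(f"peak quadrature amplitude: {np.abs(omega_y).max():.4f}")
```

The quadrature vanishes at the pulse center and is antisymmetric about it, which is the signature of a derivative correction rather than a second drive tone.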

Comparison to Other Modalities

Superconducting qubits are one of several quantum computing modalities. Each approach has distinct advantages and limitations:

Superconducting vs. Trapped Ions

These are the two leading platforms. Superconducting qubits (SC) are solid-state circuits with fast gate speeds (nanoseconds) and can be fabricated using semiconductor techniques, which bodes well for scaling in 2D arrays. In contrast, trapped ion qubits use individual ions (e.g. Yb⁺, Ca⁺) trapped in electromagnetic fields; they have incredibly long coherence times (seconds to hours) and very high gate fidelities, but gate operations are slower (microseconds to milliseconds) and the ions are typically manipulated with laser beams.

In ion traps, every ion can in principle interact with every other via collective motional modes, giving an all-to-all connectivity (beneficial for algorithms that need many qubit interactions). Superconducting qubits usually have only nearest-neighbor couplings on a chip, though schemes like bus resonators can extend connectivity. However, as qubit counts grow, ion traps face challenges in managing many ions and laser beam control (crosstalk, mode crowding), whereas superconducting qubits face challenges in wiring and cryogenics.
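The connectivity difference can be quantified with a simple routing model: on a nearest-neighbor chip, two distant qubits must first be brought together with SWAP gates, and the minimum number needed is their shortest-path distance in the coupling graph minus one. The sketch compares a hypothetical square grid with an all-to-all graph like an ion chain’s; real compilers use more elaborate heuristics, and real superconducting layouts are often sparser than a full grid.

```python
from collections import deque


def grid_coupling_map(rows, cols):
    """Nearest-neighbor coupling graph of a rows x cols grid of qubits."""
    edges = {q: set() for q in range(rows * cols)}
    for r in range(rows):
        for c in range(cols):
            q = r * cols + c
            if c + 1 < cols:  # horizontal neighbor
                edges[q].add(q + 1)
                edges[q + 1].add(q)
            if r + 1 < rows:  # vertical neighbor
                edges[q].add(q + cols)
                edges[q + cols].add(q)
    return edges


def all_to_all_coupling_map(n):
    """Every qubit coupled to every other, as with an ion chain's shared motional modes."""
    return {q: set(range(n)) - {q} for q in range(n)}


def swaps_needed(coupling, a, b):
    """Minimum SWAPs to make qubits a and b adjacent: BFS distance minus one."""
    dist = {a: 0}
    queue = deque([a])
    while queue:
        q = queue.popleft()
        if q == b:
            return max(dist[q] - 1, 0)
        for nb in coupling[q]:
            if nb not in dist:
                dist[nb] = dist[q] + 1
                queue.append(nb)
    raise ValueError("qubits are not connected")


# Opposite corners of a hypothetical 3x3 chip vs. a 9-ion all-to-all register.
print("grid:", swaps_needed(grid_coupling_map(3, 3), 0, 8))
print("all-to-all:", swaps_needed(all_to_all_coupling_map(9), 0, 8))
```

Each SWAP is itself typically three CNOTs, so routing overhead costs both time and fidelity on sparsely connected chips.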

Currently, SC platforms lead in qubit count (IBM and Google have built processors with well over 50 qubits) and in raw operation speed, whereas trapped ions lead in single- and two-qubit accuracy and stability. The choice often comes down to speed vs. fidelity: superconducting qubits execute many more operations per second, but each operation is somewhat less reliable than in ion systems. Both communities are actively researching how to scale to hundreds or thousands of qubits; superconducting platforms lean on microfabrication and multiplexed control, while ion traps explore modular architectures with photonic interconnects.

Superconducting vs. Photonic Qubits

Photonic qubits encode quantum information in particles of light, typically using photon polarization or path as the two-level system. The big advantage of photonic qubits is that they naturally travel at light-speed and don’t require extreme cooling – they can operate at room temperature. Photonic quantum computers are thus well-suited for communication tasks (quantum networking, QKD) and potentially for optical computing. They also suffer virtually no decoherence in flight (a photon will maintain its quantum state over long distances, aside from loss). However, making two photons interact (for two-qubit gates) is notoriously challenging – it usually requires delicate interference and measurements (e.g. using beam splitters and ancillary photons), which are probabilistic. As a result, photonic quantum computing often relies on measurement-based quantum computing (creating large entangled cluster states of photons and then measuring them), which consumes a lot of physical resources. In contrast, superconducting qubits are matter-based and can interact directly via electrical couplings, enabling deterministic gates.
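The cost of probabilistic gates compounds quickly. A well-known linear-optics controlled-Z gate built only from beam splitters and postselection succeeds with probability 1/9 per use; because success is only known after the photons are detected, a run containing n such gates succeeds only if every gate does, with probability (1/9)ⁿ. The short calculation below illustrates why scalable photonic proposals turn to heralded entangling operations and measurement-based schemes instead; it ignores photon loss and detector inefficiency, which make matters worse.

```python
P_CZ = 1.0 / 9.0  # success probability of the postselected linear-optics CZ gate


def run_success_probability(p_gate: float, n_gates: int) -> float:
    """Probability that every one of n independent probabilistic gates succeeds."""
    return p_gate ** n_gates


def expected_runs(p_gate: float, n_gates: int) -> float:
    """Mean number of repetitions before one fully successful run (geometric distribution)."""
    return 1.0 / run_success_probability(p_gate, n_gates)


for n in (1, 2, 5, 10):
    print(f"{n:>2} gates: success {run_success_probability(P_CZ, n):.2e}, "
          f"~{expected_runs(P_CZ, n):,.0f} runs needed")
```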

Superconducting qubits need cryogenic cooling, whereas photonic systems can be at ambient temperature (though sources and detectors may need cooling) and can integrate well with existing telecom technologies. Another issue is photon loss: fiber or optical losses can severely limit photonic circuits as they scale.

Currently, photonic approaches (pursued by companies like PsiQuantum and Xanadu) are behind in qubit count and gate fidelity compared to SC qubits, but they hold promise for long-distance quantum communication and potentially easier networking of modules. Superconducting qubits, by contrast, excel at on-chip computation with strong interactions but are limited to one “chassis” (a dilution refrigerator) per processor. In the future, one could envision hybrid systems where superconducting processors are connected by photonic links, marrying the strengths of both.

Superconducting vs. Silicon Spin Qubits

Spin qubits in silicon (and related semiconductor quantum dots) represent another solid-state approach, but instead of superconductivity, they use the spin of single electrons (or nuclei) trapped in semiconductor structures as qubits.

The advantages of spin qubits include their extremely small size (a qubit can be a single electron in a quantum dot) and the ability to leverage the huge semiconductor manufacturing infrastructure (the idea of “CMOS-compatible” qubits). Silicon spin qubits have also achieved impressively long coherence times: in isotopically purified silicon, where enrichment removes most nuclear spins and leaves a near “magnetic vacuum,” single electron spins can reach T2 on the order of a second. Some silicon spin qubits have also been operated at around 1 K, compared with the ~10 mK that superconducting qubits require, which eases cooling requirements.

However, spin qubits are still in early development in terms of scaling; controlling many individual spins and coupling them is challenging. Typically, spins are coupled either via exchange interactions (quantum dots adjacent to each other) or via photonic (microwave) mediators or electron shuttling, but integrating a large network of them with control lines is non-trivial. A report noted that spin qubits benefit from their compact size and can reuse classical chip fabrication know-how, but they face similar scaling challenges as superconductors – error rates increase as qubit count grows, and the need for precise control and cooling is intensified by their small size.

In essence, many of the techniques required to scale superconducting qubits (extreme cryogenics, advanced packaging, crosstalk reduction) are also needed for spin qubits. At present, superconducting platforms are ahead in demonstrated multi-qubit operations (tens of qubits vs. the few-qubit logic demonstrated in silicon). But silicon spin qubits hold the long-term promise of integration onto classical silicon chips (possibly co-locating qubits and control electronics) and high density. It’s conceivable that in the future, superconducting qubits might achieve medium-scale quantum processors faster, while spin qubits might take over for very large-scale integration if their fabrication can be perfected.

In summary, superconducting qubits stand out for their fast gates and scalability using chip fabrication, whereas trapped ions offer excellent qubit quality and connectivity at the cost of speed, photonic qubits offer communication at scale and room-temperature operation but face gate implementation hurdles, and silicon spin qubits promise nano-scale integration and semiconductor industry compatibility but are still catching up in multi-qubit control at scale. Each modality is under active research, and it is not yet clear which will win out; they may also coexist, each tailored to different applications (e.g. ions for small ultra-precise processors, superconductors for big quantum computers, photonics for networking, spins for integration with classical chips).

Current Development Status

The superconducting qubit platform has seen rapid industry-driven progress, with increasing qubit counts and performance metrics year over year. Major players include IBM, Google, Rigetti Computing, Intel, Alibaba and USTC (China), and startups like IQM, Quantum Circuits Inc., and others. Here’s an overview of the current state:

Qubit Count and Hardware Roadmaps

IBM has been very transparent about its superconducting quantum roadmap. In 2021, IBM unveiled Eagle, a 127-qubit transmon processor and its first to cross the 100-qubit mark. In 2022 it debuted Osprey with 433 qubits, more than a 3× jump in qubit count in one year. The roadmap then targeted the 1,121-qubit Condor for 2023, which IBM unveiled that December alongside Heron, a 133-qubit processor intended as the building block for connecting multiple chips; systems of more than 4,000 qubits were targeted for 2025 by linking processors together. This aggressive scaling relies on innovations like 3D packaging (to route many control lines) and modular, multi-chip architectures. The long-term goal is to reach millions of qubits by combining chips into quantum datacenters, effectively building quantum supercomputers.

Google’s approach has been slightly different: after demonstrating a high-fidelity 53-qubit chip in 2019 (Sycamore), Google has focused on improving qubit quality and error correction. In 2021, Google announced a goal to build a useful, error-corrected quantum computer by the end of the decade (2029). It has been testing small codes (e.g. up to 21 qubits for a repetition code in 2021 and 49 qubits for a distance-5 surface code in 2023) rather than simply increasing qubit count. Its processors have nonetheless grown, reaching 105 qubits with the Willow chip announced in 2024.

In China, the USTC group led by Jian-Wei Pan built a 66-qubit superconducting processor in 2021 (achieving a sampling task similar to Google’s). Companies like Baidu have also entered the fray – in 2022 Baidu announced plans for a fully integrated superconducting quantum computer with both hardware and software stack developed in-house. This shows how the field is broadening, with new contenders building on the foundation laid by IBM and Google.

Quantum Volume and Performance

It’s not just about qubit count. Quantum volume (QV), a holistic performance metric introduced by IBM that accounts for qubit number, connectivity, and gate fidelity, has been steadily increasing. IBM reported that its QV roughly doubled every year, reaching 128 in 2021 and 256 by 2022. This doubling indicates that improvements in error rates and architecture have accompanied the growth in qubit numbers: processors are getting not only larger but also more capable. For example, IBM’s 27-qubit “Falcon” processors achieved QV 32 (2019) and 64 (2020), and IBM reached QV 128 in 2021. Meanwhile, Google’s focus on quantum error correction targets a different measure: how quickly logical errors fall as the code grows. In 2023 and 2024, Google reported that enlarging its surface code from distance 3 (a 3×3 grid of data qubits) to distance 5 and then 7 reduced the logical error rate, with the 2024 experiment showing exponential suppression. These results give confidence that superconducting qubits can be scaled with manageable error rates as they approach the thresholds needed for fault tolerance.
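The quantum volume figures follow a specific protocol (Cross et al., 2019): random “square” circuits of width and depth n are run, an output bitstring is “heavy” if its ideal probability exceeds the median of the ideal distribution, and width n passes if the measured heavy-output probability exceeds 2/3 with sufficient statistical confidence. QV is then 2ⁿ for the largest passing n, so QV 128 corresponds to n = 7. The sketch implements only the heavy-output bookkeeping with made-up toy numbers; circuit generation, classical simulation, and the confidence test are omitted.

```python
import numpy as np


def heavy_outputs(ideal_probs):
    """Indices of bitstrings whose ideal probability is above the distribution's median."""
    median = np.median(ideal_probs)
    return {i for i, p in enumerate(ideal_probs) if p > median}


def heavy_output_probability(ideal_probs, counts):
    """Fraction of measured shots that landed on heavy outputs.

    `counts` maps bitstring index -> number of shots observed on hardware.
    """
    heavy = heavy_outputs(ideal_probs)
    shots = sum(counts.values())
    return sum(c for k, c in counts.items() if k in heavy) / shots


def quantum_volume(passing_widths):
    """QV = 2**n for the largest square-circuit width n that passed."""
    return 2 ** max(passing_widths)


# Toy 2-qubit example with made-up numbers (not real device data).
ideal = [0.4, 0.3, 0.2, 0.1]
measured = {0: 50, 1: 30, 2: 15, 3: 5}
print("heavy-output probability:", heavy_output_probability(ideal, measured))
print("QV if widths 2..7 passed:", quantum_volume(range(2, 8)))
```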

- Major Achievements: Aside from Google’s well-known 2019 quantum supremacy demonstration, IBM has also demonstrated complex algorithms on its devices. For instance, in 2020, a team used IBM superconducting qubits to simulate a small molecule’s ground-state energy using a variational quantum eigensolver. Google in 2020 performed what was then the largest quantum chemistry simulation on a quantum computer (Hartree-Fock models of hydrogen chains and diazene) on 12 qubits of the Sycamore processor. In 2021, USTC’s 66-qubit system performed random circuit sampling that its authors estimated to be far beyond the reach of classical simulation, another quantum advantage claim. We also see increasing integration into real-world computation: in 2022, IBM’s 127-qubit Eagle was used to run a 3×3 surface code as a prototype error-corrected qubit. In 2023, IBM announced deploying a 133-qubit processor in Japan (at RIKEN) to be integrated with a supercomputer, reflecting a trend towards hybrid quantum-classical computing in practice.

- Rigetti and Other Players: Rigetti Computing, a startup focused on superconducting qubits, has also made notable strides. In late 2021, Rigetti unveiled an 80-qubit processor (Aspen-M), which it described as the first multi-chip quantum processor: two 40-qubit chips joined by a high-speed interconnect. This modular approach is a path to scaling beyond the fabrication limit of a single die. Aspen-M also boasted improved readout and gate speeds (Rigetti reported 2.5× faster processing than prior chips and a 50% reduction in readout errors). Rigetti subsequently moved to an 84-qubit architecture (Ankaa) and has reported median two-qubit fidelities of 99.5%, showing improvement in quality as well. However, Rigetti’s qubit count has not yet rivaled IBM’s, and the company is focusing on specific applications via its cloud quantum service. Intel has taken a slightly different path: it initially invested in superconducting qubits (in partnership with QuTech) but has since emphasized silicon spin qubits. Still, Intel’s Horse Ridge cryogenic control chip and other contributions (e.g., advanced packaging techniques) help the superconducting ecosystem. D-Wave Systems, while not building a gate-based quantum computer for general algorithms, uses superconducting flux qubits for quantum annealing. Its Advantage annealer has over 5,000 qubits, specialized for solving optimization problems via annealing rather than circuit-model computation. This demonstrates the scalability potential of superconducting technology: D-Wave has put thousands of coupled flux qubits on a chip, albeit with a different operating principle. Although D-Wave’s machine cannot run gate-model algorithms like Shor’s or QEC, various industry clients have applied it to optimization problems in logistics, scheduling, and machine learning.

- Commercialization and Access: Today, superconducting quantum processors are accessible through cloud platforms. IBM has offered free access to small 5- and 7-qubit devices, with larger systems (27, 65, and 127 qubits) available to partners and paying clients. Google provides access to Sycamore via research collaborations and through Google Cloud’s Quantum Engine. Rigetti’s 80-qubit system is available through its Quantum Cloud Services and via Amazon Braket. Quantum Volume and other benchmarks are used to advertise improvements: for instance, Honeywell (now Quantinuum, whose machines use trapped ions rather than superconducting qubits) reported quantum volumes of 64 and 128 in 2020, levels IBM’s superconducting devices reached by the following year, while the trapped-ion systems have since posted still higher figures. This competitive leapfrogging reflects a healthy race in performance. On the application front, several corporate partnerships have formed, e.g., JPMorgan Chase working with IBM on quantum algorithms for finance, Merck and others exploring quantum chemistry on these machines, and aerospace companies looking at quantum optimization. While these are early explorations, they show that superconducting qubits are no longer confined to the lab; they are increasingly viewed as prototype quantum co-processors that businesses and researchers can use for experimentation.

In summary, the superconducting qubit industry is marked by rapid hardware scaling (doubling qubits and performance metrics every 1-2 years), improvements in coherence and gate fidelity (nearing the thresholds for error correction), and an expanding set of commercial offerings and collaborations. The community is transitioning from scientific experiments to engineering larger systems, with a vision of running error-corrected algorithms on large numbers of qubits within this decade.

Advantages

Superconducting qubits offer several compelling advantages as a quantum computing platform:

- High Scalability Potential: Because superconducting qubits are built using standard microfabrication techniques (lithography, thin-film deposition, etching), they lend themselves to chip-based manufacturing. The same processes that produce silicon integrated circuits can, with modifications, produce superconducting quantum chips. This has enabled rapid scaling from a single qubit to dozens and now hundreds on a single chip, and companies have automated much of the fabrication and packaging process. The solid-state nature of SC qubits means each qubit occupies a small footprint (on the order of tens to hundreds of micrometers), so many qubits fit on a wafer. By contrast, some other qubit types (like ions in a trap) are harder to miniaturize or duplicate in large numbers without manual assembly. Superconducting circuits can also leverage 3D integration (stacking chips, through-silicon vias) to incorporate more qubits and interconnects. All of this points to a clear path toward mass production. Indeed, as noted, IBM and others have roadmaps reaching thousands of qubits, a scale that chip-based fabrication makes plausible. In essence, superconducting qubits are engineer-friendly: they can be designed with CAD tools and fabricated in advanced chip foundries, offering design flexibility and repeatable manufacturing (though, unlike atoms, fabricated qubits are never perfectly identical). This scalability also extends to control: multiple qubits can be controlled with multiplexed microwave lines, and many readout resonators can be frequency-multiplexed on a single feedline, so the infrastructure per qubit can be optimized as devices grow. Such engineering scalability is a strong advantage toward building quantum processors with thousands of qubits in the near future.

- Fast Gate Speeds: Superconducting qubits operate at frequencies in the gigahertz range (typically 4–8 GHz for transmons), which allows for extremely fast quantum gates. A single-qubit X or Y rotation can be done in ~10–20 ns, and two-qubit entangling gates (like CZ or iSWAP) usually take 30–200 ns depending on the scheme. These gate times are orders of magnitude faster than those in some other platforms (for instance, trapped-ion gates often take tens of microseconds, and certain solid-state spin gates may take microseconds as well). Fast gates are beneficial because they allow many operations to be performed within the qubit's coherence time. Even if coherence time is, say, 100 microseconds, one can execute on the order of 1000 two-qubit gates in that timeframe, enough depth for repeated quantum error correction cycles or moderately deep algorithms. High gate speed also means higher clock rates for quantum circuits, potentially enabling more operations per second for algorithms like QAOA or other variational algorithms. Cross-platform comparisons routinely make this point: fast gate clock speeds help superconducting circuits compensate for their shorter coherence. Additionally, fast gates help mitigate certain noise, since qubits spend less time idling, when 1/f noise can cause dephasing. The microwave control pulses that drive these fast operations build on well-developed RF engineering, so the tools to generate and shape them precisely already exist. Overall, the ability to execute ~$$10^{7}$$–$$10^{8}$$ quantum operations per second (vs. ~$$10^{3}$$–$$10^{5}$$ for some other technologies) is a key strength of superconducting qubits for algorithms that require many sequential gates.

- Integration with Classical Electronics (Hybrid Systems): Superconducting qubits, being electrical circuits, interface naturally with classical electronics and control systems. They are driven and read out by electrical signals (microwave pulses, currents, voltages) that classical hardware can generate and process. This makes it straightforward to build quantum-classical hybrid systems in which a classical computer controls a quantum chip and sends pulses based on measurement outcomes in a fast feedback loop. Indeed, current experiments regularly use FPGAs and fast room-temperature electronics to perform real-time feedback and error correction with superconducting qubits. Furthermore, there are active efforts to move classical control closer to the quantum chip, for instance cryogenic CMOS control chips operating at around 4 K or even at millikelvin temperatures, which would sit inside the fridge and drive the qubits directly. This kind of integration is specific to solid-state qubits such as superconducting and spin qubits. In principle, a superconducting qubit chip could be bonded to a silicon CMOS chip (3D integration) that handles multiplexing and signal routing, leveraging decades of classical computing development. Few other platforms are as naturally suited to integration with electronic control; ions and photons, for example, rely on lasers and optical components that are not part of standard electronic infrastructure. Superconducting qubits could also, in principle, be networked optically: microwave-to-optical transducers would convert their states to light for transmission over fiber, enabling distributed quantum computing. Another aspect of hybrid integration is that superconducting qubits can be combined with microwave resonators and amplifiers that are themselves superconducting (e.g. Josephson parametric amplifiers for near-quantum-limited readout).
This synergy of quantum and classical superconducting tech in one cohesive system is a significant advantage. It means, for example, one company can design both the qubit chip and the control ASICs, using standard digital design flows for the latter. In short, superconducting qubits fit well into the existing electronic ecosystem, paving the way for quantum accelerators that sit next to classical processors, all integrated in a future high-performance computing setup.

- Demonstrated Multi-Qubit Capability and Maturity: Among competing modalities, superconducting qubits have one of the longest track records of multi-qubit operation and complex circuit demonstrations. Google's 53-qubit Sycamore was among the first programmable gate-based processors to exceed 50 qubits, and superconducting devices have run a variety of algorithms at that scale. IBM's superconducting systems have also posted steadily rising Quantum Volume scores, although trapped-ion machines currently hold the record for that metric. This maturity means a vast library of practical knowledge exists, e.g. calibration routines for many qubits, microwave crosstalk management, and automated qubit tuning. The platform has a robust software and control stack (e.g. IBM's Qiskit/Pulse for programming pulses, and advanced microwave instrumentation from companies like Keysight or Zurich Instruments tailored to SC qubits). This ecosystem maturity is an advantage for anyone looking to build or use a quantum computer now. By contrast, some other platforms might have superior theoretical properties but less developed infrastructure or fewer qubits demonstrated. The superconducting community has also developed or refined many widely used techniques (tomography methods, randomized benchmarking, quantum volume measurement, etc.), which others have adopted. All of this makes superconducting qubits a leading candidate for near-term quantum advantage experiments, since they can leverage fast innovation cycles in both academia and industry.
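The Fast Gate Speeds figures reduce to simple arithmetic: dividing a coherence time by a gate duration bounds how many sequential gates fit before the qubit decoheres. The Python sketch below uses illustrative values from the ranges in that bullet (100 µs coherence, 20 ns single-qubit and 100 ns two-qubit gates) plus a hypothetical 50 µs trapped-ion gate; none of these describe a specific device, and the bound ignores dephasing, readout and idle time.

```python
def gates_within_coherence(coherence_ns: int, gate_ns: int) -> int:
    """Upper bound on back-to-back gates that fit inside one coherence time."""
    return coherence_ns // gate_ns


def ops_per_second(gate_ns: int) -> float:
    """Sequential gate rate for a single qubit executing gates back to back."""
    return 1e9 / gate_ns


# Illustrative durations in nanoseconds (assumptions, not device specifications).
COHERENCE_NS = 100_000   # 100 microseconds
SINGLE_QUBIT_NS = 20     # ~10-20 ns single-qubit rotation
TWO_QUBIT_NS = 100       # middle of the 30-200 ns two-qubit range
ION_GATE_NS = 50_000     # hypothetical trapped-ion gate of tens of microseconds

print("two-qubit gates per coherence time:", gates_within_coherence(COHERENCE_NS, TWO_QUBIT_NS))
print("single-qubit gates per coherence time:", gates_within_coherence(COHERENCE_NS, SINGLE_QUBIT_NS))
print(f"superconducting single-qubit rate: {ops_per_second(SINGLE_QUBIT_NS):.0e} ops/s")
print(f"hypothetical ion gate rate: {ops_per_second(ION_GATE_NS):.0e} ops/s")
```

Working in integer nanoseconds keeps the floor division free of floating-point round-off. The result of about 1000 two-qubit gates per 100 µs matches the figure quoted in the text.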

In summary, superconducting qubits combine scalability, speed, and integrability. They can be manufactured in large quantities and connected in planar arrays, operate fast enough to perform complex algorithms within coherence times, and work hand-in-hand with classical electronics. These strengths have allowed superconducting qubits to spearhead the quest for quantum computational advantage in the NISQ era, and they form a solid foundation for building future fault-tolerant quantum machines.

Disadvantages

Despite their promise, superconducting qubits face significant challenges and limitations that researchers must overcome to reach large-scale, fault-tolerant quantum computing:

- Decoherence and Error Rates: Superconducting qubits, being macroscopic electrical circuits, are inherently coupled to many environmental degrees of freedom, which leads to faster decoherence than in some atomic systems. Their coherence times, while improved, are on the order of tens to hundreds of microseconds, whereas trapped ions or certain spin systems can have coherence times from seconds to hours. This means SC qubits accumulate errors relatively quickly if operations are not completed fast. Moreover, current superconducting devices still have non-negligible error rates, on the order of 0.1%–1% per two-qubit gate. In practice, even state-of-the-art devices typically experience at least one failure in every thousand operations. These rates sit close to the roughly 1% threshold of the surface code, and error correction only becomes efficient, with manageable qubit overhead, when physical errors are well below that threshold (around 0.1% or lower, depending on the code). Thus, without error correction, algorithms running on today's superconducting chips must remain shallow (a few hundred gates at most) before noise dominates. While gate fidelities are improving, pushing errors down another order of magnitude is challenging and an active area of research. Decoherence mechanisms like energy relaxation (T1) fundamentally limit how long a qubit can operate. For instance, a qubit with T1 = 100 µs and 20 ns single-qubit gates can complete at most about 5,000 sequential gates before it decays (and in practice dephasing and slower two-qubit gates cut this shorter). In comparison, an ion qubit might maintain its state for many minutes, allowing millions of operations. Thus, superconducting qubits are in a race to perform computations before decoherence sets in, and any slow-down or idle time can be detrimental. This places extreme requirements on synchronization and timing in control electronics.

- Cryogenic Cooling Requirements: Superconducting qubit processors must be cooled to millikelvin temperatures (about 10–20 mK, more than 100 times colder than the roughly 2.7 K of deep space). This is necessary to maintain superconductivity and to freeze out thermal photons that would disturb the qubits. The need for a dilution refrigerator and cryogenic infrastructure is a significant practical disadvantage. These fridges are expensive and power-hungry, and they have limited cooling power at millikelvin temperatures (typically just a few microwatts). All control wiring into the fridge must be carefully thermalized and filtered to avoid heating the qubit chip. The cryogenic requirement also currently limits connectivity between quantum machines: two superconducting chips cannot easily talk to each other unless they're in the same cryostat or connected by specialized cryogenic links. In contrast, some other platforms (photonic qubits, neutral atoms) don't require such extreme cooling. The complexity of running a dilution refrigerator (continuous operation, vibration isolation, etc.) means superconducting quantum computers are currently bulky and costly installations, not simple devices. Scaling to thousands or millions of qubits will require either much larger cryostats or many cryostats networked together, raising engineering challenges. Researchers note that for most quantum technologies, superconducting included, “the required scale of the cooling equipment in size and power is beyond the feasibility of currently available equipment” when envisioning millions of qubits. Thus, new advances in cryogenic engineering or operation at higher temperatures (e.g. using materials with higher Tc or coupling qubits to higher-temperature electronics) may be needed. The cooling requirement also introduces a latency bottleneck: signals must travel from room-temperature electronics down to the mK stage and back, which adds latency on the order of microseconds and limits feedback speed.
While cryogenic technology is steadily advancing (with some fridges now designed for 1000+ qubit systems), it remains a bottleneck that other platforms don’t suffer to the same degree. Simply put, superconducting quantum computers currently function like “large, specialized physics experiments” due to their cooling needs, whereas in the future we’d want them to be more like commodities – bridging that gap is non-trivial.

- Scaling and Connectivity Challenges: As the number of qubits on a superconducting chip grows, so do issues of control complexity and crosstalk. Each qubit requires control lines (for XY drive and sometimes Z flux tuning) and a readout resonator (with its own line, unless multiplexed). Routing hundreds or thousands of microwave lines into a cryostat is a serious wiring challenge. Even when multiplexing makes this feasible, crosstalk can occur: control pulses meant for one qubit can slightly rotate its neighbors through spurious couplings, and frequency crowding can cause interference between qubit gates and readouts. Furthermore, fabricating identical qubits at scale is hard: variations in junction critical currents always make qubit frequencies differ. If neighboring frequencies end up too close, qubits may unintentionally resonate with each other; if the spread is too large, uniform control becomes difficult. Scaling also exacerbates the chip's sensitivity to fabrication defects; e.g., a single two-level-system defect in the substrate can ruin the coherence of any qubit whose frequency matches it. As devices scale, ensuring high yield and uniform performance across all qubits is a big challenge (already, in a 50-qubit device, one or two qubits often perform worse than the rest). Another limitation is geometry: superconducting qubits laid out in a planar array typically couple only to their nearest neighbors, through fixed or tunable couplers. This limits the connectivity of the "quantum circuit": two distant qubits must interact via a chain of intermediate SWAP gates, which costs time and fidelity. Some architectures add bus resonators for long-range hops, but those come with their own overhead. While schemes exist to improve connectivity (like 3D integration with long-range couplers), implementing them adds complexity. By contrast, ion traps and photonic cluster states can have more flexible connectivity.
Thus, mapping certain quantum algorithms onto the fixed coupling graph of a superconducting chip can be inefficient.

- Need for Error Correction and Overhead: Because of the above issues, fault-tolerant quantum computing with superconducting qubits will require substantial resource overhead. The consensus is that to combat decoherence and gate errors, each logical (error-corrected) qubit will require on the order of 100–1000 physical superconducting qubits, depending on error rates and the code used. For example, a surface code with physical error ~0.1% might need several hundred physical qubits per logical qubit to reach a logical error of ~$$10^{-9}$$. This overhead means a full-scale machine capable of running Shor's algorithm on RSA-2048 would need roughly a million or more physical qubits, a daunting prospect given current technology. Achieving such scale will stress all the issues mentioned: fabrication yield, cryogenic wiring, and heat load (every control and readout line carries signal power and conducts heat toward a millikelvin stage whose cooling power is only microwatts). While these are long-term considerations, they weigh especially heavily on solid-state approaches like superconductors; an ion trap with lower physical error rates might need fewer physical qubits per logical qubit. In any case, until error correction is in place, superconducting qubits are stuck in the NISQ regime with limited algorithmic depth. This exposes them to another practical disadvantage: noise variability and calibration drift. Superconducting qubits require frequent calibration (sometimes hours of tuning per day) because qubit frequencies can drift with temperature or trapped magnetic flux, and crosstalk couplings can change. The maintenance overhead to keep a large superconducting processor tuned up is non-trivial. It is essentially an analog processor whose hundreds of parameters must be kept in alignment through calibration, an effort that may not scale easily without automation.
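The "several hundred physical qubits per logical qubit" estimate can be reproduced with a commonly used heuristic for the surface code, $$p_L \approx A\,(p/p_{\text{th}})^{(d+1)/2}$$, where $$d$$ is the code distance and $$p$$ the physical error rate. This sketch assumes $$A = 0.1$$, a threshold $$p_{\text{th}} = 1\%$$, and a rotated surface-code patch of $$2d^2 - 1$$ qubits; these are textbook-style approximations, not parameters of any particular device.

```python
import math


def logical_error_rate(p: float, d: int, p_th: float = 1e-2, a: float = 0.1) -> float:
    """Heuristic surface-code scaling p_L ~ A * (p / p_th) ** ((d + 1) / 2)."""
    return a * (p / p_th) ** ((d + 1) / 2)


def physical_qubits(d: int) -> int:
    """Rotated surface-code patch: d^2 data qubits plus d^2 - 1 measurement qubits."""
    return 2 * d * d - 1


def smallest_distance(p: float, target: float, p_th: float = 1e-2, max_d: int = 101) -> int:
    """Smallest odd code distance whose heuristic logical error meets the target."""
    for d in range(3, max_d + 1, 2):
        p_l = logical_error_rate(p, d, p_th)
        # isclose() guards against floating-point round-off exactly at the boundary
        if p_l <= target or math.isclose(p_l, target, rel_tol=1e-9):
            return d
    raise ValueError("target not reachable below max_d; is p below threshold?")


d = smallest_distance(p=1e-3, target=1e-9)
print(d, physical_qubits(d))
```

With a 0.1% physical error rate and a $$10^{-9}$$ target, this heuristic gives distance 15 and 449 physical qubits, consistent with the estimate in the text. If $$p$$ is at or above the assumed threshold, no distance helps and the function raises an error.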
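The connectivity cost described under Scaling and Connectivity Challenges can be estimated by counting hops on the chip's coupling graph. This sketch assumes a hypothetical 7×7 square grid with nearest-neighbour couplers (real chips use other layouts, such as IBM's heavy-hex lattice), naive routing that moves one qubit along a shortest path, three CNOTs per SWAP, and an illustrative 0.5% two-qubit error rate with independent errors.

```python
from collections import deque


def grid_coupling(rows: int, cols: int) -> dict[int, list[int]]:
    """Nearest-neighbour coupling map for a rows x cols planar qubit array."""
    coupling = {}
    for r in range(rows):
        for c in range(cols):
            q = r * cols + c
            neighbours = []
            if r > 0:
                neighbours.append(q - cols)
            if r < rows - 1:
                neighbours.append(q + cols)
            if c > 0:
                neighbours.append(q - 1)
            if c < cols - 1:
                neighbours.append(q + 1)
            coupling[q] = neighbours
    return coupling


def hop_distance(coupling: dict[int, list[int]], src: int, dst: int) -> int:
    """Breadth-first search for the number of couplers between two qubits."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        q = queue.popleft()
        if q == dst:
            return dist[q]
        for n in coupling[q]:
            if n not in dist:
                dist[n] = dist[q] + 1
                queue.append(n)
    raise ValueError("qubits are not connected")


def routed_cnot_fidelity(coupling: dict[int, list[int]], a: int, b: int,
                         p2q: float) -> tuple[int, float]:
    """SWAPs needed to make a and b adjacent, and the success estimate of the routed CNOT."""
    swaps = max(hop_distance(coupling, a, b) - 1, 0)
    two_qubit_gates = 3 * swaps + 1   # each SWAP = 3 CNOTs, plus the CNOT itself
    return swaps, (1 - p2q) ** two_qubit_gates


grid = grid_coupling(7, 7)
swaps, fidelity = routed_cnot_fidelity(grid, 0, 48, p2q=0.005)  # opposite corners
print(swaps, round(fidelity, 3))
```

A single CNOT between opposite corners needs 11 SWAPs here, and its estimated success probability falls from 0.995 to about 0.84. Production compilers route more cleverly, but the trend is the same.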

In summary, superconducting qubits, for all their strengths, grapple with being “noisy” qubits that live in a demanding environment. They decohere quickly (relative to atomic qubits), necessitate complex and costly cooling, and will incur a heavy qubit overhead to reach fault tolerance. Scaling up is not just a matter of making a bigger chip; it introduces myriad engineering difficulties from wiring to crosstalk. These disadvantages form the crux of why superconducting qubits, despite leading the race today, are not guaranteed to be the ultimate winning technology – continued research into mitigating noise (via materials, filtering, better designs like fluxonium or novel qubits) and into clever architecture (modular QPUs, photonic interconnects) is needed to address these challenges.
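The need to "freeze out thermal photons" noted under Cryogenic Cooling Requirements follows from the Bose-Einstein occupation $$\bar n = 1/(e^{hf/k_B T} - 1)$$ of a mode at frequency $$f$$. This sketch evaluates it for an assumed 5 GHz qubit (inside the 4–8 GHz transmon range quoted earlier) at a few temperatures, using the exact SI values of the Planck and Boltzmann constants.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s (exact SI value)
K_B = 1.380649e-23   # Boltzmann constant, J/K (exact SI value)


def thermal_occupation(freq_hz: float, temp_k: float) -> float:
    """Mean thermal photon number of a mode at freq_hz and temperature temp_k."""
    x = H * freq_hz / (K_B * temp_k)
    return 1.0 / math.expm1(x)   # expm1 stays accurate when x is small (high temperature)


for temp in (0.02, 0.1, 4.0, 300.0):
    print(f"{temp:>6} K: mean photon number = {thermal_occupation(5e9, temp):.3g}")
```

At 20 mK the mean photon number is of order $$10^{-6}$$, while at 4 K it exceeds 10, which is why the qubit chip sits at the coldest stage of a dilution refrigerator rather than at liquid-helium temperature.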

Impact on Cybersecurity

The advent of large-scale superconducting quantum computers carries profound cybersecurity implications. Quantum computers threaten many of the cryptographic protocols that secure today’s digital communications. Here’s how superconducting qubits factor into the cybersecurity picture:

- Breaking Classical Encryption: Quantum algorithms such as Shor's factoring algorithm and Shor's discrete log algorithm can theoretically break the two main pillars of modern public-key encryption, RSA/Diffie-Hellman (based on integer factorization and discrete logs) and elliptic curve cryptography, in polynomial time, something no known classical algorithm can do. The catch is that executing Shor's algorithm for real-world key sizes (e.g. 2048-bit RSA keys) requires a large, error-corrected quantum computer, likely containing on the order of a million or more physical qubits. Superconducting qubits are among the most plausible platforms to achieve such a machine, given their current head start in qubit count and manufacturability. Thus, when experts estimate when quantum computers might break encryption, they are often implicitly thinking of progress in superconducting (and perhaps trapped-ion) qubit technology. Current estimates vary, but some government and industry analyses suggest a cryptographically relevant quantum computer might be available by the mid-2030s. Once such a machine exists, all data protected by vulnerable encryption could be exposed. For instance, an attacker could record (intercept) encrypted traffic today, say an HTTPS connection or a VPN session, store it, and decrypt it later once they have access to a quantum computer (the so-called "harvest now, decrypt later" attack strategy). This especially affects sensitive data with long secrecy requirements (e.g. government secrets, personal health data) that might still be valuable in 10–20 years. If an adversary secretly builds a superconducting quantum computer with sufficient qubits, they could potentially decrypt vast amounts of historical data encrypted with RSA/ECC before the world realizes it.

- Role of Superconducting Qubits in Quantum Attacks: Among quantum technologies, superconducting qubits have the fastest gates and already demonstrate intermediate-scale processors. This means that if any platform is likely to run Shor's algorithm first at non-trivial sizes, superconducting qubits are a prime candidate. If current trends continue, they may reach the few thousand logical qubits needed for breaking some cryptography ahead of other platforms. Recent research has also explored whether hybrid quantum-classical algorithms might reduce the cost of factoring. A 2023 paper by Chinese researchers claimed that combining classical lattice-reduction techniques with a small quantum processor could factor RSA-2048 with as few as 372 qubits, although the claim has been widely disputed. Notably, IBM's 433-qubit Osprey chip already exceeds that qubit count. While other bottlenecks (like gate depth and error rates) keep the claim far from practical reality, it underscores that superconducting hardware is nearing the range where serious cryptographic attacks enter the discussion. Once superconducting machines can reliably run deep circuits on hundreds of qubits, they could execute Shor's algorithm on key sizes well beyond today's toy demonstrations; such small keys are already easy to break classically, but each step up would show how quickly the gap to real-world key sizes is closing. Governments are closely watching this progress; for example, the NSA in the US has indicated that quantum attacks on public-key cryptography are a matter of "when, not if," adding pressure to develop and deploy quantum-resistant encryption.

- Post-Quantum Cryptography (PQC) and Standards: In anticipation of the quantum threat, the cybersecurity community is developing post-quantum cryptography: classical cryptographic algorithms believed to resist quantum attacks (no known quantum algorithm breaks them efficiently). In July 2022, NIST (National Institute of Standards and Technology) announced the first set of PQC algorithms to be standardized, including CRYSTALS-Kyber for key establishment and CRYSTALS-Dilithium for digital signatures. NIST explicitly states that the goal is to “withstand cyberattacks from a quantum computer”. This standardization effort is directly motivated by progress in quantum computing hardware such as superconducting qubits; NIST and other agencies are racing to get new encryption deployed before quantum computers mature. The finalized standards (FIPS 203, FIPS 204 and FIPS 205, specifying ML-KEM, ML-DSA and SLH-DSA) were released in August 2024, and organizations are now planning migration, a process that could take years given how pervasive RSA/ECC are in protocols like TLS, IPsec, and cryptocurrencies. The transition is urgent because of the "harvest now, decrypt later" threat: data encrypted today can be stored and decrypted once a quantum computer is available, so even current communications are at risk if they aren't quantum-safe.

- Specific Risks of Superconducting QC: One could argue that a superconducting quantum computer, being a large, complex machine, would likely be built by nation-states or large corporations first. This raises geopolitical and cyber-espionage issues. If, say, a nation-state develops a superconducting quantum computer capable of code-breaking ahead of others, it could secretly decrypt other nations’ secure communications (diplomatic cables, military communications, etc.) without detection, until PQC is universally deployed. This asymmetry is a security risk. There’s also the possibility of quantum computing as a service misuse: cloud-accessible superconducting quantum computers (like those IBM and others provide) could conceivably be used by malicious actors to run quantum attacks on smaller cryptosystems (for instance, breaking shorter RSA keys or cracking certain crypto schemes like discrete log in smaller groups). Already, some researchers demonstrated using IBM’s 5-qubit device to run a simplified version of Shor’s algorithm to factor 15, 21, etc., as educational demos. As devices improve, one could imagine someone leveraging a quantum cloud service to attempt breaking a 512-bit RSA key (which might become feasible earlier than 2048-bit). Cloud providers will likely prohibit such activities in usage policies, but it’s a consideration.

- Threat to Symmetric Cryptography and Hashing: Although Shor's algorithm doesn't speed up breaking symmetric ciphers or hash functions, Grover's algorithm (another quantum algorithm) gives a quadratic speed-up for brute-force search. This means a quantum computer could brute-force a 128-bit key in roughly $$2^{64}$$ steps instead of $$2^{128}$$, a big speed-up in principle (though Grover's algorithm still requires an enormous, largely sequential quantum runtime to perform those steps). In practice, this suggests that 128-bit symmetric keys (for AES, for example) provide only ~64-bit security against a quantum adversary, which is borderline. Thus, experts recommend using 256-bit keys for symmetric encryption and longer hash outputs (like SHA-384/512) to guard against quantum attacks. Superconducting qubits, if scaled, could run Grover iterations much faster than, say, a trapped-ion computer thanks to their higher clock speed, which would make brute-forcing shorter keys more plausible. For example, a superconducting quantum computer might perform iterations at, say, megahertz rates versus kilohertz for an ion trap, greatly affecting the feasibility of such attacks. So part of the security community's response is to upgrade symmetric protocols too (e.g., AES-256, SHA-3) as a hedge.

- Quantum Cryptanalysis Beyond Shor/Grover: A quantum computer could also accelerate certain attacks like solving systems of linear equations (quantum linear system algorithms) or unstructured search in other contexts. While these are more specialized, one concern is that some currently secure systems might have unrecognized weaknesses exploitable by quantum algorithms. The focus, however, remains on known vulnerable algorithms like RSA/ECC/DH.

- Secure Communication Advancements: On the flip side, quantum computing and quantum information offer new tools for security. For instance, quantum key distribution (QKD) lets two parties generate a shared secret key whose security rests on quantum physics: eavesdropping on the quantum channel disturbs the transmitted states and can therefore be detected. QKD is implemented with photonic qubits (over optical fiber or free space), not superconducting qubits, so it is largely a separate technology. However, a future quantum internet might use superconducting qubit nodes as quantum repeaters or processors that correct errors in the entangled states used for QKD. Superconducting qubits could also help realize more complex cryptographic protocols, like quantum secure multi-party computation or blind quantum computing, in which a client has a quantum server run a computation without the server learning the client's input, algorithm or output. These are farther out, but they illustrate that quantum tech isn't solely a threat; it also provides new cryptographic capabilities.
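The Grover figures in the Threat to Symmetric Cryptography and Hashing bullet can be checked with a small classical simulation. This sketch tracks the full state vector for a 10-qubit search (one marked item, chosen arbitrarily) and then estimates the sequential runtime of the roughly $$\tfrac{\pi}{4}\sqrt{2^{128}}$$ iterations needed against a 128-bit key, assuming a hypothetical rate of one million iterations per second. It ignores the cost of implementing an AES oracle on error-corrected qubits, which would slow each iteration dramatically.

```python
import math


def grover_success_probability(n_qubits: int, marked: int) -> float:
    """State-vector simulation of Grover search with a single marked item."""
    size = 2 ** n_qubits
    amps = [1.0 / math.sqrt(size)] * size            # uniform superposition (Hadamards)
    iterations = math.floor(math.pi / 4 * math.sqrt(size))
    for _ in range(iterations):
        amps[marked] = -amps[marked]                  # oracle: flip the marked item's phase
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]           # diffusion: inversion about the mean
    return amps[marked] ** 2                          # probability of measuring the marked item


def grover_runtime_years(key_bits: int, iterations_per_second: float) -> float:
    """Sequential time for ~(pi/4) * sqrt(2**key_bits) Grover iterations, in years."""
    iterations = math.pi / 4 * math.sqrt(2.0 ** key_bits)
    return iterations / iterations_per_second / (365.25 * 24 * 3600)


print(f"10-qubit search success: {grover_success_probability(10, marked=123):.4f}")
print(f"AES-128 at 1 MHz (hypothetical): {grover_runtime_years(128, 1e6):.1e} years")
```

The 10-qubit search finds the marked item with near-certainty after only 25 iterations instead of ~512 classical guesses on average. Even at the assumed megahertz rate, however, the 128-bit search takes several hundred thousand years, which is why Grover's speed-up argues for longer keys rather than making AES-128 brute force practical today.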
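The eavesdropping detection mentioned in the Secure Communication Advancements bullet can be illustrated with BB84, the best-known QKD protocol (the text does not name a protocol, so BB84 is an illustrative choice). This classical Monte Carlo sketch assumes an ideal noiseless channel, perfect single photons and the simplest intercept-resend attack, and it tracks basis labels rather than full quantum states. Without an eavesdropper the sifted key has no errors; an intercept-resend attacker introduces an error rate near 25%, which Alice and Bob can spot by publicly comparing a sample of their key.

```python
import random


def bb84_qber(n_photons: int, eavesdrop: bool, seed: int = 7) -> float:
    """Simulate BB84 with optional intercept-resend eavesdropping; return the sifted-key error rate."""
    rng = random.Random(seed)
    sifted = errors = 0
    for _ in range(n_photons):
        bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)              # 0 = rectilinear, 1 = diagonal
        photon_bit, photon_basis = bit, alice_basis  # label of the state Alice prepared
        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)       # wrong basis: Eve's outcome is random
            photon_basis = eve_basis                 # Eve resends in the basis she measured
        bob_basis = rng.randint(0, 1)
        # Bob reads the encoded bit only if his basis matches the photon's current basis
        bob_bit = photon_bit if bob_basis == photon_basis else rng.randint(0, 1)
        if bob_basis == alice_basis:                 # sifting: keep matching-basis rounds
            sifted += 1
            errors += bob_bit != bit
    return errors / sifted


print(f"no eavesdropper:  QBER = {bb84_qber(20_000, eavesdrop=False):.3f}")
print(f"intercept-resend: QBER = {bb84_qber(20_000, eavesdrop=True):.3f}")
```

Real deployments must also tolerate channel noise, so they compare the measured error rate against a threshold and follow sifting with error correction and privacy amplification.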

In summary, the rise of superconducting qubit quantum computers forces the cybersecurity world to adapt quickly. All commonly used public-key cryptosystems (RSA, Diffie-Hellman, ECDSA, ECDH) will be rendered insecure once a sufficiently large quantum computer (likely built from superconducting qubits given current progress) is operational. This has spurred a global push toward post-quantum cryptography standards and migration, essentially trying to get new algorithms in place before “Q-day” (the day a quantum computer cracks encryption) occurs. Organizations must inventory where they use vulnerable cryptography and have a transition plan. In the meantime, data that needs long-term confidentiality should be protected with quantum-resistant methods now (or with large symmetric keys) to thwart future decryption. The national security implications are huge – quantum computing is sometimes likened to the advent of nuclear weapons in terms of impact on secure communications. That is why there is a bit of a quantum “arms race” among tech-leading nations. Superconducting qubits are at the forefront of this race, and their development is watched not just by scientists and engineers, but also by security agencies and cryptographers.
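To make the Shor's-algorithm threat concrete: the quantum computer's job is only to find the order $$r$$ of a random base $$a$$ modulo $$N$$ (the smallest $$r$$ with $$a^r \equiv 1 \pmod N$$); turning $$r$$ into factors is classical. This sketch replaces the quantum step with brute-force order finding, which takes exponential time classically and is exactly the part Shor's algorithm speeds up, so it only works for toy moduli like the 15 and 21 used in the cloud demonstrations mentioned earlier.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (brute force stands in for the quantum step)."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_part(n: int, a: int):
    """Turn the order of a mod n into a factor pair, as in Shor's classical post-processing."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g            # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None                 # odd order: retry with another base a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None                 # trivial square root of 1: retry with another base a
    p = gcd(y - 1, n)
    return p, n // p


print(shor_classical_part(15, 7))   # order of 7 mod 15 is 4
print(shor_classical_part(21, 2))   # order of 2 mod 21 is 6
```

For $$N = 15$$ and $$a = 7$$ the order is 4, so $$y = 7^2 \bmod 15 = 4$$ and $$\gcd(3, 15) = 3$$ yields the factors 3 and 5. A base that fails simply triggers a retry, which is also how the full algorithm behaves.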
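The planning advice in the summary above is often framed with Mosca's inequality, a widely used heuristic that the text does not name: if $$x$$, the number of years data must stay secret, plus $$y$$, the years a migration to PQC will take, exceeds $$z$$, the years until a cryptographically relevant quantum computer exists, then data encrypted today is exposed to "harvest now, decrypt later" attacks. The figures in this sketch are hypothetical planning inputs, not forecasts.

```python
def mosca_exposure(secrecy_years: float, migration_years: float, years_to_crqc: float) -> float:
    """Years of exposure under Mosca's inequality x + y > z (0 means no exposure)."""
    return max(0.0, secrecy_years + migration_years - years_to_crqc)


def at_risk(secrecy_years: float, migration_years: float, years_to_crqc: float) -> bool:
    """True when x + y > z, i.e. data could still matter after a quantum computer arrives."""
    return mosca_exposure(secrecy_years, migration_years, years_to_crqc) > 0


# Hypothetical inputs: health records kept secret for 20 years, a 5-year migration,
# and a quantum computer assumed to arrive in 10 years.
print(at_risk(20, 5, 10), mosca_exposure(20, 5, 10))
# Short-lived session data (1 year of secrecy) under the same assumptions.
print(at_risk(1, 5, 10), mosca_exposure(1, 5, 10))
```

The point of the exercise is that long-lived data can already be at risk today even under a distant quantum timeline, which is why the text recommends protecting it with quantum-resistant methods now.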

Industry Use Cases

While universal, fault-tolerant quantum computers are not here yet, today’s superconducting qubit processors (and near-term projected devices) are being explored for various applications across industries. These use cases typically involve problems that are intractable for classical computing but might be addressed by quantum algorithms taking advantage of superposition, entanglement, or the probabilistic nature of quantum states. Some of the most promising domains include:

- Quantum Chemistry and Materials Science: Simulation of molecular and materials systems is a natural application for quantum computers, as Feynman originally envisioned. Even modest-sized molecules have extremely large Hilbert spaces that challenge classical simulation, because the number of configurations grows exponentially. Superconducting qubits can be used to run the variational quantum eigensolver (VQE) and quantum phase estimation algorithms to find molecular ground states, reaction energetics, or properties of new materials. For example, researchers used a 6-qubit IBM device to compute ground-state energy curves of small molecules such as LiH and BeH₂, approximately reproducing the exact results for these simple cases. Google used a superconducting chip to simulate the binding energy of hydrogen chains and the isomerization of diazene, in what was then the largest quantum chemistry simulation (in 2020, using 12 qubits and a variational Hartree-Fock method). These studies are proofs of concept that quantum processors can handle the entangled electronic states in chemistry. The hope is that as qubit counts and coherence grow, quantum computers will tackle bigger molecules (for instance, catalysts, complex organic reactions, or models of high-temperature superconductors). Applications in pharma (drug discovery) and materials (e.g., battery chemistry, solar cell materials) are often cited. Even before fully accurate simulations are possible, quantum computers might help with quantum chemistry heuristics, providing insights into molecular orbitals or reaction pathways that classical heuristics struggle with. Companies like Microsoft and QC Ware, along with startups like Menten AI, are partnering with chemical and pharma companies to explore small-scale chemistry problems on current superconducting qubit machines as a way to develop algorithms and know-how.
For materials science, algorithms to study lattice models (like the Fermi-Hubbard model for strongly correlated electrons) have been run on superconducting qubits; Google's team, for example, has simulated the Fermi-Hubbard model using 16 qubits. Understanding such models could lead to discoveries in superconductivity or new quantum materials. Thus, quantum chemistry is viewed as one of the earliest fields that could see quantum advantage, and superconducting qubits are the platform on which many of these pioneering simulations have been and continue to be demonstrated.

- Optimization Problems (Logistics, Finance, etc.): Many industries face complex optimization problems, from airline scheduling and supply chain logistics to portfolio optimization in finance and hard combinatorial puzzles. Quantum computing offers new approaches, such as the Quantum Approximate Optimization Algorithm (QAOA) and quantum annealing, that might find better solutions or solve these problems faster. Superconducting qubits (in the circuit model, or in the annealing model like D-Wave's flux qubits) are being tested on various optimization tasks. For instance, Google used 17 qubits of a superconducting chip to implement QAOA for the Sherrington-Kirkpatrick model (a prototypical NP-hard spin-glass optimization problem). While the results didn't yet beat classical methods, they showed how increasing circuit depth improved solution quality. In finance, JPMorgan and IBM have jointly experimented with porting portfolio optimization and options pricing problems onto small superconducting quantum processors using QAOA and other hybrid quantum-classical solvers. Likewise, Volkswagen worked with D-Wave's superconducting annealer to optimize taxi routes in Beijing and reduce congestion. Although D-Wave's system is an annealer (a different computational model), it uses superconducting qubits, and this project was one of the first commercial forays into quantum optimization, successfully optimizing traffic flow on a limited scale. Loosely, the hope is that superposition lets the hardware represent many candidate solutions at once while interference amplifies the good ones, potentially reaching optimal or near-optimal solutions faster or more reliably than classical heuristics for certain hard cases. Another example: scheduling problems (e.g. job-shop scheduling in manufacturing) have been mapped to superconducting qubits using QAOA.
Classical algorithms still typically outperform these approaches at the small scales tested. Even so, the experiments serve as important pilots, showing how to encode problems onto qubits and how quantum hardware behaves on optimization tasks. Financial institutions are interested in quantum computing for risk analysis, asset allocation, derivatives pricing, and more. These tasks often reduce to high-dimensional optimizations or Monte Carlo simulations, and for the latter, quantum amplitude estimation offers, in principle, a quadratic speedup. Logistics companies and airlines are exploring quantum algorithms for route optimization, crew scheduling, and similar problems. Because superconducting qubits are among the most mature platforms, many of these industry collaborations use them as test hardware.
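The following minimal sketch makes the QAOA idea concrete. It simulates one QAOA layer (p = 1) exactly on a classical statevector for a hypothetical toy instance, MaxCut on a 4-node ring. A brute-force grid search over the two angles stands in for the classical optimizer. The graph, grid size, and function names are illustrative assumptions. Real experiments run the circuit on hardware and estimate the expectation from measurement shots.

```python
import cmath
import math

# Hypothetical toy instance: MaxCut on a 4-node ring (0-1-2-3-0).
N_QUBITS = 4
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]


def cut_value(z: int) -> int:
    """Number of edges cut by bitstring z (bit j = which side node j is on)."""
    return sum(((z >> u) & 1) != ((z >> v) & 1) for u, v in EDGES)


def qaoa_expectation(gamma: float, beta: float) -> float:
    """Expected cut after one QAOA layer (p = 1), via exact statevector simulation."""
    dim = 2 ** N_QUBITS
    # Start in |+>^n: uniform superposition over all 2^n bitstrings.
    state = [complex(1 / math.sqrt(dim))] * dim
    # Phase separator exp(-i*gamma*C): diagonal in the computational basis.
    state = [amp * cmath.exp(-1j * gamma * cut_value(z)) for z, amp in enumerate(state)]
    # Mixer exp(-i*beta*X_j) on each qubit j, i.e. cos(beta) I - i sin(beta) X.
    c, s = math.cos(beta), math.sin(beta)
    for j in range(N_QUBITS):
        mask = 1 << j
        for z in range(dim):
            if z & mask == 0:
                a0, a1 = state[z], state[z | mask]
                state[z] = c * a0 - 1j * s * a1
                state[z | mask] = -1j * s * a0 + c * a1
    # Born rule: weight each bitstring's cut value by its measurement probability.
    return sum(abs(amp) ** 2 * cut_value(z) for z, amp in enumerate(state))


def grid_search(steps: int = 32) -> tuple[float, float, float]:
    """Classical outer loop: brute-force grid over gamma in [0, pi), beta in [0, pi/2)."""
    best = (-1.0, 0.0, 0.0)
    for i in range(steps):
        gamma = math.pi * i / steps
        for k in range(steps):
            beta = (math.pi / 2) * k / steps
            value = qaoa_expectation(gamma, beta)
            if value > best[0]:
                best = (value, gamma, beta)
    return best


if __name__ == "__main__":
    value, gamma, beta = grid_search()
    print(f"best expected cut {value:.4f} at gamma={gamma:.4f}, beta={beta:.4f}")
```

For this ring the maximum cut is 4, and a single layer reaches an expected cut of 3, a 3/4 approximation ratio. Adding layers (larger p) can only raise or match the best achievable expectation, since the extra angles can always be set to zero. This is consistent with the depth-versus-quality trend described above.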

- Artificial Intelligence and Machine Learning: There is growing interest in quantum machine learning (QML), which uses quantum computers to accelerate or improve machine learning tasks. Several proposals exist: quantum versions of kernel methods, quantum neural networks (parameterized variational circuits loosely analogous to classical neural networks), quantum generative models, and others. Superconducting qubits have been used in early demonstrations of these ideas. For example, researchers have run small quantum classification algorithms on IBM’s hardware. These classify simple datasets by embedding data into qubit states and using quantum interference to separate classes. One experiment used a 4-qubit superconducting processor to classify whether a handwritten digit (from a heavily downscaled MNIST dataset) was a 0 or a 1. It used a quantum feature map and measured parity as the output, essentially a quantum kernel method. Another area is quantum generative models, such as quantum GANs or Born machines. Superconducting qubits represent probability distributions natively through the Born rule (measurement probabilities), so they can model and sample from complex distributions. This could be useful for generating synthetic data or for probabilistic models in AI. Some small-scale quantum GANs have been demonstrated on 2–3 qubits. While these are toy examples, they hint that QML might find niche applications, such as learning from limited data by exploiting high-dimensional quantum feature spaces. Quantum computers might also accelerate the training of machine learning models in two ways: by solving linear algebra subroutines faster (using quantum linear solvers), or by speeding up combinatorial tuning (using QAOA for hyperparameter optimization). Companies like Google and IBM have QML research groups that often test algorithms on their superconducting qubit devices.
There is speculation that future quantum computers might efficiently train certain types of neural networks, or perform inference for models that are hard for classical GPUs, though this remains highly exploratory. In the nearer term, there is rigorous evidence that quantum kernel methods can outperform classical learners on certain specially constructed classification problems. Superconducting qubits are a likely platform for demonstrating such an advantage on non-trivial data. Conversely, AI can assist quantum computing, for example when classical machine learning is used to calibrate qubits or help correct errors, creating a symbiosis between the two fields.
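To show the mechanics of a quantum kernel, the sketch below uses a hypothetical feature map that encodes each feature as a single-qubit RY rotation. It builds the resulting statevector and computes the fidelity kernel k(x, y) = |⟨φ(x)|φ(y)⟩|². This product-state map is deliberately simple and has the closed form ∏ cos²((x_j − y_j)/2), so it is easy to simulate classically and illustrates the method, not an advantage. Proposals for quantum advantage rely on entangling feature maps. All function names are illustrative.

```python
import math


def feature_state(x: list[float]) -> list[float]:
    """Angle-encode feature x_j as RY(x_j)|0> on qubit j and return the 2^n
    real amplitudes of the resulting (unentangled) product state."""
    state = [1.0]
    for xj in x:
        qubit = (math.cos(xj / 2), math.sin(xj / 2))  # RY(xj)|0>
        # Tensor product: extend the current state by one more qubit.
        state = [a * b for a in state for b in qubit]
    return state


def quantum_kernel(x: list[float], y: list[float]) -> float:
    """Fidelity kernel k(x, y) = |<phi(x)|phi(y)>|^2 (amplitudes are real here)."""
    overlap = sum(a * b for a, b in zip(feature_state(x), feature_state(y)))
    return overlap ** 2


def gram_matrix(data: list[list[float]]) -> list[list[float]]:
    """Kernel (Gram) matrix that a classical SVM would consume."""
    return [[quantum_kernel(x, y) for y in data] for x in data]
```

On hardware, each entry would instead be estimated from measurement statistics. One approach is to run the feature-map circuit for x followed by the inverse circuit for y and record how often the all-zeros outcome appears. The resulting matrix is then handed to an ordinary classical SVM.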

- Cryptanalysis and Secure Communication: As discussed in the cybersecurity section, one “application” of quantum computers is cryptography, albeit from the attacking side. National labs and agencies are interested in using superconducting qubit systems to test quantum attacks on cryptographic algorithms. Heavily simplified proof-of-concept runs of Shor’s algorithm have already factored small numbers such as 15 and 21 on superconducting processors. Factoring RSA-style moduli of increasing size (8-bit, then 16-bit, eventually 32-bit numbers) would be natural next stepping stones. In this sense, running Shor’s algorithm on ever-larger numbers is both a benchmark and an application, if an adversarial one. On the constructive side, quantum computers generate true random numbers naturally, because measurement outcomes are inherently probabilistic. Classical physical noise sources can also supply high-quality randomness. Even so, intrinsically quantum randomness is valued in cryptographic protocols and is useful for tasks like Monte Carlo simulation. Some services already use quantum devices as randomness beacons. Another security-related application is developing and testing quantum-resistant algorithms. Quantum computers could be used to probe the strength of candidate post-quantum cryptosystems, for example by applying quantum algorithms to structured problems such as lattice reduction or decoding to see whether any speedup appears. That analysis is currently done with classical computers, but future quantum computers might reveal whether a supposedly quantum-resistant scheme has a subtle quantum weakness. For secure communications, superconducting qubits could play a role in quantum repeaters for long-distance QKD networks. There they would store quantum states (as memories) and perform entanglement-swapping operations to extend quantum links. Because superconducting qubits operate at microwave frequencies, this would require microwave-to-optical transducers to connect them to the optical photons carried by fiber.
Some protocols propose using solid-state qubits (like NV centers or certain superconducting systems) to interact with photons for this purpose. While this is a bit outside the typical “compute” scenario, it’s a use case in quantum networking where superconducting tech might contribute (though most quantum networking experiments currently use atomic ensembles or NV centers for memory).
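The quantum part of Shor’s algorithm only estimates the period r of f(x) = a^x mod N; turning that period into factors is classical. The sketch below shows that post-processing. It includes a brute-force order finder that stands in for the quantum subroutine and is exponentially slow classically. The 8-bit measurement register, the example numbers, and the function names are illustrative assumptions.

```python
from fractions import Fraction
from math import gcd
from typing import Optional


def classical_order(a: int, modulus: int) -> int:
    """Brute-force order finding: smallest r > 0 with a**r % modulus == 1.
    A classical stand-in for the quantum period-finding step; its cost grows
    exponentially with the bit length of the modulus."""
    if gcd(a, modulus) != 1:
        raise ValueError("a must be coprime to the modulus")
    r, value = 1, a % modulus
    while value != 1:
        value = (value * a) % modulus
        r += 1
    return r


def period_from_measurement(y: int, n_bits: int, modulus: int) -> int:
    """Candidate period from a phase-estimation outcome y on an n_bits register,
    assuming y / 2**n_bits is close to s / r. limit_denominator returns the
    closest fraction with denominator <= modulus, the role continued fractions
    play in Shor's algorithm."""
    return Fraction(y, 2 ** n_bits).limit_denominator(modulus).denominator


def factors_from_period(a: int, r: int, modulus: int) -> Optional[tuple[int, int]]:
    """Classical post-processing: turn a period r of a**x mod N into factors.
    Returns None when this (a, r) pair is unlucky and the run must be repeated."""
    if r % 2 == 1 or pow(a, r, modulus) != 1:
        return None  # odd or incorrect period
    half = pow(a, r // 2, modulus)
    if half == modulus - 1:
        return None  # a**(r/2) = -1 (mod N) yields only trivial factors
    p = gcd(half - 1, modulus)
    if 1 < p < modulus:
        small, large = sorted((p, modulus // p))
        return small, large
    return None
```

For N = 15 and a = 7, the period is 4, and gcd(7² − 1, 15) = 3 recovers the factors 3 and 5. An outcome such as y = 128 on the 8-bit register yields the candidate r = 2, which fails the `pow` check and forces a rerun. This is why Shor’s algorithm is repeated several times in practice.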

- High-Performance Computing Enhancement: Beyond specific verticals, superconducting qubits might be integrated as accelerators in HPC centers to tackle specific tasks faster than CPU/GPU clusters. For example, quantum computers could help solve the linear systems of equations that arise in large-scale simulations via the Harrow-Hassidim-Lloyd algorithm. Its exponential speedup, however, holds only under strict conditions: a sparse, well-conditioned matrix, efficient input loading, and only summary statistics of the solution being read out. It also requires fault-tolerant hardware. Quantum processors could also accelerate specific hard sub-problems within the optimization loops of classical simulation codes. National labs are investigating hybrid algorithms in which a classical simulation delegates a particularly intractable sub-problem to a quantum processor, such as a quantum chemistry calculation of a molecule or a combinatorial optimization. In 2024, a 20-qubit superconducting quantum processor was integrated with the SuperMUC-NG supercomputer at the Leibniz Supercomputing Centre (LRZ) in Germany, one of the first such hybrid quantum-HPC platforms. The idea is to use the quantum processor, as a special-purpose co-processor, for the parts of a computation that may scale exponentially on classical hardware. This could open up new ways to simulate physical systems or process data, using quantum resources for what they do best and classical resources for the rest.
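The hybrid pattern described above, in which a classical loop repeatedly calls a quantum processor for a hard-to-compute quantity, is commonly realized as a variational quantum eigensolver (VQE). The sketch below is a deliberately tiny, hypothetical instance. It uses a one-qubit Hamiltonian H = A·Z + B·X and the single-parameter ansatz RY(θ)|0⟩. A function named `quantum_expectation` stands in for the hardware call and here computes the expectation exactly. The parameter-shift rule gives exact gradients for rotation-gate parameters from two extra circuit evaluations.

```python
import math

# Hypothetical one-qubit "Hamiltonian" H = A*Z + B*X (illustrative coefficients).
A, B = 1.0, 1.0


def quantum_expectation(theta: float) -> float:
    """Stand-in for the quantum co-processor call: <psi(theta)|H|psi(theta)> for
    |psi(theta)> = RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>.
    Computed exactly here; hardware would estimate it from measurement shots."""
    # For this ansatz, <Z> = cos(theta) and <X> = sin(theta).
    return A * math.cos(theta) + B * math.sin(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Exact derivative of the expectation using two shifted circuit runs."""
    shift = math.pi / 2
    return (quantum_expectation(theta + shift) - quantum_expectation(theta - shift)) / 2


def vqe(theta: float = 0.0, lr: float = 0.2, steps: int = 200) -> tuple[float, float]:
    """Classical outer loop (the HPC side): plain gradient descent on theta."""
    for _ in range(steps):
        theta -= lr * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


if __name__ == "__main__":
    theta, energy = vqe()
    print(f"theta = {theta:.6f}, energy = {energy:.6f}")
```

With A = B = 1, the loop converges to the exact ground energy −√2. In a real quantum-HPC deployment, each `quantum_expectation` call would be a batch of shots sent to the cryostat-side processor. The optimizer would run on the conventional nodes.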

In summary, near-term industry use cases for superconducting qubits revolve around quantum simulation (chemistry, physics), optimization (logistics, finance), and probabilistic computation/AI, along with continued exploration in cryptography and secure communications. Many of these applications are being pursued through pilot projects and research collaborations. Each use case is currently limited by the size and noise of available quantum processors, so much of the work is proof-of-concept, for example showing that an algorithm works on 5–10 qubits and analyzing how it might scale. The real payoff will come if and when a superconducting quantum computer with enough qubits and low enough error rates outperforms classical methods at one of these tasks. That would mark the point of “quantum advantage” for that application. For now, industry is investing in quantum R&D to be ready for that tipping point. Notably, even without full fault tolerance, some algorithms (especially in optimization and possibly machine learning) may show a heuristic advantage on NISQ devices, which companies are eager to harness. As superconducting hardware improves, these use cases will be continually tested and refined. This will help drive demand for better quantum machines and show which technical improvements (more qubits, better fidelity, or improved connectivity) matter most for real-world impact.

Broader Technological Impacts

The rise of superconducting quantum computers could have sweeping implications for high-performance computing, scientific discovery, and new computational modalities. Some of the broader impacts include:

- Augmenting High-Performance Computing (HPC): Quantum computers are not expected to replace classical supercomputers outright, but rather to complement them for specific tasks. In the coming years, we will likely see quantum accelerators integrated into HPC environments, as is already beginning at Jülich and LRZ in Germany and at RIKEN in Japan. In this quantum-HPC hybrid model, supercomputing centers incorporate racks with cryostats housing superconducting quantum processors alongside traditional CPU/GPU clusters. Workloads are then scheduled so that certain parts run on the quantum side. For example, a materials science simulation on an HPC system might call a quantum subroutine to calculate a molecule’s electronic structure more efficiently. This tight integration could significantly speed up computational research in fields like chemistry, fluid dynamics (if quantum linear solvers mature), and optimization-heavy simulations. IBM and others call this vision “quantum-centric supercomputing,” in which quantum and classical resources work in tandem on large problems. The impact on HPC could be analogous to the way GPUs changed HPC from the late 2000s onward, when specialized hardware massively accelerated tasks like machine learning and molecular dynamics. Similarly, quantum accelerators could give HPC centers genuinely new capabilities. Over time, if superconducting quantum technology continues to scale, major scientific projects (such as climate modeling, nuclear physics simulations, or cryptographic key searches) might use quantum co-processors to achieve results that would be impractical classically.
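For key searches specifically, the relevant quantum primitive is Grover’s algorithm, whose speedup is quadratic rather than exponential. Searching N possibilities takes about (π/4)√N oracle queries, compared with roughly N/2 on average for classical brute force. The toy simulation below shows the amplitude mechanics on a few qubits. It assumes a single marked item, and the function names are hypothetical.

```python
import math


def grover_iterations(n_items: int) -> int:
    """Near-optimal Grover iteration count for one marked item: floor(pi/4 * sqrt(N))."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


def grover_success_probability(n_qubits: int, marked: int) -> float:
    """Simulate Grover search over N = 2**n_qubits items with one marked item
    and return the probability of measuring the marked item."""
    n_items = 2 ** n_qubits
    amps = [1 / math.sqrt(n_items)] * n_items  # uniform superposition
    for _ in range(grover_iterations(n_items)):
        amps[marked] = -amps[marked]  # oracle: phase-flip the marked item
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]  # diffusion: inversion about the mean
    return amps[marked] ** 2
```

For a 128-bit key, `grover_iterations(2**128)` is about 1.4 × 10^19 sequential oracle calls. This is why a quadratic speedup alone is usually considered to halve, not break, the effective strength of symmetric keys.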

- Scientific Discovery and New Physics: Quantum computers allow scientists to experiment with quantum systems in ways that were previously only theoretical, which could lead to new discoveries in physics and other sciences. For instance, a superconducting quantum simulator can emulate complex quantum matter (high-temperature superconductors, topological phases, etc.), potentially revealing new phenomena or confirming hypotheses about quantum physics. Quantum simulations have already given insights into quantum phase transitions and entanglement structure in spin systems that are hard to obtain otherwise. Quantum computers also enable direct experimentation with concepts like entanglement entropy or quantum chaos on multi-qubit systems, deepening our understanding of quantum mechanics itself. Moreover, pushing superconducting qubits to their limits may uncover new physics in the materials or environments. Examples include understanding noise at ultralow temperatures, identifying previously unknown microscopic two-level-system defects, and observing many-body localization in a controlled setup. In essence, quantum computers are not just computers; they are highly tunable quantum experiments. Quantum computers could also be used to design other quantum devices, for example by simulating the behavior of complex nanomaterials or designing more efficient superconductors for the qubits themselves, creating a positive feedback loop of discovery. Another area is fundamental chemistry and biology. Quantum computers could help in understanding complex biomolecules (like proteins) by simulating their quantum interactions, potentially accelerating drug discovery or enzymatic research. The ability to handle exponentially large Hilbert spaces might let us solve certain differential equations or models in physics with unprecedented accuracy.
All told, superconducting qubits as a scientific tool could usher in new breakthroughs across chemistry, physics, and materials science by tackling problems out of reach for classical computation.
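Entanglement entropy, mentioned above as something quantum processors let experimenters probe directly, is easy to compute in the smallest case. This sketch uses a hypothetical helper restricted to pure two-qubit states. It forms the reduced density matrix of one qubit and returns its von Neumann entropy in bits. On hardware, the same quantity is estimated from measurements, for example via state tomography or randomized-measurement protocols, rather than read off the amplitudes.

```python
import math


def entanglement_entropy(psi: list[complex]) -> float:
    """Von Neumann entropy (in bits) of qubit A for a pure two-qubit state
    psi = [c00, c01, c10, c11], where the first index labels qubit A."""
    norm = math.sqrt(sum(abs(c) ** 2 for c in psi))
    c00, c01, c10, c11 = (c / norm for c in psi)
    # Reduced density matrix rho_A = Tr_B |psi><psi| (2x2 Hermitian, trace 1).
    rho00 = abs(c00) ** 2 + abs(c01) ** 2
    rho11 = abs(c10) ** 2 + abs(c11) ** 2
    rho01 = c00 * c10.conjugate() + c01 * c11.conjugate()
    # Eigenvalues of a unit-trace 2x2 Hermitian matrix: (1 +/- sqrt(1 - 4 det)) / 2.
    det = rho00 * rho11 - abs(rho01) ** 2
    disc = math.sqrt(max(0.0, 1 - 4 * det))
    eigenvalues = ((1 + disc) / 2, (1 - disc) / 2)
    # Skip (numerically) zero eigenvalues, since 0 * log2(0) is taken as 0.
    return max(0.0, -sum(lam * math.log2(lam) for lam in eigenvalues if lam > 1e-12))
```

A Bell state (|00⟩ + |11⟩)/√2 gives exactly 1 bit, the maximum for a qubit, while any product state such as |00⟩ or |++⟩ gives 0.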

- New Forms of Computation and Algorithms: The development of superconducting quantum computers also broadens our perspective on what “computation” means. It forces computer scientists to generalize concepts of algorithms and complexity to the quantum realm. This has produced new algorithmic techniques that sometimes even inspire classical ones: so-called quantum-inspired classical algorithms were created by mimicking quantum approaches. We are learning how to harness superposition and interference as computational resources, a paradigm distinct from classical parallelism. This could influence future computer architectures. For example, hybrid chips combining classical logic with small quantum logic units might emerge for specific tasks such as random number generation or entropy distillation. Quantum computing has also drawn attention to reversible computing and error correction in ways that may feed back into classical computing, for example through more efficient error-correcting codes or novel reversible logic designs that reduce heat dissipation.
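The reversible-logic idea can be made concrete with the Toffoli (controlled-controlled-NOT) gate. It is universal for classical reversible computation when ancilla bits are allowed, and it is also a standard quantum gate. An ordinary AND erases information, since its two input bits cannot be recovered from its single output bit. Landauer’s principle ties such erasure to a minimum heat cost of kT ln 2 per bit. The sketch below, with illustrative function names, embeds AND into a bijection on three bits so that nothing is erased.

```python
from itertools import product


def toffoli(a: int, b: int, c: int) -> tuple[int, int, int]:
    """Controlled-controlled-NOT: flip target bit c iff both controls a and b are 1."""
    return a, b, c ^ (a & b)


def reversible_and(a: int, b: int) -> tuple[int, int, int]:
    """Compute a AND b reversibly by targeting a fresh ancilla bit set to 0.
    The inputs are carried through unchanged, so no information is erased."""
    return toffoli(a, b, 0)


# The gate permutes the 8 three-bit inputs (distinct inputs give distinct outputs),
# and applying it twice restores the input, so it is its own inverse.
ALL_INPUTS = list(product((0, 1), repeat=3))
assert sorted(toffoli(*bits) for bits in ALL_INPUTS) == sorted(ALL_INPUTS)
assert all(toffoli(*toffoli(*bits)) == bits for bits in ALL_INPUTS)
```

The third output of `reversible_and` holds the AND result, while the first two outputs preserve the inputs. That preserved information is exactly what an irreversible AND gate throws away.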

- Quantum Information Science Advancements: The push to build superconducting qubit systems has accelerated progress in related technologies like cryogenics, precision electronics, and microwave engineering. Innovations in these areas can spill over to other industries. For instance, developing cryogenic controllers for qubits has parallels in creating ultra-low-noise amplifiers and sensors, which could benefit radio astronomy or deep-space communication. The techniques for minimizing noise and cross-talk in quantum chips can improve high-frequency PCB design and electronic packaging in general. Moreover, quantum computing R&D has trained a generation of scientists and engineers in interdisciplinary problem-solving (mixing physics, CS, and engineering) – their expertise often finds applications in other cutting-edge tech sectors.