Quantum Computing Modalities: Quantum Annealing (QA) & Adiabatic QC (AQC)


(For other quantum computing modalities and architectures, see Taxonomy of Quantum Computing: Modalities & Architectures)

What It Is

Quantum annealing (QA) and adiabatic quantum computing (AQC) are closely related modalities that use gradual quantum evolution to solve problems. In both approaches, a problem is encoded into a landscape of energy states (a quantum Hamiltonian), and the system is guided to its lowest-energy state, which corresponds to the optimal solution. QA and AQC rely on the quantum adiabatic theorem: if a system’s Hamiltonian is changed slowly enough and without outside disturbance, a system that starts in its ground (lowest-energy) state will remain in the instantaneous ground state. By starting from a known ground state and evolving to a Hamiltonian that encodes a computational problem, the solution can be “read out” as the final state of the system.
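In symbols, one common way to write this interpolation is shown below. It is an illustrative sketch that assumes a linear schedule, a transverse-field driver H_0, and an Ising-form problem Hamiltonian H_P with local fields h_i and couplings J_ij; physical annealers instead use nonlinear schedule functions, usually written A(s) and B(s). The last line is the usual heuristic statement of the adiabatic condition, where |0(s)⟩ and |1(s)⟩ are the instantaneous ground and first excited states, Δ(s) is the energy gap between them, and ħ = 1.

$$
H(s) = (1-s)\,H_0 + s\,H_P, \qquad s = t/T \in [0, 1]
$$

$$
H_0 = -\sum_i \sigma^x_i, \qquad H_P = \sum_i h_i\,\sigma^z_i + \sum_{i<j} J_{ij}\,\sigma^z_i \sigma^z_j
$$

$$
T \gg \max_{s \in [0,1]} \frac{\left|\langle 1(s)|\,\partial_s H(s)\,|0(s)\rangle\right|}{\Delta(s)^2}
$$

Because the numerator is typically of order one, this reduces to the rule of thumb that the run time must grow like the inverse square of the smallest gap encountered along the path.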

These two modalities are often grouped together because QA can be viewed as a practical implementation or subset of the adiabatic approach. Adiabatic quantum computing is a universal model of quantum computation – it has been proven polynomially equivalent in power to the standard gate-based model (in principle). QA, on the other hand, usually refers to methods and devices (like D-Wave’s quantum processors) that perform this slow-evolution approach specifically for optimization problems. In essence, QA is the “real-world” version of AQC, applying the same fundamental idea under less ideal conditions to tackle tasks like finding the minimum of a complex function. Both QA and AQC involve harnessing quantum mechanics (such as superposition and tunneling) to navigate complex solution spaces, setting them apart from classical algorithms and making them a distinct category within quantum computing.

Commonalities

QA and AQC share a number of core characteristics, stemming from their similar foundations in quantum physics and optimization. Key commonalities include:

- Adiabatic Quantum Evolution: Both modalities operate by slowly evolving a quantum system’s Hamiltonian from an initial simple form to a final form that represents the problem. According to the adiabatic theorem, if this evolution is sufficiently slow and the system is isolated, it will remain in the ground state of the changing Hamiltonian. This gradual, continuous evolution is the basis for how both QA and AQC carry out computation (as opposed to the discrete sequence of gates in other models).

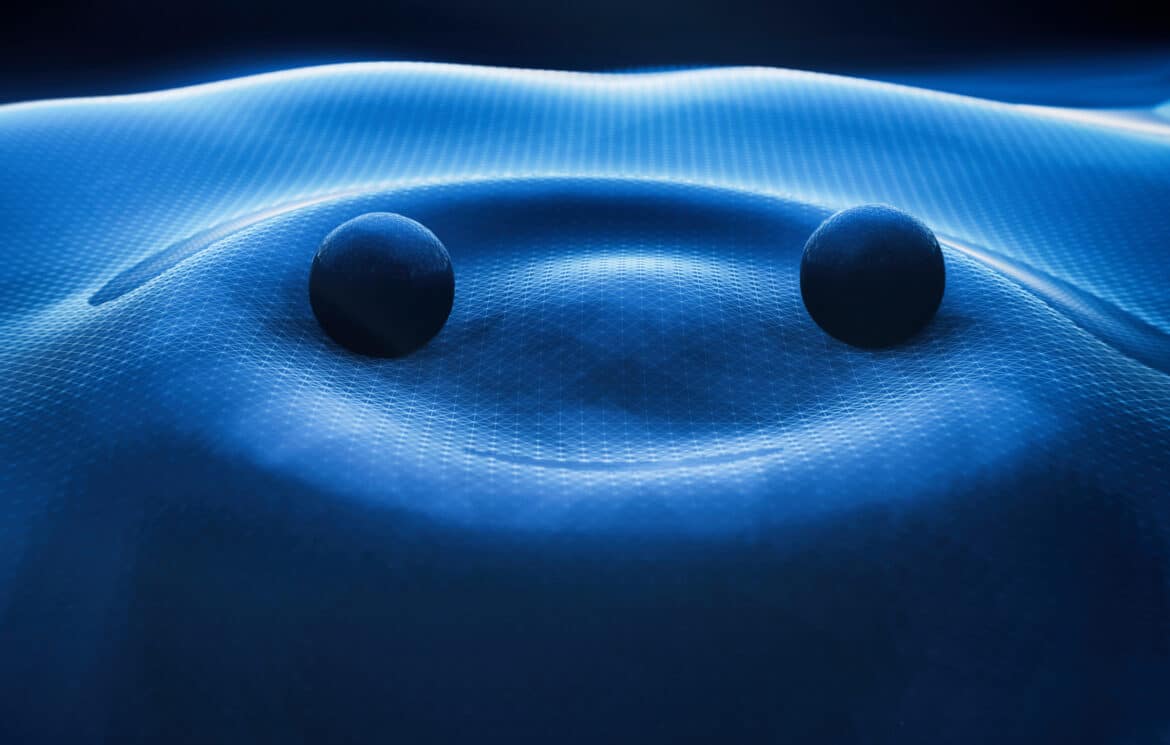

- Energy Landscapes and Ground-State Search: In QA/AQC, problems are encoded in terms of an energy landscape, where each possible solution corresponds to a state with a certain energy. The goal is to find the lowest-energy state (global minimum), which represents the optimal solution. Both approaches harness the natural tendency of quantum systems to seek low-energy states. Essentially, “solving the problem” means guiding the system into the deepest valley of this energy landscape. This makes QA and AQC inherently suited for optimization problems – for example, finding the minimum of a cost function or the best configuration of an NP-hard problem can be translated into finding a ground state of a suitable Hamiltonian.

- Use of Quantum Fluctuations: Both methods exploit quantum effects to explore the solution space. In QA and AQC, the initial Hamiltonian is usually chosen to be something simple (like a strong transverse field) that puts qubits into a superposition of states. Quantum fluctuations (induced by the initial Hamiltonian) help the system tunnel through energy barriers in the landscape, potentially escaping local minima more effectively than classical thermal fluctuations. This is analogous to how classical simulated annealing uses thermal energy to hop over barriers, except QA/AQC use quantum tunneling to find lower-energy states in the landscape.

- Reliance on the Adiabatic Theorem: The success of both QA and AQC is grounded in the adiabatic theorem. If the interpolation from the initial Hamiltonian to the problem Hamiltonian is done slowly enough, the quantum system should, in theory, end up in the ground state of the final Hamiltonian. This shared reliance means both approaches must contend with the same core condition: to avoid transitions to excited states, the total evolution time T must be large compared with the inverse square of the minimum energy gap Δ_min between the ground and first excited states (roughly T ≫ 1/Δ_min²). In practice, this leads to similar considerations for run-time and problem hardness for QA and AQC: problems with very small energy gaps during the evolution are hard for both, since one must anneal extremely slowly to remain adiabatic.
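To make the slow-versus-fast trade-off concrete, here is a toy single-qubit simulation in plain Python. It is a sketch under stated assumptions, not a model of any real device: it assumes the linear schedule H(s) = -(1-s)X - sZ (ħ = 1), whose ground state moves from |+⟩ to |0⟩, and integrates the Schrödinger equation by applying the exact 2×2 matrix exponential of H at the midpoint of each time slice. The function names `evolve_step` and `anneal_fidelity` are illustrative.

```python
import math


def evolve_step(psi, a, b, dt):
    """Apply exp(-i * dt * (a*X + b*Z)) exactly to the 2-component state psi (hbar = 1)."""
    r = math.hypot(a, b)                  # eigenvalues of a*X + b*Z are +/- r
    cth, sth = math.cos(r * dt), math.sin(r * dt)
    nx, nz = a / r, b / r                 # unit vector n, so a*X + b*Z = r * (n . sigma)
    p0, p1 = psi
    # (n . sigma) psi with X = [[0, 1], [1, 0]] and Z = [[1, 0], [0, -1]]
    q0 = nz * p0 + nx * p1
    q1 = nx * p0 - nz * p1
    # exp(-i*theta*(n . sigma)) = cos(theta) I - i sin(theta) (n . sigma)
    return (cth * p0 - 1j * sth * q0, cth * p1 - 1j * sth * q1)


def anneal_fidelity(total_time, steps=2000):
    """Evolve under H(s) = -(1 - s) X - s Z with s = t / total_time and return the
    probability of ending in |0>, the ground state of the final Hamiltonian -Z."""
    psi = (1 / math.sqrt(2), 1 / math.sqrt(2))  # |+>, the ground state of -X
    dt = total_time / steps
    for k in range(steps):
        s = (k + 0.5) / steps                   # midpoint of the k-th time slice
        psi = evolve_step(psi, -(1 - s), -s, dt)
    return abs(psi[0]) ** 2


if __name__ == "__main__":
    for T in (0.01, 1.0, 50.0):
        print(f"T = {T:6.2f}  ground-state probability = {anneal_fidelity(T):.4f}")
```

With a long total time the final state overlaps almost perfectly with the ground state, while in the near-sudden limit the state stays close to |+⟩ and the success probability is about 0.5. This toy's minimum gap is large (√2, at s = 0.5), so modest run times suffice; a problem whose gap shrinks exponentially with size would need exponentially longer T.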

Given these common features, QA and AQC are often discussed together as adiabatic techniques. Both essentially perform computation by “allowing physics to do the work” – they set up a physical system that, when it evolves naturally (quantum mechanically) into its lowest-energy configuration, yields the answer to a computational question. This natural alignment with optimization has made them attractive for solving complex combinatorial problems, and it explains why QA is sometimes viewed as a specialized practical form of adiabatic quantum computing.

Differences and Boundaries

Despite their strong similarities, there are important differences between quantum annealing and adiabatic quantum computing, especially in how the terms are used in theory versus practice. These differences include implementation details, tolerance to noise, and generality of their computational power:

- Control Mechanisms and Implementation: Adiabatic quantum computing is defined as an idealized model in which the Hamiltonian is varied slowly enough, and controlled precisely enough, that the evolution remains adiabatic throughout. In principle, AQC can be used to perform any computation that a gate-model quantum computer can, by encoding the problem into a suitable Hamiltonian and evolving adiabatically. Quantum annealing, by contrast, refers to more practical algorithms and devices that follow the same spirit but do not strictly require perfect adiabatic evolution or universality. For example, D-Wave’s machines implement QA to solve specific optimization problems (Ising-model or QUBO formulations) using a predefined annealing schedule that users can adjust only within hardware limits. They do not offer arbitrary Hamiltonians or quantum operations, meaning they are not universal quantum computers. In other words, AQC is a general computational model, whereas QA devices and algorithms are usually specialized for particular problem types and often allow non-adiabatic processes. One analysis succinctly noted: “Quantum annealing (QA) is a framework… to solve computational problems via quantum evolution towards ground states… without necessarily insisting on universality or adiabaticity”. This situates QA in a middle ground between the ideal AQC and the realities of hardware constraints.

- Noise Resilience and Operating Conditions: AQC typically assumes a closed quantum system with no external interference (often thought of at effectively zero temperature). Under those conditions, if run slowly enough, the algorithm should succeed with high probability. QA, on the other hand, is generally discussed in the context of real, physical hardware that operates at low but finite temperatures and inevitably interacts with its environment. This means QA systems must contend with decoherence and thermal excitations that can knock the system out of the ground state during the evolution. Strictly speaking, a perfectly adiabatic process requires complete isolation from external energy disturbances and arbitrarily slow change, an ideal that real devices can only approximate. Because no real-world quantum computer is perfectly isolated, QA is often called the “real-world counterpart” of ideal adiabatic quantum computing. QA devices such as D-Wave’s are designed to mitigate noise (they operate in dilution refrigerators at about 0.01 kelvin to suppress thermal excitations), but some level of noise and non-adiabatic transitions is unavoidable. QA is therefore tolerant by design: it still returns a low-energy answer even when it misses the absolute ground state, but it does not always find the true optimum, because perturbations can leave the system in an excited state. In short, AQC is an idealized noiseless limit, whereas QA operates amid noise and must balance speed against fidelity: too fast an anneal causes non-adiabatic transitions, while too much environmental coupling over the course of the anneal introduces thermal and decoherence errors.

- Theoretical Universality and Computational Power: Adiabatic quantum computing has a strong theoretical footing: it has been proven to be polynomially equivalent to the standard circuit (gate) model of quantum computing. This means that any problem solvable by a gate-model quantum computer can, in theory, be solved by AQC with at most a polynomial slowdown, provided one can construct the appropriate Hamiltonian and run the evolution long enough. QA, by contrast, is usually not considered universal by itself. Quantum annealers currently realize a limited class of Hamiltonians (typically involving pairwise qubit interactions and stoquastic drivers, as in the transverse-field Ising model). These are well suited to optimization problems, but they cannot directly implement arbitrary computations or the complex entangling operations required for universal quantum algorithms. In fact, today’s QA devices use stoquastic Hamiltonians, meaning that in the computational basis all off-diagonal matrix elements are real and non-positive. As a consequence, the ground state can be chosen to have non-negative amplitudes (like a classical probability distribution), so quantum Monte Carlo simulations of these Hamiltonians do not suffer from the “sign problem”, which often, though not always, lets classical algorithms simulate their behavior efficiently. As a result, it’s suspected that such restricted QA on its own cannot perform tasks that far exceed classical capabilities in the worst case. A fully universal AQC would require more general (non-stoquastic) Hamiltonians or sequences of Hamiltonians that current annealers don’t support. Thus, one key boundary is: AQC is a broad model capable of universal quantum computation in theory, whereas QA implementations are narrower. They excel at specific optimization tasks but do not natively run Shor’s algorithm for factoring or other non-optimization quantum algorithms.
To illustrate, experts have pointed out that D-Wave’s QA machine is not a “universal” annealer: it cannot reach the regime of perfect adiabatic evolution at zero temperature. Even that regime would not suffice on its own, since universal AQC also requires non-stoquastic (or otherwise more general) Hamiltonians; in reality, the machine is designed for a special subset of problems under certain conditions.
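Because annealers accept problems either as an Ising model (spins s_i in {-1, +1}) or as a QUBO (binary x_i in {0, 1}), it helps to see that both describe the same energy landscape under the substitution x_i = (1 + s_i)/2. The plain-Python sketch below performs that conversion and brute-forces the landscape of a tiny example. The dictionary layout and function names are illustrative assumptions; D-Wave's dimod package ships its own `qubo_to_ising` utility, which this code does not call.

```python
from itertools import product


def qubo_to_ising(Q):
    """Convert a QUBO {(i, j): weight} over x in {0, 1} into Ising (h, J, offset)
    over spins s in {-1, +1}, using the substitution x = (1 + s) / 2."""
    h, J, offset = {}, {}, 0.0
    for (i, j), q in Q.items():
        if i == j:
            # q * x_i = q/2 + (q/2) * s_i   (x_i**2 == x_i for binary x)
            h[i] = h.get(i, 0.0) + q / 2
            offset += q / 2
        else:
            # q * x_i * x_j = (q/4) * (1 + s_i + s_j + s_i * s_j)
            key = (min(i, j), max(i, j))
            J[key] = J.get(key, 0.0) + q / 4
            h[i] = h.get(i, 0.0) + q / 4
            h[j] = h.get(j, 0.0) + q / 4
            offset += q / 4
    return h, J, offset


def qubo_energy(Q, x):
    return sum(q * x[i] * x[j] for (i, j), q in Q.items())


def ising_energy(h, J, offset, s):
    return (offset
            + sum(v * s[i] for i, v in h.items())
            + sum(v * s[i] * s[j] for (i, j), v in J.items()))


def brute_force_ground_states(h, J, offset, n):
    """Enumerate all 2**n spin configurations (only feasible for tiny n)."""
    energies = {s: ising_energy(h, J, offset, s) for s in product((-1, 1), repeat=n)}
    e_min = min(energies.values())
    return e_min, [s for s, e in energies.items() if e == e_min]


if __name__ == "__main__":
    # Toy QUBO: reward each variable being 1, but penalize both being 1 at once
    Q = {(0, 0): -1.0, (1, 1): -1.0, (0, 1): 2.0}
    h, J, offset = qubo_to_ising(Q)
    print(h, J, offset)                               # {0: 0.0, 1: 0.0} {(0, 1): 0.5} -0.5
    print(brute_force_ground_states(h, J, offset, 2))  # (-1.0, [(-1, 1), (1, -1)])
```

The two degenerate ground states correspond to "exactly one variable set", the optimum of the original QUBO. Brute-force enumeration doubles in cost with every added variable, which is why heuristics such as annealing are used at realistic sizes.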

In summary, QA and AQC differ in scope (special-purpose vs. general), conditions (open, noisy system vs. ideal closed system), and control (predefined analog schedule vs. notional ability to design any adiabatic path). QA is often seen as a heuristic or practical approach under the AQC umbrella, with deliberate compromises (like tolerating some non-adiabaticity and restriction to certain Hamiltonians) to make it realizable on current technology. AQC provides the theoretical framework and goalpost (what would happen with perfect adiabatic evolution), while QA is what we can achieve with today’s devices and algorithms, often supplemented with techniques to handle the deviations from the ideal.

Comparison to Other Quantum Computing Models

Both QA and AQC contrast in significant ways with other models of computation, including the standard gate-model quantum computer, classical “digital annealing” systems, and hybrid approaches. Below is a comparison of how QA/AQC stack up against these:

- Gate-Based Quantum Computing (Circuit Model): The conventional model of quantum computing uses qubits manipulated by discrete logic gates (unitary operations) in a circuit. Gate-model machines (like those built by IBM, Google, etc.) operate via sequences of precise pulses, and in principle they can perform any quantum algorithm. Adiabatic quantum computing has been proven theoretically equivalent in computational power to the gate model: any gate circuit can be mapped to an adiabatic process and vice versa, with only polynomial overhead. However, the approach is very different. QA/AQC perform computations through an analog process: the entire system’s Hamiltonian is continuously changed, and the result emerges from the physics of the system’s evolution. In contrast, gate-model computers are digital in the sense of applying discrete gates in a sequence. This leads to practical differences. Gate-model devices currently have far fewer qubits (typically tens to hundreds, and large-scale algorithms will need error correction), but each qubit can be reliably manipulated through many operations. QA devices have much larger numbers of qubits (D-Wave’s Advantage system has 5000+ qubits), but those qubits are analog, operating collectively rather than in long logical sequences. Gate-model quantum computers can tackle a broad range of algorithms (quantum simulation, search, and algebraic problems like Shor’s algorithm for factoring) that are not easily formulated as energy minimization. QA and AQC are naturally geared towards combinatorial optimization and sampling tasks. Another key difference is error correction: gate-model quantum computing has a framework for quantum error correction and fault tolerance (at least in theory and increasingly in practice), whereas adiabatic methods currently lack a comparable, practical error-correction scheme.
This makes today’s gate-model machines more accurate on small-scale algorithms, while annealers can leverage sheer qubit count for certain problems (at the cost of solution quality). In summary, QA/AQC and gate-model quantum computing are complementary: the former trades fine-grained control for an analog “whole system” evolution, and excels at optimization tasks; the latter provides universal programmability and is marching toward error-corrected large-scale computation, which will excel at a wider array of tasks if and when technology permits.

- Digital Annealing (Quantum-Inspired Classical Annealers): Digital annealing refers to classical algorithms or hardware that mimic the annealing process without using quantum effects. Companies like Fujitsu have built “digital annealer” chips that are inspired by quantum annealing but run on classical electronics. These systems use techniques like simulated annealing or other heuristics implemented in specialized hardware to solve optimization problems. For instance, Fujitsu’s Digital Annealer is a CMOS-based accelerator whose first generation handled fully connected quadratic optimization (QUBO) problems of up to 1024 variables, using a parallelized simulated annealing algorithm with mechanisms for escaping local minima. Comparatively, QA uses quantum tunneling and superposition to search for solutions, while digital annealers rely on classical random fluctuations and bit-flip moves. One advantage of digital annealers is that they don’t require cryogenics or extreme isolation: they run at normal conditions and can often scale to larger problem sizes (in terms of variables) because they are not limited by quantum coherence. They can also directly support fully connected problems in hardware (as the Fujitsu example shows), whereas current quantum annealers have sparse connectivity and require embedding for dense problems. On the other hand, digital annealers lack the unique quantum ability to tunnel through tall, narrow energy barriers, so they might get stuck in local minima that a quantum annealer could, in principle, tunnel through. In practice, the performance comparison between quantum annealers and digital annealing is an active area of study. Some research has indicated that even when a problem fits on the hardware without heavy embedding, current quantum annealers have not yet demonstrated clear superiority over the best classical algorithms.
In fact, the need to embed fully connected problems onto the limited connectivity of QA hardware can put analog quantum annealers at a disadvantage relative to specialized classical solvers on those problems. Digital annealing serves as a benchmark and complementary approach: it shows what can be achieved by classical means on the same type of problems. Any quantum speedup must outperform these advanced classical strategies. Many view digital annealers as “quantum-inspired” tools – useful for near-term applications while quantum hardware continues to advance.

- Hybrid Quantum-Classical Approaches: Rather than viewing quantum and classical strategies as mutually exclusive, hybrid approaches seek to combine them. In the gate-model realm, hybrid algorithms like QAOA (Quantum Approximate Optimization Algorithm) and VQE (Variational Quantum Eigensolver) use a classical computer to optimize parameters of a quantum circuit. Similarly, in the QA/AQC context, hybrid methods involve using classical processing alongside the quantum annealer to overcome its limitations. A prominent example is D-Wave’s family of hybrid solvers, which integrate classical algorithms with quantum annealing to solve larger or more complex problems than the quantum processor could handle alone. The idea is to exploit the “complementary strengths” of each: the quantum annealer is very good at exploring certain energy landscapes quickly and finding good low-energy configurations, while classical methods are better at exact logical operations, handling constraints, or preprocessing and postprocessing solutions. In practice, a hybrid workflow might break a big problem into pieces that fit on the quantum annealer, use the annealer to solve or sample from those pieces, and then stitch together or improve the overall solution classically. D-Wave’s Ocean software and cloud services support this by providing tools that delegate appropriate parts of the computation to classical solvers (which, for instance, don’t require the problem to be minor-embedded onto the hardware connectivity graph). These classical components can handle interactions or constraints that the quantum hardware cannot directly implement. The result is an iterative loop between quantum and classical processing: e.g., the system might use classical heuristics to initialize a good starting solution, then quantum annealing to refine it (or vice versa). This hybrid strategy is increasingly important in industry, as it can yield better performance than using either quantum or classical resources alone.
It acknowledges that current QA devices have limits in size and noise, so the classical side carries some of the load. In comparison to pure gate-model algorithms, QA hybrids are somewhat analogous to how one might use a quantum accelerator for a specific subroutine within a larger classical algorithm. In fact, one vision for the future is to have both annealing and gate-model quantum processors working in concert with classical HPC, each tackling the aspects of a problem they are best suited for. D-Wave itself has emphasized that a combination of annealing, gate-model, and classical computing may be what’s needed for most real-world users to get value from quantum computing. Hybrid approaches thus form a bridge between the analog world of QA/AQC and the digital world of classical and gate-model computing.
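For a concrete picture of the classical baseline these comparisons refer to, below is textbook Metropolis simulated annealing on an Ising model in plain Python. It is not Fujitsu's Digital Annealer algorithm (its parallel-trial and escape mechanisms are not reproduced here); the function names, the geometric cooling schedule, and its default temperatures are illustrative choices. Thermal fluctuations let the search climb over barriers with Boltzmann probability exp(-ΔE/T), the classical counterpart of the quantum tunneling discussed above.

```python
import math
import random


def ising_energy(h, J, s):
    """E(s) = sum_i h_i s_i + sum_{(i,j)} J_ij s_i s_j for spins s_i in {-1, +1}."""
    return (sum(hi * si for hi, si in zip(h, s))
            + sum(v * s[i] * s[j] for (i, j), v in J.items()))


def simulated_annealing(h, J, sweeps=1000, t_start=5.0, t_end=0.01, seed=0):
    """Metropolis single-spin-flip simulated annealing with geometric cooling.

    h: list of local fields; J: dict {(i, j): coupling}. Returns the lowest-energy
    configuration seen and its energy. A classical heuristic with no optimality guarantee.
    """
    rng = random.Random(seed)
    n = len(h)
    nbrs = [[] for _ in range(n)]            # adjacency lists for O(degree) updates
    for (i, j), v in J.items():
        nbrs[i].append((j, v))
        nbrs[j].append((i, v))
    s = [rng.choice((-1, 1)) for _ in range(n)]
    energy = ising_energy(h, J, s)
    best_s, best_e = list(s), energy
    for k in range(sweeps):
        t = t_start * (t_end / t_start) ** (k / max(sweeps - 1, 1))  # temperature this sweep
        for i in range(n):
            local = h[i] + sum(v * s[j] for j, v in nbrs[i])
            delta = -2 * s[i] * local         # energy change if spin i flips
            # Always accept downhill moves; accept uphill moves with Boltzmann probability
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                s[i] = -s[i]
                energy += delta
                if energy < best_e:
                    best_s, best_e = list(s), energy
    return best_s, best_e


if __name__ == "__main__":
    # Ferromagnetic 6-spin ring with a weak field favoring +1; ground state is all +1
    n = 6
    h = [-0.1] * n
    J = {(i, (i + 1) % n): -1.0 for i in range(n)}
    print(simulated_annealing(h, J, seed=1))
```

On this easy, frustration-free ring the search finds the all-+1 ground state; on rugged landscapes with tall barriers it can stall in local minima, which is the regime where quantum tunneling is hoped to help.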

Current Research and Industry Interest

Both quantum annealing and adiabatic quantum computing continue to be vibrant areas of research and development, in academia and industry alike.

On the research front, scientists are delving into several key questions: How well can QA/AQC actually perform on hard problems, and what mechanisms might give them an advantage over classical methods? There is ongoing research into the role of noise and decoherence in quantum annealers, for example understanding to what extent thermal excitations during an anneal hinder finding the ground state, and how techniques like error suppression or mid-anneal pauses might improve results. Researchers are also investigating algorithmic improvements such as optimized annealing schedules (slowing the evolution near small gaps and speeding it up elsewhere) and reverse annealing, where the qubits are initialized in a candidate solution, the anneal is partially reversed to reintroduce quantum fluctuations, and the system is then annealed forward again to refine that solution. Another active area is exploring non-stoquastic Hamiltonians and more complex quantum driving terms. As mentioned, current devices use stoquastic drivers (e.g., a transverse field); adding non-stoquastic terms (positive off-diagonal elements, so ground-state amplitudes can no longer all be chosen non-negative) could potentially allow sampling of more complex quantum states that are hard for classical algorithms to simulate. This is considered a possible route toward a quantum speedup with annealing. There’s also theoretical work examining the boundaries of the adiabatic theorem, for instance studying diabatic quantum computing, where one deliberately runs faster than the adiabatic limit and exploits interference to still reach the solution, an idea closely related to so-called shortcuts to adiabaticity. All these efforts aim to broaden the understanding and capabilities of QA/AQC. Notably, a comprehensive review by Albash and Lidar (2018) summarizes many of the theoretical developments in AQC, including its algorithmic achievements and limitations, and discusses how stoquastic annealing fits into complexity theory.
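Annealing schedules such as mid-anneal pauses and reverse anneals are commonly specified as piecewise-linear lists of (time, s) points, where s is the fraction of the anneal completed. D-Wave's QPU solvers, for example, accept such a list through their `anneal_schedule` parameter, with reverse annealing also taking an `initial_state`. The plain-Python helpers below only build, sanity-check, and interpolate such point lists; they do not call any vendor API, their names are hypothetical, and real solvers impose further limits (number of points, maximum slope, total duration) that should be checked in the solver documentation.

```python
def pause_schedule(t_pause, pause_len, s_pause, t_total):
    """Forward anneal 0 -> s_pause, hold for pause_len, then ramp to s = 1 at t_total.
    Points are (time, s); times are assumed to be in microseconds."""
    return [(0.0, 0.0), (t_pause, s_pause), (t_pause + pause_len, s_pause), (t_total, 1.0)]


def reverse_schedule(t_down, s_target, t_hold, t_up):
    """Reverse anneal: start at s = 1 (from a supplied candidate state), back off to
    s_target, hold there, then return to s = 1."""
    t1 = t_down + t_hold
    return [(0.0, 1.0), (t_down, s_target), (t1, s_target), (t1 + t_up, 1.0)]


def validate_schedule(schedule, reverse=False):
    """Basic sanity checks only; real solvers impose additional constraints."""
    times = [t for t, _ in schedule]
    if any(t1 <= t0 for t0, t1 in zip(times, times[1:])):
        raise ValueError("times must strictly increase")
    if any(not 0.0 <= s <= 1.0 for _, s in schedule):
        raise ValueError("s must lie in [0, 1]")
    if schedule[0] != (0.0, 1.0 if reverse else 0.0) or schedule[-1][1] != 1.0:
        raise ValueError("forward starts at s = 0, reverse at s = 1; both end at s = 1")


def s_at(schedule, t):
    """Piecewise-linear annealing fraction s(t) between consecutive schedule points."""
    for (t0, s0), (t1, s1) in zip(schedule, schedule[1:]):
        if t0 <= t <= t1:
            return s0 + (s1 - s0) * (t - t0) / (t1 - t0)
    raise ValueError("t lies outside the schedule")


if __name__ == "__main__":
    sched = pause_schedule(10.0, 100.0, 0.45, 120.0)
    validate_schedule(sched)
    print([round(s_at(sched, t), 3) for t in (0.0, 5.0, 60.0, 115.0, 120.0)])
    rev = reverse_schedule(5.0, 0.6, 20.0, 5.0)
    validate_schedule(rev, reverse=True)
    print(rev)
```

The pause holds s fixed where the gap is thought to be small, giving the system time to thermalize, while the reverse schedule never drops below s_target, so the search stays near the supplied candidate solution.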

In terms of industry and commercial development, the clear leader in quantum annealing is D-Wave Systems, a company that has been building superconducting flux-qubit annealers for over a decade. D-Wave’s systems have steadily grown in size: their latest machine, named Advantage, contains over 5,000 qubits arranged in the Pegasus topology. (Each qubit is connected to 15 others in Pegasus, a significant jump from the 6 connections per qubit in the earlier Chimera topology, which helps embed larger problems.) This makes D-Wave’s annealers the largest-scale quantum processors in the world by qubit count; as one researcher noted, the Advantage system is “the largest scale programmable quantum information processor currently available”. These machines are accessible through cloud services (like D-Wave’s Leap) and are being used in experimental applications ranging from traffic flow optimization and scheduling to materials science and machine learning. Organizations such as Lockheed Martin and NASA were early adopters: the USC-Lockheed Martin Quantum Computing Center was established in 2011 with a D-Wave system, and in 2013 Google and NASA jointly acquired a D-Wave system to form the Quantum Artificial Intelligence Lab. This early interest was driven by the potential of QA to tackle combinatorial optimization problems relevant to these organizations (e.g., air traffic management, mission scheduling, or machine learning model tuning).

Beyond D-Wave, there is a growing ecosystem of startups and research labs focusing on QA/AQC or hybrid optimization solutions. For example, companies like 1QBit and QC Ware have been developing software tools and algorithms to make use of quantum annealers for real-world problems, often in finance, scheduling, or drug discovery. These firms typically take a hybrid approach, creating workflows that integrate classical solvers with calls to a quantum annealer for the parts where it might give an edge. Another notable effort is by Fujitsu, which, while not building quantum hardware, created the Digital Annealer as a quantum-inspired solution for similar optimization tasks, underscoring industry appetite for the capabilities that QA promises, even via classical means.

Meanwhile, some major quantum computing players predominantly focused on gate-model QCs are also keeping an eye on annealing. For instance, research groups at Google have published work on emulating annealing processes on gate-model devices, either by breaking the continuous evolution into discrete gate steps (so-called “digitized” adiabatic quantum computing, not to be confused with the classical digital annealers described above) or by using algorithms like QAOA that mimic annealing. Moreover, D-Wave itself has signaled a broadening of scope: in addition to continuing improvements to its annealing technology (it has a roadmap for a next-generation Advantage2 system with higher connectivity and coherence), D-Wave announced plans to develop a gate-model quantum computer of its own. Its vision is that customers will benefit from both types of quantum computers, possibly used together. This is a significant development because it shows that even the company most associated with QA sees value in the gate model and in hybridizing different quantum modalities.

Academic research on QA/AQC remains very active, with annual workshops and conferences (like the Adiabatic Quantum Computing conferences) and a steady output of papers examining everything from quantum phase transitions in annealing to new qubit designs for better coherence. Universities and national labs are partnering with companies to get access to annealers for research – the example of USC hosting an Advantage system is one case where academic researchers like Daniel Lidar are studying “coherent quantum annealing to achieve quantum speedups in quantum simulation, optimization, and machine learning” using the latest hardware. There is also interest in using QA for quantum simulations (i.e., using an annealer to mimic the behavior of quantum materials or molecules, by encoding their Hamiltonians – essentially turning QA into a quantum simulator).

In summary, QA and AQC attract a lot of attention because they offer a different path to quantum computation that is potentially more scalable in qubit count in the near term. Industry players (led by D-Wave and followed by a host of startups and collaborators) are pushing the technology to solve practical problems, often via quantum-cloud services and hybrid computing frameworks. Academic interest ensures that the theoretical underpinnings continue to be refined – for instance, understanding exactly when an annealer might outperform classical algorithms, or how to mitigate noise and errors. This joint push by industry and academia is gradually clarifying the promise and realistic capabilities of quantum annealing and adiabatic computing.

Challenges and Limitations

While QA and AQC are promising, they face several significant challenges and limitations that researchers and engineers are actively working to address:

- Noise, Decoherence, and Finite Temperature: Both QA and AQC ideally require quantum coherence to be maintained throughout the evolution. In practice, environmental noise (e.g. interactions with surrounding materials, stray fields) and finite operating temperatures can cause qubits to decohere or thermally excite out of the ground state. If a system jumps to an excited state during the anneal (due to a thermal photon or noise perturbation), it may end up in a higher-energy state at the end, yielding a suboptimal solution. Current quantum annealers mitigate this by operating at extreme low temperatures (~15 millikelvin) and using shielding to reduce noise, but they are still open quantum systems to some extent. This inherent sensitivity means results are probabilistic – one must often run the annealer many times to find a good solution or ensure high probability of the ground state. Moreover, unlike the circuit model, there is no known efficient error correction scheme for adiabatic quantum computation that can correct errors on the fly. Without a method for fault-tolerance, any noise that enters the system can accumulate or irreversibly disturb the computation. The lack of proven fault-tolerance in AQC/QA is a fundamental challenge; as one report put it, “while fault tolerance is theoretically attainable in the circuit model, nobody yet knows how to achieve it in AQC”. This makes scaling to very long or complex adiabatic computations problematic – the larger and longer the process, the more opportunity for error. Robust error suppression techniques (like energy gap amplification or penalty terms) are being studied, but truly error-corrected QA/AQC remains an open issue.

- Encoding and Minor-Embedding Overhead: Real-world optimization problems often don’t directly match the hardware graph of a quantum annealer. D-Wave’s qubits, for example, are connected in a particular graph (Pegasus or previously Chimera), which is sparse. If a problem requires a connection between two variables that doesn’t exist on the hardware, one must use minor embedding – mapping the logical variable onto a chain of physical qubits such that the desired interaction is effectively realized. This process can inflate the size of the problem: a fully connected problem of N logical variables might require significantly more than N physical qubits to embed (often a quadratic overhead in qubit count). Embedding also requires setting strong couplings within each chain to make those qubits act in unison, which in turn introduces new parameters to tune and potential points of failure (if a chain “breaks,” the logical variable no longer behaves correctly). The net effect is that the effective problem size solvable on a QA machine is much smaller than the raw number of qubits might suggest. For instance, a 5000-qubit D-Wave might only handle a fully connected graph of a few hundred logical variables after embedding. Additionally, embeddings can slow down the solver: longer chains and extra qubits mean more opportunity for errors and a smaller minimum gap due to chain constraints, sometimes increasing the time needed to find the solution. One study demonstrated that standard embedding schemes incur not just a space (qubit) overhead but also a significant time-to-solution overhead, effectively putting current analog quantum annealers at a disadvantage compared to algorithms that don’t require such embedding (like certain classical or gate-model approaches that naturally handle full connectivity). Designing better hardware topologies (more connectivity) and smarter embedding algorithms can alleviate this, but encoding complexity remains a practical limitation.
It also adds user complexity – formulating a problem for QA often requires an extra step of graph minor-embedding, which is not trivial for large graphs.

- Scalability and Performance Uncertainty: A critical open question is how well QA/AQC scales to larger problem sizes in terms of runtime and advantage over classical methods. The worst-case guarantee from the adiabatic theorem is not encouraging: if a problem has an exponentially small minimum gap (as is expected for many hard instances of NP-hard problems), then an adiabatic algorithm would require exponentially long runtime to succeed with high probability. This is analogous to the fact that gate-model quantum computers are not believed to solve NP-complete problems efficiently in general: there is no known way to break the exponential barrier for the hardest cases. For typical or structured cases, it’s hoped that quantum effects can give a speedup, but it’s not automatic. So far, experimental evidence of a decisive quantum speedup with annealers has been limited. Some early benchmarking by researchers (e.g., comparing D-Wave to classical simulated annealing and optimized classical solvers) showed that on certain crafted problems quantum annealing could be faster, but in other cases classical algorithms caught up or even outperformed, especially once improvements like parallel tempering were applied. The presence of noise and analog precision errors in QA hardware also means that simply adding more qubits doesn’t linearly increase problem-solving ability: unlike a classical computer, where more bits and time can tackle larger instances deterministically, a larger annealer might suffer more from calibration issues and residual coupler errors. There’s also a challenge in understanding the results: quantum annealers typically return not just one state but a distribution of low-energy states over many runs (which can be useful for sampling applications, but for optimization one must identify the best). Determining if the found solution is truly optimal might require additional classical checking.
In terms of theoretical grounding, current devices are limited to stoquastic Hamiltonians, whose off-diagonal elements in the computational basis are real and non-positive. Such Hamiltonians have no sign problem, so classical methods such as quantum Monte Carlo can often emulate the annealing process without exponential cost. This suggests that such QA devices may not offer exponential speedups over all classical approaches. To truly unlock better-than-classical performance at scale, improvements in both hardware and algorithms (discussed below under Potential Future Directions) will be needed. In summary, scalability is uncertain: will a 10x or 100x larger annealer solve problems correspondingly faster, or solve entirely new problems, or will diminishing returns set in? This is an area of active investigation. For now, QA/AQC must prove their merit case by case against cutting-edge classical solvers.

- Problem Class Limitations: Another limitation is that QA is fundamentally suited to finding ground states of classical Ising-like Hamiltonians, or to computations that can be encoded as such ground-state searches. This class is broad and covers many optimization problems, but it does not natively include all types of computation. Problems involving logic circuits, arithmetic, or quantum chemistry simulation can be very complex to map into an optimization form that an annealer can handle. In theory AQC can tackle these by encoding the entire computation into a ground-state problem; circuit-to-Hamiltonian constructions of this kind underlie the proof that AQC is universal. However, the required Hamiltonians can be highly complex (involving many-body interactions, for example) and beyond what any current or near-term annealer can implement. Practically speaking, then, QA is not a universal solver; it is specialized. If your problem doesn’t naturally reduce to a QUBO/Ising form, using a quantum annealer may involve significant formulation overhead, or may not be feasible at all. This specialization is both a strength (the narrow focus may allow better performance in that niche) and a limitation (it is not a drop-in replacement for all computing needs).
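
The QUBO/Ising reformulation step can be seen concretely on a small instance. The following is a minimal pure-Python sketch that assumes QUBO coefficients are stored in a dictionary keyed by variable pairs. This is an illustrative choice, not any vendor's API, and the helper names (`maxcut_qubo`, `qubo_to_ising`, `brute_force_ground_state`) are hypothetical. The sketch maps Max-Cut on a 4-node cycle to a QUBO and converts it to Ising form using the substitution x = (1 + s)/2. It then finds the ground state by exhaustive search, which is only viable for a handful of variables.

```python
from itertools import product

def maxcut_qubo(edges):
    """Hypothetical helper: QUBO {(i, j): weight} whose minimum is minus the max cut.
    Edge (i, j) is cut when x_i + x_j - 2*x_i*x_j = 1, so we minimize the negative."""
    Q = {}
    for i, j in edges:
        Q[(i, i)] = Q.get((i, i), 0) - 1
        Q[(j, j)] = Q.get((j, j), 0) - 1
        key = (min(i, j), max(i, j))
        Q[key] = Q.get(key, 0) + 2
    return Q

def qubo_to_ising(Q):
    """Substitute x = (1 + s) / 2 (x in {0, 1}, s in {-1, +1}).
    Returns (h, J, offset) with E(s) = sum h_i s_i + sum J_ij s_i s_j + offset."""
    h, J, offset = {}, {}, 0.0
    for (i, j), q in Q.items():
        if i == j:  # q * x_i = q/2 + (q/2) * s_i
            h[i] = h.get(i, 0.0) + q / 2
            offset += q / 2
        else:       # q * x_i * x_j = (q/4) * (1 + s_i + s_j + s_i * s_j)
            J[(i, j)] = J.get((i, j), 0.0) + q / 4
            h[i] = h.get(i, 0.0) + q / 4
            h[j] = h.get(j, 0.0) + q / 4
            offset += q / 4
    return h, J, offset

def qubo_energy(Q, x):
    return sum(q * x[i] * x[j] for (i, j), q in Q.items())

def ising_energy(h, J, offset, s):
    return (sum(hi * s[i] for i, hi in h.items())
            + sum(Jij * s[i] * s[j] for (i, j), Jij in J.items())
            + offset)

def brute_force_ground_state(Q, n):
    """Check all 2**n assignments; stands in for the annealer on tiny problems only."""
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(Q, x))

edges = [(0, 1), (1, 2), (2, 3), (3, 0)]  # 4-node ring
Q = maxcut_qubo(edges)
x = brute_force_ground_state(Q, 4)
print(x, qubo_energy(Q, x))  # (0, 1, 0, 1) -4  -> all 4 edges cut
```

The Ising energies returned by `qubo_to_ising` agree with the QUBO energies for every assignment, offset included. The two forms are therefore interchangeable descriptions of the same landscape.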

Many of these challenges – noise, embedding, scaling, and specialization – are interrelated. They paint a picture of a technology that is powerful in concept but still maturing in execution. Overcoming these limitations is the focus of much of the current research and development in QA/AQC.
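
The scaling concern rests on the minimum spectral gap Δ_min along the anneal. A common heuristic reading of the adiabatic condition is that the total anneal time must grow roughly as 1/Δ_min². The NumPy sketch below shows how that gap can be inspected on a toy instance. It assumes the textbook linear interpolation H(s) = (1 - s) H_D + s H_P with a transverse-field driver, rather than any real device's schedule. It diagonalizes a 3-spin instance exactly and reports where the gap is smallest. The instance, field values, and 1/gap² figure are illustrative only. The heuristic ignores the matrix-element numerator of the adiabatic condition, and exact diagonalization costs grow as 2^n.

```python
import numpy as np

# Single-qubit Pauli matrices and identity.
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
I2 = np.eye(2)

def op_on(op, site, n):
    """Embed a single-qubit operator acting on `site` into an n-qubit space."""
    mats = [op if k == site else I2 for k in range(n)]
    out = mats[0]
    for m in mats[1:]:
        out = np.kron(out, m)
    return out

def driver(n):
    """Transverse-field driver H_D = -sum_i X_i (ground state: uniform superposition)."""
    return -sum(op_on(X, i, n) for i in range(n))

def problem(h, J):
    """Diagonal Ising problem H_P = sum_i h_i Z_i + sum_(i,j) J_ij Z_i Z_j."""
    n = len(h)
    H = sum(h[i] * op_on(Z, i, n) for i in range(n))
    for (i, j), Jij in J.items():
        H = H + Jij * op_on(Z, i, n) @ op_on(Z, j, n)
    return H

def gap_profile(h, J, num_points=201):
    """Gap E1 - E0 of H(s) = (1 - s) H_D + s H_P on a uniform grid of s in [0, 1]."""
    HD, HP = driver(len(h)), problem(h, J)
    s_grid = np.linspace(0.0, 1.0, num_points)
    gaps = []
    for s in s_grid:
        evals = np.linalg.eigvalsh((1 - s) * HD + s * HP)  # ascending eigenvalues
        gaps.append(evals[1] - evals[0])
    return s_grid, np.array(gaps)

# Hypothetical instance: ferromagnetic 3-spin chain with a weak uniform field.
h = [-0.1, -0.1, -0.1]
J = {(0, 1): -1.0, (1, 2): -1.0}
s_grid, gaps = gap_profile(h, J)
k = int(np.argmin(gaps))
print(f"min gap {gaps[k]:.3f} at s = {s_grid[k]:.2f}; heuristic time scale ~ {1 / gaps[k]**2:.1f}")
```

At s = 0 the gap equals 2, the spacing of the driver's spectrum; at s = 1 it is the classical energy gap of the problem. The interesting behavior, and the cost, lies in how small the gap becomes in between as instances grow.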

Potential Future Directions

Looking ahead, several developments could enhance the power and applicability of quantum annealing and adiabatic quantum computing. Future directions include:

- Advances in Hardware and Error Mitigation: One of the clearest paths forward is improving the hardware itself. Each generation of quantum annealers has brought more qubits and better connectivity. For example, moving from the Chimera to the Pegasus topology increased each qubit’s connectivity to 15 neighbors, reducing the embedding overhead for dense problems. Continuing this trend, future annealers (such as D-Wave’s planned Advantage2 system) aim for even higher connectivity and longer qubit coherence. Higher coherence and lower noise directly improve the chances that the system stays in the ground state throughout the anneal, pushing real devices closer to the adiabatic ideal. There is also a push to introduce new controls and Hamiltonian terms in hardware. One promising direction is implementing non-stoquastic Hamiltonians. These add drivers or interactions whose off-diagonal elements (in the computational basis) are not restricted to real, non-positive values, which could enable exploration of problem landscapes that current annealers cannot efficiently navigate. This might involve, for instance, mixing in different types of quantum fluctuations or multi-qubit interactions that induce interference effects beyond what stoquastic setups allow. Another area of hardware improvement is analog control precision: refining how accurately one can set the initial and final Hamiltonians and the annealing schedule. Better control could enable techniques like quantum optimal control to shape the anneal and avoid diabatic transitions at critical points. An example is slowing down where the gap is smallest and speeding up afterward.
On the topic of error correction, while a full-blown error correcting code for QA is extremely challenging (and arguably incompatible with simple stoquastic annealing), there are ideas for partial error mitigation – such as energy penalties for leaving the computational subspace, or using redundancy (embedding logical qubits with error-detecting codes) to detect excitations. In the long term, some researchers are exploring hybrid analog-digital error correction that could periodically intervene in an anneal to nudge it back on track. Any progress in error mitigation or correction for AQC would be a game-changer, as it is for gate-model quantum computing. Even without full error correction, incremental improvements in qubit coherence and reduction of noise (e.g., materials improvements in superconducting circuits, better shielding, etc.) will likely allow for longer and more complex adiabatic evolutions to be run reliably. The scaling up of QA hardware is expected to continue, but with a focus on useful scaling – meaning not just qubit count, but qubit quality and connectivity. All these hardware advances aim to close the gap between the real devices and the idealized conditions assumed by theory, thereby boosting performance.

- Hybridization with Other Quantum Approaches: The boundary between QA/AQC and circuit-model quantum computing may blur in the future. One vision is a hybrid quantum processor that can perform both gate operations and annealing on the same platform. This could allow, for example, adiabatic evolutions to be used as subroutines within a gate algorithm, or vice versa. While current hardware tends to specialize in one mode or the other, companies like D-Wave are now pursuing both, believing that a mixture of annealing, gate-model QCs, and classical computing will yield the best practical results. On the software side, convergence is already visible in algorithms like the Quantum Approximate Optimization Algorithm (QAOA). QAOA can be viewed as a discretized annealing process: alternating layers of problem and driver evolution whose parameters are tuned by a classical optimizer. As gate-model QCs grow and can handle more qubits, one could run larger and more complex “virtual annealing” processes via Trotterization or QAOA. These could use Hamiltonians that are not limited by the physical constraints of annealer hardware. Conversely, annealers might incorporate digital elements. Future annealers could, for instance, allow occasional projective measurements or quenches (sudden changes) mid-anneal, effectively injecting gate-model-style operations to correct course or amplify success probability. The integration of QA with classical HPC is also expected to deepen. Instead of being a standalone device, a quantum annealer might function as a quantum accelerator attached to a classical host that orchestrates complex algorithms (much as a GPU is used alongside a CPU today). We can anticipate more sophisticated hybrid algorithms that dynamically decide when to call the quantum annealer, possibly multiple times within an algorithm, to solve subproblems or to guide a classical search.
This could mitigate some weaknesses of QA (for example, if the annealer returns a near-optimal solution, a classical algorithm could then take over to polish it or verify it). The overarching future direction here is convergence: leveraging the strengths of all available computational modalities. Rather than QA/AQC being in a silo, they will likely become one tool in the larger quantum computing toolbox, used in tandem with circuit QCs and powerful classical machines. This hybrid outlook is already reflected in the cloud offerings and roadmaps of quantum companies, and will likely become more pronounced as both annealers and gate-model QCs mature.

- New Algorithms and Applications: On the algorithmic side, researchers continue to seek new ways to use adiabatic processes or to improve them. One intriguing direction is shortcuts to adiabaticity, such as counter-diabatic driving (also known as transitionless quantum driving), which adds auxiliary Hamiltonian terms designed to cancel non-adiabatic transitions. If the system can be made to behave adiabatically in a shorter time, that could yield faster solutions without exponentially slow schedules. There is also research into diabatic annealing, where one intentionally runs faster than the adiabatic limit. It exploits mechanisms like Landau-Zener transitions in a controlled way to hop between energy levels and possibly avoid getting stuck, turning non-adiabatic transitions to one’s advantage. These more exotic protocols blur the line between QA (as a strictly adiabatic process) and general quantum dynamics. However, they fall under the broad category of analog quantum optimization and thus relate to the future of QA/AQC.
In terms of applications, as hardware improves, we expect QA and AQC to tackle a wider array of problems. Optimization will remain the prime domain (covering logistics, scheduling, resource allocation, protein folding, and similar problems), but we may see growth in using QA for machine learning and sampling tasks. Quantum annealers can be used to sample from Boltzmann-like distributions by drawing many low-energy states of a problem Hamiltonian. This is valuable in machine learning models such as Boltzmann machines or Bayesian networks, where sampling high-quality states is the bottleneck. In fact, D-Wave has demonstrated training restricted Boltzmann machines by using the annealer to sample equilibrium states.
The ability to get many candidate solutions (samples) from the quantum machine could also be leveraged in portfolio optimization (providing many nearly optimal portfolios for a financial analyst to choose from, for example). Another emerging application is quantum simulation via AQC: by encoding a physical system’s Hamiltonian into an annealer, one could in principle study its ground state or low-energy excitations. This would turn the QA device into a special-purpose simulator for quantum chemistry or materials science problems (though current annealers are limited in this because they typically encode classical Ising-like problems rather than the full quantum interactions of electrons). Researchers are looking into ways to use adiabatic algorithms for finding ground states of quantum Hamiltonians (a quantum-on-quantum problem), which could have implications for understanding high-temperature superconductivity or other complex quantum phenomena.

- Expansion in Industry and Accessibility: In the future, we may see more players entering the annealing space. Already, countries like Japan and China have initiated programs on quantum annealing research, and there’s academic development of annealers using different technologies (for example, some experiments with ion trap systems performing adiabatic optimization, or optical systems like the coherent Ising machine using photonics to simulate Ising spin dynamics). If D-Wave’s approach continues to show progress, other companies might spin up efforts to create alternative annealing hardware, perhaps using different qubit modalities that could offer advantages (like longer coherence or easier scaling). On the software side, the ecosystem will likely become more user-friendly, with higher-level tools that automatically map problems to QA, handle minor-embedding optimally, and integrate with classical optimization software. This would broaden the accessibility so that industry practitioners can leverage QA without deep quantum expertise.
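
Tools that automatically handle minor-embedding must, at a minimum, produce embeddings that satisfy a few structural rules. The sketch below is a hypothetical checker, not any vendor's tool. It assumes an embedding is a dictionary mapping each logical variable to a chain (list) of physical qubits. It verifies three rules:
- chains are disjoint;
- each chain is connected in the hardware graph;
- every logical coupling is backed by at least one physical coupler between the two chains.

The example embeds a triangle, which a 4-qubit ring cannot host directly, by giving one variable a two-qubit chain.

```python
from collections import deque

def is_connected(nodes, adj):
    """Breadth-first search: do `nodes` form a connected subgraph of the hardware graph?"""
    nodes = set(nodes)
    if not nodes:
        return False
    start = next(iter(nodes))
    seen, queue = {start}, deque([start])
    while queue:
        u = queue.popleft()
        for v in adj.get(u, ()):
            if v in nodes and v not in seen:
                seen.add(v)
                queue.append(v)
    return seen == nodes

def is_valid_embedding(logical_edges, hardware_edges, embedding):
    """Hypothetical validator for a minor embedding {logical_var: [physical qubits]}."""
    adj = {}
    for u, v in hardware_edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    used = set()
    for chain in embedding.values():
        # Chains must not share qubits and must be connected on the hardware graph.
        if used & set(chain) or not is_connected(chain, adj):
            return False
        used |= set(chain)
    for a, b in logical_edges:
        # Each logical coupling needs at least one physical coupler between the chains.
        if not any(q in adj.get(p, ()) for p in embedding[a] for q in embedding[b]):
            return False
    return True

ring = [(0, 1), (1, 2), (2, 3), (3, 0)]             # 4-qubit hardware ring
triangle = [("a", "b"), ("b", "c"), ("a", "c")]     # logical problem graph
print(is_valid_embedding(triangle, ring, {"a": [0], "b": [1], "c": [2, 3]}))  # True
print(is_valid_embedding(triangle, ring, {"a": [0], "b": [1], "c": [2]}))     # False
```

Validating a candidate is the easy part. Searching for embeddings with short chains is the hard combinatorial step that embedding tools approach heuristically. Longer chains consume more qubits and are more prone to breaking.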

Ultimately, QA and AQC seem poised to evolve in synergy with other computing modalities. Rather than following a separate path, they are likely to become interwoven with the overall trajectory of quantum computing. Certain hard obstacles may be overcome: for example, a reliable form of error reduction for annealing may be found, or non-stoquastic drivers may markedly improve performance. In that case QA/AQC could even take a central role in quantum computing for optimization tasks. Even if not, they will remain a valuable specialized tool. A widely shared view in the community is that different quantum approaches will complement each other. Much as classical computing uses CPUs, GPUs, and FPGAs for different tasks, future quantum computing might use gate-model processors for some algorithms and quantum annealers for others. Ongoing research and industrial efforts show sustained interest and continuous improvement, so QA and AQC can be expected to become more powerful and versatile in the coming years. As one industry perspective put it, “the combination of annealing, gate-model quantum computing and classical machines” may be the key to getting the most value. This hybrid future, together with steady hardware advances, suggests a robust roadmap for the evolution of quantum annealing and adiabatic quantum computing.
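
The stoquastic/non-stoquastic distinction is easy to make concrete. A Hamiltonian is stoquastic in the computational basis when all its off-diagonal elements are real and non-positive. The NumPy sketch below checks that condition for a hypothetical two-qubit anneal with an optional X0X1 "catalyst" term. The catalyst is switched on mid-anneal through an s(1 - s) envelope, an illustrative choice rather than a specific hardware proposal. A positive catalyst coefficient produces positive off-diagonal entries and therefore breaks stoquasticity. Note that the check is basis-dependent: failing it in the computational basis does not by itself prove that no basis change could make the Hamiltonian stoquastic.

```python
import numpy as np

X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
I2 = np.eye(2)

def pauli_string(ops, n):
    """Tensor product with ops[site] on the listed sites and identity elsewhere."""
    out = np.array([[1.0]])
    for k in range(n):
        out = np.kron(out, ops.get(k, I2))
    return out

def is_stoquastic(H, tol=1e-12):
    """True if every off-diagonal element (computational basis) is real and <= 0."""
    off = H - np.diag(np.diag(H))
    return bool(np.all(np.abs(off.imag) <= tol) and np.all(off.real <= tol))

def anneal_hamiltonian(s, J=-1.0, catalyst=0.0):
    """Hypothetical two-qubit anneal: (1 - s) H_D + s H_P + s (1 - s) * catalyst * X0 X1."""
    n = 2
    driver = -(pauli_string({0: X}, n) + pauli_string({1: X}, n))  # stoquastic driver
    problem = J * pauli_string({0: Z, 1: Z}, n)                     # diagonal Ising term
    xx = pauli_string({0: X, 1: X}, n)                              # catalyst term
    return (1 - s) * driver + s * problem + s * (1 - s) * catalyst * xx

print(is_stoquastic(anneal_hamiltonian(0.5)))                 # True: plain transverse field
print(is_stoquastic(anneal_hamiltonian(0.5, catalyst=-1.0)))  # True: negative XX is still stoquastic
print(is_stoquastic(anneal_hamiltonian(0.5, catalyst=1.0)))   # False: positive XX breaks it
```

Because the catalyst's s(1 - s) envelope vanishes at both ends, the anneal still starts in the usual driver ground state and ends on the same classical problem. Only the path in between changes.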

Quantum Computing Modalities Within This Category

Quantum Annealing (QA)

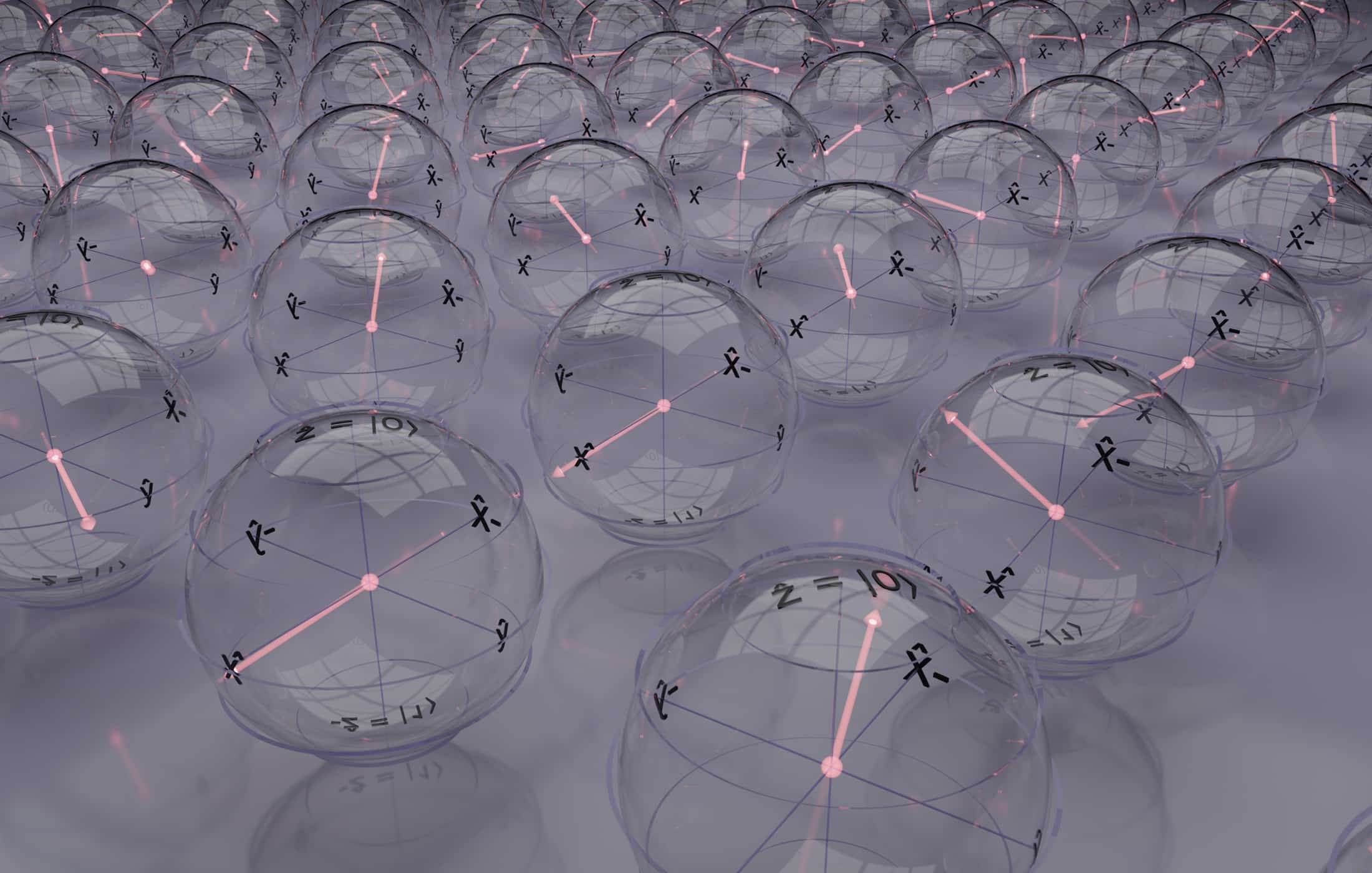

Quantum annealing is a specialized quantum computing approach designed to solve complex optimization problems by guiding a system toward its lowest-energy state, or global minimum, through a gradual transformation of its Hamiltonian (energy landscape) under quantum-mechanical principles.
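
A common way to write the time-dependent Hamiltonian of a transverse-field quantum annealer is shown below; D-Wave's documentation, for example, describes its processors in this form. The gradual transformation is expressed as two schedule functions, A(s) and B(s), that trade a driver term against the Ising problem term:

$$
H_{\text{ising}}(s) = -\frac{A(s)}{2}\sum_i \sigma_x^{(i)} + \frac{B(s)}{2}\left(\sum_i h_i\,\sigma_z^{(i)} + \sum_{i>j} J_{ij}\,\sigma_z^{(i)}\sigma_z^{(j)}\right), \qquad s = \frac{t}{t_f} \in [0, 1]
$$

Here t_f is the total anneal time. A(s) starts large and falls toward zero while B(s) grows, moving the system from the easily prepared ground state of the σ_x driver toward the ground state of the Ising problem defined by the biases h_i and couplings J_ij. The exact shapes of A(s) and B(s) are device-specific.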

Adiabatic Quantum Computing (AQC)

Adiabatic quantum computing is a modality that exploits the adiabatic theorem of quantum mechanics, where a system starting in the ground state of a well-understood Hamiltonian is slowly evolved into the ground state of a more complex target Hamiltonian.
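
The AQC recipe in this definition can be simulated directly for a few qubits. The NumPy sketch below is an illustrative toy built on several assumptions:
- a linear schedule s = t/T with a transverse-field driver;
- units with ħ = 1;
- piecewise-constant time slices, each exponentiated exactly, which is feasible only because the matrices are tiny.

The simulation starts in the uniform superposition, which is the driver's ground state. It returns the probability of ending in the problem's ground state. The instance is hypothetical: three spins with a uniform field and weak ferromagnetic couplings, chosen so the gap stays comfortably open.

```python
import numpy as np

X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
I2 = np.eye(2)

def op_on(op, site, n):
    """Embed a single-qubit operator acting on `site` into an n-qubit space."""
    mats = [op if k == site else I2 for k in range(n)]
    out = mats[0]
    for m in mats[1:]:
        out = np.kron(out, m)
    return out

def hamiltonians(h, J):
    """Driver H_D = -sum X_i and diagonal problem H_P = sum h_i Z_i + sum J_ij Z_i Z_j."""
    n = len(h)
    HD = -sum(op_on(X, i, n) for i in range(n))
    HP = sum(h[i] * op_on(Z, i, n) for i in range(n))
    for (i, j), Jij in J.items():
        HP = HP + Jij * op_on(Z, i, n) @ op_on(Z, j, n)
    return HD, HP

def evolve_step(H, dt):
    """exp(-i H dt) for Hermitian H via its eigendecomposition."""
    w, V = np.linalg.eigh(H)
    return V @ np.diag(np.exp(-1j * w * dt)) @ V.conj().T

def adiabatic_success(h, J, T, steps=1000):
    """Evolve under H(s) = (1 - s) H_D + s H_P for total time T; return the
    final probability of the problem's ground state."""
    HD, HP = hamiltonians(h, J)
    dim = HD.shape[0]
    psi = np.ones(dim, dtype=complex) / np.sqrt(dim)  # ground state of -sum X_i
    dt = T / steps
    for k in range(steps):
        s = (k + 0.5) / steps  # midpoint of each time slice
        psi = evolve_step((1 - s) * HD + s * HP, dt) @ psi
    target = int(np.argmin(np.diag(HP)))  # H_P is diagonal, so its ground state is a basis state
    return abs(psi[target]) ** 2

h = [-1.0, -1.0, -1.0]
J = {(0, 1): -0.2, (1, 2): -0.2}
for T in (0.0, 1.0, 10.0, 100.0):
    print(T, round(adiabatic_success(h, J, T), 3))
```

With T = 0 the state is never evolved, so the success probability is just the initial overlap, 1/8 for three qubits. As T grows well beyond the inverse-gap-squared scale, the probability should approach 1, which is the adiabatic theorem at work.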